Task Performance (Principles)

Uploaded by mendonezarmin3

Armin D. Mendonez

BT303

Task Performance

Answer:

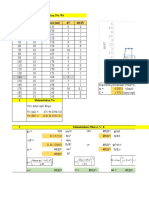

a. Input Entropy: The input entropy is calculated using the formula H(X) = -Σ p(x) * log2(p(x)), where X is the random variable representing the input states and p(x) is the probability of each input state. All four input states are equiprobable, so each p(x) = 1/4. Therefore, the input entropy is H(X) = -4 * (1/4) * log2(1/4) = 2 bits.
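The formula in part (a) can be checked numerically. The following is a minimal sketch; the function name `shannon_entropy` and the variable names are illustrative choices, not part of the original answer.

```python
import math

def shannon_entropy(probs):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    # Terms with p == 0 contribute nothing to the sum, so they are skipped.
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Four equiprobable input states, as stated in part (a).
input_probs = [1 / 4] * 4
h_x = shannon_entropy(input_probs)
print(f"H(X) = {h_x:.3f} bits")  # prints "H(X) = 2.000 bits"
```

The same function accepts any list of probabilities that sums to 1, so it can be reused for the noise distribution.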

b. Noise Entropy: The noise entropy is calculated using the same formula, applied to the random variable representing the noise values: H(N) = -Σ p(n) * log2(p(n)), where N is the random variable representing the noise values and p(n) is the probability of each noise value. All three noise values are equiprobable, so each p(n) = 1/3. Therefore, the noise entropy is H(N) = -3 * (1/3) * log2(1/3) = log2(3) ≈ 1.585 bits.
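As a worked check of part (b), the sum has three identical terms, so it collapses to a single logarithm. The same steps show that any distribution with k equiprobable values has entropy log2(k).

```latex
H(N) = -\sum_{n} p(n)\,\log_2 p(n)
     = -3 \cdot \tfrac{1}{3}\,\log_2 \tfrac{1}{3}
     = \log_2 3
     \approx 1.585 \text{ bits}
```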

c. Outputs for each equiprobable input state: Each output is the sum of an input state and a noise value. For example, the first input state (35) with the first noise value (5) gives 35 + 5 = 40.

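| Input State | Noise Value | Output |
| --- | --- | --- |
| 35 | 5 | 40 |
| 35 | 10 | 45 |
| 35 | 15 | 50 |
| 65 | 5 | 70 |
| 65 | 10 | 75 |
| 65 | 15 | 80 |
| 95 | 5 | 100 |
| 95 | 10 | 105 |
| 95 | 15 | 110 |
| 125 | 5 | 130 |
| 125 | 10 | 135 |
| 125 | 15 | 140 |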
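The table in part (c) can be regenerated by pairing every input state with every noise value. This is a small sketch that uses the values from the worked answer; the variable names are illustrative.

```python
from itertools import product

# Values taken from the worked answer in part (c).
input_states = [35, 65, 95, 125]
noise_values = [5, 10, 15]

# Every (input, noise) pair together with the output it produces.
rows = [(x, n, x + n) for x, n in product(input_states, noise_values)]

for x, n, y in rows:
    print(f"{x:>5} {n:>5} {y:>6}")

# Collect the outputs on their own for later inspection.
outputs = [y for _, _, y in rows]
```

Listing the outputs this way also makes it easy to see that all 12 sums are different.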

d. Output Entropy: The output entropy uses the same formula as the input and noise entropies, applied to the probability distribution of the output X + N. In general, for independent X and N, H(X + N) is at most H(X) + H(N), with equality only when every (input, noise) pair produces a different sum. That is the case here: the 12 pairs give 12 distinct outputs, each with probability (1/4) * (1/3) = 1/12.

Therefore, the output entropy is:

H(X + N) = log2(12) = H(X) + H(N) = 2 bits + 1.585 bits ≈ 3.585 bits
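One way to check the result in part (d) directly is to build the output distribution and apply the entropy formula to it. The sketch below assumes, as the answer does, that the input and noise are independent and equiprobable; the variable names are illustrative.

```python
import math
from fractions import Fraction
from itertools import product

input_states = [35, 65, 95, 125]
noise_values = [5, 10, 15]

# Independent and equiprobable, so each (input, noise) pair has probability 1/12.
pair_prob = Fraction(1, len(input_states) * len(noise_values))

# Accumulate probability mass per output value; pairs that collide would share an entry.
output_dist = {}
for x, n in product(input_states, noise_values):
    output_dist[x + n] = output_dist.get(x + n, Fraction(0)) + pair_prob

# Entropy of the output distribution, in bits.
h_out = -sum(float(p) * math.log2(float(p)) for p in output_dist.values())

# Sum of the separate entropies from parts (a) and (b).
h_sum = math.log2(len(input_states)) + math.log2(len(noise_values))

print(f"distinct outputs: {len(output_dist)}")
print(f"H(X + N) = {h_out:.3f} bits, H(X) + H(N) = {h_sum:.3f} bits")
```

The two values agree here because the 12 sums are all distinct. If two different pairs produced the same sum, the entropy computed from the output distribution would come out lower than H(X) + H(N).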
