Chapter 2 Part 2

Uploaded by زياد عبدالله عبدالحميد

This document discusses several topics related to microprocessors and computer architecture. It describes direct memory access (DMA), in which an I/O device accesses memory directly without the intervention of the microprocessor. It also discusses serial data transfer between processors. The document outlines architectural advancements such as pipelining, in which instruction execution is broken into stages to improve throughput. Cache memory and its role in improving memory access speed are explained. Finally, the document introduces virtual memory and the memory management techniques it uses: paging, translation of virtual to physical addresses by the MMU, and page replacement policies.

Copyright: © All Rights Reserved

Chapter 2

Lecture 3

WHAT A MICROPROCESSOR IS

Dr. Marwa Gamal

Direct memory access

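In programmed I/O and interrupt I/O, data is transferred to memory through the accumulator. In direct memory access (DMA), the I/O device transfers data to or from memory directly, without passing it through the microprocessor.

The operation sequence for Direct Memory Access is as follows: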

1. The microprocessor checks for a DMA request signal once in each machine cycle.
2. The I/O device sends a signal on the DMA request pin.
3. The microprocessor tri-states its address, data, and control buses.
4. The microprocessor sends an acknowledgement signal to the I/O device on the DMA acknowledgement pin.
5. The I/O device uses the bus system to perform the data transfer to or from memory.
6. On completion of the data transfer, the I/O device withdraws the DMA request signal.
7. The microprocessor continuously checks the DMA request signal. When the signal is withdrawn, the microprocessor resumes control of the buses and resumes normal operation.
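The seven-step handshake above can be sketched as a cycle-by-cycle simulation. This is only an illustrative model under stated assumptions: the class and function names (`Cpu`, `DmaDevice`, `run`) are hypothetical, memory is a Python list, and the device moves one byte per machine cycle once its request has been acknowledged.

```python
class Cpu:
    """Minimal model of a microprocessor that honours DMA requests."""

    def __init__(self):
        self.buses_tristated = False  # True while the I/O device owns the buses
        self.dma_ack = False          # state of the DMA acknowledgement pin
        self.log = []

    def machine_cycle(self, dma_request):
        # Steps 1 and 7: the DMA request pin is sampled once per machine cycle.
        if dma_request and not self.buses_tristated:
            self.buses_tristated = True   # step 3: float address, data, control buses
            self.dma_ack = True           # step 4: acknowledge the request
            self.log.append("grant")
        elif not dma_request and self.buses_tristated:
            self.buses_tristated = False  # step 7: take the buses back
            self.dma_ack = False
            self.log.append("resume")
        elif not self.buses_tristated:
            self.log.append("execute")    # normal operation
        else:
            self.log.append("idle")       # buses still held by the I/O device


class DmaDevice:
    """Hypothetical I/O device that copies a block of bytes into memory."""

    def __init__(self, data, start_addr):
        self.pending = list(data)
        self.addr = start_addr
        self.request = True               # step 2: raise the DMA request

    def bus_cycle(self, cpu, memory):
        # Step 5: drive the buses only after the CPU has acknowledged.
        if cpu.dma_ack and self.pending:
            memory[self.addr] = self.pending.pop(0)
            self.addr += 1
        if not self.pending:
            self.request = False          # step 6: withdraw the request


def run(data, start_addr=0, mem_size=8, cycles=8):
    """Simulate a fixed number of machine cycles; return memory and the CPU log."""
    memory = [0] * mem_size
    cpu, dev = Cpu(), DmaDevice(data, start_addr)
    for _ in range(cycles):
        cpu.machine_cycle(dev.request)
        dev.bus_cycle(cpu, memory)
    return memory, cpu.log
```

With `run([0xA, 0xB], start_addr=2, cycles=5)` the log reads grant, idle, resume, execute, execute: the processor does no work while the device owns the buses and resumes only after the request is withdrawn.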

Serial Data Transfer

In serial mode, data between two processors is transferred bit by bit over a single line. For this purpose, the microprocessor has two pins, one for serial data input and one for serial data output, and special software instructions are used to effect the data transfer.
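A minimal sketch of bit-by-bit transfer over one line follows. It assumes least-significant-bit-first order and omits the framing (start and stop bits) and clocking that a real serial link would add; the function names are hypothetical.

```python
def serialize(byte):
    """Return the 8 bits of a byte in the order they are sent on the line (LSB first)."""
    return [(byte >> i) & 1 for i in range(8)]


def deserialize(bits):
    """Reassemble a byte from 8 bits received LSB first."""
    value = 0
    for i, bit in enumerate(bits):
        value |= (bit & 1) << i
    return value


def transmit(message):
    """Send each byte bit by bit over one simulated line and rebuild it at the receiver."""
    line = []                       # the single serial line: one bit per time slot
    for byte in message:
        line.extend(serialize(byte))
    received = bytes(deserialize(line[i:i + 8]) for i in range(0, len(line), 8))
    return line, received
```

Sending two bytes therefore occupies 16 bit slots on the line, which is why serial transfer is slower than parallel transfer over the same clock but needs only one data line.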

Architectural advancements of microprocessors

A number of advancements that had taken place in computer design have since migrated to the microprocessor field.

Pipelined microprocessors have a number of smaller independent units connected in series, like a pipeline. Each unit performs its task independently, so the system as a whole delivers much higher throughput than a single non-pipelined microprocessor.

Pipelining

Pipelining is used in computers to increase execution speed.

The execution of an instruction consists of the following four independent sub-tasks:

(a) Instruction Fetch

(b) Instruction Decode

(c) Operand Fetch

(d) Execute

Consider an example with four processing elements, P(1) to P(4). A task T is subdivided into four sub-tasks, T(1) to T(4), and each processing element takes 1 clock cycle to execute its sub-task. Thus a single task T takes 4 clock cycles, the same as on a non-pipelined processor: for a single task, a pipelined processor offers no advantage.

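Now consider a continuous flow of tasks T(1), T(2), T(3), T(4) …, each of which can be divided into four sub-tasks. Once the pipeline is full, one task completes in every clock cycle: the first task completes at the end of cycle 4, the second at the end of cycle 5, and the third at the end of cycle 6.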
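The timing in the two examples above can be checked with a short script. It assumes, as in the text, four stages taking 1 clock cycle each; `pipeline_schedule`, `completion_cycles` and `speedup` are illustrative names. With k stages and n tasks, the pipeline needs k + n - 1 cycles instead of n·k, so the speedup approaches k only for long streams of tasks.

```python
def pipeline_schedule(num_tasks, num_stages=4):
    """table[t][c] is the stage (1-based) task t occupies in cycle c + 1, or 0 if none."""
    total_cycles = num_stages + num_tasks - 1
    table = []
    for t in range(num_tasks):
        row = [0] * total_cycles
        for s in range(num_stages):
            row[t + s] = s + 1          # task t is in stage s + 1 during cycle t + s + 1
        table.append(row)
    return table


def completion_cycles(num_tasks, num_stages=4):
    """Cycle (1-based) at whose end each task leaves the last stage."""
    return [num_stages + t for t in range(num_tasks)]


def speedup(num_tasks, num_stages=4):
    """Non-pipelined cycles divided by pipelined cycles."""
    return (num_tasks * num_stages) / (num_stages + num_tasks - 1)


if __name__ == "__main__":
    for t, row in enumerate(pipeline_schedule(4)):
        print(f"Task {t + 1}: " + " ".join(f"P({s})" if s else "  - " for s in row))
    print(completion_cycles(4))         # tasks finish at the end of cycles 4, 5, 6, 7
```

For a single task `speedup(1)` is 1.0, matching the observation that one task gains nothing from the pipeline.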

Problems:

Not all instructions are independent. For example, instruction I2 may have to work on the result of the previous instruction I1, so it cannot proceed until I1 has produced that result. Control logic inserts stall (wasted) clock cycles into the pipeline until such dependencies are resolved.
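A simplified model of how control logic delays a dependent instruction is sketched below. Its assumptions are not from the lecture: an in-order pipeline with the four stages listed earlier (IF, ID, OF, EX), no result forwarding, and a result that can be fetched as an operand only in the cycle after its producer's EX stage. `Instr`, `ex_cycles` and `stall_cycles` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Instr:
    text: str                        # assembly text, for display only
    dest: Optional[str] = None       # register written by the instruction
    srcs: Tuple[str, ...] = ()       # registers read by the instruction


def ex_cycles(program):
    """Return the clock cycle in which each instruction executes.

    Model (an assumption): in-order pipeline IF, ID, OF, EX; instruction i
    (0-based) would ideally fetch operands in cycle i + 3 and execute in i + 4;
    without forwarding, a result can be fetched only after its EX cycle."""
    ready = {}      # register -> first cycle in which its new value can be fetched
    prev_of = 0     # operand-fetch cycle of the previous instruction
    result = []
    for i, ins in enumerate(program):
        of = max(i + 3, prev_of + 1)          # ideal slot, never before the previous OF
        for reg in ins.srcs:
            of = max(of, ready.get(reg, 0))   # stall until the operand is ready
        ex = of + 1
        if ins.dest is not None:
            ready[ins.dest] = ex + 1
        prev_of = of
        result.append(ex)
    return result


def stall_cycles(program):
    """Cycles lost compared with an ideal pipeline that never stalls."""
    return ex_cycles(program)[-1] - (len(program) + 3)


if __name__ == "__main__":
    prog = [
        Instr("ADD R1, R2, R3", "R1", ("R2", "R3")),
        Instr("SUB R4, R1, R5", "R4", ("R1", "R5")),  # needs R1 from ADD
    ]
    print(ex_cycles(prog), stall_cycles(prog))
```

For ADD R1, R2, R3 followed by SUB R4, R1, R5, this model delays SUB by one cycle. Real processors may differ, for example by forwarding results to avoid the stall.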

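Another frequently encountered problem relates to branch instructions: until a branch is resolved, the processor does not know which instruction to fetch next, so instructions already fetched into the pipeline may have to be discarded. This problem can be reduced by branch prediction.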
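The lecture names branch prediction without specifying a scheme. As one common dynamic scheme, swapped in here for illustration, a 2-bit saturating counter per branch predicts "taken" in its two upper states and needs two wrong guesses in a row to change its prediction. `TwoBitPredictor` and `count_mispredictions` are hypothetical names.

```python
class TwoBitPredictor:
    """One 2-bit saturating counter per branch: 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, initial_state=1):
        self.initial_state = initial_state   # start as "weakly not taken"
        self.state = {}                      # branch address -> counter value 0..3

    def predict(self, pc):
        return self.state.get(pc, self.initial_state) >= 2

    def update(self, pc, taken):
        s = self.state.get(pc, self.initial_state)
        self.state[pc] = min(s + 1, 3) if taken else max(s - 1, 0)


def count_mispredictions(predictor, pc, outcomes):
    """Feed a sequence of actual branch outcomes and count wrong predictions."""
    misses = 0
    for taken in outcomes:
        if predictor.predict(pc) != taken:
            misses += 1      # wrongly fetched instructions would have to be discarded
        predictor.update(pc, taken)
    return misses
```

For a loop branch that is taken nine times and then falls through, `count_mispredictions` returns 2 on the first pass (warm-up plus loop exit) and 1 on every later pass, because the single not-taken outcome at the loop exit does not flip the prediction.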

Cache Memory

Increasing memory throughput increases the execution speed of the processor. To achieve this, a cache memory is provided between the processor and the main memory.

The cache memory consists of a few kilobytes of high-speed Static RAM (SRAM), whereas the main memory consists of a few megabytes to gigabytes of slower but cheaper Dynamic RAM (DRAM).

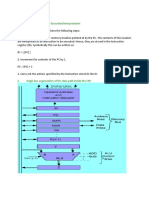

When the CPU wants to read a byte or word, it outputs the address on the Address Bus.

The cache controller checks whether the required contents are available in cache memory.

If the addressed byte/word is available in cache memory, the cache controller enables the cache memory to output the addressed byte/word on the Data Bus.

If the addressed byte/word is not present in cache memory, the cache controller enables the DRAM controller.

The DRAM controller sends the address to main memory to get the byte/word. Since DRAM is slower, this access requires some wait states to be inserted.

The byte/word that is read is transferred to the CPU as well as to the cache memory. If the CPU needs this byte/word again, it can be accessed without any wait state.
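The hit/miss sequence above can be sketched as a toy cache. The lecture does not specify how addresses map to cache lines, so this assumes a direct-mapped cache with one word per line, four lines, and a fixed, hypothetical penalty of 2 wait states per miss; `DirectMappedCache` is an illustrative name.

```python
class DirectMappedCache:
    """Toy direct-mapped cache holding one word per line in front of slower DRAM."""

    def __init__(self, dram, num_lines=4, miss_wait_states=2):
        self.dram = dram                      # main memory, indexed by word address
        self.num_lines = num_lines
        self.tags = [None] * num_lines        # which address each line currently holds
        self.lines = [0] * num_lines
        self.miss_wait_states = miss_wait_states
        self.wait_states = 0                  # total wait states inserted so far

    def read(self, addr):
        """Return (word, hit) for the word at addr."""
        index = addr % self.num_lines         # the only line this address can use
        tag = addr // self.num_lines
        if self.tags[index] == tag:           # hit: the cache drives the data bus
            return self.lines[index], True
        # Miss: the DRAM controller fetches the word; slower DRAM costs wait states.
        self.wait_states += self.miss_wait_states
        word = self.dram[addr]
        self.tags[index] = tag                # keep a copy in the cache for next time
        self.lines[index] = word
        return word, False
```

Reading address 5 twice costs wait states only the first time. Reading address 9 in between (which maps to the same line) evicts it, so the next read of address 5 misses again.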

Multilevel caches

• Multilevel caches can be either inclusive or exclusive.

• In an inclusive cache design, data in the L1 cache is also present in the L2 cache.

• In exclusive caches, data is present in either the L1 or the L2 cache, but not in both.
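A sketch contrasting the two policies follows. It assumes LRU replacement at both levels, capacities counted in blocks, and back-invalidation to keep the inclusive case consistent; `TwoLevelCache` and its parameters are hypothetical. In exclusive mode a block fetched into L1 leaves L2, and the block evicted from L1 moves down into L2.

```python
from collections import OrderedDict


class TwoLevelCache:
    """Toy L1/L2 hierarchy with LRU replacement, in inclusive or exclusive mode."""

    def __init__(self, l1_size, l2_size, inclusive):
        self.l1, self.l2 = OrderedDict(), OrderedDict()   # block -> None, LRU first
        self.l1_size, self.l2_size = l1_size, l2_size
        self.inclusive = inclusive

    @staticmethod
    def _insert(cache, size, block):
        """Make block most recently used; return the evicted LRU block, if any."""
        cache[block] = None
        cache.move_to_end(block)
        if len(cache) > size:
            return cache.popitem(last=False)[0]
        return None

    def access(self, block):
        """Return where the block was found: 'L1', 'L2' or 'memory'."""
        if block in self.l1:
            self.l1.move_to_end(block)
            return "L1"
        found = "L2" if block in self.l2 else "memory"
        if self.inclusive:
            # Fill both levels; an L2 eviction also removes the block from L1
            # (back-invalidation) so that everything in L1 stays in L2.
            victim = self._insert(self.l2, self.l2_size, block)
            if victim is not None:
                self.l1.pop(victim, None)
            self._insert(self.l1, self.l1_size, block)
        else:
            # Exclusive: the block moves out of L2 into L1, and the block evicted
            # from L1 moves down into L2, so no block is held in both levels.
            self.l2.pop(block, None)
            victim = self._insert(self.l1, self.l1_size, block)
            if victim is not None:
                self._insert(self.l2, self.l2_size, victim)
        return found
```

After accessing blocks 1, 2, 3 with a 2-block L1, the inclusive hierarchy holds {2, 3} in L1 and {1, 2, 3} in L2, while the exclusive one holds {2, 3} in L1 and only {1} in L2, giving it more distinct blocks for the same total capacity.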

Virtual Memory

As computer programs grew in complexity and length, it became evident that the size of physical memory would become a bottleneck.

The virtual memory system evolved as a compromise in which the complete program is divided into fixed-size pages (or variable-size segments) and stored on the hard disk.

The basic idea is that pages are swapped between the disk and main memory as and when needed. This task is performed by the operating system.
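Swapping pages "as and when needed" requires the operating system to choose a page to evict when memory is full. This section does not name a policy, so the sketch below assumes least-recently-used (LRU) replacement, one common choice; `lru_page_faults` is a hypothetical name, and the model ignores the cost of writing modified pages back to disk.

```python
from collections import OrderedDict


def lru_page_faults(references, num_frames):
    """Count page faults for a page reference string using LRU replacement."""
    frames = OrderedDict()              # resident pages, least recently used first
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)    # hit: mark as most recently used
            continue
        faults += 1                     # page fault: the OS loads the page from disk
        if len(frames) == num_frames:
            frames.popitem(last=False)  # swap out the least recently used page
        frames[page] = None
    return faults
```

For the reference string 1, 2, 3, 1, 4, 2 with three frames, `lru_page_faults` reports 5 faults: the first three loads, then page 4 evicts page 2, and page 2 must be loaded again.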

Translation of virtual addresses to physical addresses is carried out by the Memory Management Unit (MMU), which is a hardware device.
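The translation the MMU performs can be sketched in software. This assumes 4 KB pages and a single-level page table stored as a dictionary from page number to frame number; `translate` and `PageFault` are illustrative names, not a real MMU interface.

```python
PAGE_SIZE = 4096                      # assumed page size (4 KB)


class PageFault(Exception):
    """Raised when the referenced page is not in main memory."""


def translate(virtual_addr, page_table, page_size=PAGE_SIZE):
    """Split the virtual address into page number and offset, then look up the frame."""
    page_number, offset = divmod(virtual_addr, page_size)
    frame = page_table.get(page_number)   # None means the page is on disk
    if frame is None:
        raise PageFault(page_number)      # the OS would load the page and retry
    return frame * page_size + offset     # the offset is unchanged by translation
```

With `page_table = {0: 5, 1: 2}`, virtual address 0x1234 (page 1, offset 0x234) maps to physical address 0x2234, while 0x3000 raises `PageFault` because page 3 is not in main memory.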

Each page table entry includes status bits such as:

Dirty bit: if the bit is 1, the page in memory has been modified, so it must be written back to disk before its frame is reused.

Referenced bit: if the bit is 1, the program has referenced the page, meaning that the page may currently be in use.

Protection bits: these bits specify whether the data in the page can be read, written, or executed.
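A sketch of how these bits are used on each access follows. The bit positions are hypothetical (real page-table formats differ between processors), and in hardware the MMU, not software, would set the referenced and dirty bits and raise a fault on a protection violation.

```python
DIRTY = 1 << 0          # hypothetical bit positions, chosen for illustration
REFERENCED = 1 << 1
READ = 1 << 2
WRITE = 1 << 3
EXECUTE = 1 << 4


def access_page(pte, kind):
    """Check protection bits and update status bits for one access to a page.

    kind is 'read', 'write' or 'execute'. Returns the updated entry, or raises
    PermissionError when the protection bits forbid the access."""
    needed = {"read": READ, "write": WRITE, "execute": EXECUTE}[kind]
    if not pte & needed:
        raise PermissionError(f"{kind} access not allowed")
    pte |= REFERENCED                 # the page has been used
    if kind == "write":
        pte |= DIRTY                  # the copy in memory now differs from the disk copy
    return pte


def needs_write_back(pte):
    """A dirty page must be written to disk before its frame is reused."""
    return bool(pte & DIRTY)
```

A page with only READ and WRITE permission becomes referenced on its first read and dirty on its first write; an attempt to write a read-only page is rejected.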

Assignments

Page 30 Q.13

Deadline 25/4
