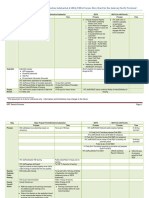

Pretrained Transformers as Universal Computation Engines

Kevin Lu, Aditya Grover, Pieter Abbeel, Igor Mordatch

Can the learned self-attention mechanism generalize across modalities?

Photo: Quanta Magazine

We take GPT-2 and freeze the self-attention and feedforward parameters.
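A minimal sketch of the freezing step, under stated assumptions: the helper `freeze_attention_and_feedforward` and the marker list are hypothetical names introduced for illustration, and the `.attn.` / `.mlp.` substrings follow the parameter naming used by the Hugging Face GPT-2 implementation rather than anything stated on the poster. The poster does not say which remaining parameters are trained, so the sketch leaves everything else untouched. Stand-in parameter objects are used so the example runs without downloading a model.

```python
from types import SimpleNamespace

# Substrings that mark self-attention and feedforward weights. These follow
# the Hugging Face GPT-2 naming scheme (an assumption, not from the poster).
FROZEN_MARKERS = (".attn.", ".mlp.")


def freeze_attention_and_feedforward(named_params, markers=FROZEN_MARKERS):
    """Disable gradients on matching parameters.

    `named_params` is any iterable of (name, param) pairs where `param`
    has a `requires_grad` attribute. Returns (frozen_names, other_names).
    Parameters that do not match are left exactly as they were.
    """
    frozen, others = [], []
    for name, param in named_params:
        if any(marker in name for marker in markers):
            param.requires_grad = False
            frozen.append(name)
        else:
            others.append(name)
    return frozen, others


# Stand-in parameters for one GPT-2-style block (names are illustrative).
demo_names = [
    "wte.weight", "wpe.weight",
    "h.0.ln_1.weight", "h.0.attn.c_attn.weight", "h.0.attn.c_proj.weight",
    "h.0.ln_2.weight", "h.0.mlp.c_fc.weight", "h.0.mlp.c_proj.weight",
    "ln_f.weight",
]
demo_params = [(n, SimpleNamespace(requires_grad=True)) for n in demo_names]

frozen, others = freeze_attention_and_feedforward(demo_params)
print("frozen:", frozen)
print("left trainable:", others)
```

Because the helper only relies on `(name, param)` pairs, it could be called with the output of a PyTorch module's `named_parameters()` in place of the stand-in list.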

Despite tokens coming from new modalities, we find the frozen model exhibits strong classification performance.

[Figure labels: modality-specific encoders; “universal” data processor; subnetwork selection; “universal” knowledge; subnetwork selection; application-specific outputs]
