Coa Poster Content

Uploaded by sparenilesh

What is Cache Memory: -

Cache memory is a small, volatile type of computer memory that gives the processor high-speed access to frequently used programs, applications, and data. It acts as temporary storage that makes data retrieval faster and more efficient. Cache operates on the principle of locality: programs tend to reuse recently accessed data (temporal locality) and to access data stored near it (spatial locality), so keeping that information close to the CPU reduces access times. After the processor's registers, cache is the fastest memory in a computer. In modern systems it is built into the processor chip itself, sitting between the CPU and main random-access memory (RAM); older systems also placed cache on the motherboard.
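
The effect of locality can be sketched with a tiny direct-mapped cache simulator. This is a minimal illustration under assumed parameters (16 lines of 64 bytes each, byte addresses, no timing); the function name `count_hits` and the three access patterns are hypothetical, not taken from any particular processor.

```python
def count_hits(addresses, num_lines=16, block_size=64):
    """Simulate a direct-mapped cache and return (hits, misses)."""
    tags = [None] * num_lines  # one stored tag per cache line; None = empty
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size   # which memory block holds this byte
        index = block % num_lines    # the only line this block may occupy
        tag = block // num_lines     # identifies which block is in that line
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag        # fetch the block, evicting the old one
    return hits, misses

# Spatial locality: 4-byte reads walking through 4 KiB of consecutive memory.
sequential = range(0, 4096, 4)
# Temporal locality: a 256-byte buffer read 10 times over.
reused = list(range(0, 256, 4)) * 10
# Poor locality: each read jumps 1 KiB, so every read lands in a new block.
strided = [i * 1024 for i in range(1024)]

for name, trace in [("sequential", sequential), ("reused", reused), ("strided", strided)]:
    print(name, count_hits(trace))
```

With these assumptions, the sequential walk misses once per 64-byte block (960 hits, 64 misses), the reused buffer misses only on its first pass (636 hits, 4 misses), and the 1 KiB stride never hits.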

What is Cache Optimization: -

Cache optimization encompasses a spectrum of techniques, from basic choices such as block size, cache size, and cache organization to advanced strategies such as prefetching. These techniques aim to reduce one or more of the three components of average memory access time: hit time, miss rate, and miss penalty. Fewer and cheaper misses mean lower memory latency, which improves system responsiveness and throughput, and many of these optimizations can also help conserve energy. Overall, cache optimization plays a critical role in meeting the performance demands of diverse applications and workloads.
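
A common way to put numbers on these goals is average memory access time: AMAT = hit time + miss rate × miss penalty. The sketch below assumes a hypothetical cache with a 1-cycle hit time, a 5% miss rate, and a 100-cycle miss penalty; the figures are illustrative only.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time, in the same unit as hit_time (e.g. cycles)."""
    return hit_time + miss_rate * miss_penalty

# Hypothetical cache: 1-cycle hit, 5% miss rate, 100-cycle miss penalty.
baseline = amat(1, 0.05, 100)
# Halving either the miss rate or the miss penalty gives the same gain here.
fewer_misses = amat(1, 0.025, 100)
cheaper_misses = amat(1, 0.05, 50)
print(baseline, fewer_misses, cheaper_misses)
```

Under these assumed figures the baseline is 6 cycles, and halving either the miss rate or the miss penalty brings it to 3.5 cycles, which is why techniques that attack either term are worth pursuing.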

Basic Techniques: -

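Larger block size: Larger blocks reduce the miss rate, mainly compulsory misses, by exploiting spatial locality; however, they increase the miss penalty and, in a small cache, can increase conflict misses. The optimal block size depends on the latency and bandwidth of the lower-level memory. High latency and high bandwidth encourage large blocks, because the fixed latency is amortized over more bytes while the extra transfer time is small. Low latency and low bandwidth encourage small blocks, because there is little latency to amortize and every extra byte adds noticeably to the transfer time.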
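
The latency/bandwidth trade-off can be sketched with a simple miss-penalty model: penalty = latency + block size / bandwidth. The two memory configurations below are hypothetical values chosen only to contrast the two cases described above.

```python
def miss_penalty(block_bytes, latency_cycles, bytes_per_cycle):
    """Cycles to service one miss: fixed access latency plus transfer time."""
    return latency_cycles + block_bytes / bytes_per_cycle

# Hypothetical lower levels: (latency in cycles, bandwidth in bytes per cycle).
memories = {
    "high latency, high bandwidth": (100, 32),
    "low latency, low bandwidth": (4, 1),
}

for name, (latency, bandwidth) in memories.items():
    small = miss_penalty(16, latency, bandwidth)
    large = miss_penalty(128, latency, bandwidth)
    # How much more an 8x larger block costs per miss on this memory.
    print(f"{name}: 16 B -> {small} cycles, 128 B -> {large} cycles, "
          f"ratio {large / small:.2f}")
```

In this model, growing the block from 16 to 128 bytes raises the penalty by only about 3% on the high-latency, high-bandwidth memory while each miss brings in 8 times as much data; on the low-latency, low-bandwidth memory the same change makes each miss 6.6 times more expensive, which pays off only if the program actually uses the extra bytes.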

Small and simple caches: This technique reduces the hit time. A small, simple cache (for example, a direct-mapped one) needs less hardware to implement, so the critical path through the hardware is shorter and each hit completes faster.
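
To see why a direct-mapped cache keeps the hit path short, consider what a hit check involves: slice the address into tag, index, and offset, read the one line the index selects, and compare a single tag. The sketch below assumes 64-byte blocks and 256 sets (both powers of two); `split_address` and `direct_mapped_hit` are hypothetical helper names. An n-way set-associative cache would instead compare n tags and then select among them with a multiplexer, which lengthens the critical path.

```python
def split_address(addr, block_size=64, num_sets=256):
    """Split a byte address into (tag, set index, block offset).
    Assumes block_size and num_sets are powers of two."""
    offset_bits = block_size.bit_length() - 1   # log2(block_size)
    index_bits = num_sets.bit_length() - 1      # log2(num_sets)
    offset = addr & (block_size - 1)
    index = (addr >> offset_bits) & (num_sets - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


def direct_mapped_hit(tags, addr, block_size=64):
    """Hit check for a direct-mapped cache whose entry i holds the tag stored in set i."""
    tag, index, _ = split_address(addr, block_size, len(tags))
    return tags[index] == tag   # one line read, one tag comparison


tags = [None] * 256                  # an empty cache with 256 one-block sets
t, i, _ = split_address(0x12345)
tags[i] = t                          # pretend the block holding 0x12345 was fetched
print(direct_mapped_hit(tags, 0x12345))       # same block: hit
print(direct_mapped_hit(tags, 0x12345 + 64))  # next block, different set: miss
```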

Larger cache size: Increasing the cache size reduces the miss rate, chiefly by cutting capacity misses. The drawbacks are a longer hit time, higher cost, and higher power consumption.
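
The capacity effect can be sketched with a fully associative cache using LRU replacement, an assumed organization chosen so that only capacity (not conflict) misses appear. The trace is a hypothetical loop that repeatedly sweeps a 48-block working set.

```python
from collections import OrderedDict

def lru_misses(block_trace, capacity_blocks):
    """Count misses in a fully associative cache with LRU replacement."""
    cache = OrderedDict()
    misses = 0
    for block in block_trace:
        if block in cache:
            cache.move_to_end(block)        # mark as most recently used
        else:
            misses += 1
            if len(cache) >= capacity_blocks:
                cache.popitem(last=False)   # evict the least recently used block
            cache[block] = True
    return misses

# A loop that sweeps a 48-block working set 10 times (480 block accesses).
trace = list(range(48)) * 10
for capacity in (32, 64):
    print(capacity, "blocks: miss rate", lru_misses(trace, capacity) / len(trace))
```

With 32 blocks every access misses, because LRU evicts each block just before the loop returns to it; with 64 blocks the whole working set fits and only the 48 first-touch misses remain, a miss rate of 10%.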

Multi-Level Caches: This technique reduces the miss penalty. Consider a two-level cache. The first-level (L1) cache is small and fast enough to keep pace with the CPU clock. The second-level (L2) cache is larger and slower than L1 but still much faster than main memory, so an L1 miss that hits in L2 avoids the full trip to main memory.
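
The benefit of an L2 cache can be expressed by extending the AMAT formula: AMAT = hit time(L1) + miss rate(L1) × (hit time(L2) + local miss rate(L2) × miss penalty(L2)), where the local miss rate of L2 is the fraction of L2 accesses that miss. The cycle counts and miss rates below are hypothetical.

```python
def amat_two_level(l1_hit, l1_miss_rate, l2_hit, l2_local_miss_rate, mem_penalty):
    """AMAT with L1 and L2: an L1 miss pays the L2 access; an L2 miss also pays main memory."""
    l1_miss_penalty = l2_hit + l2_local_miss_rate * mem_penalty
    return l1_hit + l1_miss_rate * l1_miss_penalty

# Hypothetical numbers (cycles): L1 hit 1, L2 hit 10, main memory 100.
with_l2 = amat_two_level(1, 0.05, 10, 0.20, 100)  # 1 + 0.05 * (10 + 0.2 * 100)
without_l2 = 1 + 0.05 * 100                       # every L1 miss goes to memory
print(with_l2, without_l2)
```

With these assumed numbers, adding the L2 cache lowers AMAT from 6 cycles to 2.5 cycles, because most L1 misses now cost 10 cycles instead of 100.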

Advanced Techniques: -

Compiler-Controlled Prefetching to Reduce Miss Penalty or Miss Rate: The compiler can insert prefetch instructions that request data before the processor needs it, so the memory access overlaps with useful work. There are two flavors of prefetch: a register prefetch loads the value into a register, whereas a cache prefetch loads the data only into the cache and not into a register.
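
The sketch below is a simplified timing model of cache prefetching (the non-binding flavor) in a loop that reads an array in order. Its assumptions are hypothetical: 8 elements per block, a 20-cycle memory latency, 1 cycle of work per element, prefetches that never stall the processor, and a cache large enough that nothing is evicted. In real compiled code, GCC and Clang expose this kind of instruction through `__builtin_prefetch`.

```python
def run_loop(n_elems, elems_per_block, latency, prefetch_distance=None):
    """Total cycles for a loop that reads n_elems array elements in order.
    Each element costs 1 cycle of work; using a block that has not yet
    arrived stalls the loop until it does."""
    ready_at = {}   # block number -> cycle at which its data is in the cache
    cycle = 0
    for i in range(n_elems):
        if prefetch_distance is not None and i + prefetch_distance < n_elems:
            ahead = (i + prefetch_distance) // elems_per_block
            if ahead not in ready_at:
                ready_at[ahead] = cycle + latency   # non-blocking cache prefetch
        block = i // elems_per_block
        if block not in ready_at:
            ready_at[block] = cycle + latency       # demand miss
        cycle = max(cycle, ready_at[block])         # stall if data not here yet
        cycle += 1                                  # the work for this element
    return cycle

print(run_loop(64, 8, 20), run_loop(64, 8, 20, prefetch_distance=24))
```

Without prefetching, the model takes 224 cycles, since each of the 8 blocks stalls for the full latency; with a prefetch distance of 24 elements it takes 124 cycles, and only the first three blocks still stall because this sketch issues no prefetch for them. The distance is chosen so that a prefetched block has at least the memory latency to arrive before it is used (24 elements × 1 cycle = 24 cycles ≥ 20).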

Way prediction and pseudo-associativity: This approach reduces conflict misses while keeping the hit speed of a direct-mapped cache. Extra bits, called block predictor bits, are kept in the cache to predict the way (the block within the set) of the next cache access. Only the predicted way is checked first, so a correct prediction gives direct-mapped hit time, while a misprediction costs extra cycles to check the other way(s).
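
The sketch below models way prediction in a 2-way set-associative cache with one predictor entry per set, a simplification of the block predictor bits described above. It assumes hypothetical block numbers as input and LRU replacement, and it counts fast hits (predicted way correct, one tag compare), slow hits (the other way held the block), and misses. Pseudo-associativity, where a direct-mapped cache probes a second location, is not modeled.

```python
def way_predicted_cache(block_trace, num_sets=4):
    """2-way set-associative cache with one way predictor per set.
    Returns (fast hits, slow hits, misses)."""
    ways = [[None, None] for _ in range(num_sets)]  # block held in each way
    predict = [0] * num_sets                          # way to probe first
    lru = [0] * num_sets                              # way to evict next
    fast = slow = misses = 0
    for block in block_trace:
        s = block % num_sets
        p = predict[s]
        if ways[s][p] == block:
            fast += 1                # predicted way hit: one tag compare
            used_way = p
        elif ways[s][1 - p] == block:
            slow += 1                # hit in the other way: extra cycle(s)
            used_way = 1 - p
        else:
            misses += 1
            used_way = lru[s]
            ways[s][used_way] = block  # replace the least recently used way
        predict[s] = used_way          # predict this way for the next access
        lru[s] = 1 - used_way          # the other way is now least recent
    return fast, slow, misses

print(way_predicted_cache([0, 0, 0, 4, 4, 4]))
print(way_predicted_cache([0, 4, 0, 4, 0, 4]))
```

In this model a trace that stays on one block at a time gives 4 fast hits and 2 misses, while a trace that alternates between two blocks of the same set gives no fast hits: it still avoids the misses a direct-mapped cache would suffer if both blocks mapped to one line, but every hit pays the misprediction cost.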

Critical Word First and Early Restart to Reduce Miss Penalty: -

Both techniques let the processor resume before the whole block has arrived; they help most when cache blocks are large.

Critical word first: Request the missed word from memory first and send it to the processor as soon as it arrives; the processor continues execution while the rest of the words in the block are filled.

Early restart: Fetch the words in normal order, but as soon as the requested word of the block arrives, send it to the processor and let it continue execution.
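
A simple timing model makes the difference between the policies concrete. It assumes, hypothetically, that the first word returns `latency` cycles after the miss and each further word follows every `cycles_per_word` cycles; the policy names and parameters are illustrative, not taken from a specific memory system.

```python
def restart_delay(policy, requested_word, words_per_block, latency, cycles_per_word):
    """Cycles from the miss until the processor can resume (words numbered from 0)."""
    if policy == "wait_for_block":
        words_needed = words_per_block      # the whole block must arrive first
    elif policy == "early_restart":
        words_needed = requested_word + 1   # words arrive in order 0, 1, 2, ...
    elif policy == "critical_word_first":
        words_needed = 1                    # the requested word is sent first
    else:
        raise ValueError(f"unknown policy: {policy}")
    return latency + (words_needed - 1) * cycles_per_word

for policy in ("wait_for_block", "early_restart", "critical_word_first"):
    print(policy, restart_delay(policy, 5, 8, 40, 4))
```

For an 8-word block with a 40-cycle latency and 4 cycles per word, waiting for the whole block resumes after 68 cycles; early restart resumes after 60 cycles when the requested word is word 5 and after 40 cycles when it is word 0; critical word first always resumes after 40 cycles.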

Blocking: This optimization improves temporal locality to reduce misses. Instead of operating on entire rows or columns of an array, blocked algorithms operate on submatrices, or blocks. The goal is to maximize accesses to the data loaded into the cache before the data are replaced.
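
Blocked matrix multiplication is the classic example. The Python sketch below shows only the loop structure: the three outer loops walk over `block × block` tiles, and the inner loops finish all the work on one tile before moving on. Python lists hold pointers to separately allocated number objects, so the cache benefit itself appears only in a compiled language with contiguous arrays; the tile size of 32 is an assumed default that, in practice, would be tuned so the working tiles fit in the cache.

```python
def matmul_blocked(a, b, block=32):
    """C = A x B for n x n matrices given as lists of lists, computed tile by tile
    so that each tile of B and C is reused while it is (ideally) still cached."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    for ii in range(0, n, block):
        for kk in range(0, n, block):
            for jj in range(0, n, block):
                # Accumulate tile A[ii.., kk..] x tile B[kk.., jj..] into C[ii.., jj..].
                for i in range(ii, min(ii + block, n)):
                    row_a, row_c = a[i], c[i]
                    for k in range(kk, min(kk + block, n)):
                        a_ik = row_a[k]
                        row_b = b[k]
                        for j in range(jj, min(jj + block, n)):
                            row_c[j] += a_ik * row_b[j]
    return c

print(matmul_blocked([[1, 2], [3, 4]], [[5, 6], [7, 8]], block=1))
```

For any tile size the result equals that of the straightforward triple loop; only the order in which the loops visit the data changes.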

You might also like

- Car Rental Business Plan PDF SampleDocument121 pagesCar Rental Business Plan PDF SampleAnonymous qZT72R9No ratings yet

- CCDM Manual PDFDocument715 pagesCCDM Manual PDFrehanaNo ratings yet

- CPU Cache: From Wikipedia, The Free EncyclopediaDocument19 pagesCPU Cache: From Wikipedia, The Free Encyclopediadevank1505No ratings yet

- Data Prefetching: Naveed Ahmed Muhammad Haseeb - Ul-Hassan ZahidDocument19 pagesData Prefetching: Naveed Ahmed Muhammad Haseeb - Ul-Hassan ZahidMuhammad Haseeb ul Hassan ZahidNo ratings yet

- CacheDocument30 pagesCachemysthicriousNo ratings yet

- Cache: Why Level It: Departamento de Informática, Universidade Do Minho 4710 - 057 Braga, Portugal Nunods@ipb - PTDocument8 pagesCache: Why Level It: Departamento de Informática, Universidade Do Minho 4710 - 057 Braga, Portugal Nunods@ipb - PTsothymohan1293No ratings yet

- Design of Cache Memory Mapping Techniques For Low Power ProcessorDocument6 pagesDesign of Cache Memory Mapping Techniques For Low Power ProcessorhariNo ratings yet

- CHAPTER 2 Memory Hierarchy Design & APPENDIX B. Review of Memory HeriarchyDocument73 pagesCHAPTER 2 Memory Hierarchy Design & APPENDIX B. Review of Memory HeriarchyRachmadio Nayub LazuardiNo ratings yet

- Research Paper On Cache MemoryDocument8 pagesResearch Paper On Cache Memorypib0b1nisyj2100% (1)

- Basic Optimization Techniques in Cache Memory 2.2.4Document4 pagesBasic Optimization Techniques in Cache Memory 2.2.4harshdeep singhNo ratings yet

- Memory HierarchyDocument2 pagesMemory Hierarchycheruuasrat30No ratings yet

- Evaluating Stream Buffers As A Secondary Cache ReplacementDocument10 pagesEvaluating Stream Buffers As A Secondary Cache ReplacementVicent Selfa OliverNo ratings yet

- CPU Cache: Details of OperationDocument18 pagesCPU Cache: Details of OperationIan OmaboeNo ratings yet

- Cache MemoryDocument60 pagesCache MemoryShahazada Ali ImamNo ratings yet

- COUnit2restDocument3 pagesCOUnit2resttauqeerahmed886No ratings yet

- Understand CPU Caching ConceptsDocument11 pagesUnderstand CPU Caching Conceptsabhijitkrao283No ratings yet

- Term Paper: Cahe Coherence SchemesDocument12 pagesTerm Paper: Cahe Coherence SchemesVinay GargNo ratings yet

- UntitledDocument3 pagesUntitledPrincess Naomi AkinboboyeNo ratings yet

- Advance Programming Week 7-8Document4 pagesAdvance Programming Week 7-8jellobaysonNo ratings yet

- Assignment1-Rennie Ramlochan (31.10.13)Document7 pagesAssignment1-Rennie Ramlochan (31.10.13)Rennie RamlochanNo ratings yet

- Unit 5 Memory ManagementDocument20 pagesUnit 5 Memory ManagementlmmincrNo ratings yet

- Chapter 3 CacheDocument38 pagesChapter 3 CacheSetina AliNo ratings yet

- CA Classes-181-185Document5 pagesCA Classes-181-185SrinivasaRaoNo ratings yet

- CHAPTER FIVcoaEDocument18 pagesCHAPTER FIVcoaEMohammedNo ratings yet

- CO & OS Unit-3 (Only Imp Concepts)Document26 pagesCO & OS Unit-3 (Only Imp Concepts)0fficial SidharthaNo ratings yet

- Cache EntriesDocument13 pagesCache EntriesAnirudh JoshiNo ratings yet

- Cache Memory ThesisDocument5 pagesCache Memory Thesisjenniferwrightclarksville100% (2)

- A Crash Course in Caching - Part 1 - by Alex XuDocument9 pagesA Crash Course in Caching - Part 1 - by Alex XuSusantoNo ratings yet

- A Branch Target Instruction Prefetchnig Technique For Improved PerformanceDocument6 pagesA Branch Target Instruction Prefetchnig Technique For Improved PerformanceRipunjayTripathiNo ratings yet

- A Survey On Computer System Memory ManagementDocument7 pagesA Survey On Computer System Memory ManagementMuhammad Abu Bakar SiddikNo ratings yet

- Pentium-4 Cache Organization - FinalDocument13 pagesPentium-4 Cache Organization - FinalNazneen AkterNo ratings yet

- Assignment4-Rennie RamlochanDocument7 pagesAssignment4-Rennie RamlochanRennie RamlochanNo ratings yet

- Unit 4 - Operating System - WWW - Rgpvnotes.in PDFDocument16 pagesUnit 4 - Operating System - WWW - Rgpvnotes.in PDFPrakrti MankarNo ratings yet

- Assignment 3Document2 pagesAssignment 3harichandanaNo ratings yet

- Cache Memory Presentation SlidesDocument25 pagesCache Memory Presentation SlidesJamilu usmanNo ratings yet

- Module 5Document39 pagesModule 5adoshadosh0No ratings yet

- Memory Hierarchy: Memory Hierarchy Design in A Computer System MainlyDocument16 pagesMemory Hierarchy: Memory Hierarchy Design in A Computer System MainlyAnshu Kumar TiwariNo ratings yet

- Term Paper On Cache MemoryDocument4 pagesTerm Paper On Cache Memoryaflslwuup100% (1)

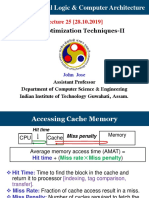

- CS 322M Digital Logic & Computer Architecture: Cache Optimization Techniques-IIDocument14 pagesCS 322M Digital Logic & Computer Architecture: Cache Optimization Techniques-IIsai rishiNo ratings yet

- Caches in Multicore Systems: Universitatea Politehnica Din Timisoara Facultatea de Automatica Şi CalculatoareDocument7 pagesCaches in Multicore Systems: Universitatea Politehnica Din Timisoara Facultatea de Automatica Şi CalculatoarerotarcalinNo ratings yet

- CPS QaDocument30 pagesCPS QaVijayakumar GandhiNo ratings yet

- Lec 07Document15 pagesLec 07Rama DeviNo ratings yet

- Cache Memory Term PaperDocument6 pagesCache Memory Term Paperafdttricd100% (1)

- MTP 01 Final J.raghunat b15216Document10 pagesMTP 01 Final J.raghunat b15216Raghunath JeyaramanNo ratings yet

- Advanced Computer Architecture-06CS81-Memory Hierarchy DesignDocument18 pagesAdvanced Computer Architecture-06CS81-Memory Hierarchy DesignYutyu YuiyuiNo ratings yet

- Lecture NotesDocument10 pagesLecture Notesibraheembello31No ratings yet

- Computer Memory By: Manzoor Ali SolangiDocument41 pagesComputer Memory By: Manzoor Ali Solangiمنظور سولنگیNo ratings yet

- Computer Virtual MemoryDocument18 pagesComputer Virtual MemoryPrachi BohraNo ratings yet

- "Cache Memory" in (Microprocessor and Assembly Language) : Lecture-20Document19 pages"Cache Memory" in (Microprocessor and Assembly Language) : Lecture-20MUHAMMAD ABDULLAHNo ratings yet

- Module 4 Coa CoeDocument10 pagesModule 4 Coa CoeVanshima Kaushik 2003289No ratings yet

- CSC204 - Chapter 3.2 OS Performance Issue (Memory Management) - NewDocument40 pagesCSC204 - Chapter 3.2 OS Performance Issue (Memory Management) - NewanekumekNo ratings yet

- CachememDocument9 pagesCachememVu Trung Thanh (K16HL)No ratings yet

- Cache MemorDocument3 pagesCache MemorVikesh KumarNo ratings yet

- Implementation of Cache MemoryDocument15 pagesImplementation of Cache MemoryKunj PatelNo ratings yet

- 4 Unit Speed, Size and CostDocument5 pages4 Unit Speed, Size and CostGurram SunithaNo ratings yet

- Computer ArchitectureDocument5 pagesComputer ArchitecturerudemaverickNo ratings yet

- Advanced Processor Architecture: Summer 1997Document28 pagesAdvanced Processor Architecture: Summer 1997Fuck turdNo ratings yet

- Cache Memory: Computer Architecture Unit-1Document54 pagesCache Memory: Computer Architecture Unit-1KrishnaNo ratings yet

- Cache World 2.-0Document10 pagesCache World 2.-0Shravani KhurpeNo ratings yet

- Computer Organization and Architecture Module 3Document34 pagesComputer Organization and Architecture Module 3Assini Hussain100% (1)

- Computer MemoryDocument51 pagesComputer MemoryWesley SangNo ratings yet

- SAS Programming Guidelines Interview Questions You'll Most Likely Be AskedFrom EverandSAS Programming Guidelines Interview Questions You'll Most Likely Be AskedNo ratings yet

- Pem TopicsDocument2 pagesPem TopicssparenileshNo ratings yet

- 4 BJT CCDocument93 pages4 BJT CCsparenileshNo ratings yet

- 3 BJT CeDocument69 pages3 BJT CesparenileshNo ratings yet

- 1 Introduction BJTDocument95 pages1 Introduction BJTsparenileshNo ratings yet

- Communication SkillsDocument6 pagesCommunication SkillssparenileshNo ratings yet

- Belzona High Performance Linings For Storage Tanks: Guide Only Contact Belzona For Specific Chemicals Zero WastageDocument2 pagesBelzona High Performance Linings For Storage Tanks: Guide Only Contact Belzona For Specific Chemicals Zero WastagepgltuNo ratings yet

- Placa Mãe HD7Document3 pagesPlaca Mãe HD7ALBANO2No ratings yet

- Dot MapDocument7 pagesDot MapRohamat MandalNo ratings yet

- ScottishDocument6 pagesScottishluca_sergiuNo ratings yet

- Integer Programming Part 2Document41 pagesInteger Programming Part 2missMITNo ratings yet

- Themes of A Story: - To Build A Fire: Instinctual Knowledge vs. Scientific KnowledgeDocument3 pagesThemes of A Story: - To Build A Fire: Instinctual Knowledge vs. Scientific Knowledgemoin khanNo ratings yet

- Trishasti Shalaka Purusa Caritra 4 PDFDocument448 pagesTrishasti Shalaka Purusa Caritra 4 PDFPratik ChhedaNo ratings yet

- 1kd FTV Rev 2 PDFDocument45 pages1kd FTV Rev 2 PDFEder BarreirosNo ratings yet

- Lesson 9 Tax Planning and StrategyDocument31 pagesLesson 9 Tax Planning and StrategyakpanyapNo ratings yet

- Sigmazinc™ 102 HS: Product Data SheetDocument4 pagesSigmazinc™ 102 HS: Product Data Sheetyogeshkumar121998No ratings yet

- 2016 July Proficiency Session 2 A VersionDocument2 pages2016 July Proficiency Session 2 A VersionSanem Hazal TürkayNo ratings yet

- Piyush Kumar RaiDocument6 pagesPiyush Kumar RaiAyisha PatnaikNo ratings yet

- SAP SD Consultant Sample ResumeDocument10 pagesSAP SD Consultant Sample ResumemarishaNo ratings yet

- Fe 6 SpeechDocument588 pagesFe 6 SpeechLuiz FalcãoNo ratings yet

- Fomulation of Best Fit Hydrophile - Lipophile Balance Dielectric Permitivity DemulsifierDocument10 pagesFomulation of Best Fit Hydrophile - Lipophile Balance Dielectric Permitivity DemulsifierNgo Hong AnhNo ratings yet

- 30 Qualities That Make People ExtraordinaryDocument5 pages30 Qualities That Make People Extraordinaryirfanmajeed1987100% (1)

- MachinesDocument2 pagesMachinesShreyas YewaleNo ratings yet

- Potret, Esensi, Target Dan Hakikat Spiritual Quotient Dan ImplikasinyaDocument13 pagesPotret, Esensi, Target Dan Hakikat Spiritual Quotient Dan ImplikasinyaALFI ROSYIDA100% (1)

- Wearable 2D Ring Scanner: FeaturesDocument2 pagesWearable 2D Ring Scanner: FeaturesYesica SantamariaNo ratings yet

- Antecedentes 2Document26 pagesAntecedentes 2Carlos Mario Ortiz MuñozNo ratings yet

- April 19, 2004 Group B1Document19 pagesApril 19, 2004 Group B1jimeetshh100% (2)

- Estimate Electrical Guwahati UniversityDocument19 pagesEstimate Electrical Guwahati UniversityTandon Abhilash BorthakurNo ratings yet

- Brooklyn College - Fieldwork ExperienceDocument6 pagesBrooklyn College - Fieldwork Experienceapi-547174770No ratings yet

- Premature Fatigue Failure of A Spring Due To Quench CracksDocument8 pagesPremature Fatigue Failure of A Spring Due To Quench CracksCamilo Rojas GómezNo ratings yet

- Installation Qualification For Informatic System ExampleDocument7 pagesInstallation Qualification For Informatic System ExampleCarlos SanchezNo ratings yet

- Hosts and Guests - WorksheetDocument2 pagesHosts and Guests - WorksheetbeeNo ratings yet

- Chapter 10 - Concepts of Vat 7thDocument11 pagesChapter 10 - Concepts of Vat 7thEl Yang100% (3)

- Administrative Theory of ManagementDocument7 pagesAdministrative Theory of ManagementAxe PanditNo ratings yet