Project Writeup

Uploaded by joseph

Available Formats

You might also like

- AI 900 Master Cheat SheetDocument14 pagesAI 900 Master Cheat SheetSudhakar Rai100% (1)

- C-R8A Operation Guide CR8ADocument224 pagesC-R8A Operation Guide CR8AAlvaro Atenco50% (2)

- Age and Gender Detection-3Document20 pagesAge and Gender Detection-3Anand Dubey67% (12)

- ZinKo Thu (Network Engineer Resume)Document3 pagesZinKo Thu (Network Engineer Resume)Ye HtetNo ratings yet

- Emotion Detection From Facial Images: Rishi Gupta, Mangal Deep Singh MLSP Final Project 2022Document7 pagesEmotion Detection From Facial Images: Rishi Gupta, Mangal Deep Singh MLSP Final Project 2022rishi guptaNo ratings yet

- Hand GestureDocument37 pagesHand GestureUday MunduriNo ratings yet

- American Sign Language Research PaperDocument5 pagesAmerican Sign Language Research PaperVarun GargNo ratings yet

- A5 BatchDocument29 pagesA5 BatchBhagyaLaxmi GullipalliNo ratings yet

- Research 6Document10 pagesResearch 6Deepak SubramaniamNo ratings yet

- Deepti Presentation CSLTSDocument18 pagesDeepti Presentation CSLTSsandeepNo ratings yet

- Image Classification Using CNN: Page - 1Document13 pagesImage Classification Using CNN: Page - 1BhanuprakashNo ratings yet

- Synopsis MainDocument10 pagesSynopsis MainHARSHIT KHATTERNo ratings yet

- Convolutional Neural Networks For Facial Expression RecognitionDocument8 pagesConvolutional Neural Networks For Facial Expression RecognitionPallavi BhartiNo ratings yet

- NLP Manual (1-12) 2Document5 pagesNLP Manual (1-12) 2sj120cpNo ratings yet

- SIGNLANGUAGE PPTDocument15 pagesSIGNLANGUAGE PPTvishnuram1436No ratings yet

- Deep SkinDocument13 pagesDeep SkinShyamaprasad MSNo ratings yet

- Unmasking The Face ExpressionDocument11 pagesUnmasking The Face Expressionshivendra20bcs046No ratings yet

- Project SynopsisDocument3 pagesProject Synopsisshalini100% (1)

- Batch 9 Sec A Review 2.Document15 pagesBatch 9 Sec A Review 2.Bollam Pragnya 518No ratings yet

- SynopsisDocument4 pagesSynopsisZishan KhanNo ratings yet

- Facial Keypoint Recognition: Under The Guidance Of: Prof Amitabha MukerjeeDocument8 pagesFacial Keypoint Recognition: Under The Guidance Of: Prof Amitabha MukerjeeRohitSansiyaNo ratings yet

- Supervised Learning Based Approach To Aspect Based Sentiment AnalysisDocument5 pagesSupervised Learning Based Approach To Aspect Based Sentiment AnalysisShamsul BasharNo ratings yet

- Lecture 5 Emerging TechnologyDocument20 pagesLecture 5 Emerging TechnologyGeorge KomehNo ratings yet

- Case Studies 1,2,3Document6 pagesCase Studies 1,2,3Muhammad aliNo ratings yet

- Report - EC390Document7 pagesReport - EC390Shivam PrajapatiNo ratings yet

- Gender and Age DetectionDocument9 pagesGender and Age DetectionVarun KumarNo ratings yet

- 3.2 PreprocessingDocument10 pages3.2 PreprocessingALNATRON GROUPSNo ratings yet

- Conversion of Sign Language To Text: For Dumb and DeafDocument26 pagesConversion of Sign Language To Text: For Dumb and DeafArbaz HashmiNo ratings yet

- 2017project PaperDocument5 pages2017project PaperÂjáyNo ratings yet

- Sign Language Recognition Using CNNsDocument7 pagesSign Language Recognition Using CNNs陳子彤No ratings yet

- Report PDFDocument29 pagesReport PDFSudarshan GopalNo ratings yet

- Zhang Adding Conditional Control To Text-to-Image Diffusion Models ICCV 2023 PaperDocument12 pagesZhang Adding Conditional Control To Text-to-Image Diffusion Models ICCV 2023 PaperAgraNo ratings yet

- Facial Expression Recognition Based On Tensorflow PlatformDocument4 pagesFacial Expression Recognition Based On Tensorflow PlatformYapo cedric othniel AtseNo ratings yet

- Deep LearningDocument9 pagesDeep LearningAnonymous xMYE0TiNBcNo ratings yet

- First Progress Report FinalDocument12 pagesFirst Progress Report FinalShivaniNo ratings yet

- Sign Language Recognition: Guide: G.Vihari SirDocument31 pagesSign Language Recognition: Guide: G.Vihari SirSyamnadh UppalapatiNo ratings yet

- Hand Gesture Recognition2Document5 pagesHand Gesture Recognition2Arish KhanNo ratings yet

- Exploiting Deep Learning For Persian Sentiment AnalysisDocument8 pagesExploiting Deep Learning For Persian Sentiment AnalysisAyon DattaNo ratings yet

- Voice Recognizationusing SVMand ANNDocument6 pagesVoice Recognizationusing SVMand ANNZinia RahmanNo ratings yet

- U1 NLP App SolvedDocument26 pagesU1 NLP App SolvedArunaNo ratings yet

- Department of Information Science and Engineering Technical Seminar (18Css84) Convolutional Neural NetworksDocument15 pagesDepartment of Information Science and Engineering Technical Seminar (18Css84) Convolutional Neural NetworksS VarshithaNo ratings yet

- Synopsis ReportDocument7 pagesSynopsis ReportShubham SarswatNo ratings yet

- Lip Reading Word Classification: Abiel Gutierrez Stanford University Zoe-Alanah Robert Stanford UniversityDocument9 pagesLip Reading Word Classification: Abiel Gutierrez Stanford University Zoe-Alanah Robert Stanford UniversityRoopali ChavanNo ratings yet

- Deep Learning Model Work FlowDocument2 pagesDeep Learning Model Work FlowAkasha CheemaNo ratings yet

- FML CepDocument10 pagesFML Cepdeepthi kolliparaNo ratings yet

- Major Project On: "Age and Gender Detection Master''Document28 pagesMajor Project On: "Age and Gender Detection Master''Vijay LakshmiNo ratings yet

- Acoustic Detection of Drone:: Introduction: in Recent YearsDocument6 pagesAcoustic Detection of Drone:: Introduction: in Recent YearsLALIT KUMARNo ratings yet

- Hand Gesture Recognition Using Matlab2Document30 pagesHand Gesture Recognition Using Matlab2Rana PrathapNo ratings yet

- Sign Language Recognition Using Python and OpenCVDocument22 pagesSign Language Recognition Using Python and OpenCVpradip suryawanshi100% (1)

- Realtime Sign Language Gesture Word Recognition From Video Seque 2018Document10 pagesRealtime Sign Language Gesture Word Recognition From Video Seque 2018Ridwan EinsteinsNo ratings yet

- Deception & Detection-On Amazon Reviews DatasetDocument9 pagesDeception & Detection-On Amazon Reviews Datasetyavar khanNo ratings yet

- A Knowledge-Driven Approach To Activity Recognition in SmartDocument27 pagesA Knowledge-Driven Approach To Activity Recognition in SmartVPLAN INFOTECHNo ratings yet

- Advanced Skin Category Prediction System For Cosmetic Suggestion Using Deep Convolution Neural Network Report FinalDocument52 pagesAdvanced Skin Category Prediction System For Cosmetic Suggestion Using Deep Convolution Neural Network Report Finalajeyanajeyan3No ratings yet

- IEEE Format 1Document4 pagesIEEE Format 1Tech BondNo ratings yet

- CISC 6080 Capstone Project in Data ScienceDocument9 pagesCISC 6080 Capstone Project in Data ScienceYepu WangNo ratings yet

- DL NotesDocument28 pagesDL NotesjainayushtechNo ratings yet

- MNISTDocument3 pagesMNISTanushajNo ratings yet

- Level Set Segmentation ThesisDocument4 pagesLevel Set Segmentation Thesistiffanylovecleveland100% (2)

- Mnist Handwritten Digit ClassificationDocument26 pagesMnist Handwritten Digit ClassificationRaina SrivastavNo ratings yet

- ML ConceptsDocument2 pagesML ConceptsHari Sree. MNo ratings yet

- Inpainting and Denoising ChallengesFrom EverandInpainting and Denoising ChallengesSergio EscaleraNo ratings yet

- DATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABNo ratings yet

- Abhay Gahirwar Hospital TrainingDocument29 pagesAbhay Gahirwar Hospital TrainingjosephNo ratings yet

- Mini Project ReportDocument29 pagesMini Project ReportjosephNo ratings yet

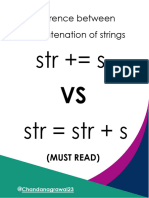

- + Vs + in StringsDocument6 pages+ Vs + in StringsjosephNo ratings yet

- Ritik Verma ResumeDocument1 pageRitik Verma ResumejosephNo ratings yet

- Weather ForecastDocument11 pagesWeather ForecastjosephNo ratings yet

- Mini Project ReportDocument29 pagesMini Project ReportjosephNo ratings yet

- Python CkanDocument73 pagesPython Ckanjamunjiastemal007No ratings yet

- 2019 Summer Model Answer Paper (Msbte Study Resources)Document33 pages2019 Summer Model Answer Paper (Msbte Study Resources)Neha AnkushraoNo ratings yet

- JavascriptDocument145 pagesJavascriptsarthak pasrichaNo ratings yet

- CS 3340 Written Assignment Unit 5Document4 pagesCS 3340 Written Assignment Unit 5pohambadanielNo ratings yet

- VDR Operating ManualDocument32 pagesVDR Operating ManualEnder BolatNo ratings yet

- Product Specification: TFT-LCD Open CellDocument22 pagesProduct Specification: TFT-LCD Open CellMahmoud Digital-DigitalNo ratings yet

- Yeferson Sierra CCNPv7.1 SWITCH Lab6-1 FHRP HSRP VRRP STUDENTDocument41 pagesYeferson Sierra CCNPv7.1 SWITCH Lab6-1 FHRP HSRP VRRP STUDENTSebastianBarreraNo ratings yet

- A67 Vignesh (INS)Document34 pagesA67 Vignesh (INS)Tejas GuptaNo ratings yet

- Course ReactjsDocument137 pagesCourse ReactjsAIT SALAH MassinissaNo ratings yet

- Unit-4 Python PDFDocument26 pagesUnit-4 Python PDF21bit057No ratings yet

- Saurabh SinghDocument6 pagesSaurabh SinghNikita YadavNo ratings yet

- New Cloud Computing PPT OmDocument25 pagesNew Cloud Computing PPT OmShreya SharmaNo ratings yet

- ABIC2 Datasheet PreviewDocument2 pagesABIC2 Datasheet Previewmohamed.khalidNo ratings yet

- SOLIDserver Administrator Guide-7.3Document1,447 pagesSOLIDserver Administrator Guide-7.3Kenirth L100% (1)

- Wi - Fi Control Robot Using Node MCU: January 2018Document5 pagesWi - Fi Control Robot Using Node MCU: January 2018NayaaNo ratings yet

- Ricoh M 2700 M 2701: Digital B&W Multi Function PrinterDocument3 pagesRicoh M 2700 M 2701: Digital B&W Multi Function PrinterAli NasipNo ratings yet

- Electrosurgery AnalyzerDocument2 pagesElectrosurgery AnalyzerMariaaaNo ratings yet

- Esp32-Mini-1 Datasheet enDocument32 pagesEsp32-Mini-1 Datasheet enMr GhostNo ratings yet

- Pan Os New FeaturesDocument156 pagesPan Os New Featuresalways_redNo ratings yet

- ACR II Open Protocol Communication ManualDocument11 pagesACR II Open Protocol Communication ManualCarlos RinconNo ratings yet

- ReportDocument40 pagesReportYillmarSilkerNo ratings yet

- SoftwareDocument2 pagesSoftwaregeethakaniNo ratings yet

- FISAC-2-DSP Take Home AssignmentDocument1 pageFISAC-2-DSP Take Home AssignmentDpt HtegnNo ratings yet

- JavaScript BSC Unit 3.1Document7 pagesJavaScript BSC Unit 3.1Nikhil dhanplNo ratings yet

- SAP HANA Security Checklists and Recommendations enDocument36 pagesSAP HANA Security Checklists and Recommendations engsanjeevaNo ratings yet

- Printing Japanese Characters in C Program - Stack OverflowDocument3 pagesPrinting Japanese Characters in C Program - Stack OverflowFabio CNo ratings yet

- 4th Year CS I Semester ScheduleDocument2 pages4th Year CS I Semester Scheduleadu gNo ratings yet

- Computer and Operating SystemsDocument213 pagesComputer and Operating SystemsDrabrajib Hazarika100% (3)

Project Writeup

Project Writeup

Uploaded by

josephOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Project Writeup

Project Writeup

Uploaded by

josephCopyright:

Available Formats

SIGN LANGUAGE RECOGNITION SYSTEM

Team Members:

Ankit Raj (2001920130024)

Harshit Mishra (2001920130068)

Aditya Kumar (2001920130011)

Aarti (2001920130002)

Objective: Our solution aims to help deaf people, and others who rely on signing, communicate with people who do not understand American Sign Language (ASL). It will classify the hand gestures used for fingerspelling in ASL.

Technology: Artificial Intelligence, Image Processing, Machine Learning, OpenCV, TensorFlow.

Problem Statement: Communication barriers persist for deaf and mute individuals because few people in the wider community understand sign languages such as American Sign Language (ASL). Our project aims not only to overcome these obstacles but also to encourage a more inclusive society in which communication is accessible and understandable for everyone.

Proposed Solution: Traditional methods for bridging the gap between people who sign and people who do not often lack efficiency and accessibility. The proposed Sign Language Recognition System is a user-friendly, real-time system that translates American Sign Language (ASL) fingerspelling immediately, reducing communication barriers.

Methodology:

Dataset Collection: Gather a comprehensive dataset of ASL gestures. Ensure diversity in hand shapes, orientations, and backgrounds. Annotate each image in the dataset with the corresponding ASL label.

Data Preprocessing: Resize all images to a consistent size to maintain uniformity. Normalize pixel values to a common scale (e.g., 0 to 1) for better convergence during training. Augment the dataset with techniques like rotation, flipping, and scaling to improve model generalization.
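The preprocessing steps above can be sketched with NumPy alone. This is a minimal illustration, not the project's actual code: the function names, the 64-pixel target size, and the zoom factor are assumptions, and `resize_nearest` is a simple stand-in for a library call such as `cv2.resize`.

```python
import numpy as np


def resize_nearest(image, size):
    """Nearest-neighbour resize to (size, size); a stand-in for cv2.resize."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size   # source row for each output row
    cols = np.arange(size) * w // size   # source column for each output column
    return image[rows][:, cols]


def normalize(image):
    """Scale 8-bit pixel values into the range [0, 1]."""
    return image.astype(np.float32) / 255.0


def center_zoom(image, factor=1.25):
    """Scaling augmentation: crop the centre, then resize back to the original height."""
    h, w = image.shape[:2]
    ch, cw = int(h / factor), int(w / factor)
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = image[top:top + ch, left:left + cw]
    return resize_nearest(crop, h)       # assumes a square input image


def augment(image):
    """Return the original image plus a mirrored and a zoomed copy."""
    return [image, np.fliplr(image), center_zoom(image)]


# Hypothetical 100x80 grayscale frame standing in for a dataset image.
raw = (np.arange(100 * 80) % 256).astype(np.uint8).reshape(100, 80)
sample = normalize(resize_nearest(raw, 64))
variants = augment(sample)
```

Mirroring swaps the apparent handedness of a sign, so whether flipping is appropriate depends on how the dataset was collected.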

Data Splitting: Divide the dataset into training, validation, and testing sets to assess model performance accurately.
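One way to perform such a split is shown below using only the Python standard library. The 70/15/15 ratio, the fixed seed, and the file-name pattern are illustrative assumptions; the writeup does not specify them.

```python
import random


def split_dataset(samples, train=0.70, val=0.15, seed=42):
    """Shuffle the samples once, then cut them into train/validation/test parts."""
    items = list(samples)
    random.Random(seed).shuffle(items)   # fixed seed keeps the split reproducible
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    train_set = items[:n_train]
    val_set = items[n_train:n_train + n_val]
    test_set = items[n_train + n_val:]   # whatever remains is the test set
    return train_set, val_set, test_set


# Hypothetical file names standing in for labelled images.
files = [f"img_{i:03d}.png" for i in range(100)]
train_set, val_set, test_set = split_dataset(files)
```

Keeping the test set untouched until the end is what makes the final score a fair estimate of generalization.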

Feature Extraction: Utilize OpenCV for extracting relevant features from the images, such as contours, edges, or color histograms. Experiment with different feature extraction techniques to find the most effective representation of ASL gestures.
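To make two of these features concrete, here is a NumPy-only sketch of an edge-strength map and an intensity histogram. It is a simplified stand-in, not the OpenCV pipeline itself: in OpenCV the corresponding calls would be `cv2.Canny` for edges and `cv2.calcHist` for histograms. The function names and the bin count are assumptions.

```python
import numpy as np


def edge_magnitude(gray):
    """Approximate edge strength from finite-difference gradients."""
    gy, gx = np.gradient(gray.astype(np.float32))   # derivatives along rows, columns
    return np.hypot(gx, gy)


def intensity_histogram(gray, bins=16):
    """Histogram of 8-bit pixel intensities, normalised to sum to 1."""
    hist, _ = np.histogram(gray, bins=bins, range=(0, 256))
    return hist / hist.sum()


# Synthetic 8x8 image: a bright square on a dark background.
img = np.zeros((8, 8), dtype=np.uint8)
img[2:6, 2:6] = 255

edges = edge_magnitude(img)
hist = intensity_histogram(img)
```

The edge map is non-zero only around the border of the square, and the histogram has mass only in its first and last bins, which is the kind of compact description a classifier can consume.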

Model Selection: Choose a suitable deep learning architecture for image classification. Convolutional Neural Networks (CNNs) are commonly used for image-related tasks. Employ a pre-trained model or design a custom architecture based on the complexity of the ASL gestures.

Model Training: Use TensorFlow as the deep learning framework to train the selected model. Feed the preprocessed images into the model and optimize the model weights using a suitable optimizer.
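The model selection and training steps might look like the following small custom CNN in TensorFlow's Keras API. Everything specific here is an assumption for illustration: the 64x64 grayscale input, the layer sizes, the 26 output classes, and the Adam optimizer are not taken from the writeup. The shape helper shows how the feature map shrinks through the stack.

```python
def conv_out(size, kernel=3):
    """Output width of a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Output width of non-overlapping max pooling."""
    return size // pool


def flattened_features(input_size=64, filters=64):
    """Number of values entering the dense layer for the stack built below."""
    size = pool_out(conv_out(input_size))   # first conv + pool block
    size = pool_out(conv_out(size))         # second conv + pool block
    return size * size * filters


def build_cnn(num_classes=26, input_size=64):
    """Build and compile a small CNN (assumed architecture, not the authors')."""
    # Imported here so the shape helpers above work without TensorFlow installed.
    import tensorflow as tf

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(input_size, input_size, 1)),
        tf.keras.layers.Conv2D(32, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(64, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",   # expects integer class labels
        metrics=["accuracy"],
    )
    return model


n_features = flattened_features()
```

Training would then be a call such as `build_cnn().fit(x_train, y_train, validation_data=(x_val, y_val), epochs=10)`, where the array names and epoch count are placeholders.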

Validation and Evaluation: Evaluate the model on the validation set to monitor its performance during training. Use the test set to assess the generalization capabilities of the model. Employ metrics such as accuracy, precision, recall, and F1 score to evaluate the model's effectiveness.
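The metrics named above follow directly from counts of true positives, false positives, and false negatives. The sketch below computes them in plain Python for a single class; the function names and the toy labels are illustrative only.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def class_metrics(y_true, y_pred, label):
    """Precision, recall, and F1 score for one class, treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy example: one "A" is misread as "B".
y_true = ["A", "A", "B", "B", "C"]
y_pred = ["A", "B", "B", "B", "C"]

acc = accuracy(y_true, y_pred)
precision_b, recall_b, f1_b = class_metrics(y_true, y_pred, "B")
```

Here class "B" has perfect recall but a precision of two thirds, because one "A" was wrongly predicted as "B". Per-class figures like these reveal which letters the model confuses, which overall accuracy alone hides.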

Integration with OpenCV: Integrate the trained model with OpenCV for real-time image capture and prediction.
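A real-time loop along these lines could be structured as follows. This is a sketch under several assumptions: a Keras-style `model` with a `predict` method, a 64x64 grayscale input, labels ordered A to Z, and a 0.5 confidence threshold, none of which are specified in the writeup. The label-selection logic is kept separate from the camera loop so it can be checked without a webcam.

```python
import numpy as np

# Assumed label order: one class per letter, A to Z.
LABELS = [chr(ord("A") + i) for i in range(26)]


def top_label(probabilities, labels=LABELS, threshold=0.5):
    """Return the most likely label, or None if the model is not confident enough."""
    idx = int(np.argmax(probabilities))
    if probabilities[idx] < threshold:
        return None
    return labels[idx]


def run_webcam(model, input_size=64):
    """Capture frames, classify each one, and overlay the predicted letter."""
    import cv2  # imported here so top_label can be used without OpenCV installed

    cap = cv2.VideoCapture(0)            # default camera
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            patch = cv2.resize(gray, (input_size, input_size))
            patch = patch.astype("float32") / 255.0
            # Add batch and channel axes: (1, size, size, 1).
            probs = model.predict(patch[None, ..., None], verbose=0)[0]
            text = top_label(probs) or "?"
            cv2.putText(frame, text, (10, 30), cv2.FONT_HERSHEY_SIMPLEX,
                        1.0, (0, 255, 0), 2)
            cv2.imshow("ASL fingerspelling", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):   # press q to quit
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()


# Quick check of the label logic with made-up probabilities.
confident = np.zeros(26)
confident[2] = 0.9
uncertain = np.full(26, 1 / 26)
```

The frame preprocessing inside the loop should match whatever was applied to the training images; otherwise predictions will degrade.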

Deployment: Deploy the Sign Language Recognition System to the desired platform, considering factors like performance, accessibility, and user interface requirements.
