
© March 2022| IJIRT | Volume 8 Issue 10 | ISSN: 2349-6002

Well-Posed Learning Problems and Designing Learning System

Dr. Kothuri Parashu Ramulu¹, Dr. Jogannagari Malla Reddy²

¹ Associate Professor, Indur Institute of Engineering & Technology

² Professor, Mahaveer Institute of Science & Technology

Abstract—Machine learning is the study of computer

algorithms that can improve automatically through

experience and by the use of data. It is seen as a part of

artificial intelligence. A computer program is said to

learn from experience with respect to some set of tasks

and performance measure , if its performance at set of

tasks improves with experience. A well-defined learning

problem will have the features like class of tasks, the

measure of performance to be improved, and the source

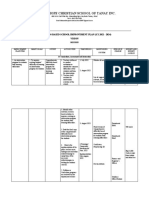

of experience examples. To get a successful learning Figure 1.1

system, it should be designed properly, for a proper A handwriting recognition learning problem: Task

design several steps may be followed for perfect and T→recognizing and classifying handwritten words

efficient system.

within images, Performance measure P→ percent of

words correctly classified, Training experience E→a

Index Terms— Direct feedback, Indirect feedback, database of handwritten words with given

Estimating Training Values, LMS, Performance System, classifications.

Generalizer, Critic, Experiment Generator. A robot driving learning problem: Task T→ driving on

public four-lane highways using vision sensors [2],

I. INTRODUCTION Performance measure P→average distance travelled

before an error (as judged by human overseer)

A computer program is said to learn from experience

Training experience E→ a sequence of images and

E with respect to some set of tasks T and performance

steering commands recorded while observing a human

measure P, if its performance at set of tasks in T, as

driver

measured by P, improves with experience E. A well-

defined learning problem, have to identify three II. DESIGNING LEARNING SYSTEM

features: The class of tasks, the measure of

To get a successful learning system, it should be

performance to be improved, the source of

designed properly, for a proper design several steps

experience Examples [3]. The various examples are

may be followed for perfect and efficient system [1].

Checkers game: A computer program that learns to

The basic design issues and approaches to machine

play checkers might improve its performance as

learning are illustrated by designing a program to learn

measured by its ability to win at the class of tasks

to play checkers, with the goal of entering it in the

involving playing checkers games, through experience

world checkers tournament

obtained by playing games against it.

1. Choosing the Training Experience

A checkers learning problem: Task T→playing

2. Choosing the Target Function

checkers, Performance measure P→ percent of games

3. Choosing a Representation for the Target

won against opponents, Training experience E→

Function

playing practice games against itself. The checkers

4. Choosing a Function Approximation Algorithm

game board will be as in figure 1.1.

a. Estimating training values

b. Adjusting the weights

5. The Final Design
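The learning problems described in Section I all share the same (T, P, E) structure, and that triple is what the design steps listed above start from. As a minimal sketch, the three examples can be written down as plain records. The class name `LearningProblem` and the method `is_well_posed` are illustrative assumptions and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LearningProblem:
    """A learning problem stated as a (T, P, E) triple (illustrative only)."""
    task: str                  # T: the class of tasks
    performance_measure: str   # P: the measure of performance to be improved
    training_experience: str   # E: the source of experience

    def is_well_posed(self) -> bool:
        """Return True when all three features have been specified."""
        parts = (self.task, self.performance_measure, self.training_experience)
        return all(part.strip() for part in parts)


CHECKERS = LearningProblem(
    task="playing checkers",
    performance_measure="percent of games won against opponents",
    training_experience="playing practice games against itself",
)

HANDWRITING = LearningProblem(
    task="recognizing and classifying handwritten words within images",
    performance_measure="percent of words correctly classified",
    training_experience="a database of handwritten words with given classifications",
)

ROBOT_DRIVING = LearningProblem(
    task="driving on public four-lane highways using vision sensors",
    performance_measure="average distance travelled before an error",
    training_experience="images and steering commands recorded from a human driver",
)
```

A problem statement that leaves out any of the three parts, for example one with an empty performance measure, is reported as not well posed by this check. The check only tests that each part is present; it says nothing about whether the chosen measure or experience is a good one.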

IJIRT 154128 INTERNATIONAL JOURNAL OF INNOVATIVE RESEARCH IN TECHNOLOGY 166


A diagrammatic representation of the design of a learning system is shown in Figure 2.1.

Figure 2.1

III. CHOOSING THE TRAINING EXPERIENCE

The first design step is to choose the type of training experience from which the system will learn. The type of training experience available can have a significant impact on the success or failure of the learning system. Three attributes of the training experience matter.

A. Whether the training experience provides direct or indirect feedback regarding the choices made by the performance system

To do a task T well, performance P needs to be good, which in turn requires good experience E. Good training gives good experience. Training is of two types: direct feedback training and indirect feedback training. Direct feedback gives the result of each move immediately (like driving a car with a coach sitting beside the learner), whereas indirect feedback gives only hints about the moves (like learning to drive by listening to recorded sessions). In checkers, direct feedback would consist of individual board states with the correct move for each, whereas indirect feedback consists of move sequences and the final outcomes of the games played. The learner can select direct feedback or indirect feedback.

B. The degree to which the learner controls the sequence of training examples

This attribute concerns the extent to which the learner can control the sequence of training examples. For example, the learner may drive the car with the full help of the trainer, with partial help from the trainer, or without any help from the trainer.

C. How well it represents the distribution of examples over which the final system performance P must be measured

The attributes of choosing the training experience are represented diagrammatically in Figure 2.2.

IV. CHOOSING THE TARGET FUNCTION

The next design step is to determine exactly what type of knowledge will be learned and how this will be used by the performance program.

Consider the checkers problem. Let us define the target value V(b) for an arbitrary board state b in the set B of legal board states, as follows:

1. If b is a final board state that is won, then V(b) = 100.
2. If b is a final board state that is lost, then V(b) = -100.
3. If b is a final board state that is drawn, then V(b) = 0.
4. If b is not a final state in the game, then V(b) = V(b'), where b' is the best final board state that can be achieved starting from b and playing optimally until the end of the game (assuming the opponent plays optimally as well).

While this recursive definition specifies a value of V(b) for every board state b, it is not usable by our checkers player because it is not efficiently computable. Except for the trivial cases (cases 1-3) in which the game has already ended, determining the value of V(b) for a particular board state requires (case 4) searching ahead for the optimal line of play, all the way to the end of the game. Because this definition is not efficiently computable by our checkers-playing program, we say that it is a nonoperational definition. The goal of learning in this case is to discover an operational description of V; that is, a description that can be used by the checkers-playing program to evaluate states and select moves within realistic time bounds. Thus, we have reduced the learning task in this case to the problem of discovering an operational description of the ideal target function V [4].

It may be very difficult in general to learn such an operational form of V perfectly. In fact, we often expect learning algorithms to acquire only some approximation to the target function, and for this reason the process of learning the target function is often called function approximation. In the current discussion we will use the symbol v̂ to refer to the function that is actually learned by our program, to distinguish it from the ideal target function V.

V. CHOOSING A REPRESENTATION FOR THE TARGET FUNCTION


Let us choose a simple representation: for any given board state, the function v̂ will be calculated as a linear combination of the following board features:

1. x1: the number of black pieces on the board
2. x2: the number of red pieces on the board
3. x3: the number of black kings on the board
4. x4: the number of red kings on the board
5. x5: the number of black pieces threatened by red (i.e., which can be captured on red's next turn)
6. x6: the number of red pieces threatened by black

Thus, our learning program will represent v̂(b) as a linear function of the form

$$\hat{v}(b) = w_0 + w_1 x_1 + w_2 x_2 + w_3 x_3 + w_4 x_4 + w_5 x_5 + w_6 x_6$$

where w0 through w6 are numerical coefficients, or weights, to be chosen by the learning algorithm. Learned values for the weights w1 through w6 will determine the relative importance of the various board features in determining the value of the board, whereas the weight w0 will provide an additive constant to the board value.

To summarize our design choices thus far, we have elaborated the original formulation of the learning problem by choosing a type of training experience, a target function to be learned, and a representation for this target function [8]. Our elaborated learning task is now:

Partial design of a checkers learning program:

Task T: playing checkers

Performance measure P: percent of games won in the world tournament

Training experience E: games played against itself

Target function: V: Board → R

Target function representation:

$$\hat{v}(b) = w_0 + w_1 x_1 + w_2 x_2 + w_3 x_3 + w_4 x_4 + w_5 x_5 + w_6 x_6$$

The first three items above correspond to the specification of the learning task, whereas the final two items constitute design choices for the implementation of the learning program. Notice that the net effect of this set of design choices is to reduce the problem of learning a checkers strategy to the problem of learning values for the coefficients w0 through w6 in the target function representation.

VI. CHOOSING A FUNCTION APPROXIMATION ALGORITHM

A set of training examples is required to learn the target function v̂. Each training example describes a specific board state b and the training value Vtrain(b) for b; that is, each training example is an ordered pair of the form (b, Vtrain(b)). For example, the following training example describes a board state b in which black has won the game (x2 = 0 indicates that red has no remaining pieces), so the training value Vtrain(b) is +100.

((x1=3, x2=0, x3=1, x4=0, x5=0, x6=0), +100)

Now, we describe a procedure that first derives such training examples from the indirect training experience available to the learner, then adjusts the weights wi to best fit these training examples.

A. Estimating Training Values

It is easy to assign a value to board states that correspond to the end of the game (0, -100 or +100) [5], but it is harder to estimate the training value Vtrain(b) of an intermediate board state b. A simple approach is to assign v̂(Successor(b)) as Vtrain(b), where v̂ is the learner's current approximation to V and Successor(b) denotes the next board state following b for which it is again the program's turn to move.

Rule for estimating the training values:

$$V_{train}(b) \leftarrow \hat{v}(\mathrm{Successor}(b))$$

B. Adjusting the weights

All that remains is to specify the learning algorithm for choosing the weights wi to best fit the set of training examples {(b, Vtrain(b))}. As a first step we must define what we mean by the best fit to the training data. One common approach is to define the best hypothesis, or set of weights, as that which minimizes the squared error [6] E between the training values and the values predicted by the hypothesis v̂:

$$E = \sum_{(b,\, V_{train}(b)) \,\in\, \text{training examples}} \left(V_{train}(b) - \hat{v}(b)\right)^2$$

Thus, we seek the weights, or equivalently the v̂, that minimize E for the observed training examples. The LMS (least mean squares) algorithm is defined as follows. For each training example (b, Vtrain(b)):

- Use the current weights to calculate v̂(b).
- For each weight wi, update it as

$$w_i \leftarrow w_i + \eta \left(V_{train}(b) - \hat{v}(b)\right) x_i$$

where η is a small constant (for example, 0.1) that moderates the size of the weight update.

VII. THE FINAL DESIGN

The final design of the checkers learning system can be described by four distinct program modules that represent the central components in many learning systems. These four modules are summarized in Figure 7.1.

The Performance System is the module that must solve the given performance task, in this case playing checkers, by using the learned target function(s) [7].
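To make these pieces concrete, the following minimal sketch combines the linear evaluation function from Section V, one LMS weight update from Section VI, and the way a Performance System might use the learned function to select a move. Several things here are assumptions rather than details from the paper: the function names `v_hat`, `lms_update` and `choose_move`, the learning rate `eta`, the convention that the constant weight w0 is paired with an input x0 = 1, and the update rule itself, which is the standard LMS step w_i ← w_i + η (V_train(b) − v̂(b)) x_i. Boards are represented only by their six feature values, so no actual checkers rules are implemented.

```python
from typing import List, Sequence

Features = Sequence[float]  # (x1, ..., x6): the six board features of Section V


def v_hat(weights: Sequence[float], features: Features) -> float:
    """Linear evaluation: w0 + w1*x1 + ... + w6*x6."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], features))


def lms_update(weights: Sequence[float], features: Features,
               v_train: float, eta: float = 0.01) -> List[float]:
    """Return new weights after one LMS step on a single training example.

    Each weight moves in proportion to the prediction error and to the
    value of its own feature (assumed rule, see the lead-in above).
    """
    error = v_train - v_hat(weights, features)
    inputs = [1.0, *features]  # x0 = 1 pairs with the constant weight w0
    return [w + eta * error * x for w, x in zip(weights, inputs)]


def choose_move(weights: Sequence[float],
                successors: Sequence[Features]) -> int:
    """Return the index of the successor board with the highest v_hat."""
    return max(range(len(successors)),
               key=lambda i: v_hat(weights, successors[i]))


if __name__ == "__main__":
    weights = [0.0] * 7
    won_board = (3, 0, 1, 0, 0, 0)  # the training example from Section VI
    for _ in range(200):
        weights = lms_update(weights, won_board, 100.0)
    print(v_hat(weights, won_board))
    print(choose_move(weights, [(2, 0, 0, 0, 0, 0), won_board]))
```

In this sketch the training value of a final board is given directly. For an intermediate board it would instead be taken from `v_hat` applied to the successor board, following the estimation rule in Section VI.A. A small `eta` keeps each step stable when feature values are large, at the cost of needing more updates.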

Figure 7.1

The Critic takes as input the history or trace of the game and produces as output a set of training examples of the target function. The Generalizer takes as input the training examples and produces an output hypothesis that is its estimate of the target function. The Experiment Generator takes as input the current hypothesis (the currently learned function) and outputs a new problem (i.e., an initial board state) for the Performance System to explore. Its role is to pick new practice problems that will maximize the learning rate of the overall system.

VIII. CONCLUSION

Machine learning, a part of artificial intelligence, is the study of computer algorithms that improve automatically through experience and the use of data. A computer program is said to learn from experience with respect to some set of tasks and a performance measure if its performance at those tasks improves with experience. A well-defined learning problem has three features: the class of tasks, the measure of performance to be improved, and the source of experience. To obtain a successful learning system, it must be designed properly; following a sequence of design steps helps to produce an effective and efficient system.

ACKNOWLEDGMENT

We are thankful to our friends, faculty members and management for their help in writing this paper.

REFERENCES

[1] Luis Alfaro, Claudia Rivera, Jorge A. Luna-Urquizo, "Using Project-based Learning in a Hybrid e-Learning System Model", International Journal of Advanced Computer Science and Applications, Vol. 10, No. 10, pp. 426-436, 2019.

[2] Markowska-Kaczmar U., Kwasnicka H., Paradowski M., "Intelligent Techniques in Personalization of Learning in e-Learning Systems", Studies in Computational Intelligence, Springer, Berlin, Heidelberg, Vol. 273, pp. 1-23, 2010.


[3] Etienne Wenger, "Artificial Intelligence and Tutoring Systems: Computational and Cognitive Approaches to the Communication of Knowledge", Morgan Kaufmann, Los Altos, San Francisco, CA, USA, 1987.

[4] Stellan Ohlsson, "Some principles of intelligent tutoring", Instructional Science, Vol. 3, No. 4, pp. 293-326, 1986.

[5] Mark Nichols, "A theory for eLearning", Journal of Educational Technology & Society, Vol. 6, No. 2, pp. 1-10, 2003.

[6] M. Alavi, "Computer mediated collaborative learning: An empirical evaluation", MIS Quart., Vol. 18, No. 2, pp. 159-174, 1994.

[7] Nicola Henze, Peter Dolog and Wolfgang Nejdl, "Reasoning and Ontologies for Personalized E-Learning in the Semantic Web", Journal of Educational Technology & Society, Vol. 7, No. 4, pp. 82-97, 2004.

[8] Jeroen J. G. van Merriënboer and Paul A. Kirschner, "Ten Steps to Complex Learning: A Systematic Approach to Four-Component Instructional Design", Taylor & Francis Group, 2017.

[9] Zhang, Ye Jun, and Bo Song, "A Personalized e-Learning System Based on GWT", In: International Conference on Education, Management, Commerce and Society, pp. 183-187, 2015.
