Project Coding-Manish Dwari 1807

Uploaded by soniakar3000

DATA SCIENCE PROJECT

Manish Dwari, 18047

Indian Institute of Science Education and Research (IISER) Berhampur

SUB: Introduction to Data Science (IDC 301)

TOPIC: Breast Cancer Wisconsin (Diagnostic) Data Analysis and Prediction

IMPORTING FUNCTIONS

In [1]: import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

In [2]: from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import confusion_matrix, classification_report,accuracy_score

from sklearn.preprocessing import StandardScaler

from sklearn import linear_model

from sklearn.model_selection import train_test_split

from sklearn import tree

from sklearn import svm

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2

LOADING AND VISUALISING DATA

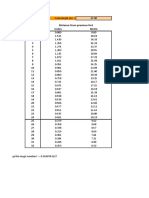

In [3]: data=pd.read_csv('data1.csv')

data.tail(10)

Out[3]: id diagnosis radius_mean texture_mean perimeter_mean area_mean smoothness_mean compactness_mean concavity_mean concave points_mean ... texture_worst perimeter_wor

559 925291 B 11.51 23.93 74.52 403.5 0.09261 0.10210 0.11120 0.04105 ... 37.16 82.2

560 925292 B 14.05 27.15 91.38 600.4 0.09929 0.11260 0.04462 0.04304 ... 33.17 100.2

561 925311 B 11.20 29.37 70.67 386.0 0.07449 0.03558 0.00000 0.00000 ... 38.30 75.1

562 925622 M 15.22 30.62 103.40 716.9 0.10480 0.20870 0.25500 0.09429 ... 42.79 128.7

563 926125 M 20.92 25.09 143.00 1347.0 0.10990 0.22360 0.31740 0.14740 ... 29.41 179.1

564 926424 M 21.56 22.39 142.00 1479.0 0.11100 0.11590 0.24390 0.13890 ... 26.40 166.1

565 926682 M 20.13 28.25 131.20 1261.0 0.09780 0.10340 0.14400 0.09791 ... 38.25 155.0

566 926954 M 16.60 28.08 108.30 858.1 0.08455 0.10230 0.09251 0.05302 ... 34.12 126.7

567 927241 M 20.60 29.33 140.10 1265.0 0.11780 0.27700 0.35140 0.15200 ... 39.42 184.6

568 92751 B 7.76 24.54 47.92 181.0 0.05263 0.04362 0.00000 0.00000 ... 30.37 59.1

10 rows × 33 columns

In [4]: data.shape

Out[4]: (569, 33)

In [5]: data['diagnosis'].tail()

Out[5]: 564 M

565 M

566 M

567 M

568 B

Name: diagnosis, dtype: object

In [6]: data.describe()

Out[6]: id radius_mean texture_mean perimeter_mean area_mean smoothness_mean compactness_mean concavity_mean concave points_mean symmetry_mean ... texture_worst

count 5.690000e+02 569.000000 569.000000 569.000000 569.000000 569.000000 569.000000 569.000000 569.000000 569.000000 ... 569.000000

mean 3.037183e+07 14.127292 19.289649 91.969033 654.889104 0.096360 0.104341 0.088799 0.048919 0.181162 ... 25.677223

std 1.250206e+08 3.524049 4.301036 24.298981 351.914129 0.014064 0.052813 0.079720 0.038803 0.027414 ... 6.146258

min 8.670000e+03 6.981000 9.710000 43.790000 143.500000 0.052630 0.019380 0.000000 0.000000 0.106000 ... 12.020000

25% 8.692180e+05 11.700000 16.170000 75.170000 420.300000 0.086370 0.064920 0.029560 0.020310 0.161900 ... 21.080000

50% 9.060240e+05 13.370000 18.840000 86.240000 551.100000 0.095870 0.092630 0.061540 0.033500 0.179200 ... 25.410000

75% 8.813129e+06 15.780000 21.800000 104.100000 782.700000 0.105300 0.130400 0.130700 0.074000 0.195700 ... 29.720000

max 9.113205e+08 28.110000 39.280000 188.500000 2501.000000 0.163400 0.345400 0.426800 0.201200 0.304000 ... 49.540000

8 rows × 32 columns

In [7]: print(data['diagnosis'].value_counts())

B 357

M 212

Name: diagnosis, dtype: int64

CHECKING FOR MISSING/NULL VALUES

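In [8]: print(data.isnull().isnull().sum()) #caution: the doubled .isnull() reports 0 for every column regardless of the data; data.isnull().sum() gives the true count of missing values per column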

id 0

diagnosis 0

radius_mean 0

texture_mean 0

perimeter_mean 0

area_mean 0

smoothness_mean 0

compactness_mean 0

concavity_mean 0

concave points_mean 0

symmetry_mean 0

fractal_dimension_mean 0

radius_se 0

texture_se 0

perimeter_se 0

area_se 0

smoothness_se 0

compactness_se 0

concavity_se 0

concave points_se 0

symmetry_se 0

fractal_dimension_se 0

radius_worst 0

texture_worst 0

perimeter_worst 0

area_worst 0

smoothness_worst 0

compactness_worst 0

concavity_worst 0

concave points_worst 0

symmetry_worst 0

fractal_dimension_worst 0

Unnamed: 32 0

dtype: int64
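A minimal sketch of why the check above prints 0 for every column. `isnull()` returns a boolean mask, and a boolean mask never contains missing values, so applying `isnull()` a second time marks every cell as `False`. The frame `toy` and its column names are hypothetical and stand in for the real data, including a fully empty column of the kind a trailing comma in a CSV header can produce.

```python
import numpy as np
import pandas as pd

# Hypothetical three-row frame: one partly missing column, one fully empty column.
toy = pd.DataFrame({
    "radius_mean": [17.99, 20.57, np.nan],
    "empty_col": [np.nan, np.nan, np.nan],
})

# Single isnull(): True where a cell is missing, so sum() counts missing cells.
true_missing = toy.isnull().sum()

# Doubled isnull(): the boolean mask itself has no missing cells, so every count is 0.
doubled = toy.isnull().isnull().sum()

print(true_missing.to_dict())
print(doubled.to_dict())
```

Running the single call on the real data before dropping columns would show whether 'Unnamed: 32' is actually empty.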

In [9]: data.drop(['id','Unnamed: 32'],axis=1,inplace=True)

In [10]: data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 569 entries, 0 to 568

Data columns (total 31 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 diagnosis 569 non-null object

1 radius_mean 569 non-null float64

2 texture_mean 569 non-null float64

3 perimeter_mean 569 non-null float64

4 area_mean 569 non-null float64

5 smoothness_mean 569 non-null float64

6 compactness_mean 569 non-null float64

7 concavity_mean 569 non-null float64

8 concave points_mean 569 non-null float64

9 symmetry_mean 569 non-null float64

10 fractal_dimension_mean 569 non-null float64

11 radius_se 569 non-null float64

12 texture_se 569 non-null float64

13 perimeter_se 569 non-null float64

14 area_se 569 non-null float64

15 smoothness_se 569 non-null float64

16 compactness_se 569 non-null float64

17 concavity_se 569 non-null float64

18 concave points_se 569 non-null float64

19 symmetry_se 569 non-null float64

20 fractal_dimension_se 569 non-null float64

21 radius_worst 569 non-null float64

22 texture_worst 569 non-null float64

23 perimeter_worst 569 non-null float64

24 area_worst 569 non-null float64

25 smoothness_worst 569 non-null float64

26 compactness_worst 569 non-null float64

27 concavity_worst 569 non-null float64

28 concave points_worst 569 non-null float64

29 symmetry_worst 569 non-null float64

30 fractal_dimension_worst 569 non-null float64

dtypes: float64(30), object(1)

memory usage: 137.9+ KB

In [11]: data.columns

Out[11]: Index(['diagnosis', 'radius_mean', 'texture_mean', 'perimeter_mean',

'area_mean', 'smoothness_mean', 'compactness_mean', 'concavity_mean',

'concave points_mean', 'symmetry_mean', 'fractal_dimension_mean',

'radius_se', 'texture_se', 'perimeter_se', 'area_se', 'smoothness_se',

'compactness_se', 'concavity_se', 'concave points_se', 'symmetry_se',

'fractal_dimension_se', 'radius_worst', 'texture_worst',

'perimeter_worst', 'area_worst', 'smoothness_worst',

'compactness_worst', 'concavity_worst', 'concave points_worst',

'symmetry_worst', 'fractal_dimension_worst'],

dtype='object')

DATA VISUALIZATION AND EXPLORATION GRAPHICALLY

In [12]: sns.countplot(x='diagnosis',data=data) #passing x as a keyword avoids the seaborn FutureWarning about positional arguments

Out[12]: <AxesSubplot:xlabel='diagnosis', ylabel='count'>

In [13]: cor=data.drop('diagnosis',axis=1).corr() #pairwise Pearson correlation of the 30 numeric features (the text label column is excluded)

cor

Out[13]: radius_mean texture_mean perimeter_mean area_mean smoothness_mean compactness_mean concavity_mean concave points_mean symmetry_mean fractal_dimensio

radius_mean 1.000000 0.323782 0.997855 0.987357 0.170581 0.506124 0.676764 0.822529 0.147741 -

texture_mean 0.323782 1.000000 0.329533 0.321086 -0.023389 0.236702 0.302418 0.293464 0.071401 -

perimeter_mean 0.997855 0.329533 1.000000 0.986507 0.207278 0.556936 0.716136 0.850977 0.183027 -

area_mean 0.987357 0.321086 0.986507 1.000000 0.177028 0.498502 0.685983 0.823269 0.151293 -

smoothness_mean 0.170581 -0.023389 0.207278 0.177028 1.000000 0.659123 0.521984 0.553695 0.557775

compactness_mean 0.506124 0.236702 0.556936 0.498502 0.659123 1.000000 0.883121 0.831135 0.602641

concavity_mean 0.676764 0.302418 0.716136 0.685983 0.521984 0.883121 1.000000 0.921391 0.500667

concave points_mean 0.822529 0.293464 0.850977 0.823269 0.553695 0.831135 0.921391 1.000000 0.462497

symmetry_mean 0.147741 0.071401 0.183027 0.151293 0.557775 0.602641 0.500667 0.462497 1.000000

fractal_dimension_mean -0.311631 -0.076437 -0.261477 -0.283110 0.584792 0.565369 0.336783 0.166917 0.479921

radius_se 0.679090 0.275869 0.691765 0.732562 0.301467 0.497473 0.631925 0.698050 0.303379

texture_se -0.097317 0.386358 -0.086761 -0.066280 0.068406 0.046205 0.076218 0.021480 0.128053

perimeter_se 0.674172 0.281673 0.693135 0.726628 0.296092 0.548905 0.660391 0.710650 0.313893

area_se 0.735864 0.259845 0.744983 0.800086 0.246552 0.455653 0.617427 0.690299 0.223970 -

smoothness_se -0.222600 0.006614 -0.202694 -0.166777 0.332375 0.135299 0.098564 0.027653 0.187321

compactness_se 0.206000 0.191975 0.250744 0.212583 0.318943 0.738722 0.670279 0.490424 0.421659

concavity_se 0.194204 0.143293 0.228082 0.207660 0.248396 0.570517 0.691270 0.439167 0.342627

concave points_se 0.376169 0.163851 0.407217 0.372320 0.380676 0.642262 0.683260 0.615634 0.393298

symmetry_se -0.104321 0.009127 -0.081629 -0.072497 0.200774 0.229977 0.178009 0.095351 0.449137

fractal_dimension_se -0.042641 0.054458 -0.005523 -0.019887 0.283607 0.507318 0.449301 0.257584 0.331786

radius_worst 0.969539 0.352573 0.969476 0.962746 0.213120 0.535315 0.688236 0.830318 0.185728 -

texture_worst 0.297008 0.912045 0.303038 0.287489 0.036072 0.248133 0.299879 0.292752 0.090651 -

perimeter_worst 0.965137 0.358040 0.970387 0.959120 0.238853 0.590210 0.729565 0.855923 0.219169 -

area_worst 0.941082 0.343546 0.941550 0.959213 0.206718 0.509604 0.675987 0.809630 0.177193 -

smoothness_worst 0.119616 0.077503 0.150549 0.123523 0.805324 0.565541 0.448822 0.452753 0.426675

compactness_worst 0.413463 0.277830 0.455774 0.390410 0.472468 0.865809 0.754968 0.667454 0.473200

concavity_worst 0.526911 0.301025 0.563879 0.512606 0.434926 0.816275 0.884103 0.752399 0.433721

concave points_worst 0.744214 0.295316 0.771241 0.722017 0.503053 0.815573 0.861323 0.910155 0.430297

symmetry_worst 0.163953 0.105008 0.189115 0.143570 0.394309 0.510223 0.409464 0.375744 0.699826

fractal_dimension_worst 0.007066 0.119205 0.051019 0.003738 0.499316 0.687382 0.514930 0.368661 0.438413

30 rows × 30 columns

In [14]: plt.figure(figsize=(20,10))

sns.heatmap(cor,annot=True) #STUDYING CORRELATION USING HEATMAP

Out[14]: <AxesSubplot:>
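The correlation table shows radius_mean, perimeter_mean and area_mean correlating above 0.98 with one another, which is expected because perimeter and area are functions of radius. The sketch below illustrates this on synthetic circles and lists strongly correlated pairs without reading them off a heatmap. The frame `toy`, the 0.9 cut-off and the sampled radius range are assumptions chosen for illustration, not values used in the notebook.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Synthetic radii; the range loosely follows the min/max of radius_mean shown earlier.
radius = rng.uniform(7.0, 28.0, size=500)
toy = pd.DataFrame({
    "radius": radius,
    "perimeter": 2 * np.pi * radius,       # exact linear function of radius
    "area": np.pi * radius ** 2,           # monotone, nearly linear over this range
    "noise": rng.normal(size=500),         # unrelated column for contrast
})

corr = toy.corr()

# Keep only the upper triangle so each pair appears once and the diagonal is skipped.
upper = np.triu(np.ones(corr.shape, dtype=bool), k=1)
pairs = corr.where(upper).stack()

# Pairs whose absolute correlation exceeds the (assumed) 0.9 cut-off.
strong = pairs[pairs.abs() > 0.9].sort_values(ascending=False)
print(strong)
```

The same upper-triangle filter can be applied to `cor` to list redundant feature pairs in the real data.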

In [15]: dt=data.iloc[:,:11].copy() #keeping only diagnosis and the ten *_mean features for clearer visualisation

dt.columns

Out[15]: Index(['diagnosis', 'radius_mean', 'texture_mean', 'perimeter_mean',

'area_mean', 'smoothness_mean', 'compactness_mean', 'concavity_mean',

'concave points_mean', 'symmetry_mean', 'fractal_dimension_mean'],

dtype='object')

In [16]: sns.pairplot(dt, hue='diagnosis')

Out[16]: <seaborn.axisgrid.PairGrid at 0x249e28ad3a0>

In [17]: for i in ('radius_mean', 'texture_mean', 'perimeter_mean',
                  'area_mean', 'smoothness_mean', 'compactness_mean', 'concavity_mean',
                  'concave points_mean', 'symmetry_mean', 'fractal_dimension_mean'):
             dt[i][dt['diagnosis']=='M'].plot.kde(title=i,c='r') #malignant density in red
             dt[i][dt['diagnosis']=='B'].plot.kde(title=i,c='g') #benign density in green
             plt.show()

In [18]: for i in ('radius_mean', 'texture_mean', 'perimeter_mean',
                  'area_mean', 'smoothness_mean', 'compactness_mean', 'concavity_mean',
                  'concave points_mean', 'symmetry_mean', 'fractal_dimension_mean'):
             dt[i].plot.kde(title=i) #density of each feature over both classes combined
             plt.show()

In [19]: sns.heatmap(dt.drop('diagnosis',axis=1).corr(),annot=True)

Out[19]: <AxesSubplot:>

PREPROCESSING AND PREDICTION

In [20]: data.head()

Out[20]: diagnosis radius_mean texture_mean perimeter_mean area_mean smoothness_mean compactness_mean concavity_mean concave points_mean symmetry_mean ... radius_worst texture_

0 M 17.99 10.38 122.80 1001.0 0.11840 0.27760 0.3001 0.14710 0.2419 ... 25.38

1 M 20.57 17.77 132.90 1326.0 0.08474 0.07864 0.0869 0.07017 0.1812 ... 24.99

2 M 19.69 21.25 130.00 1203.0 0.10960 0.15990 0.1974 0.12790 0.2069 ... 23.57

3 M 11.42 20.38 77.58 386.1 0.14250 0.28390 0.2414 0.10520 0.2597 ... 14.91

4 M 20.29 14.34 135.10 1297.0 0.10030 0.13280 0.1980 0.10430 0.1809 ... 22.54

5 rows × 31 columns

FEATURE SELECTION TO AVOID THE CURSE OF DIMENSIONALITY

In [21]: x=data.drop('diagnosis',axis=1)

y=data['diagnosis']

In [22]: bestfeatures = SelectKBest(score_func=chi2, k=16)

fit= bestfeatures.fit(x,y)

dfscores = pd.DataFrame(fit.scores_)

dfcolumns = pd.DataFrame(x.columns)

In [23]: fscores = pd.concat([dfcolumns,dfscores],axis=1)

fscores.columns = ['feat','Score']

In [24]: fscores

Out[24]: feat Score

0 radius_mean 266.104917

1 texture_mean 93.897508

2 perimeter_mean 2011.102864

3 area_mean 53991.655924

4 smoothness_mean 0.149899

5 compactness_mean 5.403075

6 concavity_mean 19.712354

7 concave points_mean 10.544035

8 symmetry_mean 0.257380

9 fractal_dimension_mean 0.000074

10 radius_se 34.675247

11 texture_se 0.009794

12 perimeter_se 250.571896

13 area_se 8758.504705

14 smoothness_se 0.003266

15 compactness_se 0.613785

16 concavity_se 1.044718

17 concave points_se 0.305232

18 symmetry_se 0.000080

19 fractal_dimension_se 0.006371

20 radius_worst 491.689157

21 texture_worst 174.449400

22 perimeter_worst 3665.035416

23 area_worst 112598.431564

24 smoothness_worst 0.397366

25 compactness_worst 19.314922

26 concavity_worst 39.516915

27 concave points_worst 13.485419

28 symmetry_worst 1.298861

29 fractal_dimension_worst 0.231522

In [25]: fscores.plot(kind='barh')

plt.show()

In [26]: print(fscores.nlargest(16,'Score'))

feat Score

23 area_worst 112598.431564

3 area_mean 53991.655924

13 area_se 8758.504705

22 perimeter_worst 3665.035416

2 perimeter_mean 2011.102864

20 radius_worst 491.689157

0 radius_mean 266.104917

12 perimeter_se 250.571896

21 texture_worst 174.449400

1 texture_mean 93.897508

26 concavity_worst 39.516915

10 radius_se 34.675247

6 concavity_mean 19.712354

25 compactness_worst 19.314922

27 concave points_worst 13.485419

7 concave points_mean 10.544035

In [27]: dp=data.drop(['smoothness_mean', 'compactness_mean', 'symmetry_mean',

'fractal_dimension_mean', 'texture_se', 'smoothness_se',

'compactness_se', 'concavity_se', 'concave points_se', 'symmetry_se',

'fractal_dimension_se', 'smoothness_worst', 'symmetry_worst',

'fractal_dimension_worst'],axis=1)

dp.columns

Out[27]: Index(['diagnosis', 'radius_mean', 'texture_mean', 'perimeter_mean',

'area_mean', 'concavity_mean', 'concave points_mean', 'radius_se',

'perimeter_se', 'area_se', 'radius_worst', 'texture_worst',

'perimeter_worst', 'area_worst', 'compactness_worst', 'concavity_worst',

'concave points_worst'],

dtype='object')

In [28]: dp.shape

Out[28]: (569, 17)
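The column list passed to `drop` above was typed by hand from the score table. A sketch of an alternative is to let the fitted selector report which columns it kept through `get_support()`. This example assumes scikit-learn's bundled copy of the dataset (`load_breast_cancer`), whose column names differ from the CSV (for example "worst area" instead of "area_worst"); the names `X_demo`, `y_demo`, `selector` and `X_reduced` are illustrative.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, chi2

# Bundled copy of the dataset as a DataFrame (an assumption; the notebook reads data1.csv).
bunch = load_breast_cancer(as_frame=True)
X_demo, y_demo = bunch.data, bunch.target

# Fit the same chi-squared selector with k=16.
selector = SelectKBest(score_func=chi2, k=16).fit(X_demo, y_demo)

# get_support() returns a boolean mask over the input columns.
selected = X_demo.columns[selector.get_support()]
X_reduced = X_demo[selected]

print(list(selected))
print(X_reduced.shape)
```

Because chi2 requires non-negative inputs, it is applied here to the raw features, as in the notebook, before any standardization.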

SPLITTING THE DATA INTO TRAIN AND TEST

In [29]: X=dp.drop('diagnosis',axis=1)

Y=dp['diagnosis']

Y=Y.map({'B':0,'M':1})

Y.tail()

Out[29]: 564 1

565 1

566 1

567 1

568 0

Name: diagnosis, dtype: int64

In [30]: X.shape

Out[30]: (569, 16)

In [31]: X_train,X_test,Y_train,Y_test=train_test_split(X,Y,test_size=0.2,random_state=0)

print(X_train.var())

radius_mean 12.498873

texture_mean 17.296973

perimeter_mean 591.122540

area_mean 128283.011726

concavity_mean 0.006053

concave points_mean 0.001490

radius_se 0.080867

perimeter_se 4.152441

area_se 2278.193100

radius_worst 23.917513

texture_worst 37.217703

perimeter_worst 1147.121834

area_worst 343859.863025

compactness_worst 0.023442

concavity_worst 0.039855

concave points_worst 0.004267

dtype: float64

In [32]: s=StandardScaler() #standardizing each feature to zero mean and unit variance, using statistics learned from the training split only

X_train=s.fit_transform(X_train)

X_test=s.transform(X_test)
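The variances printed above range from about 0.001 to over 300000, which is why the features are standardized before fitting scale-sensitive models. The sketch below checks what `fit_transform` on the training split followed by `transform` on the test split does: the test split is shifted and scaled with the training mean and standard deviation, not its own. The arrays are synthetic and their scale is an assumption chosen to resemble an area-like feature.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic single-feature splits on an area-like scale (illustrative values only).
train = rng.normal(loc=650.0, scale=350.0, size=(100, 1))
test = rng.normal(loc=650.0, scale=350.0, size=(20, 1))

scaler = StandardScaler().fit(train)   # learns mean and std from the training split
train_z = scaler.transform(train)
test_z = scaler.transform(test)        # reuses the training statistics

# Manual equivalent: StandardScaler uses the population standard deviation (ddof=0).
manual = (test - train.mean(axis=0)) / train.std(axis=0)

print(train_z.mean(), train_z.std())
print(np.allclose(test_z, manual))
```

Standardization rescales the data but does not make it normally distributed; the shape of each feature's distribution is unchanged.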

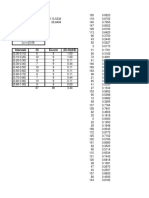

APPLYING DECISION TREE CLASSIFIER MODEL

In [33]: mod=DecisionTreeClassifier()

mod.fit(X_train,Y_train)

p=mod.predict(X_test)

In [34]: print(classification_report(Y_test,p))

precision recall f1-score support

0 0.97 0.96 0.96 67

1 0.94 0.96 0.95 47

accuracy 0.96 114

macro avg 0.95 0.96 0.95 114

weighted avg 0.96 0.96 0.96 114

In [35]: cm=confusion_matrix(Y_test,p)

sns.heatmap(cm,annot=True)

Out[35]: <AxesSubplot:>

In [36]: print(accuracy_score(Y_test,p))

0.956140350877193
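The report and accuracy above can be reproduced by hand from confusion-matrix counts. The counts below are inferred from the printed report (supports of 67 and 47, five errors out of 114) and were not read from the heatmap itself, so treat them as an assumption that happens to match every printed figure.

```python
# Inferred counts; rows are true classes, columns are predictions (0 = benign, 1 = malignant).
tn, fp = 64, 3   # benign cases: correctly predicted, wrongly flagged as malignant
fn, tp = 2, 45   # malignant cases: missed, correctly predicted

total = tn + fp + fn + tp
accuracy = (tp + tn) / total

precision_malignant = tp / (tp + fp)   # of predicted malignant, share truly malignant
recall_malignant = tp / (tp + fn)      # of truly malignant, share detected
precision_benign = tn / (tn + fn)
recall_benign = tn / (tn + fp)

print(round(accuracy, 4))
print(round(precision_malignant, 2), round(recall_malignant, 2))
print(round(precision_benign, 2), round(recall_benign, 2))
```

For a diagnostic task, recall on the malignant class is often the figure to watch, since a false negative corresponds to a missed malignant case.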

In [63]: import graphviz

from IPython.display import Image

In [38]: dot_data = tree.export_graphviz(mod,out_file=None,feature_names=X.columns,
                                         class_names=['B','M'], #one label per class, in the order 0 (benign), 1 (malignant)
                                         filled=True,rounded=True,special_characters=True)
         g = graphviz.Source(dot_data)
         g #plotting the decision tree

Out[38]: [Figure: rendered decision tree. Root node: concave points_worst ≤ 0.428 (gini = 0.462, samples = 455, value = [290, 165]). Second level: area_worst ≤ 0.13 on the True branch (gini = 0.146, samples = 303, value = [279, 24]) and area_worst ≤ -0.259 on the False branch (gini = 0.134, samples = 152, value = [11, 141]). Thresholds are in standardized feature units.]

In [67]: g.render('Decision Tree')

Out[67]: 'Decision Tree.pdf'
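When Graphviz is not installed, the fitted tree can also be inspected as plain text. The sketch below uses `sklearn.tree.export_text` on a deliberately shallow tree; the depth limit, the `random_state` and the use of scikit-learn's bundled dataset are assumptions made to keep the output short and repeatable, and do not match the unrestricted tree fitted in the notebook.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.tree import DecisionTreeClassifier, export_text

# Bundled copy of the dataset (an assumption; the notebook reads data1.csv).
bunch = load_breast_cancer()

# A shallow tree keeps the printed rules readable.
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(bunch.data, bunch.target)

# export_text renders the splits as indented if/else rules.
rules = export_text(clf, feature_names=list(bunch.feature_names))
print(rules)
```

In the bundled dataset the target is coded 0 for malignant and 1 for benign, the reverse of the mapping used in the notebook.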

APPLYING LOGISTIC REGRESSION MODEL

In [39]: mod2=linear_model.LogisticRegression()

mod2.fit(X_train,Y_train)

pe=mod2.predict(X_test) #predict already returns a NumPy array

In [40]: print(classification_report(Y_test,pe))

precision recall f1-score support

0 0.97 0.96 0.96 67

1 0.94 0.96 0.95 47

accuracy 0.96 114

macro avg 0.95 0.96 0.95 114

weighted avg 0.96 0.96 0.96 114

In [41]: cm1=confusion_matrix(Y_test,pe)

sns.heatmap(cm1,annot=True)

Out[41]: <AxesSubplot:>

In [42]: print(accuracy_score(Y_test,pe))

0.956140350877193

APPLYING SUPPORT VECTOR CLASSIFIER MODEL

In [43]: mod3=svm.SVC()

mod3.fit(X_train,Y_train)

ped=mod3.predict(X_test)

In [44]: print(classification_report(Y_test,ped))

precision recall f1-score support

0 0.98 0.96 0.97 67

1 0.94 0.98 0.96 47

accuracy 0.96 114

macro avg 0.96 0.97 0.96 114

weighted avg 0.97 0.96 0.97 114

In [45]: cm2=confusion_matrix(Y_test,ped)

sns.heatmap(cm2,annot=True)

Out[45]: <AxesSubplot:>

In [46]: print(accuracy_score(Y_test,ped))

0.9649122807017544

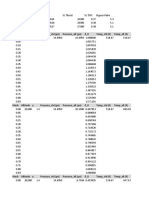

PREDICTION WITH FULL DATA (ALL 30 FEATURES)

In [47]: X=data.drop('diagnosis',axis=1)

Y=data['diagnosis']

Y=Y.map({'B':0,'M':1})

Y.tail()

Out[47]: 564 1

565 1

566 1

567 1

568 0

Name: diagnosis, dtype: int64

In [48]: X_train,X_test,Y_train,Y_test=train_test_split(X,Y,test_size=0.2,random_state=0)

print(X_train.var())

radius_mean 12.498873

texture_mean 17.296973

perimeter_mean 591.122540

area_mean 128283.011726

smoothness_mean 0.000190

compactness_mean 0.002549

concavity_mean 0.006053

concave points_mean 0.001490

symmetry_mean 0.000751

fractal_dimension_mean 0.000046

radius_se 0.080867

texture_se 0.293722

perimeter_se 4.152441

area_se 2278.193100

smoothness_se 0.000008

compactness_se 0.000307

concavity_se 0.000968

concave points_se 0.000035

symmetry_se 0.000067

fractal_dimension_se 0.000007

radius_worst 23.917513

texture_worst 37.217703

perimeter_worst 1147.121834

area_worst 343859.863025

smoothness_worst 0.000512

compactness_worst 0.023442

concavity_worst 0.039855

concave points_worst 0.004267

symmetry_worst 0.003968

fractal_dimension_worst 0.000317

dtype: float64
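The split sizes follow from simple arithmetic. This sketch assumes the dataset has 569 rows (index 0 to 568, as the Y.tail() output suggests) and relies on train_test_split rounding the test count up when test_size is a float.

```python
import math

n_samples = 569   # assumption: rows 0..568, per the index shown by Y.tail()
test_size = 0.2

# For a float test_size, train_test_split rounds the test count up
# and leaves the remaining rows for the training set.
n_test = math.ceil(test_size * n_samples)
n_train = n_samples - n_test

print(n_train, n_test)
```

The resulting test count agrees with the support of 114 shown in the classification reports.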

In [49]: s=StandardScaler()

X_train=s.fit_transform(X_train)

X_test=s.transform(X_test)
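The variances printed above span many orders of magnitude (area_worst is about 343860, fractal_dimension_se about 0.000007), which is why the features are standardized before fitting. The sketch below illustrates the fit/transform idea on a single column using only the standard library. The numbers are hypothetical and chosen for illustration; they are not taken from the dataset.

```python
from statistics import fmean, pstdev

# Hypothetical values for one feature column, for illustration only.
train_col = [10.0, 12.0, 14.0, 20.0]
test_col = [11.0, 18.0]

# "fit": learn the mean and standard deviation from the training data only.
mean = fmean(train_col)
std = pstdev(train_col)   # population std (ddof=0), as StandardScaler uses

# "transform": apply the training statistics to both splits.
train_scaled = [(x - mean) / std for x in train_col]
test_scaled = [(x - mean) / std for x in test_col]

print(fmean(train_scaled), pstdev(train_scaled))
print(test_scaled)
```

The scaled training column has mean close to 0 and standard deviation close to 1. The test column is scaled with the training statistics, so its mean and spread will generally differ; this mirrors calling fit_transform on X_train and only transform on X_test.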

APPLYING LOGISTIC REGRESSION

In [50]: mod2=linear_model.LogisticRegression()

mod2.fit(X_train,Y_train)

pe=mod2.predict(X_test)

Y=np.array(Y_test)

In [51]: print(accuracy_score(Y_test,pe))

0.9649122807017544

In [52]: print(classification_report(Y_test,pe))

              precision    recall  f1-score   support

           0       0.97      0.97      0.97        67
           1       0.96      0.96      0.96        47

    accuracy                           0.96       114
   macro avg       0.96      0.96      0.96       114
weighted avg       0.96      0.96      0.96       114

In [53]: c1=confusion_matrix(Y_test,pe)

sns.heatmap(c1,annot=True)

Out[53]: <AxesSubplot:>

APPLYING SUPPORT VECTOR CLASSIFIER

In [54]: mod3=svm.SVC()

mod3.fit(X_train,Y_train)

ped=mod3.predict(X_test)

In [55]: print(classification_report(Y_test,ped))

              precision    recall  f1-score   support

           0       0.97      1.00      0.99        67
           1       1.00      0.96      0.98        47

    accuracy                           0.98       114
   macro avg       0.99      0.98      0.98       114
weighted avg       0.98      0.98      0.98       114

In [56]: c2=confusion_matrix(Y_test,ped)

sns.heatmap(c2,annot=True)

Out[56]: <AxesSubplot:>

In [57]: print(accuracy_score(Y_test,ped))

0.9824561403508771

APPLYING DECISION TREE CLASSIFIER

In [58]: mod=DecisionTreeClassifier()

mod.fit(X_train,Y_train)

p=mod.predict(X_test)

In [59]: print(classification_report(Y_test,p))

              precision    recall  f1-score   support

           0       0.94      0.90      0.92        67
           1       0.86      0.91      0.89        47

    accuracy                           0.90       114
   macro avg       0.90      0.91      0.90       114
weighted avg       0.91      0.90      0.90       114

In [60]: print(accuracy_score(Y_test,p))

0.9035087719298246

In [61]: c2=confusion_matrix(Y_test,p)

sns.heatmap(c2,annot=True)

Out[61]: <AxesSubplot:>
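To compare the three models fitted on the standardized features, the printed accuracy scores can be converted back into counts of correct and misclassified test samples. This is a small sketch that uses only the scores shown above and the test-set support of 114.

```python
n_test = 114  # support reported in each classification report

# Accuracy scores printed above for the models fitted on standardized features.
accuracy = {
    "LogisticRegression": 0.9649122807017544,
    "SVC": 0.9824561403508771,
    "DecisionTreeClassifier": 0.9035087719298246,
}

# List the models from most to least accurate, with error counts.
for name, acc in sorted(accuracy.items(), key=lambda kv: kv[1], reverse=True):
    correct = round(acc * n_test)
    print(f"{name}: {correct}/{n_test} correct, {n_test - correct} misclassified")
```

On this split the support vector classifier makes the fewest errors. The decision tree is created without a random_state, so its score may change slightly between runs.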
