
CYBER Threats in Social Networking Websites and Physical System Security


IITM Journal of Management and IT

Indexed in: Google Scholar, EBSCO Discovery, indianjournals.com & CNKI Scholar (China National Knowledge Infrastructure Scholar)

INSTITUTE OF INFORMATION TECHNOLOGY AND MANAGEMENT

NAAC & NBA Accredited, Approved by AICTE, Ministry of HRD, Govt. of India

Category 'A' Institute, An ISO 9001: 2015 Certified Institute

Affiliated to Guru Gobind Singh Indraprastha University, Delhi

D-29, Institutional Area, Janakpuri, New Delhi - 110058

Tel: 91-011-28525051, 28525882 Telefax: 28520239

E-mail: director@iitmipu.ac.in, journal@iitmipu.ac.in

Website: www.iitmjanakpuri.com, www.iitmipujournal.org

ISSN (PRINT): 0976-8629

ISSN (ONLINE): 2349-9826

www.iitmipujournal.org

ISSN (Print): 0976-8629 www.iitmipujournal.org

ISSN (Online): 2349-9826

Indexed in:

Google Scholar, EBSCO Discovery, indianjournals.com & CNKI Scholar (China

National Knowledge Infrastructure Scholar)

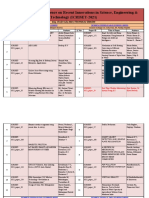

IITM Journal of Management and IT

Volume 10 Issue 1 January - June 2019

CONTENTS

Research Paper & Articles

• Monitoring water quality by sensors in Wireless Sensor Networks-A Review 1

Rakesh Kumar Saini

• Multimode Summarized Text to Speech Conversion Application 6

Archit Sehgal, Gitika Khanna

• Aadhaar Smart Meter: A Real-Time Bill Generator 11

Ramneek Kalra, Vijay Rohilla

• A Review on Optical Character Recognition 15

Archit Singhal, Bhoomika

• Prediction of Heart Attack Using Machine Learning 20

Akshit Bhardwaj, Ayush Kundra, Bhavya Gandhi, Sumit Kumar, Arvind Rehalia, Manoj Gupta

• Detection and Prevention Schemes in Mobile Ad hoc Networks 25

Jeelani, Subodh Kumar Sharma, Pankaj Kumar Varshney

• A Review on Histogram of Oriented Gradient 34

Apurva Jain, Deepanshu Singh

• Breast Cancer Risk Prediction 37

Pankaj Kumar Varshney, Hemant Kumar, Jasleen Kaur, Ishika Gera

• CYBER: Threats in Social Networking Websites and Physical System Security 46

Tripti Lamba, Ashish Garg

• Comparative Analysis of Different Encryption Techniques In Mobile Ad-Hoc Networks (MANETs) 55

Apoorva Sharma, Gitika Kushwaha

• Fuchsia OS - A Threat to Android 65

Taranjeet Singh, Rishabh Bhardwaj

• Sentiment Analysis using Lexicon based Approach 68

Rebecca Williams, Nikita Jindal, Anurag Batra


Monitoring water quality by sensors in Wireless Sensor Networks-A Review

Rakesh Kumar Saini

Department of Computer Science & Application, DIT University, Dehradun, Uttarakhand, India

rakeshcool2008@gmail.com

Abstract– Monitoring water quality is critical to human health. Employing wireless sensor networks for this task therefore requires a system that is robust and secure and that communicates reliably. Water-borne diseases have become a major challenge to human health: around 400 million cases of such diseases are reported annually, causing 6–12 million deaths worldwide. Access to safe drinking water is an important health and development issue at the national, regional and local level. The population in rural India depends mainly on groundwater as a source of drinking water. At present, the main problems in effectively implementing sensors are, on the one hand, the lack of standards for contamination testing in drinking water and, on the other hand, the poor links between available sensor technologies and water quality regulations. This paper reviews the application of WSNs in environmental monitoring, with particular emphasis on water quality. Various WSN-based water quality monitoring methods suggested by other authors are studied and analyzed, taking into account their coverage, energy and security concerns.

Keywords-- Water quality monitoring, Remote, Wireless Sensor Network

I. INTRODUCTION

Recent advances in MEMS-based sensor technology, low-power analog and digital electronics, and low-power RF design have enabled the development of relatively inexpensive, low-power wireless microsensors that can detect ambient conditions such as temperature and sound. Sensors are generally equipped with data processing and communication capabilities [1][2]. The sensing circuitry measures parameters from the environment surrounding the sensor and transforms them into an electric signal. Processing such a signal reveals some properties of objects located and/or events happening in the vicinity of the sensor. Each sensor has an onboard radio that can be used to send the collected data to interested parties. This technological development has encouraged practitioners to envision aggregating the limited capabilities of individual sensors in a large-scale network that can operate unattended. Numerous civil and military applications can take advantage of networked sensors; for example, a network of sensors can be employed to gather meteorological variables such as temperature and pressure. One of the advantages of wireless sensor networks (WSNs) is their ability to operate unattended in harsh environments in which contemporary human-in-the-loop monitoring schemes are risky, inefficient and sometimes infeasible. Sensors are therefore expected to be deployed randomly in the area of interest by relatively uncontrolled means, e.g. dropped by a helicopter, and to collectively form a network in an ad-hoc manner. Given the vast area to be covered, the short life span of battery-operated sensors and the possibility of nodes being damaged during deployment, a large population of sensors is expected in most WSN applications.

In most parts of the world, groundwater is the sole and most important source for the production of drinking water, particularly in areas where water supply is limited. Groundwater quality directly affects human health [3]. A sensor is an electronic device that detects and responds to external stimuli from the physical environment, such as temperature, heat, moisture or pressure. The output of a sensor is generally a signal that can be converted into human-readable form. Sensors may be classified as analog sensors and digital sensors. An analog sensor senses external parameters (wind speed, solar radiation, light intensity, etc.) and gives an analog voltage as output [4]. A digital sensor is an electronic or electrochemical sensor in which the data is digitally converted and transmitted. A base station is responsible for capturing and providing access to all measurement data from the nodes, and can sometimes provide gateway services that allow the data to be managed remotely. The importance of maintaining good water quality highlights the increasing need for advanced technologies that help monitor and manage water quality. Wireless sensor networks offer a promising infrastructure for municipal water quality monitoring and surveillance. In this paper we suggest some parameters for drinking water quality and propose a model and methods for monitoring water quality [5][6].

II. SENSORS

Sensors are electronic devices that can be used to monitor physical and environmental conditions such as temperature, vibration and sound. They are defined as sophisticated devices that detect and respond to electrical or optical signals. A sensor converts physical parameters such as temperature, blood pressure, humidity and speed into a signal that can be measured electrically. Sensors can be classified according to the following criteria:

a. Primary input quantity
b. Transduction principle
c. Material and technology
d. Property Applications

Figure 1 shows another classification of sensors.

Figure 1. Classification of Sensors

III. CHALLENGES OF MONITORING WATER QUALITY

Access to safe drinking water is an important health and development issue at the national, regional and local level. The population in rural India depends mainly on groundwater as a source of drinking water. As India is a developing country with widespread emerging technologies, there is a need for a system that provides timely help and monitors water pollution across the whole water system [7] [8]. Monitoring water quality is very important for good health. Wireless sensor networks can help control water quality through several methods, but monitoring water quality faces the following challenges:

(a) Sensor Costs and Specifications
(b) Low energy of sensors
(c) Security
(d) Connectivity
(e) Sensor location

(a) Sensor Costs and Specifications

Site-specific installation cost projections need to be developed. Typical components of the total cost of a sensor installation at each potential location include:

1. Land purchase
2. Construction of the vault in which the sensors and the connections to the distribution piping will be located
3. Installation of the sensors and RTU
4. Supplying power to the site
5. Installing the communications equipment and upgrading/installing equipment at the central control room
6. Design and bidding of the construction and installation work
7. Access to the site

(b) Low energy of sensors

In wireless sensor networks, energy is the scarcest resource of sensor nodes, and it determines their lifetime. Sensor nodes are battery powered, and their small batteries have limited power and may not be easily rechargeable or removable. A long communication distance between sensors and a sink can greatly drain the energy of the sensors and reduce the lifetime of the network. In wireless sensor networks, the energy of sensors is therefore a major issue to be considered [9].

(c) Security

An underwater wireless sensor network (UWSN) is a novel type of underwater networked system. Because of the characteristics of UWSNs and of the underwater channel, UWSNs are vulnerable to malicious attacks. The existing security solutions proposed for WSNs cannot be used directly in UWSNs; moreover, most of these solutions are layer-wise. In Figure 2, all sensors sense data under water and send it to the base station. Different types of sensors can be used under water to monitor water quality [10].

Figure 2. Underwater Wireless Sensor Network

(d) Connectivity

The most fundamental problem in an underwater sensor network is network connectivity. The connectivity problem reflects how well a sensor network is tracked or monitored by sensors. Underwater wireless sensor networks are an emerging field with challenges in every area, such as node deployment, routing and the floating movement of sensors. A DFS (depth-first search) algorithm is used for node connectivity, and a distributed coverage algorithm is used for coverage [11] [12].

(e) Sensor location

Sensor nodes are addressed by means of their locations. The distance between neighboring nodes can be estimated from the strength of the incoming signal, and relative coordinates of neighboring nodes can be obtained by exchanging such information between neighbors. To save energy, some location-based schemes require nodes to go to sleep when there is no activity. More energy can be saved by having as many sleeping nodes in the network as possible.

IV. QUALITY RANGE OF SUGGESTED PARAMETERS FOR DRINKING WATER

Parameters examined by the US Environmental Protection Agency show that both chemical and biological waste have an adverse effect on many water monitoring parameters, such as pH, ORP, turbidity, nitrates, dissolved oxygen, water temperature, fluoride, chlorine and oxygen. To detect water contamination or impurities, it is enough to determine the changes in the suggested parameters (Table 1). If any parameter deviates from the drinking water standards recommended by the WHO or the Central Pollution Control Board, India, the water is not safe for drinking [13].

Table 1: Quality range of suggested parameters

| Sr. | Parameter | Units | Quality range | Measurement cost |
|---|---|---|---|---|
| 1 | pH | pH | 6.5–9.0 | Low |
| 2 | ORP | mV | 650–800 | Low |
| 3 | Turbidity | NTU | 0–5 | Medium |
| 4 | Nitrates | mg/L | <10 | High |
| 5 | Dissolved Oxygen | mg/L | 0.2–2 | Medium |
| 6 | Water temperature | °C | <10 | Low |
| 7 | Fluoride | mg/L | <0.05–0.2 | Medium |
| 8 | Chlorine | mg/L | 0.2–1 | Low |
| 9 | Oxygen | mg/L | 1–2 | High |
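The deviation check described in Section IV can be illustrated with a short Python sketch, offered as an illustration rather than the authors' implementation. It compares a set of readings against the ranges transcribed from Table 1 and flags the water as unsafe if any measured parameter falls outside its range. The dictionary keys and function names are hypothetical. Bounds are treated as inclusive for simplicity, and the ambiguous fluoride entry ("<0.05–0.2") is assumed to mean an upper limit of 0.2 mg/L.

```python
from typing import Dict, List, Optional, Tuple

# Acceptable ranges transcribed from Table 1 as (lower, upper) bounds.
# None means Table 1 gives no bound on that side. Bounds are treated as
# inclusive for simplicity. ASSUMPTION: the fluoride entry "<0.05-0.2" is
# read as an upper limit of 0.2 mg/L. Key names are hypothetical.
QUALITY_RANGES: Dict[str, Tuple[Optional[float], Optional[float]]] = {
    "pH": (6.5, 9.0),
    "ORP_mV": (650.0, 800.0),
    "turbidity_NTU": (0.0, 5.0),
    "nitrates_mg_L": (None, 10.0),
    "dissolved_oxygen_mg_L": (0.2, 2.0),
    "water_temperature_C": (None, 10.0),
    "fluoride_mg_L": (None, 0.2),
    "chlorine_mg_L": (0.2, 1.0),
    "oxygen_mg_L": (1.0, 2.0),
}


def out_of_range(readings: Dict[str, float]) -> List[str]:
    """Return the parameters whose reading lies outside its Table 1 range.

    Every key in `readings` must be one of the keys of QUALITY_RANGES.
    """
    violations = []
    for name, value in readings.items():
        low, high = QUALITY_RANGES[name]
        if (low is not None and value < low) or (high is not None and value > high):
            violations.append(name)
    return violations


def is_safe(readings: Dict[str, float]) -> bool:
    """Flag water as unsafe if any measured parameter deviates (Section IV)."""
    return not out_of_range(readings)


if __name__ == "__main__":
    sample = {"pH": 7.2, "turbidity_NTU": 8.5, "nitrates_mg_L": 4.0}
    print(out_of_range(sample))  # ['turbidity_NTU']
    print(is_safe(sample))       # False
```

Only the parameters that are actually measured need to be supplied. Unmeasured parameters are not checked, so a partially instrumented site can still use the same rule.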


V. PROPOSED MODEL FOR WATER QUALITY

Figure 3. Proposed model for monitoring the quality of drinking water

The proposed model (Figure 3) is intended for monitoring the quality of drinking water drawn from groundwater. In this model, the sensors sense and collect data and then store it in a local controller. Once the local controller receives the data, it transfers them to the cloud for analysis. Cloud storage acts as a mediator between the data transmission layer and the database management layer. After the data have been analyzed in the cloud, they are transferred to the end user. Domestic water supplied by the Municipal Corporation or taken directly from groundwater is mainly used for drinking and cooking.

Traditional water supply management involves storing water at various locations and distributing it through water head tanks and domestic pipelines.

VI. CONCLUSIONS

The main objective of this paper is to show the role of sensors in improving water quality in order to obtain a hygienic environment. In this paper we study the challenges of monitoring the quality of drinking water and the parameters required for monitoring it. According to the study, drinking water obtained from both groundwater and surface water must satisfy the standards for safe drinking water. This paper explains what a sensor is, which parameters identify the quality of water, and the stages for creating an online water quality management system.

REFERENCES

[1] Central Ground Water Board, Ministry of Water Resources, River Development and Ganga Rejuvenation, Government of India. http://cgwb.gov.in/.

[2] U.S. Environmental Protection Agency, "Drinking water standards and health advisories", Tech. Rep. EPA 822-S-12-001, 2012.

[3] Water Resource Information System of India. http:www.india-wris.nrsc.gov.in/wrpinfo/index.php?title=River_Water_Quality_Monitoring.

[4] Szabo, J. and Hall, J., Detection of contamination in drinking water using fluorescence and light absorption based online sensors, EPA/600/R-12/672 (2012), http://www.epa.gov/ord.

[5] Homeland Security Presidential Directive/HSPD-9, United States of America.

[6] Hart, D. B., Klise, K. A., McKenna, S. A. and Wilson, M. P., CANARY User's Manual, Version 4.2. EPA-600-R-08e040 (2009), U.S. Environmental Protection Agency, Office of Research and Development, National Homeland Security Research Centre, Cincinnati, OH.

[7] Laine, J., Huovinen, E., Virtanen, M. J. et al., An extensive gastroenteritis outbreak after drinking-water contamination by sewage effluent, Finland, Epidemiol. Infect. (2010) 139 (7), pp. 1105–1113.

[8] Szabo, J. and Hall, J., Detection of contamination in drinking water using fluorescence and light absorption based online sensors, EPA/600/R-12/672 (2012), http://www.epa.gov/ord.

[9] Bergamaschi, B., Downing, B., Pellerin, B. and Saraceno, J. F., In Situ Sensors for Dissolved Organic Matter Fluorescence: Bringing the Lab to the Field, USGS Optical Hydrology Group, CA Water Science Centre.

[10] Hart, D. B., Klise, K. A., McKenna, S. A. and Wilson, M. P., CANARY User's Manual, Version 4.2. EPA-600-R-08e040 (2009), U.S. Environmental Protection Agency, Office of Research and Development, National Homeland Security Research Centre, Cincinnati, OH.

[11] Hart, D. B., Klise, K. A., McKenna, S. A. and Wilson, M. P., CANARY User's Manual, Version 4.2. EPA-600-R-08e040 (2009), U.S. Environmental Protection Agency, Office of Research and Development, National Homeland Security Research Centre, Cincinnati, OH.

[12] Homeland Security Presidential Directive/HSPD-9, United States of America.

[13] Storey, M. V., Van der Gaag, B. and Burns, B. P., Advances in online drinking water quality monitoring and early warning systems, Water Research 45 (2011), pp. 741–74.


Multimode Summarized Text to Speech Conversion Application

Archit Sehgal, Gitika Khanna

Department of Computer Science, HMR Institute of Technology & Management, Hamidpur, Delhi-110036, India

Archit_150@yahoo.co.in, gitikakhanna392@gmail.com

Abstract - This paper focuses on summarizing the large amount of data collected from various sources and presenting the output as speech. In recent years, huge data sets have been generated every moment, and they have become difficult to manage. Extracting the relevant information calls for an innovative, efficient, real-time and cost-effective technique that lets users hear the summarized content instead of reading it. This kind of application benefits people who are visually impaired and people with other disabilities. We propose a ranking-based approach, the TextRank algorithm with a modified similarity function, that builds a summary from the scores computed for each sentence. The summarized text is then spoken out using a text-to-speech (TTS) synthesizer.

Keywords - TextRank, PageRank, Lexemes, Image Segmentation, Character Recognition, Text-to-Speech (TTS).

I. INTRODUCTION

In our proposed work of collecting data from different sources and converting it into summarized text, we develop a cost-efficient and user-friendly interface. The input to the application can be an image, audio or video. Converting the input into editable text involves various techniques, such as image processing, image segmentation [1] and edge detection. The approach is directed towards format conversion, in which audio, video or image data is converted into symbolic representations that fully describe the content. For an image, the segmented characters are obtained by preprocessing the image and are then passed to Optical Character Recognition (OCR) to obtain the converted text. To manage the enormous amount of information, the derived text is summarized using a graph-based technique, the TextRank algorithm. TextRank can be applied to construct a meaningful summary by selecting useful sentences from the available text. The summarized text is then transformed into speech using a Text-to-Speech (TTS) synthesizer. The whole approach is thus divided into three phases: text extraction from the input, formation of the summary, and conversion of the summary into speech.

II. LITERATURE REVIEW

Mrunmayee Patil [1] describes an OCR system that recognizes the characters in an image, in which edge detection and image segmentation play a significant role in extracting the text. The algorithm that can be used to summarize the extracted text works similarly to the PageRank algorithm described for web search engines [10], and modifications can be made to make the TextRank algorithm more effective. Sunchit Sehgal [5] presents a way to make the algorithm more efficient by taking the score of the title into account. Marcia A. Bush [19] describes the research effort on document recognition and its prediction models, which has enabled us to analyze signal-based processes that take vocabulary, font and sentence-formation sequence into account.

III. OVERVIEW OF IMAGE ANALYSIS

Over the decades, many researchers have looked for ways of retrieving data from images and video content. One research paper proposed a framework that decomposes the scanned image into its constituent visual patterns and converts the parsed results into a semantically meaningful text report. Another model was introduced in which users send an image of their meter's display screen along with the kilowatt information [2]; the information is then processed and converted into text.

Image analysis [3] is the extraction of information from images using different image processing approaches, such as image filtering, image compression, image editing and manipulation, image preprocessing, image segmentation, feature extraction and object recognition. An image can be considered as a matrix of square pixels arranged in rows and columns. It can be considered as a linear sequence of characters.

Fig 1. Conversion of Image to text using Optical Character Recognition

Fig 1 depicts the blocks responsible for detecting text in an image. Once the image is scanned, it is preprocessed to remove noise and is then divided into segments. Each segment has its own features, which must be extracted and classified into specific groups.

Edge detection and image segmentation are important aspects of image analysis. Edge detection distinguishes the regions of an image by identifying changes in gray scale and texture. Image segmentation divides and decomposes an image for further processing: it groups pixels with similar gray-scale values and organizes them into higher-level units so that the objects become more meaningful. The proposed system works in several phases. The input image first undergoes preprocessing, such as removing the noise introduced by the thresholding technique and improving the image quality. In the next step, image segmentation separates the non-text parts. Feature extraction is then performed to extract preliminary features and compare them with those stored in the database. Errors in which characters are blurred or broken often occur; these are handled in the post-processing stage.

IV. AUDIO ANALYSIS

Converting real-time speech to text requires special techniques, because the conversion must be quick and precise for the speech to be recognized. In an automatic speech recognition system, the size of the vocabulary affects the performance of the system. During the initial process, the system learns the patterns of the different speech sounds that make up the vocabulary of the application. An unknown pattern is identified using the cluster of references. The whole approach can be divided into phases such as analysis, feature extraction, modeling and testing. The analysis phase extracts information about speaker identity from the vocal tract, behavioral features and the excitation source. Because every speech signal has different characteristics, these characteristics are captured in the feature extraction phase so that the speech signals can be processed.

V. TEXTRANK ALGORITHM

With the tremendous growth in text data, the data must be summarized effectively to be useful. Automatic text summarization [4] is a demanding, non-trivial task. Some proposed methods use word and phrase frequency to extract salient sentences from the text. Overall, there are two approaches to text summarization: extraction and abstraction. Extraction selects sentences from the original text, whereas abstraction rewrites the original text using advanced natural language techniques to generate a new, brief summary. Extractive summarization generally yields better results than abstractive summarization, because abstraction faces issues such as semantic representation and natural language generation. Here, we focus on the graph-based TextRank algorithm to perform extractive summarization. TextRank [5] is an unsupervised, graph-based ranking algorithm and an extension of the PageRank algorithm.

VI. PROPOSED APPROACH

In our proposed approach, the application can take input in different modes, such as editable text, image, audio and video. After the input is taken, the TextRank algorithm is used to convert it into summarized text, which is then output and converted into speech. Input processing for the different modes has been discussed above. The major concern is summarizing the content efficiently and accurately. The next section discusses improvements to the graph-based TextRank algorithm for accurate summarization.
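The baseline TextRank pipeline described above can be sketched in Python. This is an illustration under stated assumptions, not the authors' code. The paper does not specify a sentence splitter or tokenizer, so a naive regex splitter and tokenizer are assumed. Sentences are linked whenever the log-normalized word overlap of Eq. (2) is non-zero, and vertices are ranked with the unweighted update of Eq. (1), using d = 0.85 and a fixed number of iterations. All function names are hypothetical. Note that the original TextRank formulation by Mihalcea and Tarau uses a weighted variant for sentence extraction; this sketch follows Eq. (1) exactly as it is written here.

```python
import math
import re
from typing import Dict, List, Set


def split_sentences(text: str) -> List[str]:
    """Naive splitter (assumed): break after '.', '!' or '?' plus whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence: str) -> List[str]:
    """Lower-cased word tokens (a simple regex tokenizer, assumed)."""
    return re.findall(r"[a-z0-9']+", sentence.lower())


def log_overlap(s_i: str, s_j: str) -> float:
    """Eq. (2): number of shared words divided by log|S_i| + log|S_j|."""
    t_i, t_j = tokenize(s_i), tokenize(s_j)
    if not t_i or not t_j:
        return 0.0
    denom = math.log(len(t_i)) + math.log(len(t_j))
    if denom <= 0:  # both sentences are one word long: log(1) + log(1) = 0
        return 0.0
    return len(set(t_i) & set(t_j)) / denom


def pagerank(neighbours: Dict[int, Set[int]], d: float = 0.85,
             iterations: int = 50) -> Dict[int, float]:
    """Eq. (1) on an undirected graph, where In(V_i) = Out(V_i) = neighbours."""
    scores = {v: 1.0 for v in neighbours}
    for _ in range(iterations):
        # Synchronous update: every vertex uses the previous iteration's scores.
        scores = {
            v: (1 - d) + d * sum(scores[u] / len(neighbours[u]) for u in neighbours[v])
            for v in neighbours
        }
    return scores


def textrank_summary(text: str, k: int = 2) -> List[str]:
    """Return the k highest-ranked sentences, kept in their original order."""
    sentences = split_sentences(text)
    neighbours: Dict[int, Set[int]] = {i: set() for i in range(len(sentences))}
    for i in range(len(sentences)):
        for j in range(i + 1, len(sentences)):
            # Add an edge whenever the Eq. (2) similarity is non-zero.
            if log_overlap(sentences[i], sentences[j]) > 0:
                neighbours[i].add(j)
                neighbours[j].add(i)
    scores = pagerank(neighbours)
    top = sorted(scores, key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


if __name__ == "__main__":
    text = ("Sensors monitor water quality. The weather was pleasant today. "
            "Water quality sensors send data to the cloud.")
    print(textrank_summary(text, k=1))
    # ['Water quality sensors send data to the cloud.']
```

In the example, the third sentence shares words with both of the others and so becomes the best-connected vertex. Isolated sentences keep the minimum score of 1 - d.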


Every summarizer builds an intermediate representation that expresses the main aspects of the text. Two types of representation are used: topic representation and indicator representation. Each sentence is scored according to the type of representation. In topic representation, the score depends on how well the sentence describes the topic. In indicator representation, a variety of machine learning techniques can be used to aggregate the results. In the final step, a method is used that selects the combination of sentences that maximizes importance and minimizes redundancy.

In our proposed method, the TextRank algorithm is used to find the similarity between sentences. This method represents the document as a connected graph in which the sentences are the vertices and an edge indicates how similar two sentences are. It is based on the frequency of occurrence of words, so no language-specific processing is required.

Consider an undirected graph G = (V, E), where V is the set of vertices and E is the set of edges. For a given vertex V_i, let In(V_i) be the set of vertices that point to V_i and Out(V_i) the set of vertices that V_i points to. In an undirected graph, both sets reduce to the neighbours of V_i. The score of each vertex is calculated using:

$$PR(V_i) = (1 - d) + d \sum_{V_j \in In(V_i)} \frac{PR(V_j)}{|Out(V_j)|} \qquad (1)$$

where d is the damping factor, commonly set to 0.85.

To build a connected graph, an edge that represents the similarity between two vertices is added between them. The similarity depends on the words the two sentences have in common and is calculated using a similarity function. Let S_i and S_j be two sentences, where a sentence S_i is represented by the N_i words that form it:

$$\mathrm{Similarity}(S_i, S_j) = \frac{|\{W_k \mid W_k \in S_i \wedge W_k \in S_j\}|}{\log(|S_i|) + \log(|S_j|)} \qquad (2)$$

However, the title can also play an important role by adding distinguishing information that elaborates the meaning of the text. The similarity function can therefore be improved by also computing the correlation between each individual sentence and the title of the article. The modified similarity function between two sentences, and between each individual sentence and the title, is given by:

$$\mathrm{Similarity}_{\mathrm{sentences}}(s_i, s_j) = \frac{|\{W_k \mid W_k \in S_i \wedge W_k \in S_j\}|}{(|S_i| + |S_j|)/2}$$

$$\mathrm{Similarity}_{\mathrm{title}}(s_i, s_{\mathrm{title}}) = \frac{|\{W_k \mid W_k \in S_i \wedge W_k \in S_{\mathrm{title}}\}|}{(|S_i| + |S_{\mathrm{title}}|)/2}$$

Therefore, the cumulative score for any sentence, say s_1, is given by:

$$\mathrm{Score}(s_1) = \mathrm{Similarity}_{\mathrm{title}}(s_1, s_{\mathrm{title}}) + \sum_{j=1}^{n} \mathrm{Similarity}_{\mathrm{sentences}}(s_1, s_j) - \mathrm{Similarity}_{\mathrm{sentences}}(s_1, s_1)$$

VII. IMPLEMENTATION AND EVALUATION

We built this application using Apache Cordova. It is compatible with both Android and iOS. We divided the whole approach into three modules:

Module 1: Uploading an image and extracting its text using OCR. Alternatively, the text can be typed directly into the text field or provided as audio: a button records the user's voice, which is then processed using audio analysis.

Module 2: Text summarization takes place once an event is fired. Sentences are ranked, and the best sentences are picked to form a summary, which is shown in the output window.

Module 3: The text in the output window is converted into speech when a button is clicked.

We evaluated our application on 3 sample articles, assessing the summaries with ROUGE. ROUGE [6] is the most widely used method for evaluating a summary automatically by comparing it with human-written reference summaries. ROUGE has several variants, such as ROUGE-N, ROUGE-L and ROUGE-SU. ROUGE-N counts the n-grams shared by the human reference summaries and the candidate summary. ROUGE-L uses the longest common subsequence (LCS): the longer the LCS, the greater the similarity. ROUGE-SU uses skip-bigrams as well as unigrams.
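Module 2's title-aware scoring and a plain ROUGE-1 computation of the kind reported in the results table can be sketched in Python. This is an illustration only. It assumes a simple regex tokenizer with no stemming or stop-word removal, and it computes ROUGE-1 from clipped unigram counts. The ROUGE 2.0 toolkit used by the authors may tokenize and normalize text differently, so scores from this sketch need not match the published table. All function names are hypothetical.

```python
from collections import Counter
import re
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lower-cased word tokens (simple regex tokenizer, no stemming; assumed)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def overlap_mean_len(a: str, b: str) -> float:
    """Modified similarity: shared words divided by the mean sentence length."""
    t_a, t_b = tokenize(a), tokenize(b)
    if not t_a or not t_b:
        return 0.0
    return len(set(t_a) & set(t_b)) / ((len(t_a) + len(t_b)) / 2)


def title_aware_scores(sentences: List[str], title: str) -> List[float]:
    """Cumulative score: title similarity plus similarity to the other sentences."""
    scores = []
    for s_i in sentences:
        total = overlap_mean_len(s_i, title)
        total += sum(overlap_mean_len(s_i, s_j) for s_j in sentences)
        total -= overlap_mean_len(s_i, s_i)  # drop the j = i self-similarity term
        scores.append(total)
    return scores


def summarize(sentences: List[str], title: str, k: int) -> List[str]:
    """Module 2 sketch: keep the k best-scoring sentences in original order."""
    scores = title_aware_scores(sentences, title)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


def rouge_1(candidate: str, reference: str) -> Tuple[float, float, float]:
    """ROUGE-1 recall, precision and F-score from clipped unigram overlap."""
    cand, ref = Counter(tokenize(candidate)), Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # & keeps the minimum count per word
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    f_score = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return recall, precision, f_score


if __name__ == "__main__":
    sentences = ["Sensors monitor water quality.",
                 "The weather was pleasant today.",
                 "Water quality sensors send data to the cloud."]
    print(" ".join(summarize(sentences, "Water quality sensors", k=1)))
    # Sensors monitor water quality.
    print(rouge_1("the cat sat", "the cat sat on the mat"))
    # (0.5, 1.0, 0.6666666666666666)
```

Averaging by the mean sentence length, rather than by the sum of logarithms, keeps each similarity term in the range 0 to 1. The title term can therefore tip the ranking towards sentences that echo the title.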

The results of our evaluation are shown in Table 1:

Table 1. Results of summary evaluation using ROUGE 2.0 Evaluation Toolkit

| ROUGE type | Task name | Average recall | Average precision | Average F-score | Number of reference summaries |
|---|---|---|---|---|---|
| ROUGE-1 | Sample 1 | 1.0 | 0.29664 | 0.45732 | 1 |
| ROUGE-1 | Sample 2 | 1.0 | 0.09125 | 0.16841 | 1 |
| ROUGE-1 | Sample 3 | 1.0 | 0.33504 | 0.50192 | 1 |

VIII. TEXT TO SPEECH SYNTHESIS

Text-to-speech synthesis [7] is the automatic conversion of text into speech: the text is first transcribed into a phonetic representation, and the speech waveform is then generated from it. A text-to-speech system consists of a front end and a back end. The front end performs two major operations, text normalization and assigning a phonetic transcription to each word (text-to-phoneme). The back end generates the speech waveform. The engine is divided into modules such as a Natural Language Processing (NLP) module, a Digital Signal Processing (DSP) module, text analysis and the application of pronunciation rules. Such an engine can be developed in the Java programming language.

Speech synthesis can be performed with various techniques, such as concatenative synthesis, articulatory synthesis, formant synthesis, domain-specific synthesis, unit selection synthesis, diphone synthesis and HMM-based synthesis. Concatenative synthesis joins short samples of recorded speech. Articulatory synthesis generates speech from articulatory parameters that model the human vocal tract. Formant synthesis remains intelligible even at high speeds; it is a rule-based technique that synthesizes speech using acoustic rules. Domain-specific synthesis takes the simple approach of concatenating pre-recorded words and phrases to complete a sentence. Unit selection synthesis creates speech from segmented recordings stored in a database.

Fig 2. Overview of conversion of text to speech

Fig 2 shows how speech is generated from text. Natural Language Processing analyzes and synthesizes natural language and speech.

IX. CONCLUSION & FUTURE SCOPE

This paper proposes an approach that generates an optimized summary from input in various modes, such as image, audio and editable text. We also discussed the main summarization techniques, abstraction-based and extraction-based. To generate the summary, we proposed a modification to the graph-based TextRank algorithm: besides the entire paragraph, the score of the paragraph title is also taken into account. Summaries of three sample articles were evaluated with the ROUGE evaluation toolkit, and the results are shown in Table 1. There is further scope for video analysis: because the approach takes input from multiple modes, video can be one of them. Improvements can also make the summarization algorithm more efficient and accurate, which in turn helps ensure that the generated summary preserves its logical meaning.

REFERENCES

[1] Mrunmayee Patil, Ramesh Kagalkar, "A Review on Conversion of Image to Text As Well As Speech Using Speech Detection and Image Segmentation", International Journal Of Science and Research.

[2] S. Shahnawaz Ahmed, Shah Muhammed Abid Hussain and Md. Sayeed Salam, "A Novel Substitute for the Meter Readers in a Resource Constrained Electricity Utility", IEEE Trans. On Smart Grid, vol. 4, no. 3, Sept. 2013.

[3] K. Kalaivani, R. Praveena, V. Anjalipriya, R. Srimeena, "Real Time Implementation of Image Recognition and Text to Speech Conversion", International Journal of Advanced Engineering Research and Technology, vol. 2, Sept. 2014.

[4] Mehdi Allahyari, Seyedamin Pouriyeh, Mehdi Assefi, Saeid Safaei, Elizabeth D. Trippe, Juan B. Gutierrez, Krys Kochut, "Text Summarization Techniques: A Brief Survey", 28 Jul. 2017.

[5] Sunchit Sehgal, Badal Kumar, Maheshwar Sharma, Lakshay Rampal, Ankit Chaliya, "A Modification to graph based approach for extraction based Automatic Text Summarization", Institute of Electrical and Electronics Engineers.

[6] Chirantana Mallick, Ajit Kumar Das, Madhurima Dutta, Asit Kumar Das, Apurba Sarkar, "Graph-based Text Summarization Using Modified TextRank", Aug. 2018.

[7] Itunuoluwa Isewon, Jelili Oyelade, Olufunke Oladipupo, "Design and Implementation of Text to Speech Conversion for Visually Impaired People", International Journal of Applied Information System, vol. 7, no. 2, Apr. 2014.

[8] Kaladharan N, "An English Text to Speech Conversion", International Journal of Advanced Research in Computer Science and Software Engineering, vol. 5, Oct. 2015.

[9] R. Aida-Zade, C. Aril, A. M. Sharifova, "The Main Principles of Text-to-Speech Synthesis System", International Journal of Computer and Information Engineering, vol. 7, no. 3, 2013.

[10] S. Brin and L. Page, "The anatomy of a large-scale hypertextual Web search engine", Computer Networks and ISDN Systems, 30(1-7), 1998.

[11] Horacio Saggion and Thierry Poibeau. 2013. "Automatic text summarization: Past, present and future". In Multi-source, Multilingual Information Extraction and Summarization. Springer, 3–21.

[12] Dou Shen, Jian-Tao Sun, Hua Li, Qiang Yang, and Zheng Chen. 2007. "Document Summarization Using Conditional Random Fields". In IJCAI, Vol. 7. 2862–2867.

[13] Sérgio Soares, Bruno Martins, and Pavel Calado. 2011. "Extracting biographical sentences from textual documents". In Proceedings of the 15th Portuguese Conference on Artificial Intelligence (EPIA 2011), Lisbon, Portugal. 718–30.

[14] Karen Spärck Jones. 2007. "Automatic summarizing: The state of the art". Information Processing & Management 43, 6 (2007), 1449–1481.

[15] Josef Steinberger, Massimo Poesio, Mijail A. Kabadjov, and Karel Ježek. 2007. "Two uses of anaphora resolution in summarization". Information Processing & Management 43, 6 (2007), 1663–1680.

[16] Mark Steyvers and Tom Griffiths. 2007. "Probabilistic topic models". Handbook of latent semantic analysis 427, 7 (2007), 424–440.

[17] Fabian M. Suchanek, Gjergji Kasneci, and Gerhard Weikum. 2007. "Yago: a core of semantic knowledge". In Proceedings of the 16th international conference on World Wide Web. ACM, 697–706.

[18] Simone Teufel and Marc Moens. 2002. "Summarizing scientific articles: experiments with relevance and rhetorical status". Computational Linguistics 28, 4 (2002), 409–445.

[19] Marcia A. Bush, "Speech and Text-Image Processing in Documents", Technical Report P92-000150, Xerox PARC, Palo Alto, CA, November 1992.
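Section IX summarizes a modification to TextRank in which the score of the paragraph title is taken into account alongside the paragraph itself. A short sketch can illustrate it, under these assumptions:

- The exact weighting is not restated in this part of the paper, so the sketch adds a simple bonus: each sentence's TextRank score plus a hypothetical `title_weight` times its similarity to the title.
- Sentence similarity uses the word-overlap measure from the original TextRank formulation (shared words divided by the sum of the logarithms of the sentence lengths), computed here on word sets for simplicity.
- `rank` and `summarize` are illustrative names.

```python
import math
import re


def words(text: str) -> set[str]:
    """Lower-cased alphabetic words of a sentence, as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def similarity(a: set[str], b: set[str]) -> float:
    """TextRank overlap: shared words / (log|a| + log|b|)."""
    denom = math.log(len(a)) + math.log(len(b)) if a and b else 0.0
    if denom <= 0:
        return 0.0
    return len(a & b) / denom


def rank(sentences: list[str], title: str, damping: float = 0.85,
         title_weight: float = 0.5, iterations: int = 50) -> list[float]:
    """Weighted PageRank over the sentence graph, plus a title-similarity bonus."""
    bags = [words(s) for s in sentences]
    n = len(bags)
    w = [[similarity(bags[i], bags[j]) if i != j else 0.0 for j in range(n)]
         for i in range(n)]
    out = [sum(row) for row in w]  # total edge weight leaving each sentence
    scores = [1.0] * n
    for _ in range(iterations):
        scores = [(1 - damping) + damping * sum(
                      w[j][i] / out[j] * scores[j] for j in range(n) if out[j] > 0)
                  for i in range(n)]
    t = words(title)
    return [s + title_weight * similarity(b, t) for s, b in zip(scores, bags)]


def summarize(sentences: list[str], title: str, k: int) -> list[str]:
    """Pick the k best-scoring sentences and return them in document order."""
    scores = rank(sentences, title)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


doc = ["Solar panels convert sunlight into electricity.",
       "Homes use electricity.",
       "The weather was pleasant yesterday afternoon."]
print(summarize(doc, "Solar panels and electricity", 1))
# ['Solar panels convert sunlight into electricity.']
```

Setting `title_weight=0` reduces the sketch to plain TextRank. The selected sentences are returned in document order so that the summary reads naturally.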


Aadhaar Smart Meter: A Real-Time Bill Generator

Ramneek Kalra1*, Vijay Rohilla2*

1*Computer Science Department, IEEE Member, HMRITM, New Delhi, India

2*Assistant Professor, EEE Department, HMRITM, New Delhi, India

kalraramneek@ieee.org, vijay1402rohilla@gmail.com

Abstract- In the present scenario, people are at the frontier of changing technology. This technology helps them explore new developments, become familiar with them in a short time, and be more productive. These days, the Indian Government is working to move all money into digital banks and wallets such as the BHIM App, UPI payments, etc.

Smart meter technology is also emerging in every street of Indian societies and colonies. This change is initially somewhat unfamiliar, since citizens face problems in getting accurate details into their hands. There is therefore a need for an innovative approach that makes the government completely transparent to the public and removes the long queues for submitting electricity bills.

To address this problem, we introduce a new way to interact with governmental services such as the one discussed above: the "Aadhaar Smart Meter: A Real-Time Bill Generator" (ASM). This way of connecting government services brings a server into existence and connects the customer with the Smart Meter via the Electricity Department's server. The whole scenario is discussed in the following parts.

Keywords: Database, Smart Meter, Aadhaar Database, Cloud, Gateway.

I. INTRODUCTION

A Smart Meter is a digital meter that analyzes the Power Factor, Units Used and Power Used and displays them on an LCD screen. It is connected both to the Electricity Department's server and to a customer database stored in the cloud. That database is keyed on customer-provided details such as Aadhaar_Number, Customer_Name, Customer_Address, Customer_Region, etc.

At present, however, the government has implemented Smart Meters that merely display the Power Factor and Units Consumed, with little information beyond the number of days since installation. Because of this limited transparency between the government's database and the customer's needs, we propose the "Aadhaar Smart Meter", a live example of the details discussed above. The idea is built purely on database connectivity and an SMS service provided to the customer for the particular Smart Meter they own. ASM is explored fully in the methodology that follows. For now, let us discuss the scenario that the Indian Government is trying to establish under these needs.

All governmental services are now tending to connect with the Aadhaar database (the largest biometric database in the world). However, because of security concerns, the public is not ready to share personal Aadhaar credentials with the government, since many fear that their details could leak into the wrong hands.

We address this issue by linking the Aadhaar server's database to a simple mobile number. If the Aadhaar database is available, the mobile number and the respective details can be retrieved easily and quickly, irrespective of the size of the database.

II. PROPOSED IDEA

Before going into the actual methodology, let us look at the basic architecture of ASM. It can be used to process the required electrical parameters, calculate the bill month-wise, and then reset the count to zero.

A smart meter provides readings from real-time sensors, power outage notification and power quality monitoring. Because of these extra features, it differs greatly from simple Automatic Meter Reading. With that, the following symbolic block diagram can


make clear the main aspects of ASM and how it works and functions.

Fig 1: Symbolic Block Diagram of Smart Meter

Fig 1 shows the important parts that play a crucial role in making the smart meter reliable and beneficial for a home connection. It consists of the following components:

- Data Reader: This is the initial point, where the functioning of a simple meter reading starts. It stores and retrieves data such as power outages and power units consumed, and passes this data to the LCD Controller. The home connection is also connected wirelessly to the Electricity Department Server. This server fetches the electricity account number, stored as Elect_Acc_No (say), and the corresponding customer account details, which are fetched indirectly from the connected Aadhaar Database.

- LCD Controller: This is the second phase. It consists of an LCD screen connected to the Data Reader, which presents data such as Power Factor and Units Consumed to the outside world. At the same time, these details are updated in real time in the database maintained by the Electricity Department Server.

- Automatic SMS Sender Block: Every 15 days, this block sends the data processed over that period and recorded in the database, together with the calculated proposed bill amount, to the user's registered mobile number. The user can then consume electricity carefully over the next 15 days, supporting a green environment. This facility also alerts the user to late bill submission. After one month, the user receives an online payment link via the SMS service, so the customer does not need to go to the electricity bill payment office and stand in a long queue.

- The real innovation comes when one month expires and it is time to pay the bill. Payment can be made through the payment portal via the SMS service, through PayTm, or through the different electricity departments' portals. There remains the question of how to generate the manual bill that is officially sent by the electricity department. This can also be resolved using the customer's Android device. If the customer does not have one, he/she can request government officials to come and generate the bill using their official Android device.

- Nowadays, Android devices are so popular that nearly every citizen is active online and consumes a tremendous amount of data. This trend can be used to deliver the government's services.

- The basic idea can be used to generate the manual bill, clear the reading shown on the particular customer's meter, and print that bill at the same time.

- Fig 2 shows the flow of information when the meter LCD is scanned to generate the required bill from the meter itself. The functioning of each component is as follows:

Fig 2: Depiction of Flow of Information

- Android Application: There will be an android


application that scans the meter LCD screen and fetches the information from the electricity department server. The server then initializes a value in the database, setting the status to true for this particular meter, for the particular customer_id and the corresponding Customer_name taken from the Aadhaar Database. Once scanning has set the status to true, the units consumed and the amount in rupees are calculated inside the electricity department's server.

- Mini Printer: A new feature of the Smart Meter is a mini printer attached inside the meter, which prints the required bill. The Android app requests the bill from the electricity department's server, and the server sends the corresponding request back to the Mini Printer. The printer then prints the bill, which the server sends officially and automatically. In addition, the data calculated in interaction with the server is temporarily stored in the Android app's database, so that if any transaction fails midway, the changes are rolled back.

- Aadhaar Database: We use the Aadhaar database in this scenario so that a customer who wants to pay the bill immediately after scanning can send a request from the Android app to the server. The amount can then be deducted through the connected bank server, which is linked to the customer's Aadhaar record and, indirectly, to the Electricity Department Server. After the transaction is complete, the payment receipt can be printed on the mini printer.

Moreover, the applications of this proposed idea can be implemented easily within government services, which will reduce manual paperwork and cash exchange.

III. CONCLUSIONS

If this research idea is implemented by government services, it can move the nation from manual electricity bill payment to automatic payment, eliminating long queues. The proposed idea offers the following advantages to Indian citizens:

- Introduction of paperless and cashless technology, which will promote the Digital India movement initiated by the Indian Government.

- Elimination of wrong data transmitted in citizens' electricity bills.

- Increased transparency in the government's functioning and processing.

IV. FUTURE WORK

This research paper presents one application of the idea. A large number of further applications remain to be studied and analysed.

This paper covers only the architectural point of view of the Smart Meter. The same proposed idea can be studied for further enhancements/applications as follows:

- Large-scale study in industrial applications.

- Implementation by deploying the idea on a real-world machine.

- Thorough study and application of security measures.
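Section II describes a billing flow in which a proposed bill is computed from the units recorded so far and sent by SMS, and a failed payment transaction is rolled back. The sketch below illustrates this flow under stated assumptions and is not the authors' implementation:

- The flat tariff `RATE_PER_UNIT`, the table layout and all function names are hypothetical. Real tariffs are often slab-based.
- Python's built-in `sqlite3` module stands in for the Android app's local database.

```python
import sqlite3

RATE_PER_UNIT = 6.5  # hypothetical flat tariff, rupees per unit (kWh)


def proposed_bill(units: float, rate: float = RATE_PER_UNIT) -> float:
    """Proposed bill for the units consumed so far, rounded to paise."""
    return round(units * rate, 2)


def sms_text(acc_no: str, units: float, days: int) -> str:
    """Text an Automatic SMS Sender block might send partway through a cycle."""
    return (f"Acc {acc_no}: {units:g} units in {days} days, "
            f"proposed bill Rs {proposed_bill(units):.2f}")


def setup() -> sqlite3.Connection:
    """In-memory stand-in for the app database, holding one unpaid bill."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE bills (elect_acc_no TEXT PRIMARY KEY,"
                 " units REAL, paid INTEGER DEFAULT 0)")
    conn.execute("CREATE TABLE payments (elect_acc_no TEXT, amount REAL)")
    conn.execute("INSERT INTO bills (elect_acc_no, units) VALUES ('ACC001', 120)")
    conn.commit()
    return conn


def record_payment(conn: sqlite3.Connection, acc_no: str, amount: float) -> bool:
    """Mark the bill paid and log the payment as a single transaction."""
    try:
        with conn:  # commit if the block succeeds, roll back if it raises
            conn.execute("UPDATE bills SET paid = 1 WHERE elect_acc_no = ?",
                         (acc_no,))
            if amount <= 0:  # stands in for a failed bank-side deduction
                raise ValueError("payment failed")
            conn.execute("INSERT INTO payments VALUES (?, ?)", (acc_no, amount))
        return True
    except ValueError:
        return False


conn = setup()
print(sms_text("ACC001", 120, 15))  # Acc ACC001: 120 units in 15 days, proposed bill Rs 780.00
print(record_payment(conn, "ACC001", 0))                    # False
print(conn.execute("SELECT paid FROM bills").fetchone()[0])  # 0, update rolled back
```

The rollback relies on `sqlite3` connection objects acting as context managers: the transaction is committed if the `with` block completes, and rolled back if it raises.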


A Review on Optical Character Recognition

Archit Singhal1 and Bhoomika2

1 Department of Computer Science, The NorthCap University,

Gurugram, Haryana 122017

singhal97.archit@gmail.com, wbhoomikaw@gmail.com

Abstract--- Nowadays, using a keyboard is the most common way of entering data, but it is sometimes time-consuming and requires a lot of effort. A technique named Optical Character Recognition, abbreviated as OCR, was therefore invented to convert printed as well as handwritten text into machine-encoded text by electronic means. OCR has been a topic of research for more than half a century. It electronically or mechanically converts scanned images of handwritten, typewritten or printed text. In general, to identify the characters on a page, OCR compares each scanned letter pixel by pixel with a known database of fonts and decides on the closest match.

Index Terms - optical character recognition, processed, pixel, scanned document, machine encoded text.

I. INTRODUCTION

Optical Character Recognition is a way of converting images of text into machine-encoded text that can be searched through and processed by a machine. It is among the major topics of research in the fields of Artificial Intelligence, Pattern Recognition, Machine Vision and Signal Processing. Character recognition techniques associate a symbolic identity with the image of a character. OCR extracts the significant information and enters it directly into the database, instead of relying on the accustomed methods of manual data entry.

This technique was first introduced for two main reasons: expanding telegraphy, and helping blind people get an education. Emanuel Goldberg and Edmund Fournier d'Albe were the first to work on this technique, in 1914. They built a machine that first scanned characters and then converted them into standard telegraph code, and another device, the Optophone, that produced specific tones for specific letters or characters. These machines were patented in 1931, and the patents were later acquired by IBM.

OCR in general is classified into two types: off-line and on-line. Off-line recognition is used for the automated conversion of text into letter codes that are usable by computers and by applications developed for text processing. Off-line recognition of handwriting is more difficult, as different people have different handwriting styles. On-line recognition, by contrast, deals with a continuous stream of input data that comes from a transducer as the user writes.

Fig. 1. Types of Optical Character Recognition

II. LITERATURE REVIEW

Research Paper Statement: Optical Character Recognition (OCR), a technique still in its development stage, has proven to be very beneficial for converting any kind of handwritten material into digitized form.

This paper reviews the work done by various authors in exploring Optical Character Recognition. Prior studies have identified the various steps involved, from pre-processing the image to producing the final digitized output. The paper also describes various fields in which this technology has been efficiently implemented. As OCR is still in its development stage, it also faces a few challenges in producing the best possible output. Integrating the concepts and theories provided in this paper into various


other fields, with more advanced development, will show much better results, surpassing 99%.

Additionally, the material covered in this paper can be applied to benefit the community through a variety of tangible services.

III. PHASES OF OCR

The whole procedure of converting handwritten as well as printed text into machine-encoded text is broadly divided into four simple phases:

Fig. 2. Phases of Optical Character Recognition

A. Pre Processing

In this phase, the image is scanned from top to bottom and converted into a gray-level image, which is then converted into a binary digital image. This process is sometimes termed digitization or binarization of the image. Various scanners are used for this phase, and the final digital image then goes to the next step.

B. Character Extraction

The pre-processed image from the previous step serves as the input to this step. Here, each single character of the image is recognized. The image is also converted from its normalized form to the window size in this step.

C. Segmentation

This is the most important step of the whole process, as it removes most of the noise from the image to make it more understandable. It segments characters into various zones, i.e., upper, middle and lower zones.

Segmentation is difficult in off-line recognition because of variability in paragraphs, in the words of a line and in character shape (slant, skew, curve, etc.). Sometimes this difficulty also arises from the overlapping of one or more characters.

D. Post Processing

In this phase, the features of every character are enhanced and extracted, so that every character can be classified in a unique way. If some unrecognized characters are found, they are also given some meaning. Extra templates can also be added in this phase to provide a wider range of compatibility checking against the system's database.

IV. APPLICATIONS OF OCR

Optical Character Recognition turns scanned documents into more than image files: it makes them readable as well as searchable text files that can be processed by computers. OCR has enormous application in a number of industries, such as legal, healthcare, banking, education, etc.

A. Banking

In banks, cheques are processed using OCR without any human involvement. The device searches and scans the inserted cheque for the writing in its various fields, and the amount is transferred to the payee. This whole process reduces the overall cheque processing time.

B. Healthcare

The use of OCR technology to process paperwork has also increased in the healthcare industry. The industry deals with a huge number of forms, such as patient details, medical history and insurance forms, so this technology is used to reduce effort and time.

C. Legal Industry

Documents are scanned, and the information is extracted and automatically entered into a database to save space. The time-consuming task of searching for information through boxes of paper is also eliminated, which makes it easy to locate any specific text or document. OCR has also given the legal industry easy, fast and readily available access to a huge library of documents.
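Locating a specific text or document after a collection has been converted by OCR is commonly supported by an index over the recognized text. A minimal sketch, assuming the OCR output is already available as plain strings keyed by hypothetical document ids: an inverted index maps each word to the documents that contain it, and a query returns the documents containing all of its words.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lower-case alphanumeric words; stray symbols from OCR noise are dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents: dict[str, str]) -> dict[str, set[str]]:
    """Inverted index: word -> ids of documents whose OCR text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in documents.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Ids of documents containing every word of the query (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))  # copy, so the index is not mutated
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


ocr_output = {
    "deed-001": "Sale deed executed between the parties on 12 March",
    "lease-042": "Lease agreement; sale of goods is excluded",
}
idx = build_index(ocr_output)
print(sorted(search(idx, "sale deed")))  # ['deed-001']
print(sorted(search(idx, "sale")))       # ['deed-001', 'lease-042']
```

A production system would add ranking and tolerance for OCR misreadings. The sketch only shows the lookup structure.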


D. Invoice Imaging

It is important to keep track of financial records to avoid a pile-up of backlog payments. Among other processes, OCR helps to simplify the collection and analysis of large sets of data. It is also used to decode the large amount of information stored in digital codes such as bar codes and QR codes.

E. Other Fields

OCR is also used extensively in many other areas, such as:

CAPTCHA - to prevent hacking;
Digital Libraries - sharing of digital teaching material;
Optical Music Recognition - to extract information from images;
Automatic Number Plate Recognition - to identify vehicle registration plates;
Handwriting Recognition; Education;
Maestro Recognition Server; Trapeze.

V. CHALLENGES OF OCR

OCR techniques require high-resolution images in which basic structural properties differentiate the text from the background, in order to achieve high accuracy in character recognition. Image generation plays an important role in determining the accuracy and success of recognition. Images generated by scanners give high performance and accuracy. Images generated by cameras, in contrast, contain numerous errors due to the surroundings and to camera-related factors. These errors are explained as follows:

A. Tilting

The image of a document obtained by a scanner is parallel to, and in line with, the plane of the sensor. This is not the case for images taken with hand-held devices. Text nearer to the camera appears slightly larger, while distant text appears smaller, which causes perspective distortion and results in tilted pictures. A recognizer that is not tolerant of perspective therefore has a lower recognition rate.

B. Scene Complexity

Images taken by portable devices in a regular environment generally include various artificial objects, such as buildings, symbols and cars. These objects make text recognition in the processed image very challenging, as their appearance and structure are comparable to the text around them. Text itself can appear in any form to encourage legibility, which makes separating text from non-text very intricate.

C. Conditions of uneven lighting

A major challenge for OCR is the degradation of text quality due to uneven lighting and shadows when images are taken in a natural environment. This results in poor detection and recognition of text. Shadows and uneven lighting are what differentiate images taken by cameras from those taken by scanners. Because of the lack of proper lighting in camera images, scanned images are preferred for their better text and character quality. These lighting problems can be reduced by using the camera flash, which, however, also leads to some new challenges.

D. Skewness

In OCR, the point of view (POV) of the input image may change when the image is taken with a camera or any hand-held device, which does not happen with scanner input. This change of point of view leads to skewing, which produces considerably poorer results when the image is processed. To overcome this problem, many deskew techniques are available, such as the RAST algorithm, Fourier transformation methods, projection profiles, etc.

E. Aspect ratio

The image of a document obtained by a scanner is parallel to, and in line with, the plane of the sensor. This is not the case for images taken with hand-held devices. Text nearer to the camera appears slightly larger, while distant text appears smaller, which causes perspective distortion and results in tilted pictures. A perspective-intolerant recognizer has a lower recognition rate and accuracy. The latest cell phones can detect when the device is tilted and can prevent users from taking pictures. This detection and prevention is done with the help of orientation sensors. These sensors also allow the camera to align the text with the plane of the form, resulting in a greater degree of evenness.
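Of the deskew techniques listed under D. Skewness, the projection-profile method is the simplest to illustrate. A minimal sketch, assuming a binarized page stored as a NumPy array that is non-zero at text pixels. For each candidate angle, the text pixels are rotated and projected onto the vertical axis. The angle whose row profile is sharpest is taken as the skew. The ±10° search range, the 0.5° step and the sharpness score (sum of squared differences between neighbouring rows) are illustrative choices, not values from the paper.

```python
import numpy as np


def estimate_skew(binary: np.ndarray, max_angle: float = 10.0,
                  step: float = 0.5) -> float:
    """Estimate text skew in degrees with a projection-profile search.

    binary: 2-D array, non-zero where a pixel belongs to text (row 0 at top).
    A positive result means the text lines descend towards the right.
    """
    ys, xs = np.nonzero(binary)
    if ys.size == 0:
        return 0.0
    best_angle, best_score = 0.0, -1.0
    for angle in np.arange(-max_angle, max_angle + step / 2, step):
        theta = np.deg2rad(angle)
        # Row coordinate of each text pixel after undoing a rotation by `angle`.
        rows = ys * np.cos(theta) - xs * np.sin(theta)
        span = rows.max() - rows.min()
        profile, _ = np.histogram(rows, bins=int(span) + 1)
        # Aligned lines give tall, narrow peaks, i.e. large jumps between rows.
        score = float(np.sum(np.diff(profile) ** 2))
        if score > best_score:
            best_angle, best_score = float(angle), score
    return best_angle


# Synthetic page: three 150-pixel text lines drawn with a 3-degree tilt.
page = np.zeros((220, 200), dtype=np.uint8)
for y0 in (50, 100, 150):
    for x in range(150):
        page[int(round(y0 + x * np.tan(np.deg2rad(3.0)))), x] = 1
print(estimate_skew(page))
```

The estimated angle can then be used to rotate the page back before segmentation. That rotation step is not shown.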


F. Warping

Characters overlapping one another can be another challenge to OCR precision. This situation arises when images are scanned using flatbed scanners, which capture a picture of the twisted text.

As a remedy, Ulges et al. introduced a technique called dewarping, which assumes that the text lines are equally spaced and parallel to each other.

G. Multilingual Environments

Scripts such as Chinese, Japanese and Korean contain a very large number of symbols and character classes. Arabic has characters with different written shapes. Hindi contains syllables that are made up of combinations of different shapes. Multilingual text has therefore become a primary problem in OCR.

H. Fonts

Using different styles and fonts for different characters can make them overlap with each other, which makes OCR difficult. Precisely accurate recognition is hard to achieve because of the variations within subclasses, which form pattern sub-spaces.

VI. SCOPE OF OCR

Nowadays, a diverse collection of OCR systems is available, but we still face many problems with them. OCR systems were earlier categorized into two groups:

- The first group includes machines specially designed to solve a specific set of problems. These machines are mostly hard-wired, which makes them somewhat expensive and also lowers throughput rates.
- The second group includes all software-based techniques, which use a computer or a low-cost scanner. Due to recent technological advances, this group is much more cost-effective and offers high throughput. However, these systems have a few limitations regarding speed and the set of characters they can read. They read the data line by line and transfer it to the OCR software.

OCR systems are now categorized into five different groups based on character sets, namely fixed-font, multi-font, omni-font, constrained handwriting and scripts.

Imperfections and irregularities in OCR systems are mainly due to problems that occur during the scanning phase, which usually result in inappropriate text or characters. These irregularities often lead to confusion between text and graphics, or between text and noise. Even perfectly scanned characters can cause errors when different characters have the same shapes and features, which makes it difficult for the system to recognize them exactly. We can therefore conclude that the precision of OCR depends on the quality of its input.

OCR has improved and advanced a great deal in recent years. It has progressed from reading only a limited set of characters, to reading characters in different fonts and styles, and further to reading handwritten text. Given these advances in technology, OCR can be expected to offer much more potential and better recognition in the coming years.

VII. CONCLUSION

This paper discusses a field of Artificial Intelligence, Optical Character Recognition: its types, its whole process and its applications in different areas.

Optical Character Recognition, or OCR, has made scanned documents more than image files, turning them into fully searchable, readable and editable text files that can be processed by computers.

Research in this area has been going on for more than half a century. The results have been striking, with successful recognition rates surpassing 99% and notable advancement in cursive handwritten character recognition. Further research in this area aims for more improvement and scope.


Prediction of Heart Attack Using Machine Learning

Akshit Bhardwaj, Ayush Kundra, Bhavya Gandhi, Sumit Kumar, Arvind Rehalia, Manoj Gupta

Department of Instrumentation & Control Engineering

Bharati Vidyapeeth's College of Engineering, Delhi-110063

Abstract- Cardiovascular diseases are one of the biggest causes of death of millions of people around the world, second only to cancer. A heart attack occurs when a blood clot blocks the blood flow to a part of the heart. If the clot cuts off the blood flow entirely, that part of the heart muscle begins to die. According to statistics, a heart problem can gradually start between the ages of 40 and 50 in people with an unhealthy diet and poor lifestyle choices. An early prognosis can therefore make a huge difference in their lives by motivating them towards a healthy and active life: by changing their lifestyle and diet, this risk can be controlled. This project intends to pinpoint the most relevant risk factors of heart disease as well as predict the overall risk using machine learning. The machine learning model, trained on a dataset of other individuals, predicts the likelihood of a patient getting heart disease. As a result, the probability of getting heart disease based on current lifestyle and diet is calculated. The model was trained with the Framingham Heart Study dataset.

Keywords: Heart Disease, Machine Learning, Logistic Regression, Cross-validation

I. INTRODUCTION

Machine learning is one of the most rapidly evolving fields of AI and is used in many areas of life, especially in healthcare. It has great value in the healthcare field because it is an intelligent tool for analysing data, and the medical field is rich with data. In the past few years, vast amounts of data have been collected and stored because of the digital revolution. Monitoring and other data-collection devices are available in modern hospitals and are used every day, so abundant amounts of data are being gathered. It is very hard, or even impossible, for humans to derive useful information from these massive amounts of data; that is why machine learning is now widely used to analyse these data and diagnose problems in the healthcare field.

Put simply, a machine learning algorithm learns from previously diagnosed cases of patients. A heart problem must be diagnosed quickly, efficiently and correctly in order to save lives. For this reason, researchers are interested in predicting the risk of heart disease and have created various heart-risk prediction systems using different machine learning techniques. The presence of missing and outlier data in the training set often hampers the performance of a model and leads to inaccurate predictions, so it is critical to treat missing and outlier values before making a prediction.

1. Missing: For a continuous variable, missing values can be found using the isnull() function. The mean of the data can also be used to impute them. We can also write an algorithm to predict the missing values.

2. Outlier: A scatter plot can be used to identify outliers; then, as needed, we can delete the data or apply transformation, binning, imputation or any other method.

The K-fold cross-validation method will be used to evaluate heart disease diagnosis on the data, which makes the result more accurate. 80% of the patients' data will be used for training and 20% for testing. Parameter tuning is also necessary if the accuracy is not close to 80%.

Logistic regression is the suitable regression analysis to perform when the dependent variable y is either 0 or 1. Like all regression models, logistic regression is a type of predictive analysis. Logistic regression is used to explain the relationship between the dependent variable, usually y, and various nominal, ordinal, interval or ratio-level independent variables (an array of x features).

3. Features have higher odds of explaining the variance in the dataset, thus giving improved


model accuracy. The dependent (target) variable should be binary/dichotomous in nature.

4. There should be no missing values or outliers in the data, which can be assessed by converting the continuous values to standardized scores.

5. There shouldn't be a high correlation among the predictors. This can be checked with a correlation matrix of the predictors. The regression analysis is the task of estimating the log of the odds of an event.

6. Statistical tools easily allow us to perform the analysis for better results. Adding independent variables to a logistic regression model will always increase the amount of variance, which would reduce the accuracy.

II. LITERATURE SURVEY

In the past few years, many projects related to heart disease risk prediction have been developed. This section discusses work carried out by various researchers in the field of medical diagnosis using machine learning.

Das et al [1] used deep learning to find odds ratios or prediction values from various analytical models and, with K-nearest neighbours, obtained 89.00% classification accuracy on the Cleveland heart disease dataset.

Anbarasi et al [2] used three binary classifiers, namely Naive Bayes, K-means clustering and Random forest, for heart attack risk prediction using 13 features, and then applied feature engineering for algorithm tuning, obtaining strong prediction results. They found that Random forest outperforms the other two binary classifiers, with an accuracy of 99.2% for binary classification. The accuracy of K-means was 88.3% and that of Naive Bayes was about 96.5%.

Zhang et al [3] suggested an effective heart attack prediction model using the Support Vector Machine (SVM) algorithm. Principal Component Analysis was applied to retrieve the important features, and different kernel functions were compared; the highest accuracy was found with the Radial Basis Function kernel. To get the optimum parameter values, grid search was used with the SVM. The maximum classification accuracy reached about 88.64%.

Vadicherla and Sonawane et al [4] proposed a minimal optimization technique of SVM for coronary heart disease prediction. This technique helps in training the SVM by looking for the optimal values during the training period. The minimal optimization technique provided good results even on a big dataset, and execution time was also reduced significantly.

Elshazly et al [5] presented a classifier called Genetic Algorithm SVM for bio-medical diagnosis, in which 18 features were reduced to 6 features via dimensionality reduction. Different kernel functions were used, and performance was compared in terms of measures such as accuracy, precision, recall, area under the curve (AUC) and F1 score. The results showed that the linear SVM classifier achieved 83.10% accuracy with an 82.60% true positive rate, 84.90% AUC and 82.70% F1 score.

III. PROPOSED SYSTEM

The machine learning technique used for the prediction of heart attack is logistic regression. The dataset used for analysis and training is taken from the Framingham Heart Study, a long-term, ongoing cardiovascular cohort study of residents of the city of Framingham, Massachusetts. The study began in 1948 with 5,209 adult subjects from Framingham and is now on its third generation of participants. The dataset can be found at www.framinghamheartstudy.org

This research intends to pinpoint the relevant risk factors of heart disease as well as predict the overall risk using logistic regression. Mathematically, logistic regression uses a sigmoid function. Logistic regression predicts a categorical outcome, unlike linear regression, which predicts a continuous value. The logistic function is a sigmoid function that maps any real value to a value between 0 and 1:

$$S(y) = \frac{1}{1 + e^{-y}}$$

Consider y as a linear function, as in a regression analysis:

$$y = \beta_0 + \beta_1 x$$

Substituting y into the sigmoid function S(y) gives the probability p:

$$p = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x)}}$$

Solving this logistic function for the linear term gives the logit, which for the predictors used in this work is

$$\operatorname{logit}(p) = \log\frac{p}{1 - p} = \beta_0 + \beta_1\,\mathrm{Sexmale} + \beta_2\,\mathrm{age} + \beta_3\,\mathrm{cigsPeryear} + \beta_4\,\mathrm{totChol} + \beta_5\,\mathrm{BP} + \beta_6\,\mathrm{heartrate} + \beta_7\,\mathrm{BMI}$$

Here, β0 is the regression constant, p/(1 − p) is the odds of the event, and βk is the coefficient of predictor k, where k = 1, 2, ..., 7.

testing datasets using cross-validation.

d. Algorithm Tuning

The aim of parameter tuning is to find the best value for each parameter to improve the accuracy of the ML model. To tune the parameters, we must have a good knowledge of their impact on the output. This process can be repeated for other algorithms.
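The tuning step, together with the 80/20 split and K-fold cross-validation described in the introduction, can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the tuned parameter is an L2 penalty strength (the text does not name one), fits the logistic model with plain batch gradient descent rather than a library solver, uses five folds, and trains on synthetic two-feature data from the hypothetical helper `make_synthetic()` in place of the Framingham dataset. The grid values are likewise illustrative.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function S(z) = 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logreg(X, y, l2=0.0, lr=0.5, epochs=150):
    """Fit intercept b0 and slopes w by batch gradient descent on the mean
    log-loss plus an assumed L2 penalty (l2 / 2) * ||w||^2 on the slopes."""
    n, d = len(X), len(X[0])
    b0, w = 0.0, [0.0] * d
    for _ in range(epochs):
        g0, gw = 0.0, [0.0] * d
        for xi, yi in zip(X, y):
            err = sigmoid(b0 + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            g0 += err
            for j in range(d):
                gw[j] += err * xi[j]
        b0 -= lr * g0 / n  # the intercept (beta_0) is not penalised
        w = [wj - lr * (gj / n + l2 * wj) for wj, gj in zip(w, gw)]
    return b0, w


def accuracy(model, X, y, threshold=0.5):
    """Share of rows whose thresholded probability matches the 0/1 label."""
    b0, w = model
    hits = 0
    for xi, yi in zip(X, y):
        p = sigmoid(b0 + sum(wj * xj for wj, xj in zip(w, xi)))
        hits += int((p >= threshold) == (yi == 1))
    return hits / len(y)


def k_fold_score(X, y, l2, k=5):
    """Mean validation accuracy over k contiguous folds (rows assumed shuffled)."""
    n = len(X)
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    scores, start = [], 0
    for size in sizes:
        stop = start + size
        train = [i for i in range(n) if i < start or i >= stop]
        model = train_logreg([X[i] for i in train], [y[i] for i in train], l2=l2)
        scores.append(accuracy(model, X[start:stop], y[start:stop]))
        start = stop
    return sum(scores) / k


def make_synthetic(n=200, seed=0):
    """Hypothetical stand-in data with two standardised features (NOT Framingham)."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        p = sigmoid(-0.5 + 2.0 * x[0] - 1.0 * x[1])  # assumed "true" coefficients
        X.append(x)
        y.append(1 if rng.random() < p else 0)
    return X, y


X, y = make_synthetic()
cut = int(0.8 * len(X))  # 80% training, 20% testing
X_train, y_train, X_test, y_test = X[:cut], y[:cut], X[cut:], y[cut:]

grid = [0.0, 0.01, 0.1, 1.0]  # illustrative penalty values
cv_scores = {l2: k_fold_score(X_train, y_train, l2) for l2 in grid}
best_l2 = max(cv_scores, key=cv_scores.get)
final_model = train_logreg(X_train, y_train, l2=best_l2)
test_acc = accuracy(final_model, X_test, y_test)
print("best l2:", best_l2, "test accuracy:", round(test_acc, 3))
```

The penalty is chosen only from cross-validated scores on the training 80%, and the held-out 20% is used once, for the final estimate. Swapping `train_logreg` for another model while keeping the same loop is one way to "repeat this process for other algorithms".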

e. Results and Analysis

The machine learning model and implementation of a