Conference Paper · October 2010

DOI: 10.1145/1873951.1874156 · Source: DBLP


Context-based Indoor Object Detection as an Aid to Blind

Persons Accessing Unfamiliar Environments

Xiaodong Yang, Yingli Tian Chucai Yi Aries Arditi

Dept. of Electrical Engineering The Graduate Center Arlene R Gordon Research Institute

The City College of New York The City University of New York Lighthouse International

NY 10031, USA NY 10016, USA New York, NY 10222, USA

{xyang02, ytian}@ccny.cuny.edu cyi@gc.cuny.edu aarditi@lighthouse.org

ABSTRACT vision impairment to independently find doors, rooms, elevators,

Independent travel is a well known challenge for blind or visually stairs, bathrooms, and other building amenities in unfamiliar

impaired persons. In this paper, we propose a computer vision- indoor environments. Computer vision technology has the

based indoor wayfinding system for assisting blind people to potential to assist blind individuals to independently access,

independently access unfamiliar buildings. In order to find understand, and explore such environments.

different rooms (i.e. an office, a lab, or a bathroom) and other

building amenities (i.e. an exit or an elevator), we incorporate

door detection with text recognition. First we develop a robust and

efficient algorithm to detect doors and elevators based on general

geometric shape, by combining edges and corners. The algorithm

is generic enough to handle large intra-class variations of the

object model among different indoor environments, as well as

small inter-class differences between different objects such as

doors and elevators. Next, to distinguish an office door from a

bathroom door, we extract and recognize the text information

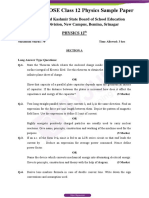

associated with the detected objects. We first extract text regions (a) (b) (c) (d)

from indoor signs with multiple colors. Then text character

Figure 1: Typical indoor objects (top row) and their

localization and layout analysis of text strings are applied to filter

associated contextual information (bottom row). (a) a

out background interference. The extracted text is recognized by

bathroom, (b) an exit, (c) a laboratory, (4) an elevator.

using off-the-shelf optical character recognition (OCR) software

products. The object type, orientation, and location can be Computer vision-based indoor object detection is a challenging

displayed as speech for blind travelers. research area due to following factors: 1) there are large intra-

class variations of appearance and design of objects in different

Categories and Subject Descriptors architectural environments; 2) there are relatively small inter-class

I.4.8 [Scene Analysis]: Object Recognition variations of different object models. As shown in Fig. 1, the basic

shapes of a bathroom, an exit, a laboratory, and an elevator are

General Terms very similar. It is very difficult to distinguish them without using

Algorithms, Design. the associated context information; 3) compared to objects with

enriched texture and color in natural scene or outdoor

Keywords environments, most indoor objects are man-made with less

Indoor wayfinding, computer vision, object detection, text texture. Existing feature descriptors which work well for outdoor

extraction, blind/visually impaired persons. environments may not effectively represent indoor objects; and 4)

when a blind user moves with wearable cameras, the changes of

1. INTRODUCTION position and distance between the user and the object will cause

Robust and efficient indoor object detection can help people big view variations of the objects, as well as only part of the

with severe vision impairment to independently access unfamiliar objects is captured. Indoor wayfinding aid should be able to

indoor environments. While GPS-guided electronic wayfinding handle object occlusion and view variations.

aids show much promise in outdoor environments, there is still a To improve the ability of people who are blind or have

lack of orientation and navigation aids to help people with severe significant visual impairments to independently access,

understand, and explore unfamiliar indoor environments, we

propose a new framework using a single camera to detect and

recognize doors, and elevators, incorporating text information

Permission to make digital or hard copies of all or part of this work for associated with the detected object. In order to discriminate

personal or classroom use is granted without fee provided that copies are similar objects in indoor environments, the text information

not made or distributed for profit or commercial advantage and that copies

associated with the detected objects is extracted. The extracted

bear this notice and the full citation on the first page. To copy otherwise,

or republish, to post on servers or to redistribute to lists, requires prior text is then recognized by using off-the-shelf optical character

specific permission and/or a fee. recognition (OCR) software. The object type and relative position

MM’10, October 25–29, 2010, Firenze, Italy. are displayed as speech for blind travelers.

Copyright 2010 ACM 978-1-60558-933-6/10/10...$10.00.
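The framework outlined in the Introduction (object detection, then text reading, then speech output) can be pictured as a small data flow. The sketch below is only an illustration of that flow: the `Detection` container, the `announce` and `process_frame` helpers, and the wording of the spoken message are all hypothetical and do not come from the paper. The detection and OCR stages are passed in as placeholder callables.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    """One door-like object found in a camera frame (hypothetical container)."""
    object_type: str                  # e.g. "door" or "elevator"
    position: str                     # "left", "front", or "right" relative to the user
    sign_text: Optional[str] = None   # text recognized on the associated sign, if any


def announce(d):
    """Compose the sentence that would be handed to a speech synthesizer.

    The message wording is an assumption made for this sketch.
    """
    label = d.object_type
    if d.sign_text:
        label = f"{label} labeled {d.sign_text}"
    where = "in front of you" if d.position == "front" else f"on your {d.position}"
    sentence = f"{label} {where}"
    return sentence[0].upper() + sentence[1:]


def process_frame(frame, detect_doors, read_sign):
    """Run the stages in order: detect objects, read nearby text, compose speech.

    detect_doors(frame) yields (object_type, position, sign_region) tuples and
    read_sign(sign_region) returns recognized text or None. Both callables are
    placeholders standing in for the stages described in the paper.
    """
    messages = []
    for object_type, position, sign_region in detect_doors(frame):
        text = read_sign(sign_region) if sign_region is not None else None
        messages.append(announce(Detection(object_type, position, text)))
    return messages


if __name__ == "__main__":
    # Stub stages so the flow can be exercised without a camera or an OCR engine.
    fake_detect = lambda frame: [("door", "left", "sign-1"), ("elevator", "front", None)]
    fake_ocr = lambda region: "EXIT"
    for message in process_frame(None, fake_detect, fake_ocr):
        print(message)
```

Passing the stages in as callables keeps the sketch independent of any particular detector or OCR engine.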

2. INDOOR OBJECT DETECTION

2.1 Door Detection

Among indoor objects, doors play a significant role for navigation and provide transition points between separated spaces as well as entrance and exit information. Most existing door detection approaches for robot navigation employed laser range finders or sonar to obtain distance data to refine the detection results from cameras [1, 3, 9]. However, the limited portability, high power consumption, and high cost of these systems, with their complex and multiple sensors, make them inappropriate for visually impaired people. To reduce the cost and complexity of the device and computation and to enhance portability, we use a single camera. A few algorithms using monocular visual information have been developed for door detection [2, 7]. Most existing algorithms are designed for specific environments and cannot be extended to unfamiliar environments. Some cannot discriminate doors from typical large rectangular objects in indoor environments, such as bookshelves, cabinets, and cupboards.

In this paper, our door detection method extends the algorithm in our earlier paper [11]. We built a general geometric model which consists of four corners and four bars. The model is robust to variations in color, texture, and occlusion. Considering the diversity and variance of appearance of doors in different environments, we utilized general and stable features, edges and corners, to implement door detection.

2.2 Relative Position Determination

For an indoor wayfinding device to assist blind or visually impaired persons, we also need, after detecting the door, to indicate the door’s position to the user. We classify the relative position as Left, Front, and Right as shown in Fig. 2. L_L and L_R denote the length of the left vertical bar and the length of the right vertical bar of a door in an image. From the perspective projection, if a door is located on the left side of a camera, then L_L is larger than L_R; if a door is located on the right side of a camera, then L_L is smaller than L_R; if a door is located in front of a camera, the difference between L_L and L_R is smaller than a threshold. Therefore, we can infer the relative position of a door with respect to the observer by comparing the values of L_L and L_R. In practice, to avoid scale variance, we use the angle formed by the horizontal bar and the horizontal axis of an image to determine the relative position of a door.

Figure 2: Examples of door position determination: (a) Left, (b) Front, and (c) Right.

2.3 Distinguish Concave and Convex Objects

Depth information plays an important role in indoor object recognition, as well as in robotics, scene understanding and 3-D reconstruction. In most cases, doors are recessed into a wall, especially the doors of elevators. Other large objects with door-like shape and size, such as bookshelves and cabinets, extend outward from a wall. In order to distinguish concave (e.g. doors and elevators) and convex (e.g. bookshelves, cabinets) objects, we propose a novel and simple algorithm by integrating the lateral information of a detected doorframe to obtain the depth information with respect to the wall.

Figure 3: Concave and convex models. (a-b) concave objects; (c-d) convex objects.

Due to their different geometric characteristics and different relative positions, concave and convex objects show their laterals in different positions with respect to the detected “doorframe”, as shown in Fig. 3. In Fig. 3(a), since L_L < L_R, the elevator door is located on the right side with respect to the user. Furthermore, the elevator door, a concave object, shows its lateral (C1-C2-C5-C6) on the left side of the doorframe (C1-C2-C3-C4). In Fig. 3(c), since L_L < L_R, the bookshelf is located on the right side and, as a convex object, shows its lateral (C4-C3-C5-C6) on the right side of the frame (C1-C2-C3-C4). Similar relations can be found in Fig. 3(b) and (d). Therefore, combining the position of a “doorframe” and the position of its lateral, we can determine the convexity or concavity of the “doorframe”, as shown in Table 1. Note that the position of a frame is relative to the user; the position of a lateral is relative to the frame.

Table 1. Convexity or concavity determination combining the position of a frame and the position of a lateral.

| Position of a frame | Position of a lateral | Convexity or Concavity |
|---|---|---|
| Left | Left | Convex |
| Left | Right | Concave |
| Right | Left | Concave |
| Right | Right | Convex |

3. ALGORITHM OF TEXT EXTRACTION, LOCALIZATION, AND RECOGNITION

The context information includes different kinds of visual features. Our system focuses on finding and extracting text from signage. Many efforts have been made to extract text regions from scene images [4, 6, 8, 10]. We propose a robust algorithm to extract text from complex backgrounds with multiple colors. Structural features of single text characters and layout analysis of text strings containing at least 3 character members are both employed to refine the detection and localization of text regions.

3.1 Text Region Extraction

3.1.1 Color Decomposition

In order to extract text information from indoor signage with complex background, we first design a robust algorithm to extract the coarse regions of interest (ROI) that are very likely to contain
text information. We observe that each text string generally has a uniform color in most indoor environments. Thus color decomposition is performed to separate image regions with identical colors into different color layers. Each color layer contains only one foreground color with a white background, as shown in Fig. 4.

Figure 4: Two color layers are extracted from an exit door. (a) the original image; (b-c) different color layers extracted by color decomposition.

3.1.2 Text Region Extraction

After color decomposition, we extract text regions based on the structure of text characters. Text characters, which are composed of strokes and major arcs, are well aligned along the direction of the text string they belong to. In each color layer, the well-structured characters result in a high frequency of binary switches from foreground to background and vice versa. Generally, text strings on indoor signage have layouts with a dominant horizontal direction with respect to the image frame. We apply a 1 × N sliding window to calculate the binary switches around the center pixel P of the window. In our system, we choose N = 13. If at least 2 binary switches occur inside the sliding window, the corresponding central pixel is assigned BS(P)=1 as foreground, otherwise BS(P)=0 as background, as shown in Fig. 5(a). In the binary switch (BS) map, the foreground clusters correspond to regions which are most likely to contain text information.

Figure 5: (a) No binary switch occurs within the sliding window in cyan while binary switches are detected within the one in orange; (b) the extracted text regions.

To extract the text regions, an accumulated calculation is developed to locate the rows and columns that belong to the regions in the BS map by Eq. (1), where H(i) and V(j) denote the horizontal projection at row i and the vertical projection at column j in the BS map, respectively.

…, …, Row ∈ TextRegion
…, …, Col ∈ TextRegion    (1)

The extracted text region is presented in Fig. 5(b). The non-text regions will further be filtered out based on the structure of text characters. In the extracted ROIs, text characters with a larger font size than their neighbors are often truncated because their extended parts contain fewer binary switches than the other text characters. Thus we either extend the text regions to cover the complete boundaries or remove the truncated boundaries.

3.2 Text Localization

To localize text characters and filter out non-text regions from the coarse extracted ROIs, we use a text structure model to identify letters and numbers. This model is based on the fact that each of these characters is shaped by a closed edge boundary which has proper size and aspect ratio with no more than two holes (e.g. “A” contains one hole and “8” contains two). Based on the observation that text information usually appears as a string rather than a single character, layout analysis of text strings is taken into account, including the area variance and the neighboring character distance variance of a text string, as shown in Fig. 6. The only assumption is that a text string has at least three characters in uniform color and linear alignment.

To recognize the extracted text information, we employ off-the-shelf OCR software to translate the text information from image into readable text codes. OCR software cannot read the text information directly from indoor sign images without performing text region extraction and text localization. In our experiment, Tesseract and OmniPage OCR software are used for text recognition. Tesseract is open-source without a graphical user interface (GUI). OmniPage is commercial software with a GUI and performs better than Tesseract in our tests.

Figure 6: In the top row, the inconsistency of |EF| and |MN| leads to separation of two text strings and the arrow is filtered out; in the bottom row, the area of M demonstrates inconsistency because it is much larger than its neighbors.

4. EXPERIMENT AND DISCUSSION

We have constructed a database containing 221 images collected from a wide variety of environments to test the performance of the proposed door detection algorithm and text recognition. The database includes both door and non-door images. Door images include doors and elevators with different colors and texture, and doors captured under different viewpoints, illumination changes, and occlusions. Non-door images include door-like objects, such as bookshelves and cabinets. Furthermore, we categorize the database into three groups: Simple (57 images), Medium (113 images), and Complex (51 images), based on the complexity of backgrounds, intensity of deformation, and occlusion, as well as the changes of illumination and scale.

We evaluated the proposed algorithm with and without the function that differentiates doors from door-like convex objects. For images with a resolution of 320×240, the proposed algorithm achieves a 92.3% true positive rate with a false positive rate of 5.0% without convex object detection. With convex object detection, the algorithm achieves an 89.5% true positive rate with a false positive rate of 2.3%. Table 2 displays the details of detection results for each category with and without convexity detection. Some examples of door detection are illustrated in Fig. 7. Our text region extraction
algorithm performs well on extracting text regions from indoor signage and localizing text characters in those regions. OCR is employed on the extracted text regions for text recognition. The last row of Fig. 7 shows the text recognized by OCR as readable codes on the extracted text regions. Of course, if we had input the unprocessed images of the indoor environment directly into the OCR engine, only messy codes would have been produced.

Table 2. Door detection results for groups of “Simple”, “Medium”, and “Complex”

| Data Category | True Positive Rate | False Positive Rate | Convexity Detection |
|---|---|---|---|
| Simple | 98.2% | 0% | Yes |
| Simple | 98.2% | 1.8% | No |
| Medium | 90.5% | 3.5% | Yes |
| Medium | 91.4% | 6.2% | No |
| Complex | 80.0% | 2.0% | Yes |
| Complex | 87.8% | 5.9% | No |
| Average | 89.5% | 2.3% | Yes |
| Average | 92.3% | 5.0% | No |

Figure 7: The top row shows the detected doors and regions containing text information; the middle row shows the extracted and binarized text regions; the bottom row shows the text recognition results of OCR in text regions.

As shown in Table 2, from “Simple” to “Complex”, the true positive rate decreases, and the false positive rate rises above its “Simple” level, as the complexity of the background increases. Convexity detection is effective at lowering the false positive rate while largely maintaining the true positive rates of “Simple” and “Medium”. In images with highly complex backgrounds, some background corners adjacent to a door constitute a spurious lateral, so the door is detected as a convex object and eliminated. However, considering the safety of blind users, the lower false positive rate is more desirable. Text extraction results in a purified background while text localization provides the text positions for OCR. But some background interference with similar color or size to text characters is still preserved, because the pixel-based algorithms cannot distinguish it from text by high-level structure.

5. CONCLUSION

We have presented a computer vision-based indoor wayfinding aid to assist blind persons accessing unfamiliar environments by incorporating text information with door detection. A novel and robust door detection algorithm is proposed to detect doors and discriminate them from other objects with large rectangular shape such as bookshelves and cabinets. In order to help blind people distinguish an office door from a restroom door, we have proposed a new method to extract and recognize text from indoor signage. The recognized text is associated with the detected door and presented to blind persons as audio. Our future work will focus on detecting and recognizing more types of indoor objects and icons on signage, in addition to text, for an indoor wayfinding aid that helps blind people travel independently. We will also study the significant human interface issues including auditory output and spatial updating of object location, orientation, and distance. With real-time updates, blind users will be able to better use spatial memory to understand the surrounding environment.

6. ACKNOWLEDGMENTS

This work was supported by NSF grant IIS-0957016 and NIH grant EY017583.

7. REFERENCES

[1] Anguelov, D., Koller, D., Parker, E., and Thrun, S. Detecting and modeling doors with mobile robots. In Proceedings of the IEEE International Conference on Robotics and Automation, 2004.

[2] Chen, Z. and Birchfield, S. Visual Detection of Lintel-Occluded Doors from a Single Image. IEEE Computer Society Workshop on Visual Localization for Mobile Platforms, 2008.

[3] Hensler, J., Blaich, M., and Bittel, O. Real-time Door Detection Based on AdaBoost Learning Algorithm. International Conference on Research and Education in Robotics, Eurobot 2009.

[4] Kasar, T., Kumar, J., and Ramakrishnan, A. G. Font and Background Color Independent Text Binarization. Second International Workshop on Camera-Based Document Analysis and Recognition, 2007.

[5] Liu, C., Wang, C., and Dai, R. Text Detection in Images Based on Unsupervised Classification of Edge-based Features. International Conference on Document Analysis and Recognition, 2005.

[6] Liu, Q., Jung, C., and Moon, Y. Text Segmentation Based on Stroke Filter. Proceedings of International Conference on Multimedia, 2006.

[7] Murillo, A. C., Kosecka, J., Guerrero, J. J., and Sagues, C. Visual door detection integrating appearance and shape cues. Robotics and Autonomous Systems, 2008.

[8] Shivakumara, P., Huang, W., and Tan, C. An Efficient Edge based Technique for Text Detection in Video Frames. The Eighth IAPR Workshop on Document Analysis Systems, 2008.

[9] Stoeter, S., Mauff, F., and Papanikolopoulos, N. Realtime door detection in cluttered environments. In Proceedings of the 15th IEEE International Symposium on Intelligent Control, 2000.

[10] Wong, E. and Chen, M. A new robust algorithm for video text extraction. Pattern Recognition 36, 2003.

[11] Yang, X. and Tian, Y. Robust Door Detection in Unfamiliar Environments by Combining Edge and Corner Features. IEEE Computer Society Workshop on Computer Vision Applications for the Visually Impaired, 2010.
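As a supplementary illustration of the binary switch (BS) map described in Section 3.1.2, the following is a minimal sketch in Python with NumPy. It assumes one color layer is given as a 2-D array whose nonzero pixels are foreground. It uses the window size N = 13 and the "at least 2 switches" rule from the text. The function names, the truncation of windows at image borders, and the definition of H(i) and V(j) as plain row and column sums of the BS map are assumptions of this sketch, not details confirmed by the paper. The thresholds of Eq. (1) are not reproduced.

```python
import numpy as np


def binary_switch_map(layer, n=13, min_switches=2):
    """Return BS(P) for every pixel of one color layer.

    A pixel is set to 1 when the 1 x n horizontal window centered on it
    contains at least `min_switches` foreground/background transitions.
    Windows are truncated at the image border (an assumption of this sketch).
    """
    fg = (np.asarray(layer) != 0).astype(np.int32)
    rows, cols = fg.shape
    # switches[:, k] is 1 when pixels k and k + 1 differ.
    switches = np.abs(np.diff(fg, axis=1))
    # prefix[:, m] = number of transitions among pixels 0..m of each row.
    prefix = np.concatenate(
        [np.zeros((rows, 1), dtype=np.int32), np.cumsum(switches, axis=1)],
        axis=1,
    )
    half = n // 2
    centers = np.arange(cols)
    left = np.clip(centers - half, 0, cols - 1)    # first pixel in each window
    right = np.clip(centers + half, 0, cols - 1)   # last pixel in each window
    counts = prefix[:, right] - prefix[:, left]    # transitions inside the window
    return (counts >= min_switches).astype(np.uint8)


def projections(bs_map):
    """Row and column sums of the BS map, taken here as H(i) and V(j)."""
    h = bs_map.sum(axis=1)   # one value per row i
    v = bs_map.sum(axis=0)   # one value per column j
    return h, v


if __name__ == "__main__":
    # Synthetic layer: one row of alternating pixels stands in for text strokes.
    layer = np.zeros((5, 40), dtype=np.uint8)
    layer[2, 10:30:2] = 1
    bs = binary_switch_map(layer)
    h, v = projections(bs)
    print("rows with BS foreground:", np.nonzero(h)[0])
    print("columns with BS foreground:", np.nonzero(v)[0])
```

The prefix sum lets each window count be read off with one subtraction, so the cost per pixel does not grow with N.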
