Hybrid Face Recognition with Locally Discriminating Projection

Venkatrama Phani Kumar S, KVK Kishore, K Hemanth Kumar

Vignans Engineering College, Guntur, A.P., India

ABSTRACT

The face recognition task involves extracting unique features from the human face. Manifold learning methods project the original data into a lower-dimensional feature space while preserving the local neighborhood structure. Locality Preserving Projections (LPP) should be seen as an alternative to Principal Component Analysis (PCA). When the high-dimensional data lies on a low-dimensional manifold embedded in the ambient space, the Locality Preserving Projections are obtained by finding the optimal linear approximations to the eigenfunctions of the Laplace-Beltrami operator on the manifold. However, LPP is an unsupervised feature extraction method because it does not consider class information. Locally Discriminating Projection (LDP) is a recently proposed feature extraction method. Unlike PCA and Linear Discriminant Analysis (LDA), which aim to preserve the global Euclidean structure, LDP is an extension of LPP that seeks to preserve the intrinsic geometric structure by learning a locality preserving submanifold. LDP is a supervised feature extraction method because it considers both local and label information. LDP performs much better than other feature extraction methods such as PCA and Laplacianfaces.

In this paper, LDP combined with wavelet features is proposed to enhance the class structure of the data with local and directional information. The face image is decomposed into different subbands using the discrete wavelet transform (DWT) with the bior3.7 wavelet, and the subbands that contain discriminatory information are used for feature extraction with LDP. Face databases are generally large, so they need more memory and more training time; dimensionality reduction is therefore needed to improve time and space complexity. This is achieved by using both the biorthogonal wavelet transform and LDP: the extracted features take less space and less time for training.

Experimental results on the ORL face database suggest that LDP with DWT provides a better representation, achieves lower error rates than LDP without wavelets, and has lower time complexity. The subband faces perform much better than the original image in the presence of variations in lighting, expression and pose. This is because the subbands that contain discriminatory information for face recognition are selected for face representation and the others are discarded.

Keywords: Dimensionality reduction, LPP, LDP, bior3.7.

I INTRODUCTION

The best-known face recognition techniques are appearance based: an image of size n x m pixels is represented by a vector in an n x m dimensional space. Feature extraction from the original data is an important step in pattern recognition tasks, and it projects the input data onto a feature space. In unsupervised learning, feature extraction constructs the most representative features out of the original data. In supervised learning, feature extraction maps the original data onto a discriminative feature space in which the samples from different classes are clearly separated.

Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) preserve the global structure. The eigenface method uses PCA for dimensionality reduction and maximizes the total scatter across all classes. PCA retains unwanted variations due to lighting and facial expressions. PCA projects the original data onto a lower-dimensional subspace spanned by the eigenvectors corresponding to the largest eigenvalues of the covariance matrix of the data of all classes. Eigenfaces are the eigenvectors of the set of faces. PCA aims to extract a subspace in which the variance is maximized. Its objective function is

$$\max_{w} \sum_{i=1}^{n} (y_i - \bar{y})^2, \qquad \bar{y} = \frac{1}{n} \sum_{i=1}^{n} y_i ,$$

where $y_i = w^T x_i$ is the projection of the $i$-th sample. The output set of principal vectors $\{w_1, w_2, \ldots, w_k\}$ is an orthonormal set of vectors representing the eigenvectors of the covariance matrix associated with the $k < d$ largest eigenvalues.

Fisher Linear Discriminant Analysis is a classical feature extraction technique. In contrast with PCA, it makes use of the label information, hence it is a supervised feature extraction technique. LDA maximizes the ratio of the between-class distance to the within-class distance and thereby enhances class separability. The between-class and within-class scatter matrices are

$$S_B = \sum_{i=1}^{c} n_i (\bar{x}_i - \bar{x})(\bar{x}_i - \bar{x})^T$$

$$S_W = \sum_{i=1}^{c} \sum_{j=1}^{n_i} (x_j^{(i)} - \bar{x}_i)(x_j^{(i)} - \bar{x}_i)^T$$

The objective function is

$$\max_{w} \frac{w^T S_B w}{w^T S_W w}$$

where $\bar{x}$ is the total sample mean vector, $n_i$ is the number of samples in the $i$-th class, $\bar{x}_i$ is the average vector of the $i$-th class, and $x_j^{(i)}$ is the $j$-th sample in the $i$-th class.
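As a minimal sketch of the LDA quantities above, the following NumPy code computes the between-class and within-class scatter matrices and evaluates the Fisher criterion for a candidate direction. The function names `scatter_matrices` and `fisher_ratio` and the toy data are illustrative assumptions, not part of the original method; samples are assumed to be stored as rows.

```python
import numpy as np


def scatter_matrices(X, labels):
    """Return (S_B, S_W) for samples stored as rows of X."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    total_mean = X.mean(axis=0)
    d = X.shape[1]
    S_B = np.zeros((d, d))
    S_W = np.zeros((d, d))
    for c in np.unique(labels):
        Xc = X[labels == c]
        class_mean = Xc.mean(axis=0)
        diff = (class_mean - total_mean)[:, None]
        # n_i (mean_i - mean)(mean_i - mean)^T
        S_B += Xc.shape[0] * (diff @ diff.T)
        centered = Xc - class_mean
        # sum_j (x_j - mean_i)(x_j - mean_i)^T
        S_W += centered.T @ centered
    return S_B, S_W


def fisher_ratio(w, S_B, S_W):
    """Fisher criterion w^T S_B w / w^T S_W w for a direction w."""
    w = np.asarray(w, dtype=float)
    return float(w @ S_B @ w) / float(w @ S_W @ w)


# Toy data (hypothetical): two classes separated along the first axis.
X_toy = np.array([[0.0, 0.0], [0.0, 1.0], [4.0, 0.0], [4.0, 1.0]])
y_toy = np.array([0, 0, 1, 1])
S_B_toy, S_W_toy = scatter_matrices(X_toy, y_toy)
```

A quick consistency check for any such implementation is that the two matrices sum to the total scatter matrix of the centered data.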

II Proposed Method

The proposed face recognition system consists of five modules: (1) Face Cropping, (2) Face Normalization, (3) Image Compression, (4) Feature Extraction with LDP and (5) Classification. To reduce the computational complexity, all the face images are cropped. The motivation for this work is that existing feature extraction methods generate feature vectors with 70-85% or more discrimination, while achieving 100% discrimination for the feature vectors of face images is very difficult. This is due to the similarity that exists among the features of the images stored in the database: the images differ only by small variations, which may not be enough to produce feature vectors with the required amount of discrimination.

2010 International Conference on Signal Acquisition and Processing

978-0-7695-3960-7/10 $26.00 2010 IEEE

DOI 10.1109/ICSAP.2010.68


Wavelets have been successfully used in image processing. Their ability to capture localized time-frequency information of images motivates their use for feature extraction. Numerous techniques have been developed to make the imaging information more easily accessible and to perform analysis automatically. Wavelet transforms have proven useful for signal and image processing. Wavelets are applied to various aspects of imaging informatics, and image compression is a major application area. Because the original image can be represented by a linear combination of the wavelet basis functions, compression can be performed on the wavelet coefficients. The wavelet approach is therefore a powerful tool for data compression, especially for functions with long-range slow variations and short-range sharp variations. For image compression, it is necessary to choose a symmetric wavelet with a high number of vanishing moments, according to the properties of the image. The classical wavelets have the drawback that they cannot be custom-designed for a particular application, and most of them are not symmetric.

A biorthogonal wavelet is a wavelet whose associated wavelet transform is invertible but not necessarily orthogonal. Designing biorthogonal wavelets allows more degrees of freedom than designing orthogonal wavelets. One additional degree of freedom is the possibility of constructing symmetric wavelet functions. The architecture of the proposed method is shown in Figure 1.

The features of the wavelet transform are multiresolution, locality, sparsity and decorrelation. These properties make the wavelet domain of natural images more suitable for feature extraction for face recognition than the direct spatial domain.
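To illustrate what a subband decomposition produces, here is a minimal NumPy sketch of a 2-D wavelet decomposition. Note the swap: it uses the Haar wavelet rather than bior3.7, purely so that the example stays self-contained; the function names `haar_dwt2` and `approximation_at_level` are hypothetical, and the naming of the three detail subbands follows one common convention among several.

```python
import numpy as np


def haar_dwt2(image):
    """One level of a 2-D Haar transform; returns (LL, LH, HL, HH)."""
    a = np.asarray(image, dtype=float)
    if a.shape[0] % 2 or a.shape[1] % 2:
        raise ValueError("image sides must be even")
    s = np.sqrt(2.0)
    # Low-pass and high-pass filtering across pairs of adjacent columns.
    lo = (a[:, 0::2] + a[:, 1::2]) / s
    hi = (a[:, 0::2] - a[:, 1::2]) / s
    # The same filtering across pairs of adjacent rows.
    LL = (lo[0::2, :] + lo[1::2, :]) / s   # approximation subband
    LH = (lo[0::2, :] - lo[1::2, :]) / s   # detail subband
    HL = (hi[0::2, :] + hi[1::2, :]) / s   # detail subband
    HH = (hi[0::2, :] - hi[1::2, :]) / s   # detail subband
    return LL, LH, HL, HH


def approximation_at_level(image, levels):
    """Repeatedly decompose the approximation subband `levels` times."""
    ll = np.asarray(image, dtype=float)
    for _ in range(levels):
        ll = haar_dwt2(ll)[0]
    return ll


# Each level halves both image sides, so the feature count drops by 4x.
demo_subbands = haar_dwt2(np.arange(16.0).reshape(4, 4))
```

With the PyWavelets package installed, the analogous single-level call for the wavelet named in this paper would be `pywt.dwt2(image, 'bior3.7')`, which returns the approximation and a tuple of three detail subbands.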

Figure 1: Architecture of the Proposed Method

III LOCALLY DISCRIMINATING PROJECTION

In classification problems, the class labels of the training samples are available, and this label information can be used to guide the feature extraction procedure. This is supervised feature extraction, in contrast to unsupervised feature extraction methods, which take no account of the label information. From the theoretical derivation, it is easy to see that LPP is an unsupervised feature extraction method, since it makes no use of the class information. Some preliminary efforts have already been made to extend LPP into a supervised feature extraction method, in which the similarity matrix W is defined as

$$W_{ij} = \begin{cases} 1 & \text{if } x_i \text{ and } x_j \text{ belong to the same class} \\ 0 & \text{otherwise} \end{cases} \tag{1}$$

The basic idea behind it is to make two points become the same point in the feature space if they belong to the same class. However, this can make the algorithm apt to overfit the training data and sensitive to noise. Moreover, it can make the neighborhood graph of the input data disconnected, which contradicts the basic idea of LPP. In this situation LPP has a close connection to LDA. It means that the manifold structure of the data, which is also very important for classification, is distorted. To make the LPP algorithm more robust for classification tasks, the LDP method is proposed. LDP is a recent method that differs from PCA and LDA, which aim to preserve the global Euclidean structure. LDP is an extension of LPP that preserves the intrinsic geometric structure by learning a locality preserving submanifold. Unlike LPP, however, LDP is a supervised feature extraction method.

LPP is based on spectral graph theory. It is assumed that the n-dimensional subband faces $(x_1, x_2, \ldots, x_N)$ are distributed on a low-dimensional submanifold. It is desired to find a set of d-dimensional data points $(y_1, y_2, \ldots, y_N)$ with the same local neighborhood structure as $(x_1, x_2, \ldots, x_N)$. A weighted graph $G = (V, E, W)$ is constructed, where V is the set of all points, E is the set of edges connecting the points, and W is a similarity matrix whose weights characterize the likelihood of two points. W can be defined as

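$$W_{ij} = \begin{cases} \exp\left(-\dfrac{\|x_i - x_j\|^2}{\beta}\right) & \text{if } \|x_i - x_j\|^2 < \varepsilon \\ 0 & \text{otherwise} \end{cases}$$

or, in the k-nearest-neighbor variant, the first case applies if $x_i$ is among the k nearest neighbors of $x_j$ or $x_j$ is among the k nearest neighbors of $x_i$. The given observations are $(x_i, \tau_i)$, where $i = 1, \ldots, N$ and $\tau_i$ is the class label of $x_i$. The discriminating similarity between two points $x_i$ and $x_j$ is defined as follows.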

$$W_{ij} = \begin{cases} \exp\left(-\dfrac{\|x_i - x_j\|^2}{\beta}\right)\left(1 + \exp\left(-\dfrac{\|x_i - x_j\|^2}{\beta}\right)\right) & \text{case 1} \\ \exp\left(-\dfrac{\|x_i - x_j\|^2}{\beta}\right)\left(1 - \exp\left(-\dfrac{\|x_i - x_j\|^2}{\beta}\right)\right) & \text{case 2} \\ 0 & \text{case 3} \end{cases} \tag{2}$$

Case 1: $x_i$ is among the k nearest neighbors of $x_j$ or $x_j$ is among the k nearest neighbors of $x_i$, and $\tau_i = \tau_j$.

Case 2: $x_i$ is among the k nearest neighbors of $x_j$ or $x_j$ is among the k nearest neighbors of $x_i$, and $\tau_i \neq \tau_j$.

Case 3: otherwise.

[Figure 1 block labels: Faces, Face Cropping, Image Normalization, Normalized Face Images, DWT, Subbands, Face sub Images, LDP, Feature vectors, Recognition with Euclidean Distance, Face ID]

Since the Euclidean distance $\|x_i - x_j\|$ is in the exponent, the parameter $\beta$ is used as a regulator. The selection of $\beta$ is a problem in itself, since it controls the overall scale or smoothing of the space. A very low value of $\beta$ implies that $W_{ij}$ in the above equation will be near zero for all but the closest points; a low value of $\beta$ therefore minimizes the connections between the points. A very high value of $\beta$ implies that $W_{ij}$ is close to the $W_{ij}$ in equation (1), so equation (2) can be viewed as a generalization of equation (1). Usually the value of $\beta$ can be chosen as the square of the average Euclidean distance between all pairs of data points. In equation (2) the discriminating similarities of points in the same class are larger than those in LPP, whereas the discriminating similarities of points in different classes are smaller than those in LPP. This is more favorable for classification tasks.

Let $\exp(-\|x_i - x_j\|^2/\beta)$ be the local weight, and let $(1 + \exp(-\|x_i - x_j\|^2/\beta))$ and $(1 - \exp(-\|x_i - x_j\|^2/\beta))$ be the intra-class discriminating weight and the inter-class discriminating weight, respectively. Then the discriminating similarity can be viewed as the integration of the local weight and the discriminating weight. It means that the discriminating similarity reflects both the local neighborhood structure and the class information of the data set. The properties of the discriminating similarity and the corresponding advantages can be summarized as follows.

Property 1: When the Euclidean distance is equal, the intra-class similarity is larger than the inter-class similarity. This gives points in the same class a certain chance to be more similar, i.e., to have a larger value of similarity, than those in different classes. This is suitable for classification tasks.

Property 2: Since $1 \le (1 + \exp(-\|x_i - x_j\|^2/\beta)) \le 2$ and $0 \le (1 - \exp(-\|x_i - x_j\|^2/\beta)) \le 1$, no matter how strong the noise, the intra-class and inter-class similarity can be controlled in certain ranges, respectively. This prevents the neighborhood relationship from being forcefully distorted, and the main geometric structure of the data set can largely be preserved.

Property 3: With the decreasing of the Euclidean distance, the inter-class discriminating weight decreases toward 0. It means that close points from different classes should have a smaller value of similarity. Thus the margin between different classes becomes larger than that in LPP. On the other hand, with the increasing of the Euclidean distance, the local weight decreases toward 0.
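The discriminating similarity and its three properties can be checked numerically with a small pure-Python sketch. The function names, the use of `beta` for the regulator parameter, and the symmetric k-nearest-neighbor test are assumptions made for illustration; this is not the authors' implementation.

```python
import math


def discriminating_similarity(dist_sq, same_class, beta):
    """Similarity of two neighboring points given their squared distance."""
    local = math.exp(-dist_sq / beta)          # local weight
    if same_class:
        return local * (1.0 + local)           # times intra-class weight
    return local * (1.0 - local)               # times inter-class weight


def similarity_matrix(points, labels, k, beta):
    """Dense similarity matrix over a symmetric k-nearest-neighbor graph."""
    n = len(points)
    d2 = [[sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
           for j in range(n)] for i in range(n)]
    # k nearest neighbors of each point, excluding the point itself.
    knn = [set(sorted((j for j in range(n) if j != i),
                      key=lambda j, i=i: d2[i][j])[:k])
           for i in range(n)]
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and (j in knn[i] or i in knn[j]):
                W[i][j] = discriminating_similarity(
                    d2[i][j], labels[i] == labels[j], beta)
    return W


# Hypothetical 1-D toy set: two tight pairs from two classes.
W_demo = similarity_matrix([(0.0,), (1.0,), (5.0,), (6.0,)],
                           [0, 0, 1, 1], k=1, beta=1.0)
```

At zero distance the intra-class value reaches its maximum of 2 and the inter-class value is 0, while both vanish for distant points, which matches the bounded ranges and margin behavior described in the properties.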

The decay of the local weight with distance endows the discriminating similarity with the ability to suppress noise, i.e., the more distant points from the same class should be less similar to each other. Both aspects indicate that the discriminating similarity can strengthen the power of margin augmentation and noise suppression.

Because of these good properties, the discriminating similarity can be used in classification tasks. Since the discriminating similarity integrates both the local information and the label information, the novel algorithm is called LDP. LDP can map the data into a low-dimensional space where points belonging to the same class are close to each other while those belonging to different classes are far away from each other. At the same time, the main manifold structure of the original data can be preserved. Moreover, LDP can limit the effect of noise and augment the margin between different classes. Thus LDP can be used to design a robust classification method for real-world problems. To summarize, the classification has four steps as follows.

1. Construct the discriminating similarity of any two data points. For each sample $x_i$, set the similarity $W_{ij} = W_{ji} = \exp(-\|x_i - x_j\|^2/\beta)\,(1 + \exp(-\|x_i - x_j\|^2/\beta))$ if $x_j$ is among the k-nearest neighbors of $x_i$ and $\tau_i = \tau_j$; set the similarity $W_{ij} = W_{ji} = \exp(-\|x_i - x_j\|^2/\beta)\,(1 - \exp(-\|x_i - x_j\|^2/\beta))$ if $x_j$ is among the k-nearest neighbors of $x_i$ and $\tau_i \neq \tau_j$.

2. Obtain the diagonal matrix D, with $D_{ii} = \sum_j W_{ij}$, and the Laplacian matrix $L = D - W$.

3. Optimize

$$\Phi^{*} = \arg\min_{\Phi^T X X^T \Phi = I} \operatorname{trace}(\Phi^T X L X^T \Phi).$$

This can be done by solving the generalized eigenvalue problem $X L X^T \varphi = \lambda X X^T \varphi$.

4. Map the given query using the projection matrix $\Phi^{*}$ and then predict its class label using a given classifier.

IV Experimental Results

Image cropping:

Image cropping is the removal of the outer parts of an image to improve framing, accentuate the subject matter or change the aspect ratio.

Face Normalization:

Normalization is a process that changes the range of pixel intensity values and excludes background information.

Gamma Transformation:

The grayscale components are mapped either to brighten the intensity (gamma < 1) or to darken the intensity (gamma > 1).

$$f(x) = \begin{cases} \alpha x & 0 \le x \le a \\ \beta (x - a) + f(a) & a < x \le b \\ \gamma (x - b) + f(b) & b < x \le x_{\max} \end{cases}$$
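The three-segment intensity mapping above can be sketched directly in Python. The function name `piecewise_linear` and the breakpoint and slope values in the example are hypothetical; the code only assumes that $0 \le a \le b$ and that the input intensity is non-negative.

```python
def piecewise_linear(x, a, b, alpha, beta, gamma):
    """Three-segment intensity mapping with breakpoints a and b."""
    f_a = alpha * a                  # value at the first breakpoint
    f_b = beta * (b - a) + f_a       # value at the second breakpoint
    if x <= a:
        return alpha * x
    if x <= b:
        return beta * (x - a) + f_a
    return gamma * (x - b) + f_b


# Hypothetical setting: compress shadows and highlights, stretch mid-tones.
mapped = [piecewise_linear(x, a=64, b=192, alpha=0.5, beta=1.5, gamma=0.5)
          for x in (0, 64, 128, 192, 256)]
```

Because each segment starts at the value where the previous one ends, the mapping is continuous at both breakpoints; with these example slopes the input range 0 to 256 is mapped back onto 0 to 256.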


Figure 2: Normalized and Original face images

By the transfer law $D_B = f(D_A)$, the full range of the input is mapped to the full range of the output. All pixels in the input image with densities in the region $D_A$ to $D_A + dD_A$ will have their pixel values re-assigned such that they assume an output pixel density value in the range from $D_B$ to $D_B + dD_B$. Figure 2 shows the normalized and original sample face images of ORL.

Global projection suppresses local information, and it is not resilient to face illumination conditions and facial expression variation. It does not take the discriminative task into account. Ideally, we wish to compute features that allow good discrimination, which is not the same as largest variance. PCA is performed on a mixture of different training images similar to the unknown images which are to be recognized. LDA maximizes the ratio of between-class variance to within-class variance in any particular data set, thereby guaranteeing maximal separability. LDA performs data classification to obtain a non-redundant set of features: only the eigenvectors corresponding to non-zero eigenvalues are considered, and the ones corresponding to zero eigenvalues are neglected. LPP and LDP preserve the local geometric structure. In short, the recognition process has three steps. First, we calculate the face subspace from the training samples; then the new face image to be identified is projected into the d-dimensional subspace by using our algorithm; finally, the new face image is identified by a nearest neighbor classifier. Comparatively, a Laplacian face has more discriminating features than Eigen and Fisher faces. These faces are shown in Figure 3.

Figure 3: Eigen, Fisher and Laplacian faces

Locally discriminating projection has better recognition capability than the remaining face detection methods. The recognition (true positive) rates are tabulated in Table 1, and Plot 1 shows that the recognition capability of the proposed method is better than that of LDP. The proposed method also has the same recognition capability with consideration of fewer features. Error rates (False Rejection Rate) with different algorithms are shown in Table 2.
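The three recognition steps described above (learning the subspace, projecting, nearest-neighbor matching) can be sketched with NumPy as follows. This is a sketch under stated assumptions, not the authors' code: every pair of samples is treated as neighbors (k = N - 1), the normalization uses $X X^T$ as in the constraint of Section III, a small ridge term is added so the Cholesky factorization exists, and all function names and the toy data are hypothetical.

```python
import numpy as np


def discriminating_weights(X, labels, beta=None):
    """Similarity matrix for samples stored as columns of X (all pairs)."""
    sq = ((X[:, :, None] - X[:, None, :]) ** 2).sum(axis=0)
    n = X.shape[1]
    if beta is None:
        # Square of the average pairwise Euclidean distance.
        beta = (np.sqrt(sq).sum() / (n * (n - 1))) ** 2
    local = np.exp(-sq / beta)
    same = labels[:, None] == labels[None, :]
    W = np.where(same, local * (1.0 + local), local * (1.0 - local))
    np.fill_diagonal(W, 0.0)
    return W


def fit_projection(X, W, n_components, ridge=1e-8):
    """Solve X L X^T v = lambda X X^T v for the smallest eigenvalues."""
    L = np.diag(W.sum(axis=1)) - W                 # graph Laplacian
    A = X @ L @ X.T
    B = X @ X.T + ridge * np.eye(X.shape[0])       # assumed regularization
    C = np.linalg.cholesky(B)                      # B = C C^T
    M = np.linalg.solve(C, np.linalg.solve(C, A).T).T   # C^-1 A C^-T
    M = (M + M.T) / 2.0                            # remove round-off asymmetry
    _, U = np.linalg.eigh(M)                       # ascending eigenvalues
    return np.linalg.solve(C.T, U[:, :n_components])


def nearest_neighbor_label(V, X_train, labels, query):
    """Project training set and query, return the label of the closest one."""
    Y = V.T @ X_train
    y = V.T @ query
    return labels[int(np.argmin(np.linalg.norm(Y - y[:, None], axis=0)))]


# Hypothetical toy data: two classes of three 2-D samples (as columns).
X_train = np.array([[0.0, 1.0, 0.5, 5.0, 6.0, 5.5],
                    [0.0, 0.2, 1.0, 5.0, 5.2, 6.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])
W_train = discriminating_weights(X_train, y_train)
V1 = fit_projection(X_train, W_train, n_components=1)
predicted = nearest_neighbor_label(V1, X_train, y_train, np.array([0.4, 0.3]))
```

The Cholesky-based reduction is one way to turn the generalized problem into an ordinary symmetric one using NumPy alone; a library routine for generalized symmetric eigenproblems would serve equally well.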

Plot 2 shows that the FRR values of the proposed method are lower than those of the original LDP. The face images are decomposed up to level 3 and compressed up to half of their original size, so the time taken for training on the face images also decreases drastically. After the fourth and fifth decompositions the recognition ability decreases drastically because fewer features are considered. The faces after 5 decompositions are shown in Figure 4.

Figure 4: Decomposed Faces

The PCA ratio is one of the dimension reduction factors. If the PCA ratio is equal to one, all the eigenvectors are considered and there is no dimensionality reduction. Hence by decreasing the PCA ratio value we achieve a lower dimensionality of the Laplacian faces. The size of the Laplacian faces with different PCA ratio values is shown in Table 3. Plot 3 shows that the error rate is higher for low PCA ratios; hence by increasing the PCA ratio we get a higher recognition rate.

By modifying the minimum Euclidean distance value, the system identifies an unauthorized person. Table 4 shows the error rates with different minimum Euclidean distances.

Plot 1: True Negative

Plot 2: False Rejection Rate

Plot 3: PCA Ratio Graph
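One possible reading of the PCA ratio is sketched below. It assumes, and this is an assumption rather than something the text states, that the ratio is the fraction of the total eigenvalue sum to be retained, so that a ratio of one keeps every eigenvector; the function name `components_for_ratio` is hypothetical.

```python
def components_for_ratio(eigenvalues, pca_ratio):
    """Smallest number of leading components reaching the requested ratio."""
    vals = sorted(eigenvalues, reverse=True)
    if pca_ratio >= 1.0:
        return len(vals)              # ratio of one: no reduction at all
    total = sum(vals)
    running = 0.0
    for count, value in enumerate(vals, start=1):
        running += value
        if running / total >= pca_ratio:
            return count
    return len(vals)


# Hypothetical spectrum: lowering the ratio keeps fewer components.
kept = [components_for_ratio([4.0, 3.0, 2.0, 1.0], r) for r in (0.5, 0.75, 1.0)]
```

Under this reading, lowering the ratio trades recognition accuracy for a smaller feature vector, which is the trend reported for Table 3.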


Plot 4: FAR Plot 5: Tr

And the plot 4 shows with min

distance as 1e+013 has less false acceptanc

the authorized persons are identified an

persons are rejected and Table 5 shows th

values with different minimum euclidean d

5 shows with minimum euclidean distance

unauthorized persons are correctly identified

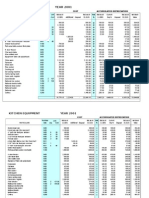

Algorithm 0 1 2 3

PCA 97 96.5 96 96.5

LDA 97.25 96.75 96 97.25

LPP 98 97.75 97.75 98.25

LDP 98.25 98.5 98.5 98.75

Table 1: True Positiv

Algorithm 0 1 2 3

PCA 3 3.5 4 3.5

LDA 2.75 3.25 4 2.75

LPP 2 2.25 2.25 1.75

LDP 1.75 1.5 1.5 1.25

Table 2: False Rejection R

PCA Ratio 0 1 2

0.5 26% 26.7% 26%

0.6 20% 16% 14.75%

0.75 4% 7.75% 5.25%

0.99 1.25% 1.5% 1.5%

1.1 1.5% 2.25% 2%

Table 3: PCA Ratio

Euclidean Distance 0 1

1e+012 0 0

5e+012 0 0

1e+013 0 45

5e+013 0 98

1e+014 14 100

Table 4: False Acceptan

Euclidean Distance 0 1

1e+012 100 100

5e+012 100 100

1e+013 100 45

5e+013 100 2

1e+014 86 0

Table 5: True Negative

rue Negative

nimum euclidean

ce rate (FAR) all

nd unauthorized

he True Negative

distances and plot

e 1e+013 all the

d.

4 5

5 95.5 95

5 95.75 95.25

5 97 96

5 97.25 96.75

ve

4 5

4.5 5

4.25 4.75

3 4

2.75 3.25

Rate

3

19%

% 13.75%

5%

1.25%

2%

2 3

0 0

0 0

0 0

0 0

82 0

nce Rate

2 3

0 100 100

0 100 100

5 100 100

100 100

18 100
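How a distance threshold produces the false rejection, false acceptance and true negative rates can be sketched as follows. The decision rule (accept when the nearest-match distance is at most the threshold), the function name `verification_rates` and the toy distances are assumptions for illustration and are not taken from the experiments above.

```python
def verification_rates(genuine_distances, impostor_distances, threshold):
    """Return (FRR, FAR, TNR) in percent for an accept-if-close rule."""
    # Authorized probes whose best match is too far are falsely rejected.
    false_rejects = sum(d > threshold for d in genuine_distances)
    # Unauthorized probes whose best match is close enough are falsely accepted.
    false_accepts = sum(d <= threshold for d in impostor_distances)
    frr = 100.0 * false_rejects / len(genuine_distances)
    far = 100.0 * false_accepts / len(impostor_distances)
    return frr, far, 100.0 - far


# Hypothetical nearest-match distances for authorized and unauthorized probes.
rates = verification_rates([1.0, 2.0, 3.0, 9.0], [4.0, 8.0, 10.0, 12.0],
                           threshold=5.0)
```

Raising the threshold lowers the false rejection rate but raises the false acceptance rate, so the true negative rate falls as the threshold grows.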

V Conclusion

In this paper the LDP feature extraction method is applied to the subband face (reconstructed from wavelet subbands) for the face recognition task. The experimental results on the ORL face database show the effectiveness of the proposed method. The subband faces perform much better than the original image in the presence of variations in lighting, expression and pose. This is because the subbands which contain discriminatory information for face recognition are selected for face representation and the others are discarded.

Venkatrama Phani Kumar S did his post-graduation in Mathematics at Acharya Nagarjuna University in 2007. He is currently working towards his M.Tech CSE project at Vignans Engineering College under the supervision of Prof. KVK Kishore and Assoc. Prof. K Hemantha Kumar. His research interests include digital image processing, pattern recognition and numerical linear algebra.

Prof. K.V. Krishna Kishore received his M.Tech degree in Computer Science and Engineering from Andhra University, Visakhapatnam. He is currently working as Professor and Head of the Computer Science and Engineering Department. He has more than 17 years of teaching and research experience. His current research interests include digital image processing, biometric security, neural networks, pattern recognition and medical imaging.

Mr. K. Hemantha Kumar received his M.Tech degree in Computer Science and Engineering from Andhra University, Visakhapatnam. He is currently working as Associate Professor in the Computer Science and Engineering Department. He has more than 10 years of teaching experience. His current research interests include digital image processing, biometric security and pattern recognition.
