Professional Documents

Culture Documents

03 - Data Mining

03 - Data Mining

Uploaded by

Dd dCopyright:

Available Formats

You might also like

- Lecture 3-Know Your Data - MDocument19 pagesLecture 3-Know Your Data - MKhizar ShahidNo ratings yet

- Data Understanding and Visualization (Part-1) : Homework-2Document4 pagesData Understanding and Visualization (Part-1) : Homework-2muhammad mubasherNo ratings yet

- Lecture 10Document44 pagesLecture 10QUANG ANH B18DCCN034 PHẠMNo ratings yet

- DocumentsData PreprocessingDocument102 pagesDocumentsData PreprocessingRoy DeepNo ratings yet

- Data Warehousing and Mining: Dr. Hossen Asiful MustafaDocument49 pagesData Warehousing and Mining: Dr. Hossen Asiful MustafaSheikh AbujarNo ratings yet

- ML 1,2 Unit Peter Flach Machine Learning. The Art and SciencDocument22 pagesML 1,2 Unit Peter Flach Machine Learning. The Art and Scienckanchan khadeNo ratings yet

- Unit 2 - Data PreprocessingDocument23 pagesUnit 2 - Data Preprocessingevanjames038No ratings yet

- Wk. 3. Data (12-05-2021)Document57 pagesWk. 3. Data (12-05-2021)Nusrat BadhonNo ratings yet

- Data Mining Notes C2Document12 pagesData Mining Notes C2wuziqiNo ratings yet

- FullDocument367 pagesFulladitijain3727No ratings yet

- Pokok Bahasan 02-1 DataDocument70 pagesPokok Bahasan 02-1 DataSatria ArifNo ratings yet

- DWDM Unit6-Data Similarity MeasuresDocument40 pagesDWDM Unit6-Data Similarity MeasuresmounikaNo ratings yet

- CH 2Document96 pagesCH 2楊喻妃No ratings yet

- DM - P4 - Data Dan Preprocessing DataDocument45 pagesDM - P4 - Data Dan Preprocessing DataAcip SuracipNo ratings yet

- 02 DataDocument77 pages02 DataOscar WongNo ratings yet

- Unit 1 Part 1Document53 pagesUnit 1 Part 1TECHMASTER HUBNo ratings yet

- DWM MergedDocument388 pagesDWM MergedamulyaNo ratings yet

- Data PreprocessDocument82 pagesData PreprocessRa AbhishekNo ratings yet

- DataDocument84 pagesData20je0426HritikGuptaNo ratings yet

- Source Materials: Data Mining: Concepts and Techniques, Machine LearningDocument53 pagesSource Materials: Data Mining: Concepts and Techniques, Machine LearningVrushali Vilas BorleNo ratings yet

- Lect 2 DM Converted 1Document29 pagesLect 2 DM Converted 1Manahil NoorNo ratings yet

- Getting To Know Your Data: - Chapter 2Document63 pagesGetting To Know Your Data: - Chapter 2Moneeb AbbasNo ratings yet

- 01 DataDocument100 pages01 Data20bme094No ratings yet

- Lesson 2.1 - Know Your Data PDFDocument43 pagesLesson 2.1 - Know Your Data PDFTayyaba FaisalNo ratings yet

- Chapter 2 Data IssuesDocument21 pagesChapter 2 Data IssuesBama Raja SegaranNo ratings yet

- CH 01Document11 pagesCH 01ohmumu140No ratings yet

- IT326 - Ch2Document44 pagesIT326 - Ch2عبده شعواNo ratings yet

- Chap2 DataDocument86 pagesChap2 Dataomaima.msds002229No ratings yet

- Lecture Notes For Chapter 2 Introduction To Data MiningDocument34 pagesLecture Notes For Chapter 2 Introduction To Data MiningraadNo ratings yet

- Unit IDocument57 pagesUnit IShivraj DeshmukhNo ratings yet

- Concepts and Techniques: Data MiningDocument65 pagesConcepts and Techniques: Data MiningPantherNo ratings yet

- Data Mining: DataDocument50 pagesData Mining: DataAbdul AowalNo ratings yet

- Lecture Notes For Chapter 2 Introduction To Data Mining: by Tan, Steinbach, KumarDocument66 pagesLecture Notes For Chapter 2 Introduction To Data Mining: by Tan, Steinbach, KumarAbu Kafsha100% (1)

- 2.1 Data Types and Attributes: AttributeDocument23 pages2.1 Data Types and Attributes: AttributeAnand Kumar BhagatNo ratings yet

- Sess02 DataDocument96 pagesSess02 DataKriti SinhaNo ratings yet

- Penggalian Data & Analitika Bisnis: Faculties Teknologi Informasi - ITSDocument69 pagesPenggalian Data & Analitika Bisnis: Faculties Teknologi Informasi - ITSGalih KhairulfalahNo ratings yet

- Attribute Oriented AnalysisDocument27 pagesAttribute Oriented AnalysisJoveliza Angelia TrongcosoNo ratings yet

- Concepts and Techniques: - Chapter 2Document65 pagesConcepts and Techniques: - Chapter 2kogladNo ratings yet

- Data Mining Chapter 2 Data PreprocessingDocument33 pagesData Mining Chapter 2 Data PreprocessingBulmi HilmeNo ratings yet

- Data Preprocessing IIDocument21 pagesData Preprocessing IIDhruvi ThakrarNo ratings yet

- Chpater 2 PDFDocument44 pagesChpater 2 PDFBilalNo ratings yet

- Lecture Notes For Chapter 2: by Tan, Steinbach, KumarDocument25 pagesLecture Notes For Chapter 2: by Tan, Steinbach, KumaryoniazharNo ratings yet

- Lec02 DataAndDataTypesDocument76 pagesLec02 DataAndDataTypesDewi NovitaNo ratings yet

- CH 8Document30 pagesCH 8api-3832575No ratings yet

- Module 2 2022Document19 pagesModule 2 2022Shabnam SaniyaNo ratings yet

- Lec 2Document26 pagesLec 2tin nguyenNo ratings yet

- CL 2Document85 pagesCL 2Rajiv Ranjan SahNo ratings yet

- Data Preprocessing Part 1Document42 pagesData Preprocessing Part 1A04-Apoorva PardhiNo ratings yet

- Week 5 - Data Mining Exploring Data With RDocument146 pagesWeek 5 - Data Mining Exploring Data With RYna ForondaNo ratings yet

- DWDM AR16 Unit 1.2Document14 pagesDWDM AR16 Unit 1.2GODDU NAVVEN BABUNo ratings yet

- DM Preprocessing Lec4,5Document36 pagesDM Preprocessing Lec4,5Haardik MishraNo ratings yet

- Lecture Notes For Chapter 2 Introduction To Data Mining, 2 EditionDocument87 pagesLecture Notes For Chapter 2 Introduction To Data Mining, 2 Editionعبد الحافظ عبد الحافظNo ratings yet

- R21 DM Unit1Document77 pagesR21 DM Unit1Asif EE-010No ratings yet

- Knowing Your DataDocument43 pagesKnowing Your DataYogesh PandeyNo ratings yet

- Modified Module 2-DMDocument107 pagesModified Module 2-DMVaishnavi MNo ratings yet

- DMDW 2Document68 pagesDMDW 2Anu agarwalNo ratings yet

- Data Mining Chapter 2 NotesDocument87 pagesData Mining Chapter 2 NotesMd Mahfoozur RahmanNo ratings yet

- UNIT-3 Foundations of Deep LearningDocument32 pagesUNIT-3 Foundations of Deep LearningbhavanaNo ratings yet

- Linn & Petersen (1985) PDFDocument21 pagesLinn & Petersen (1985) PDFMuhammad IkbalNo ratings yet

- Appendix PAINTDocument14 pagesAppendix PAINTBibi NafiisahNo ratings yet

- DAE SSPS 2004 PresentationDocument32 pagesDAE SSPS 2004 PresentationRavi Kumar ChNo ratings yet

- Term Paper Challenges of Parents As Teachers in Modular Learning ApproachDocument20 pagesTerm Paper Challenges of Parents As Teachers in Modular Learning ApproachJames RoxasNo ratings yet

- Latch Up Failure in IGBTDocument3 pagesLatch Up Failure in IGBTgetssyNo ratings yet

- AquacultureDocument2 pagesAquacultureTarek AlmahdyNo ratings yet

- Vertex Distance CompensationDocument11 pagesVertex Distance CompensationDaniDenzongpaSulaeman100% (1)

- Sas 18 Acc115 QuizDocument7 pagesSas 18 Acc115 Quizloga.tecson.auNo ratings yet

- Chapter Wise Test Paper: Made by Digraj Singh RajputDocument2 pagesChapter Wise Test Paper: Made by Digraj Singh Rajputanshsoni722009No ratings yet

- Call 4 Paper 19th IBCAST 2022Document14 pagesCall 4 Paper 19th IBCAST 2022AliNo ratings yet

- Cummins Engine Oil RecommendationsDocument16 pagesCummins Engine Oil RecommendationsAndaru GunawanNo ratings yet

- Hard Work Is More Important Than TalentDocument2 pagesHard Work Is More Important Than TalentSadman RashidNo ratings yet

- Factors Influencing The PerceptionDocument1 pageFactors Influencing The PerceptionTinesh Kumar100% (1)

- TOPIC 6 Examining Causation Relationship Among Your VariablesDocument7 pagesTOPIC 6 Examining Causation Relationship Among Your VariablesgarnetbinzaNo ratings yet

- Linear Optimization-7-7-17Document35 pagesLinear Optimization-7-7-17preetmehtaNo ratings yet

- Teaching and LearningDocument7 pagesTeaching and Learningshiela50% (2)

- Bastiaanse Why Reference To The Past Is Difficult For Agrammatic SpeakersDocument21 pagesBastiaanse Why Reference To The Past Is Difficult For Agrammatic SpeakersShawnNo ratings yet

- 1 PBDocument13 pages1 PBAyu Endang AprianiNo ratings yet

- Reply Code Challenge 2019 - Teen Edition - TeleportationDocument2 pagesReply Code Challenge 2019 - Teen Edition - Teleportationavash royNo ratings yet

- Scheduling of Vehicles From A Central Depot To A Number of Delivery PointsDocument15 pagesScheduling of Vehicles From A Central Depot To A Number of Delivery PointsMy NguyễnNo ratings yet

- Reaction Paper (Magnifico)Document2 pagesReaction Paper (Magnifico)Melay AlmoradoNo ratings yet

- Wilkinson CatalystDocument19 pagesWilkinson Catalystjagabandhu_patraNo ratings yet

- The Ultimate PowerDocument95 pagesThe Ultimate Powerlyesss21100% (22)

- 6 Matrix Chain M UltiplicationDocument19 pages6 Matrix Chain M UltiplicationArslan Ahmed DanishNo ratings yet

- SAR AI PaperDocument26 pagesSAR AI PaperDharmesh PanchalNo ratings yet

- ENVIRONMENTAL CONTROL SYSTEMS (3681) Lec 2-3Document73 pagesENVIRONMENTAL CONTROL SYSTEMS (3681) Lec 2-3shariq talatNo ratings yet

- Ped 4-MethodsDocument21 pagesPed 4-MethodsAndrea LyccaNo ratings yet

- MDEA 508 AssignmentDocument8 pagesMDEA 508 AssignmentMorgan NtindaNo ratings yet

- Sexist MicroaggressionsDocument32 pagesSexist MicroaggressionsCarolina SantosNo ratings yet

- Design of Channel With Flexible LiningDocument21 pagesDesign of Channel With Flexible Liningmamay papayNo ratings yet

03 - Data Mining

03 - Data Mining

Uploaded by

Dd dCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

03 - Data Mining

03 - Data Mining

Uploaded by

Dd dCopyright:

Available Formats

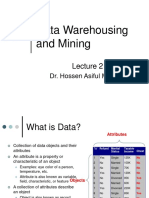

Data Mining:

Data Quality

& Proximity Measures

Data Mining - Lecture 3

Important Characteristics of Structured Data

Dimensionality

Number of attributes each object is described with

Challenge: high dimensionality (curse of dimensionality)

Sparsity

Sparse data: values of most attributes are zero

Challenge: sparse data call for special handling (Only presence counts)

Resolution

Data properties often could be measured with different resolutions

Challenge: decide on the most appropriate resolution

Data Mining - Lecture 3 2

Attribute Values

Attribute values are numbers or symbols assigned

to an attribute

Distinction between attributes and attribute

values

Same attribute can be mapped to different attribute

values

Example: height can be measured in feet or meters

Different attributes can be mapped to the same set of

values

Example: Attribute values for ID and age are integers

But properties of attribute values can be different

ID has no limit but age has a maximum and minimum value

Data Mining - Lecture 3 3

Types of Attributes

There are different types of attributes

Nominal

Examples: ID numbers, eye color, zip codes

Ordinal

Examples: rankings (e.g., taste of potato chips on a scale

from 1-10), grades, height in {tall, medium, short}

Interval

Examples: calendar dates, temperatures in Celsius or

Fahrenheit.

Ratio

Examples: temperature in Kelvin, length, time, counts

Data Mining - Lecture 3 4

Properties of Attribute Values

The type of an attribute depends on which of the

following properties it possesses:

Distinctness: =

Order: < >

Addition: + -

Multiplication: */

Nominal attribute: distinctness

Ordinal attribute: distinctness & order

Interval attribute: distinctness, order & addition

Ratio attribute: all 4 properties

Data Mining - Lecture 3 5

Attribute Type Description Examples

Nominal The values of a nominal attribute are just zip codes, employee ID

different names, i.e., nominal attributes numbers, eye color, gender

provide only enough information to

distinguish one object from another. (=, )

Ordinal The values of an ordinal attribute provide hardness of minerals,

enough information to order objects. (<, >) {good, better, best},

grades, street numbers

Interval For interval attributes, the differences calendar dates, temperature

between values are meaningful, i.e., a unit in Celsius or Fahrenheit

of measurement exists.

(+, - )

Ratio For ratio variables, both differences and temperature in Kelvin,

ratios are meaningful. (*, /) monetary quantities,

counts, age, mass, length,

electrical current

Data Mining - Lecture 3 6

Attribute Transformation

A function that maps the entire set of values of a

given attribute to a new set of replacement

values such that each old value can be identified

with one of the new values

Simple functions: xk, log(x), ex, |x|

Standardization and Normalization

Data Mining - Lecture 3 7

Attribute Transformation Comments

Level

Nominal Any permutation of values If all employee ID numbers

were reassigned, would it

make any difference?

Ordinal An order preserving change of values, An attribute encompassing the

i.e., notion of good, better best can

new_value = f(old_value) be represented equally well by

where f is a monotonic function. the values {1, 2, 3} or by {

0.5, 1, 10}.

Interval new_value =a * old_value + b where Thus, the Fahrenheit and

a and b are constants Celsius temperature scales

differ in terms of where their

zero value is and the size of a

unit (degree).

Ratio new_value = a * old_value Length can be measured in

meters or feet.

Data Mining - Lecture 3 8

Discrete and Continuous Attributes

Discrete Attribute

Has only a finite or countably infinite set of values.

Examples: zip codes, counts, or the set of words in a collection of

documents.

Often represented as integer variables.

Note: binary attributes are a special case of discrete attributes

Continuous Attribute

Has real numbers as attribute values.

Examples: temperature, height, or weight.

Practically, real values can only be measured and represented

using a finite number of digits.

Continuous attributes are typically represented as floating-point

variables.

Data Mining - Lecture 3 9

Asymmetric Attributes

Only presence of attribute – non zero

attribute-is important

Example : each object is a student, each attribute is 1

if the student take a particular course at the

university, 0 otherwise.

Asymmetric binary attributes

Asymmetric discrete attributes

Asymmetric continuous attributes

Data Mining - Lecture 3 10

Data Quality

What kinds of data quality problems?

How can we detect problems with the data?

What can we do about these problems?

Examples of data quality problems:

Noise and outliers

missing values

duplicate data

Data Mining - Lecture 3 11

Noise

Noise refers to modification of original values

Examples: distortion of a person’s voice when talking

on a poor phone and “snow” on television screen

Two Sine Waves Two Sine Waves + Noise

Data Mining - Lecture 3 12

Outliers

Outliers are data objects with characteristics that are

considerably different than most of the other data objects

in the data set

Data Mining - Lecture 3 13

Missing Values

Reasons for missing values

Information is not collected

(e.g., people decline to give their age and weight)

Attributes may not be applicable to all cases

(e.g., annual income is not applicable to children)

Handling missing values

Eliminate Data Objects

Estimate Missing Values

Ignore the Missing Value During Analysis

Data Mining - Lecture 3 14

Duplicate Data

Data set may include data objects that are

duplicates, or almost duplicates of one another

Major issue when merging data from heterogeous

sources

Examples:

Same person with multiple email addresses

Data cleaning

Process of dealing with duplicate data issues

Data Mining - Lecture 3 15

Proximity Measures

Similarity

Dissimilarity

Data Mining - Lecture 3 16

Similarity and Dissimilarity

Similarity

Numerical measure of how alike two data objects are.

Is higher when objects are more alike.

Often falls in the range [0,1].

Dissimilarity

Numerical measure of how different are two data objects.

Lower when objects are more alike.

Minimum dissimilarity is often 0.

Upper limit varies.

Proximity refers to a similarity or dissimilarity

Data Mining - Lecture 3 17

Similarity/Dissimilarity for Simple Attributes

p and q are the attribute values for two data objects.

Data Mining - Lecture 3 18

Euclidean Distance: Example

dist ( x1 x2 ) 2 ( y1 y2 ) 2

3

2 p1 point x y

p3 p4 p1 0 2

1 p2 2 0

p2 p3 3 1

0 p4 5 1

0 1 2 3 4 5 6

p1 p2 p3 p4

p1 0 2.828 3.162 5.099

p2 2.828 0 1.414 3.162

p3 3.162 1.414 0 2

p4 5.099 3.162 2 0

Distance Matrix

Data Mining - Lecture 3 19

Euclidean Distance

Euclidean Distance

n

dist k k

( p

k 1

q ) 2

Where n is the number of dimensions (attributes) and pk and

qk are, respectively, the kth attributes (components) of data

objects p and q.

Standardization is necessary, if scales differ.

Data Mining - Lecture 3 20

Minkowski Distance

Minkowski Distance is a generalization of Euclidean

Distance

n 1

dist ( | pk qk | ) r r

k 1

Where r is a parameter, n is the number of dimensions

(attributes) and pk and qk are, respectively, the kth attributes

(components) or data objects p and q.

Data Mining - Lecture 3 21

Minkowski Distance: Examples

r = 1: Manhattan distance

A common example of this is the Hamming distance, which is just the

number of bits that are different between two binary vectors.

r = 2: Euclidean distance

r : “supremum” distance

This is the maximum difference between any component of the vectors

Do not confuse r with n, i.e., all these distances are

defined for all numbers of dimensions.

Data Mining - Lecture 3 22

Minkowski Distance: Examples (cont.)

Manhattan distance

L1 p1 p2 p3 p4

p1 0 4 4 6

p2 4 0 2 4

p3 4 2 0 2

p4 6 4 2 0

Euclidean distance

point x y L2 p1 p2 p3 p4

p1 0 2 p1 0 2.828 3.162 5.099

p2 2 0 p2 2.828 0 1.414 3.162

p3 3 1 p3 3.162 1.414 0 2

p4 5 1 p4 5.099 3.162 2 0

“supremum” distance

L p1 p2 p3 p4

p1 0 2 3 5

p2 2 0 1 3

p3 3 1 0 2

p4 5 3 2 0

Distance Matrix

Data Mining - Lecture 3 23

Mahalanobis Distance

1

Mahalanobis ( p, q ) ( p q ) ( p q ) T

is the covariance matrix of

the input data X

1 n

j ,k ( X ij X j )( X ik X k )

n 1 i 1

For red points, the Euclidean distance is 14.7, Mahalanobis distance is 6.

Data Mining - Lecture 3 24

Mahalanobis Distance

Covariance Matrix:

0.3 0.2

0.2 0.3

C

B A: (0.5, 0.5)

B: (0, 1)

A C: (1.5, 1.5)

Mahal(A,B) = 5

Mahal(A,C) = 4

Data Mining - Lecture 3 25

Common Properties of a Distance

Distances, such as the Euclidean distance,

have some well known properties:

1. d(p, q) 0 for all p and q and d(p, q) = 0 only if

p = q. (Positive definiteness)

2. d(p, q) = d(q, p) for all p and q. (Symmetry)

3. d(p, r) d(p, q) + d(q, r) for all points p, q, and r.

(Triangle Inequality)

where d(p, q) is the distance (dissimilarity) between

points (data objects), p and q.

A distance that satisfies these properties is a

metric

Data Mining - Lecture 3 26

Common Properties of a Similarity

Similarities, also have some well known

properties.

1. s(p, q) = 1 (or maximum similarity) only if p = q.

2. s(p, q) = s(q, p) for all p and q. (Symmetry)

where s(p, q) is the similarity between points (data

objects), p and q.

Data Mining - Lecture 3 27

Similarity Between Binary Vectors

Common situation is that objects, p and q, have only

binary attributes

Compute similarities using the following quantities

M01 = the number of attributes where p was 0 and q was 1

M10 = the number of attributes where p was 1 and q was 0

M00 = the number of attributes where p was 0 and q was 0

M11 = the number of attributes where p was 1 and q was 1

Simple Matching and Jaccard Coefficients

SMC = number of matches / number of attributes

= (M11 + M00) / (M01 + M10 + M11 + M00)

J = number of 11 matches / number of not-both-zero attributes values

= (M11) / (M01 + M10 + M11)

Data Mining - Lecture 3 28

SMC versus Jaccard: Example

p= 1000000000

q= 0000001001

M01 = 2 (the number of attributes where p was 0 and q was 1)

M10 = 1 (the number of attributes where p was 1 and q was 0)

M00 = 7 (the number of attributes where p was 0 and q was 0)

M11 = 0 (the number of attributes where p was 1 and q was 1)

SMC = (M11 + M00)/(M01 + M10 + M11 + M00) = (0+7) / (2+1+0+7)

= 0.7

J = (M11) / (M01 + M10 + M11) = 0 / (2 + 1 + 0) = 0

Data Mining - Lecture 3 29

Cosine Similarity

If d1 and d2 are two document vectors, then

cos( d1, d2 ) = (d1 d2) / ||d1|| ||d2|| ,

where indicates vector dot product and || d || is the length of vector d.

Example:

d1 = 3 2 0 5 0 0 0 2 0 0

d2 = 1 0 0 0 0 0 0 1 0 2

d1 d2= 3*1 + 2*0 + 0*0 + 5*0 + 0*0 + 0*0 + 0*0 + 2*1 + 0*0 + 0*2 = 5

||d1|| = (3*3+2*2+0*0+5*5+0*0+0*0+0*0+2*2+0*0+0*0)0.5 = (42) 0.5 = 6.481

||d2|| = (1*1+0*0+0*0+0*0+0*0+0*0+0*0+1*1+0*0+2*2) 0.5 = (6) 0.5 = 2.45

cos( d1, d2 ) = .315

Data Mining - Lecture 3 30

Extended Jaccard Coefficient (Tanimoto)

Variation of Jaccard for continuous or count

attributes

Reduces to Jaccard for binary attributes

Data Mining - Lecture 3 31

Correlation

Correlation measures the linear relationship

between objects

To compute correlation, we standardize data

objects, p and q, and then take their dot product

pk ( pk mean( p)) / std ( p)

qk (qk mean(q)) / std (q)

correlation( p, q) p q

Data Mining - Lecture 3 32

Visually Evaluating Correlation

Scatter plots

showing the

similarity from

–1 to 1.

Data Mining - Lecture 3 33

General Approach for Combining Similarities

Sometimes attributes are of many different

types, but an overall similarity is needed.

Data Mining - Lecture 3 34

Using Weights to Combine Similarities

May not want to treat all attributes the same.

Use weights wk which are between 0 and 1 and sum to 1.

Data Mining - Lecture 3 35

Issues in Proximity Calculation

How to handle the case in witch attributes have different

scales and/or are correlated

How to calculate proximity between objects that are

composed of different types of attributes, quantitative and

qualitative

How to handle proximity calculation when attributes have

different weight (when not all attributes contribute equally

to the proximity of objects)

Data Mining - Lecture 3 36

Mahalanobis

Attributes are correlated

Different ranges of values

The distribution of the date is Gaussian

Data Mining - Lecture 3 37

You might also like

- Lecture 3-Know Your Data - MDocument19 pagesLecture 3-Know Your Data - MKhizar ShahidNo ratings yet

- Data Understanding and Visualization (Part-1) : Homework-2Document4 pagesData Understanding and Visualization (Part-1) : Homework-2muhammad mubasherNo ratings yet

- Lecture 10Document44 pagesLecture 10QUANG ANH B18DCCN034 PHẠMNo ratings yet

- DocumentsData PreprocessingDocument102 pagesDocumentsData PreprocessingRoy DeepNo ratings yet

- Data Warehousing and Mining: Dr. Hossen Asiful MustafaDocument49 pagesData Warehousing and Mining: Dr. Hossen Asiful MustafaSheikh AbujarNo ratings yet

- ML 1,2 Unit Peter Flach Machine Learning. The Art and SciencDocument22 pagesML 1,2 Unit Peter Flach Machine Learning. The Art and Scienckanchan khadeNo ratings yet

- Unit 2 - Data PreprocessingDocument23 pagesUnit 2 - Data Preprocessingevanjames038No ratings yet

- Wk. 3. Data (12-05-2021)Document57 pagesWk. 3. Data (12-05-2021)Nusrat BadhonNo ratings yet

- Data Mining Notes C2Document12 pagesData Mining Notes C2wuziqiNo ratings yet

- FullDocument367 pagesFulladitijain3727No ratings yet

- Pokok Bahasan 02-1 DataDocument70 pagesPokok Bahasan 02-1 DataSatria ArifNo ratings yet

- DWDM Unit6-Data Similarity MeasuresDocument40 pagesDWDM Unit6-Data Similarity MeasuresmounikaNo ratings yet

- CH 2Document96 pagesCH 2楊喻妃No ratings yet

- DM - P4 - Data Dan Preprocessing DataDocument45 pagesDM - P4 - Data Dan Preprocessing DataAcip SuracipNo ratings yet

- 02 DataDocument77 pages02 DataOscar WongNo ratings yet

- Unit 1 Part 1Document53 pagesUnit 1 Part 1TECHMASTER HUBNo ratings yet

- DWM MergedDocument388 pagesDWM MergedamulyaNo ratings yet

- Data PreprocessDocument82 pagesData PreprocessRa AbhishekNo ratings yet

- DataDocument84 pagesData20je0426HritikGuptaNo ratings yet

- Source Materials: Data Mining: Concepts and Techniques, Machine LearningDocument53 pagesSource Materials: Data Mining: Concepts and Techniques, Machine LearningVrushali Vilas BorleNo ratings yet

- Lect 2 DM Converted 1Document29 pagesLect 2 DM Converted 1Manahil NoorNo ratings yet

- Getting To Know Your Data: - Chapter 2Document63 pagesGetting To Know Your Data: - Chapter 2Moneeb AbbasNo ratings yet

- 01 DataDocument100 pages01 Data20bme094No ratings yet

- Lesson 2.1 - Know Your Data PDFDocument43 pagesLesson 2.1 - Know Your Data PDFTayyaba FaisalNo ratings yet

- Chapter 2 Data IssuesDocument21 pagesChapter 2 Data IssuesBama Raja SegaranNo ratings yet

- CH 01Document11 pagesCH 01ohmumu140No ratings yet

- IT326 - Ch2Document44 pagesIT326 - Ch2عبده شعواNo ratings yet

- Chap2 DataDocument86 pagesChap2 Dataomaima.msds002229No ratings yet

- Lecture Notes For Chapter 2 Introduction To Data MiningDocument34 pagesLecture Notes For Chapter 2 Introduction To Data MiningraadNo ratings yet

- Unit IDocument57 pagesUnit IShivraj DeshmukhNo ratings yet

- Concepts and Techniques: Data MiningDocument65 pagesConcepts and Techniques: Data MiningPantherNo ratings yet

- Data Mining: DataDocument50 pagesData Mining: DataAbdul AowalNo ratings yet

- Lecture Notes For Chapter 2 Introduction To Data Mining: by Tan, Steinbach, KumarDocument66 pagesLecture Notes For Chapter 2 Introduction To Data Mining: by Tan, Steinbach, KumarAbu Kafsha100% (1)

- 2.1 Data Types and Attributes: AttributeDocument23 pages2.1 Data Types and Attributes: AttributeAnand Kumar BhagatNo ratings yet

- Sess02 DataDocument96 pagesSess02 DataKriti SinhaNo ratings yet

- Penggalian Data & Analitika Bisnis: Faculties Teknologi Informasi - ITSDocument69 pagesPenggalian Data & Analitika Bisnis: Faculties Teknologi Informasi - ITSGalih KhairulfalahNo ratings yet

- Attribute Oriented AnalysisDocument27 pagesAttribute Oriented AnalysisJoveliza Angelia TrongcosoNo ratings yet

- Concepts and Techniques: - Chapter 2Document65 pagesConcepts and Techniques: - Chapter 2kogladNo ratings yet

- Data Mining Chapter 2 Data PreprocessingDocument33 pagesData Mining Chapter 2 Data PreprocessingBulmi HilmeNo ratings yet

- Data Preprocessing IIDocument21 pagesData Preprocessing IIDhruvi ThakrarNo ratings yet

- Chpater 2 PDFDocument44 pagesChpater 2 PDFBilalNo ratings yet

- Lecture Notes For Chapter 2: by Tan, Steinbach, KumarDocument25 pagesLecture Notes For Chapter 2: by Tan, Steinbach, KumaryoniazharNo ratings yet

- Lec02 DataAndDataTypesDocument76 pagesLec02 DataAndDataTypesDewi NovitaNo ratings yet

- CH 8Document30 pagesCH 8api-3832575No ratings yet

- Module 2 2022Document19 pagesModule 2 2022Shabnam SaniyaNo ratings yet

- Lec 2Document26 pagesLec 2tin nguyenNo ratings yet

- CL 2Document85 pagesCL 2Rajiv Ranjan SahNo ratings yet

- Data Preprocessing Part 1Document42 pagesData Preprocessing Part 1A04-Apoorva PardhiNo ratings yet

- Week 5 - Data Mining Exploring Data With RDocument146 pagesWeek 5 - Data Mining Exploring Data With RYna ForondaNo ratings yet

- DWDM AR16 Unit 1.2Document14 pagesDWDM AR16 Unit 1.2GODDU NAVVEN BABUNo ratings yet

- DM Preprocessing Lec4,5Document36 pagesDM Preprocessing Lec4,5Haardik MishraNo ratings yet

- Lecture Notes For Chapter 2 Introduction To Data Mining, 2 EditionDocument87 pagesLecture Notes For Chapter 2 Introduction To Data Mining, 2 Editionعبد الحافظ عبد الحافظNo ratings yet

- R21 DM Unit1Document77 pagesR21 DM Unit1Asif EE-010No ratings yet

- Knowing Your DataDocument43 pagesKnowing Your DataYogesh PandeyNo ratings yet

- Modified Module 2-DMDocument107 pagesModified Module 2-DMVaishnavi MNo ratings yet

- DMDW 2Document68 pagesDMDW 2Anu agarwalNo ratings yet

- Data Mining Chapter 2 NotesDocument87 pagesData Mining Chapter 2 NotesMd Mahfoozur RahmanNo ratings yet

- UNIT-3 Foundations of Deep LearningDocument32 pagesUNIT-3 Foundations of Deep LearningbhavanaNo ratings yet

- Linn & Petersen (1985) PDFDocument21 pagesLinn & Petersen (1985) PDFMuhammad IkbalNo ratings yet

- Appendix PAINTDocument14 pagesAppendix PAINTBibi NafiisahNo ratings yet

- DAE SSPS 2004 PresentationDocument32 pagesDAE SSPS 2004 PresentationRavi Kumar ChNo ratings yet

- Term Paper Challenges of Parents As Teachers in Modular Learning ApproachDocument20 pagesTerm Paper Challenges of Parents As Teachers in Modular Learning ApproachJames RoxasNo ratings yet

- Latch Up Failure in IGBTDocument3 pagesLatch Up Failure in IGBTgetssyNo ratings yet

- AquacultureDocument2 pagesAquacultureTarek AlmahdyNo ratings yet

- Vertex Distance CompensationDocument11 pagesVertex Distance CompensationDaniDenzongpaSulaeman100% (1)

- Sas 18 Acc115 QuizDocument7 pagesSas 18 Acc115 Quizloga.tecson.auNo ratings yet

- Chapter Wise Test Paper: Made by Digraj Singh RajputDocument2 pagesChapter Wise Test Paper: Made by Digraj Singh Rajputanshsoni722009No ratings yet

- Call 4 Paper 19th IBCAST 2022Document14 pagesCall 4 Paper 19th IBCAST 2022AliNo ratings yet

- Cummins Engine Oil RecommendationsDocument16 pagesCummins Engine Oil RecommendationsAndaru GunawanNo ratings yet

- Hard Work Is More Important Than TalentDocument2 pagesHard Work Is More Important Than TalentSadman RashidNo ratings yet

- Factors Influencing The PerceptionDocument1 pageFactors Influencing The PerceptionTinesh Kumar100% (1)

- TOPIC 6 Examining Causation Relationship Among Your VariablesDocument7 pagesTOPIC 6 Examining Causation Relationship Among Your VariablesgarnetbinzaNo ratings yet

- Linear Optimization-7-7-17Document35 pagesLinear Optimization-7-7-17preetmehtaNo ratings yet

- Teaching and LearningDocument7 pagesTeaching and Learningshiela50% (2)

- Bastiaanse Why Reference To The Past Is Difficult For Agrammatic SpeakersDocument21 pagesBastiaanse Why Reference To The Past Is Difficult For Agrammatic SpeakersShawnNo ratings yet

- 1 PBDocument13 pages1 PBAyu Endang AprianiNo ratings yet

- Reply Code Challenge 2019 - Teen Edition - TeleportationDocument2 pagesReply Code Challenge 2019 - Teen Edition - Teleportationavash royNo ratings yet

- Scheduling of Vehicles From A Central Depot To A Number of Delivery PointsDocument15 pagesScheduling of Vehicles From A Central Depot To A Number of Delivery PointsMy NguyễnNo ratings yet

- Reaction Paper (Magnifico)Document2 pagesReaction Paper (Magnifico)Melay AlmoradoNo ratings yet

- Wilkinson CatalystDocument19 pagesWilkinson Catalystjagabandhu_patraNo ratings yet

- The Ultimate PowerDocument95 pagesThe Ultimate Powerlyesss21100% (22)

- 6 Matrix Chain M UltiplicationDocument19 pages6 Matrix Chain M UltiplicationArslan Ahmed DanishNo ratings yet

- SAR AI PaperDocument26 pagesSAR AI PaperDharmesh PanchalNo ratings yet

- ENVIRONMENTAL CONTROL SYSTEMS (3681) Lec 2-3Document73 pagesENVIRONMENTAL CONTROL SYSTEMS (3681) Lec 2-3shariq talatNo ratings yet

- Ped 4-MethodsDocument21 pagesPed 4-MethodsAndrea LyccaNo ratings yet

- MDEA 508 AssignmentDocument8 pagesMDEA 508 AssignmentMorgan NtindaNo ratings yet

- Sexist MicroaggressionsDocument32 pagesSexist MicroaggressionsCarolina SantosNo ratings yet

- Design of Channel With Flexible LiningDocument21 pagesDesign of Channel With Flexible Liningmamay papayNo ratings yet