NS2 TCP Linux: An NS2 TCP Implementation With Congestion Control Algorithms (High Speed TCP): Some Results and Observations

Muhammad Ahmed Salman (Salman728@hotmail.com)

PAF- KIET

Asif Hussain (aasif_hussain@hotmail.com)

PAF- KIET

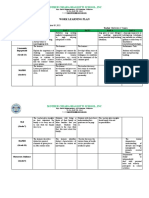

Abstract

High-speed networks with large delays present a unique environment in which TCP may have difficulty utilizing the full bandwidth. Several congestion control proposals have been suggested to remedy this problem. This paper gives a brief overview of TCP congestion control principles and techniques. H-TCP is a loss-based algorithm that uses additive-increase/multiplicative-decrease (AIMD) to control TCP's congestion window. It is one of many TCP congestion avoidance algorithms that seek to increase the aggressiveness of TCP on high bandwidth-delay product (BDP) paths while maintaining "TCP friendliness" on small BDP paths. H-TCP increases its aggressiveness (in particular, the rate of additive increase) as the time since the previous loss increases. This avoids the problem encountered by HSTCP and BIC TCP of making flows more aggressive if their windows are already large. Thus, new flows can be expected to converge to fairness faster under H-TCP than under HSTCP and BIC TCP.

1. Introduction

To avoid congestion collapse, TCP uses a multifaceted congestion control strategy. For each connection, TCP maintains a congestion window that limits the total number of unacknowledged packets that may be in transit end-to-end. This is somewhat analogous to TCP's sliding window used for flow control. TCP uses a mechanism called slow start to increase the congestion window after a connection is initialized and after a timeout. It starts with a window of two times the maximum segment size (MSS). Although the initial rate is low, the rate of increase is very rapid: for every packet acknowledged, the congestion window increases by 1 MSS, so the congestion window effectively doubles every round trip time (RTT). When the congestion window exceeds a threshold, ssthresh, the algorithm enters a new state called congestion avoidance. In some implementations (e.g., Linux), the initial ssthresh is large, so the first slow start usually ends after a loss. However, ssthresh is updated at the end of each slow start and will often affect subsequent slow starts triggered by timeouts.
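The slow start and congestion avoidance behaviour described above can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not the Linux or NS-2 implementation: the function name `simulate_cwnd`, the window unit (MSS), the absence of any loss, and capping the window at ssthresh on the last slow start step are all illustrative choices.

```python
def simulate_cwnd(rtts, ssthresh, initial_cwnd=2):
    """Return the congestion window (in MSS) at the start of each RTT.

    Hypothetical helper: no packet loss is modelled, and the window is
    capped at ssthresh when slow start would overshoot it.
    """
    cwnd = initial_cwnd
    trace = []
    for _ in range(rtts):
        trace.append(cwnd)
        if cwnd < ssthresh:
            # Slow start: +1 MSS per ACK, i.e. the window doubles per RTT.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: roughly +1 MSS per RTT.
            cwnd += 1
    return trace


if __name__ == "__main__":
    print(simulate_cwnd(6, 16))
```

With `ssthresh` set to 16, `simulate_cwnd(6, 16)` yields the windows 2, 4, 8, 16, 17, 18: three doublings followed by linear growth.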

2. Key Words

NS-2, TCP, Congestion Control, Linux

3. Motivation

For the model design, we used the ns2 simulator. ns2 is a popular simulation tool for modeling network behavior, from UC Berkeley, built for educational and research needs. It is an object-oriented simulator written in C++, with the OTcl language used for object definition. Users define the required protocols in C++ and OTcl as objects inherited from the Agent class. ns2 uses the NAM (Network Animator) utility to animate a protocol design and the XGRAPH tool to plot the results. The ns2 simulator supports a wide range of protocols (TCP, UDP, RTP, etc.) and technologies (LAN, MAN, sensor networks, sessions, multicasting, etc.) in both wired and wireless networks.

There are two ways to add a new congestion control algorithm to ns2. The simpler one for the developer, which most of the analysis community takes, is to add the new congestion control as a window option in TcpAgent. The advantage of this approach is that the same congestion control algorithm can work with the other algorithms (loss recovery, ECN, etc.). The disadvantage is that all the state variables from all the different congestion control algorithms are variables in the same TcpAgent class, even though only one set of variables is used at any time. This solution therefore does not scale with the number of congestion control algorithms.

A more complicated one is to write a new subclass derived from TcpAgent and override the relevant functions (slowdown and opencwnd). This keeps the number of class variables under control. On the other hand, the disadvantages are:

1. If each congestion control algorithm has to use a new derived class, the other algorithms (loss recovery, ECN, etc.) have to be implemented again in every new derived class.
2. The congestion control algorithm of a TCP connection cannot be changed in the middle of a simulation (this point is less important).

Design goals of NS-2 TCP-Linux:

- Accuracy: use congestion control algorithm code *as-is* from the Linux 2.6 source trees, and improve SACK processing.
- Extensibility: import the concept of interfaces for loss detection algorithms.
- Speed: improve the event queue scheduler.

Standard interface for congestion control algorithms:

- `void start(struct tcp_sock *tp)`: initializes the algorithm
- `u32 ssthresh(struct tcp_sock *tp)`: called after packet loss
- `u32 min_cwnd(struct tcp_sock *tp)`: called after loss
- `void cong_avoid(struct tcp_sock *tp, u32 ack, u32 rtt, u32 in_flight, int good)`: called after an ACK is received
- `void rtt_sample(struct tcp_sock *tp, u32 rtt)`: called after an RTT calculation
- `set_state()`, `cwnd_event()`, `undo_cwnd()`, `get_info()`

NS-2 TCP-Linux also provides better SACK processing than NS-2.
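To make the hook-based interface more concrete, here is a small Python model of a Reno-style module built from three of the hooks listed above (`ssthresh`, `min_cwnd`, `cong_avoid`). It is a sketch under assumptions, not the Linux or NS-2 TCP-Linux code: the class name `RenoSketch` is hypothetical, the socket state `tp` is a plain dictionary standing in for `struct tcp_sock`, and the update rules follow the usual Reno behaviour (halve on loss, one segment per ACK in slow start, one segment per window in congestion avoidance).

```python
class RenoSketch:
    """Toy Reno-style congestion control module (illustrative only)."""

    def ssthresh(self, tp):
        # Called after packet loss: halve the window, never below 2.
        return max(tp["snd_cwnd"] // 2, 2)

    def min_cwnd(self, tp):
        # Called after loss: lower bound for the window during recovery.
        return tp["snd_ssthresh"] // 2

    def cong_avoid(self, tp, ack, rtt, in_flight, good):
        # Called after each ACK; ack, rtt, in_flight and good are unused
        # in this simplified model.
        if tp["snd_cwnd"] < tp["snd_ssthresh"]:
            tp["snd_cwnd"] += 1  # slow start: +1 segment per ACK
        else:
            # Congestion avoidance: +1 segment per window of ACKs.
            tp["snd_cwnd_cnt"] += 1
            if tp["snd_cwnd_cnt"] >= tp["snd_cwnd"]:
                tp["snd_cwnd"] += 1
                tp["snd_cwnd_cnt"] = 0


def make_sock(cwnd, ssthresh):
    """Hypothetical stand-in for the fields of struct tcp_sock used here."""
    return {"snd_cwnd": cwnd, "snd_ssthresh": ssthresh, "snd_cwnd_cnt": 0}


if __name__ == "__main__":
    ca, tp = RenoSketch(), make_sock(cwnd=2, ssthresh=8)
    for _ in range(10):
        ca.cong_avoid(tp, ack=0, rtt=0, in_flight=0, good=1)
    print(tp["snd_cwnd"], ca.ssthresh(tp))
```

Because the agent only ever calls the hooks, swapping in a different algorithm means supplying another object with the same methods, which is the extensibility argument made for the interface.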

The algorithms are: Reno (actually with FACK), Vegas, HS-TCP, Scalable-TCP, BIC, Cubic, Westwood, H-TCP, Hybla, Veno, TCP-LP, and Compound. Better SACK processing is explained in detail later. D-SACK and adaptive delayed ACK are currently not implemented in NS2 TCP-Linux and are not covered here.

Code structure

In the code structure diagram, the yellow boxes are existing code (one from NS2, the other from the Linux kernel). LinuxTcpAgent is a subclass of TcpAgent in NS-2. ScoreBoard1 is an independent class for loss detection (or, more generally, per-packet state management). ns-linux-util.h / .cc are the interfaces between NS-2 and Linux. ns-linux-c.h is a highly simplified environment for the Linux code: a set of macro definitions that shortcut all system calls not related to TCP.

4. TCP Implementations in Linux 2.6

An example of a congestion control algorithm in NS-2 TCP-Linux:

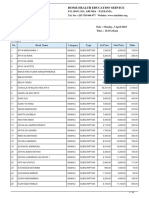

To implement a new congestion window algorithm with the Linux interface, researchers need to implement at least three functions. The figure illustrates a minimal implementation of TCP Reno. Note that this illustration is for NS-2 TCP-Linux; if it is to be used in a Linux system, the module registration functions also need to be implemented (this can be done by copy-and-paste). It takes fewer than 10 detailed steps to include a new Linux algorithm in NS-2 TCP-Linux (usually less than 1 hour), though we highly recommend that researchers thoroughly test a newly included module before running performance simulations.

Status of NS-2 TCP-Linux

- Imported all 9 congestion control algorithms from Linux 2.6.16-3: Reno (actually with FACK), Vegas, HS-TCP, Scalable-TCP, BIC, Cubic, Westwood, H-TCP, and Hybla.
- Imported 3 experimental congestion control algorithms that are in testing for future Linux versions: Veno, TCP-LP, and TCP Compound. Compound is the new algorithm from MSR that will be included in Windows Vista.
- Found 3 bugs in Linux 2.6 HighSpeed TCP and 1 performance problem in Linux 2.6 Vegas.

Using the Linux TCP agent in NS-2 simulations

Existing Tcl scripts are easy to migrate:

    #set tcp [new Agent/TCP/Sack1]
    set tcp [new Agent/TCP/Linux]
    #everything else is the same
    $ns at 0 "$tcp select_ca reno"
    #everything else is the same

Only three lines of the simulation Tcl script change: the old Sack1 agent is commented out, the Linux agent is created instead, and the congestion control module is selected. `$tcp select_ca <congestion control algorithm>` is a new command introduced by NS-2 TCP-Linux for selecting among the congestion control modules.

Better SACK processing: ScoreBoard1

ScoreBoard1 combines the NS-2 Sack1 design (reassembly queue) with the Linux scoreboard design (a state diagram for each packet). It enables a correct implementation of FACK, with simulation speed similar to NS-2 Sack1.

SACK processing in NS-2 Sack1

The Sack1 design uses a data structure called a reassembly queue to mimic packet reassembly at the receiver. The queue combines all the SACKed packets in a block into one node. In the illustration, packets 1 to 11 are eleven packets that have been sent but not acknowledged; yellow blocks are packets that have been SACKed, and white blocks are packets that are neither SACKed nor ACKed (they may be lost or still in flight).

- Pro: scanning the SACK list is very fast (on the order of the number of SACK blocks instead of the number of packets in flight).
- Con: it is difficult to implement FACK correctly, and to identify retransmitted packets, lost retransmitted packets, and packets that are retransmitted, lost, and retransmitted again.

SACK processing in Linux 2.6

Each packet that has been sent but not yet acknowledged has its own state diagram, and this per-packet state machine solves the FACK problem. As in Linux SACK processing, each packet in the retransmission queue is in one of four possible states: InFlight, Lost, Retrans, or SACKed. A packet that is sent for the first time, but is neither acknowledged nor considered lost, is in the InFlight state. It enters the SACKed state when the packet is selectively acknowledged by SACK, or enters the Lost state when either a retransmission timeout occurs or the furthest SACKed packet is more than 3 packets ahead of it (FACK policy). When a lost packet is retransmitted, it enters the Retrans state.

Finally, the most challenging part is the transition from the Retrans state to the Lost state, which happens when a retransmitted packet is lost again. We approximate the Linux implementation: when a packet is retransmitted, it is assigned a snd_nxt (similar to Scoreboard in NS-2 TCPFack), which records the sequence number of the next data packet to be sent. Additionally, it is assigned a retrx_id, which records the number of packets that have been retransmitted in this loss recovery phase, as shown in the first two nodes in Figure 4. The (snd_nxt, retrx_id) pair helps to detect the loss of a retransmitted packet in the following ways:

1. When another InFlight packet is SACKed or acknowledged with a sequence number higher than 3 + snd_nxt of the retransmitted packet, the retransmitted packet is considered lost; or
2. When another Retrans packet is SACKed or acknowledged with its own retrx_id higher than retrx_id + 3 of the retransmitted packet, the retransmitted packet is considered lost.
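The per-packet state machine and the two (snd_nxt, retrx_id) rules can be sketched as follows. This is a simplified model under assumptions, not the ScoreBoard1 or Linux code: the names `ScoreboardSketch`, `send_new`, `retransmit`, and `on_sack` are hypothetical, packets are kept in a plain dictionary rather than a reassembly queue, sequence numbers count whole packets, and retransmission timeouts are not modelled.

```python
from enum import Enum


class State(Enum):
    IN_FLIGHT = "InFlight"
    LOST = "Lost"
    RETRANS = "Retrans"
    SACKED = "SACKed"


DUP_THRESH = 3  # the "3 packets ahead" threshold of the FACK policy


class Packet:
    def __init__(self, seq):
        self.seq = seq
        self.state = State.IN_FLIGHT
        self.snd_nxt = None   # next new sequence number at retransmission
        self.retrx_id = None  # order of this retransmission


class ScoreboardSketch:
    def __init__(self):
        self.packets = {}
        self.snd_nxt = 0      # next new sequence number to send
        self.retrx_count = 0  # retransmissions so far in this recovery

    def send_new(self):
        seq = self.snd_nxt
        self.packets[seq] = Packet(seq)
        self.snd_nxt += 1
        return seq

    def retransmit(self, seq):
        pkt = self.packets[seq]
        if pkt.state is State.LOST:  # Lost -> Retrans
            pkt.state = State.RETRANS
            pkt.snd_nxt = self.snd_nxt
            pkt.retrx_id = self.retrx_count
            self.retrx_count += 1

    def on_sack(self, seq):
        pkt = self.packets[seq]
        was_retrans = pkt.state is State.RETRANS
        pkt.state = State.SACKED
        for other in self.packets.values():
            if other.state is State.IN_FLIGHT:
                # FACK: lost if the SACKed packet is more than 3 ahead.
                if seq > other.seq + DUP_THRESH:
                    other.state = State.LOST
            elif other.state is State.RETRANS:
                if was_retrans:  # rule 2: compare retransmission IDs
                    lost = pkt.retrx_id > other.retrx_id + DUP_THRESH
                else:            # rule 1: compare against stored snd_nxt
                    lost = seq > other.snd_nxt + DUP_THRESH
                if lost:
                    other.state = State.LOST  # Retrans -> Lost
```

For instance, after sending packets 0 to 5 and receiving a SACK for packet 5, packets 0 and 1 are marked Lost. If packet 0 is then retransmitted (stored snd_nxt of 6) and five more new packets are sent, a SACK for packet 10 exceeds 6 + 3 and moves packet 0 from Retrans back to Lost.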

ScoreBoard1 in NS2 TCP-Linux integrates the packet state machine into the reassembly queue data structure. There is one exception: each retransmitted packet has its own element in the queue, because retrx_id is not necessarily increasing.

retrx_id is an ID for each in-flight retransmitted packet (a packet can be retransmitted multiple times). It is like a sequence number for retransmitted packets, and it helps FACK: if a retransmitted packet with a retrx_id of N is ACKed or SACKed, all retransmitted packets with a retrx_id smaller than N-3 are considered lost.

snd_nxt is the snd_nxt sequence number at the time the packet is retransmitted. It is another way to help FACK: if a non-retransmitted packet with a sequence number of N is ACKed or SACKed, all retransmitted packets with a snd_nxt smaller than N-3 are considered lost.

Con: if the number of retransmitted packets is large, the current implementation is not efficient. (A possible extension: split the Retrans element only when the retrx_id is not increasing.)

Evaluation Setup
