Sampling Distributions and The Bootstrap

this month

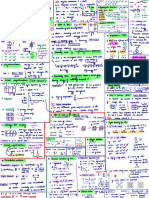

Points of Significance

[Figure 2 panels: (a) Resistance by induced or random mutations; (b) Variation in cell count; (c) Simulated distributions of cell count. Legend: bacterium, bacterium with mutation.]

The bootstrap can be used to assess uncertainty of sample estimates.

We have previously discussed the importance of estimating uncertainty in our measurements and incorporating it into data analysis¹. To know the extent to which we can generalize our observations, we need to know how our estimate varies across samples and whether it is biased (systematically over- or underestimating the true value). Unfortunately, it can be difficult to assess the accuracy and precision of estimates because empirical data are almost always affected by noise and sampling error, and data analysis methods may be complex. We could address these questions by collecting more samples, but this is not always practical. Instead, we can use the bootstrap, a computational method that simulates new samples, to help determine how estimates from replicate experiments might be distributed and answer questions about precision and bias.

The quantity of interest can be estimated in multiple ways from a sample; functions or algorithms that do this are called estimators (Fig. 1a). In some cases we can analytically calculate the sampling distribution for an estimator. For example, the mean of a normal distribution, μ, can be estimated using the sample mean. If we collect many samples, each of size n, we know from theory that their means will form a sampling distribution that is also normal with mean μ and s.d. σ/√n (σ is the population s.d.). The s.d. of a sampling distribution of a statistic is called the standard error (s.e.)¹ and can be used to quantify the variability of the estimator (Fig. 1).

The sampling distribution tells us about the reproducibility and accuracy of the estimator (Fig. 1b). The s.e. of an estimator is a measure of precision: it tells us how much we can expect estimates to vary between experiments. However, the s.e. is not a confidence interval. It does not tell us how close our estimate is to the true value or whether the estimator is biased. To assess accuracy, we need to measure bias: the expected difference between the estimate and the true value.

[Figure 1 panels: (a) Estimator sampling distribution; (b) Performance of an estimator (precision, accuracy).]

Figure 1 | Sampling distributions of estimators can be used to predict the precision and accuracy of estimates of population characteristics. (a) The shape of the distribution of estimates can be used to evaluate the performance of the estimator. The population distribution shown is standard normal (μ = 0, σ = 1). The sampling distribution of the sample means estimator is shown in red (this particular estimator is known to be normal with σ = 1/√n for sample size n). (b) Precision can be measured by the s.d. of the sampling distribution (which is defined as the standard error, s.e.). Estimators whose distribution is not centered on the true value are biased. Bias can be assessed if the true value (red point) is available. Error bars show s.d.

If we are interested in estimating a quantity that is a complex function of the observed data (for example, normalized protein counts or the output of a machine learning algorithm), a theoretical framework to predict the sampling distribution may be difficult to develop. Moreover, we may lack the experience or knowledge about the system to justify any assumptions that would simplify calculations. In such cases, we can apply the bootstrap instead of collecting a large volume of data to build up the sampling distribution empirically.

The bootstrap approximates the shape of the sampling distribution by simulating replicate experiments on the basis of the data we have observed. Through simulation, we can obtain s.e. values, predict bias, and even compare multiple ways of estimating the same quantity. The only requirement is that data are independently sampled from a single source distribution.

We'll illustrate the bootstrap using the 1943 Luria-Delbrück experiment, which explored the mechanism behind mutations conferring viral resistance in bacteria². In this experiment, researchers questioned whether these mutations were induced by exposure to the virus or, alternatively, were spontaneous (occurring randomly at any time) (Fig. 2a). The authors reasoned that these hypotheses could be distinguished by growing a bacterial culture, plating it onto medium that contained a virus and then determining the variability in the number of surviving (mutated) bacteria (Fig. 2b). If the mutations were induced by the virus after plating, the bacteria counts would be Poisson distributed. Alternatively, if mutations occurred spontaneously during growth of the culture, the variance would be higher than the mean, and the Poisson model (which has equal mean and variance) would be inadequate. This increase in variance is expected because spontaneous mutations propagate through generations as the cells multiply. We simulated 10,000 cultures to demonstrate this distribution; even for a small number of generations and cells, the difference in distribution shape is clear (Fig. 2c).

Figure 2 | The Luria-Delbrück experiment studied the mechanism by which bacteria acquired mutations that conferred resistance to a virus. (a) Bacteria are grown for t generations in the absence of the virus, and N cells are plated onto medium containing the virus. Those with resistance mutations survive. (b) The relationship between the mean and variation in the number of cells in each culture depends on the mutation mechanism. (c) Simulated distributions of cell counts for both processes shown in a using 10,000 cultures and mutation rates (0.49 induced, 0.20 spontaneous) that yield equal count means. Induced mutations occur in the medium (at t = 4). Spontaneous mutations can occur at each of the t = 4 generations. Points and error bars are mean and s.d. of simulated distributions (3.92 ± 2.07 spontaneous, 3.92 ± 1.42 induced). For a small number of generations, the induced model distribution is binomial and approaches Poisson when t is large and rate is small.

To quantify the difference between distributions under the two mutation mechanisms, Luria and Delbrück used the variance-to-mean ratio (VMR), which is reasonably stable between samples and free of bias. From the reasoning above, if the mutations are induced, the counts are distributed as Poisson, and we expect VMR = 1; if mutations are spontaneous, then VMR >> 1.
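The contrast between the two mutation mechanisms can be reproduced with a short simulation. The Python sketch below is an illustration under stated assumptions, not the authors' simulation code. It takes N = 8 plated cells and the rates 0.49 (induced) and 0.20 (spontaneous) from Figure 2, and it assumes that each culture grows from one founder cell through three doublings (1, 2, 4, 8 cells), with every daughter cell that is not yet resistant mutating at the spontaneous rate at each division. The function names (`vmr`, `simulate_induced`, `simulate_spontaneous`) are hypothetical.

```python
import numpy as np


def vmr(counts):
    """Variance-to-mean ratio, using the sample variance (ddof=1)."""
    counts = np.asarray(counts, dtype=float)
    return counts.var(ddof=1) / counts.mean()


def simulate_induced(n_cultures, n_cells=8, rate=0.49, rng=None):
    """Induced model: each plated cell independently becomes resistant
    with probability `rate` after exposure, so counts are binomial."""
    rng = np.random.default_rng() if rng is None else rng
    return rng.binomial(n_cells, rate, size=n_cultures)


def simulate_spontaneous(n_cultures, n_divisions=3, rate=0.20, rng=None):
    """Spontaneous model (assumed growth scheme): one non-resistant founder
    per culture doubles `n_divisions` times; resistance is inherited."""
    rng = np.random.default_rng() if rng is None else rng
    mutant = np.zeros((n_cultures, 1), dtype=bool)
    for _ in range(n_divisions):
        # Every cell divides into two daughters that inherit its state.
        mutant = np.repeat(mutant, 2, axis=1)
        # Each daughter may acquire a new mutation with probability `rate`.
        mutant |= rng.random(mutant.shape) < rate
    return mutant.sum(axis=1)


rng = np.random.default_rng(0)
induced = simulate_induced(10_000, rng=rng)
spontaneous = simulate_spontaneous(10_000, rng=rng)
for name, counts in [("induced", induced), ("spontaneous", spontaneous)]:
    print(f"{name}: mean={counts.mean():.2f} s.d.={counts.std(ddof=1):.2f} "
          f"VMR={vmr(counts):.2f}")
```

With only eight cells the induced counts are binomial rather than Poisson, so their VMR is close to 1 − 0.49 = 0.51 instead of 1. The spontaneous counts should still show the larger VMR, because a mutation that arises early is inherited by a whole subclone.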

nature methods | VOL.12 NO.6 | JUNE 2015 | 477


Unfortunately, measuring the uncertainty in the VMR is difficult because its sampling distribution is hard to derive for small sample sizes. Luria and Delbrück plated 5–100 cultures per experiment to measure this variation before being able to rule out the induction mechanism. Let's see how the bootstrap can be used to estimate the uncertainty and bias of the VMR using modest sample sizes; applying it to distinguish between the mutation mechanisms is beyond the scope of this column.

Suppose that we perform a similar experiment with 25 cultures and use the count of cells in each culture as our sample (Fig. 3a). We can use our sample's mean (5.48) and variance (55.3) to calculate VMR = 10.1, but because we don't have access to the sampling distribution, we don't know the uncertainty. Instead of plating more cultures, let's simulate more samples with the bootstrap. To demonstrate differences in the bootstrap, we will consider two source samples, one drawn from a negative binomial and one from a bimodal distribution of cell counts (Fig. 3b). Each distribution is parameterized to have the same VMR = 10 (μ = 5, σ² = 50). The negative binomial distribution is a generalized form of the Poisson distribution and models discrete data with independently specified mean and variance, which is required to allow for different values of VMR. For the bimodal distribution we use a combination of two Poisson distributions. The source samples generated from these distributions were selected to have the same VMR = 10.1, very close to their populations' VMR = 10.

[Figure 3 panels: (a) Bootstrap sample generation (x-axis: cell count); (b) Bootstrap VMR sampling distributions (x-axis: VMR).]

Figure 3 | The sampling distribution of complex quantities such as the variance-to-mean ratio (VMR) can be generated from observed data using the bootstrap. (a) A source sample (n = 25, mean = 5.48, variance = 55.3, VMR = 10.1), generated from a negative binomial distribution (μ = 5, σ² = 50, VMR = 10), was used to simulate four samples (hollow circles) with parametric (blue) and nonparametric bootstrap (red). (b) VMR sampling distributions generated from parametric (blue) and nonparametric (red) bootstrap of 10,000 samples (n = 25) simulated from source samples drawn from two different distributions: negative binomial and bimodal, both with μ = 5 and σ² = 50, shown as black histograms with the source samples shown below. Points and error bars show mean and s.d. of the respective sampling distributions of VMR. Values beside error bars show s.d.

We will discuss two types of bootstrap: parametric and nonparametric. In the parametric bootstrap, we use our sample to estimate the parameters of a model from which further samples are simulated. Figure 3a shows a source sample drawn from the negative binomial distribution together with four samples simulated using a parametric bootstrap that assumes a negative binomial model. Because the parametric bootstrap generates samples from a model, it can produce values that are not in our sample, including values outside of the range of observed data, to create a smoother distribution. For example, the maximum value in our source sample is 29, whereas one of the simulated samples in Figure 3a includes 30. The choice of model should be based on our knowledge of the experimental system that generated the original sample.

The parametric bootstrap VMR sampling distributions of 10,000 simulated samples are shown in Figure 3b. The s.d. of these distributions is a measure of the precision of the VMR. When our assumed model matches the data source (negative binomial), the VMR distribution simulated by the parametric bootstrap very closely approximates the VMR distribution one would obtain if we drew all the samples from the source distribution (Fig. 3b). The bootstrap sampling distribution s.d. matches that of the true sampling distribution (4.58).

In practice we cannot be certain that our parametric bootstrap model represents the distribution of the source sample. For example, if our source sample is drawn from a bimodal distribution instead of a negative binomial, the parametric bootstrap generates an inaccurate sampling distribution because it is limited by our erroneous assumption (Fig. 3b). Because the source samples have similar mean and variance, the output of the parametric bootstrap is essentially the same as before. The parametric bootstrap generates not only the wrong shape but also an incorrect uncertainty in the VMR. Whereas the true sampling distribution from the bimodal distribution has an s.d. = 1.59, the bootstrap (using negative binomial model) overestimates it as 4.35.

In the nonparametric bootstrap, we forgo the model and approximate the population by randomly sampling (with replacement) from the observed data to obtain new samples of the same size. As before, we compute the VMR for each bootstrap sample to generate bootstrap sampling distributions. Because the nonparametric bootstrap is not limited by a model assumption, it reasonably reconstructs the VMR sampling distributions for both source distributions. It is generally safer to use the nonparametric bootstrap when we are uncertain of the source distribution. However, because the nonparametric bootstrap takes into account only the data observed and thus cannot generate very extreme samples, it may underestimate the sampling distribution s.d., especially when sample size is small. We see some evidence of this in our simulation. Whereas the true sampling distributions have s.d. values of 4.58 and 1.59 for the negative binomial and bimodal, respectively, the bootstrap yields 2.61 and 1.33 (43% and 16% lower) (Fig. 3b).

The bootstrap sampling distribution can also provide an estimate of bias, a systematic difference between our estimate of the VMR and the true value. Recall that the bootstrap approximates the whole population by the data we have observed in our initial sample. Therefore, if we treat the VMR derived from the sample used for bootstrapping as the true value and find that our bootstrap estimates are systematically smaller or larger than this value, then we can predict that our initial estimate is also biased. In our simulations we did not see any significant sign of bias: means of bootstrap distributions were close to the sample VMR.

The simplicity and generality of bootstrapping allow for analysis of the stability of almost any estimation process, such as generation of phylogenetic trees or machine learning algorithms.

COMPETING FINANCIAL INTERESTS

The authors declare no competing financial interests.

Anthony Kulesa, Martin Krzywinski, Paul Blainey & Naomi Altman

1. Krzywinski, M. & Altman, N. Nat. Methods 10, 809–810 (2013).
2. Luria, S.E. & Delbrück, M. Genetics 28, 491–511 (1943).

Anthony Kulesa is a graduate student in the Department of Biological Engineering at MIT. Martin Krzywinski is a staff scientist at Canada's Michael Smith Genome Sciences Centre. Paul Blainey is an Assistant Professor of Biological Engineering at MIT and a Core Member of the Broad Institute. Naomi Altman is a Professor of Statistics at The Pennsylvania State University.

© 2015 Nature America, Inc. All rights reserved.
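As a rough illustration of the parametric bootstrap described in this column, the Python sketch below fits a negative binomial model to a sample by matching its mean and variance, then simulates new samples of the same size from the fitted model and computes the VMR of each. The method-of-moments fit is an assumption made here; the column does not say how the model parameters were estimated. The 25 counts in `SAMPLE` are hypothetical stand-ins, not the data behind Figure 3, and the helper names (`nb_params`, `parametric_bootstrap_vmr`) are illustrative.

```python
import numpy as np

# Hypothetical cell counts for 25 cultures (illustrative, not the article's data).
SAMPLE = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 2, 2, 3, 3, 4, 4,
                   5, 6, 7, 8, 9, 11, 13, 16, 21, 29])


def nb_params(mean, var):
    """Method-of-moments parameters (n, p) for NumPy's negative binomial,
    which has mean n(1-p)/p and variance n(1-p)/p**2."""
    if var <= mean:
        raise ValueError("a negative binomial model needs variance > mean")
    p = mean / var
    n = mean**2 / (var - mean)
    return n, p


def parametric_bootstrap_vmr(sample, n_boot=10_000, rng=None):
    """Simulate `n_boot` samples from the fitted model; return their VMRs."""
    rng = np.random.default_rng() if rng is None else rng
    sample = np.asarray(sample)
    n, p = nb_params(sample.mean(), sample.var(ddof=1))
    sims = rng.negative_binomial(n, p, size=(n_boot, sample.size))
    means = sims.mean(axis=1)
    variances = sims.var(axis=1, ddof=1)
    ok = means > 0  # drop the (very rare) all-zero samples, where VMR is undefined
    return variances[ok] / means[ok]


boot_vmr = parametric_bootstrap_vmr(SAMPLE, rng=np.random.default_rng(0))
print("bootstrap s.e. of VMR:", boot_vmr.std(ddof=1))
```

Because the simulated values come from the fitted model rather than from the observed counts, they can exceed the largest observed count, which is the behaviour the column points out for Figure 3a.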

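The nonparametric bootstrap and the bias estimate described in this column can be sketched in a similar way. The Python example below resamples the observed counts with replacement, recomputes the VMR for each resample, and reports the bootstrap s.e. together with a bias estimate (mean of the bootstrap VMRs minus the sample VMR). It is a minimal sketch under assumptions: the counts in `SAMPLE` are hypothetical rather than the article's data, and the function names (`resample`, `bootstrap_summary`) are illustrative.

```python
import numpy as np

# Hypothetical cell counts for 25 cultures (illustrative, not the article's data).
SAMPLE = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 2, 2, 3, 3, 4, 4,
                   5, 6, 7, 8, 9, 11, 13, 16, 21, 29])


def vmr(counts):
    """Variance-to-mean ratio; undefined (NaN) when every count is zero."""
    counts = np.asarray(counts, dtype=float)
    mean = counts.mean()
    return counts.var(ddof=1) / mean if mean > 0 else np.nan


def resample(sample, n_boot, rng):
    """Draw `n_boot` resamples of the same size, with replacement."""
    sample = np.asarray(sample)
    idx = rng.integers(0, sample.size, size=(n_boot, sample.size))
    return sample[idx]


def bootstrap_summary(sample, estimator, n_boot=10_000, rng=None):
    """Bootstrap s.e. and bias estimate for any estimator of a 1-D sample."""
    rng = np.random.default_rng() if rng is None else rng
    estimate = estimator(sample)
    boot = np.array([estimator(r) for r in resample(sample, n_boot, rng)])
    boot = boot[np.isfinite(boot)]
    return {
        "estimate": estimate,
        "se": boot.std(ddof=1),            # spread of the bootstrap estimates
        "bias": boot.mean() - estimate,    # sample estimate treated as "truth"
    }


print(bootstrap_summary(SAMPLE, vmr, rng=np.random.default_rng(0)))
```

Every resampled value is one of the observed counts, so no resample can contain a count larger than the sample maximum. This is the reason the column gives for the nonparametric bootstrap tending to underestimate the s.d. when the sample is small.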
