Machine Learning Math Deep Dive - Opendir - Cloud

Uploaded by adapa.sai2022

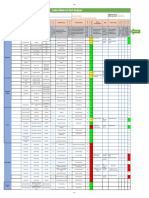

Regression Models (when the output Y is continuous)

We choose linear regression when the data shows linear patterns. Example techniques:

- Straight Line Technique: formula Y = mx + b
- Least Square Error Technique: formula Y = mx + b
- Gradient Descent Technique: formula Y = mx + b
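As a rough illustration of how the least-squares and gradient-descent techniques both arrive at the same line Y = mx + b, here is a minimal sketch in plain Python. The function names, the sample data, the learning rate, and the step count are assumptions chosen for the example and do not come from the original mind map.

```python
def fit_least_squares(xs, ys):
    """Closed-form least-squares estimate of slope m and intercept b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


def fit_gradient_descent(xs, ys, learning_rate=0.01, steps=5000):
    """Minimise mean squared error for y = m*x + b by gradient descent."""
    n = len(xs)
    m, b = 0.0, 0.0  # arbitrary starting point (assumption)
    for _ in range(steps):
        errors = [(m * x + b) - y for x, y in zip(xs, ys)]
        grad_m = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b


# Hypothetical, roughly linear sample data.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

m_ls, b_ls = fit_least_squares(xs, ys)
m_gd, b_gd = fit_gradient_descent(xs, ys)
print(f"least squares:    m={m_ls:.3f}, b={b_ls:.3f}")
print(f"gradient descent: m={m_gd:.3f}, b={b_gd:.3f}")
```

On well-behaved data like this, the two approaches should agree closely; gradient descent is sensitive to the learning rate and may need tuning on other data.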

Accuracy Improving Techniques

- Hyperparameter Tuning
- Feature Engineering/Change the features
- Over Sampling or Undersampling Techniques
- Evaluation Metrics
- Change the algorithms
- Change the sample or collect more data


Feature Scaling Techniques

Feature scaling can be applied for linear regression, KNN, neural networks, and SVM.

- Standardization
- RobustScaler
- MinMaxScaler
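The three scaling techniques can be sketched with the Python standard library alone. This is an illustrative version of the usual formulas (mean and standard deviation, minimum and maximum, median and interquartile range), not the scikit-learn classes themselves; the function names and the sample data are assumptions made for the example.

```python
import statistics


def standardize(values):
    """Standardization: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]


def min_max_scale(values):
    """Min-max scaling: map the smallest value to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def robust_scale(values):
    """Robust scaling: subtract the median, divide by the interquartile range."""
    median = statistics.median(values)
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - median) / (q3 - q1) for v in values]


# Hypothetical feature column with one large outlier.
data = [1.0, 2.0, 3.0, 4.0, 100.0]

print(standardize(data))
print(min_max_scale(data))
print(robust_scale(data))
```

With an outlier present, min-max scaling squeezes the ordinary values toward 0, while robust scaling keeps them spread around 0 because the median and interquartile range are less affected by extreme values.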

Logistic Regression

Machine Learning Math

Variance-Bias Tradeoff

- Variance Error
- Bias Error

Optimization & Regularization

Overfitting & Underfitting

Classification Models (when the output Y is discrete)

- Decision Tree Classification: CART, ID3, CHAID, C4.5
- Random Forest
- Gradient Boosting
- Support Vector Machines
- KNN Algorithm

KNN Algorithm distance measures:

1. Euclidean Distance
2. Manhattan Distance
3. Minkowski Distance
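The three KNN distance measures are closely related: Minkowski distance with order p = 1 gives Manhattan distance, and p = 2 gives Euclidean distance. A minimal sketch, with function names and sample points chosen only for illustration:

```python
def minkowski_distance(a, b, p):
    """Minkowski distance of order p between two equal-length points."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def euclidean_distance(a, b):
    """Euclidean distance is the Minkowski distance with p = 2."""
    return minkowski_distance(a, b, 2)


def manhattan_distance(a, b):
    """Manhattan distance is the Minkowski distance with p = 1."""
    return minkowski_distance(a, b, 1)


# Hypothetical pair of 2-D points.
point_a = (0.0, 0.0)
point_b = (3.0, 4.0)

print(euclidean_distance(point_a, point_b))
print(manhattan_distance(point_a, point_b))
print(minkowski_distance(point_a, point_b, 3))
```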


You might also like

- DA4675 CFA Level II SmartSheet 2020 PDFDocument10 pagesDA4675 CFA Level II SmartSheet 2020 PDFMabelita Flores100% (3)

- Manual 1000 HP Quintuplex MSI QI 1000 PumpDocument106 pagesManual 1000 HP Quintuplex MSI QI 1000 Pumposwaldo58No ratings yet

- Supervised LearningDocument3 pagesSupervised Learningvamsi krishnaNo ratings yet

- Reverse Email Address Lookup: Esalerugs - 75% Off RetailDocument1 pageReverse Email Address Lookup: Esalerugs - 75% Off RetailPavle RisteskiNo ratings yet

- Storm Drainage Model Flow ChartDocument1 pageStorm Drainage Model Flow ChartHemant ChauhanNo ratings yet

- Slides Basics Learnercomponents HroDocument9 pagesSlides Basics Learnercomponents HroChaitanya ParaleNo ratings yet

- Machine Learning Quick Start GuideDocument1 pageMachine Learning Quick Start GuideHammad RiazNo ratings yet

- Six Sigma Methods and Tools For Process Improvement 1664037686Document86 pagesSix Sigma Methods and Tools For Process Improvement 1664037686Haris PrayogoNo ratings yet

- Machine Learning Algorithms CheatsheetDocument1 pageMachine Learning Algorithms CheatsheetdownbuliaoNo ratings yet

- L7-526 Exact Comparison ENDocument2 pagesL7-526 Exact Comparison ENvihansNo ratings yet

- Bhcs Maths Roadmap 2019Document1 pageBhcs Maths Roadmap 2019Michael100% (1)

- Complete Machine Learning Project FlowchartDocument1 pageComplete Machine Learning Project FlowchartAbhishek8101No ratings yet

- AI StrategyDocument31 pagesAI StrategyHans CasteelsNo ratings yet

- Teaching Integer Programming Formulations Using The Traveling Salesman ProblemDocument8 pagesTeaching Integer Programming Formulations Using The Traveling Salesman ProblemSudhanshu SinghNo ratings yet

- Contoh Research GapDocument1 pageContoh Research GapDhika AdhityaNo ratings yet

- Six Sigma Training PresentationDocument86 pagesSix Sigma Training PresentationphongbuitmvNo ratings yet

- Rainfall PredictionDocument8 pagesRainfall PredictionANIKET DUBEYNo ratings yet

- ( - Partial) - ( - Partial Y) (LN (2x 3+3y 2) ) - Partial Derivative Calculator - SymbolabDocument2 pages( - Partial) - ( - Partial Y) (LN (2x 3+3y 2) ) - Partial Derivative Calculator - SymbolabafjkjchhghgfbfNo ratings yet

- Complicated Rate Equations PPT VERDocument1 pageComplicated Rate Equations PPT VERnabillaNo ratings yet

- MATLAB Fundamentals - Quick ReferenceDocument14 pagesMATLAB Fundamentals - Quick ReferenceChin AliciaNo ratings yet

- 2.3: Trigonometric Substitution: Integrals Involving XDocument1 page2.3: Trigonometric Substitution: Integrals Involving Xfuriouslighning1929No ratings yet

- TQM 2021HB79113Document1 pageTQM 2021HB79113chirag sharmaNo ratings yet

- Data Transformation With Dplyr Cheat SheetDocument2 pagesData Transformation With Dplyr Cheat SheetAlicia GordonNo ratings yet

- Trajectory Tracking of Quaternion Based Quadrotor Using Model Predictive ControlDocument19 pagesTrajectory Tracking of Quaternion Based Quadrotor Using Model Predictive ControlMaidul IslamNo ratings yet

- MIMO Lecture Notes Part 2 PDFDocument18 pagesMIMO Lecture Notes Part 2 PDFmohamed1991No ratings yet

- CS3500 Computer Graphics Module: Scan Conversion: P. J. NarayananDocument56 pagesCS3500 Computer Graphics Module: Scan Conversion: P. J. Narayananapi-3799599No ratings yet

- Alarm and Warning List00Document2 pagesAlarm and Warning List00Saeed ZamaniNo ratings yet

- Crash Course in Analytics For Non Analytics ManagersDocument74 pagesCrash Course in Analytics For Non Analytics ManagersUzair FaruqiNo ratings yet

- Scatter Diagram - Merits and Demerits - Correlation Analysis PDFDocument3 pagesScatter Diagram - Merits and Demerits - Correlation Analysis PDFAsif gillNo ratings yet

- TOS Template Exam With FormulaDocument1 pageTOS Template Exam With FormulaRuby Esmeralda AndayaNo ratings yet

- Corporate Finance Cheat SheetDocument3 pagesCorporate Finance Cheat Sheet050610220479No ratings yet

- Module-3 Eco-598 ML & AiDocument93 pagesModule-3 Eco-598 ML & AiSoujanya NerlekarNo ratings yet

- Machine Learning - Project Approach - Opendir - CloudDocument1 pageMachine Learning - Project Approach - Opendir - Cloudadapa.sai2022No ratings yet

- DAA Unit 1Document41 pagesDAA Unit 1ILSFE 1435No ratings yet

- 4Ms' & AN I - CMV Matrix: Man Machine Material Method InformationDocument12 pages4Ms' & AN I - CMV Matrix: Man Machine Material Method InformationZia AbidiNo ratings yet

- CX EN Manu DRW171461AB 20180726 WDocument1 pageCX EN Manu DRW171461AB 20180726 WEricsonOSNo ratings yet

- Integral Equation That Equals To 2 - Google SearchDocument1 pageIntegral Equation That Equals To 2 - Google Searchmariahalmazrouei360No ratings yet

- 8-Data Driven MPCDocument94 pages8-Data Driven MPChinsermuNo ratings yet

- Me 500Document17 pagesMe 500Marl SumaelNo ratings yet

- S 0363012901387550Document23 pagesS 0363012901387550980921kill123No ratings yet

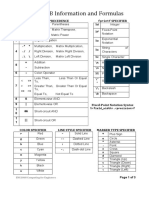

- MATLAB Information and Formulas: Operator Precedence Fprintf SPECIFIERDocument3 pagesMATLAB Information and Formulas: Operator Precedence Fprintf SPECIFIEROmar El MasryNo ratings yet

- A Path-Dependent Vector Field With Zero Curl - Math InsightDocument1 pageA Path-Dependent Vector Field With Zero Curl - Math InsightMohsin JuttNo ratings yet

- Bayesian Data Analysis Using RDocument3 pagesBayesian Data Analysis Using Rmexx4u2nvNo ratings yet

- SI Auto Transm BPT 06 18 Rev2Document1 pageSI Auto Transm BPT 06 18 Rev2deepak.sNo ratings yet

- Parametric IdentificationDocument45 pagesParametric Identificationhung kungNo ratings yet

- Assignment MethodsDocument6 pagesAssignment MethodsAndrei GheorghiuNo ratings yet

- Hypothesis Testing Spinning The WheelDocument1 pageHypothesis Testing Spinning The WheelRudra DasNo ratings yet

- EBI Brief Template Concrete Representational AbstractDocument3 pagesEBI Brief Template Concrete Representational AbstractARMY BLINKNo ratings yet

- TOS - SY-2022-2023-in-MATH-8 - QUARTER 2Document3 pagesTOS - SY-2022-2023-in-MATH-8 - QUARTER 2RYAN C. ENRIQUEZNo ratings yet

- Derivative Calculator - With Steps!Document4 pagesDerivative Calculator - With Steps!emil wahyudiantoNo ratings yet

- Neural Network NotesDocument268 pagesNeural Network NotesGoutham Kumar RNo ratings yet

- MLT FormulaDocument1 pageMLT Formulaswapnil kaleNo ratings yet

- Everything You Need To Memorise - Part 1, Core Pure Year 1 PDFDocument1 pageEverything You Need To Memorise - Part 1, Core Pure Year 1 PDFBrain MasterNo ratings yet

- HTR MT17Document1 pageHTR MT17Andrés Oswaldo Perales LópezNo ratings yet

- WWW GeeksforgeeksDocument2 pagesWWW GeeksforgeeksTest accNo ratings yet

- Practical Problem SolvingDocument1 pagePractical Problem SolvingHaris PrayogoNo ratings yet

- Practical Problem Solving (PPS) : S M A R TDocument1 pagePractical Problem Solving (PPS) : S M A R TlmqasemNo ratings yet

- Intervention Name: Word - Problem MnemonicsDocument3 pagesIntervention Name: Word - Problem MnemonicsJamuna Pandiyan MuthatiyarNo ratings yet

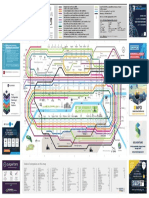

- IT Subway Map Europe 2021Document1 pageIT Subway Map Europe 2021Chandrani GuptaNo ratings yet

- Using the Standards - Number & Operations, Grade 8From EverandUsing the Standards - Number & Operations, Grade 8No ratings yet

- Training Regulations: Motorcycle/ Small Engine Servicing NC IiDocument102 pagesTraining Regulations: Motorcycle/ Small Engine Servicing NC IiAmit Chopra AmitNo ratings yet

- Chapter 2 Empiricism and PositivismDocument15 pagesChapter 2 Empiricism and PositivismJames RayNo ratings yet

- Decommissioning and Installation Project at Lillgrund Offshore Wind FarmDocument23 pagesDecommissioning and Installation Project at Lillgrund Offshore Wind FarmzakaNo ratings yet

- Quiz 2Document3 pagesQuiz 2Blancheska DimacaliNo ratings yet

- 1 - Young Paper On EXW HistoryDocument6 pages1 - Young Paper On EXW Historylastking_king17No ratings yet

- Earthing Design Calculation 380/110/13.8kV SubstationDocument19 pagesEarthing Design Calculation 380/110/13.8kV Substationhpathirathne_1575733No ratings yet

- Visvesvaraya Technological University Application For Issue of Duplicate Marks Cards (DMC)Document1 pageVisvesvaraya Technological University Application For Issue of Duplicate Marks Cards (DMC)Harshkumar KattiNo ratings yet

- Kingspan Jindal Ext. Wall Panel SystemDocument32 pagesKingspan Jindal Ext. Wall Panel Systemabhay kumarNo ratings yet

- Experimental Optimization of Radiological Markers For Artificial Disk Implants With Imaging/Geometrical ApplicationsDocument11 pagesExperimental Optimization of Radiological Markers For Artificial Disk Implants With Imaging/Geometrical ApplicationsSEP-PublisherNo ratings yet

- FAR FR2805 Operators ManualDocument169 pagesFAR FR2805 Operators ManualJack NguyenNo ratings yet

- B.Lib SyllabusDocument17 pagesB.Lib SyllabussantoshguptaaNo ratings yet

- Jurnal AnakDocument6 pagesJurnal AnakWidhi SanglahNo ratings yet

- Boot Procedure For REDocument12 pagesBoot Procedure For RESivaraman AlagappanNo ratings yet

- 3134478Document4 pages3134478Intan WidyawatiNo ratings yet

- Four Japanese Brainstorming Techniques A-Small-LabDocument7 pagesFour Japanese Brainstorming Techniques A-Small-LabChris BerthelsenNo ratings yet

- 4.3 - B - 7 - Procedure For OHS Management ProgrammeDocument3 pages4.3 - B - 7 - Procedure For OHS Management ProgrammeSASIKUMAR SNo ratings yet

- 2 HIE ENG HardwareDocument21 pages2 HIE ENG HardwareWalter Lazo100% (1)

- Intimate Partner Violence Among Pregnant Women in Kenya: Forms, Perpetrators and AssociationsDocument25 pagesIntimate Partner Violence Among Pregnant Women in Kenya: Forms, Perpetrators and AssociationsNove Claire Labawan EnteNo ratings yet

- SSRN Id3139808Document5 pagesSSRN Id3139808Carlo L. TongolNo ratings yet

- Vertiv Aisle Containment SystemDocument46 pagesVertiv Aisle Containment SystemOscar Lendechy MendezNo ratings yet

- Residential BuildingDocument64 pagesResidential BuildingFahad Ali100% (1)

- SIE DS TPS411 ExternalDocument4 pagesSIE DS TPS411 ExternalCarlos HernándezNo ratings yet

- Tripper Structure LoadsDocument9 pagesTripper Structure LoadsHarish KumarNo ratings yet

- Presentation FixDocument3 pagesPresentation FixDanny Fajar SetiawanNo ratings yet

- Excavator PC210-8 - UESS11008 - 1209Document28 pagesExcavator PC210-8 - UESS11008 - 1209Reynaldi TanjungNo ratings yet

- Holbright Hb-8212Document1 pageHolbright Hb-8212Eliana Cristina100% (1)

- Fukuoka - Serene Green Roof of JapanDocument7 pagesFukuoka - Serene Green Roof of JapanJo ChanNo ratings yet

- EBenefits Life Events White PaperDocument21 pagesEBenefits Life Events White PaperRahul VemuriNo ratings yet

- Der DirectoryDocument262 pagesDer DirectoryBenNo ratings yet