Instruction Level Parallelism: Intel

Uploaded by Jayaprabha Kanase

Available Formats

You might also like

- Final Review 09Document21 pagesFinal Review 09qwerty17327No ratings yet

- Assembler InstructionsDocument101 pagesAssembler Instructionsalokagarwaljgd33% (3)

- Inside The CpuDocument10 pagesInside The Cpuapi-3760834No ratings yet

- Mips RIO000 Microprocessor: SuperscalarDocument13 pagesMips RIO000 Microprocessor: SuperscalarNishant SinghNo ratings yet

- 09 - Thread Level ParallelismDocument34 pages09 - Thread Level ParallelismSuganya Periasamy50% (2)

- 15th Lecture 6. Future Processors To Use Coarse-Grain ParallelismDocument35 pages15th Lecture 6. Future Processors To Use Coarse-Grain ParallelismArchana RkNo ratings yet

- Superscalar Processor Pentium ProDocument11 pagesSuperscalar Processor Pentium ProdiwakerNo ratings yet

- Project-1 Basic Architecture DesignDocument4 pagesProject-1 Basic Architecture DesignUmesh KumarNo ratings yet

- Design Issues: SMT and CMP ArchitecturesDocument9 pagesDesign Issues: SMT and CMP ArchitecturesSenthil KumarNo ratings yet

- Magzhan KairanbayDocument27 pagesMagzhan Kairanbaybaglannurkasym6No ratings yet

- Assembly Language Ass#2Document29 pagesAssembly Language Ass#2Nehal KhanNo ratings yet

- On Chip Periperals tm4c UNIT-IIDocument30 pagesOn Chip Periperals tm4c UNIT-IIK JahnaviNo ratings yet

- Intel Core I7 ProcessorDocument22 pagesIntel Core I7 ProcessorPrasanth M GopinathNo ratings yet

- Lecture 0: Cpus and Gpus: Prof. Mike GilesDocument36 pagesLecture 0: Cpus and Gpus: Prof. Mike GilesAashishNo ratings yet

- CUDA - Introduction CUDA - IntroductionDocument3 pagesCUDA - Introduction CUDA - Introductionolia.92No ratings yet

- AMP Lab ManualDocument22 pagesAMP Lab ManualSatish PawarNo ratings yet

- Pentium 4 Pipe LiningDocument7 pagesPentium 4 Pipe Liningapi-3801329100% (5)

- Power PC ArchitectureDocument24 pagesPower PC ArchitectureArkadip RayNo ratings yet

- Reduced Instruction Set Computing (RISC) : Li-Chuan FangDocument42 pagesReduced Instruction Set Computing (RISC) : Li-Chuan FangDevang PatelNo ratings yet

- 20 Advanced Processor DesignsDocument28 pages20 Advanced Processor DesignsHaileab YohannesNo ratings yet

- Basicfeaturesof Advanced MicroprocessorsDocument15 pagesBasicfeaturesof Advanced MicroprocessorsAMISHANo ratings yet

- Advanced Processor SuperscalarclassDocument73 pagesAdvanced Processor SuperscalarclassKanaga Varatharajan50% (2)

- Intel 80586 (Pentium)Document24 pagesIntel 80586 (Pentium)Soumya Ranjan PandaNo ratings yet

- Intel Nehalem-Ex 8-Core ProcessorsDocument6 pagesIntel Nehalem-Ex 8-Core ProcessorsNithin BharathNo ratings yet

- IBM Flex System p24L, p260 and p460 Compute Nodes: IBM Redbooks Product GuideDocument24 pagesIBM Flex System p24L, p260 and p460 Compute Nodes: IBM Redbooks Product GuidepruebaorgaNo ratings yet

- Microway Nehalem Whitepaper 2009-06Document6 pagesMicroway Nehalem Whitepaper 2009-06kuttan268281No ratings yet

- Coa Unit - 5 NotesDocument6 pagesCoa Unit - 5 Notes1NT19IS077-MADHURI.CNo ratings yet

- Digital Signal Processing AdvancedDocument14 pagesDigital Signal Processing AdvancedMathi YuvarajanNo ratings yet

- ITEC582 Chapter18Document36 pagesITEC582 Chapter18Ana Clara Cavalcante SousaNo ratings yet

- Intel Core's Multicore ProcessorDocument7 pagesIntel Core's Multicore ProcessorGurjeet ChahalNo ratings yet

- SPARC M7 Course PDFDocument518 pagesSPARC M7 Course PDFMuthikulam VenkatakrishnanNo ratings yet

- Tanium Rocessor Icroarchitecture: Harsh Sharangpani Ken Arora IntelDocument20 pagesTanium Rocessor Icroarchitecture: Harsh Sharangpani Ken Arora IntelRishabh JainNo ratings yet

- Ibm Blue GeneDocument17 pagesIbm Blue GeneRitika SethiNo ratings yet

- Computer Architecture - Teachers NotesDocument11 pagesComputer Architecture - Teachers NotesHAMMAD UR REHMANNo ratings yet

- Pentium CacheDocument5 pagesPentium CacheIsmet BibićNo ratings yet

- Traffic Light ControlDocument14 pagesTraffic Light ControlsamarthgulatiNo ratings yet

- Power PC ArchitectureDocument28 pagesPower PC ArchitecturedesamratNo ratings yet

- 54x NewDocument10 pages54x NewaruncidNo ratings yet

- ES Assignment 3Document12 pagesES Assignment 3satinder singhNo ratings yet

- Assignment 2 Embedded System (2) UpdateDocument14 pagesAssignment 2 Embedded System (2) Updateano nymousNo ratings yet

- Evolution of Computer SystemsDocument16 pagesEvolution of Computer SystemsMuhammad AneesNo ratings yet

- Cpe 631 Pentium 4Document111 pagesCpe 631 Pentium 4rohitkotaNo ratings yet

- Computer Architecture & OrganizationDocument6 pagesComputer Architecture & Organizationabdulmateen01No ratings yet

- Arm ShowDocument38 pagesArm Showselva33No ratings yet

- Intel 8086 Microprocessor ArchitectureDocument12 pagesIntel 8086 Microprocessor ArchitectureYokito YamadaNo ratings yet

- A Brief History of The Pentium Processor FamilyDocument43 pagesA Brief History of The Pentium Processor Familyar_garg2005100% (2)

- Socunit 1Document65 pagesSocunit 1Sooraj SattirajuNo ratings yet

- PIC (Peripheral Interface Controller) PIC Is A Family of Harvard Architecture Microcontrollers Made byDocument7 pagesPIC (Peripheral Interface Controller) PIC Is A Family of Harvard Architecture Microcontrollers Made byNeeraj KarnaniNo ratings yet

- L 3 GPUDocument33 pagesL 3 GPUfdfsNo ratings yet

- Multicore ComputersDocument21 pagesMulticore Computersmikiasyimer7362No ratings yet

- Crusoe Is The New Microprocessor Which Has Been Designed Specially For TheDocument17 pagesCrusoe Is The New Microprocessor Which Has Been Designed Specially For TheDivya KalidindiNo ratings yet

- "Multicore Processors": A Seminar ReportDocument11 pages"Multicore Processors": A Seminar ReportLovekesh BhagatNo ratings yet

- Pic 16F877Document115 pagesPic 16F877narendramaharana39100% (1)

- Chapter 04 Processors and Memory HierarchyDocument50 pagesChapter 04 Processors and Memory Hierarchyশেখ আরিফুল ইসলাম75% (8)

- Introduction To 8085Document139 pagesIntroduction To 8085eldho k josephNo ratings yet

- Dual Core Processors: Presented by Prachi Mishra IT - 56Document16 pagesDual Core Processors: Presented by Prachi Mishra IT - 56Prachi MishraNo ratings yet

- Nehalem ArchitectureDocument7 pagesNehalem ArchitectureSuchi SmitaNo ratings yet

- MPMC Unit 4Document23 pagesMPMC Unit 4KvnsumeshChandraNo ratings yet

- 8085 Microprocessor QuestionsDocument15 pages8085 Microprocessor QuestionsVirendra GuptaNo ratings yet

- Nintendo 64 Architecture: Architecture of Consoles: A Practical Analysis, #8From EverandNintendo 64 Architecture: Architecture of Consoles: A Practical Analysis, #8No ratings yet

- Appendix B: Excel Plot FunctionsDocument1 pageAppendix B: Excel Plot FunctionsJayaprabha KanaseNo ratings yet

- Title: Objectives: To Understand and Task Distribution Using Gprof.l TheoryDocument12 pagesTitle: Objectives: To Understand and Task Distribution Using Gprof.l TheoryJayaprabha KanaseNo ratings yet

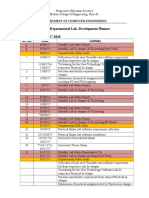

- Departmental Lab. Development Planner Academic Year: 2017-2018 Sr. No. Date ActivityDocument2 pagesDepartmental Lab. Development Planner Academic Year: 2017-2018 Sr. No. Date ActivityJayaprabha KanaseNo ratings yet

- Assignment No: A-1 Date: Aim: Implementation of Create/ Rename/ Delete A File Using Unix/Linux CommandsDocument4 pagesAssignment No: A-1 Date: Aim: Implementation of Create/ Rename/ Delete A File Using Unix/Linux CommandsJayaprabha KanaseNo ratings yet

- Facilities and Technical Support (80) Action Plan (JMK)Document1 pageFacilities and Technical Support (80) Action Plan (JMK)Jayaprabha KanaseNo ratings yet

- RPC ppt1Document26 pagesRPC ppt1Jayaprabha KanaseNo ratings yet

- Department of Computer EngineeringDocument6 pagesDepartment of Computer EngineeringJayaprabha KanaseNo ratings yet

- Shiva StutiDocument3 pagesShiva Stutiyatin panditNo ratings yet

- IEEE A4 FormatDocument4 pagesIEEE A4 Formatprem035No ratings yet

- Dirac NotationDocument3 pagesDirac NotationJayaprabha KanaseNo ratings yet

- Thumb InstructionsDocument37 pagesThumb InstructionsSuhas ShirolNo ratings yet

- The Processor: (Datapath and Pipelining)Document144 pagesThe Processor: (Datapath and Pipelining)paranoid486No ratings yet

- Prime Payment CMDDocument5 pagesPrime Payment CMDPrithviraj SanningannavarNo ratings yet

- Chapter 6 - PipeliningDocument61 pagesChapter 6 - PipeliningShravani ShravzNo ratings yet

- Architecture Sample QuestionsDocument4 pagesArchitecture Sample QuestionsRejin Paul JamesNo ratings yet

- GFK 1512 KDocument9 pagesGFK 1512 Knamomari20031889No ratings yet

- Instruction PipeliningDocument32 pagesInstruction PipeliningTech_MXNo ratings yet

- The Microprocessor AgeDocument18 pagesThe Microprocessor AgeAshutosh GuptaNo ratings yet

- Computer Organization Architecture and Assembly Language File/8085 FileDocument32 pagesComputer Organization Architecture and Assembly Language File/8085 FileGarimaNo ratings yet

- An Operating System Vade Mecum - Raphael FinkelDocument362 pagesAn Operating System Vade Mecum - Raphael FinkelThe_ArkalianNo ratings yet

- PSoC Assembly Language User GuideDocument110 pagesPSoC Assembly Language User GuidekmmankadNo ratings yet

- Using Winmips64 Simulator: 1. Starting and Configuring Winmips64Document9 pagesUsing Winmips64 Simulator: 1. Starting and Configuring Winmips64VenkateshDuppadaNo ratings yet

- What Are The Differences Between ROM and RAMDocument7 pagesWhat Are The Differences Between ROM and RAMCostasNo ratings yet

- Lab 6 PicoblazeDocument6 pagesLab 6 PicoblazeMadalin NeaguNo ratings yet

- Add On (P ValveSO)Document48 pagesAdd On (P ValveSO)carbono980No ratings yet

- Instruction Level ParallelismDocument49 pagesInstruction Level ParallelismBijay MishraNo ratings yet

- Microsoft Word - Revised Microcomputer & Interfacing - RepaiDocument152 pagesMicrosoft Word - Revised Microcomputer & Interfacing - RepaimickygbNo ratings yet

- Syslib Rm029 en P (P DOut)Document48 pagesSyslib Rm029 en P (P DOut)carbono980No ratings yet

- Assembler BookDocument119 pagesAssembler BookJitender Saini100% (5)

- RPRTDocument6 pagesRPRTanon_191402976No ratings yet

- Practical 2 Data TransferDocument3 pagesPractical 2 Data TransferHet PatelNo ratings yet

- Question and Answer of ITDocument181 pagesQuestion and Answer of ITSuraj MehtaNo ratings yet

- Fabbc9: Convert Hexadecimal To BinaryDocument11 pagesFabbc9: Convert Hexadecimal To BinaryShan NavasNo ratings yet

- Learning C With PebbleDocument305 pagesLearning C With Pebblektokto666No ratings yet

- Fortran 90 Course Notes (278P)Document278 pagesFortran 90 Course Notes (278P)Eng W EaNo ratings yet

- Architecture of DSP ProcessorsDocument13 pagesArchitecture of DSP ProcessorsSugumar Sar DuraiNo ratings yet

- Pic16f84a PDFDocument88 pagesPic16f84a PDFBenjamin Michael LandisNo ratings yet

- Reverse Engineering Smac-TutDocument3 pagesReverse Engineering Smac-TutSagar RastogiNo ratings yet

Instruction Level Parallelism: Intel

Instruction Level Parallelism: Intel

Uploaded by

Jayaprabha KanaseOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Instruction Level Parallelism: Intel

Instruction Level Parallelism: Intel

Uploaded by

Jayaprabha KanaseCopyright:

Available Formats

1. Instruction Level Parallelism

Most modern processors share a similar architecture: a speculative, superscalar, out-of-order execution design. This applies to both RISC and X86 processors. In this approach several functional units (FUs) operate simultaneously in the processor and take instructions from a special buffer, into which instructions are placed after decoding, executing them as soon as possible. The advantage is that parallelization does not depend on the programmer (at least in higher-level languages), and there is no need for the special algorithms and language constructs used to develop programs for multiprocessor computers. One might think that simply increasing the number of FUs would achieve a very high degree of instruction level parallelism (ILP). That is true to some degree. But the superscalar architecture has many limitations, and they grow as the number of execution units increases. For example:

1. Register dependences: to keep the FUs sufficiently loaded, the number of registers must grow quadratically with the number of FUs. With only 8 GPRs, the X86 ISA cannot raise ILP further just by adding FUs, which is why the visible registers are renamed onto a much larger set of hidden ones. RISC architectures are better off, but they also need register renaming. Renaming is not a cure-all for performance, and it makes the chip more complex. Theoretically, the ILP of superscalar processors has a high limit (tens of instructions per clock for many of the programs in the SPEC suites), but in practice real processors do not come close even to a much lower degree of parallelism.
2. Rapidly growing processor complexity (harder development, debugging and testing) raises costs and lengthens lead times, and the performance gain does not make up for it.
3. Increasing requirements for the L1 cache: to "feed" a large number of FUs, the cache must have high throughput and a large capacity. The cost is longer latencies, which reduce performance. The number of register file ports must also increase.
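The "theoretical" ILP limit mentioned in item 1 is usually estimated on an idealized machine. The Python sketch below is a minimal illustration under stated assumptions (unlimited FUs, 1-cycle latency for every instruction, perfect register renaming so only true read-after-write dependences remain). Under those assumptions ILP is simply the instruction count divided by the length of the longest dependence chain. The `Instr` format and register names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    dest: str     # register written
    srcs: tuple   # registers read


def dataflow_ilp(program):
    """Upper bound on ILP for a straight-line instruction sequence, assuming
    unlimited FUs, 1-cycle latency and perfect register renaming, so only true
    (read-after-write) dependences limit parallelism."""
    ready = {}    # register -> cycle at which its newest value is available
    depth = 0     # length of the longest dependence chain so far
    for ins in program:
        # Issue once every source value exists; never-written registers
        # count as ready at cycle 0.
        start = max((ready.get(r, 0) for r in ins.srcs), default=0)
        # Renaming: overwriting a register just creates a new value, so
        # WAR/WAW hazards impose no ordering here.
        ready[ins.dest] = start + 1
        depth = max(depth, start + 1)
    return len(program) / depth if depth else 0.0


prog = [
    Instr("r1", ("a",)),
    Instr("r2", ("b",)),
    Instr("r3", ("r1", "r2")),
    Instr("r4", ("c",)),
    Instr("r1", ("r3", "r4")),   # reuses r1: a WAR/WAW hazard that renaming removes
    Instr("r5", ("d",)),
]
print(dataflow_ilp(prog))   # 6 instructions / chain of 3 -> 2.0
```

Real programs have loads with long latencies, branches and a finite instruction window, so measured ILP sits well below this bound, which is the gap item 1 describes.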

According to some estimates, doubling the ILP of modern superscalar processors would require about 128 GPRs and 8 ALUs plus 8 load/store units. This will probably be realized in future IA-64 chips, but even now the same speed-up can be achieved for a great many applications by simpler means.

2. Thread Level Parallelism

Various TLP (Thread Level Parallelism) technologies are one way to boost the performance of superscalar processors. Processors that use this approach execute several instruction streams simultaneously. Multithreaded programs benefit from TLP: for them it makes sense to exploit the parallelism that already exists in programs optimized for multiprocessor systems. Using some variant of SMT (Simultaneous Multithreading), for example Intel's Hyper-Threading, is a temporary solution. This technology keeps the FUs more fully loaded and optimizes memory access in existing superscalar architectures, with the threads sharing the same processor's FUs. The performance gain is estimated at 10-30% for different programs on Xeon processors (which are, in fact, Pentium 4 chips). Another example of hardware multithreading is the IBM RS64 IV, a PowerPC server processor that preceded the POWER4 in the pSeries 6000 (RS/6000) and iSeries 400 (AS/400) systems. [1] On the whole, SMT is a logical improvement to modern processors and is simple to implement. Chip Multiprocessing (CMP) is a more radical approach, in which several processor cores are placed on one die. The technology has now reached the point where two complex superscalar processors and a sufficient amount of cache fit on one chip. The result is an SMP system, and because the cores sit on the same die, the data rate between the processors can be much higher than over any external buses, switches, etc. Interestingly, in the early 90s Intel considered developing such a processor: the 786 chip, codenamed Micro-2000, was to have 4 cores plus 2 vector processors and run at 250 MHz. Just compare that with the Pentium 4 :).

The POWER4 consists of 2 identical processor cores implementing the PowerPC AS instruction set. The die measures about 400 mm², is built on IBM's 0.18 micron copper SOI CMOS 8S2 process with 7 metal layers, runs at 1.1 and 1.3 GHz, and is currently the fastest microprocessor. There is also a POWER4 variant with one processor on the die. HP and Sun also plan to release CMP processors soon, based on a 0.13 micron process, and AMD may follow the same path.

3. IBM POWER4 - introduction

This processor targets maximum performance in the high-end server and supercomputer markets and is designed for SMP systems with up to 32 processors. Much attention was paid to high-performance interconnects between processors and memory. The POWER4 is highly fault-tolerant: critical failures do not hang the system; instead, interrupts are generated and then handled by the system. The POWER4 was developed to run both commercial (server) and scientific/technical applications efficiently. Note that IBM's POWER/PowerPC processors used to be split into scientific (POWER) and commercial server (RS64) lines. The POWER4 suits a wide range of high-end applications and uses all current performance-boosting techniques (within the PowerPC instruction set): there are no cut-down caches or missing FUs. The chip's design looks unusual; later we will see why the POWER4's frequency jumped from the 600 MHz of the RS64 IV to 1.3 GHz.

4. POWER4 die

The POWER4 houses 2 processors, each with its own L1 caches for data and instructions. The die has a single shared L2 cache of 1440 KBytes, managed by 3 separate controllers connected to the cores via a CIU (Core Interface Unit). The controllers work independently, and each can process 32 bytes per clock. Each processor connects to the CIU through two separate 256-bit buses, one for instruction fetches and one for data loads, plus a separate 64-bit bus for storing results; the L2 cache has a bandwidth of about 100 GBytes/s. The L2 cache subsystem looks well balanced and very powerful. Each processor also has a special unit for noncacheable operations (the Noncacheable Unit). The L3 cache controller and the memory controller are on the die as well. The L3 cache runs at 1/3 of the processor's speed, and the chip connects to it and to memory through two 128-bit buses, also running at 1/3 of the processor's frequency. The throughput of the memory interface is about 11 GBytes/s. Data flows between memory, the L2 and L3 caches and the inter-chip buses are managed by the Fabric Controller.
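The bandwidth figures above follow from bus width times bus clock. The following Python sketch computes peak values only, assuming 1 GB = 10^9 bytes and that every controller or bus transfers its full width on every one of its clock cycles; sustained bandwidth will be lower.

```python
def bus_bandwidth_gbs(n_buses, width_bytes, cpu_ghz, clock_divisor=1):
    """Peak bandwidth in GB/s (10**9 bytes/s): n_buses paths, each moving
    width_bytes per cycle of a clock running at cpu_ghz / clock_divisor."""
    return n_buses * width_bytes * cpu_ghz / clock_divisor


for ghz in (1.1, 1.3):
    l2 = bus_bandwidth_gbs(3, 32, ghz)        # 3 L2 controllers x 32 bytes/clock
    mem = bus_bandwidth_gbs(2, 16, ghz, 3)    # 2 x 128-bit buses at 1/3 clock
    print(f"{ghz} GHz: L2 {l2:.1f} GB/s, L3/memory buses {mem:.1f} GB/s")
# 1.1 GHz: L2 105.6 GB/s, L3/memory buses 11.7 GB/s
# 1.3 GHz: L2 124.8 GB/s, L3/memory buses 13.9 GB/s
```

The 1.1 GHz results line up with the "about 100 GBytes/s" and "about 11 GBytes/s" quoted in the text, which suggests (but the text does not state) that those figures refer to the 1.1 GHz part.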

The 32-bit GX bus, running at 1/3 of the processor's frequency, connects the chip to the I/O subsystem (e.g., through a PCI bridge) and, when several nodes containing POWER4 chips are combined into a cluster, to a switch.

5. SMP capabilities

Four POWER4 chips can be packaged into one module, forming an 8-processor SMP. The chips on a module are connected by four 128-bit buses running at 1/2 of the processor's speed. They are implemented as 6 unidirectional buses, three in each direction, with a total throughput of about 35 GBytes/s.
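The ~35 GBytes/s figure can be reproduced if one assumes it counts the four 128-bit (16-byte) buses at half of a 1.1 GHz processor clock; the text does not say which clock it used, so this is an inference. The sketch also shows the per-module processor count (4 chips × 2 cores).

```python
def module_fabric_gbs(cpu_ghz, n_buses=4, width_bytes=16, clock_divisor=2):
    """Peak aggregate chip-to-chip bandwidth (GB/s) on one module, assuming
    each 128-bit bus moves 16 bytes per bus clock at cpu_ghz / clock_divisor."""
    return n_buses * width_bytes * cpu_ghz / clock_divisor


CHIPS_PER_MODULE, CORES_PER_CHIP = 4, 2
print(f"processors per module: {CHIPS_PER_MODULE * CORES_PER_CHIP}")   # 8
for ghz in (1.1, 1.3):
    print(f"{ghz} GHz: {module_fabric_gbs(ghz):.1f} GB/s")
# 1.1 GHz: 35.2 GB/s
# 1.3 GHz: 41.6 GB/s
```

The six physical unidirectional buses are not modeled separately, because the text gives their count but not their width.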

Take a look at the 4-chip module (such modules can be found, for example, in the 32-processor pSeries 690 Model 681 server):

IBM stresses that the central switch otherwise used to connect the 4 POWER4 chips is replaced with multiple fast, independent point-to-point buses. AMD is going to use this approach in its future systems based on the Hammer processor. Up to 4 modules can be combined into a multimodule system, giving a 16-chip SMP system or, counting the 2 processors on each POWER4 chip, a 32-processor one. The modules are connected by two unidirectional 64-bit buses that form a ring topology.

The POWER4 is not meant for SMP systems with more than 32 processors.

6. POWER4 core

The POWER4 core differs greatly from its predecessors because it uses an approach found in modern X86 processors: PowerPC instructions are transformed into internal operations, which are then formed into groups. Each POWER4 processor is thus a superscalar core with speculative out-of-order execution. There are 8 pipelined execution units in all: two identical floating-point pipelines, each able to execute a fused multiply-add per clock (i.e. up to 4 floating-point operations per clock in total), two load/store units, two fixed-point (integer) units, a branch execution unit and a unit for logical operations on the condition register. Floating-point division and square root are not pipelined and can significantly degrade the POWER4's performance. Look at the pipeline of the POWER4:

The integer pipeline has 17 stages, in contrast to the 5-stage pipelines of earlier IBM chips. Let's look at some interesting features of the POWER4 core. The L1 instruction cache can deliver up to 8 instructions per clock to the front end of the pipeline, from the address held in the IFAR register, whose contents are set by the branch prediction unit. The instructions are then decoded and cracked, and groups are formed. Groups exist to minimize the logic needed to track a large number of in-flight instructions. A group contains up to five internal instructions, referred to as IOPs. In the decode stages the instructions are placed into a group in order: the oldest instruction goes in slot 0, the next oldest in slot 1, and so on. Slot 4 is reserved for branch instructions only. To reach a high clock speed, the POWER4 cracks PowerPC instructions into a larger number of simpler instructions, which are then combined into groups and executed. An instruction split into 2 IOPs is said to be cracked; an instruction split into more than 2 IOPs is called a millicoded instruction.

Register renaming is used extensively in the POWER4: the 32 GPRs are renamed onto 80 internal registers, and the 32 FPRs onto 72. Clearly, many once-attractive features of the PowerPC architecture are outdated, and the processor needs new units to transform instructions into a form more convenient for execution.

A processor with such a long pipeline needs an effective branch prediction algorithm. For dynamic prediction the POWER4 uses two prediction algorithms plus an additional table that tracks which algorithm works best for a given branch instruction. The dynamic prediction can be overridden by a special bit in the branch instruction. A similar feature also appeared in the X86 line with the Pentium 4.
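The cracking terminology and group-formation rules described above (up to five IOPs per group, oldest first, slot 4 for branches only) can be sketched in Python. This is a simplified model, not IBM's exact rules: it assumes branches are single-IOP instructions, lets a multi-IOP instruction spill across groups, and closes a group when slots 0-3 are full or a branch fills slot 4. Instruction names such as `"A"` and `"br"` are hypothetical labels.

```python
from dataclasses import dataclass


@dataclass
class PpcInstr:
    name: str             # hypothetical label, not a real mnemonic
    n_iops: int           # number of IOPs the decoder produces (assumed known)
    is_branch: bool = False


def classify(instr):
    """Terminology from the text: 2 IOPs = cracked, more than 2 = millicoded."""
    if instr.n_iops == 1:
        return "simple"
    if instr.n_iops == 2:
        return "cracked"
    return "millicoded"


def expand(program):
    """Turn instructions into (label, is_branch) IOPs in program order.
    Assumption: branches are single-IOP instructions."""
    iops = []
    for ins in program:
        if ins.n_iops == 1:
            iops.append((ins.name, ins.is_branch))
        else:
            iops.extend((f"{ins.name}#{k}", False) for k in range(ins.n_iops))
    return iops


def form_groups(program):
    """Simplified grouping: non-branch IOPs fill slots 0-3, oldest first;
    a branch may only occupy slot 4 and closes its group."""
    groups = []
    slots, used = [None] * 5, 0
    for label, is_branch in expand(program):
        if is_branch:
            slots[4] = label
            groups.append(slots)
            slots, used = [None] * 5, 0
            continue
        if used == 4:                 # slots 0-3 are full: start a new group
            groups.append(slots)
            slots, used = [None] * 5, 0
        slots[used] = label
        used += 1
    if used:                          # flush a partially filled last group
        groups.append(slots)
    return groups


program = [
    PpcInstr("A", 1),
    PpcInstr("B", 2),
    PpcInstr("C", 3),
    PpcInstr("br", 1, is_branch=True),
]
for g in form_groups(program):
    print(g)
# ['A', 'B#0', 'B#1', 'C#0', None]
# ['C#1', 'C#2', None, None, 'br']
```

Empty slots (`None`) show the cost of the scheme: grouping simplifies tracking, but a group can dispatch fewer than five IOPs.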

Three types of buffers speed up the translation of virtual addresses into physical ones: a 1024-entry translation lookaside buffer (TLB), a 64-entry fully associative segment lookaside buffer (SLB), and an effective-to-real address translation table (ERAT). The ERAT is split into two 128-entry tables, one for data and one for instructions.
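How the three buffers cooperate can be sketched as a lookup hierarchy: the ERAT caches effective-to-real translations directly; on an ERAT miss the SLB maps the effective segment (ESID) to a virtual segment (VSID), and the TLB maps the virtual page to a real page. The Python sketch below uses the entry counts from the text but assumes 4 KB pages, fully associative LRU buffers and plain dicts as stand-ins for the OS-maintained segment and page tables; the real structures' associativity, replacement policies and miss handling are not modeled. The 256 MB segment size is that of 64-bit PowerPC.

```python
from collections import OrderedDict

PAGE_SHIFT = 12      # assumption: 4 KB pages only
SEGMENT_SHIFT = 28   # 256 MB segments, as in 64-bit PowerPC


class LRUBuffer:
    """Fully associative lookup buffer with LRU replacement (a simplification:
    the real structures' associativity and replacement policies differ)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()

    def lookup(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)          # mark as most recently used
        return self.entries[key]

    def insert(self, key, value):
        self.entries[key] = value
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict the least recently used


class Translator:
    """Models one (data) ERAT backed by the SLB and TLB; the segment and page
    tables are plain dicts standing in for OS-maintained structures."""

    def __init__(self, segment_table, page_table):
        self.erat = LRUBuffer(128)    # effective page number -> real page number
        self.slb = LRUBuffer(64)      # ESID -> VSID
        self.tlb = LRUBuffer(1024)    # (VSID, page within segment) -> real page
        self.segment_table = segment_table
        self.page_table = page_table
        self.stats = {"erat_hit": 0, "tlb_hit": 0, "walk": 0}

    def translate(self, ea):
        offset = ea & ((1 << PAGE_SHIFT) - 1)
        epn = ea >> PAGE_SHIFT
        rpn = self.erat.lookup(epn)
        if rpn is not None:                    # fast path: one ERAT lookup
            self.stats["erat_hit"] += 1
            return (rpn << PAGE_SHIFT) | offset
        esid = ea >> SEGMENT_SHIFT
        vsid = self.slb.lookup(esid)
        if vsid is None:
            vsid = self.segment_table[esid]    # KeyError stands in for a segment fault
            self.slb.insert(esid, vsid)
        vpn = (vsid, epn & ((1 << (SEGMENT_SHIFT - PAGE_SHIFT)) - 1))
        rpn = self.tlb.lookup(vpn)
        if rpn is None:
            self.stats["walk"] += 1
            rpn = self.page_table[vpn]         # KeyError stands in for a page fault
            self.tlb.insert(vpn, rpn)
        else:
            self.stats["tlb_hit"] += 1
        self.erat.insert(epn, rpn)             # refill the ERAT for next time
        return (rpn << PAGE_SHIFT) | offset


t = Translator(segment_table={0x1: 0xABC}, page_table={(0xABC, 0x5): 0x42})
ea = (0x1 << SEGMENT_SHIFT) | (0x5 << PAGE_SHIFT) | 0x10
print(hex(t.translate(ea)))   # 0x42010 (SLB and TLB miss, table walk)
print(hex(t.translate(ea)))   # 0x42010 (ERAT hit)
print(t.stats)                # {'erat_hit': 1, 'tlb_hit': 0, 'walk': 1}
```

The point of the design is visible in the fast path: a hit in the small ERAT produces a real address in a single lookup, and the SLB and TLB are consulted only on an ERAT miss.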

More detailed information on the POWER4 architecture and on program optimization can be found in IBM's guide [3].

7. Caches and memory

The following table shows key data on the memory subsystem:

| Component | L1 Instruction Cache | L1 Data Cache | L2 | L3 | Memory |
|---|---|---|---|---|---|
| Organization | Direct-mapped, 128-byte line managed as 4 32-byte sectors | 2-way, 128-byte line | 8-way, 128-byte line | 8-way, 512-byte line managed as 4 128-byte sectors | - |
| Capacity per chip | 128 KBytes (64 KBytes per processor) | 64 KBytes (32 KBytes per processor) | 1.41 MBytes | 32 MBytes | 0-16 GBytes |
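The organization and capacity rows determine each cache's number of sets: sets = capacity / (associativity × line size). The Python sketch below works this out per processor for the L1 caches and per chip for L2 and L3, assuming "1.41 MBytes" means 1440 KB. It also splits an address into tag, set index and byte offset, which only works by simple bit slicing when both the set count and the line size are powers of two.

```python
KB, MB = 1024, 1024 * 1024

# (name, capacity in bytes, associativity, line size in bytes)
CACHES = [
    ("L1I (per processor)", 64 * KB, 1, 128),
    ("L1D (per processor)", 32 * KB, 2, 128),
    ("L2 (per chip, assumed 1440 KB)", 1440 * KB, 8, 128),
    ("L3 (per chip)", 32 * MB, 8, 512),
]


def num_sets(capacity, ways, line):
    """Sets = capacity / (ways * line size); must divide evenly."""
    sets, rem = divmod(capacity, ways * line)
    if rem:
        raise ValueError("geometry does not divide evenly")
    return sets


def split_address(addr, sets, line):
    """Tag / set index / byte offset for a power-of-two cache."""
    if sets & (sets - 1) or line & (line - 1):
        raise ValueError("only power-of-two geometries can be bit-sliced")
    offset_bits = line.bit_length() - 1
    index_bits = sets.bit_length() - 1
    offset = addr & (line - 1)
    index = (addr >> offset_bits) & (sets - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


for name, cap, ways, line in CACHES:
    print(f"{name}: {num_sets(cap, ways, line)} sets")
# 512, 128, 1440 and 8192 sets respectively

print(split_address(0x12345, 128, 128))   # L1D: (4, 70, 69)
```

The L2 result (1440 sets) is not a power of two, so its set index cannot come from plain address bits. The L2 must select its controller and set with some other mapping, which the text does not describe.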

The L1 cache latency is 4 cycles (2 cycles for the Pentium 4, 4 for the Athlon). The L2 cache uses the MESI protocol for coherence, and its average latency is 12 cycles (18 for the Pentium 4, 20 for the Athlon), although it can sometimes rise to 20 cycles. The L3 cache controller, the memory controller and the L3 tag directory are integrated on the chip, while the L3 cache itself consists of two 16 MByte eDRAM chips mounted on a separate module, each divided into 8 banks of 2 MBytes. An important feature of the L3 cache is that the caches of up to 4 POWER4 chips can be combined (128 MBytes in total), which allows address interleaving to speed up access. The L3 cache is connected to the memory controller via two bidirectional 64-bit buses running at 1/3 of the processor's speed. The memory (200 MHz DDR SDRAM) is connected to the controller via two ports, each consisting of 4 32-bit buses running at 400 MHz. With both ports in use, the memory throughput is a bit over 11 GBytes/s (the corresponding figure for Intel's McKinley, which has not yet been released, is 6.4 GBytes/s). Each chip has its own bus to the L3 cache and memory. The POWER4 has a hardware prefetch unit that loads data into the L1 cache from the whole memory hierarchy, and special instructions let software control this process.
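Address interleaving across combined L3 caches can be illustrated with a simple round-robin mapping. The Python sketch below is hypothetical: it assumes consecutive 512-byte L3 lines go to consecutive chips, whereas the text does not give the actual interleave granularity or hash. It shows why interleaving helps: a sequential stream is spread over all participating chips, so their L3 caches can serve it in parallel.

```python
L3_LINE = 512                  # bytes per L3 line (from the table)
L3_SECTOR = 128                # each line is managed as 4 sectors of 128 bytes
L3_MB_PER_CHIP = 32


def l3_home(addr, n_chips):
    """Hypothetical line-interleaved mapping across the combined L3 caches of
    n_chips POWER4 chips: consecutive 512-byte lines go to consecutive chips.
    Returns (chip, sector within the line)."""
    if not 1 <= n_chips <= 4:
        raise ValueError("the text allows combining the L3 caches of up to 4 chips")
    chip = (addr // L3_LINE) % n_chips
    sector = (addr % L3_LINE) // L3_SECTOR
    return chip, sector


print(4 * L3_MB_PER_CHIP)                              # 128 (MBytes combined)
print([l3_home(a, 4) for a in range(0, 2048, 512)])    # [(0, 0), (1, 0), (2, 0), (3, 0)]
print(l3_home(0x1280, 4))                              # (1, 1)
```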

8. Happy End

| CPU | AMD Athlon XP 1900+ | HP PA-8700 | IBM POWER4 (1 CPU) | Intel Itanium | Intel Pentium 4 | Sun UltraSPARC III-Cu |
|---|---|---|---|---|---|---|
| CPU MHz | 1600 | 750 | 1300 | 800 | 2200 | 900 |
| CINT2000 base/peak | 677/701 | 568/604 | 790/814 | 314/314 | 771/784 | 470/533 |
| CFP2000 base/peak | 588/634 | 526/576 | 1098/1169 | 645 | 766/777 | 629/731 |

The Alpha is fading, and the POWER4 has no competitors left in terms of processor performance. The new Pentium 4 looks impressive next to the pale Itanium and PA-8700, despite all the announcements about the aging IA-32 and the advanced IA-64 technologies. Will the McKinley, with its more powerful IA-64 implementation, be able to stand up to the POWER4, at least in computational tests? Will SMT be integrated into individual POWER4 processors? What kinds of CMP chips will other companies unveil?


- An Operating System Vade Mecum - Raphael FinkelDocument362 pagesAn Operating System Vade Mecum - Raphael FinkelThe_ArkalianNo ratings yet

- PSoC Assembly Language User GuideDocument110 pagesPSoC Assembly Language User GuidekmmankadNo ratings yet

- Using Winmips64 Simulator: 1. Starting and Configuring Winmips64Document9 pagesUsing Winmips64 Simulator: 1. Starting and Configuring Winmips64VenkateshDuppadaNo ratings yet

- What Are The Differences Between ROM and RAMDocument7 pagesWhat Are The Differences Between ROM and RAMCostasNo ratings yet

- Lab 6 PicoblazeDocument6 pagesLab 6 PicoblazeMadalin NeaguNo ratings yet

- Add On (P ValveSO)Document48 pagesAdd On (P ValveSO)carbono980No ratings yet

- Instruction Level ParallelismDocument49 pagesInstruction Level ParallelismBijay MishraNo ratings yet

- Microsoft Word - Revised Microcomputer & Interfacing - RepaiDocument152 pagesMicrosoft Word - Revised Microcomputer & Interfacing - RepaimickygbNo ratings yet

- Syslib Rm029 en P (P DOut)Document48 pagesSyslib Rm029 en P (P DOut)carbono980No ratings yet

- Assembler BookDocument119 pagesAssembler BookJitender Saini100% (5)

- RPRTDocument6 pagesRPRTanon_191402976No ratings yet

- Practical 2 Data TransferDocument3 pagesPractical 2 Data TransferHet PatelNo ratings yet

- Question and Answer of ITDocument181 pagesQuestion and Answer of ITSuraj MehtaNo ratings yet

- Fabbc9: Convert Hexadecimal To BinaryDocument11 pagesFabbc9: Convert Hexadecimal To BinaryShan NavasNo ratings yet

- Learning C With PebbleDocument305 pagesLearning C With Pebblektokto666No ratings yet

- Fortran 90 Course Notes (278P)Document278 pagesFortran 90 Course Notes (278P)Eng W EaNo ratings yet

- Architecture of DSP ProcessorsDocument13 pagesArchitecture of DSP ProcessorsSugumar Sar DuraiNo ratings yet

- Pic16f84a PDFDocument88 pagesPic16f84a PDFBenjamin Michael LandisNo ratings yet

- Reverse Engineering Smac-TutDocument3 pagesReverse Engineering Smac-TutSagar RastogiNo ratings yet