WS08

WS08

Uploaded by

buttersotyughCopyright:

Available Formats

You might also like

- ML Unit-1Document12 pagesML Unit-120-6616 Abhinay100% (1)

- Coincent - Data Science With Python AssignmentDocument23 pagesCoincent - Data Science With Python AssignmentSai Nikhil Nellore100% (2)

- Invoice of Acer LaptopDocument1 pageInvoice of Acer LaptopAshish SinghNo ratings yet

- 19C Rac Dataguard Build Document: Mallikarjun Ramadurg Mallik034Document87 pages19C Rac Dataguard Build Document: Mallikarjun Ramadurg Mallik034Khansex ShaikNo ratings yet

- 50 Deep Learning Technical Interview Questions With AnswersDocument20 pages50 Deep Learning Technical Interview Questions With AnswersIkram Laaroussi100% (1)

- Machine Learning with Clustering: A Visual Guide for Beginners with Examples in PythonFrom EverandMachine Learning with Clustering: A Visual Guide for Beginners with Examples in PythonNo ratings yet

- Neural Networks BiasDocument7 pagesNeural Networks BiasNyariki KevinNo ratings yet

- 13 Useful Deep Learning Interview Questions and AnswerDocument6 pages13 Useful Deep Learning Interview Questions and AnswerMD. SHAHIDUL ISLAMNo ratings yet

- Artificial Intelligence Interview QuestionsFrom EverandArtificial Intelligence Interview QuestionsRating: 5 out of 5 stars5/5 (2)

- 30 Frequently Asked Deep Learning Interview Questions and AnswersDocument28 pages30 Frequently Asked Deep Learning Interview Questions and AnswersKhirod Behera100% (1)

- Deep Learning Interview Questions and AnswersDocument21 pagesDeep Learning Interview Questions and AnswersSumathi MNo ratings yet

- Exercises INF 5860: Exercise 1 Linear RegressionDocument5 pagesExercises INF 5860: Exercise 1 Linear RegressionPatrick O'RourkeNo ratings yet

- Datos de Cosas InteresantesDocument16 pagesDatos de Cosas InteresantesManolo CaracolNo ratings yet

- SIM - Chapters - DA T5Document9 pagesSIM - Chapters - DA T5Adam TaufikNo ratings yet

- Week - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Document7 pagesWeek - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Mrunal BhilareNo ratings yet

- Fitting A Neural Network ModelDocument9 pagesFitting A Neural Network ModelArpan KumarNo ratings yet

- Le y Yang - Tiny ImageNet Visual Recognition ChallengeDocument6 pagesLe y Yang - Tiny ImageNet Visual Recognition Challengemusicalización pacíficoNo ratings yet

- HyperparametersDocument15 pagesHyperparametersrajaNo ratings yet

- Nero Solution Litreture From HelpDocument6 pagesNero Solution Litreture From Helpvijay_dhawaleNo ratings yet

- HCIA AI Practice Exam AllDocument64 pagesHCIA AI Practice Exam AllKARLHENRI MANANSALANo ratings yet

- Understanding AlexNetDocument8 pagesUnderstanding AlexNetlizhi2012No ratings yet

- Counting in Dense Crowds Using Deep LearningDocument6 pagesCounting in Dense Crowds Using Deep LearningJoseph JeremyNo ratings yet

- Week 4Document13 pagesWeek 4Muneeba MehmoodNo ratings yet

- Plant Disease IdentificationDocument17 pagesPlant Disease IdentificationSuresh BalpandeNo ratings yet

- ELL784/AIP701: Assignment 3: InstructionsDocument3 pagesELL784/AIP701: Assignment 3: Instructionslovlesh royNo ratings yet

- A Recipe For Training Neural NetworksDocument18 pagesA Recipe For Training Neural Networkstxboxi23No ratings yet

- Unit 5 CNNDocument9 pagesUnit 5 CNNdhammjyoti dhawaseNo ratings yet

- Fixing Neural Network Course 2 1659759284Document30 pagesFixing Neural Network Course 2 1659759284Mahalakshmi VijayaraghavanNo ratings yet

- 01 Speed Read Tensorflow PlaygroundDocument6 pages01 Speed Read Tensorflow Playgroundmoumita deyNo ratings yet

- Institute of Engineering & ManagementDocument3 pagesInstitute of Engineering & Managementmanish pandeyNo ratings yet

- Exam2004 2 3Document22 pagesExam2004 2 3JoHn ScofieldNo ratings yet

- Oop Answer KeysDocument9 pagesOop Answer KeysJayvee TanNo ratings yet

- Designing Your Neural Networks - Towards Data ScienceDocument15 pagesDesigning Your Neural Networks - Towards Data ScienceGabriel PehlsNo ratings yet

- Cats and Dogs ClassificationDocument12 pagesCats and Dogs Classificationtechoverlord.contactNo ratings yet

- Unsupervised Learning, Neural NetworksDocument22 pagesUnsupervised Learning, Neural NetworksShraddha DubeyNo ratings yet

- A Probabilistic Theory of Deep Learning: Unit 2Document17 pagesA Probabilistic Theory of Deep Learning: Unit 2HarshitNo ratings yet

- Importany Questions Unit 3 4Document30 pagesImportany Questions Unit 3 4Mubena HussainNo ratings yet

- Ds Cheat SheetDocument21 pagesDs Cheat SheetDhruv Chawda100% (1)

- Models For Machine Learning: M. Tim JonesDocument10 pagesModels For Machine Learning: M. Tim JonesShanti GuruNo ratings yet

- Room Classification Using Machine LearningDocument16 pagesRoom Classification Using Machine LearningVARSHANo ratings yet

- A Recipe For Training Neural NetworksDocument16 pagesA Recipe For Training Neural NetworksMagnus RagnvaldssonNo ratings yet

- CS 672 - Neural Networks - Practice - Midterm - SolutionsDocument7 pagesCS 672 - Neural Networks - Practice - Midterm - SolutionsMohammed AL-waaelyNo ratings yet

- Artificial Neural NetworkDocument4 pagesArtificial Neural Networkreshma acharyaNo ratings yet

- Ai Ga1Document7 pagesAi Ga1Darshnik DeepNo ratings yet

- Neural Network Thesis 2013Document5 pagesNeural Network Thesis 2013WriteMySociologyPaperCanada100% (1)

- Neural Network Project Report.Document12 pagesNeural Network Project Report.Ashutosh LembheNo ratings yet

- Pattern RecongnitionDocument21 pagesPattern RecongnitionRiad El AbedNo ratings yet

- Basic Neural Network Tutorial - C++ Implementation and Source Code Taking InitiativeDocument45 pagesBasic Neural Network Tutorial - C++ Implementation and Source Code Taking InitiativeAbderrahmen BenyaminaNo ratings yet

- 2021 ITS665 - ISP565 - GROUP PROJECT-revMac21Document6 pages2021 ITS665 - ISP565 - GROUP PROJECT-revMac21Umairah IbrahimNo ratings yet

- Deep Learning Interview Questions - Deep Learning QuestionsDocument21 pagesDeep Learning Interview Questions - Deep Learning QuestionsheheeNo ratings yet

- CS440: HW3Document7 pagesCS440: HW3Jon MuellerNo ratings yet

- Unit 5Document8 pagesUnit 5arinkamble1711No ratings yet

- Thesis Artificial Neural NetworkDocument4 pagesThesis Artificial Neural NetworkBuyResumePaperSingapore100% (2)

- Homework 1Document2 pagesHomework 1Hendra LimNo ratings yet

- Interview QuestionsDocument67 pagesInterview Questionsvaishnav Jyothi100% (1)

- Introduction To Neural Networks AIDocument7 pagesIntroduction To Neural Networks AIheavenss2009No ratings yet

- Soft Module 1Document14 pagesSoft Module 1AMAN MUHAMMEDNo ratings yet

- Deep Learning Interview Questions: Click HereDocument45 pagesDeep Learning Interview Questions: Click HereRajachandra VoodigaNo ratings yet

- An Ingression Into Deep Learning - FPDocument17 pagesAn Ingression Into Deep Learning - FPrammilan kushwahaNo ratings yet

- Imagenet ClassificationDocument9 pagesImagenet Classificationice117No ratings yet

- Interview Questions For DS & DA (ML)Document66 pagesInterview Questions For DS & DA (ML)pratikmovie999100% (1)

- Artificial Neural Networks: Fundamentals and Applications for Decoding the Mysteries of Neural ComputationFrom EverandArtificial Neural Networks: Fundamentals and Applications for Decoding the Mysteries of Neural ComputationNo ratings yet

- California Housing DatasetDocument3 pagesCalifornia Housing DatasetAnas IshaqNo ratings yet

- Connectivity DartDocument4 pagesConnectivity Dartİlter Engin KIZILGÜNNo ratings yet

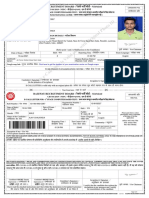

- Is Valid Only With Original Photo ID: Railway Recruitment BoardDocument3 pagesIs Valid Only With Original Photo ID: Railway Recruitment BoardajayNo ratings yet

- PECs Self Rating Questionnaire Scoring Sheet 1Document1 pagePECs Self Rating Questionnaire Scoring Sheet 1nelson segubreNo ratings yet

- Aquametro H AMT F131P D11Document36 pagesAquametro H AMT F131P D11SPIC UreaNo ratings yet

- Changing P6 Settings To Import Budget Costs From Excel Into P6Document11 pagesChanging P6 Settings To Import Budget Costs From Excel Into P6Ameer JoshiNo ratings yet

- Laboratoriya Ishi 2: Multimediya Texnologiyalari Kafedrasi Veb Dasturlashga Kirish FanidanDocument8 pagesLaboratoriya Ishi 2: Multimediya Texnologiyalari Kafedrasi Veb Dasturlashga Kirish Fanidansunnat ermatovNo ratings yet

- Girbau, S.A.: STI-30/STI-34 Parts ManualDocument36 pagesGirbau, S.A.: STI-30/STI-34 Parts ManualHUY TIN100% (1)

- Subhadip CVDocument3 pagesSubhadip CVToufik HossainNo ratings yet

- Modbus RS485 Troubleshooting Quick ReferenceDocument16 pagesModbus RS485 Troubleshooting Quick ReferenceYashNo ratings yet

- Unit-I: Register TransferDocument79 pagesUnit-I: Register TransferHarshitNo ratings yet

- ANSI-A156-29-2001 Exit Locks Exit AlarmsDocument12 pagesANSI-A156-29-2001 Exit Locks Exit AlarmsAhmed AbidNo ratings yet

- Text Ib Cs Java EnabledDocument406 pagesText Ib Cs Java Enabledsushma_2No ratings yet

- Iergo - 2007Document49 pagesIergo - 2007vagabond4uNo ratings yet

- Planning Data Warehouse InfrastructureDocument21 pagesPlanning Data Warehouse InfrastructureRichie PooNo ratings yet

- SDCVDocument3 pagesSDCVTania Camila Cristancho CarbonellNo ratings yet

- 1988 Motorola Annual Report PDFDocument40 pages1988 Motorola Annual Report PDFmrinal1690No ratings yet

- Lesson 4 - Converting Time MeasurementsDocument3 pagesLesson 4 - Converting Time MeasurementsGeraldine Carisma AustriaNo ratings yet

- Industrial Engineering KTU M TechDocument7 pagesIndustrial Engineering KTU M Techsreejan1111No ratings yet

- Ims DBDocument48 pagesIms DBAle GomesNo ratings yet

- GE Dash Responder - Service ManualDocument100 pagesGE Dash Responder - Service ManualРинат ЖахинNo ratings yet

- Basics of InternetDocument23 pagesBasics of InternetRambir SinghNo ratings yet

- EASA Mod 5 BK 4 Electron 2Document44 pagesEASA Mod 5 BK 4 Electron 2aviNo ratings yet

- DDWD2013 Project-MISDocument9 pagesDDWD2013 Project-MISirsyadiskandarNo ratings yet

- 30 Crunchyroll HitsDocument4 pages30 Crunchyroll Hitsgustavovieira98762No ratings yet

- DataSunrise Database Security Suite User GuideDocument232 pagesDataSunrise Database Security Suite User Guidebcalderón_22No ratings yet

- Preliminary: (MS-OXPROPS) : Office Exchange Protocols Master Property List SpecificationDocument226 pagesPreliminary: (MS-OXPROPS) : Office Exchange Protocols Master Property List SpecificationrsebbenNo ratings yet

- Curriculum Vitae: Informations GénéralesDocument5 pagesCurriculum Vitae: Informations GénéralesJalel SaidiNo ratings yet

WS08

WS08

Uploaded by

buttersotyughCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

WS08

WS08

Uploaded by

buttersotyughCopyright:

Available Formats

SEA100- Workshop 8

Total Mark: 10 marks

Submission file(s): - WS08.docx

Please work in groups to complete this lab. This lab is worth 2.5% of the total course grade and will be evaluated through your written submission as well as the lab demo. During the lab demo, group members are randomly selected to explain the submitted solution. Group members not present during the lab demo will lose the demo mark.

Please submit the submission file(s) through Blackboard. Only one group member should submit, and only the last submission will be marked.

Part I: Neural Network Playground

1- Visit the following link and familiarize yourself with the settings:

A Neural Network playground

http://playground.tensorflow.org/

Note that you can change the data, the number of hidden layers, the number of neurons in each hidden layer, and the training parameters. The visualizations show how well the network learns the binary classification task for the given dataset.

2- Check “Show test data” and “Discretize output”. Fill out Table 1 following the example. Keep all settings not mentioned in the table unchanged.

3- Answer the following questions based on your findings above.

What type of problem can a single neuron (0 hidden layers) learn to solve?

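ANSWER: A single neuron (no hidden layers) can only solve linearly separable problems, in which a straight line (a hyperplane in higher dimensions) can separate the classes in the input feature space. The neuron computes a weighted sum of its inputs plus a bias and passes it through an activation function, so its decision boundary, where that sum crosses a fixed threshold, is a straight line. With only X1 and X2 as inputs it can therefore separate the playground's two-cluster Gaussian dataset but not the circle, exclusive-or, or spiral datasets, unless non-linear input features such as X1² are enabled.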
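To see the linear-separability limit concretely, the sketch below trains one neuron with a step activation using the classic perceptron learning rule. This is a stand-in for the playground's gradient-trained neuron, not a reproduction of it, and the two four-point datasets are hypothetical: logical AND, which a straight line can separate, and XOR, which no straight line can.

```python
"""Single-neuron (perceptron) sketch: AND is learnable, XOR is not."""

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def predict(w, b, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data, max_epochs=100, lr=1.0):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            err = y - predict(w, b, x)
            if err != 0:
                mistakes += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if mistakes == 0:  # a full pass with no errors: a separating line was found
            return w, b, True
    return w, b, False


def accuracy(w, b, data):
    """Fraction of points the neuron classifies correctly."""
    return sum(predict(w, b, x) == y for x, y in data) / len(data)


if __name__ == "__main__":
    for name, data in (("AND", AND), ("XOR", XOR)):
        w, b, converged = train_perceptron(data)
        print(name, "converged:", converged, "accuracy:", accuracy(w, b, data))
```

By the perceptron convergence theorem the AND run stops after a finite number of passes, whereas the XOR run always exhausts `max_epochs`, because no line classifies all four XOR points correctly.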

When are hidden layers needed?

SEA100- Workshop 8 Page 1 of 10

ANSWER: Hidden layers are needed when the classes are not linearly separable or, more generally, when the relationship between the inputs and the output is complex and non-linear. Each hidden neuron learns its own linear boundary, and the following layers combine these boundaries (through non-linear activations) into curved or piecewise shapes, so the network can build up hierarchical representations of the data and capture more intricate patterns.
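As a minimal illustration of why one hidden layer is enough for XOR, the hypothetical network below uses hand-picked weights rather than trained ones: one hidden unit acts as OR, the other as NAND, and the output unit ANDs them. Each hidden unit draws its own straight line, and the output layer combines the two half-planes into a region that no single line can produce.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def xor_net(x1, x2):
    """2-2-1 network with hand-set weights (illustrative, not learned)."""
    h_or = step(x1 + x2 - 0.5)        # fires when at least one input is 1
    h_nand = step(-x1 - x2 + 1.5)     # fires unless both inputs are 1
    return step(h_or + h_nand - 1.5)  # fires only when both hidden units fire


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, "->", xor_net(x1, x2))
```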

What is the effect of increasing neurons or hidden layers?

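ANSWER: Adding neurons or hidden layers increases the capacity of the network: it has more parameters and can represent more complex decision boundaries. This can improve how well it learns complex patterns and relationships in the data, but it also makes training slower and raises the risk of overfitting, especially when the dataset is small or noisy.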
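One rough way to quantify the added capacity is to count trainable parameters. The sketch assumes a fully connected network with the playground's two default input features (X1 and X2) and a single output neuron; each layer contributes one weight per connection plus one bias per neuron.

```python
def count_parameters(layer_sizes):
    """Weights + biases of a fully connected network.

    layer_sizes lists the width of every layer, inputs first and output last,
    e.g. [2, 4, 2, 1] for 2 inputs, hidden layers of 4 and 2 neurons, 1 output.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


if __name__ == "__main__":
    for sizes in ([2, 1], [2, 1, 1], [2, 2, 1], [2, 4, 1], [2, 4, 2, 1]):
        print(sizes, count_parameters(sizes))
```

These are the structures used in Table 1: going from 0 hidden layers to the 4-2 network raises the count from 3 to 25 parameters, which lets the network carve more complex boundaries but also makes it easier to overfit.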

Is the activation function important?

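ANSWER: Yes. The activation function determines each neuron's output and therefore the overall behavior of the network. Non-linear activation functions such as Tanh, Sigmoid, and ReLU are what allow the network to model non-linear relationships; without them, any stack of layers reduces to a single linear transformation. The choice also affects how quickly and reliably the network trains: in Table 1, the same 4-2 network reached a test loss of 0.001 with Tanh and 0.002 with ReLU but was still at 0.475 with Sigmoid after 543 epochs. The activation function should therefore be chosen to suit the problem and the network architecture.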
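The role of non-linearity can be checked numerically. Without an activation function, two stacked linear layers W2(W1 x + b1) + b2 are exactly one linear layer with weights W2 W1 and bias W2 b1 + b2, so the extra layer adds no expressive power. The sketch uses small hypothetical integer weights so the equality is exact.

```python
def matvec(W, x):
    """Matrix-vector product W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Matrix product A B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def add(u, v):
    """Element-wise vector sum."""
    return [a + b for a, b in zip(u, v)]


def two_linear_layers(x, W1, b1, W2, b2):
    """Two layers with no activation function between them."""
    return add(matvec(W2, add(matvec(W1, x), b1)), b2)


def single_equivalent_layer(x, W1, b1, W2, b2):
    """The same map written as one layer: (W2 W1) x + (W2 b1 + b2)."""
    return add(matvec(matmul(W2, W1), x), add(matvec(W2, b1), b2))


# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output
W1 = [[1, 2], [3, -1], [0, 1]]
b1 = [1, 0, -2]
W2 = [[2, -1, 1]]
b2 = [0.5]

if __name__ == "__main__":
    for x in ([2, 3], [-1, 4], [0, 0]):
        print(two_linear_layers(x, W1, b1, W2, b2), single_equivalent_layer(x, W1, b1, W2, b2))
```

Inserting a non-linear function such as tanh between the two layers breaks this identity, which is what lets hidden layers bend the decision boundary.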

How does the training loss change as learning progresses (increasing epochs)?

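ANSWER: Training loss generally decreases as the number of epochs increases, quickly at first and then more slowly until it levels off, as the network fits the training data. Overfitting does not make the training loss rise; memorizing noise in the training data pushes the training loss even lower. A training loss that increases or oscillates usually points to a learning rate that is too high. In Table 1, the training loss after more than 500 epochs was lower than at low epochs for every configuration except the network with a single hidden neuron.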
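A toy illustration of how the learning rate shapes the training-loss curve, assuming a hypothetical one-weight linear model y = w·x fitted by plain gradient descent to three points on the line y = 2x (not playground data). With a small learning rate the training loss falls on every epoch; with a learning rate that is too large, each update overshoots and the training loss grows.

```python
XS = (1.0, 2.0, 3.0)
YS = (2.0, 4.0, 6.0)  # hypothetical data on the line y = 2x


def mse(w):
    """Mean squared error of the model y = w * x on the toy data."""
    return sum((w * x - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def mse_grad(w):
    """Derivative of mse with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(XS, YS)) / len(XS)


def training_losses(lr, epochs=20, w=0.0):
    """Run gradient descent and record the training loss at each epoch."""
    losses = []
    for _ in range(epochs):
        losses.append(mse(w))
        w -= lr * mse_grad(w)
    return losses


if __name__ == "__main__":
    small, large = training_losses(lr=0.05), training_losses(lr=0.25)
    print("lr=0.05:", [round(v, 4) for v in small[:5]])
    print("lr=0.25:", [round(v, 1) for v in large[:5]])
```

For this data each step multiplies the error (w - 2) by 1 - 2·lr·mean(x²), so gradient descent is stable only for lr below 1/mean(x²) = 3/14 ≈ 0.214, which is why 0.25 diverges.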

How does the test loss change as learning progresses?

ANSWER: The test loss usually decreases along with the training loss at first, although it tends to stay somewhat higher because the test points are not used for training. Monitoring it shows whether the model generalizes to unseen data: if the test loss starts increasing while the training loss keeps decreasing, the model is overfitting and is unlikely to perform well on new data. Regularization (the playground offers L1 and L2) and stopping training once the held-out loss stops improving help avoid overfitting. In practice, a separate validation set is monitored for this purpose so that the test set remains an unbiased final check.
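Monitoring held-out loss is often automated with patience-based early stopping, one common strategy sketched below: remember the epoch with the lowest held-out loss and stop once the loss has failed to improve for `patience` consecutive epochs. The function name and the loss curve are hypothetical.

```python
def early_stopping(held_out_losses, patience=3):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch held-out losses."""
    best_loss, best_epoch, epochs_without_improvement = float("inf"), 0, 0
    for epoch, loss in enumerate(held_out_losses):
        if loss < best_loss:
            best_loss, best_epoch, epochs_without_improvement = loss, epoch, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch  # stop here; keep the weights from best_epoch
    return len(held_out_losses) - 1, best_epoch  # never triggered: ran every epoch


# Hypothetical curve: improves until epoch 3, then rises as the model overfits
losses = [0.50, 0.40, 0.32, 0.30, 0.31, 0.33, 0.36, 0.40]

if __name__ == "__main__":
    print(early_stopping(losses))
```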

Part II: InceptionV3 Playground

4- InceptionV3 is a convolutional network that is trained on ImageNet. Do some research online to answer the following questions. Include the links to your resources.

How many images is the ImageNet dataset composed of? How many in the training subset?

The full ImageNet dataset contains over 14 million images in more than 20,000 categories (classes).


The training subset used for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC 2012), on which InceptionV3 is trained, contains about 1.28 million images in 1,000 classes. Source: ImageNet

What is the problem type solved by InceptionV3 (regression, classification, or clustering)?

InceptionV3 solves a classification problem: it assigns each input image to one of a fixed set of object categories (classes) based on its visual content.
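In a classification network the last layer outputs one score per class, a softmax turns the scores into probabilities, and the highest-probability class is the prediction. The sketch below shows that final step with three hypothetical classes and made-up scores; InceptionV3 itself scores its 1,000 ImageNet classes.

```python
import math


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, class_names):
    """Return the most probable class name and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


if __name__ == "__main__":
    names = ["banana", "golden retriever", "sports car"]  # hypothetical subset
    print(classify([4.0, 1.0, 0.5], names))
```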

How many object categories can InceptionV3 identify? Give a few examples.

InceptionV3 identifies 1,000 object categories: the classes of the ImageNet challenge (ILSVRC) subset it is trained on, not all of the 20,000+ categories in the full ImageNet dataset. Many of the classes are fine-grained, for example "golden retriever," "tabby cat," "sports car," "mountain bike," "airliner," and "banana."

What is the accuracy of InceptionV3 on ImageNet dataset?

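InceptionV3 was among the most accurate models on ImageNet when it was introduced (2015), although newer architectures have since surpassed it. For a single model evaluated on a single crop, its reported top-5 error is 5.6% (top-1 error 21.2%): the correct label is among the model's five highest-scoring predictions about 94.4% of the time. Ensembles of several InceptionV3 models evaluated on multiple crops lower the top-5 error to roughly 3.5%.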

Source: Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning
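Top-k error counts an image as a miss only when its true label is absent from the model's k highest-scoring classes, so top-5 accuracy is 1 minus top-5 error. The sketch computes it for hypothetical scores over six classes; ImageNet evaluation applies the same idea with k = 5 over 1,000 classes.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of images whose true label is not among the k highest scores.

    scores: one list of per-class scores per image.
    labels: the index of the true class for each image.
    """
    misses = 0
    for image_scores, true_label in zip(scores, labels):
        ranked = sorted(range(len(image_scores)), key=lambda c: image_scores[c], reverse=True)
        if true_label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Hypothetical scores for 3 images over 6 classes
SCORES = [
    [0.10, 0.50, 0.20, 0.05, 0.10, 0.05],
    [0.30, 0.10, 0.25, 0.20, 0.10, 0.05],
    [0.05, 0.05, 0.10, 0.10, 0.30, 0.40],
]
LABELS = [1, 2, 0]

if __name__ == "__main__":
    for k in (1, 2):
        print(f"top-{k} error: {top_k_error(SCORES, LABELS, k):.3f}")
```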

5- The following link lets you see how it works on another dataset of images (ICONIC200).

https://convnetplayground.fastforwardlabs.com/#/

Fill out Table 2 similar to the example given.

6- Answer the following questions based on your findings above.

How are the top 15 images selected?

The top 15 images appear to be chosen by similarity search: InceptionV3 converts the input image and every image in the dataset into feature vectors (the activations of one of its layers), compares the query's vector with each of the others, and returns the 15 most similar images, ranked from most to least similar.
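The retrieval step described above can be sketched as a nearest-neighbour search over feature vectors. This is an assumption about how the playground works, not its actual code: the tiny 3-dimensional vectors and image names are hypothetical stand-ins for InceptionV3 layer activations, and cosine similarity is assumed as the similarity measure.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k_similar(query, gallery, k=15):
    """Rank gallery images by similarity to the query and return the best k."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical feature vectors; real CNN features have many more dimensions
GALLERY = {
    "banana_1": [0.9, 0.1, 0.0],
    "banana_2": [0.8, 0.2, 0.1],
    "sedan_1": [0.1, 0.9, 0.2],
    "beetle_1": [0.0, 0.2, 0.9],
}

if __name__ == "__main__":
    print(top_k_similar([1.0, 0.1, 0.0], GALLERY, k=2))
```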

What does the “search result score” indicate?

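The "search result score" appears to reflect how many of the top 15 retrieved images belong to the same category as the input image, so a higher score means more of the results are correct matches. It is reported separately from the similarity values, which measure how close each retrieved image is to the input image.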


Does a high similarity to the top 15 images correlate with high search result score?

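Not necessarily, based on our results. In Table 2, Banana had the highest search result score (100.0%) even though its similarity values (0.39 to 0.56) were the lowest of the four categories, while Beetle had the highest similarity values (0.51 to 0.60) but a score of only 63.3%. A high raw similarity therefore does not guarantee a high search result score; what seems to matter is whether the retrieved images belong to the same category as the input image.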
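One way to check this question against Table 2 is to compute the Pearson correlation between the search result score and each similarity column, using the four rows recorded in the table. With only four data points, the result hints at a trend rather than establishing one.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Values from Table 2 (Banana, Beetle, EmpireStateBuilding, Sedan)
score = [100.0, 63.3, 51.7, 51.7]   # search result score, %
highest = [0.56, 0.60, 0.57, 0.58]  # highest similarity
lowest = [0.39, 0.51, 0.50, 0.50]   # lowest similarity among the top 15

if __name__ == "__main__":
    print("score vs highest similarity:", round(pearson(score, highest), 2))
    print("score vs lowest similarity:", round(pearson(score, lowest), 2))
```

For these four rows both coefficients come out negative.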

In your opinion, which images are easier to identify? In other words, what attributes make it more likely to have a high search result score?

Images with clear, distinct objects in the foreground, minimal clutter or background noise, and good lighting conditions are likely to have a high search result score. High-resolution images with well-defined shapes and textures would also be easier to identify.

In your opinion, which images are harder to identify? In other words, what attributes make it more likely to have a low search result score?

Images with complex backgrounds, occlusions, or multiple objects in close proximity may be harder to identify. Low-resolution images with blurry or ambiguous content would also likely have a lower search result score.

Part III: Group work

7- Complete this declaration by adding your names:

We, Group 3 (Samuel Asefa, Fathima Shajahan, Shravan Kizhakkittil, Yishak Abraham), declare that the attached assignment is our own work in accordance with the Seneca Academic Policy. We have not copied any part of this assignment, manually or electronically, from any other source, including websites, unless specified as references. We have not distributed our work to other students.

8- Specify what each member has done towards the completion of this work:

| No. | Name | Task(s) |
|---|---|---|
| 1 | Samuel Asefa | Helped the group complete Part I |
| 2 | Fathima Shajahan | Helped the group complete Part I |
| 3 | Shravan Kizhakkittil | Helped the group complete Part II |
| 4 | Yishak Abraham | Helped the group complete Part II |


Table 1: Neural Network Playground

| Structure | Activation | Epoch (low) | Training loss (low) | Test loss (low) | Epoch (>500) | Training loss (>500) | Test loss (>500) |
|---|---|---|---|---|---|---|---|
| 2 hidden layers (4 neurons in 1st, 2 in 2nd) | Tanh | 29 | 0.165 | 0.218 | 540 | 0.001 | 0.001 |
| 1 hidden layer (4 neurons) | Tanh | 15 | 0.293 | 0.308 | 539 | 0.006 | 0.015 |
| 1 hidden layer (1 neuron) | Tanh | 15 | 0.293 | 0.308 | 529 | 0.384 | 0.401 |
| 1 hidden layer (2 neurons) | Tanh | 22 | 0.466 | 0.467 | 546 | 0.173 | 0.254 |
| 0 hidden layers | Tanh | 20 | 0.002 | 0.002 | 539 | 0.000 | 0.000 |
| 1 hidden layer (2 neurons) | Tanh | 18 | 0.416 | 0.450 | 534 | 0.263 | 0.299 |
| 1 hidden layer (4 neurons) | Tanh | 16 | 0.261 | 0.273 | 537 | 0.010 | 0.018 |
| 2 hidden layers (4 neurons in 1st, 2 in 2nd) | Sigmoid | 14 | 0.500 | 0.500 | 543 | 0.456 | 0.475 |
| 2 hidden layers (4 neurons in 1st, 2 in 2nd) | ReLU | 14 | 0.425 | 0.430 | 545 | 0.000 | 0.002 |

[Data set and training/test visualization screenshots not reproduced.]

Table 2. InceptionV3

| Category | Search result score | Highest similarity (dst) | Lowest similarity (among top 15) |
|---|---|---|---|
| Banana | 100.0% | 0.56 | 0.39 |
| Beetle | 63.3% | 0.60 | 0.51 |
| EmpireStateBuilding | 51.7% | 0.57 | 0.50 |
| Sedan | 51.7% | 0.58 | 0.50 |

[Selected-image thumbnails not reproduced.]
