

Conference Paper · August 2019

DOI: 10.1109/ICSIGSYS.2019.8811070


The 2019 IEEE International Conference on Signals and Systems (ICSigSys)

The Study of Baby Crying Analysis Using MFCC and LFCC in Different Classification Methods

Sita Purnama Dewi, Faculty of Electrical Engineering, Telkom University, Bandung, Indonesia, sittadewii@student.telkomuniversity.ac.id

Anggunmeka Luhur Prasasti, Faculty of Electrical Engineering, Telkom University, Bandung, Indonesia, anggunmeka@telkomuniversity.ac.id

Budhi Irawan, Faculty of Electrical Engineering, Telkom University, Bandung, Indonesia, budhiirawan@telkomuniversity.ac.id

Abstract— Much research is now being done on baby crying detection, for many purposes. Correctly interpreting a baby's cry matters for medical reasons, because it tells caregivers how to treat the baby well. Babies within the first three months of age use the Dunstan Baby Language (DBL) to communicate. According to several studies, babies use five words to express their needs: “Neh” (I am hungry), “Eh” (a burp is needed), “Owh/Oah” (fatigue), “Eair/Eargghh” (cramps), and “Heh” (physical discomfort; feeling hot or wet). Smart home technology also uses baby crying detection to monitor the baby. Detecting a crying baby involves three stages: preprocessing, feature extraction, and classification. Popular feature extraction methods for voice or sound recognition are the Mel Frequency Cepstral Coefficient (MFCC) and the Linear Frequency Cepstral Coefficient (LFCC). This study analyzes both methods to determine when each is appropriate. The classification methods (KNN classification, Vector Quantization, and Simple Neural Network) affect how accurately baby crying is detected and recognized. When the sample data are female voices, KNN classification with LFCC is more accurate than KNN with MFCC. With baby voices, the two feature extraction methods show no significant difference in accuracy.

Keywords— DBL, MFCC, LFCC, KNN, VQ.

I. INTRODUCTION

Research on baby crying covers two tasks: detecting the sound of a crying baby, and recognizing or identifying the baby's need when he cries (baby crying translation). Smart home technology uses baby crying detection to make monitoring the baby easy. Parents do not need to keep checking the CCTV when the baby is at home with a babysitter; instead, they receive an automatic notification when their baby cries. For medical purposes, it is important to know what the baby needs from his crying. Before crying, the baby tries to communicate in a specific language known as the Dunstan Baby Language (DBL), whose sounds carry meanings such as “I am hungry” and “I am sleepy”. This baby language is grouped into five meanings that serve as a universal language of babies. The sound of a crying baby contains a lot of information about his emotional and physical condition, as well as about the baby's identity. Priscilla Dunstan found that infants within the first three months of age use a proto-language of five words to express their needs [1]. These five words are “Neh” (hungry), “Eh” (need to burp), “Owh/Oah” (fatigue), “Eair/Eargghh” (cramps), and “Heh” (physical discomfort; feeling hot or wet). The fundamental frequency of baby crying ranges from 250 Hz to 600 Hz [2].

Crying baby detection based on the Dunstan Baby Language (DBL) has three main stages. The first is preprocessing, which normalizes all sound data. The second is feature extraction, and the last is classification. The popular feature extraction methods for audio processing are MFCC and LFCC, and both are based on the frequency content of the sound. Many methods have been used for classification. This study analyzes KNN classification, Vector Quantization (VQ), and Simple Neural Network (SNN) to identify the appropriate conditions for each combination of feature extraction and classification method.

II. BASIC THEORY

A. Speech Signal

Speech signals are formed starting at the larynx (where the vocal cords are located) and ending at the mouth. Speech or voice signals are categorized as voiced or unvoiced. In unvoiced speech, the vocal cords do not vibrate. In voiced speech, the vocal cords vibrate and produce glottal pulses. Pitch is the fundamental frequency of the glottis [3]. The human voice occupies a low-frequency range, with a fundamental frequency of about 220 Hz for women and 130 Hz for men, and the first formant used for vocal discrimination lies below 1000 Hz [4].

Fig. 1. (a) Spectrogram of a baby crying signal; (b) Spectrogram of a speech sound signal

Adult voices and the sound of crying babies have both similarities and differences.
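The pitch figures above are about 130 Hz for men, about 220 Hz for women, and 250-600 Hz for baby cries [2]. To make that gap concrete, the sketch below estimates the fundamental frequency of a single frame by picking the autocorrelation peak. The autocorrelation method, the 80-800 Hz search range, and the name `estimate_f0` are assumptions made for illustration. The reviewed systems do not necessarily use them.

```python
import math


def estimate_f0(frame, sample_rate, f_min=80.0, f_max=800.0):
    """Estimate the fundamental frequency (pitch) of one frame, in Hz.

    Hypothetical helper: returns sample_rate / lag for the lag with the
    largest autocorrelation inside the assumed [f_min, f_max] pitch range.
    """
    min_lag = max(1, int(sample_rate / f_max))               # shortest period considered
    max_lag = min(int(sample_rate / f_min), len(frame) - 1)  # longest period considered
    best_lag, best_score = min_lag, -math.inf
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation of the frame at this lag
        score = sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    fs = 8000
    # 40 ms synthetic tone at 400 Hz, inside the 250-600 Hz range cited for baby cries
    tone = [math.sin(2 * math.pi * 400 * n / fs) for n in range(int(0.04 * fs))]
    print(estimate_f0(tone, fs))  # prints 400.0
```

This is only an illustration of the pitch difference. A real detector would also need voicing decisions and a more robust pitch tracker, because raw autocorrelation peaks can lock onto multiples of the true period.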

978-1-7281-2177-2/19/$31.00 ©2019 IEEE 19


Previous studies found that the two differ in the character of the sound, especially in fundamental frequency (pitch), which is higher for crying babies. Crying babies have short, thin vocal cords, so their spectrograms show tidy, regular patterns [5]. A speech signal classification system should be able to categorize different types of input voice, especially to detect the type of speech, noise, or music genre [3].

B. Dunstan Baby Language (DBL)

Priscilla Dunstan proposed a way to identify the meaning of a baby's cry, called the Dunstan Baby Language [2]. There are five types of universal crying sounds, and their meanings are as follows:

“Neh”: The sound “Neh” comes from sucking, with the tongue pushed into the mouth. It means that the baby is hungry.

“Owh/Oah”: The sound “Owh” resembles a person yawning. It means that the baby is sleepy.

“Heh”: The sound “Heh” is the infant's response to burning or itching. It means that the baby is uncomfortable.

“Eairh/Eargghh”: The sound “Eairh” occurs when the baby has not burped, so air bubbles enter the stomach and cannot be released. It means that the baby is having gastric problems.

“Eh”: The sound “Eh” occurs when air is trapped in the chest and the air bubbles cannot escape through the mouth. It means that the baby wants to burp.

C. Feature Extraction Method

After pre-processing, the next step in a speech recognition system is feature extraction. This step produces audio features that distinguish one sound from another. Audio features are extracted by dividing the input signal into frames 10-40 ms long and then calculating each feature value [3]. Many speech recognition studies use MFCC feature extraction because it is considered similar to the way human hearing works [6]. Other studies use LFCC because it is conceptually similar to MFCC.

Fig. 3. (a) Linear filter-bank; (b) Mel filter-bank [7]

Mel-Frequency Cepstral Coefficient (MFCC)

MFCC is a feature extraction method that converts sound into a voice signal vector. It represents the short-term power spectrum of the signal.

The MFCC concept follows human hearing, which depends on how the ear's critical bandwidth varies with frequency. The filters are spaced linearly below 1000 Hz and logarithmically above it. The MFCC process starts by dividing the sound signal into frames 10-40 milliseconds long, a step called frame blocking. Each frame is then windowed with a Hamming window to reduce the discontinuity (spectral leakage) effects that framing introduces at the frame edges. In equation (1), w is the window function and N is the number of samples in one frame:

$$w(n) = 0.54 - 0.46\cos\left(\frac{2\pi n}{N-1}\right), \quad 0 \le n \le N-1 \qquad (1)$$
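The frame blocking and Hamming windowing steps described above can be sketched as follows. The 25 ms frame length and 10 ms hop are assumed values chosen inside the 10-40 ms range given above. They are not parameters reported by the reviewed studies, and the function names are hypothetical.

```python
import math


def hamming(N):
    """Hamming window of equation (1): w(n) = 0.54 - 0.46 cos(2*pi*n / (N - 1))."""
    if N == 1:
        return [1.0]  # degenerate case: avoid dividing by N - 1 = 0
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (N - 1)) for n in range(N)]


def frame_signal(signal, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Frame blocking followed by Hamming windowing.

    Returns a list of windowed frames. Samples at the end that do not fill
    a whole frame are dropped. frame_ms and hop_ms are assumed values.
    """
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop_len = int(round(sample_rate * hop_ms / 1000.0))
    window = hamming(frame_len)
    frames = []
    start = 0
    while start + frame_len <= len(signal):
        chunk = signal[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
        start += hop_len
    return frames


if __name__ == "__main__":
    one_second = [1.0] * 8000              # 1 s of a constant signal at 8 kHz
    frames = frame_signal(one_second, 8000)
    print(len(frames), len(frames[0]))     # prints: 98 200
```

At 8 kHz this gives 200-sample frames that advance by 80 samples. Each windowed frame would then go to the FFT step.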

The windowed frames are then passed through the Fast Fourier Transform (FFT), which converts the signal from the time domain into the frequency domain. A filter bank is applied to the frequency-domain signal, which maps it onto the Mel frequency scale by equation (2):

$$\mathrm{Mel}(f) = 2595\log_{10}\left(1 + \frac{f}{700}\right) \qquad (2)$$
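Equation (2) and its inverse can be written directly. The inverse is needed to place Mel-spaced filter edges back on the Hz axis. This is a minimal sketch with hypothetical function names.

```python
import math


def hz_to_mel(f_hz):
    """Equation (2): Mel(f) = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of equation (2), used to map Mel-spaced points back to Hz."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


if __name__ == "__main__":
    for f in (250.0, 600.0, 1000.0, 4000.0):
        print(f, round(hz_to_mel(f), 1))
```

With this form of the Mel scale, 1000 Hz maps to almost exactly 1000 mel.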

MFCC uses a Mel-scale filter bank made of triangular band-pass filters spaced logarithmically [8], so filters at higher frequencies have wider bandwidths. The final stage of MFCC is the Discrete Cosine Transform (DCT). It converts the log filter-bank outputs back into the time (cepstral) domain so that the signal is well represented. The results form an acoustic vector whose elements are the Mel Frequency Cepstral Coefficients.
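The filter-bank and DCT stages can be sketched in a few functions. Following Fig. 3, the same code builds either a Mel filter bank (MFCC) or a linear filter bank (LFCC), because the spacing of the triangle edges is the only difference. The DCT follows the common form C_m = Σ_k log(E_k) cos(m(k - 1/2)π/N), the form the paper writes as equation (4). Equal spacing on the chosen scale, the 1e-12 floor before the logarithm, and all function names are assumptions for this sketch, not details taken from the reviewed systems.

```python
import math


def hz_to_mel(f_hz):
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def filter_edges(num_filters, f_max, scale="mel"):
    """Return num_filters + 2 band edges (Hz) equally spaced on the chosen scale.

    scale="mel"    -> Mel filter bank, as in MFCC
    scale="linear" -> linear filter bank, as in LFCC
    """
    if scale == "mel":
        top = hz_to_mel(f_max)
        return [mel_to_hz(top * i / (num_filters + 1)) for i in range(num_filters + 2)]
    if scale == "linear":
        return [f_max * i / (num_filters + 1) for i in range(num_filters + 2)]
    raise ValueError("scale must be 'mel' or 'linear'")


def filter_bank_energies(power_spectrum, sample_rate, num_filters, scale="mel"):
    """Apply triangular band-pass filters to a one-sided power spectrum.

    power_spectrum[j] is the power at frequency j * sample_rate / n_fft for
    j = 0 .. n_fft // 2, i.e. the magnitude-squared output of the FFT step.
    """
    n_fft = 2 * (len(power_spectrum) - 1)
    freqs = [j * sample_rate / n_fft for j in range(len(power_spectrum))]
    edges = filter_edges(num_filters, sample_rate / 2.0, scale)
    energies = []
    for k in range(1, num_filters + 1):
        lo, centre, hi = edges[k - 1], edges[k], edges[k + 1]
        total = 0.0
        for f, p in zip(freqs, power_spectrum):
            if lo < f <= centre:
                total += p * (f - lo) / (centre - lo)   # rising side of the triangle
            elif centre < f < hi:
                total += p * (hi - f) / (hi - centre)   # falling side of the triangle
        energies.append(total)
    return energies


def cepstral_coefficients(energies, num_ceps):
    """DCT of the log filter energies: C_m = sum_k log(E_k) cos(m (k - 1/2) pi / N)."""
    n = len(energies)
    log_e = [math.log(max(e, 1e-12)) for e in energies]  # floor avoids log(0)
    return [
        sum(log_e[k - 1] * math.cos(m * (k - 0.5) * math.pi / n) for k in range(1, n + 1))
        for m in range(1, num_ceps + 1)
    ]


if __name__ == "__main__":
    flat = [1.0] * 257  # one-sided spectrum of a 512-point FFT, all bins equal
    mel = filter_bank_energies(flat, 8000, 20, "mel")
    lin = filter_bank_energies(flat, 8000, 20, "linear")
    print([round(e, 1) for e in mel])  # widths, and so energies, grow with frequency
    print([round(e, 1) for e in lin])  # roughly equal energies
    print(cepstral_coefficients(mel, 12))
```

On a flat spectrum, the Mel filters collect more energy at high frequencies simply because they are wider. This is the resolution difference that the linear filter bank of LFCC avoids.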

Based on previous literature, the human ear perceives pitch non-linearly. The relationship between frequency and the Mel scale is described for frequencies above 500 Hz. In that range, listeners hear increasingly large frequency intervals as equal increases in pitch: four octaves on the Hz scale correspond to about two octaves on the Mel scale [3].

Fig. 2. Spectrogram of baby crying signals: (a) Neh; (b) Heh; (c) Eh; (d) Eairh/Eargghh; (e) Owh/Oah [1]
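The octave statement can be checked roughly by evaluating equation (2) at 500 Hz and at 8000 Hz, which is four octaves higher. The values below are computed here for illustration and rounded. They are not taken from [3].

$$\mathrm{Mel}(500) = 2595\log_{10}\left(1 + \tfrac{500}{700}\right) \approx 607.4, \qquad \mathrm{Mel}(8000) = 2595\log_{10}\left(1 + \tfrac{8000}{700}\right) \approx 2840.0$$

$$\log_2\frac{8000}{500} = 4 \ \text{octaves (Hz)}, \qquad \log_2\frac{2840.0}{607.4} \approx 2.2 \ \text{octaves (Mel)}$$

So four octaves in Hz span a little over two octaves on the Mel scale, which is consistent with the approximate statement.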


The nonlinear mapping between the Mel scale and the Hz scale is also useful for analyzing seismic signals, since speech signals and seismic signals differ only slightly. In speech recognition the filters cover the frequency range 0-22050 Hz, but in that case study the seismic signal samples were taken from the band below 500 Hz. Because the mapping function is relatively linear below 1000 Hz, MFCC does not work well enough at frequencies below 1000 Hz [9].

Linear Frequency Cepstral Coefficients (LFCC)

LFCC is a well-established feature extraction method. The process starts by breaking the audio clip into multiple segments, each consisting of a fixed number of frames. The LFCC extraction process is similar to that of MFCC [10], but LFCC uses a linear filter bank in place of the Mel filter bank. Linear filter banks work very well in the high-frequency region.

D. Classification Method

After the feature values are obtained from the feature extraction process, the chosen classification method processes them.

Vector Quantization

VQ maps a large number of vectors in a space to a fixed number of clusters, each defined by a center that is represented as a vector. VQ produces low distortion because most feature vectors are represented by a small set of vectors that match the centroids of the distribution [2].

K-Nearest Neighbor Algorithm (KNN)

KNN classifies data by comparing them with other data that already have their own labels. The classifier forms a non-linear decision boundary, which improves its performance. The Euclidean distance is a metric often used to calculate the distance between samples. For two samples x and y, the Euclidean distance between them is given by formula (3):

$$d(x, y) = \lVert x - y \rVert = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2} \qquad (3)$$

where n is the number of features that describe x and y [3].

III. SYSTEM DESIGN & OVERVIEW

The baby crying detection system focuses on monitoring the baby. It can be implemented in a smart home so that caregivers or parents can monitor their children. The system is shown in Fig. 4.

Fig. 4. System overview

The stages of the system are as follows:

1. Voice Input: The voice input consists of various kinds of children's voice signals. These signals are the digital evidence that is tested to decide whether a sound is a baby's cry. Before entering the extraction stage, the sound is pre-processed to remove noise and other unexpected sounds.
2. Feature Extraction: This stage uses digital signal processing to convert voice signals into a form the system can differentiate. MFCC and LFCC are the feature extraction methods used at this stage. The stages of MFCC are shown in Fig. 5. LFCC has similar steps, and the only difference is the type of filter bank used.
3. Classification: This stage continues from feature extraction. Each sound signal has its own characteristics, so at this stage the sound signal can be classified.
4. Data Analysis: Data analysis and matching are conducted after the sound signals are classified. At this stage, all samples and classification results are analyzed.

Fig. 5. MFCC Feature Extraction

IV. RESULT AND DISCUSSION

A previous study found that MFCC performs well enough in speech recognition but poorly when the audio contains a lot of noise [10], so it needs proper preprocessing to eliminate the noise. In an experiment classifying samples from nine speakers using MFCC and a VQ codebook, the cepstral values were calculated with 12 coefficients for the nine different voices. The database consists of 21 sound signals, 8 of them from different users and the rest from the same users, and it includes seven women's voices as well as men's voices. In a test carried out in a noisy place, MFCC had a high failure rate of 20%. The failures occurred when the sound of Speaker-8-Male was detected as Speaker-9-Female. Adding LFCC to MFCC can reduce the error rate [11]. Table I shows the error before and after adding LFCC to MFCC feature extraction for 146 speakers (73 male and 73 female). The first row is the EER with 10 common sentences, and the second row is the EER with unique sentences.

TABLE I. EER (EQUAL ERROR RATE) BEFORE AND AFTER ADDING LFCC

| Sentences | Before, male | Before, female | After, male | After, female |
|---|---|---|---|---|
| 10 common sentences | 2.63% | 7.01% | 2.28% | 3.48% |
| Unique sentences | 2.49% | 5.9% | 1.96% | 3.22% |

In one test on telephone conversations, LFCC gave relative EER improvements of 21.5% and 15.0% over MFCC in the nasal and non-nasal consonant regions [6]. A performance comparison between MFCC and LFCC on NIST SRE 2010 shows that MFCC and LFCC complement each other.
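The KNN rule with the Euclidean distance of equation (3) can be sketched as below. The training vectors stand in for MFCC or LFCC feature vectors. The tie-breaking rule, the toy data, and all names are assumptions made for this example, not details from the reviewed studies.

```python
import math
from collections import Counter


def euclidean(x, y):
    """Equation (3): square root of the summed squared feature differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def knn_predict(train_vectors, train_labels, query, k=1):
    """Assign `query` the majority label among its k nearest training vectors.

    Vote ties go to the tied label whose member is closest to the query
    (an arbitrary choice for this sketch).
    """
    ranked = sorted(zip(train_vectors, train_labels),
                    key=lambda pair: euclidean(pair[0], query))
    nearest = [label for _, label in ranked[:k]]
    counts = Counter(nearest)
    top = max(counts.values())
    for label in nearest:  # nearest-first order, so the closest tied label wins
        if counts[label] == top:
            return label


if __name__ == "__main__":
    # Toy 2-D "feature vectors"; real inputs would be MFCC or LFCC vectors.
    train = [[0.0, 0.0], [0.1, 0.2], [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]]
    labels = ["non-crying", "non-crying", "crying", "crying", "crying"]
    print(knn_predict(train, labels, [4.9, 5.0], k=1))  # prints: crying
    print(knn_predict(train, labels, [0.2, 0.1], k=3))  # prints: non-crying
```

Setting k=1 or k=3 corresponds to the K values used in the results discussed in this paper.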

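The codebook idea behind Vector Quantization can be sketched with one codebook per class. A test recording is assigned to the class whose codebook quantizes its feature vectors with the lowest average distortion. Training each codebook with plain k-means, the codebook size of 4, the toy data, and all names are assumptions for illustration. The reviewed studies do not state these details.

```python
import math
import random


def sq_dist(x, y):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def train_codebook(vectors, size, iterations=20, seed=0):
    """Train a VQ codebook of `size` codewords with plain k-means (assumed method)."""
    rng = random.Random(seed)
    codebook = [list(v) for v in rng.sample(vectors, size)]  # initial codewords
    for _ in range(iterations):
        clusters = [[] for _ in codebook]
        for v in vectors:
            nearest = min(range(len(codebook)), key=lambda i: sq_dist(v, codebook[i]))
            clusters[nearest].append(v)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous codeword
                codebook[i] = [sum(col) / len(members) for col in zip(*members)]
    return codebook


def average_distortion(vectors, codebook):
    """Mean Euclidean distance from each vector to its closest codeword."""
    total = sum(min(math.sqrt(sq_dist(v, c)) for c in codebook) for v in vectors)
    return total / len(vectors)


def vq_classify(vectors, codebooks):
    """Return the class label whose codebook quantizes `vectors` with least distortion."""
    return min(codebooks, key=lambda label: average_distortion(vectors, codebooks[label]))


if __name__ == "__main__":
    rng = random.Random(1)
    crying = [[5 + rng.gauss(0, 0.3), 5 + rng.gauss(0, 0.3)] for _ in range(40)]
    quiet = [[rng.gauss(0, 0.3), rng.gauss(0, 0.3)] for _ in range(40)]
    books = {"crying": train_codebook(crying, 4), "non-crying": train_codebook(quiet, 4)}
    print(vq_classify([[5.1, 4.9], [4.8, 5.2]], books))  # prints: crying
```

Compared with KNN, the classifier here stores only a few codewords per class instead of every training vector. This is the "small set of vectors matching the centroids" idea described in the Vector Quantization subsection.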

each other. LFCC is better than MFCC in capturing spectral B. Analyze using LFCC

in high frequency regions, such as in detecting female voice Using codebook model, LFCC and Euclidean distance,

as long as other parameters are same. It is because the female’s where the voice signal is extracted using parameters of a pitch

vocal channel is relatively shorter and its formant frequency is

but no recorded using a Vector Quantization, it yields

higher than male’s one [12].

accuracy about 93% to detect crying baby[2], The benefits of

A. Analyze using MFCC using this method is :

MFCC has some advantages in extracting feature that - It is easy to identify babies who cry and to verify the

used for analysis of baby crying classification [13], such as : use of KNN to classify infant emotions.

- It can identify the character of the sound so that it can - It results high accuracy if using euclidean distance.

determine the pattern of sound. - It provides higher accuracy in detecting emotion from

- The output vector has a small data size but does not a crying baby.

remove the noise characteristics in the extraction. - Cutting silent voice signals to produce a sound that is

- MFCC works similar to the way of a human listener more specific resulting higher accuracy.

works in giving their perceptions. - LFCC produces the same frequency with the

MFCC[16].

The test using KNN classification method with the value C. Comparison of MFCC and LFCC

K=1 when compared to the Simple Neural Network with two

There are 40 data that are baby crying and 40 data are not

hidden layers, the first layer has seven knots. The accuracy of

baby crying consisting of noise, mute sounds and baby

recognition shows in table II.

laughter as training data. then the data were tested for each of

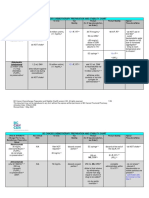

TABLE II. ACCURACY KNN VS SNN WITH MFCC the 10 data in each voice category (Crying and Non-Crying)

using the LFCC and MFCC methods using the KNN

Classification Method classification with K = 3, the results are shown in Table III.

Type of cry

KNN NN

Neh 80.00% 40.00%

TABLE III. ACCURACY LFCC VS MFCC WITH KNN

Owh 100.00% 80.00%

Heh 66.67% 100.00% Test Case LFCC MFCC

Eairh / Eh 57.14% 42.68% Crying 90% 80%

Average Accuracy 75.95% 65.67% Non-Crying 90% 90%

Average Accuracy 90% 85%

Table II is the result of MFCC feature extraction using

tuner package has several parameters for the input data. The Tests were conducted to the baby voice between the ages

training data is 85% of 4 classes (Eairh / Eh, Heh, Neh, and of 0-9 months [17]. There are noise additions such as engine

Owh) of baby crying; using KNN K = 1; and normalized noise, passers-by, and motorcycles. There are 140 training

standard deviation for the number of sample testing is 139 data with 5 categories with 28 samples each. Number of

audio[14]. Sample Rate recording = 11205 Hz; Total spectra testing data is 35 consist of 7 crying babies for every kind of

(n bands) = 40; Window time = 0.02 to 0.08 seconds; The crying. Testing was conducted to analyze performance

distance between frames (hop time) where if the voice MFCC and LFCC. There is significant performance between

recording is less than 2 seconds than set the value is 0.1 both methods, the average percentage of LFCC accuracy is

seconds hop time reduce the duration of the recording, but about 91.58% and the average percentage of MFCC accuracy

when recording more than 2 seconds, the value is 1 second is about 82.14% [15]. Recognition rate (number of

hop time reduced the duration of the recording; Pre-emphasize recognizable words / number of words presented) of each

= 0.97; Sum power = true; Liftering exponential value class of crying baby sound is shown in table IV [18].

(lifterexp) = 0.6; Spectral bandwidth (bwidth) = 10; Usecmp

= True; Number of columns in the result vector (numcep) = TABLE IV. THE RECOGNITION RATE LFCC VS MFCC

10 columns for each hop time where the number of features

Test Case LFCC MFCC

produced in the extraction process is 20 Frequency Features. Eairh/Eh 87.22% 85.11%

The average accuracy of 96.67% is found in the system if the Heh 88.19% 86.87%

parameter Wintime = 0:08; Neh 94.57% 79.89%

Owh 93.33% 80.87%

Unlike the case with the use of five types of cries by Average Accuracy 90.83% 83.19%

presenting 50 data samples consist of 40 training data samples

and 10 testing data samples for each class. The accuracy of all

It can be concluded based on Table III and Table IV that

recognition: Neh is 60%, Eh is 70%, Owh is 60%, Eairh is

percentage of using LFCC is better than MFCC although both

70%, and Heh is 70%. Where the signal was recorded for 20

sec at the hospital environment [8]. The overall average methods have accuracy values above 80% [17], It is because

accuracy is 66%. the sound of crying babies is on high-frequency region.

The use of LFCC combined with the VQ codebook using

MFCC has high accuracy using Euclidean distance BG calculation with the data collected from the sound of

parameter. This model can produce accuracy about 94% [15]. babies aged 0-6 months. 150 voice as training data that

This happens because the signal cut research sound of silence represent each of the 50 cries each category and testing data

at the beginning and end of the speech signal. The conclusion as many as 40. LFCC works well in capturing high-frequency

is that using MFCC method has high accuracy values when area so it concluded LFCC better than MFCC [18], However,

using KNN.

both these methods are considered either to be applied in

analyzing the emotions in the baby's crying.
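The "Average accuracy" rows in Tables II and IV appear to be unweighted means of the per-class values. The short sketch below recomputes them from the table entries as a consistency check and also defines the recognition rate described above. The helper names are hypothetical, and the 9-out-of-10 example is illustrative only.

```python
def recognition_rate(recognized, presented):
    """Recognition rate in percent: recognized items / presented items."""
    return 100.0 * recognized / presented


def average_accuracy(per_class):
    """Unweighted mean of per-class accuracies (the 'Average accuracy' row)."""
    return sum(per_class.values()) / len(per_class)


# Per-class values copied from Table II (KNN column) and Table IV
table_ii_knn = {"Neh": 80.00, "Owh": 100.00, "Heh": 66.67, "Eairh/Eh": 57.14}
table_iv_lfcc = {"Eairh/Eh": 87.22, "Heh": 88.19, "Neh": 94.57, "Owh": 93.33}
table_iv_mfcc = {"Eairh/Eh": 85.11, "Heh": 86.87, "Neh": 79.89, "Owh": 80.87}

if __name__ == "__main__":
    for name, table in [("Table II KNN", table_ii_knn),
                        ("Table IV LFCC", table_iv_lfcc),
                        ("Table IV MFCC", table_iv_mfcc)]:
        print(name, average_accuracy(table))
    print(recognition_rate(9, 10))  # e.g. 9 of 10 test samples recognized
```

The means come out at about 75.95, 90.83, and 83.19, which match the reported averages to rounding. An unweighted mean equals overall accuracy only when every class has the same number of test samples.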

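Several preprocessing steps come up in this discussion: normalization, pre-emphasis with the value 0.97 listed for the tuner package in [14], and cutting silence at the beginning and end of a recording. The sketch below implements them as three small functions. The frame length and energy threshold used for silence trimming are illustrative assumptions, and the function names are hypothetical. This sketch trims only the ends of a recording and does not remove unvoiced segments in the middle.

```python
def pre_emphasis(signal, coeff=0.97):
    """y[n] = x[n] - coeff * x[n - 1]; the first sample is passed through."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - coeff * signal[n - 1] for n in range(1, len(signal))]


def peak_normalize(signal):
    """Scale the signal so that its largest absolute sample becomes 1.0."""
    peak = max((abs(s) for s in signal), default=0.0)
    if peak == 0.0:
        return list(signal)  # all-zero input: nothing to scale
    return [s / peak for s in signal]


def trim_silence(signal, frame_len=160, threshold=0.01):
    """Cut low-energy frames at the beginning and end of a recording.

    A frame counts as silent when its mean squared amplitude is below
    `threshold`. frame_len=160 (20 ms at 8 kHz) and threshold=0.01 are
    illustrative values only.
    """
    frames = [signal[i:i + frame_len] for i in range(0, len(signal), frame_len)]
    energies = [sum(s * s for s in f) / len(f) for f in frames]
    loud = [i for i, e in enumerate(energies) if e >= threshold]
    if not loud:
        return []
    return signal[loud[0] * frame_len:(loud[-1] + 1) * frame_len]


if __name__ == "__main__":
    recording = [0.0] * 320 + [0.5, -0.5] * 160 + [0.0] * 320
    cleaned = pre_emphasis(trim_silence(peak_normalize(recording)))
    print(len(cleaned))  # prints: 320
```

The order used here (normalize, trim, then pre-emphasize before framing) is one reasonable design choice. The reviewed studies do not specify it.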

Equation (4) produces the Mel-scale cepstral coefficients. N is the number of Mel frequency warping filters, L is the number of Mel-scale cepstral coefficients, and E_k is the log energy at the output of the k-th filter:

$$C_m = \sum_{k=1}^{N} E_k \cos\left[m\left(k - \frac{1}{2}\right)\frac{\pi}{N}\right], \quad m = 1, 2, \ldots, L \qquad (4)$$

The baby crying experiment uses L = 19 cepstral coefficients. The resulting MFCC and LFCC cepstral plots are shown in Fig. 6 and Fig. 7, where 19 different colors represent the 19 cepstral coefficients.

Fig. 6. MFCC with 19-Cepstral

Fig. 7. LFCC with 19-Cepstral

Fig. 8 combines Fig. 6 and Fig. 7, with MFCC shown as an orange line and LFCC as a blue line. MFCC produces a less stable pattern than LFCC. For baby cries in the high-frequency region, LFCC performs better than MFCC, as Fig. 8 shows. However, the difference in accuracy is not significant for baby voices, unlike adult voices, because baby voices do not have as many distinguishing characteristics as adult voices.

Fig. 8. Final result of LFCC and MFCC

V. CONCLUSION

Infants' cries have a high fundamental frequency (pitch) because of their short, thin vocal cords. For detecting these cries automatically, LFCC feature extraction with the k-Nearest Neighbor (KNN) algorithm for classification is more effective than MFCC and the two other classifiers (SNN and VQ). LFCC uses linear cepstral coefficients, while MFCC uses a filter bank of logarithmically spaced triangular band-pass filters. Because of these filter-bank characteristics, MFCC is relatively poor for high-frequency voices such as female and baby voices, so LFCC is recommended. LFCC outperforms MFCC on female voice trial data because the female vocal tract is relatively short and its formant frequencies are relatively high. In addition, using LFCC feature extraction alongside MFCC can help reduce the error rate of MFCC.

Accuracy can be raised by preprocessing that cuts the silent parts of the signal at the beginning, during unvoiced sounds, and at the end, which makes the features more valuable and precise. MFCC is considered unsuitable in noisy conditions, but it still performs well if proper preprocessing is conducted and the voice is in a regular frequency range. The accuracy of LFCC and MFCC depends on the number of test samples used and the type of samples being tested.

REFERENCES

[1] E. Franti, I. Ispas, and M. Dascalu, “Testing the universal baby language hypothesis - automatic infant speech recognition with CNNs,” 2018 41st Int. Conf. Telecommun. Signal Process. TSP 2018, pp. 1–4, 2018.

[2] S. S. Jagtap, P. K. Kadbe, and P. N. Arotale, “System propose for be acquainted with newborn cry emotion using linear frequency cepstral coefficient,” Int. Conf. Electr. Electron. Optim. Tech. ICEEOT 2016, pp. 238–242, 2016.

[3] H. Subramanian, “Audio signal classification,” M. Tech Credit Semin. Rep., pp. 1–17, 2004.

[4] R. C. G. Smith and S. R. Price, “Modelling of human low frequency sound localization acuity demonstrates dominance of spatial variation of interaural time difference and suggests uniform just-noticeable differences in interaural time difference,” PLoS One, vol. 9, no. 2, 2014.

[5] G. Gu, X. Shen, and P. Xu, “A set of DSP system to detect baby crying,” 2018 2nd IEEE Adv. Inf. Manag. Autom. Control Conf., no. IMCEC, pp. 411–415, 2018.

[6] H. Lei and E. Lopez, “Mel, linear, and antimel frequency cepstral coefficients in broad phonetic regions for telephone speaker recognition,” Proc. Annu. Conf. Int. Speech Commun. Assoc. Interspeech, pp. 2323–2326, 2009.

[7] N. Sengupta, M. Sahidullah, and G. Saha, “Lung sound classification using cepstral-based statistical features,” Comput. Biol. Med., vol. 75, pp. 118–129, 2016.

[8] S. Bano and K. M. Ravikumar, “Decoding baby talk: A novel approach for normal infant cry signal classification,” Proc. IEEE Int. Conf. Soft-Computing Netw. Secur. ICSNS 2015, pp. 24–26, 2015.

[9] G. Jin, B. Ye, Y. Wu, and F. Qu, “Vehicle classification based on seismic signatures using convolutional neural network,” IEEE Geosci. Remote Sens. Lett., vol. PP, pp. 1–5, 2018.

[10] M. J. Alam, P. Kenny, and V. Gupta, “Tandem features for text-dependent speaker verification on the RedDots corpus,” Proc. Annu. Conf. Int. Speech Commun. Assoc. Interspeech, vol. 08–12–Sept, pp. 420–424, 2016.

[11] A. K. Singh, R. Singh, and A. Dwivedi, “Evolvement and recent research in parametric representations of speech features for automatic speaker recognition,” Int. J. Electr. Electron. Data Commun., vol. 2, no. 1, pp. 11–15, 1389.


[12] X. Zhou, D. Garcia-Romero, R. Duraiswami, C. Espy-Wilson, and S. Shamma, “Linear versus Mel frequency cepstral coefficients for speaker recognition,” pp. 559–564, 2011.

[13] S. Sharma, P. R. Myakala, R. Nalumachu, S. V. Gangashetty, and V. K. Mittal, “Acoustic analysis of infant cry signal towards automatic detection of the cause of crying,” 2017 7th Int. Conf. Affect. Comput. Intell. Interact. Work. Demos, ACIIW 2017, vol. 2018–Janua, pp. 117–122, 2018.

[14] W. S. Limantoro, C. Fatichah, and U. L. Yuhana, “Application development for recognizing type of infant's cry sound,” Proc. 2016 Int. Conf. Inf. Commun. Technol. Syst. ICTS 2016, pp. 157–161, 2017.

[15] M. Dewi Renanti, A. Buono, and W. Ananta Kusuma, “Infant cries identification by using codebook as feature matching, and MFCC as feature extraction,” J. Theor. Appl. Inf. Technol., vol. 56, no. 3, pp. 437–442, 2013.

[16] R. G. Dandage and P. R. Badadapure, “A survey on an automatic infant's cry detection using linear frequency cepstrum coefficients,” Int. J. Innov. Res. Comput. Commun. Eng., vol. 153, no. 9, pp. 975–8887, 2017.

[17] V. V. Bhagatpatil and P. V. M. Sardar, “An automatic infant's cry detection using linear frequency cepstrum coefficients (LFCC),” vol. 5, no. 12, pp. 1379–1383, 2014.

[18] R. G. Dandage and P. P. R. Badadapure, “Infant's cry detection using linear frequency cepstrum coefficients,” pp. 5377–5383, 2017.


- 07 - Artigo Do MicrofoneDocument9 pages07 - Artigo Do MicrofonelenzajrNo ratings yet

- 1 1.1 Problem Statement: Chapter OneDocument7 pages1 1.1 Problem Statement: Chapter OneGodsmiracle O. IbitayoNo ratings yet

- 1 s2.0 S1746809423000812 MainDocument12 pages1 s2.0 S1746809423000812 Mainkanthimathi chidambaramNo ratings yet

- Voice Disorder Classification Using Speech Enhancement and Deep Learning ModelsDocument18 pagesVoice Disorder Classification Using Speech Enhancement and Deep Learning ModelsGabriel Almeida AzevedoNo ratings yet

- The Application of Bionic Wavelet TransformationDocument11 pagesThe Application of Bionic Wavelet TransformationzameershahNo ratings yet

- 2019 Classification of VDDocument7 pages2019 Classification of VDHemalNo ratings yet

- 647 PDFDocument6 pages647 PDFShruthi KyNo ratings yet

- Voxyvi A System For Long-Term Audio and Video Acquisitions in NeonatalDocument15 pagesVoxyvi A System For Long-Term Audio and Video Acquisitions in NeonatalSuvarn SrivatsaNo ratings yet

- Inner Ear Malformations in Cochlear Implantation Candidates at Mother and Child Hospital of Bingerville (Cote D'ivoire)Document5 pagesInner Ear Malformations in Cochlear Implantation Candidates at Mother and Child Hospital of Bingerville (Cote D'ivoire)Phong HoàngNo ratings yet

- Sound Environments Surrounding Preterm Infants Within An Occupied Closed IncubatorDocument6 pagesSound Environments Surrounding Preterm Infants Within An Occupied Closed Incubatorsam crushNo ratings yet

- Newborn Infant Hearing Screening DMIMSUDocument12 pagesNewborn Infant Hearing Screening DMIMSUSharnie JoNo ratings yet

- Class 12 Bio Project 182Document42 pagesClass 12 Bio Project 182Amar VenkateshNo ratings yet

- Learning, Memory, and Cognitive Processes in Deaf Children Following Cochlear ImplantationDocument50 pagesLearning, Memory, and Cognitive Processes in Deaf Children Following Cochlear ImplantationSoporte CeffanNo ratings yet

- Bjorl: Auditory Neuropathy / Auditory Dyssynchrony in Children With Cochlear ImplantsDocument7 pagesBjorl: Auditory Neuropathy / Auditory Dyssynchrony in Children With Cochlear ImplantsTamizaje Auditivo Luz FigueroaNo ratings yet

- Neural Modeling of Speech Processing and Speech Learning: An IntroductionFrom EverandNeural Modeling of Speech Processing and Speech Learning: An IntroductionNo ratings yet

- Electronic Fetal MonitoringFrom EverandElectronic Fetal MonitoringXiaohui GuoNo ratings yet

- Projeto Caixa Acustica X-PRO-15Document2 pagesProjeto Caixa Acustica X-PRO-15Denílson SouzaNo ratings yet

- CHEM 580: Computational Chemistry Fall 2020 From Schrodinger To Hartree-FockDocument41 pagesCHEM 580: Computational Chemistry Fall 2020 From Schrodinger To Hartree-FockciwebNo ratings yet

- Chemistry Project On Presence of Insecticides & Pesticides in FDocument8 pagesChemistry Project On Presence of Insecticides & Pesticides in FShaila BhandaryNo ratings yet

- Presentation8 Relational AlgebraDocument23 pagesPresentation8 Relational Algebrasatyam singhNo ratings yet

- Adam&Eve 5Document4 pagesAdam&Eve 5Victor B. MamaniNo ratings yet

- NEW Fees Record 2010-11Document934 pagesNEW Fees Record 2010-11manojchouhan2014No ratings yet

- 1949 NavalRadarSystemsDocument145 pages1949 NavalRadarSystemsTom NorrisNo ratings yet

- Pro Ofpoint Messaging Security Gateway™ and Proofpoint Messaging Security Gateway™ Virtual Edition - Release 8.XDocument3 pagesPro Ofpoint Messaging Security Gateway™ and Proofpoint Messaging Security Gateway™ Virtual Edition - Release 8.XErhan GündüzNo ratings yet

- Certificado Copla 6000 1Document1 pageCertificado Copla 6000 1juan aguilarNo ratings yet

- A Kick For The GDP: The Effect of Winning The FIFA World CupDocument39 pagesA Kick For The GDP: The Effect of Winning The FIFA World CupBuenos Aires HeraldNo ratings yet

- Loop Switching PDFDocument176 pagesLoop Switching PDFshawnr7376No ratings yet

- WGST 3809A - Feminist ThoughtDocument12 pagesWGST 3809A - Feminist ThoughtIonaNo ratings yet

- English Project QuestionsDocument3 pagesEnglish Project Questionsharshitachhabria18No ratings yet

- Apostolic Fathers II 1917 LAKEDocument410 pagesApostolic Fathers II 1917 LAKEpolonia91No ratings yet

- Xt2052y2asr GDocument1 pageXt2052y2asr GjoseNo ratings yet

- The Life Eternal Trust Pune: (A Sahaja Yoga Trust Formed by Her Holiness Shree Nirmala Devi)Document20 pagesThe Life Eternal Trust Pune: (A Sahaja Yoga Trust Formed by Her Holiness Shree Nirmala Devi)Shrikant WarkhedkarNo ratings yet

- Henry The NavigatorDocument2 pagesHenry The Navigatorapi-294843376No ratings yet

- 102 192 1 SMDocument8 pages102 192 1 SMLinaNo ratings yet

- 16.1.4 Lab - Configure Route Redistribution Using BGPDocument11 pages16.1.4 Lab - Configure Route Redistribution Using BGPnetcom htkt100% (1)

- Result Awaited FormDocument1 pageResult Awaited FormRoshan kumar sahu100% (1)

- Peritonitis Capd-1Document15 pagesPeritonitis Capd-1David SenNo ratings yet

- Week 5 E-Tech DLLDocument3 pagesWeek 5 E-Tech DLLJOAN T. DELITONo ratings yet

- Grade Thresholds - June 2023: Cambridge International AS & A Level Thinking Skills (9694)Document2 pagesGrade Thresholds - June 2023: Cambridge International AS & A Level Thinking Skills (9694)rqb7704No ratings yet

- Chemo Stability Chart - AtoKDocument59 pagesChemo Stability Chart - AtoKAfifah Nur Diana PutriNo ratings yet

- PrepositionDocument5 pagesPrepositionsourov07353No ratings yet

- Lamp Ba t302Document16 pagesLamp Ba t302Yeni EkaNo ratings yet

- Nippon SteelDocument7 pagesNippon SteelAnonymous 9PIxHy13No ratings yet