PPA-Building Prediction Model ML

Uploaded by Daffa Ammarul

Copyright: © All Rights Reserved

Classification: usually used to predict labels (categories).
Regression: usually used to predict numerical values.

Groups items using a hierarchical clustering algorithm.

Groups items using the k-Means clustering algorithm.
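
As a rough illustration of what the k-Means widget does, the following is a minimal pure-Python sketch of the standard k-Means (Lloyd's) procedure: assign each point to its nearest centroid, move each centroid to the mean of its points, and repeat until the assignments stop changing. It is not Orange's implementation; the function name `kmeans`, the deterministic seeding from the first k points and the toy coordinates are assumptions made for the example.

```python
import math

def kmeans(points, k, max_iter=100):
    """Minimal k-Means (Lloyd's algorithm) on a list of numeric tuples.

    Assumption: centroids are seeded from the first k points so the result
    is repeatable; real tools usually seed randomly or with k-means++.
    """
    centroids = [tuple(p) for p in points[:k]]
    labels = [None] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids

points = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (7.8, 8.2), (0.9, 1.1), (8.1, 7.9)]  # toy data
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The three points near (1, 1) form one cluster and the three near (8, 8) the other. Because the outcome depends on the starting centroids, practical implementations typically run several random restarts and keep the best result.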

Building Basic Machine Learning Models

This is where we load our data table into Orange and define the data type of each feature. In this case we are loading an Excel file (.xlsx) with our 94 features (95 if we include the target variable ‘ROCK’). As mentioned previously, the data in our features can be numeric, categorical, text or time series. To use the widget, double-click it, navigate to and open the file, then define each feature’s (column’s) data type. In this case ROCK will be categorical and the remainder will be numeric. Under the ‘Role’ column, ROCK also needs to be set as our target variable, as it is what we are trying to classify.
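
Outside the GUI, assigning data types and the ‘Role’ boils down to separating each row into a numeric feature vector and a categorical target label. Below is a minimal stdlib sketch, assuming the spreadsheet has been exported to CSV with a header row and a ROCK column. The helper name `split_features_target`, the CSV export step and the two-column placeholder sample are hypothetical and only for illustration (the real table has 94 feature columns).

```python
import csv
import io

def split_features_target(csv_text, target="ROCK"):
    """Split a CSV table into numeric feature rows (X) and a categorical target (y)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    feature_names = [name for name in reader.fieldnames if name != target]
    X, y = [], []
    for row in reader:
        # Every non-target column is parsed as a number (the 'numeric' type).
        X.append([float(row[name]) for name in feature_names])
        # The target stays a text label (the 'categorical' type with the 'target' role).
        y.append(row[target])
    return feature_names, X, y

sample = "SiO2,MgO,ROCK\n50.0,8.0,rock_1\n70.0,1.0,rock_2\n"  # placeholder values, not real data
names, X, y = split_features_target(sample)
print(names, y)  # ['SiO2', 'MgO'] ['rock_1', 'rock_2']
```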

Rank assesses the relationship between each feature and the target variable and tells us how strongly they are associated. As geologists we know that the biggest differentiating features between mafic and felsic rocks are their magnesium and silica contents. Rank is telling us that MgO varies the most between the 4 lithologies, followed by SiO2, Al2O3 and so on. Here we can decide which features go into our model. There is a Goldilocks zone for how many features to include in an optimal model, and it is determined case by case: you don’t want too few and you don’t want too many. Thankfully, in Orange it is easy to choose how many pass through the workflow just by highlighting them. In this case I’ve selected the top 10 features shown below.
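
To see what ‘ranking’ means numerically, here is a minimal sketch that scores each numeric feature against the categorical target with the one-way ANOVA F-statistic (between-class variance divided by within-class variance) and keeps the top k. ANOVA is one of several scoring methods available in Orange’s Rank widget; which score produced the ordering described above is not stated, so treat this as one possible choice. The function names and toy data are hypothetical.

```python
from statistics import mean

def anova_f(values, labels):
    """One-way ANOVA F-statistic for one numeric feature across the target classes.

    Assumes at least two classes and more samples than classes.
    """
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(label, []).append(value)
    n, k = len(values), len(groups)
    grand_mean = mean(values)
    class_means = {label: mean(g) for label, g in groups.items()}
    # Between-class sum of squares: how far each class mean sits from the overall mean.
    ss_between = sum(len(g) * (class_means[lab] - grand_mean) ** 2 for lab, g in groups.items())
    # Within-class sum of squares: spread of the samples around their own class mean.
    ss_within = sum((v - class_means[lab]) ** 2 for lab, g in groups.items() for v in g)
    if ss_within == 0:
        # No spread inside classes: perfectly separating if class means differ, useless otherwise.
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))

def top_k_features(feature_names, X, y, k):
    """Score every feature column, sort from highest to lowest F, keep the first k names."""
    scores = {name: anova_f([row[j] for row in X], y) for j, name in enumerate(feature_names)}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores

names = ["A", "B"]  # toy features
X = [[1.0, 5.0], [1.2, 3.0], [9.0, 4.0], [9.2, 4.5]]
y = ["x", "x", "y", "y"]
selected, scores = top_k_features(names, X, y, k=1)
print(selected)  # ['A']
```

Feature A has class means far apart relative to the spread inside each class, so it scores far higher than B; this mirrors the intuition above that a strongly discriminating oxide such as MgO should rank near the top.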

Train and Test Data

THE JUICY BITS

These 5 pink widgets are Machine Learning algorithms, each with its own way of mathematically classifying/predicting our target variable from the geochem data. In the beer example we used a single regression algorithm to create our model; in Orange we can use many different algorithms at once and compare them. There is an ever-growing list of ML algorithms, but in this workflow I have used k-Nearest Neighbours (kNN), Support Vector Machines (SVM), Naive Bayes, Random Forest and Adaptive Boosting (AdaBoost). Each algorithm has parameters that can be tweaked in an attempt to increase model accuracy.

Machine Learning algorithms can be (definitely are) mathematically intense and difficult to break down into simple terms. There are many documents online that outline how each algorithm works, but the Orange documentation is usually pretty good. This is a good summary for selecting the right algorithm too.
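
Of the five algorithms, kNN is the easiest to write down: a new sample is given the majority label of the k training samples closest to it in feature space. The following is a minimal pure-Python sketch, assuming Euclidean distance and k = 3 (both are tunable parameters); the function name and toy points are hypothetical, and this is not Orange’s implementation.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, sample, k=3):
    """Predict the class of `sample` by majority vote of its k nearest training rows."""
    # Sort training rows by Euclidean distance to the sample and keep the closest k.
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], sample))[:k]
    votes = Counter(label for _, label in nearest)
    # most_common keeps first-seen order on ties, so a tie goes to the nearer neighbour's label.
    return votes.most_common(1)[0][0]

train_X = [[1.0, 1.0], [1.2, 0.9], [8.0, 8.0], [7.9, 8.1], [0.8, 1.1]]  # toy data
train_y = ["A", "A", "B", "B", "A"]
print(knn_predict(train_X, train_y, [1.1, 1.0]))  # A
print(knn_predict(train_X, train_y, [7.5, 7.5]))  # B
```

Because kNN works on raw distances, features with large numeric ranges (for example SiO2 in wt%) can dominate features with small ranges unless the data are normalised first.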

TEST AND SCORE

When you create a Machine Learning model you need a way to make sure it actually works. We can do this by randomly splitting the data set into ‘training’ and ‘test’ data. The training data is used to create the model and the test data is used to determine its accuracy. The 5 different models built from the training data ignore the target variable in our test data (they look only at the chemistry, not the rock type) and attempt to classify/predict the rock type of each instance. The predicted rock type is then compared with the actual known value in our test data, and each model is scored on its accuracy. In this case the split is set at 80% training and 20% test, representing 320 and 80 instances respectively.

To put it simply: we, the operator, know the target variable for both our training and our test data, but our model only knows it for the training data. E.g. the model knows that the training data is Homer Simpson and now has to predict whether the test data is or not.
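
The split-and-score procedure described above can be sketched in a few lines of plain Python: shuffle the row indices, keep the first 80% for training and the rest for testing, and score predictions by the fraction that match the known labels. This is an illustrative sketch, not Orange’s Test and Score code; the function names, the fixed random seed and the toy 400-row table are assumptions. With 400 rows it reproduces the 320/80 split mentioned above.

```python
import random

def train_test_split(X, y, train_fraction=0.8, seed=0):
    """Randomly shuffle row indices and split them into training and test sets."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)  # a fixed seed makes the split repeatable
    cut = round(len(indices) * train_fraction)
    train_idx, test_idx = indices[:cut], indices[cut:]
    return ([X[i] for i in train_idx], [y[i] for i in train_idx],
            [X[i] for i in test_idx], [y[i] for i in test_idx])

def accuracy(predicted, actual):
    """Fraction of test instances whose predicted label matches the known label."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

X = [[i] for i in range(400)]  # toy table with 400 instances
y = ["A"] * 400
train_X, train_y, test_X, test_y = train_test_split(X, y)
print(len(train_X), len(test_X))  # 320 80
print(accuracy(["A", "B", "A", "A"], ["A", "A", "A", "A"]))  # 0.75
```

A single random split can be lucky or unlucky, which is why repeated random sampling or cross-validation (both offered by Orange’s Test and Score widget) usually gives a steadier estimate of each model’s accuracy.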

PREDICTION

You might also like

- 21 Machine Learning Design Patterns Interview Questions (ANSWERED) MLStackDocument29 pages21 Machine Learning Design Patterns Interview Questions (ANSWERED) MLStackChristine CaoNo ratings yet

- Thor Hurricane Owners Manual 2012Document131 pagesThor Hurricane Owners Manual 2012glenrgNo ratings yet

- Business Report M2 PDFDocument14 pagesBusiness Report M2 PDFA d100% (2)

- Deploying Enterprise SIP Trunks With CUBE, CUCM and MediaSense SBC PDFDocument96 pagesDeploying Enterprise SIP Trunks With CUBE, CUCM and MediaSense SBC PDFZeeshan DawoodaniNo ratings yet

- Reading 11 - Programming End-to-End SolutionDocument13 pagesReading 11 - Programming End-to-End SolutionlussyNo ratings yet

- Query For Machine LearningDocument6 pagesQuery For Machine Learningpriyada16No ratings yet

- The 5 Feature Selection Algorithms Every Data Scientist Should KnowDocument29 pagesThe 5 Feature Selection Algorithms Every Data Scientist Should KnowRama Chandra GunturiNo ratings yet

- Malignant Comments Classifier ProjectDocument30 pagesMalignant Comments Classifier ProjectSaranya MNo ratings yet

- A "Short" Introduction To Model SelectionDocument25 pagesA "Short" Introduction To Model SelectionSuvin Chandra Gandhi (MT19AIE325)No ratings yet

- Receiver Operator CharacteristicDocument25 pagesReceiver Operator CharacteristicSuvin Chandra Gandhi (MT19AIE325)No ratings yet

- Ranking Features Based On Predictive Power - Importance of The Class LabelsDocument11 pagesRanking Features Based On Predictive Power - Importance of The Class LabelsJuanNo ratings yet

- Employee Attrition MiniblogsDocument15 pagesEmployee Attrition MiniblogsCodein100% (1)

- Building Good Training SetsDocument51 pagesBuilding Good Training Setsthulasi prasadNo ratings yet

- TB 969425740Document16 pagesTB 969425740guohong huNo ratings yet

- Article Review 11 EngDocument18 pagesArticle Review 11 EngCecilia FauziahNo ratings yet

- ML Unit 2Document18 pagesML Unit 2SUJATA SONWANENo ratings yet

- Model EvaluationDocument29 pagesModel Evaluationniti guptaNo ratings yet

- Chapter5: Experiment On Explanation UtilityDocument7 pagesChapter5: Experiment On Explanation UtilityLillian LinNo ratings yet

- Ch3 - Structering ML ProjectDocument36 pagesCh3 - Structering ML ProjectamalNo ratings yet

- Data ScienceDocument38 pagesData ScienceDINESH REDDYNo ratings yet

- ML MetricsDocument9 pagesML Metricszpddf9hqx5No ratings yet

- Opening Black Boxes: How To Leverage Explainable Machine LearningDocument11 pagesOpening Black Boxes: How To Leverage Explainable Machine LearningSowrya ReganaNo ratings yet

- Dealing With Missing Data in Python PandasDocument14 pagesDealing With Missing Data in Python PandasSelloNo ratings yet

- Employee Attrition PredictionDocument21 pagesEmployee Attrition Predictionuser user100% (1)

- Unit III 1Document21 pagesUnit III 1mananrawat537No ratings yet

- PS Notes (Machine LearningDocument14 pagesPS Notes (Machine LearningKodjo ALIPUINo ratings yet

- Data Science Interview GuideDocument23 pagesData Science Interview GuideMary KokoNo ratings yet

- The Art of Finding The Best Features For Machine Learning - by Rebecca Vickery - Towards Data ScienceDocument14 pagesThe Art of Finding The Best Features For Machine Learning - by Rebecca Vickery - Towards Data ScienceHamdan Gani, S.Kom., MTNo ratings yet

- 11 Important Model Evaluation Error Metrics 2Document4 pages11 Important Model Evaluation Error Metrics 2PRAKASH KUMAR100% (1)

- Machine LEarningDocument4 pagesMachine LEarningKarimNo ratings yet

- Stock Market Analysis Using Supervised Machine LearningDocument6 pagesStock Market Analysis Using Supervised Machine LearningAbishek Pangotra (Abi Sharma)No ratings yet

- Chapter-3-Common Issues in Machine LearningDocument20 pagesChapter-3-Common Issues in Machine Learningcodeavengers0No ratings yet

- Rapid Miner TutorialDocument15 pagesRapid Miner TutorialDeepika Vaidhyanathan100% (1)

- Ss PPT PresentationDocument11 pagesSs PPT PresentationNAGA LAKSHMI GAYATRI SAMANVITA POTTURUNo ratings yet

- ML Model Paper 1 Solution-1Document10 pagesML Model Paper 1 Solution-1VIKAS KUMARNo ratings yet

- Validation Over Under Fir Unit 5Document6 pagesValidation Over Under Fir Unit 5Harpreet Singh BaggaNo ratings yet

- Modelling and Error AnalysisDocument8 pagesModelling and Error AnalysisAtmuri GaneshNo ratings yet

- Decision-MakingUsingtheAnalyticHierarchyProcessAHPandPJM Melvin AlexanderDocument15 pagesDecision-MakingUsingtheAnalyticHierarchyProcessAHPandPJM Melvin AlexanderRavi TejNo ratings yet

- How To Choose The Right Test Options When Evaluating Machine Learning AlgorithmsDocument16 pagesHow To Choose The Right Test Options When Evaluating Machine Learning AlgorithmsprediatechNo ratings yet

- Company Wise Data Science Interview QuestionsDocument39 pagesCompany Wise Data Science Interview Questionschaddi100% (1)

- 07two Marks Quest & AnsDocument4 pages07two Marks Quest & AnsV MERIN SHOBINo ratings yet

- Data Prep and Cleaning For Machine LearningDocument22 pagesData Prep and Cleaning For Machine LearningShubham JNo ratings yet

- Top 9 Feature Engineering Techniques With Python: Dataset & PrerequisitesDocument27 pagesTop 9 Feature Engineering Techniques With Python: Dataset & PrerequisitesMamafouNo ratings yet

- TEAM DS Final ReportDocument14 pagesTEAM DS Final ReportGurucharan ReddyNo ratings yet

- Chapter 2 SolutionsDocument6 pagesChapter 2 Solutionsfatmahelawden000No ratings yet

- Machine Learning ModelDocument9 pagesMachine Learning ModelSanjay KumarNo ratings yet

- Advanced Machine Learning and Feature Engineering: StackingDocument7 pagesAdvanced Machine Learning and Feature Engineering: StackingAtmuri GaneshNo ratings yet

- Machine LearningDocument9 pagesMachine LearningSanjay KumarNo ratings yet

- House Price PredictionDocument14 pagesHouse Price PredictionSanidhya pasariNo ratings yet

- Machine Learning KNN - SupervisedDocument9 pagesMachine Learning KNN - SuperviseddanielNo ratings yet

- Data Mining PrimerDocument5 pagesData Mining PrimerJoJo BristolNo ratings yet

- 40 Interview Questions On Machine Learning From Analytics VidhyaDocument14 pages40 Interview Questions On Machine Learning From Analytics Vidhyashakir aliNo ratings yet

- Data Science Interview Questions 30 Days 1686062665Document300 pagesData Science Interview Questions 30 Days 1686062665yassine.boutakboutNo ratings yet

- Interview QuestionsDocument4 pagesInterview QuestionsMahima Sharma100% (1)

- Train Test Split in PythonDocument11 pagesTrain Test Split in PythonNikhil TiwariNo ratings yet

- ML 5Document14 pagesML 5dibloaNo ratings yet

- Interview Questions On Machine LearningDocument22 pagesInterview Questions On Machine LearningPraveen100% (4)

- Basic Interview Q's On ML PDFDocument243 pagesBasic Interview Q's On ML PDFsourajit roy chowdhury100% (2)

- CE802 ReportDocument7 pagesCE802 ReportprenithjohnsamuelNo ratings yet

- Machine Learning Models: by Mayuri BhandariDocument48 pagesMachine Learning Models: by Mayuri BhandarimayuriNo ratings yet

- Process Performance Models: Statistical, Probabilistic & SimulationFrom EverandProcess Performance Models: Statistical, Probabilistic & SimulationNo ratings yet

- Advanced Analytics with Transact-SQL: Exploring Hidden Patterns and Rules in Your DataFrom EverandAdvanced Analytics with Transact-SQL: Exploring Hidden Patterns and Rules in Your DataNo ratings yet

- 60-Cell Bifacial Mono PERC Double Glass Module (30mm Frame) JAM60D00 - BPDocument2 pages60-Cell Bifacial Mono PERC Double Glass Module (30mm Frame) JAM60D00 - BPAlcides Araujo SantosNo ratings yet

- Atlas of Obstetric UltrasoundDocument48 pagesAtlas of Obstetric UltrasoundSanchia Theresa100% (1)

- (CANEDA 81-A) Narrative Report - Project Implementation PlanDocument2 pages(CANEDA 81-A) Narrative Report - Project Implementation PlanJULIANNE BAYHONNo ratings yet

- BPO CultureDocument2 pagesBPO Cultureshashi1810No ratings yet

- SOP 04 - Preparation of Glycerol-Malachite Green Soaked Clippings - v1 - 0Document1 pageSOP 04 - Preparation of Glycerol-Malachite Green Soaked Clippings - v1 - 0MioDe Joseph Tetra DummNo ratings yet

- CDCA 2203 Ram & RomDocument11 pagesCDCA 2203 Ram & RomMUHAMAD AMMAR SYAFIQ BIN MAD ZIN STUDENTNo ratings yet

- Quantum User ManualDocument220 pagesQuantum User ManualRoshi_11No ratings yet

- Experimental Study On Strength and Durability Characteristics of Concrete With Partial Replacement of Nano-Silica, Nano-Vanadium MixtureDocument4 pagesExperimental Study On Strength and Durability Characteristics of Concrete With Partial Replacement of Nano-Silica, Nano-Vanadium MixtureInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- 2000 Seadoo Shop Manual 1 BombardierDocument456 pages2000 Seadoo Shop Manual 1 BombardierEcocec Centralita Electronica ComplementariaNo ratings yet

- MPF Multi-Pole Automotive Fuses: Technical Data 10602Document2 pagesMPF Multi-Pole Automotive Fuses: Technical Data 10602Darren DeVoseNo ratings yet

- Assignment 1 (Internal Control)Document23 pagesAssignment 1 (Internal Control)Ali WaqarNo ratings yet

- Evolution of Islamic Geometric Patterns PDFDocument9 pagesEvolution of Islamic Geometric Patterns PDFSaw Tun Lynn100% (1)

- UBS Price and Earnings ReportDocument43 pagesUBS Price and Earnings ReportArun PrabhudesaiNo ratings yet

- Mis All TopicsDocument28 pagesMis All TopicsfaisalNo ratings yet

- Haha Youre Not Real Schizophrenia Has Spread NIGHTMARE NIGHTMARE NIGHTMAREDocument9 pagesHaha Youre Not Real Schizophrenia Has Spread NIGHTMARE NIGHTMARE NIGHTMARERyanflare1231No ratings yet

- Module 2 - SAMPLE OF REPORT WRITING FORMATDocument5 pagesModule 2 - SAMPLE OF REPORT WRITING FORMATMirza Farouq BegNo ratings yet

- Optimization of Spray Drying Process For Developing Seabuckthorn Fruit Juice Powder Using Response Surface MethodologyDocument9 pagesOptimization of Spray Drying Process For Developing Seabuckthorn Fruit Juice Powder Using Response Surface MethodologyLaylla CoelhoNo ratings yet

- Hso422567 Issue2Document13 pagesHso422567 Issue2Александр ЩербаковNo ratings yet

- Computer Network Unit-5 NotesDocument44 pagesComputer Network Unit-5 NotessuchitaNo ratings yet

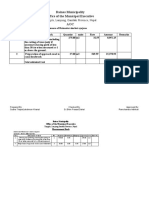

- Rainas Municipality Office of The Municipal Executive AOC: Tinpiple, Lamjung, Gandaki Province, NepalDocument10 pagesRainas Municipality Office of The Municipal Executive AOC: Tinpiple, Lamjung, Gandaki Province, NepalLaxu KhanalNo ratings yet

- Cip 002 1Document3 pagesCip 002 1silviofigueiroNo ratings yet

- West Africa Gas Pipeline - Benin EIADocument677 pagesWest Africa Gas Pipeline - Benin EIABdaejo RahmonNo ratings yet

- Miele Tumble Dryer T5206 Operating Instructions enDocument36 pagesMiele Tumble Dryer T5206 Operating Instructions enAlberto AriasNo ratings yet

- Data Theft by Social Media PlatformDocument1 pageData Theft by Social Media PlatformSarika SinghNo ratings yet

- DiagnoseDocument25 pagesDiagnosesambathnatarajanNo ratings yet

- Hooverphonic Biography (2000)Document4 pagesHooverphonic Biography (2000)6980MulhollandDriveNo ratings yet

- The Engulfing Trader Handbook English VersionDocument26 pagesThe Engulfing Trader Handbook English VersionJm TolentinoNo ratings yet

- Engine Variant: V2527-A5Document12 pagesEngine Variant: V2527-A5Kartika Ningtyas100% (1)