AIML Question Bank-ML-17Jan2022

Uploaded by Parameshwarappa Cm

Available Formats

You might also like

- Deliria-Faerie Tales For A New Millennium PDFDocument344 pagesDeliria-Faerie Tales For A New Millennium PDFJuan Del Desierto100% (4)

- Question Bank: Subject Name: Artificial Intelligence & Machine Learning Subject Code: 18CS71 Sem: VIIDocument8 pagesQuestion Bank: Subject Name: Artificial Intelligence & Machine Learning Subject Code: 18CS71 Sem: VIIDileep Kn100% (1)

- Assignment 2 Updated AIMLDocument2 pagesAssignment 2 Updated AIMLVismitha GowdaNo ratings yet

- Machine LearningDocument37 pagesMachine LearningPrince RajNo ratings yet

- MCA3 (DS) Unit 4 MLDocument29 pagesMCA3 (DS) Unit 4 MLRuparel Education Pvt. Ltd.No ratings yet

- Question Bank AMLDocument4 pagesQuestion Bank AMLshwethaNo ratings yet

- Module 3 DecisionTree NotesDocument14 pagesModule 3 DecisionTree NotesManas HassijaNo ratings yet

- Decision and Regression Tree LearningDocument51 pagesDecision and Regression Tree LearningMOOKAMBIGA A100% (1)

- Sequences and Series - By TrockersDocument49 pagesSequences and Series - By TrockerschamunorwamachonaNo ratings yet

- Sequences and Series - by TrockersDocument49 pagesSequences and Series - by TrockersshellingtontiringindiNo ratings yet

- ML Unit-2 Material WORDDocument25 pagesML Unit-2 Material WORDafreed khanNo ratings yet

- Extension and Evaluation of ID3 - Decision Tree AlgorithmDocument8 pagesExtension and Evaluation of ID3 - Decision Tree AlgorithmVăn Hoàng TrầnNo ratings yet

- Module 4 Question Bank: Big Data AnalyticsDocument2 pagesModule 4 Question Bank: Big Data AnalyticsKaushik KapsNo ratings yet

- 1 4Document26 pages1 4Rao KunalNo ratings yet

- LINFO2262: Decision Trees + Random Forests: Pierre DupontDocument43 pagesLINFO2262: Decision Trees + Random Forests: Pierre DupontQuentin LambotteNo ratings yet

- Module 3-Decision Tree LearningDocument33 pagesModule 3-Decision Tree Learningramya100% (1)

- Lec19 Decision Trees TypednotesDocument17 pagesLec19 Decision Trees TypednotesNilima DeoreNo ratings yet

- Aiml Assignment 2Document2 pagesAiml Assignment 2Gem StonesNo ratings yet

- Chapter 10 MLDocument69 pagesChapter 10 MLKhoa TrầnNo ratings yet

- 476A Beam Report (FINAL)Document23 pages476A Beam Report (FINAL)farhanNo ratings yet

- AiML 4 3 SGDocument59 pagesAiML 4 3 SGngNo ratings yet

- 2.3 Decision-Tree-AlgorithmDocument61 pages2.3 Decision-Tree-AlgorithmNandini rathiNo ratings yet

- AiML 4 3Document59 pagesAiML 4 3Nox MidNo ratings yet

- Aiml Imp@Azdocuments - inDocument5 pagesAiml Imp@Azdocuments - inSINDHU TKNo ratings yet

- Predictive Modeling Week3Document68 pagesPredictive Modeling Week3Kunwar RawatNo ratings yet

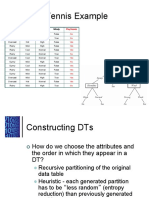

- Play Tennis Example: Outlook Temperature Humidity WindyDocument29 pagesPlay Tennis Example: Outlook Temperature Humidity Windyioi123No ratings yet

- Laboratory 1 Intro To Machine Learning 1Document3 pagesLaboratory 1 Intro To Machine Learning 1Emmanuel AncenoNo ratings yet

- AIML IMPROVEMENT TEST DOCDocument50 pagesAIML IMPROVEMENT TEST DOCsyedshariqkamran123No ratings yet

- 03 EagleseDocument11 pages03 EagleseHENRY ALEJANDRO MAYORGA ARIASNo ratings yet

- VI Sem Machine Learning CS 601Document28 pagesVI Sem Machine Learning CS 601pankaj guptaNo ratings yet

- ML Experiment-1Document3 pagesML Experiment-1Sanket SinghNo ratings yet

- Time Weather Temperature Company Humidity Wind GoesDocument3 pagesTime Weather Temperature Company Humidity Wind GoesAaraNo ratings yet

- Decision TreeDocument5 pagesDecision TreeshubhNo ratings yet

- Government Engineering College, Modasa: B.E. - Computer Engineering (Semester - VII) 3170724 - Machine LearningDocument3 pagesGovernment Engineering College, Modasa: B.E. - Computer Engineering (Semester - VII) 3170724 - Machine LearningronakNo ratings yet

- Lesson TwoDocument6 pagesLesson TwoMarijo Bugarso BSIT-3BNo ratings yet

- Machine Learning Descision TreeDocument20 pagesMachine Learning Descision TreeNilima DeoreNo ratings yet

- VI Sem Machine Learning CS 601 PDFDocument28 pagesVI Sem Machine Learning CS 601 PDFpankaj guptaNo ratings yet

- Week 2Document31 pagesWeek 2Mohammad MoatazNo ratings yet

- 1985 Deconvolution Analysis of Friction PeaksDocument4 pages1985 Deconvolution Analysis of Friction Peaksac.diogo487No ratings yet

- Intro To Machine LearningDocument15 pagesIntro To Machine Learningharrisondaniel0415No ratings yet

- R20 Iii-Ii ML Lab ManualDocument79 pagesR20 Iii-Ii ML Lab ManualEswar Vineet100% (1)

- Foundations of Machine Learning: Module 2: Linear Regression and Decision TreeDocument16 pagesFoundations of Machine Learning: Module 2: Linear Regression and Decision TreeShivansh Sharma100% (2)

- ID3 Lecture4Document25 pagesID3 Lecture4jameelkhalidalanesyNo ratings yet

- Week05 - Naive Bayes Tutorial - SolutionsDocument23 pagesWeek05 - Naive Bayes Tutorial - SolutionsRawaf FahadNo ratings yet

- Lec 3&4Document20 pagesLec 3&4Hariharan RavichandranNo ratings yet

- Data DescriptionDocument1 pageData DescriptionAyele NugusieNo ratings yet

- Lecture 2 EE675Document4 pagesLecture 2 EE675SAURABH VISHWAKARMANo ratings yet

- Instructions For How To Solve AssignmentDocument4 pagesInstructions For How To Solve AssignmentbotiwaNo ratings yet

- Association Rules L3Document5 pagesAssociation Rules L3u- m-No ratings yet

- 15-381 Spring 2007 Assignment 6: LearningDocument14 pages15-381 Spring 2007 Assignment 6: LearningsandeepanNo ratings yet

- Physics 201: Lab On Experimental Uncertainties Using A PendulumDocument3 pagesPhysics 201: Lab On Experimental Uncertainties Using A PendulumChee WongNo ratings yet

- EXP-3-To Implement CART Decision Tree Algorithm.docxDocument14 pagesEXP-3-To Implement CART Decision Tree Algorithm.docxbadgujarvarad800No ratings yet

- 2b Decision Tree 18mayDocument16 pages2b Decision Tree 18mayAkshay KaushikNo ratings yet

- LAB 3 ActivitiesDocument2 pagesLAB 3 ActivitiesChristopher KatkoNo ratings yet

- Enhancements To Basic Decision Tree Induction, C4.5Document53 pagesEnhancements To Basic Decision Tree Induction, C4.5Poornima VenkateshNo ratings yet

- Assignment 2 AIMLDocument6 pagesAssignment 2 AIMLPriyanka appinakatteNo ratings yet

- ML PapersDocument10 pagesML PapersJovan JacobNo ratings yet

- Machine LearningDocument52 pagesMachine LearningaimahsiddNo ratings yet

- Assignment 2Document3 pagesAssignment 2Saujatya MandalNo ratings yet

- Decision Trees Iterative Dichotomiser 3 (ID3) For Classification: An ML AlgorithmDocument7 pagesDecision Trees Iterative Dichotomiser 3 (ID3) For Classification: An ML AlgorithmMohammad SharifNo ratings yet

- AIML Assignment5-23Dec2022Document1 pageAIML Assignment5-23Dec2022Parameshwarappa CmNo ratings yet

- AIML Question Bank-AI-17Jan2022Document1 pageAIML Question Bank-AI-17Jan2022Parameshwarappa CmNo ratings yet

- Assignment2-28June2024Document1 pageAssignment2-28June2024Parameshwarappa CmNo ratings yet

- Assignment1-6June2024Document1 pageAssignment1-6June2024Parameshwarappa CmNo ratings yet

- Unit IG2: Risk Assessment: L L P 1 o 2Document24 pagesUnit IG2: Risk Assessment: L L P 1 o 2white heart green mindNo ratings yet

- Scholastic Instant Practice Packets Numbers - CountingDocument128 pagesScholastic Instant Practice Packets Numbers - CountingcaliscaNo ratings yet

- Course CurriculumDocument3 pagesCourse CurriculumPRASENJIT MUKHERJEENo ratings yet

- Ai-Ai ResumeDocument3 pagesAi-Ai ResumeNeon True BeldiaNo ratings yet

- UntitledDocument2 pagesUntitledelleNo ratings yet

- Brother 1660e Service ManualDocument117 pagesBrother 1660e Service ManualtraminerNo ratings yet

- Module - 1 IntroductionDocument33 pagesModule - 1 IntroductionIffat SiddiqueNo ratings yet

- Arlegui Seminar RoomDocument1 pageArlegui Seminar RoomGEMMA PEPITONo ratings yet

- DorkDocument5 pagesDorkJeremy Sisto ManurungNo ratings yet

- EEET423L Final ProjectDocument7 pagesEEET423L Final ProjectAlan ReyesNo ratings yet

- Adamco BIFDocument2 pagesAdamco BIFMhie DazaNo ratings yet

- Factory Physics PrinciplesDocument20 pagesFactory Physics Principlespramit04100% (1)

- Semantically Partitioned Object Sap BW 7.3Document23 pagesSemantically Partitioned Object Sap BW 7.3smiks50% (2)

- Geography P1 May-June 2023 EngDocument20 pagesGeography P1 May-June 2023 Engtanielliagreen0No ratings yet

- Occupational Safety and Health Aspects of Voice and Speech ProfessionsDocument34 pagesOccupational Safety and Health Aspects of Voice and Speech ProfessionskaaanyuNo ratings yet

- 3M CorporationDocument3 pages3M CorporationIndoxfeeds GramNo ratings yet

- New AccountDocument1 pageNew Account1144abdurrahmanNo ratings yet

- GEO01 - CO1.2 - Introduction To Earth Science (Geology)Document14 pagesGEO01 - CO1.2 - Introduction To Earth Science (Geology)Ghia PalarcaNo ratings yet

- Understanding Organizational Behavior: de Castro, Donna Amor Decretales, Thea Marie Estimo, Adrian Maca-Alin, SaharaDocument41 pagesUnderstanding Organizational Behavior: de Castro, Donna Amor Decretales, Thea Marie Estimo, Adrian Maca-Alin, SaharaAnna Marie RevisadoNo ratings yet

- 11 Earthing and Lightning Protection PDFDocument37 pages11 Earthing and Lightning Protection PDFThomas Gilchrist100% (1)

- Tle 7-1st Periodic TestDocument2 pagesTle 7-1st Periodic TestReymart TumanguilNo ratings yet

- MC&OB Unit 4Document17 pagesMC&OB Unit 4Tanya MalviyaNo ratings yet

- Getting The Most From Lube Oil AnalysisDocument16 pagesGetting The Most From Lube Oil AnalysisGuru Raja Ragavendran Nagarajan100% (2)

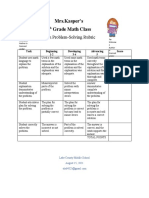

- Problemsolving RubricDocument1 pageProblemsolving Rubricapi-560491685No ratings yet

- Ricardo Moreira Da Silva Baylina - Artistic Research ReportDocument65 pagesRicardo Moreira Da Silva Baylina - Artistic Research ReportEsteve CostaNo ratings yet

- A Study On Financial Performance of Selected Public and Private Sector Banks - A Comparative AnalysisDocument3 pagesA Study On Financial Performance of Selected Public and Private Sector Banks - A Comparative AnalysisVarun NagarNo ratings yet

- Mathematics Past Paper QuestionsDocument174 pagesMathematics Past Paper Questionsnodicoh572100% (2)

- Laboratory For Energy and The Environment: HighlightsDocument14 pagesLaboratory For Energy and The Environment: HighlightsZewdu TsegayeNo ratings yet

- ACCA P5 Question 2 June 2013 QaDocument4 pagesACCA P5 Question 2 June 2013 QaFarhan AlchiNo ratings yet

AIML Question Bank-ML-17Jan2022

AIML Question Bank-ML-17Jan2022

Uploaded by

Parameshwarappa CmCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

AIML Question Bank-ML-17Jan2022

AIML Question Bank-ML-17Jan2022

Uploaded by

Parameshwarappa CmCopyright:

Available Formats

Sri Taralabalu Jagadguru Education Society®, Sirigere

S T J INSTITUTE OF TECHNOLOGY, RANEBENNUR – 581 115

DEPARTMENT OF COMPUTER SCIENCE & ENGINEERING

Question Bank-ML

1) Define concept learning, and discuss the terminology used in concept learning problems.

2) For the learning example given in the following table, illustrate the general-to-specific ordering of hypotheses in concept learning.

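| Example | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rain | Cold | High | Strong | Warm | Change | No |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |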
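A minimal Python sketch of the more-general-than-or-equal-to relation (≥g) that orders these hypotheses. It assumes hypotheses are tuples in which "?" accepts any value, None stands for the empty constraint ∅, and any other string is a required value; the names `covers` and `more_general_or_equal` are illustrative, not taken from the source.

```python
from typing import Optional, Sequence

# Assumed encoding: "?" = any value, None = empty constraint, str = required value.
Hypothesis = Sequence[Optional[str]]


def covers(h: Hypothesis, x: Sequence[str]) -> bool:
    """True if hypothesis h classifies instance x as positive."""
    return all(c == "?" or c == v for c, v in zip(h, x))


def more_general_or_equal(h1: Hypothesis, h2: Hypothesis) -> bool:
    """h1 >=g h2: every instance covered by h2 is also covered by h1."""
    if any(c is None for c in h2):
        return True  # h2 covers no instance, so any h1 is at least as general
    return all(a == "?" or a == b for a, b in zip(h1, h2))


h_specific = ("Sunny", "?", "?", "Strong", "?", "?")
h_general = ("Sunny", "?", "?", "?", "?", "?")
print(more_general_or_equal(h_general, h_specific))  # True
print(more_general_or_equal(h_specific, h_general))  # False
```

Because ≥g is only a partial order, hypotheses such as (Sunny, ?, ?, ?, ?, ?) and (?, Warm, ?, ?, ?, ?) are incomparable: neither direction returns True.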

3) Write the FIND-S algorithm, and use it to illustrate learning on the example given in the above table.
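A minimal Python sketch of FIND-S for conjunctive hypotheses, assuming None encodes the empty constraint ∅ and "?" accepts any value (the function and variable names are illustrative). It starts from the most specific hypothesis and minimally generalizes it on each positive example, ignoring negatives.

```python
from typing import List, Optional, Sequence, Tuple


def find_s(examples: Sequence[Tuple[Sequence[str], str]], positive: str = "Yes") -> List[Optional[str]]:
    """Return the maximally specific conjunctive hypothesis consistent with the positives."""
    h: List[Optional[str]] = [None] * len(examples[0][0])  # None = empty constraint
    for x, label in examples:
        if label != positive:
            continue  # FIND-S ignores negative examples
        for i, value in enumerate(x):
            if h[i] is None:
                h[i] = value  # first positive example: copy its value
            elif h[i] != value:
                h[i] = "?"  # conflicting values: generalize to "?"
    return h


ENJOY_SPORT = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), "Yes"),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), "Yes"),
    (("Rain", "Cold", "High", "Strong", "Warm", "Change"), "No"),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), "Yes"),
]
print(find_s(ENJOY_SPORT))  # ['Sunny', 'Warm', '?', 'Strong', '?', '?']
```

The same function applied to the PassTest data of question 5 ends with ['Intense', 'Normal', '?', 'Frequent', '?', '?'].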

4) Discuss the limitations of the FIND-S algorithm.

5) Trace the running of the FIND-S algorithm on the following training data. The attributes have the following values: Study: Intense, Moderate, None; Difficulty: Easy, Hard; Sleepy: Very, Somewhat; Attendance: Frequent, Rare; Hungry: Yes, No; Thirsty: Yes, No. The hypothesis space contains 6-tuples in which each attribute constraint is ?, ∅, or a single required value (e.g., Normal).

| Example | Study | Difficulty | Sleepy | Attendance | Hungry | Thirsty | PassTest |
|---|---|---|---|---|---|---|---|
| 1 | Intense | Normal | Extremely | Frequent | No | No | Yes |
| 2 | Intense | Normal | Slightly | Frequent | No | No | Yes |
| 3 | None | High | Slightly | Frequent | No | Yes | No |
| 4 | Intense | Normal | Slightly | Frequent | Yes | Yes | Yes |

6) Write the Candidate-Elimination algorithm, and use it to illustrate learning on the example given in the following table.

| Example | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rain | Cold | High | Strong | Warm | Change | No |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |
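A minimal Python sketch of Candidate-Elimination for conjunctive hypotheses, maintaining the specific boundary S and general boundary G. The attribute domains are an assumption: "Cloudy" and "Weak" do not appear in the table and are added only so that specializations of G have alternative values to choose from. None encodes ∅; all names are illustrative.

```python
from typing import List, Optional, Sequence, Set, Tuple

H = Tuple[Optional[str], ...]  # "?" = any value, None = empty constraint

# Assumed attribute domains (Cloudy and Weak are not in the table).
DOMAINS = [("Sunny", "Cloudy", "Rain"), ("Warm", "Cold"), ("Normal", "High"),
           ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change")]
DATA = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), "Yes"),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), "Yes"),
    (("Rain", "Cold", "High", "Strong", "Warm", "Change"), "No"),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), "Yes"),
]


def covers(h: H, x: Sequence[str]) -> bool:
    return all(c == "?" or c == v for c, v in zip(h, x))


def more_general_or_equal(h1: H, h2: H) -> bool:
    if any(c is None for c in h2):
        return True  # h2 covers nothing
    return all(a == "?" or a == b for a, b in zip(h1, h2))


def min_generalization(s: H, x: Sequence[str]) -> H:
    # Smallest change to s that covers x (unique for conjunctive hypotheses).
    return tuple(v if c is None else (c if c == v else "?") for c, v in zip(s, x))


def min_specializations(g: H, x: Sequence[str]) -> List[H]:
    # Replace one "?" by any value other than x's value, so that x is excluded.
    return [g[:i] + (v,) + g[i + 1:]
            for i, c in enumerate(g) if c == "?"
            for v in DOMAINS[i] if v != x[i]]


def candidate_elimination(examples) -> Tuple[Set[H], Set[H]]:
    n = len(DOMAINS)
    S: Set[H] = {(None,) * n}
    G: Set[H] = {("?",) * n}
    for x, label in examples:
        if label == "Yes":
            G = {g for g in G if covers(g, x)}  # drop g inconsistent with x
            for s in [s for s in S if not covers(s, x)]:
                S.remove(s)
                h = min_generalization(s, x)
                if any(more_general_or_equal(g, h) for g in G):
                    S.add(h)
            S = {s for s in S if not any(t != s and more_general_or_equal(s, t) for t in S)}
        else:
            S = {s for s in S if not covers(s, x)}  # drop s inconsistent with x
            for g in [g for g in G if covers(g, x)]:
                G.remove(g)
                G |= {h for h in min_specializations(g, x)
                      if any(more_general_or_equal(h, s) for s in S)}
            G = {g for g in G if not any(t != g and more_general_or_equal(t, g) for t in G)}
    return S, G


S, G = candidate_elimination(DATA)
print("S:", S)
print("G:", G)
```

On this table the sketch ends with S = {<Sunny, Warm, ?, Strong, ?, ?>} and G = {<Sunny, ?, ?, ?, ?, ?>, <?, Warm, ?, ?, ?, ?>}; after example 3, G briefly also contains <?, ?, ?, ?, ?, Same>, which example 4 removes.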

7) Write a note on the inductive hypothesis and the unbiased learner with respect to concept learning algorithms.

8) Consider the following set of training examples:

| Instance | Classification | a1 | a2 |
|---|---|---|---|
| 1 | + | T | T |
| 2 | + | T | T |
| 3 | - | T | F |
| 4 | + | F | F |
| 5 | - | F | T |
| 6 | - | F | T |

(a) What is the entropy of this collection of training examples with respect to the target function classification?

(b) What is the information gain of a2 relative to these training examples?
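A small Python sketch that evaluates Entropy(S) = -Σ p_i log2 p_i and Gain(S, A) = Entropy(S) - Σ_v (|S_v|/|S|) Entropy(S_v) on the six instances above (the helper names are illustrative). With three positive and three negative examples the entropy is 1; both values of a2 split into subsets with one bit of entropy, so the gain of a2 works out to 0 (up to floating-point rounding).

```python
from collections import Counter
from math import log2
from typing import Hashable, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Entropy(S) = -sum_i p_i log2 p_i over the class proportions p_i."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(values: Sequence[Hashable], labels: Sequence[Hashable]) -> float:
    """Gain(S, A) = Entropy(S) - sum_v |S_v|/|S| * Entropy(S_v)."""
    n = len(labels)
    remainder = 0.0
    for v in set(values):
        subset = [y for x, y in zip(values, labels) if x == v]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


# Question 8 data, in instance order 1..6.
classification = ["+", "+", "-", "+", "-", "-"]
a1 = ["T", "T", "T", "F", "F", "F"]
a2 = ["T", "T", "F", "F", "T", "T"]

print(entropy(classification))  # 1.0
print(information_gain(a2, classification))
```

For comparison, the same function gives a gain of about 0.082 for a1.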

9) Write the steps of the ID3 algorithm.
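A compact recursive sketch of ID3 in Python for categorical attributes, with trees represented as nested dicts. Two simplifications are assumptions of this sketch: it branches only on attribute values that occur in the current examples (so the empty-subset case never arises), and ties between equally good attributes go to whichever appears first in the attribute list.

```python
from collections import Counter
from math import log2
from typing import Any, Dict, List, Sequence

Example = Dict[str, str]


def entropy(rows: Sequence[Example], target: str) -> float:
    n = len(rows)
    counts = Counter(r[target] for r in rows)
    return -sum(c / n * log2(c / n) for c in counts.values())


def gain(rows: Sequence[Example], attr: str, target: str) -> float:
    n = len(rows)
    remainder = 0.0
    for v in {r[attr] for r in rows}:
        subset = [r for r in rows if r[attr] == v]
        remainder += len(subset) / n * entropy(subset, target)
    return entropy(rows, target) - remainder


def id3(rows: List[Example], target: str, attributes: List[str]) -> Any:
    labels = [r[target] for r in rows]
    # All examples share one label: return a leaf with that label.
    if len(set(labels)) == 1:
        return labels[0]
    # No attributes left: return a leaf with the most common label.
    if not attributes:
        return Counter(labels).most_common(1)[0][0]
    # Choose the attribute with the highest information gain.
    best = max(attributes, key=lambda a: gain(rows, a, target))
    rest = [a for a in attributes if a != best]
    tree: Dict[str, Dict[str, Any]] = {best: {}}
    # Grow one subtree per observed value of the chosen attribute.
    for v in sorted({r[best] for r in rows}):
        subset = [r for r in rows if r[best] == v]
        tree[best][v] = id3(subset, target, rest)
    return tree


COLS = ["Sky", "AirTemp", "Humidity", "Wind", "Water", "Forecast", "EnjoySport"]
TABLE = [
    ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same", "Yes"),
    ("Sunny", "Warm", "High", "Strong", "Warm", "Same", "Yes"),
    ("Rain", "Cold", "High", "Strong", "Warm", "Change", "No"),
    ("Sunny", "Warm", "High", "Strong", "Cool", "Change", "Yes"),
]
rows = [dict(zip(COLS, t)) for t in TABLE]
print(id3(rows, "EnjoySport", COLS[:-1]))  # {'Sky': {'Rain': 'No', 'Sunny': 'Yes'}}
```

On the EnjoySport table, Sky and AirTemp tie for the highest gain; the sketch picks Sky because it is listed first.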

10) Describe the hypothesis space search in ID3 and contrast it with the Candidate-Elimination algorithm.

11) Illustrate Occam’s razor and relate its importance to the ID3 algorithm.

12) Discuss the effect of reduced-error pruning in decision tree learning.

13) What are the capabilities and limitations of the ID3 algorithm?

14) Explain rule post-pruning.

15) What is a perceptron? Explain.

16) Explain the characteristics of problems that are appropriate for ANN learning.

17) Discuss the representational power of perceptrons.

18) Explain the perceptron training rule and the delta rule.
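A minimal Python sketch contrasting the two rules. Both use the update w_i ← w_i + η(t − o)x_i; the perceptron training rule uses the thresholded output o ∈ {−1, +1}, while the (incremental) delta rule uses the unthresholded linear output. The AND data, learning rates, and epoch counts are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (inputs, target in {-1, +1})


def linear(w: List[float], x: Sequence[float]) -> float:
    # w[0] is the bias weight w0, paired with a constant input x0 = 1.
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


def perceptron_output(w: List[float], x: Sequence[float]) -> int:
    return 1 if linear(w, x) > 0 else -1


def train(examples: List[Example], eta: float, epochs: int, thresholded: bool) -> List[float]:
    """thresholded=True: perceptron training rule (o is the thresholded output).
    thresholded=False: incremental delta rule (o is the raw linear output)."""
    w = [0.0] * (len(examples[0][0]) + 1)
    for _ in range(epochs):
        for x, t in examples:
            o = perceptron_output(w, x) if thresholded else linear(w, x)
            w[0] += eta * (t - o)
            for i, xi in enumerate(x):
                w[i + 1] += eta * (t - o) * xi
    return w


AND = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
w_perceptron = train(AND, eta=0.1, epochs=20, thresholded=True)
w_delta = train(AND, eta=0.05, epochs=200, thresholded=False)
print([perceptron_output(w_perceptron, x) for x, _ in AND])  # [-1, -1, -1, 1]
print([perceptron_output(w_delta, x) for x, _ in AND])
```

The perceptron rule stops changing once every example is classified correctly (which is guaranteed for linearly separable data with a small enough η), whereas the delta rule keeps moving toward the least-squares weights and also converges when the data are not separable.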

19) Describe the derivation of the gradient descent rule.
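The key steps of the derivation for an unthresholded linear unit, using the usual notation (training set D, target t_d, output o_d, input component x_id, learning rate η), can be summarized as:

$$
\begin{aligned}
E(\vec{w}) &= \tfrac{1}{2}\sum_{d \in D} (t_d - o_d)^2, \qquad o_d = \vec{w}\cdot\vec{x}_d \\
\frac{\partial E}{\partial w_i} &= \sum_{d \in D} (t_d - o_d)\,\frac{\partial}{\partial w_i}\bigl(t_d - \vec{w}\cdot\vec{x}_d\bigr) = -\sum_{d \in D} (t_d - o_d)\,x_{id} \\
\Delta w_i &= -\eta\,\frac{\partial E}{\partial w_i} = \eta \sum_{d \in D} (t_d - o_d)\,x_{id}
\end{aligned}
$$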

20) Write the gradient descent algorithm and explain it.
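A minimal Python sketch of batch gradient descent for a linear unit: each pass accumulates Δw_i = η Σ_d (t_d − o_d) x_id over the whole training set and only then updates the weights. The learning rate, epoch count, seed, and the noise-free data from t = 1 + 2x are assumptions chosen so the example converges.

```python
import random


def gradient_descent(examples, eta=0.05, epochs=500, seed=0):
    """Batch gradient descent for a linear unit o = w . x (x0 = 1 is the bias input)."""
    rng = random.Random(seed)
    n = len(examples[0][0]) + 1
    w = [rng.uniform(-0.05, 0.05) for _ in range(n)]  # small random initial weights
    for _ in range(epochs):
        delta_w = [0.0] * n  # reset the accumulated update
        for x, t in examples:
            xs = (1.0, *x)
            o = sum(wi * xi for wi, xi in zip(w, xs))
            for i, xi in enumerate(xs):
                delta_w[i] += eta * (t - o) * xi  # delta_w_i += eta (t - o) x_i
        w = [wi + dwi for wi, dwi in zip(w, delta_w)]  # apply once per pass over D
    return w


data = [((x,), 1 + 2 * x) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]
print(gradient_descent(data))  # close to [1.0, 2.0]
```

If η is too large the updates overshoot and diverge; the stochastic variant applies the update after every example instead of after the full pass.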

21) Give the steps of the backpropagation algorithm used to train multilayer networks, and explain them.
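A pure-Python sketch of stochastic-gradient backpropagation for a network with one hidden layer of sigmoid units, following the usual four steps per example (forward pass, output error terms, hidden error terms, weight updates). Network size, η, epochs, initial weight range, and seed are illustrative assumptions.

```python
import math
import random


def sigmoid(y):
    return 1.0 / (1.0 + math.exp(-y))


def backpropagation(examples, n_in, n_hidden, n_out, eta=0.5, epochs=5000, seed=1):
    """Stochastic-gradient backpropagation for a network with one hidden layer
    of sigmoid units; returns a function computing (inputs, hidden, outputs)."""
    rng = random.Random(seed)
    # Index 0 of every weight row is the bias weight (its input is fixed at 1).
    w_h = [[rng.uniform(-0.05, 0.05) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_o = [[rng.uniform(-0.05, 0.05) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(x):
        xs = [1.0, *x]
        h = [1.0] + [sigmoid(sum(w * v for w, v in zip(row, xs))) for row in w_h]
        o = [sigmoid(sum(w * v for w, v in zip(row, h))) for row in w_o]
        return xs, h, o

    for _ in range(epochs):
        for x, t in examples:
            xs, h, o = forward(x)  # 1. propagate the input forward
            # 2. output error terms: delta_k = o_k (1 - o_k) (t_k - o_k)
            d_o = [ok * (1 - ok) * (tk - ok) for ok, tk in zip(o, t)]
            # 3. hidden error terms: delta_h = h (1 - h) sum_k w_kh delta_k
            d_h = [h[j] * (1 - h[j]) * sum(w_o[k][j] * d_o[k] for k in range(n_out))
                   for j in range(1, n_hidden + 1)]
            # 4. weight updates: w_ji <- w_ji + eta * delta_j * x_ji
            for k in range(n_out):
                for j in range(n_hidden + 1):
                    w_o[k][j] += eta * d_o[k] * h[j]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    w_h[j][i] += eta * d_h[j] * xs[i]
    return forward


XOR = [((0, 0), (0,)), ((0, 1), (1,)), ((1, 0), (1,)), ((1, 1), (0,))]
net = backpropagation(XOR, n_in=2, n_hidden=3, n_out=1)
for x, t in XOR:
    print(x, t, round(net(x)[2][0], 3))
```

Training on XOR with small random initial weights usually converges but can stall in a local minimum; a different seed or more hidden units is the usual remedy.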

22) Briefly explain the effect of adding momentum to the weight update rule in the backpropagation algorithm.
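For reference, the momentum form of the backpropagation update is commonly written as follows, where n is the iteration index, δ_j the error term of unit j, x_ji the input from unit i to unit j, and α the momentum constant:

$$
\Delta w_{ji}(n) = \eta\,\delta_j\,x_{ji} + \alpha\,\Delta w_{ji}(n-1), \qquad 0 \le \alpha < 1
$$

The second term carries part of the previous step forward, which tends to keep the search moving across flat regions and small local minima and speeds convergence where the gradient direction stays consistent.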

23) Discuss the derivation of the backpropagation rule for learning in networks that form a directed acyclic graph, considering the different cases.

24) Explain the features of Bayesian learning methods.

25) Define Bayes’ theorem and the MAP hypothesis.
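In the usual notation (hypothesis space H, training data D), Bayes' theorem and the MAP hypothesis are:

$$
P(h \mid D) = \frac{P(D \mid h)\,P(h)}{P(D)}, \qquad
h_{MAP} \equiv \arg\max_{h \in H} P(h \mid D) = \arg\max_{h \in H} P(D \mid h)\,P(h)
$$

P(D) can be dropped in the last step because it does not depend on h.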

26) Explain the brute-force MAP hypothesis learner.
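A minimal Python sketch of the brute-force MAP learner: score every hypothesis by P(D|h)P(h), normalize by P(D), and return the best. The threshold hypothesis space, the data, the uniform prior, and the noise-free likelihood (1 if consistent, else 0) are illustrative assumptions; ties are broken by taking the first maximum.

```python
def brute_force_map(hypotheses, prior, likelihood, data):
    """Score every h by P(D|h) P(h); return h_MAP and the posteriors P(h|D)."""
    scores = {h: likelihood(data, h) * prior(h) for h in hypotheses}
    p_data = sum(scores.values())  # P(D) = sum over h of P(D|h) P(h)
    posterior = {h: s / p_data for h, s in scores.items()}
    return max(scores, key=scores.get), posterior


# Hypothetical hypothesis space: h_c(x) = (x >= c) for thresholds c = 0..5.
H = range(6)
D = [(1, False), (4, True)]  # noise-free (x, target) pairs


def consistent(data, c):
    # P(D|h) = 1 if h agrees with every example, else 0 (noise-free assumption).
    return 1.0 if all((x >= c) == t for x, t in data) else 0.0


h_map, post = brute_force_map(H, lambda c: 1 / len(H), consistent, D)
print(h_map, post)
```

Under these assumptions every consistent hypothesis (here c = 2, 3, 4) receives posterior 1/|VS| = 1/3 and every inconsistent one receives 0, so every consistent hypothesis is a MAP hypothesis.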

27) Explain the maximum likelihood and least-squared error hypotheses.
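Assuming each training value is d_i = f(x_i) + e_i with independent noise e_i drawn from a normal distribution with mean 0 and variance σ², taking logarithms shows that the maximum likelihood hypothesis is the one minimizing the sum of squared errors:

$$
h_{ML} = \arg\max_{h \in H} \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(d_i - h(x_i))^2}{2\sigma^2}}
= \arg\max_{h \in H} \sum_{i=1}^{m} -\frac{(d_i - h(x_i))^2}{2\sigma^2}
= \arg\min_{h \in H} \sum_{i=1}^{m} \bigl(d_i - h(x_i)\bigr)^2
$$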

28) Write a note on the maximum likelihood hypothesis for predicting probabilities.
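With training targets d_i ∈ {0, 1} and h(x_i) interpreted as the predicted probability that d_i = 1, the maximum likelihood hypothesis maximizes the (negative cross-entropy) quantity:

$$
h_{ML} = \arg\max_{h \in H} \sum_{i=1}^{m} d_i \ln h(x_i) + (1 - d_i)\ln\bigl(1 - h(x_i)\bigr)
$$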

29) What is the minimum description length (MDL) principle? Explain.
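In the usual formulation, with L_C(·) the description length under encoding C, the MDL principle and its link to the MAP hypothesis are:

$$
h_{MDL} = \arg\min_{h \in H} L_{C_1}(h) + L_{C_2}(D \mid h), \qquad
h_{MAP} = \arg\min_{h \in H} \bigl[-\log_2 P(D \mid h) - \log_2 P(h)\bigr]
$$

When C_1 and C_2 are optimal codes for hypotheses and for data given the hypothesis, the two lengths equal −log2 P(h) and −log2 P(D|h), so h_MDL = h_MAP.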

30) Discuss the Naïve Bayes Classifier.
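A minimal Python sketch of a categorical naive Bayes classifier, v_NB = argmax_v P(v) Π_i P(a_i|v), trained on the EnjoySport table from question 2. The Laplace smoothing (add 1 to each count, add the number of observed attribute values to the denominator) is an added assumption so that unseen values do not zero out the product; the query instance is hypothetical.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (attribute index, class) -> value counts
    domain = defaultdict(set)  # attribute index -> values seen in training
    for x, c in zip(rows, labels):
        for i, v in enumerate(x):
            value_counts[(i, c)][v] += 1
            domain[i].add(v)
    return class_counts, value_counts, domain


def classify(model, x):
    class_counts, value_counts, domain = model
    n = sum(class_counts.values())
    scores = {}
    for c, n_c in class_counts.items():
        p = n_c / n  # P(c)
        for i, v in enumerate(x):
            # Laplace-smoothed estimate of P(a_i = v | c); an added assumption.
            p *= (value_counts[(i, c)][v] + 1) / (n_c + len(domain[i]))
        scores[c] = p
    total = sum(scores.values())
    best = max(scores, key=scores.get)
    return best, {c: s / total for c, s in scores.items()}


rows = [("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
        ("Sunny", "Warm", "High", "Strong", "Warm", "Same"),
        ("Rain", "Cold", "High", "Strong", "Warm", "Change"),
        ("Sunny", "Warm", "High", "Strong", "Cool", "Change")]
labels = ["Yes", "Yes", "No", "Yes"]
model = train_naive_bayes(rows, labels)
print(classify(model, ("Rain", "Warm", "High", "Strong", "Cool", "Same")))
```

For this query the unnormalized Yes score (about 0.0173) exceeds the No score (about 0.0041), so the sketch predicts Yes with a normalized probability of roughly 0.81.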

31) Explain Bayesian belief networks and conditional independence with an example.
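The two definitions this question relies on can be stated compactly: a belief network factorizes the joint distribution using each variable's parents, and X is conditionally independent of Y given Z when knowing Y adds nothing once Z is known.

$$
P(y_1, \ldots, y_n) = \prod_{i=1}^{n} P\bigl(y_i \mid \mathrm{Parents}(Y_i)\bigr)
$$

$$
P(X = x \mid Y = y, Z = z) = P(X = x \mid Z = z) \quad \text{for all } x, y, z
$$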

32) Explain the EM algorithm.
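A minimal Python sketch of EM for the classic setting of estimating the means of k Gaussians with known, equal variance σ², each assumed to be chosen with equal probability. The E step computes the expected memberships E[z_ij]; the M step re-estimates each mean as a membership-weighted average. The synthetic data, initial means, and iteration count are assumptions.

```python
import math
import random


def em_means(xs, init, sigma=1.0, iters=100):
    """EM estimate of the means of k Gaussians with known, equal variance
    sigma**2, each assumed to be chosen with equal probability (k = len(init))."""
    mu = list(init)
    for _ in range(iters):
        # E step: E[z_ij] = p(x_i | mu_j) / sum_n p(x_i | mu_n)
        resp = []
        for x in xs:
            w = [math.exp(-(x - m) ** 2 / (2 * sigma ** 2)) for m in mu]
            total = sum(w)
            resp.append([wj / total for wj in w])
        # M step: mu_j <- sum_i E[z_ij] x_i / sum_i E[z_ij]
        mu = [sum(r[j] * x for r, x in zip(resp, xs)) / sum(r[j] for r in resp)
              for j in range(len(mu))]
    return mu


rng = random.Random(0)
data = [rng.gauss(-2.0, 1.0) for _ in range(200)] + [rng.gauss(3.0, 1.0) for _ in range(200)]
print(em_means(data, init=[0.0, 1.0]))
```

With well-separated clusters the estimates should settle near the generating means of −2 and 3; EM only guarantees convergence to a local maximum of the likelihood, so poor initial means can lead elsewhere.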

33) Discuss the derivation of the k-means algorithm.
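A minimal Python sketch of the k-means loop, which can be read as EM with hard (0/1) cluster memberships: assign each point to its nearest mean, then move each mean to the centroid of its points, until nothing changes. The points, k, and random seed are illustrative assumptions; an empty cluster simply keeps its old mean.

```python
import random


def k_means(points, k, iters=100, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initial means: k distinct data points
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest mean.
        clusters = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centers[c])))
            clusters[j].append(p)
        # Update step: each mean moves to the centroid of its cluster.
        new_centers = [tuple(sum(dim) / len(cl) for dim in zip(*cl)) if cl else centers[j]
                       for j, cl in enumerate(clusters)]
        if new_centers == centers:
            break  # assignments are stable
        centers = new_centers
    return centers


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
print(sorted(k_means(points, 2)))  # about (1.17, 1.17) and (8.5, 8.5)
```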

34) Define true error and sample error. What are they used for?
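In the usual notation (target function f, instance distribution 𝒟, sample S of n examples), the two errors are defined as follows, with δ(f(x), h(x)) = 1 if f(x) ≠ h(x) and 0 otherwise:

$$
\mathrm{error}_{\mathcal{D}}(h) \equiv \Pr_{x \in \mathcal{D}}\bigl[f(x) \neq h(x)\bigr], \qquad
\mathrm{error}_{S}(h) \equiv \frac{1}{n}\sum_{x \in S} \delta\bigl(f(x), h(x)\bigr)
$$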

35) What is the importance of the binomial and normal distributions?
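As background: if R counts the misclassifications of h on n independently drawn test examples, each misclassified with probability p = error_D(h), then R follows a binomial distribution, and error_S(h) = R/n.

$$
\Pr(R = r) = \frac{n!}{r!\,(n - r)!}\,p^{r}(1 - p)^{n - r}, \qquad E[R] = np, \qquad \mathrm{Var}(R) = np(1 - p)
$$

For large n (a common rule of thumb is n ≥ 30, or np(1 − p) ≥ 5) this binomial is well approximated by a normal distribution, which is what makes the standard confidence intervals for error_D(h) possible.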

36) Define the mean, variance, and standard deviation of a random variable.
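For a discrete random variable Y taking values y_1, …, y_n, the three quantities are:

$$
E[Y] = \sum_{i=1}^{n} y_i \Pr(Y = y_i), \qquad
\mathrm{Var}(Y) = E\bigl[(Y - E[Y])^2\bigr], \qquad
\sigma_Y = \sqrt{\mathrm{Var}(Y)}
$$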

37) Write a note on confidence intervals.
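A small Python sketch of the approximate N% confidence interval for error_D(h), error_S(h) ± z_N √(error_S(h)(1 − error_S(h))/n), using the normal approximation (reasonable for roughly n ≥ 30). The z_N table holds standard two-sided values; the 12-errors-in-40 example is illustrative.

```python
import math

# Two-sided z_N values for common confidence levels N.
Z = {0.50: 0.67, 0.68: 1.00, 0.80: 1.28, 0.90: 1.64, 0.95: 1.96, 0.98: 2.33, 0.99: 2.58}


def error_confidence_interval(errors, n, confidence=0.95):
    e = errors / n  # sample error error_S(h)
    half_width = Z[confidence] * math.sqrt(e * (1 - e) / n)
    return e - half_width, e + half_width


print(error_confidence_interval(12, 40))  # approximately (0.158, 0.442)
```

Here the sample error is 0.30 and the 95% half-width is 1.96 × √(0.3 × 0.7 / 40) ≈ 0.14.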

38) How can the difference in error between two hypotheses be estimated using error_D(h) and error_S(h)?
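A small Python sketch of the standard estimate: d̂ = error_S1(h1) − error_S2(h2), with standard deviation approximately √(e1(1 − e1)/n1 + e2(1 − e2)/n2), giving the interval d̂ ± z_N σ. The error rates and sample sizes below are hypothetical.

```python
import math


def error_difference_interval(e1, n1, e2, n2, z=1.96):
    """Estimate d = error_D(h1) - error_D(h2) from independent test samples."""
    d_hat = e1 - e2
    sigma = math.sqrt(e1 * (1 - e1) / n1 + e2 * (1 - e2) / n2)
    return d_hat, (d_hat - z * sigma, d_hat + z * sigma)


# Hypothetical numbers: h1 errs on 30 of 100 test examples, h2 on 20 of 100.
print(error_difference_interval(0.30, 100, 0.20, 100))
```

With these numbers the 95% interval is about 0.10 ± 0.12, which contains 0, so the observed difference is not significant at that level.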

39) Write a procedure to estimate the difference in error between two learning methods.
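A minimal Python sketch of the paired k-fold procedure: partition the data into k disjoint test folds, train both learners on the remaining data, record δ_i = error_Ti(h_A) − error_Ti(h_B), and report the mean δ̄ with its estimated standard deviation s_δ̄ = √(Σ(δ_i − δ̄)² / (k(k − 1))); an approximate interval is then δ̄ ± t_{N,k−1} s_δ̄. The two toy learners and the data set are hypothetical.

```python
import math
from statistics import mean


def paired_k_fold(data, learner_a, learner_b, k=10):
    """Return delta_bar (mean error of A minus error of B) and s_delta_bar."""
    folds = [data[i::k] for i in range(k)]
    deltas = []
    for i, test in enumerate(folds):
        train = [ex for j, fold in enumerate(folds) if j != i for ex in fold]
        h_a, h_b = learner_a(train), learner_b(train)

        def err(h):
            return sum(h(x) != y for x, y in test) / len(test)

        deltas.append(err(h_a) - err(h_b))
    d_bar = mean(deltas)
    s = math.sqrt(sum((d - d_bar) ** 2 for d in deltas) / (k * (k - 1)))
    return d_bar, s


def majority(train):
    # Hypothetical learner A: always predicts the most common training label.
    labels = [y for _, y in train]
    top = max(set(labels), key=labels.count)
    return lambda x: top


def threshold(train):
    # Hypothetical learner B: threshold midway between the classes.
    c = (max(x for x, y in train if not y) + min(x for x, y in train if y)) / 2
    return lambda x: x >= c


data = [(x, x >= 50) for x in range(100)]
print(paired_k_fold(data, majority, threshold, k=10))
```

A positive δ̄ means learner B made fewer errors on the held-out folds.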

40) Describe the k-nearest neighbor algorithm. Why is it called instance-based learning?
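A minimal Python sketch of k-nearest-neighbor classification with Euclidean distance and a majority vote (the data, k, and function name are illustrative). Training consists only of storing the examples; all the work is deferred to query time, which is why the method is called instance-based (or lazy) learning.

```python
import math
from collections import Counter


def knn_classify(train, query, k=3):
    """Majority label among the k stored examples closest to the query."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


train = [((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((3.0, 4.0), "B"),
         ((5.0, 7.0), "B"), ((3.5, 5.0), "B")]
print(knn_classify(train, (1.2, 1.4)))  # A
```

`math.dist` requires Python 3.8 or later; a distance-weighted variant would weight each vote by, for example, the inverse squared distance.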

41) Write a note on

a. Locally weighted linear regression

b. Radial basis functions

c. Case-based Reasoning
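For part (a), a minimal Python sketch of locally weighted linear regression in one input dimension: for each query x_q it fits y = w0 + w1·x by weighted least squares, weighting each training point with a Gaussian kernel K = exp(−(x − x_q)²/(2τ²)), and returns the fitted value at x_q. The kernel choice, bandwidth τ, closed-form solve (gradient descent is another common way to fit), and data are assumptions.

```python
import math


def lwr_predict(xs, ys, xq, tau=1.0):
    """Locally weighted linear regression prediction at query point xq."""
    k = [math.exp(-((x - xq) ** 2) / (2 * tau ** 2)) for x in xs]
    # Weighted normal equations: [s0 s1; s1 s2] [w0; w1] = [t0; t1]
    s0 = sum(k)
    s1 = sum(ki * x for ki, x in zip(k, xs))
    s2 = sum(ki * x * x for ki, x in zip(k, xs))
    t0 = sum(ki * y for ki, y in zip(k, ys))
    t1 = sum(ki * x * y for ki, x, y in zip(k, xs, ys))
    det = s0 * s2 - s1 * s1
    w1 = (s0 * t1 - s1 * t0) / det
    w0 = (t0 - w1 * s1) / s0
    return w0 + w1 * xq


xs = [i * 0.1 for i in range(63)]  # 0.0 .. 6.2
ys = [math.sin(x) for x in xs]
print(lwr_predict(xs, ys, 1.5, tau=0.3), math.sin(1.5))
```

A new local model is fitted for every query, so the method stays lazy like k-nearest neighbor; a smaller τ follows the data more closely at the cost of higher variance.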

42) Briefly explain the reinforcement learning method.

43) Write the Q-learning algorithm and explain it.
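A minimal Python sketch of tabular Q-learning for a deterministic world, using the update Q̂(s, a) ← r + γ max_a′ Q̂(s′, a′). The environment is a hypothetical five-state chain whose right end is an absorbing goal that pays reward 100 on entry; γ = 0.9, purely random exploration, and the episode count are also assumptions.

```python
import random

N_STATES, GOAL, GAMMA = 5, 4, 0.9
ACTIONS = (-1, +1)  # move left / move right along the chain


def step(s, a):
    s2 = min(max(s + a, 0), N_STATES - 1)  # walls at both ends
    return s2, (100.0 if s2 == GOAL else 0.0)


def q_learning(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s = rng.randrange(GOAL)  # start in a random non-goal state
        while s != GOAL:
            a = rng.choice(ACTIONS)  # pure random exploration
            s2, r = step(s, a)
            # Deterministic-world update: Q(s,a) <- r + gamma * max_a' Q(s',a')
            q[(s, a)] = r + GAMMA * max(q[(s2, a2)] for a2 in ACTIONS)
            s = s2
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)
```

After enough episodes the table should converge to Q̂(3, +1) = 100, Q̂(2, +1) = 90, Q̂(1, +1) = 81, and Q̂(0, +1) = 72.9, so the greedy policy chooses +1 (move right) in every state.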
