
Machine-Learning Component for Multi-Start Metaheuristics to Solve the Capacitated Vehicle Routing Problem

Preprint · May 2022

DOI: 10.13140/RG.2.2.30289.20327


Juan Pablo Mesaᵃ,∗, Alejandro Montoyaᵃ, Raúl Ramos-Pollanᵇ, Mauricio Toroᵃ

ᵃ School of Applied Sciences and Engineering, Universidad EAFIT, Carrera 49 N 7 Sur-50, Medellin, 05001, Antioquia, Colombia
ᵇ Universidad de Antioquia, Calle 67 N 53-108, Medellin, 05001, Antioquia, Colombia

Abstract

Multi-Start metaheuristics (MSM) are commonly used to solve vehicle routing problems (VRPs). These methods create different initial solutions and improve them through local-search. The goal of these methods is to deliver the best solution found. We introduce initial-solution classification (ISC) to predict whether a local-search algorithm should be applied to initial solutions in MSM. This leads to faster convergence of MSM and to higher-quality solutions when the amount of computation time is limited. In this work, we extract known features of a capacitated VRP (CVRP) solution and introduce additional features. With these features and a machine-learning classifier (random forest), we show that ISC significantly improves the performance of the greedy randomized adaptive search procedure (GRASP) over benchmark instances from the CVRP literature. To evaluate ISC's performance with different local-search algorithms, we implemented a local-search composed of classical neighborhoods from the literature and another local-search consisting only of a variation of Ruin-and-Recreate. In both cases, ISC significantly improves the quality of the solutions found in almost all the evaluated instances.

∗ Corresponding author

Email addresses: jmesalo@eafit.edu.co (Juan Pablo Mesa), jmonto36@eafit.edu.co (Alejandro Montoya), raul.ramos@udea.edu.co (Raúl Ramos-Pollan), mtorobe@eafit.edu.co (Mauricio Toro)

Preprint submitted to Computers & Operations Research May 3, 2022

Electronic copy available at: https://ssrn.com/abstract=4120102

Keywords: metaheuristics, vehicle routing problem, machine learning, classification, feature extraction, GRASP

1. Introduction

In vehicle routing problems (VRPs), recent research [1], [2] has demonstrated that structural properties of VRP solutions can be extracted to obtain valuable information. These features have been used in different ways in heuristics for VRP: from developing state-of-the-art metaheuristics, such as the Knowledge-Guided Local Search (KGLS) of Arnold and Sörensen [3], to discovering patterns in high-quality solutions [4]. In this paper, we investigate how these features can be used to improve the performance of multi-start metaheuristics (MSM) for VRP. We focus, in particular, on the capacitated VRP (CVRP).

CVRP is a variant of VRP that has been studied for more than six decades (since it was first proposed by Dantzig and Ramser [5]), and it is still a relevant problem. The objective of CVRP is to find a set of routes that minimizes the total travel cost of a fleet of vehicles that serves a set of customers, with every route starting and ending at a single depot. The vehicles are homogeneous, and their capacity is limited and cannot be exceeded. Each customer has a known demand and must be visited exactly once, and the distances between customers are Euclidean.
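The objective just described can be made concrete with a short evaluation routine. The Python sketch below is illustrative only: it assumes node 0 is the depot, that each route is a list of customer indices that implicitly starts and ends at the depot, and the helper names route_cost, solution_cost and is_feasible are hypothetical, not taken from the authors' implementation.

```python
import math


def route_cost(route, coords, depot=0):
    """Euclidean length of depot -> route[0] -> ... -> route[-1] -> depot."""
    path = [depot, *route, depot]
    return sum(math.dist(coords[a], coords[b]) for a, b in zip(path, path[1:]))


def solution_cost(routes, coords, depot=0):
    """Total travel cost of a CVRP solution (the quantity to minimize)."""
    return sum(route_cost(r, coords, depot) for r in routes)


def is_feasible(routes, demands, capacity, depot=0):
    """Every customer is visited exactly once and no route exceeds the capacity."""
    visited = sorted(c for r in routes for c in r)
    customers = [i for i in range(len(demands)) if i != depot]
    if visited != customers:
        return False
    return all(sum(demands[c] for c in r) <= capacity for r in routes)


# Tiny example: depot at the origin and three customers.
coords = [(0, 0), (0, 3), (4, 0), (4, 3)]
demands = [0, 2, 2, 3]
routes = [[1, 3], [2]]
print(solution_cost(routes, coords))             # 20.0
print(is_feasible(routes, demands, capacity=5))  # True
```

Keeping the depot implicit in each route makes the "start and end at a single depot" rule hold by construction, so only the visit-once and capacity constraints need explicit checks.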

CVRP is an NP-hard problem, which limits the ability of state-of-the-art exact algorithms to solve instances of CVRP optimally in a reasonable computing time [6]. Therefore, metaheuristics are used to solve large instances of CVRP because they can deliver high-quality solutions (i.e., solutions with a low total route cost) in a reasonable amount of time, at the expense of not guaranteeing optimality.

In recent years, there has been a trend to solve CVRP directly with Artificial Intelligence (AI), as in the works of Nazari et al. [7] and Kool et al. [8], or by combining metaheuristics with machine learning (ML) or deep learning [9]. This is possible because a large amount of data can be generated during the execution of different heuristics while solving the problem, and these data can be used by ML algorithms. ML may be employed with different objectives within metaheuristics, such as: (1) to improve the quality of the solutions, (2) to guide local-search, and (3) to reduce the computing time.

Of the different types of metaheuristics used to solve CVRP, MSM have the potential to integrate ML easily. MSM typically operate in the following manner. First, an initial solution to the problem is constructed. Second, this initial solution is improved by means of local-search. Third, once the solution cannot be further improved by local-search, the process starts again. Fourth, this process is repeated until some stopping criterion is met. Finally, the best solution found is returned as the final solution of the MSM.

Generally, constructing initial solutions does not consume a considerable amount of time compared to local-search, which consumes most of the computing time when solving CVRP. Usually, in MSM, local-search is applied to every initial solution that is constructed. But what if one could analyze these initial solutions and predict how much local-search will improve them? This would make better use of computing time by concentrating it on improving solutions in promising regions of the solution space. This research path, which aims to reduce the search space of heuristics for VRP, has recently been explored by Lucas et al. [2], but it remains open as a promising direction in the design of heuristics for VRP and its variants. The problem remains open because, in that work, the metaheuristic combined with ML did not outperform the metaheuristic alone.

To fill this research gap, we introduce a method that classifies initial solutions within MSM according to whether they would lead to high-quality solutions after local-search is applied to them. We name this method initial-solution classification (ISC). ISC efficiently predicts whether applying local-search to an initial solution will lead to a high-quality solution.

ISC consists of two phases that are widely used in ML: (1) training and (2) classification. The training phase extracts features (for instance, average route length and average route span) from initial solutions, applies local-search to these initial solutions, and trains an ML classifier to predict whether an initial solution will become a high-quality solution after local-search is applied to it. After the training phase has run for a given time, it is no longer executed, and the classification phase is performed instead. In this phase, new initial solutions are created, and the same features are extracted to predict whether local-search should be applied to them or whether they are not worth the computational effort. This decision corresponds to whether the ML classifier predicts a high-quality or a low-quality solution.

In this work, as a case study, we apply ISC to the Greedy Randomized Adaptive Search Procedure (GRASP) [10]; however, ISC can easily be replicated in other MSM to predict promising initial solutions using different local-search algorithms. In particular, we apply ISC with two existing local-search algorithms: (1) the variable neighborhood descent (VND) of Mladenovic and Hansen [11], and (2) a variation of Ruin-and-Recreate (R&R) [12]. We use random forest as the machine-learning classifier [13]. Nonetheless, ISC can also easily be used with other ML algorithms.

1.1. Contributions

The contributions of our work are the following:

• A new method, ISC, that learns to predict which initial solutions will lead to high-quality solutions after local-search. To the best of our knowledge, there are no studies that evaluate initial solutions for MSM.

• As ISC requires features to be used by an ML classifier, we employ both the features proposed by Arnold and Sörensen [1] and those introduced by Lucas et al. [2]. Additionally, we introduce new features.

• Finally, we carry out experiments to measure the performance of the integration of ISC into GRASP metaheuristics with two different local-search algorithms.

The objective of this work is not to propose a method that competes with the state-of-the-art metaheuristics for CVRP; instead, we introduce a method that improves existing MSM for CVRP.

1.2. Structure of the paper

The rest of this paper is structured as follows. In Section 2, we discuss the state-of-the-art algorithms for CVRP and other works related to ML methods applied to CVRP. In Section 3, we present and explain the ISC method. In Section 4, we present the experimental results and analyze the performance of the integration of ISC into GRASP to solve CVRP. Finally, Section 5 concludes our work and presents future-work directions.

2. Literature review

We divide this section into two parts. First, we give a brief explanation of the state-of-the-art metaheuristics for CVRP. We then review the methods that combine CVRP metaheuristics with ML and highlight the differences with our work. The methods that use only ML to solve routing problems, such as [7, 14, 15, 16, 8], are out of the scope of our research. Additionally, methods that use ML to recommend an algorithm or metaheuristic out of a portfolio of options, such as the works of Gutierrez et al. [17] or Jiang et al. [18], are also out of the scope of our research.

2.1. State-of-the-art metaheuristics for CVRP

We identified six metaheuristics that are considered state-of-the-art for CVRP. The hybrid Iterated Local Search with Set Partitioning of Subramanian et al. [19] is the only MSM that is advantageous with respect to previous MSM for VRP variants. Likewise, Maximo and Nascimento [20] introduced a hybrid iterated local-search metaheuristic that uses path-relinking strategies and an adaptive diversity control mechanism to escape local optima during the search. The Unified Hybrid Genetic Search of Vidal et al. [21] is a population-based adaptive genetic algorithm whose advanced diversity management methods give it an edge over previous genetic algorithms for several VRP variants. The Slack Induction by String Removal (SISR) method, introduced by Christiaens and Vanden Berghe [22], is a sophisticated, yet easily reproducible, ruin-and-recreate-based approach combined with a simulated-annealing metaheuristic [23]. SISR uses simple removal and insertion heuristics to achieve high-quality solutions.

Recently, Accorsi and Vigo [12] presented Fast Iterated-Local-Search Localized Optimization (FILO), a fast and scalable metaheuristic, specialized for CVRP and based on the iterated local-search paradigm. FILO uses several acceleration and pruning techniques, coupled with strategies to keep the optimization localized in promising regions of the search space, and it is able to deliver high-quality solutions faster than all the other methods presented here. Finally, the Knowledge-Guided Local Search (KGLS), proposed by Arnold and Sörensen [3], is a Guided Local Search enhanced by knowledge extracted from previous data mining.

2.2. Methods that combine CVRP metaheuristics with ML

The knowledge extracted from data analyses for KGLS comes from the previous work of Arnold and Sörensen, “What makes a good VRP solution?” [1]. Specifically, the authors found that solutions with longer edges and wider, less compact routes have lower quality than solutions with narrower routes and shorter edges between customers. This knowledge is used to construct the guiding strategy in Guided Local Search. The results and efficiency of KGLS demonstrate that combining local-search operators that have proven to work well with problem knowledge enables the creation of efficient heuristics for CVRP.

More recently, Arnold et al. [4] proposed the use of pattern mining as an online strategy within vehicle-routing metaheuristics to aid local-search. Their pattern injection local-search (PILS) investigated whether pattern mining (the discovery of frequently used patterns or structures in high-quality solutions) can similarly improve the performance of heuristic optimization. PILS consists of two algorithmic steps: (1) pattern collection and (2) pattern injection. Pattern collection is the step in which patterns (i.e., sequences of consecutive visits) that frequently occur in high-quality solutions are collected. Pattern injection then takes a subset of the collected patterns and tries to introduce them into a solution; to facilitate the injection, the edges of the solution that are adjacent to the nodes in the pattern are removed beforehand.

This pattern-mining approach is similar to the one proposed by Maia et al. [24]. The authors used a hybrid version of an MSM that incorporates a data-mining phase for the heterogeneous-fleet VRP. Frequent patterns are collected from a set of high-quality solutions, and these patterns are used as a starting point for the construction of initial solutions. This results in higher-quality initial solutions. Consequently, the authors found that this allowed them to reach better-quality solutions after local search.

A different approach consists of using reinforcement learning [25] to learn how to apply improvement heuristics (local-search) to initial solutions. Wu et al. [26] proposed a deep reinforcement-learning framework, based on self-attention, to learn improvement heuristics based on the 2-opt operator for the Traveling Salesman Problem and CVRP.

Along the same line, Gao et al. [27] used a graph-attention network to learn (1) the destroy operator, which removes patterns of customers from a solution, and (2) the repair operator, which chooses the insertion order of customers. Gao et al.'s network was applied within a Very Large-scale Neighborhood Search metaheuristic for CVRP and CVRP with time windows. Similarly, Hottung and Tierney [28] proposed a neural large neighborhood search for the split delivery vehicle routing problem and CVRP, in which the task of the repair operator is learned by a deep neural network trained via policy-gradient reinforcement learning [29]. Instead of using hand-crafted improvement heuristics, all these approaches aim to automate the generation of heuristics in a way that requires no optimization knowledge.

As mentioned earlier, the work of Arnold and Sörensen [1] enabled the extraction of features from VRP solutions for use in ML models. These features were exploited by Lucas et al. [2], who introduced some additional features and proposed a new metaheuristic called Feature-Guided Multiple Neighborhood Search (FG-MNS). In FG-MNS, solutions are represented as features, and a decision tree [30] defines promising areas for local-search exploration according to the features extracted from the solution.

FG-MNS is divided into two phases: (1) a learning phase and (2) an exploitation phase. In the learning phase, solutions are generated and improved with a multiple neighborhood search (MNS). Once there are enough solutions, a decision tree is trained. Finally, the exploitation phase uses the information from the decision tree to analyze a solution and alternate between applying the MNS and guiding the solution from a non-promising area to a promising one. Unfortunately, when MNS and FG-MNS are executed for the same fixed amount of time, MNS gives better results than FG-MNS.

Even though MNS delivers better results than FG-MNS, the method proposed by Lucas et al. [2] is a promising research direction at the intersection of metaheuristics and ML. In this work, we chose to follow the direction of Arnold and Sörensen [3] and Lucas et al. [2], while focusing on improving MSM for CVRP. Our research differs from these two works in that we focus on analyzing initial solutions and predicting their improvement potential, instead of directly modifying the local-search algorithms. Unlike Arnold et al. [4] and Maia et al. [24], we extract features from VRP solutions and use them within ML algorithms, instead of extracting recurring patterns from high-quality solutions and inserting them into new solutions.

3. Method

In this section, we describe ISC and how to integrate it within MSM. In the following sections, we use the notation in Table 1 for easy reference throughout the algorithms.

3.1. Initial-Solution Classification (ISC)

MSM normally follow a pattern similar to the traditional GRASP proposed by Feo and Resende [10]. First, they construct an initial solution So, which is usually feasible but far from being a high-quality solution. Then, they try to obtain an improved solution S′ by applying local-search to So. When a local-search stopping criterion is met, local-search stops. Next, the best solution S∗ found overall is updated if the cost of S′ is lower. This process is repeated until the stopping criterion of the metaheuristic is met: the current execution time τ exceeds the time limit Γ. Finally, the best solution found, S∗, is returned as the final solution of the metaheuristic. This process corresponds to GRASP, described in Algorithm 1. In MSM of this kind, the same local-search algorithm might lead to different improved solutions from different initial solutions. Some of them will be high-quality solutions and others will be low-quality solutions, within the limits and capabilities of the metaheuristic.

Table 1: Notation.

N        Number of customers of an instance
Γ        GRASP time limit
θ        Training phase time limit
Ψ        Classification phase time limit
τ        Current execution time
A        Local-search algorithm
S        Solution
So       Initial solution
S′       Improved solution
Ŝ        Ruined solution
S∗       Best solution
B        Binary classifier
Dsol     Solutions dataset
Dtrain   Training dataset
F        Features of a solution
L        Class label
P        Promising solution
U        Unpromising solution
cv       Improved cost value
T        Temperature
ω        Shaking parameter
∆f       Number of core optimization iterations of FILO0
H        Neighborhood

Algorithm 1: Greedy Randomized Adaptive Search Procedure (GRASP). Adapted from [10].

Input: Γ
cost_best ← ∞
while τ ≤ Γ do
    So ← randomized_construction()
    S′ ← A(So)
    if cost(S′) ≤ cost_best then
        cost_best ← cost(S′)
        S∗ ← S′
return S∗
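Algorithm 1 can be transcribed almost line by line. The following is a minimal Python sketch, assuming that the randomized construction, the local-search algorithm A and the cost function are passed in as plain functions, and that τ is wall-clock time measured from the start of the run; the function name grasp and its parameters are hypothetical, not the authors' implementation.

```python
import random
import time


def grasp(construct, local_search, cost, time_limit, rng=None):
    """Multi-start loop of Algorithm 1; time_limit plays the role of Γ (seconds)."""
    rng = rng or random.Random()
    start = time.monotonic()
    best, best_cost = None, float("inf")
    while time.monotonic() - start <= time_limit:  # τ ≤ Γ
        s0 = construct(rng)         # So ← randomized_construction()
        s1 = local_search(s0)       # S′ ← A(So)
        c = cost(s1)
        if c <= best_cost:          # keep the best improved solution S∗
            best, best_cost = s1, c
    return best, best_cost


# Toy usage: "solutions" are integers, local search halves them, cost = value.
best, best_cost = grasp(
    construct=lambda rng: rng.randint(0, 100),
    local_search=lambda s: s // 2,
    cost=lambda s: s,
    time_limit=0.05,
    rng=random.Random(42),
)
print(best, best_cost)
```

Every iteration pays for a full local-search call, which is exactly the cost that ISC tries to avoid for unpromising initial solutions.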

The structural differences among initial solutions can be meaningful, and this is what ISC aims to exploit. Instead of applying local-search to all initial solutions, ISC improves MSM by analyzing the features F of each initial solution So and making predictions using ML. If ISC predicts that applying the local-search algorithm A to the initial solution So will lead to a good-quality solution, ISC classifies So as a promising solution P; otherwise, it classifies So as an unpromising solution U. ISC then applies local-search only if the initial solution So is predicted to be a promising solution P. The computing time saved in this way can be used to potentially find better solutions faster.

Arnold and Sörensen [1] created features that represent a VRP solution, such as the average width of routes or the number of route intersections. Some additional features were introduced by Lucas et al. [2]. ISC uses most of the features from these two works, and we added some new features that help to improve the accuracy of the classifier. All features F are presented in Table 2. The features from [1] are marked with (A), the features from [2] are marked with (L), and the features without a mark are proposed by us.

Table 2: Features used in ISC to characterize and evaluate a CVRP solution. The explanation and formulas of the different features, such as depth, width and span, can be found in [1]. A check mark (✓) means that the statistic is computed for the quantity in that row; (A) and (L) mark features from [1] and [2], respectively.

Quantity | Average | Standard deviation | Minimum | Maximum
Number of intersections per customer | ✓ (A) | ✓ | - | -
Longest distance between two connected customers, per route | ✓ (A) | ✓ | ✓ | ✓
Shortest distance between two connected customers, per route | ✓ | ✓ | ✓ | ✓
Distance between the depot and directly-connected customers | ✓ (A) | - | ✓ (shortest) | ✓ (longest)
Distance between routes (their centers of gravity) | ✓ (A) | - | - | -
Width of each route | ✓ (A) | ✓ (L) | ✓ | ✓
Span of each route, in radians | ✓ (A) | ✓ (L) | ✓ | ✓
Compactness of each route, measured by width | ✓ (A) | - | ✓ | ✓
Compactness of each route, measured in radians | ✓ (A) | - | ✓ | ✓
Depth of each route | ✓ (A) | ✓ (L) | ✓ | ✓
Number of customers per route | ✓ (A) | ✓ | - | -
Demand of the farthest customer of each route | ✓ (L) | ✓ (L) | - | -
Demand of the first and last customers of each route | ✓ (L) | ✓ | - | -
Degree of neighborhood of each route | ✓ (L) | ✓ | - | -
Degree of neighborhood of each customer | ✓ | ✓ | - | -
Length of each route | ✓ | ✓ (L) | ✓ | ✓
Length of the longest interior edge of each route divided by the length of the route | ✓ (L) | - | - | -
Length of the shortest interior edge of each route divided by the length of the route | ✓ | - | - | -
Area of each route | ✓ | - | ✓ (smallest) | ✓ (largest)
Sum of the lengths of all routes | single value, no summary statistics
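To illustrate how a few of the simpler Table 2 entries could be computed, the sketch below derives route-length statistics, the longest interior edge per route, customers per route, the depot-to-customer distances and the total length. It assumes node 0 is the depot and routes are lists of customer indices; it uses the population standard deviation, counts both depot edges of every route (so a single-customer route contributes its customer twice), and assigns 0 to the longest interior edge of a single-customer route. These choices and the feature names are ours, and the exact definitions in [1] and [2] may differ.

```python
import math
import statistics


def _summary(name, values):
    """Average, standard deviation, minimum and maximum of one quantity."""
    return {
        f"avg_{name}": statistics.fmean(values),
        f"std_{name}": statistics.pstdev(values),
        f"min_{name}": min(values),
        f"max_{name}": max(values),
    }


def extract_features(routes, coords, depot=0):
    lengths, longest_edge, sizes, depot_edges = [], [], [], []
    for route in routes:
        path = [depot, *route, depot]
        edges = [math.dist(coords[a], coords[b]) for a, b in zip(path, path[1:])]
        lengths.append(sum(edges))
        interior = edges[1:-1]                  # customer-to-customer edges only
        longest_edge.append(max(interior, default=0.0))
        sizes.append(len(route))
        depot_edges += [edges[0], edges[-1]]    # depot to first and last customer
    features = {}
    features.update(_summary("route_length", lengths))
    features.update(_summary("longest_edge", longest_edge))
    features["avg_customers_per_route"] = statistics.fmean(sizes)
    features["std_customers_per_route"] = statistics.pstdev(sizes)
    features["avg_depot_distance"] = statistics.fmean(depot_edges)
    features["min_depot_distance"] = min(depot_edges)
    features["max_depot_distance"] = max(depot_edges)
    features["total_length"] = sum(lengths)
    return features


coords = [(0, 0), (0, 3), (4, 0), (4, 3)]
features = extract_features([[1, 3], [2]], coords)
print(features["avg_route_length"], features["total_length"])  # 10.0 20.0
```

All features are cheap to compute compared with a local-search run, which is what makes evaluating every initial solution affordable.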

In what follows, we describe the two phases that comprise ISC: (1) the training phase and (2) the classification phase. We also explain how to integrate ISC into an MSM to solve CVRP.

3.1.1. Training phase of ISC

The training phase extracts features F from initial solutions So and trains an ML classifier B to predict the quality of the solutions S′ obtained after applying local-search. This process is described in Algorithm 2.

After a feasible initial solution So is created, the features mentioned previously are extracted from it and stored in a solutions dataset Dsol. Then, a local-search algorithm A is applied to the initial solution So, and the cost of the improved solution S′, cost(S′), which we call the improved cost value (cv), is also stored in Dsol. This process is repeated until a time limit θ is reached. When the cost of an improved solution S′ is lower than the cost of the best solution S∗, the best solution S∗ is updated.

The algorithm then proceeds as follows. The improved cost values in the solutions dataset Dsol are used to label the solutions as promising (P) or unpromising (U). A percentage λp of the solutions with the lowest cv are labeled P. Additionally, a percentage λu of the solutions with the highest cv are labeled U. The remaining solutions, which are neither among the best nor among the worst, remain unlabeled and are discarded. The cv of the solutions is also discarded. The new dataset, comprising only the labeled promising and unpromising solutions, is the training dataset Dtrain. Next, the binary classifier B is trained with the data from Dtrain. In this work, we train B with a Random-Forest algorithm as shown in Algorithm 7. Finally, the trained classifier B is returned, along with the best solution found, S∗.
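The labeling step can be sketched as follows. This minimal Python version assumes that Dsol is a list of (features, cv) pairs, that λp and λu are given as fractions in [0, 1], and that class sizes are rounded down; the rounding rule and the function signature are our assumptions, and the values of λp and λu used in this work are not given here.

```python
def label_solutions(d_sol, lambda_p, lambda_u):
    """Build Dtrain from Dsol = [(features, cv), ...].

    The fraction lambda_p with the lowest cv is labeled "P", the fraction
    lambda_u with the highest cv is labeled "U", the rest is discarded,
    and cv itself is dropped from the returned rows.
    """
    if lambda_p + lambda_u > 1:
        raise ValueError("lambda_p + lambda_u must not exceed 1")
    ranked = sorted(d_sol, key=lambda row: row[1])     # ascending improved cost
    n = len(ranked)
    n_p, n_u = int(n * lambda_p), int(n * lambda_u)    # rounded down
    promising = [(features, "P") for features, _ in ranked[:n_p]]
    unpromising = [(features, "U") for features, _ in ranked[n - n_u:]]
    return promising + unpromising


d_sol = [({"x": i}, cv) for i, cv in enumerate([50.0, 10.0, 30.0, 20.0])]
print(label_solutions(d_sol, 0.25, 0.25))  # [({'x': 1}, 'P'), ({'x': 0}, 'U')]
```

Discarding the middle of the ranking leaves a gap between the two classes, which makes the binary classification problem easier to learn at the price of using fewer training rows.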

Algorithm 2: ISC’s Training phase.

Input: θ, B
Dsol = ∅, Dtrain = ∅
cost_best ← ∞
while τ ≤ θ do
    So ← randomized_construction()
    Dsol ← extract_features(So)
    S′ ← A(So)
    Dsol ← store_cost(S′)
    if cost(S′) ≤ cost_best then
        cost_best ← cost(S′)
        S∗ ← S′
Dtrain ← label_solutions(Dsol)
B ← train_classifier(B, Dtrain)
return B, S∗
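A minimal Python transcription of Algorithm 2 is sketched below. It assumes that every component (construction, local-search, cost, feature extraction, labeling and classifier training) is supplied as a function, and it measures θ in wall-clock seconds from the start of the phase; the function name and all toy components in the usage example are hypothetical.

```python
import random
import time


def isc_training_phase(construct, local_search, cost, extract_features,
                       label_solutions, train_classifier, theta, rng):
    """Algorithm 2: collect (features, cv) pairs for θ seconds, then train B."""
    d_sol = []
    best, best_cost = None, float("inf")
    start = time.monotonic()
    while time.monotonic() - start <= theta:       # τ ≤ θ
        s0 = construct(rng)
        features = extract_features(s0)            # features of So
        s1 = local_search(s0)                      # S′ ← A(So)
        cv = cost(s1)                              # improved cost value
        d_sol.append((features, cv))
        if cv <= best_cost:
            best, best_cost = s1, cv
    d_train = label_solutions(d_sol)
    classifier = train_classifier(d_train)
    return classifier, best, best_cost


# Toy usage with stand-in components (all hypothetical).
clf, best, best_cost = isc_training_phase(
    construct=lambda rng: rng.randint(0, 100),
    local_search=lambda s: s // 2,
    cost=lambda s: s,
    extract_features=lambda s: {"value": s},
    label_solutions=lambda d: [(f, "P" if cv < 25 else "U") for f, cv in d],
    train_classifier=lambda d_train: len(d_train),  # placeholder "model"
    theta=0.05,
    rng=random.Random(0),
)
```

Features are extracted from So before local-search is applied, because at classification time only the initial solution is available to the classifier.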


3.1.2. Classification phase of ISC

The classification phase starts when the training phase ends. This phase is described in Algorithm 3. It consists of extracting features from initial solutions So and using the trained binary classifier B to classify the solutions (i.e., to predict their class label L) as promising P or unpromising U. If a solution is classified as promising, a local-search algorithm A is applied to it. Otherwise, the algorithm skips the solution without applying local-search to it. When the cost of an improved solution S′ is lower than the cost of the best solution S∗, the best solution S∗ is updated. This process is repeated until the time limit for the classification phase, Ψ, is reached. Finally, the best solution found is returned as S∗.

Algorithm 3: ISC’s Classification phase.

Input: Ψ, B, S∗
cost_best ← cost(S∗)
while τ ≤ Ψ do
    So ← randomized_construction()
    F ← extract_features(So)
    L ← classify(B, F)
    if L is P then
        S′ ← A(So)
        if cost(S′) ≤ cost_best then
            cost_best ← cost(S′)
            S∗ ← S′
return S∗
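Algorithm 3 can be sketched in the same style. The Python version below assumes a function-based interface and a phase clock that starts at zero, so that the loop runs for Ψ seconds; it also counts the skipped initial solutions, an addition of ours that makes the saved local-search calls visible. All names and toy components are hypothetical.

```python
import random
import time


def isc_classification_phase(construct, local_search, cost, extract_features,
                             classify, best, psi, rng):
    """Algorithm 3: only initial solutions predicted as promising are improved."""
    best_cost = cost(best)
    skipped = 0
    start = time.monotonic()
    while time.monotonic() - start <= psi:         # τ ≤ Ψ (phase clock)
        s0 = construct(rng)
        if classify(extract_features(s0)) != "P":
            skipped += 1                           # unpromising: no local-search
            continue
        s1 = local_search(s0)                      # S′ ← A(So)
        if cost(s1) <= best_cost:
            best, best_cost = s1, cost(s1)
    return best, best_cost, skipped


best, best_cost, skipped = isc_classification_phase(
    construct=lambda rng: rng.randint(0, 100),
    local_search=lambda s: s // 2,
    cost=lambda s: s,
    extract_features=lambda s: {"value": s},
    classify=lambda f: "P" if f["value"] < 30 else "U",  # stand-in for B
    best=40,                                             # S∗ from training
    psi=0.05,
    rng=random.Random(1),
)
```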

We could have chosen any of the different MSM that are commonly used for CVRP, but we chose to work with GRASP for its simplicity. Regarding the selection of the binary classifier of ISC, we opted for a Random-Forest Classifier [13] for the following reasons:

• Features do not have to be rescaled to similar orders of magnitude.

• Training can be done on a single central-processing-unit core in a reasonable amount of time.

• It fits multiple bagged decision-tree classifiers on different sub-samples of the training dataset, which reduces the high variance that individual decision trees exhibit.
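For concreteness, a random-forest classifier B with these properties can be trained with scikit-learn's RandomForestClassifier. The sketch assumes the (features, label) rows produced by the labeling step, with features stored as a dict; n_estimators=100 and the seed are illustrative values, not the hyperparameters used in this work, and the helper names train_classifier and classify are hypothetical.

```python
from sklearn.ensemble import RandomForestClassifier


def train_classifier(d_train, n_estimators=100, seed=0):
    """Fit a random forest on raw (unscaled) features; labels are "P"/"U"."""
    names = sorted(d_train[0][0])                  # fixed feature order
    X = [[features[k] for k in names] for features, _ in d_train]
    y = [label for _, label in d_train]
    forest = RandomForestClassifier(
        n_estimators=n_estimators,   # number of bagged decision trees
        random_state=seed,
        n_jobs=1,                    # train on a single CPU core
    )
    forest.fit(X, y)
    return forest, names


def classify(model, features):
    forest, names = model
    return forest.predict([[features[k] for k in names]])[0]


# Features on very different scales are used as they are (no rescaling).
d_train = [({"avg_route_length": 10.0 + i, "total_length": 5000.0}, "P") for i in range(5)]
d_train += [({"avg_route_length": 100.0 + i, "total_length": 5000.0}, "U") for i in range(5)]
model = train_classifier(d_train)
print(classify(model, {"avg_route_length": 12.0, "total_length": 5000.0}))  # P
```

Tree splits compare a single feature against a threshold, which is why features of very different magnitudes can be mixed without rescaling.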


3.2. Integration of ISC into GRASP for CVRP

Incorporating the training phase and the classification phase into an MSM such as GRASP is straightforward: we replace the initial-solution creation step and the local-search step with the training phase and the classification phase of ISC, and we establish an individual time limit for each phase. The structure of the integration of ISC into GRASP is described in Algorithm 4.


Algorithm 4: Integration of ISC into GRASP for CVRP

Input: Γ, θ, B
// ISC’s Training phase
Dsol = ∅, Dtrain = ∅
cost_best ← ∞
while τ ≤ θ do
    So ← randomized_construction()
    Dsol ← extract_features(So)
    S′ ← A(So)
    Dsol ← store_cost(S′)
    if cost(S′) ≤ cost_best then
        cost_best ← cost(S′)
        S∗ ← S′
Dtrain ← label_solutions(Dsol)
B ← train_classifier(B, Dtrain)
// ISC’s Classification phase
Ψ = Γ − θ
while τ ≤ Ψ do
    So ← randomized_construction()
    F ← extract_features(So)
    L ← classify(B, F)
    if L is P then
        S′ ← A(So)
        if cost(S′) ≤ cost_best then
            cost_best ← cost(S′)
            S∗ ← S′
return S∗
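Algorithm 4 leaves open whether τ is reset at the start of the classification phase. The Python sketch below assumes a single clock τ measured from the start of the whole run, so the classification loop stops at τ = Γ, which gives it the remaining budget Ψ = Γ − θ; if τ is instead reset per phase, the loop test becomes τ ≤ Ψ exactly as written in Algorithm 4. The function isc_grasp and the toy components (including a threshold used as a stand-in model) are hypothetical.

```python
import random
import time


def isc_grasp(construct, local_search, cost, extract_features,
              label_solutions, train_classifier, classify,
              gamma, theta, rng):
    """Algorithm 4 with one clock τ measured from the start of the whole run."""
    start = time.monotonic()

    def tau():
        return time.monotonic() - start

    d_sol, best, best_cost = [], None, float("inf")

    def improve(s0):
        """Apply A to So, update S∗ if needed, and return cost(S′)."""
        nonlocal best, best_cost
        s1 = local_search(s0)
        c = cost(s1)
        if c <= best_cost:
            best, best_cost = s1, c
        return c

    # Training phase: improve every initial solution until τ reaches θ.
    while tau() <= theta:
        s0 = construct(rng)
        features = extract_features(s0)
        d_sol.append((features, improve(s0)))
    model = train_classifier(label_solutions(d_sol))

    # Classification phase: runs for the remaining budget Ψ = Γ − θ.
    while tau() <= gamma:
        s0 = construct(rng)
        if classify(model, extract_features(s0)) == "P":
            improve(s0)
    return best, best_cost


best, best_cost = isc_grasp(
    construct=lambda rng: rng.randint(0, 100),
    local_search=lambda s: s // 2,
    cost=lambda s: s,
    extract_features=lambda s: {"value": s},
    label_solutions=lambda d: [(f, "P" if cv < 25 else "U") for f, cv in d],
    train_classifier=lambda d_train: 50,           # stand-in model: a threshold
    classify=lambda model, f: "P" if f["value"] < model else "U",
    gamma=0.06,
    theta=0.02,
    rng=random.Random(7),
)
```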

In the GRASP algorithm for CVRP, we constructed the initial solutions using a randomized variation of the well-known parallel savings algorithm of Clarke and Wright [31]. The Clarke and Wright algorithm (C-W) is purely greedy and deterministic; therefore, we introduced a randomization similar to the one proposed by Hart and Shogan [32].

The C-W algorithm starts by creating an initial solution in which each customer is visited by an individual route, from the depot to the customer and back to the depot. Then, for each pair of customers, the saving that would result from visiting these two customers consecutively, before returning to the depot, is computed. Each pair of customers and its saving are stored in a savings list, which is sorted in decreasing order of the savings amount. Next, to add randomization, we create a restricted candidate list (RCL) with the highest savings. The algorithm then randomly selects a saving from the RCL and, if possible, merges the two routes that contain the pair of customers of that saving, decreasing the cost of the solution by the saving amount. After a saving is selected from the RCL, the RCL is updated. Merging is possible when the following three conditions are satisfied: (1) the customers are currently in different routes; (2) both customers are directly connected to the depot; and (3) the sum of the demands of the two routes is not larger than the vehicle capacity.
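A compact sketch of this randomized construction follows. It uses the classical Clarke and Wright saving s(i, j) = d(0, i) + d(0, j) − d(i, j) and assumes a fixed-size RCL made of the rcl_size largest remaining savings; rcl_size is a hypothetical parameter, since the RCL size or threshold rule used in this work is not given here. Routes are reversed when needed so that the two merged customers meet, which is valid because Euclidean distances are symmetric. With rcl_size=1 the procedure reduces to the deterministic parallel savings algorithm.

```python
import math
import random


def randomized_savings(coords, demands, capacity, rcl_size=3, rng=None, depot=0):
    """Parallel Clarke-Wright savings with a fixed-size restricted candidate list."""
    rng = rng or random.Random()

    def d(a, b):
        return math.dist(coords[a], coords[b])

    customers = [i for i in range(len(coords)) if i != depot]
    routes = {c: [c] for c in customers}   # start: one route per customer
    route_of = {c: c for c in customers}   # customer -> id of its route
    load = {c: demands[c] for c in customers}
    # s(i, j) = d(0, i) + d(0, j) - d(i, j), sorted in decreasing order
    savings = sorted(
        ((d(depot, i) + d(depot, j) - d(i, j), i, j)
         for k, i in enumerate(customers) for j in customers[k + 1:]),
        reverse=True,
    )
    while savings:
        # The RCL is the first rcl_size entries; removing the chosen entry
        # lets the next-best saving enter the RCL.
        _, i, j = savings.pop(rng.randrange(min(rcl_size, len(savings))))
        ri, rj = route_of[i], route_of[j]
        if ri == rj or load[ri] + load[rj] > capacity:        # conditions (1), (3)
            continue
        a, b = routes[ri], routes[rj]
        if i not in (a[0], a[-1]) or j not in (b[0], b[-1]):  # condition (2)
            continue
        if a[-1] != i:
            a.reverse()            # make i the last customer of route a
        if b[0] != j:
            b.reverse()            # make j the first customer of route b
        routes[ri] = a + b
        load[ri] += load.pop(rj)
        del routes[rj]
        for c in b:
            route_of[c] = ri
    return list(routes.values())


coords = [(0, 0), (10, 0), (11, 0), (0, 10)]
demands = [0, 1, 1, 1]
print(randomized_savings(coords, demands, capacity=2, rcl_size=1))  # [[1, 2], [3]]
```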

To demonstrate the improvement capacity and generality of ISC, we study its application with two different local-search algorithms (A1 and A2). The first one is variable neighborhood descent (VND), a deterministic variant of the Variable Neighborhood Search proposed by Mladenovic and Hansen [11]. The other local-search algorithm is FILO0 [12] by Accorsi and Vigo, a variation of the Ruin-and-Recreate method used in FILO [12], which is itself inspired by the Slack Induction by String Removal (SISR) algorithm of Christiaens and Vanden Berghe [22]. In both cases, we use a Random-Forest classifier [13]. In what follows, we explain the VND local-search, the FILO0 local-search and the random forest classifier.

3.3. VND local-search

The VND algorithm is presented in Algorithm 5. It proceeds as follows. First, the order of the neighborhoods Hn is established, and the first neighborhood H1 is selected to start the exploration. Then, the current neighborhood Hn is explored completely. If it contains a move that improves the current solution S, the best such move is applied and the exploration restarts from the first neighborhood H1. Otherwise, the next neighborhood is selected to be explored. When no improvement is found after exploring all neighborhoods, the solution S is returned.


We implemented four classical neighborhoods: SWAP (S), RELOCATE (R), 2-OPT (O), and 2-OPT* (O*). Both S and R are implemented as inter-route and intra-route neighborhoods. These four neighborhoods allow 4! = 24 possible orders for the VND, from which we selected four: R-S-O-O*, S-R-O*-O, O-S-R-O*, and O*-S-O-R. R-S-O-O* follows an increasing order of computational complexity, and the other three were selected randomly. We applied each of the four VNDs to each initial solution individually, because the order in which the neighborhoods are applied affects the solution quality. For ISC, we consider each VND as a different local-search algorithm; therefore, we trained a binary classifier B for each VND (i.e., four classifiers in our implementation).

Algorithm 5: VND. Adapted from [11]

Input: So
1 Set the order of neighborhood structures H1, H2, ..., HL
2 S ← So
3 n ← 1
4 while n ≤ L do
5     Find the best neighbor S′ of S in the neighborhood Hn(S)
6     if cost(S′) < cost(S) then
7         S ← S′
8         n ← 1
9     else
10        n ← n + 1
11 return S
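Algorithm 5 translates almost line by line into Python. The sketch below is illustrative only: it assumes each neighborhood is a function returning the best neighbor of a solution, and, to stay short, it demonstrates the loop on a single hypothetical route with only intra-route SWAP and 2-OPT moves rather than the full inter-route CVRP neighborhoods used in this work. The coordinates in `PTS` and all function names are made up for the example.

```python
import itertools
import math


def vnd(solution, neighborhoods, cost):
    """Variable neighborhood descent with best improvement (Algorithm 5).

    `neighborhoods` is the ordered list H_1..H_L; each entry maps a solution to
    its best neighbor, or None if the neighborhood is empty.
    """
    s = solution
    n = 0  # 0-based index, i.e. n = 1 in Algorithm 5
    while n < len(neighborhoods):
        candidate = neighborhoods[n](s)
        if candidate is not None and cost(candidate) < cost(s):
            s, n = candidate, 0   # improvement: restart from H_1
        else:
            n += 1                # no improvement: move to the next neighborhood
    return s


# Hypothetical single-route example: the depot (0) is implicit at both ends.
PTS = {0: (0, 0), 1: (0, 2), 2: (2, 2), 3: (2, 0), 4: (1, 3)}


def route_cost(route):
    path = [0] + list(route) + [0]
    return sum(math.dist(PTS[a], PTS[b]) for a, b in zip(path, path[1:]))


def best_swap(route):
    """Intra-route SWAP: exchange the positions of two customers."""
    best = None
    for i, j in itertools.combinations(range(len(route)), 2):
        cand = list(route)
        cand[i], cand[j] = cand[j], cand[i]
        if best is None or route_cost(cand) < route_cost(best):
            best = cand
    return best


def best_two_opt(route):
    """Intra-route 2-OPT: reverse the segment route[i..j]."""
    best = None
    for i, j in itertools.combinations(range(len(route)), 2):
        cand = route[:i] + route[i:j + 1][::-1] + route[j + 1:]
        if best is None or route_cost(cand) < route_cost(best):
            best = cand
    return best


result = vnd([2, 1, 3, 4], [best_swap, best_two_opt], route_cost)
```

Because `n` is reset to the first neighborhood after every improvement, the returned solution is a local optimum with respect to every neighborhood in the list.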


3.4. FILO0 local-search

Algorithm 6: FILO0. Adapted from [12]

Input: So, ∆f
1 w ← (w0, w1, ..., wc), wi ← wbase ∀i ∈ N
2 S ← So
3 S∗ ← S, T ← T0
4 for it ← 1 to ∆f do
5     Ŝ, i′ ← Ruin(S, w)
6     S′ ← Recreate(Ŝ)
7     if cost(S′) < cost(S∗) then
8         S∗ ← S′
9     w ← UpdateShakingParameters(w, S′, S, i′)
10    if AcceptCriterion(S, S′, T) then
11        S ← S′
12    T ← DecreaseTemperature(T)
13 return S∗

FILO0 is a simplified variant of FILO, a state-of-the-art metaheuristic for CVRP [12]. FILO0 is a simulated-annealing metaheuristic that uses a Ruin-&-Recreate local search, coupled with an update strategy that controls the intensity of the ruin procedure. On each iteration, the current feasible solution S is ruined by removing some customers from their routes. Then, each unrouted customer is greedily inserted into the best feasible position in the ruined solution Ŝ. Once all customers are routed, a new feasible solution S′ is obtained. When the cost of S′ is lower than the cost of the best solution S∗, the best solution S∗ is updated. The shaking parameters ω, which control the intensity of the ruin procedure, are updated taking into account the cost of the previous solution S, the cost of the new solution S′, and the previously executed ruin procedures. Finally, S′ replaces S if it satisfies a simulated-annealing acceptance criterion at temperature T, and T is decreased at the end of every iteration. FILO0 is presented in Algorithm 6.
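A simplified ruin-and-recreate step can be sketched as follows. It is an assumption-laden stand-in, not FILO0 itself: the ruin step removes k uniformly random customers instead of FILO's SISR-inspired string removals, k plays the role of the shaking intensity ω, customers are reinserted in the order in which they were removed, and the helper names are hypothetical. The recreate step follows the description above in that each unrouted customer goes to its cheapest feasible position; opening a new route when no existing route has enough residual capacity is our own assumption.

```python
import math
import random


def ruin(routes, k, rng):
    """Remove k random customers (a simple stand-in for FILO0's ruin step)."""
    customers = [c for r in routes for c in r]
    removed = rng.sample(customers, min(k, len(customers)))
    gone = set(removed)
    kept = [[c for c in r if c not in gone] for r in routes]
    return [r for r in kept if r], removed


def recreate(routes, removed, pts, demands, capacity):
    """Greedily insert each removed customer at its cheapest feasible position."""
    routes = [list(r) for r in routes]

    def d(a, b):
        return math.dist(pts[a], pts[b])

    for c in removed:
        best = None  # (insertion cost, route index, position)
        for ri, r in enumerate(routes):
            if sum(demands[x] for x in r) + demands[c] > capacity:
                continue  # inserting c would exceed the vehicle capacity
            path = [0] + r + [0]  # depot 0 at both ends
            for p in range(len(path) - 1):
                delta = d(path[p], c) + d(c, path[p + 1]) - d(path[p], path[p + 1])
                if best is None or delta < best[0]:
                    best = (delta, ri, p)
        if best is None:
            routes.append([c])  # no feasible position: open a new route
        else:
            _, ri, p = best
            routes[ri].insert(p, c)  # c goes between path[p] and path[p + 1]
    return routes
```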

To use either VND or FILO0 within the integration of ISC into GRASP, we replace the local-search algorithm on lines 6 and 21 of Algorithm 4 with Algorithm 5 (VND) or Algorithm 6 (FILO0). A similar replacement can be done within other MSM.


3.5. Random-Forest Classifier

The binary classifier B used in the integration of ISC into GRASP for CVRP is a Random-Forest classifier [13]. A Random-Forest is composed of a set of decision trees. A decision tree is an algorithm that recursively splits the dataset into sub-spaces by selecting the feature that best separates the data. At each step, the selected feature should reduce the heterogeneity of the data, according to a specific impurity measure, by splitting one of the sub-spaces. Each split is defined by a threshold on a single feature, that is, by an axis-aligned hyperplane. A new sample is assigned the majority class of the training samples in the sub-space (leaf) into which its features fall.

A single decision tree is likely to overfit the training data and might exhibit high variance when classifying new data [13]. Therefore, Breiman [13] proposed to combine multiple decision trees into a Random-Forest, in which each tree is trained with a random selection of the features of the data (in our implementation, at most fmax randomly selected features are considered at each split; see Table 3). For new data, the predicted class is the class predicted by the majority of the trees.
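To make the voting mechanism concrete, the following Python sketch builds a toy forest of depth-1 trees (decision stumps). It is a simplified illustration, not the Twigy implementation used in this work: every stump sees all training samples (as in ISC) but only a random subset of `max_features` features, splits on the threshold that minimizes the unweighted Gini impurity of its children, and the forest predicts by majority vote. The function names and the toy data are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Unweighted Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((m / n) ** 2 for m in Counter(labels).values()) if n else 0.0


def fit_stump(X, y, features):
    """Best single-threshold split over the given features (depth-1 CART tree)."""
    best = None  # (child impurity, feature, threshold, left label, right label)
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            g = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or g < best[0]:
                best = (g, f, t, Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    return best


def fit_forest(X, y, n_trees, max_features, seed=0):
    """Each stump is trained on all samples but only a random feature subset."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        stump = fit_stump(X, y, rng.sample(range(len(X[0])), max_features))
        if stump is not None:
            forest.append(stump)
    return forest


def predict(forest, x):
    """Majority vote of the individual stumps (forest must be non-empty)."""
    votes = Counter(left if x[f] <= t else right for _, f, t, left, right in forest)
    return votes.most_common(1)[0][0]
```

With `max_features` equal to the number of features, every stump picks the same best split; restricting the candidate features is what makes the trees differ.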

The Random-Forest algorithm is described in Algorithm 7. The decision trees implement a Classification and Regression Trees (CART) classifier [33]. The maximum depth of the trees dmax and the weight associated with each class ρ are among the most important parameters for ISC to work. We used the Gini index as the impurity measure to decide node splits [33]. Additionally, in ISC, all samples from the dataset are used to train each tree (i.e., no bootstrap sampling is applied).


Algorithm 7: Random-Forest. Adapted from [34]

Input: Dtrain, F, ρ, nc, dmax, ssmin, ismin, slmin
Training
1 Select randomly fmax features from the features in Dtrain
2 for ne trees do
3     for f ∈ fmax features do
4         a. Calculate the Gini impurity index G at each node d:
5
$$t_c = \sum_i \rho_i n_i, \qquad i_c = 1 - \sum_i \left(\frac{\rho_i n_i}{t_c}\right)^2, \qquad G(d) = \sum_c i_c \, \frac{t_c}{t_p}$$
          where tc is the weight of all observations in a potential child node c, ni is the number of observations of class i in c, and ρi is the class weight. The impurity of c is ic, and tp is the weight of all observations in the parent node (before the split).
6         b. Select the node d with the lowest impurity value G(d), subject to a minimum impurity value ismin and a minimum number of samples ssmin.
7         c. Split the samples from d into sub-nodes; each resulting sub-node must have at least slmin samples.
8         d. Repeat steps a, b and c to construct the tree e until it reaches the maximum depth dmax.
9     B ← e: add tree e to the Random-Forest B
Classification
10 Use the features F and the rules of each decision tree e ∈ B to predict the class
11 Calculate the number of votes for each predicted class
12 Take the class with the most votes as the final prediction L
13 return L
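The weighted impurity of step a can be checked numerically with the short function below, a direct transcription of the three formulas for tc, ic and G(d). The input format (one class-count dictionary per child node) and the function name are assumptions made for the illustration.

```python
def weighted_gini_split(children, rho):
    """G(d) for a candidate split of node d, following Algorithm 7, step a.

    children: one dict per child node c, mapping class i -> n_i (count in c).
    rho: dict mapping class i -> class weight rho_i.
    """
    # t_c = sum_i rho_i * n_i : weight of all observations in child c
    t = [sum(rho[i] * n for i, n in c.items()) for c in children]
    t_p = sum(t)  # weight of all observations in the parent node
    g = 0.0
    for c, t_c in zip(children, t):
        if t_c == 0:
            continue  # an empty child contributes nothing
        # i_c = 1 - sum_i (rho_i * n_i / t_c)^2 : weighted impurity of child c
        i_c = 1.0 - sum((rho[i] * n / t_c) ** 2 for i, n in c.items())
        g += i_c * t_c / t_p
    return g
```

For a split with children {P: 3, U: 1} and {U: 4} and unit weights, G(d) = 0.375 × 4/8 = 0.1875; raising the weight of class P to 2 changes it to 12/77 ≈ 0.156, so the class weights ρ directly change which split is preferred.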


In this section, we presented ISC, a method that uses ML to improve MSM by choosing which initial solutions should be improved with local-search. We introduced new features to describe a CVRP solution, which are complementary to the features proposed in [1, 2]. We integrated ISC with two MSM. The computational experiments and results of these integrations are presented in Section 4.

4. Computational Experiments

The computational experiments evaluate the benefits of ISC when it is integrated into an MSM, and how different instance characteristics affect its performance. To this end, we evaluated the algorithms on the X dataset proposed by Uchoa et al. [35]. This dataset contains 100 benchmark instances with a number of customers ranging from 100 to 1000 (small if fewer than 250, medium if at least 250 and fewer than 500, and large if 500 or more), different types of customer positioning (C = clustered, R = random, and RC = mixed), and different depot locations (C = central, E = eccentric, and R = random).

The metaheuristics GRASP-VND (G-VND) and GRASP-FILO0 (G-FILO0), along with their respective variants GRASP-VND-ISC (G-VND-ISC) and GRASP-FILO0-ISC (G-FILO0-ISC), are specified in Section 3. G-VND is a GRASP for CVRP that uses VND, and G-FILO0 is a GRASP for CVRP that uses FILO0. Variant G-VND-ISC is the integration of ISC into G-VND, and G-FILO0-ISC is the integration of ISC into G-FILO0.

All algorithms¹ were coded in C++ and compiled with the Intel oneAPI DPC++/C++ Compiler, using the Boost 1.78 library [36] and the Twigy library [37]. The experiments were run on an Intel Xeon Gold 6130 CPU at 2.10 GHz, with 64 GB of RAM, under the Rocky Linux 8.5 operating system. The experiments for G-VND and G-VND-ISC were run on four parallel cores, and the experiments for G-FILO0 and G-FILO0-ISC were run on a single core.

Because of the randomized nature of the GRASP algorithm, we executed each experiment 10 times with different random seeds, and we report the average gap (GAP) of the solutions found with respect to the best-known solution (BKS), computed as follows:

$$\mathrm{GAP} = \frac{100 \times (\mathrm{cost}(\mathrm{Solution}) - \mathrm{BKS})}{\mathrm{BKS}}$$
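As a small worked example of the GAP definition, the Python functions below compute the gap of one run and the average over several runs. The function names are illustrative. For instance, a run that ends 1% above the BKS of X-n101-k25 (27591, from Table A.5) has `gap(27591 * 1.01, 27591)` ≈ 1.0.

```python
def gap(cost, bks):
    """Percentage gap of a solution cost with respect to the best-known solution."""
    return 100.0 * (cost - bks) / bks


def average_gap(costs, bks):
    """Average GAP over independent runs (10 random seeds in the experiments)."""
    return sum(gap(c, bks) for c in costs) / len(costs)
```

Because the BKS is fixed for each instance and GAP is linear in the cost, averaging the gaps of the runs gives the same value as the gap of the average cost.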

¹ All algorithms are available at https://github.com/mesax1/ISC-CVRP


Table 3: Parameters of the different algorithms.

GRASP

N Number of customers of the instance.

Γ Execution time limit for GRASP in seconds.

|RCL| = 10 Size of the restricted candidate list.

FILO0

∆f = 100 ∗ N Number of core optimization iterations of FILO0.

T0 , Tf Initial and final simulated annealing temperature.

ωbase = |ln|C|| Initial shaking intensity of ruin procedure.

ILB = 0.375 Lower bound of the shaking factors.

IUB = 0.85 Upper bound of the shaking factors.

ISC

θ = 0.25 ∗ Γ Training time limit in seconds.

λp = 15% Percentage of the solutions with the lowest cv to be labeled as promising solutions.

λu = 50% Percentage of the solutions with the highest cv to be labeled as unpromising solutions.

Random Forest

slmin = 1 Minimum number of samples at a leaf node.

dmax = 3 Maximum depth to which a tree is grown.

ssmin = 2 Minimum number of samples for a node to be split.

fmax = 8 Maximum number of randomly selected features to be considered at each split.

ismin = 0.00 Minimal impurity value for a node to be considered for another split.

ne = 100 Number of decision tree estimators to use as classifiers.

To objectively assess the performance differences between two algorithms, Accorsi et al. [38] recently recommended using a one-tailed Wilcoxon signed-rank test for paired observations [39]. This test determines whether two sets of paired observations differ in a statistically significant way, or whether the two algorithms can be considered to provide equivalent results. The test is non-parametric, so it does not require the paired observations to follow a normal distribution. We followed this recommendation and evaluated the statistical significance of the performance difference between each pair of methods using a one-tailed Wilcoxon signed-rank test at a significance level of α = 0.025.
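For readers who want to reproduce the test, the following pure-Python sketch computes the one-tailed Wilcoxon signed-rank statistic with the large-sample normal approximation. How the paired observations are formed (for instance, per-instance average gaps of two methods) is our assumption, and the sketch omits the tie and continuity corrections that statistical packages usually apply; SciPy's `scipy.stats.wilcoxon` with `alternative='less'` is a more complete option. The function name is hypothetical.

```python
import math


def wilcoxon_one_tailed(x, y):
    """One-tailed Wilcoxon signed-rank test of H1: values in x tend to be smaller.

    Returns (W_plus, p_value) using the normal approximation without tie or
    continuity correction; zero differences are discarded.
    """
    d = [b - a for a, b in zip(x, y) if b != a]   # positive when x is better
    n = len(d)
    if n == 0:
        return 0.0, 1.0                           # no evidence either way
    # Rank |d| from 1 to n, giving tied absolute values their average rank.
    order = sorted(range(n), key=lambda k: abs(d[k]))
    ranks = [0.0] * n
    k = 0
    while k < n:
        m = k
        while m + 1 < n and abs(d[order[m + 1]]) == abs(d[order[k]]):
            m += 1
        for q in range(k, m + 1):
            ranks[order[q]] = (k + m) / 2 + 1     # average of ranks k+1..m+1
        k = m + 1
    w_plus = sum(r for r, dk in zip(ranks, d) if dk > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    return w_plus, 0.5 * math.erfc(z / math.sqrt(2))   # P(Z >= z)
```

The null hypothesis of equivalent results is rejected at α = 0.025 when the returned p-value is below 0.025.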

4.1. Parameters

The parameters used in the different algorithms of this work are summarized in Table 3. The values of some of them were taken from the literature (e.g., those of FILO0), while others were tuned based on the performance on the small instances of dataset X. For ease of notation, N represents the number of customers of each instance.


The randomized Clarke and Wright construction method, as previously mentioned, uses the well-known Clarke and Wright savings algorithm [31] with a slight variation to randomize the generated solutions. Instead of applying the savings strictly in sorted order to construct an initial solution (which would produce the same solution in every iteration), the savings are sorted and an RCL of size 10 is used to select which saving will be evaluated. This means that, at each step, the customers to be joined by the savings algorithm are selected at random out of the 10 best possible savings in the RCL.

The training dataset Dtrain used to train B is obtained from the solutions dataset Dsol as follows. The 15% of the solutions with the lowest improved cost value cv are labeled as promising solutions, and the 50% of the solutions with the highest cv are labeled as unpromising solutions. The remaining 35% of the solutions are left unlabeled and discarded. This gap between the two groups allows the classifier to better differentiate between promising and unpromising solutions. The resulting class imbalance requires adjusting the class weights [33] so that they are inversely proportional to the class frequencies in Dtrain. Therefore, the class weights ρ are:

$$\text{promising weight} = \frac{|D_{train}|}{2 \times |\text{promising solutions}|}$$

and

$$\text{unpromising weight} = \frac{|D_{train}|}{2 \times |\text{unpromising solutions}|}$$
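The labeling rule and the class weights can be sketched as follows. The sketch is illustrative: the function names, the rounding of 15% and 50% of |Dsol| to whole solutions, and the encoding of promising as 1 and unpromising as 0 are assumptions. The weight formula coincides with the "balanced" heuristic of scikit-learn's `class_weight='balanced'`, |Dtrain| / (number of classes × class size), with two classes.

```python
def label_solutions(improved_costs, lambda_p=0.15, lambda_u=0.50):
    """Label solutions by improved cost cv.

    The lambda_p fraction with the lowest cv is promising (1), the lambda_u
    fraction with the highest cv is unpromising (0), and the rest is discarded.
    Returns a list of (solution index, label) pairs forming D_train.
    """
    order = sorted(range(len(improved_costs)), key=improved_costs.__getitem__)
    n = len(order)
    n_p = round(lambda_p * n)
    n_u = round(lambda_u * n)
    promising = [(k, 1) for k in order[:n_p]]
    unpromising = [(k, 0) for k in order[n - n_u:]]
    return promising + unpromising


def class_weights(labels):
    """rho_c = |D_train| / (2 * |class c|): inversely proportional to frequency."""
    n = len(labels)
    return {c: n / (2 * labels.count(c)) for c in set(labels)}
```

With |Dsol| = 100, Dtrain keeps 65 solutions, and the weights become 65/30 ≈ 2.17 for promising and 0.65 for unpromising solutions.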

When VND is included in GRASP, the neighborhoods are explored exhaustively, and only the best improving move found is executed. This is typically called the best-improvement strategy.

GRASP’s time limit Γ, in seconds, for the execution of G-VND and G-VND-ISC is proportional to the size of the instance, as follows. For small instances, with fewer than 250 customers, Γ = N. For medium instances, with at least 250 and fewer than 500 customers, Γ = 3 ∗ N. Finally, for large instances, with 500 customers or more, Γ = 10 ∗ N. Similarly, for the execution of G-FILO0 and G-FILO0-ISC, Γ is also proportional to the size of the instance, but with lower values, as FILO0 is faster than VND on medium and large instances: Γ = N for small instances, Γ = 2 ∗ N for medium instances, and Γ = 3 ∗ N for large instances. The parameter θ is proportional to the Γ of every instance: for G-VND-ISC and G-FILO0-ISC, ISC’s training time limit is θ = 0.25 ∗ Γ.
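The time-limit rules above can be summarized in a few lines of Python; the function name and the string labels for the local-search method are hypothetical. As a consistency check with Appendix A, N = 250 gives Γ = 500 s (8.33 min) for G-FILO0 and Γ = 750 s (12.50 min) for G-VND, matching the times reported for X-n251-k28 in Table A.6.

```python
def time_limits(n_customers, local_search):
    """Return (Gamma, theta) in seconds for an instance with N customers.

    local_search is "VND" or "FILO0" (hypothetical labels for the two GRASPs).
    """
    if n_customers < 250:                              # small instances
        factor = 1
    elif n_customers < 500:                            # medium instances
        factor = 3 if local_search == "VND" else 2
    else:                                              # large instances
        factor = 10 if local_search == "VND" else 3
    gamma = factor * n_customers
    return gamma, 0.25 * gamma                         # theta = 0.25 * Gamma
```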

The parameters of FILO0 are taken directly from the work of Accorsi and Vigo [12]. The only exception is ∆f, which corresponds to ∆CO in [12]. In our case, the number of ruin-and-recreate iterations is proportional to the size of the instance; therefore, ∆f = 100 ∗ N. The initial temperature T0 and the final temperature Tf of the simulated annealing are proportional to the average cost of an arc in the instance. Specifically, T0 is equal to 0.1 times the average arc cost of the instance, and Tf is equal to 0.01 times T0.
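A minimal sketch of the temperature settings follows. Only T0 = 0.1 × (average arc cost) and Tf = 0.01 × T0 come from the text; the geometric cooling factor, which takes T0 to Tf in ∆f iterations, and the Metropolis acceptance rule are standard simulated-annealing choices assumed here for illustration and are not claimed to be FILO0's exact implementation. Function names are hypothetical.

```python
import math
import random


def sa_temperatures(avg_arc_cost, iterations):
    """Return (T0, Tf, cooling factor).

    T0 = 0.1 * average arc cost and Tf = 0.01 * T0; the per-iteration factor
    (an assumed geometric schedule) takes T0 to Tf in `iterations` steps.
    """
    t0 = 0.1 * avg_arc_cost
    tf = 0.01 * t0
    return t0, tf, (tf / t0) ** (1.0 / iterations)


def accept(cost_current, cost_new, temperature, rng):
    """Assumed Metropolis rule: always accept improvements, and accept a worse
    solution with probability exp(-(cost_new - cost_current) / temperature)."""
    delta = cost_new - cost_current
    return delta <= 0 or rng.random() < math.exp(-delta / temperature)
```

For an instance with N = 100 customers, ∆f = 100 ∗ N = 10,000 iterations, so under this schedule the temperature is multiplied by 0.01^(1/10000) ≈ 0.99954 at every iteration.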

4.2. Results

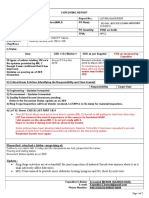

Figure 1: Comparison of the performance of MSM using VND local-search with and without

ISC.

Our experiments evaluated the performance of ISC on different instances and for two local-search algorithms. Figure 1 shows the comparison of G-VND vs. G-VND-ISC on all instances and across instance sizes, obtained over 10 executions with different random seeds on each instance of the X dataset. Similarly, Figure 2 shows the comparison of G-FILO0 vs. G-FILO0-ISC.

Figure 2: Comparison of the performance of MSM using FILO0 local-search with and without ISC.

Table 4 shows the results of the comparison of the four configurations of the GRASP metaheuristics, G-VND vs. G-VND-ISC and G-FILO0 vs. G-FILO0-ISC, obtained over 10 executions on each instance of the X dataset. The instances are divided into subsets according to their characteristics. For each subset, the table shows the number of instances (#), the average gap (Gap(%)), the execution time (T) in minutes, and whether the difference is statistically significant according to the Wilcoxon test (Sign.).

Table 4 shows the improvement in the performance of GRASP combined with ISC for CVRP. Overall, ISC guides both local-search algorithms towards promising initial solutions, delivering better-quality solutions than the methods without ISC, with an average gap reduction of 0.067 percentage points for the VND method and 0.028 percentage points for the FILO0 method. Although the FILO0 improvement is smaller, FILO0 already delivers solutions of state-of-the-art quality, where an improvement of 0.028 percentage points is difficult to achieve.

An example that illustrates the improvement provided by ISC is shown in Figure 3. The reported average Gap(%) corresponds to 10 different executions of G-FILO0-ISC and G-FILO0 on instance X-n331-k15 at different time steps. After 25% to 30% of the CPU time limit, ISC starts classifying the initial solutions, boosting the search performance. Therefore, G-FILO0-ISC converges faster to better-quality solutions than G-FILO0.

Table 4: Comparison of the performance of MSM with and without ISC. The best average performance per subset is in bold.

Subset # G-VND Gap(%) G-VND-ISC Gap(%) T*(min) Sign. G-FILO0 Gap(%) G-FILO0-ISC Gap(%) T(min) Sign.
All 100 2.632 2.563 45.74 Yes 0.775 0.747 16.67 Yes
Small 32 2.097 2.020 2.88 Yes 0.370 0.353 2.88 No
Medium 36 2.732 2.654 17.45 Yes 0.828 0.784 11.64 Yes
Large 32 3.056 3.005 120.43 Yes 1.121 1.099 36.13 Yes
Depot (C) 32 2.864 2.794 42.93 Yes 0.747 0.723 15.78 Yes
Depot (E) 34 2.205 2.142 45.89 Yes 0.700 0.673 16.67 Yes
Depot (R) 34 2.840 2.768 48.25 Yes 0.877 0.843 17.51 Yes
Customer (C) 32 2.618 2.539 43.66 Yes 0.761 0.740 16.03 Yes
Customer (R) 33 2.798 2.733 48.18 Yes 0.891 0.859 17.44 Yes
Customer (RC) 35 2.489 2.425 45.35 Yes 0.680 0.648 16.54 Yes

T* is for a parallel execution in 4 threads

When analyzing the performance across the different subsets of instances, we observe statistically significant improvements on all subsets for both VND and FILO0. The only exception is the subset of small instances, where the difference between G-FILO0 and G-FILO0-ISC is not statistically significant. On small instances, the improvement obtained by using ISC with FILO0 is limited, because FILO0 by itself can easily find high-quality solutions.

Compared to G-VND, G-VND-ISC delivers a better average solution quality on 99 out of the 100 instances of the X dataset. Similarly, G-FILO0-ISC delivers better results than G-FILO0 on 81 out of 100 instances. These results confirm the usefulness of ISC when applied to two GRASP metaheuristics with different local-search algorithms. ISC compensates for the computational effort introduced by the feature-extraction and solution-classification procedures with a significant improvement in MSM performance. For the results obtained on each instance, see Appendix A.

5. Conclusions and future-work directions

In this paper, we presented ISC, a method to classify and detect promising initial solutions within MSM for CVRP. ISC combines (1) feature extraction, (2) machine-learning classification, and (3) vehicle routing heuristics to improve the performance of existing MSM. We successfully integrated ISC with two GRASP metaheuristics with different local-search algorithms.


Figure 3: Average convergence of G-FILO0-ISC and G-FILO0 solutions over time for

instance X-n331-k15.

We performed experiments to evaluate the performance of ISC. ISC improves the performance of MSM, accelerating the convergence to better-quality solutions. According to the Wilcoxon test, the average improvements obtained by an MSM when using ISC with either VND or FILO0 local-search are statistically significant. For almost all the instance subsets that we evaluated, the improvements are statistically significant, even considering the additional computational effort that ISC requires. The results of this work demonstrate that an algorithm can learn to determine whether an initial solution will lead to a high-quality solution after local-search. In the future, we propose to explore the integration of ISC with other local-search algorithms.

ISC uses a Random-Forest classifier, which is a simple, efficient, and well-known machine-learning algorithm. Another future-work direction could exploit the recent progress in deep learning, such as (1) deep neural networks, (2) graph neural networks, or (3) deep reinforcement learning, to obtain classifiers with better performance. Finally, our computational experiments demonstrated that ISC can improve the performance of two GRASP variants for CVRP. Thus, ISC with a Random-Forest classifier is a component that can be easily integrated into other MSM for CVRP.

6. Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the

publication of this paper.

7. Acknowledgments

The authors acknowledge supercomputing resources made available by

the Universidad EAFIT scientific computing center (APOLO) to conduct

the research reported in this work, and for its support for the computational

experiments. The authors would like to thank Luca Accorsi for providing

access to the FILO code and for his guidance on how to turn it into the simplified FILO0

variant. Additionally, the authors would like to thank Alejandro Guzmán

and Sebastián Pérez for their help during the early stages of the heuristics

implementation. Finally, the first author is grateful to Universidad EAFIT

for the PhD Grant from project 974-000005.

References

[1] F. Arnold, K. Sörensen, What makes a VRP solution good? The generation of problem-specific knowledge for heuristics, Computers & Operations Research 106 (2019) 280–288. doi:10.1016/j.cor.2018.02.007.

[2] F. Lucas, R. Billot, M. Sevaux, K. Sörensen, Reducing space search in combinatorial optimization using machine learning tools, in: International Conference on Learning and Intelligent Optimization, Springer, 2020, pp. 143–150. doi:10.1007/978-3-030-53552-0_15.

[3] F. Arnold, K. Sörensen, Knowledge-guided local search for the vehicle routing problem, Computers & Operations Research 105 (2019) 32–46. doi:10.1016/j.cor.2019.01.002.


[4] F. Arnold, Í. Santana, K. Sörensen, T. Vidal, PILS: Exploring high-order neighborhoods by pattern mining and injection, Pattern Recognition 116 (2021) 107957. doi:10.1016/j.patcog.2021.107957.

[5] G. B. Dantzig, J. H. Ramser, The truck dispatching problem, Management Science 6 (1) (1959) 80–91. doi:10.1287/mnsc.6.1.80.

[6] G. Laporte, S. Ropke, T. Vidal, Chapter 4: Heuristics for the Vehicle Routing Problem, SIAM, 2014, Ch. 4, pp. 87–116. doi:10.1137/1.9781611973594.ch4.

[7] M. Nazari, A. Oroojlooy, L. Snyder, M. Takac, Reinforcement learning for solving the vehicle routing problem, in: Advances in Neural Information Processing Systems, Vol. 31, 2018, pp. 9839–9849. URL https://proceedings.neurips.cc/paper/2018/file/9fb4651c05b2ed70fba5afe0b039a550-Paper.pdf

[8] W. Kool, H. van Hoof, J. Gromicho, M. Welling, Deep policy dynamic programming for vehicle routing problems, arXiv preprint arXiv:2102.11756 (2021). doi:10.48550/arXiv.2102.11756.

[9] E.-G. Talbi, Machine learning into metaheuristics: A survey and taxonomy, ACM Computing Surveys (CSUR) 54 (6) (2021) 1–32. doi:10.1145/3459664.

[10] T. A. Feo, M. G. Resende, Greedy randomized adaptive search procedures, Journal of Global Optimization 6 (2) (1995) 109–133. doi:10.1007/BF01096763.

[11] N. Mladenović, P. Hansen, Variable neighborhood search, Computers & Operations Research 24 (11) (1997) 1097–1100. doi:10.1016/S0305-0548(97)00031-2.


[12] L. Accorsi, D. Vigo, A fast and scalable heuristic for the solution of large-scale capacitated vehicle routing problems, Transportation Science 55 (4) (2021) 832–856. doi:10.1287/trsc.2021.1059.

[13] L. Breiman, Random forests, Machine Learning 45 (1) (2001) 5–32. doi:10.1023/A:1010933404324.

[14] A. K. Kalakanti, S. Verma, T. Paul, T. Yoshida, RL Solver Pro: Reinforcement learning for solving vehicle routing problem, in: 2019 1st International Conference on Artificial Intelligence and Data Sciences (AiDAS), IEEE, 2019, pp. 94–99. doi:10.1109/AiDAS47888.2019.8970890.

[15] B. Peng, J. Wang, Z. Zhang, A deep reinforcement learning algorithm using dynamic attention model for vehicle routing problems, in: International Symposium on Intelligence Computation and Applications, Springer, 2019, pp. 636–650. doi:10.1007/978-981-15-5577-0_51.

[16] A. Delarue, R. Anderson, C. Tjandraatmadja, Reinforcement learning with combinatorial actions: An application to vehicle routing, in: H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, H. Lin (Eds.), Advances in Neural Information Processing Systems, Vol. 33, Curran Associates, Inc., 2020, pp. 609–620. URL https://proceedings.neurips.cc/paper/2020/file/06a9d51e04213572ef0720dd27a84792-Paper.pdf

[17] A. E. Gutierrez-Rodríguez, S. E. Conant-Pablos, J. C. Ortiz-Bayliss, H. Terashima-Marín, Selecting meta-heuristics for solving vehicle routing problems with time windows via meta-learning, Expert Systems with Applications 118 (2019) 470–481. doi:10.1016/j.eswa.2018.10.036.

[18] H. Jiang, Y. Wang, Y. Tian, X. Zhang, J. Xiao, Feature construction for meta-heuristic algorithm recommendation of capacitated vehicle routing problems, ACM Transactions on Evolutionary Learning and Optimization 1 (1) (2021) 1–28. doi:10.1145/3447540.

[19] A. Subramanian, E. Uchoa, L. S. Ochi, A hybrid algorithm for a class of vehicle routing problems, Computers & Operations Research 40 (10) (2013) 2519–2531. doi:10.1016/j.cor.2013.01.013.

[20] V. R. Máximo, M. C. Nascimento, A hybrid adaptive iterated local search with diversification control to the capacitated vehicle routing problem, European Journal of Operational Research 294 (3) (2021) 1108–1119. doi:10.1016/j.ejor.2021.02.024.

[21] T. Vidal, T. G. Crainic, M. Gendreau, C. Prins, A unified solution framework for multi-attribute vehicle routing problems, European Journal of Operational Research 234 (3) (2014) 658–673. doi:10.1016/j.ejor.2013.09.045.

[22] J. Christiaens, G. Vanden Berghe, Slack induction by string removals for vehicle routing problems, Transportation Science 54 (2) (2020) 417–433. doi:10.1287/trsc.2019.0914.

[23] S. Kirkpatrick, C. D. Gelatt Jr, M. P. Vecchi, Optimization by simulated annealing, Science 220 (4598) (1983) 671–680.

[24] M. R. de Holanda Maia, A. Plastino, P. H. V. Penna, Hybrid data mining heuristics for the heterogeneous fleet vehicle routing problem, RAIRO-Operations Research 52 (3) (2018) 661–690.

[25] R. S. Sutton, A. G. Barto, Reinforcement Learning: An Introduction, 2nd Edition, The MIT Press, 2018. URL http://incompleteideas.net/book/the-book-2nd.html

[26] Y. Wu, W. Song, Z. Cao, J. Zhang, A. Lim, Learning improvement heuristics for solving routing problems, IEEE Transactions on Neural Networks and Learning Systems (2021) 1–13. doi:10.1109/TNNLS.2021.3068828.


[27] L. Gao, M. Chen, Q. Chen, G. Luo, N. Zhu, Z. Liu, Learn to design the heuristics for vehicle routing problem, arXiv preprint arXiv:2002.08539 (2020). doi:10.48550/arXiv.2002.08539.

[28] A. Hottung, K. Tierney, Neural large neighborhood search for the capacitated vehicle routing problem, in: 24th European Conference on Artificial Intelligence (ECAI 2020), 2020.

[29] R. S. Sutton, D. McAllester, S. Singh, Y. Mansour, Policy gradient methods for reinforcement learning with function approximation, Advances in Neural Information Processing Systems 12 (1999) 1057–1063.

[30] P. H. Swain, H. Hauska, The decision tree classifier: Design and potential, IEEE Transactions on Geoscience Electronics 15 (3) (1977) 142–147. doi:10.1109/TGE.1977.6498972.

[31] G. Clarke, J. W. Wright, Scheduling of vehicles from a central depot to a number of delivery points, Operations Research 12 (4) (1964) 568–581. doi:10.1287/opre.12.4.568.

[32] J. P. Hart, A. W. Shogan, Semi-greedy heuristics: An empirical study, Operations Research Letters 6 (3) (1987) 107–114. doi:10.1016/0167-6377(87)90021-6.

[33] L. Breiman, J. H. Friedman, R. A. Olshen, C. J. Stone, Classification and Regression Trees, Routledge, 2017.

[34] N. Hassan, W. Gomaa, G. Khoriba, M. Haggag, Supervised learning approach for Twitter credibility detection, 2018, pp. 196–201. doi:10.1109/ICCES.2018.8639315.

[35] E. Uchoa, D. Pecin, A. Pessoa, M. Poggi, T. Vidal, A. Subramanian, New benchmark instances for the capacitated vehicle routing problem, European Journal of Operational Research 257 (3) (2017) 845–858. doi:10.1016/j.ejor.2016.08.012.


[36] Boost C++ Libraries (2022). URL http://www.boost.org/

[37] Twigy random forest C++ and Python library (2022). URL https://github.com/christophmeyer/twigy

[38] L. Accorsi, A. Lodi, D. Vigo, Guidelines for the computational testing of machine learning approaches to vehicle routing problems, Operations Research Letters 50 (2) (2022) 229–234. doi:10.1016/j.orl.2022.01.018.

[39] F. Wilcoxon, Individual comparisons by ranking methods, in: Breakthroughs in Statistics, Springer, 1992, pp. 196–202.

Appendix A. Computational details of results on the X dataset

Tables A.5 to A.7 report the average gap (%) obtained over 10 executions on each instance of the X dataset, along with the CPU time (min), for the metaheuristics G-FILO0, G-FILO0-ISC, G-VND, and G-VND-ISC.


Table A.5: Computational results on the small instances of X dataset. * Corresponds to execution time in four parallel cores.

INSTANCE BKS G-FILO0(%) G-FILO0-ISC(%) TIME(min) G-VND(%) G-VND-ISC(%) TIME*(min)

X-n101-k25 27591 0.016 0.003 1.67 1.771 1.723 1.67

X-n106-k14 26362 0.236 0.176 1.75 1.175 1.173 1.75

X-n110-k13 14971 0.000 0.000 1.82 1.303 1.235 1.82

X-n115-k10 12747 0.000 0.000 1.90 1.180 1.298 1.90

X-n120-k6 13332 0.001 0.000 1.98 1.596 1.539 1.98

X-n125-k30 55539 0.557 0.522 2.07 3.123 2.969 2.07

X-n129-k18 28940 0.091 0.060 2.13 1.275 1.246 2.13

X-n134-k13 10916 0.325 0.254 2.22 1.524 1.429 2.22

X-n139-k10 13590 0.046 0.071 2.30 0.760 0.615 2.30

X-n143-k7 15700 0.076 0.011 2.37 1.240 1.208 2.37

X-n148-k46 43448 0.119 0.104 2.45 1.350 1.203 2.45

X-n153-k22 21220 0.690 0.731 2.53 5.423 5.397 2.53

X-n157-k13 16876 0.007 0.017 2.60 1.214 1.181 2.60

X-n162-k11 14138 0.057 0.059 2.68 2.053 2.003 2.68

X-n167-k10 20557 0.170 0.034 2.77 1.918 1.780 2.77


X-n172-k51 45607 0.363 0.361 2.85 1.725 1.675 2.85

X-n176-k26 47812 1.952 2.072 2.92 3.245 3.129 2.92

X-n181-k23 25569 0.084 0.079 3.00 1.162 1.079 3.00

X-n186-k15 24145 0.212 0.139 3.08 1.214 1.187 3.08

X-n190-k8 16980 0.358 0.346 3.15 2.151 2.047 3.15

X-n195-k51 44225 0.402 0.373 3.23 1.994 1.974 3.23

X-n200-k36 58578 0.775 0.775 3.32 2.803 2.770 3.32

X-n204-k19 19565 0.492 0.480 3.38 2.717 2.659 3.38

X-n209-k16 30656 0.312 0.256 3.47 2.287 2.260 3.47

X-n214-k11 10856 1.138 1.099 3.55 2.917 2.777 3.55

X-n219-k73 117595 0.100 0.090 3.63 0.126 0.113 3.63


X-n223-k34 40437 0.414 0.402 3.70 1.986 1.904 3.70

X-n228-k23 25742 0.376 0.356 3.78 3.135 3.000 3.78

X-n233-k16 19230 0.440 0.356 3.87 2.378 2.230 3.87

X-n237-k14 27042 0.366 0.369 3.93 2.658 2.586 3.93

X-n242-k48 82751 0.494 0.467 4.02 1.547 1.467 4.02

X-n247-k50 37274 1.160 1.230 4.10 6.147 5.777 4.10

Mean 0.370 0.353 2.88 2.097 2.020 2.88
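The Mean row appears to be the arithmetic mean of each column over the instances of the table: the 32 G-FILO0 values of Table A.5 sum to 11.829, and 11.829 / 32 ≈ 0.370. A small sketch that recomputes such a row from whitespace-separated lines laid out like the rows of Table A.5; the helper name `column_means` is hypothetical, and only the first three rows are used as input:

```python
from statistics import mean

# Column order of the rows after the instance name (BKS, gaps in %, times in min).
COLUMNS = ["BKS", "G-FILO0", "G-FILO0-ISC", "TIME", "G-VND", "G-VND-ISC", "TIME*"]


def column_means(rows: list[str]) -> dict[str, float]:
    """Arithmetic mean of every numeric column over the given table rows."""
    values: dict[str, list[float]] = {name: [] for name in COLUMNS}
    for row in rows:
        fields = row.split()
        # fields[0] is the instance name; the remaining fields follow COLUMNS.
        for name, text in zip(COLUMNS, fields[1:]):
            values[name].append(float(text))
    return {name: mean(vals) for name, vals in values.items()}


rows = [
    "X-n101-k25 27591 0.016 0.003 1.67 1.771 1.723 1.67",
    "X-n106-k14 26362 0.236 0.176 1.75 1.175 1.173 1.75",
    "X-n110-k13 14971 0.000 0.000 1.82 1.303 1.235 1.82",
]
means = column_means(rows)
print(round(means["G-FILO0"], 3), round(means["G-FILO0-ISC"], 3))
```

Feeding all 32 rows of Table A.5 instead of three should reproduce its Mean row up to rounding.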

Table A.6: Computational results on the medium instances of the X dataset. * Execution time on four parallel cores.

INSTANCE BKS G-FILO0(%) G-FILO0-ISC(%) TIME(min) G-VND(%) G-VND-ISC(%) TIME*(min)

X-n251-k28 38684 0.733 0.639 8.33 2.198 2.147 12.50

X-n256-k16 18839 0.289 0.254 8.50 2.263 2.203 12.75

X-n261-k13 26558 1.066 0.926 8.67 3.540 3.423 13.00

X-n266-k58 75478 1.019 0.935 8.83 2.401 2.340 13.25

X-n270-k35 35291 0.509 0.495 8.97 2.355 2.286 13.45

X-n275-k28 21245 0.366 0.358 9.13 2.522 2.484 13.70

X-n280-k17 33503 1.048 0.969 9.30 3.037 3.011 13.95

X-n284-k15 20226 1.303 1.138 9.43 4.008 3.852 14.15

X-n289-k60 95151 1.076 1.033 9.60 2.274 2.190 14.40

X-n294-k50 47161 0.467 0.460 9.77 1.635 1.563 14.65

X-n298-k31 34231 0.821 0.724 9.90 3.368 3.354 14.85

X-n303-k21 21736 0.727 0.719 10.07 2.775 2.715 15.10

X-n308-k13 25859 0.840 0.879 10.23 3.050 2.953 15.35

X-n313-k71 94043 0.902 0.909 10.40 2.563 2.542 15.60

X-n317-k53 78355 0.074 0.065 10.53 0.551 0.543 15.80

X-n322-k28 29834 0.785 0.677 10.70 3.191 2.947 16.05

X-n327-k20 27532 0.892 0.793 10.87 3.535 3.469 16.30


X-n331-k15 31102 0.492 0.416 11.00 3.324 3.132 16.50

X-n336-k84 139111 0.938 0.923 11.17 2.326 2.255 16.75

X-n344-k43 42050 0.976 0.912 11.43 2.944 2.888 17.15

X-n351-k40 25896 1.113 1.014 11.67 2.560 2.512 17.50

X-n359-k29 51505 0.860 0.795 11.93 2.318 2.284 17.90

X-n367-k17 22814 0.768 0.750 12.20 3.527 3.385 18.30

X-n376-k94 147713 0.063 0.058 12.50 0.342 0.339 18.75

X-n384-k52 65940 0.823 0.804 12.77 2.319 2.244 19.15

X-n393-k38 38260 0.749 0.737 13.07 2.486 2.376 19.60

X-n401-k29 66154 0.622 0.605 13.33 1.855 1.763 20.00

X-n411-k19 19712 1.141 1.157 13.67 3.759 3.579 20.50


X-n420-k130 107798 0.622 0.613 13.97 2.635 2.577 20.95

X-n429-k61 65449 0.861 0.870 14.27 2.609 2.584 21.40

X-n439-k37 36391 0.382 0.348 14.60 2.887 2.814 21.90

X-n449-k29 55233 1.338 1.302 14.93 3.095 2.980 22.40

X-n459-k26 24139 1.634 1.600 15.27 4.658 4.614 22.90

X-n469-k138 221824 1.697 1.685 15.60 4.577 4.517 23.40

X-n480-k70 89449 0.671 0.647 15.97 2.450 2.388 23.95

X-n491-k59 66483 1.138 1.029 16.33 2.409 2.285 24.50

Mean 0.828 0.784 11.636 2.732 2.654 17.45
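Because each method and its ISC variant share the same time column, the tables can also be read instance by instance. A hypothetical helper `compare` that counts the instances on which the ISC variant reports a lower, equal, or higher average gap than its baseline; since the gaps are printed with three decimals, a tie only means equality at the reported precision. The three input rows are copied from Table A.6:

```python
from typing import NamedTuple


class Tally(NamedTuple):
    wins: int    # ISC variant reports the lower average gap
    ties: int    # equal at the reported precision
    losses: int  # ISC variant reports the higher average gap


def compare(rows: list[str], base_col: int, isc_col: int) -> Tally:
    """Count instances where the ISC column beats, ties, or trails its baseline.

    Indices refer to whitespace-separated fields with the instance name at 0,
    so G-FILO0 is 2, G-FILO0-ISC is 3, G-VND is 5 and G-VND-ISC is 6.
    """
    wins = ties = losses = 0
    for row in rows:
        fields = row.split()
        base, isc = float(fields[base_col]), float(fields[isc_col])
        if isc < base:
            wins += 1
        elif isc > base:
            losses += 1
        else:
            ties += 1
    return Tally(wins, ties, losses)


rows = [
    "X-n251-k28 38684 0.733 0.639 8.33 2.198 2.147 12.50",
    "X-n256-k16 18839 0.289 0.254 8.50 2.263 2.203 12.75",
    "X-n308-k13 25859 0.840 0.879 10.23 3.050 2.953 15.35",
]
print(compare(rows, 2, 3), compare(rows, 5, 6))
```

A count like this says nothing about statistical significance; a paired test such as the signed-rank test of Wilcoxon [39] would be needed for that.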


Table A.7: Computational results on the large instances of the X dataset. * Execution time on four parallel cores.

INSTANCE BKS G-FILO0(%) G-FILO0-ISC(%) TIME(min) G-VND(%) G-VND-ISC(%) TIME*(min)

X-n502-k39 69226 0.120 0.119 25.05 0.952 0.910 83.50

X-n513-k21 24201 0.904 0.853 25.60 4.507 4.426 85.33

X-n524-k153 154593 0.751 0.771 26.15 4.732 4.641 87.17

X-n536-k96 94846 1.282 1.261 26.75 3.120 3.026 89.17

X-n548-k50 86700 0.248 0.266 27.35 1.516 1.485 91.17

X-n561-k42 42717 0.711 0.644 28.00 3.099 3.077 93.33

X-n573-k30 50673 0.722 0.705 28.60 1.630 1.609 95.33

X-n586-k159 190316 1.267 1.240 29.25 3.659 3.566 97.50

X-n599-k92 108451 1.037 1.039 29.90 2.552 2.505 99.67

X-n613-k62 59535 1.568 1.473 30.60 3.201 3.104 102.00

X-n627-k43 62164 1.168 1.167 31.30 2.800 2.733 104.33

X-n641-k35 63684 1.387 1.341 32.00 3.149 3.083 106.67

X-n655-k131 106780 0.179 0.161 32.70 0.530 0.527 109.00

X-n670-k130 146332 2.579 2.577 33.45 6.302 6.231 111.50

X-n685-k75 68205 1.282 1.232 34.20 2.705 2.645 114.00

X-n701-k44 81923 1.061 1.031 35.00 2.208 2.189 116.67

X-n716-k35 43373 1.583 1.534 35.75 2.777 2.693 119.17

X-n733-k159 136187 0.777 0.775 36.60 1.602 1.592 122.00

X-n749-k98 77269 1.417 1.401 37.40 2.146 2.121 124.67

X-n766-k71 114417 0.936 0.893 38.25 2.629 2.518 127.50

X-n783-k48 72386 1.356 1.312 39.10 3.256 3.216 130.33

X-n801-k40 73311 0.621 0.605 40.00 2.921 2.878 133.33

X-n819-k171 158121 1.303 1.307 40.90 3.915 3.906 136.33

X-n837-k142 193737 0.990 0.994 41.80 2.730 2.714 139.33

X-n856-k95 88965 0.466 0.466 42.75 2.196 2.184 142.50

X-n876-k59 99299 1.151 1.136 43.75 1.791 1.784 145.83

X-n895-k37 53860 1.997 1.920 44.70 4.866 4.802 149.00

X-n916-k207 329179 1.105 1.089 45.75 3.474 3.394 152.50

X-n936-k151 132715 2.389 2.374 46.75 7.801 7.770 155.83

X-n957-k87 85465 0.590 0.578 47.80 2.565 2.531 159.33

X-n979-k58 118976 0.996 0.983 48.90 2.405 2.311 163.00

X-n1001-k43 72355 1.945 1.907 50.00 4.043 3.978 166.67

Mean 1.121 1.099 36.128 3.056 3.005 120.43
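An X-dataset instance named X-nN-kK has N nodes (the depot plus N - 1 customers), and K is the minimum number of vehicles. The TIME columns of Tables A.5 to A.7 appear consistent with a budget proportional to the number of customers: 1 s per customer for both pairs of methods on small instances, 2 s (G-FILO0) and 3 s (G-VND, four cores) on medium instances, and 3 s and 10 s on large instances. For example, X-n502-k39 has 501 customers, and 501 × 3 s = 1503 s = 25.05 min. These ratios are inferred from the tables rather than stated in this appendix; the sketch below (hypothetical names `parse_instance` and `time_limit_minutes`) reproduces the TIME entries under that assumption:

```python
import re

# Seconds per customer inferred from the TIME columns (an assumption, not a
# documented setting): (G-FILO0 budget, G-VND budget on four parallel cores).
SECONDS_PER_CUSTOMER = {"small": (1, 1), "medium": (2, 3), "large": (3, 10)}


def parse_instance(name: str) -> tuple[int, int]:
    """Return (nodes, min_vehicles) from an X-dataset name such as 'X-n101-k25'."""
    match = re.fullmatch(r"X-n(\d+)-k(\d+)", name)
    if match is None:
        raise ValueError(f"not an X-dataset instance name: {name!r}")
    return int(match.group(1)), int(match.group(2))


def time_limit_minutes(name: str, size: str) -> tuple[float, float]:
    """Time budgets in minutes (TIME, TIME*) under the per-customer assumption."""
    nodes, _ = parse_instance(name)
    customers = nodes - 1  # one node is the depot
    filo_s, vnd_s = SECONDS_PER_CUSTOMER[size]
    return customers * filo_s / 60, customers * vnd_s / 60


for name, size in [("X-n101-k25", "small"), ("X-n251-k28", "medium"), ("X-n502-k39", "large")]:
    filo, vnd = time_limit_minutes(name, size)
    print(f"{name}: TIME {filo:.2f} min, TIME* {vnd:.2f} min")
```

The printed values can be checked against the first row of each table.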
