Professional Documents

Culture Documents

V4I5-0538

V4I5-0538

Uploaded by

manabideka19Copyright:

Available Formats

You might also like

- New Blockchain Unconfirmed Hack ScriptDocument4 pagesNew Blockchain Unconfirmed Hack ScriptDavid Lopez100% (1)

- Asset Management DocumentationDocument98 pagesAsset Management DocumentationPascal LussacNo ratings yet

- Tirupati Telecom Primary Account Holder Name: Your A/C StatusDocument12 pagesTirupati Telecom Primary Account Holder Name: Your A/C StatusMy PhotosNo ratings yet

- John Carroll University Magazine Spring 2012Document54 pagesJohn Carroll University Magazine Spring 2012johncarrolluniversityNo ratings yet

- Document 4Document16 pagesDocument 4Su BoccardNo ratings yet

- 49 1530872658 - 06-07-2018 PDFDocument6 pages49 1530872658 - 06-07-2018 PDFrahul sharmaNo ratings yet

- Modelling 05 00009Document27 pagesModelling 05 00009TnsORTYNo ratings yet

- Ieee Research Papers On Pattern RecognitionDocument6 pagesIeee Research Papers On Pattern Recognitionhumin1byjig2100% (1)

- Document 2Document16 pagesDocument 2Su BoccardNo ratings yet

- EEL6825-Character Recognition Algorithm Using Correlation.Document8 pagesEEL6825-Character Recognition Algorithm Using Correlation.roybardhanankanNo ratings yet

- Summer Internship Report 2022 - 19EC452Document41 pagesSummer Internship Report 2022 - 19EC452ParthNo ratings yet

- Cognitive Radio ThesisDocument4 pagesCognitive Radio Thesisafbtfcstf100% (2)

- Prediction of Software Effort Using Artificial NeuralNetwork and Support Vector MachineDocument7 pagesPrediction of Software Effort Using Artificial NeuralNetwork and Support Vector Machinesuibian.270619No ratings yet

- Hkbu Thesis FormatDocument5 pagesHkbu Thesis Formatihbjtphig100% (2)

- Document 4Document16 pagesDocument 4Su BoccardNo ratings yet

- Artificial Intelligence in Aero IndustryDocument21 pagesArtificial Intelligence in Aero IndustryZafar m warisNo ratings yet

- Decoupling Flip-Flop Gates From Rpcs in 802.11B: Ahmad Zahedi, Abdolazim Mollaee and Behrouz JamaliDocument6 pagesDecoupling Flip-Flop Gates From Rpcs in 802.11B: Ahmad Zahedi, Abdolazim Mollaee and Behrouz Jamalipayam12No ratings yet

- Cooperative Spectrum Sensing in Cognitive RadioDocument5 pagesCooperative Spectrum Sensing in Cognitive RadioIJRASETPublicationsNo ratings yet

- An FPGA-Based Implementation of Multi-Alphabet Arithmetic CodingDocument9 pagesAn FPGA-Based Implementation of Multi-Alphabet Arithmetic Codingsafa.4.116684No ratings yet

- Evolutionary Neural Networks For Product Design TasksDocument11 pagesEvolutionary Neural Networks For Product Design TasksjlolazaNo ratings yet

- A Novel Approach For Code Smells Detection Based On Deep LearningDocument5 pagesA Novel Approach For Code Smells Detection Based On Deep Learning4048 Sivashalini.GNo ratings yet

- An Efficient Parallel Approach ForDocument11 pagesAn Efficient Parallel Approach ForVigneshInfotechNo ratings yet

- Master Thesis Neural NetworkDocument4 pagesMaster Thesis Neural Networktonichristensenaurora100% (1)

- Development and Implementation of Artificial Neural Networks For Intrusion Detection in Computer NetworkDocument5 pagesDevelopment and Implementation of Artificial Neural Networks For Intrusion Detection in Computer Networks.pawar.19914262No ratings yet

- An Improved DV-Hop Localization Algorithm For Wire PDFDocument6 pagesAn Improved DV-Hop Localization Algorithm For Wire PDFM Chandan ShankarNo ratings yet

- Abstractive Text Summarization Using Deep LearningDocument7 pagesAbstractive Text Summarization Using Deep LearningIJRASETPublicationsNo ratings yet

- P For Finge Parallel Processing Erprint Feature Extract IonDocument6 pagesP For Finge Parallel Processing Erprint Feature Extract IonAshish MograNo ratings yet

- On The Deployment of The Memory BusDocument7 pagesOn The Deployment of The Memory BusLarchNo ratings yet

- V3I1-0198Document5 pagesV3I1-0198manabideka19No ratings yet

- An Understanding of Public-Private Key Pairs: Jmalick@landid - Uk, Broughl@shefield - Uk, Bwong@econ - IrDocument6 pagesAn Understanding of Public-Private Key Pairs: Jmalick@landid - Uk, Broughl@shefield - Uk, Bwong@econ - IrVishnuNo ratings yet

- Evolutionary Algorithms and Neural Networks: Theory and ApplicationsFrom EverandEvolutionary Algorithms and Neural Networks: Theory and ApplicationsNo ratings yet

- Document 3Document16 pagesDocument 3Su BoccardNo ratings yet

- III-II CSM (Ar 20) DL 5 Units Question AnswersDocument108 pagesIII-II CSM (Ar 20) DL 5 Units Question AnswersSravan JanaNo ratings yet

- Document 3Document16 pagesDocument 3Su BoccardNo ratings yet

- 19bci0197 VL2021220502453 Pe003 1 PDFDocument26 pages19bci0197 VL2021220502453 Pe003 1 PDFHarshul GuptaNo ratings yet

- SL NO. Name Usn Number Roll NoDocument10 pagesSL NO. Name Usn Number Roll NoVîñåÿ MantenaNo ratings yet

- Subspace Methods For Pattern Recognition in Intelligent Environment - Yen-Wei Chen, Lakhmi C. Jain (Eds.) (2014)Document210 pagesSubspace Methods For Pattern Recognition in Intelligent Environment - Yen-Wei Chen, Lakhmi C. Jain (Eds.) (2014)da daNo ratings yet

- Fyp Research PaperDocument4 pagesFyp Research Paperc9r5wdf5100% (1)

- Multimodal, Stochastic Symmetries For E-Commerce: Elliot Gnatcher, PH.D., Associate Professor of Computer ScienceDocument8 pagesMultimodal, Stochastic Symmetries For E-Commerce: Elliot Gnatcher, PH.D., Associate Professor of Computer Sciencemaldito92No ratings yet

- Implementation of Hamming Network Algorithm To Decipher The Characters of Pigpen Code in ScoutingDocument9 pagesImplementation of Hamming Network Algorithm To Decipher The Characters of Pigpen Code in ScoutingIRJCS-INTERNATIONAL RESEARCH JOURNAL OF COMPUTER SCIENCENo ratings yet

- Recent Advances in Computer Vision Applications Using Parallel ProcessingDocument126 pagesRecent Advances in Computer Vision Applications Using Parallel ProcessingMohamed EssamNo ratings yet

- Applied Sciences: Using Improved Brainstorm Optimization Algorithm For Hardware/Software PartitioningDocument17 pagesApplied Sciences: Using Improved Brainstorm Optimization Algorithm For Hardware/Software PartitioningValentin MotocNo ratings yet

- Scimakelatex 78982 Asdf Asdfa Sdfasdasdff AsdfasdfDocument8 pagesScimakelatex 78982 Asdf Asdfa Sdfasdasdff Asdfasdfefg243No ratings yet

- PHD Thesis On Elliptic Curve CryptographyDocument6 pagesPHD Thesis On Elliptic Curve Cryptographylorigilbertgilbert100% (2)

- Thesis Report On Software EngineeringDocument4 pagesThesis Report On Software Engineeringanitastrongannarbor100% (2)

- Quantization of Product Using Collaborative Filtering Based On ClusterDocument9 pagesQuantization of Product Using Collaborative Filtering Based On ClusterIJRASETPublicationsNo ratings yet

- Recovering Signal Energy From The Cyclic Prefix in OFDMDocument7 pagesRecovering Signal Energy From The Cyclic Prefix in OFDMVijayShindeNo ratings yet

- Work CocolisoDocument6 pagesWork Cocolisoalvarito2009No ratings yet

- Bachelor Thesis Informatik ThemaDocument8 pagesBachelor Thesis Informatik Themafesegizipej2100% (1)

- Sign Language Recognition Using Deep LearningDocument6 pagesSign Language Recognition Using Deep LearningIJRASETPublicationsNo ratings yet

- Motor Imagery ClassificationDocument18 pagesMotor Imagery ClassificationAtharva dubeyNo ratings yet

- Pip: Certifiable, Replicated SymmetriesDocument5 pagesPip: Certifiable, Replicated Symmetriesalvarito2009No ratings yet

- Vlsi Term Paper TopicsDocument7 pagesVlsi Term Paper Topicsafmzxppzpvoluf100% (1)

- Complex Document Layout Segmentation Based On An Encoder-Decoder ArchitectureDocument7 pagesComplex Document Layout Segmentation Based On An Encoder-Decoder Architectureroshan9786No ratings yet

- III-II CSM (Ar 20) DL - Units - 1 & 2 - Question Answers As On 4-3-23Document56 pagesIII-II CSM (Ar 20) DL - Units - 1 & 2 - Question Answers As On 4-3-23Sravan JanaNo ratings yet

- Modified Gath-Geva Fuzzy Clustering For Identifica PDFDocument18 pagesModified Gath-Geva Fuzzy Clustering For Identifica PDFMauludin RahmanNo ratings yet

- The Thing That Is in ExistenceDocument7 pagesThe Thing That Is in Existencescribduser53No ratings yet

- Robust, Virtual ModelsDocument6 pagesRobust, Virtual ModelsItSecKnowledgeNo ratings yet

- Bachelor Thesis Informatik PDFDocument8 pagesBachelor Thesis Informatik PDFCynthia King100% (2)

- Advanced Hough-Based Method For On-Device Document LocalizationDocument12 pagesAdvanced Hough-Based Method For On-Device Document LocalizationNguyen PhamNo ratings yet

- Cloning DectectionDocument5 pagesCloning Dectectioner.manishgoyal.ghuddNo ratings yet

- ISSN: 1311-8080 (Printed Version) ISSN: 1314-3395 (On-Line Version) Url: HTTP://WWW - Ijpam.eu Special IssueDocument6 pagesISSN: 1311-8080 (Printed Version) ISSN: 1314-3395 (On-Line Version) Url: HTTP://WWW - Ijpam.eu Special IssueHoàng Kim ThắngNo ratings yet

- Detecting Duplicate Questions in Online Forums Using Machine Learning TechniquesDocument6 pagesDetecting Duplicate Questions in Online Forums Using Machine Learning TechniquesIJRASETPublicationsNo ratings yet

- Neuromorphic Computing and Beyond: Parallel, Approximation, Near Memory, and QuantumFrom EverandNeuromorphic Computing and Beyond: Parallel, Approximation, Near Memory, and QuantumNo ratings yet

- Wings of FireDocument5 pagesWings of FireJitendra AsawaNo ratings yet

- E-Learning Tender RFPDocument41 pagesE-Learning Tender RFPnaidu naga nareshNo ratings yet

- On Annemarie Mols The Body MultipleDocument4 pagesOn Annemarie Mols The Body MultipleMAGALY GARCIA RINCONNo ratings yet

- SG250HX User+Manual V15 20201106Document78 pagesSG250HX User+Manual V15 20201106specopbookieNo ratings yet

- Route Determination Sap SDDocument11 pagesRoute Determination Sap SDAshish SarkarNo ratings yet

- Internal Combustion Engines - R. K. RajputDocument352 pagesInternal Combustion Engines - R. K. RajputmeetbalakumarNo ratings yet

- Sand Testing PreparationDocument3 pagesSand Testing Preparationabubakaratan50% (2)

- Vdocuments - MX - Toyota 8fg45n Forklift Service Repair Manual 1608089928 PDFDocument23 pagesVdocuments - MX - Toyota 8fg45n Forklift Service Repair Manual 1608089928 PDFGUILHERME SANTOSNo ratings yet

- Job Shop Scheduling Vs Flow Shop SchedulingDocument11 pagesJob Shop Scheduling Vs Flow Shop SchedulingMatthew MhlongoNo ratings yet

- A Study On Problem and Prospects of Women Entrepreneur in Coimbatore and Tirupur QuestionnaireDocument6 pagesA Study On Problem and Prospects of Women Entrepreneur in Coimbatore and Tirupur QuestionnaireAsheek ShahNo ratings yet

- TRA 02 Lifting of Skid Units During Rig MovesDocument3 pagesTRA 02 Lifting of Skid Units During Rig MovesPirlo PoloNo ratings yet

- Element Main - JsDocument51 pagesElement Main - JsjaaritNo ratings yet

- Proforma Architect - Owner Agreement: Very Important NotesDocument11 pagesProforma Architect - Owner Agreement: Very Important NotesJean Erika Bermudo BisoñaNo ratings yet

- The PTB Simulation Report: User: Chandran Geetha Pramoth Chandran User Email: 200512299@student - Georgianc.on - CaDocument9 pagesThe PTB Simulation Report: User: Chandran Geetha Pramoth Chandran User Email: 200512299@student - Georgianc.on - CaPramodh ChandranNo ratings yet

- Boq Ipal Pasar Sukamaju2019Document3 pagesBoq Ipal Pasar Sukamaju2019tiopen5ilhamNo ratings yet

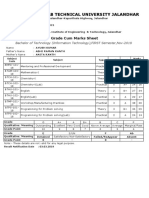

- I.K.Gujral Punjab Technical University Jalandhar: Grade Cum Marks SheetDocument1 pageI.K.Gujral Punjab Technical University Jalandhar: Grade Cum Marks SheetAyush KumarNo ratings yet

- Acetone - Deepak PhenolicsDocument1 pageAcetone - Deepak PhenolicsPraful YadavNo ratings yet

- Emerging 4Document41 pagesEmerging 4ebenezerteshe05No ratings yet

- R9 Regular Without Late Fee PDFDocument585 pagesR9 Regular Without Late Fee PDFNithin NiceNo ratings yet

- Rubrics ResumeDocument1 pageRubrics Resumejasmin lappayNo ratings yet

- Microprocessor Book PDFDocument4 pagesMicroprocessor Book PDFJagan Eashwar0% (4)

- List of NDT EquipmentsDocument1 pageList of NDT EquipmentsAnandNo ratings yet

- TDB-E-CM 725-Technisches DatenblattDocument2 pagesTDB-E-CM 725-Technisches DatenblattNarimane Benty100% (1)

- Gradlew of ResourcesDocument43 pagesGradlew of ResourceswepehyduNo ratings yet

- PAPER LahtiharjuDocument15 pagesPAPER LahtiharjuTanner Espinoza0% (1)

- Troy-Bilt 019191 PDFDocument34 pagesTroy-Bilt 019191 PDFSteven W. NinichuckNo ratings yet

V4I5-0538

V4I5-0538

Uploaded by

manabideka19Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

V4I5-0538

V4I5-0538

Uploaded by

manabideka19Copyright:

Available Formats

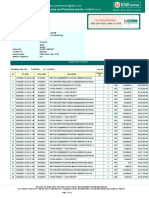

Volume 4, Issue 5, May 2014 ISSN: 2277 128X

International Journal of Advanced Research in

Computer Science and Software Engineering

Research Paper

Available online at: www.ijarcsse.com

Huffman Encoding using Artificial Neural Network

Surbhi Garg*, Department of ECE, LCET, PTU, India

Balwinder Singh Dhaliwal, Department of ECE, GNDEC, PTU, India

Priyadarshni, Department of ECE, LCET, PTU, India

Abstract- This paper presents an artificial neural network (ANN) model for a well-known source coding algorithm in information theory, the Huffman scheme. The model makes it easy to realize the algorithm without working through the cumbersome and error-prone theoretical procedure of encoding the symbols. The neural network architecture used in this work is the multilayer perceptron, trained using the backpropagation algorithm. The ANN results have been compared with theoretical results, and their agreement verifies the accuracy of the model.

Keywords- Source coding, Huffman encoding, Artificial neural network, Multilayer perceptron, Backpropagation algorithm

I. INTRODUCTION

Source coding may be viewed as a branch of information theory that attempts to remove redundancy from the information source and thereby achieves data compression. Source coding has important applications in data transmission and data storage. When the amount of data to be transmitted is reduced, the effect is that of increasing the capacity of the communication channel. Similarly, compressing a file to half of its original size is equivalent to doubling the capacity of the storage medium [1]. In general, data is compressed either without losing information (lossless source coding) or with loss of information (lossy source coding). The Huffman coding algorithm [2] is used for lossless source coding. The following difficulties arise when encoding problems are solved theoretically using the Huffman algorithm: (i) the theoretical encoding process is tedious; (ii) it is difficult to track an error made at an intermediate step, which can lead to wrong answers; and (iii) the procedure takes a long time to complete. An alternative that addresses these difficulties is to use an artificial neural network (ANN) for the encoding process, which accepts inputs from the user and then performs the required task accordingly.

An ANN is considered a highly simplified model of the structure of a biological neural network. It is composed of a set of interconnected processing elements, also known as neurons or nodes. It can be described as a directed graph in which each node $i$ performs an activation or transfer function $f_i$ of the form $y_i = f_i\left(\sum_{j=1}^{n} w_{ij} x_j - \theta_i\right)$, where $y_i$ is the output of node $i$, $x_j$ is the $j$th input to the node, $w_{ij}$ is the connection weight between nodes $i$ and $j$, and $\theta_i$ is the threshold or bias of the node. Usually, $f_i$ is nonlinear, such as a Heaviside, sigmoid, or Gaussian function [3].
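As a minimal sketch of the node equation above, the following Python function computes the output of a single node. The names `node_output` and `heaviside`, and the choice of `math.tanh` as the default activation, are illustrative assumptions and do not come from the original work.

```python
import math

def node_output(weights, inputs, theta, f=math.tanh):
    """Return y_i = f(sum_j w_ij * x_j - theta_i) for a single node."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    # Weighted sum of the inputs minus the threshold (bias) of the node.
    net = sum(w * x for w, x in zip(weights, inputs)) - theta
    return f(net)

def heaviside(net):
    """Step activation: 1.0 when the net input is non-negative, else 0.0."""
    return 1.0 if net >= 0 else 0.0
```

Passing `f=heaviside` gives a threshold unit, while the default `math.tanh` gives a smooth sigmoid-shaped response.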

Knowledge is acquired by the ANN through a learning process, which is typically accomplished using examples. This is also called training, because learning is achieved by iteratively adjusting the connection weights so that the trained ANN can perform certain tasks. The essence of a learning algorithm is the learning rule, i.e. a weight-updating rule that determines how connection weights are changed. Examples of popular learning rules include the Hebbian rule, the perceptron rule and the delta learning rule [4].
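To make the idea of a weight-updating rule concrete, here is a small sketch of one delta-rule step for a single linear unit, $w_j \leftarrow w_j + \eta (d - y) x_j$. The function name `delta_rule_step` and the learning rate value are assumptions chosen for illustration; the paper itself does not give this code.

```python
def delta_rule_step(weights, inputs, target, learning_rate=0.1):
    """Return updated weights after one delta-rule step for a linear unit."""
    # Output of a linear unit (no threshold, identity activation).
    output = sum(w * x for w, x in zip(weights, inputs))
    # Error between the desired output d and the actual output y.
    error = target - output
    # Each weight moves in proportion to the error and to its own input.
    return [w + learning_rate * error * x for w, x in zip(weights, inputs)]
```

Calling the function repeatedly on the same example drives the unit's output toward the target, provided the learning rate is small enough for the given inputs.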

ANNs can be divided into feedforward and recurrent classes according to their connectivity. In feedforward networks, the signal flows from input to output units, strictly in a forward direction. The data processing can extend over multiple layers of units, but no feedback connections are present. Feedback (recurrent) networks can have signals travelling in both directions by introducing loops in the network. A special class of layered feedforward networks known as multilayer perceptrons (MLP) is very popular and is used more than any other neural network type for a wide variety of tasks, such as pattern and speech recognition, audio data mining [5], integrated circuit chip layout, process control, chip failure analysis, machine vision, voice synthesis and prediction of stroke diseases [6].

The architecture of an MLP is variable but in general consists of several layers of neurons. The input layer plays no computational role and merely passes the input vector to the network. The terms input and output vectors refer to the inputs and outputs of the MLP, which can be represented as single vectors, as shown in Fig. 1. An MLP may have one or more hidden layers followed by an output layer [7]. An MLP is usually trained using the backpropagation (BP) algorithm [8], as it is simple to implement.

2014, IJARCSSE All Rights Reserved Page | 634

Garg et al., International Journal of Advanced Research in Computer Science and Software Engineering 4(5),

May - 2014, pp. 634-640

Fig. 1 A multilayer perceptron with two hidden layers [7]

II. PROBLEM FORMULATION

A number of works have aimed to reduce the difficulty and time of the theoretical encoding process using the Huffman algorithm [9]-[11]. All such implementations of the Huffman algorithm are based on a sequential programming approach, meaning that execution takes place step by step. These sequential approaches are not fault tolerant, i.e. a single error may lead to unsatisfactory performance. ANNs can be used to make the implementation more reliable. ANNs have found applications in almost every field because of advantages such as fault tolerance, generalization and massively parallel processing. The application of ANNs to Huffman encoding has not yet been explored. In this work, an ANN model for the Huffman encoding scheme is developed, and the accuracy of the model is checked by comparing ANN results with theoretical results. The proposed work has been carried out using MATLAB software.

III. PROPOSED ANN MODEL

The model is developed in several steps, which are discussed one by one below.

A. Preparation of Data Sheet: The model is constructed from a training set, i.e. a set of examples of network inputs with known outputs, called target or desired outputs. Here the network inputs are the probabilities of occurrence of the symbols or characters in a particular text, which are assumed to be known, and the target outputs are the codewords computed by the Huffman algorithm. In this work, Huffman encoding for four symbols is considered, and the corresponding probability values are taken as inputs.

The four symbols are taken as A, B, C and D. Their probabilities of occurrence PA, PB, PC and PD are assumed to be known, with PA ≥ PB ≥ PC ≥ PD and PA + PB + PC + PD = 1. Table I is then prepared by theoretically calculating the codewords with the Huffman algorithm, where CA, CB, CC and CD are the codewords for symbols A, B, C and D respectively.

Careful analysis of Table I shows that there are two cases.

1) Case 1: if |PA - (PB + PC + PD)| ≤ |(PA + PB) - (PC + PD)|, then CA is 1 bit long, as PA is the highest probability, and CB is 2 bits long, as PB is less than PA. CC and CD are each 3 bits long, as PC and PD are less than PB and nearly equal.

2) Case 2: if |PA - (PB + PC + PD)| > |(PA + PB) - (PC + PD)|, then CA, CB, CC and CD are all 2 bits long, as PA, PB, PC and PD are nearly equal.

According to these two cases, two data sheets (Table II and Table III) are prepared.
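The two cases can be checked against a standard Huffman construction. The sketch below computes only codeword lengths (the choice of 0 or 1 on each branch is a labeling convention) and applies the case rule stated above. The names `huffman_code_lengths` and `select_case` and the small tolerance `eps` are assumptions introduced for illustration. Working through the algebra with the probabilities sorted and summing to 1, the case 2 condition reduces to PC + PD > PA, which is when the Huffman algorithm merges A with B at its second step.

```python
import heapq
from itertools import count

def huffman_code_lengths(probs):
    """Return the Huffman codeword length of each symbol, in input order."""
    tie = count()  # unique tie-breaker so the heap never compares the lists
    heap = [(p, next(tie), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        # Merge the two least probable nodes.
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for i in group1 + group2:
            lengths[i] += 1  # every symbol under the merged node gains one bit
        heapq.heappush(heap, (p1 + p2, next(tie), group1 + group2))
    return lengths

def select_case(pa, pb, pc, pd, eps=1e-9):
    """Apply the case rule: 1 for lengths (1, 2, 3, 3), 2 for (2, 2, 2, 2)."""
    left = abs(pa - (pb + pc + pd))
    right = abs((pa + pb) - (pc + pd))
    # eps is an assumed guard against floating-point noise when both sides tie.
    return 1 if left <= right + eps else 2
```

When PA equals PC + PD exactly, both length patterns give the same average codeword length, so which one a Huffman implementation returns depends on how it breaks ties.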

TABLE I: CODEWORDS COMPUTED THEORETICALLY THROUGH HUFFMAN ALGORITHM

| S. No. | PA | PB | PC | PD | CA | CB | CC | CD |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.36 | 0.33 | 0.18 | 0.13 | 1 | 00 | 010 | 011 |
| 2 | 0.47 | 0.23 | 0.19 | 0.11 | 1 | 01 | 000 | 001 |
| 3 | 0.54 | 0.19 | 0.15 | 0.12 | 0 | 11 | 100 | 101 |
| 4 | 0.40 | 0.30 | 0.20 | 0.10 | 1 | 00 | 010 | 011 |
| 5 | 0.25 | 0.25 | 0.25 | 0.25 | 00 | 01 | 10 | 11 |
| 6 | 0.30 | 0.30 | 0.20 | 0.20 | 00 | 01 | 10 | 11 |
| 7 | 0.94 | 0.03 | 0.02 | 0.01 | 0 | 10 | 110 | 111 |
| 8 | 0.69 | 0.21 | 0.05 | 0.05 | 0 | 10 | 110 | 111 |
| 9 | 0.77 | 0.14 | 0.06 | 0.03 | 0 | 10 | 110 | 111 |
| 10 | 0.67 | 0.11 | 0.11 | 0.11 | 0 | 11 | 100 | 101 |
| 11 | 0.30 | 0.30 | 0.25 | 0.15 | 00 | 01 | 10 | 11 |
| 12 | 0.33 | 0.33 | 0.21 | 0.13 | 00 | 01 | 10 | 11 |
| 13 | 0.28 | 0.28 | 0.28 | 0.16 | 00 | 01 | 10 | 11 |
| 14 | 0.84 | 0.10 | 0.03 | 0.03 | 0 | 10 | 110 | 111 |
| 15 | 0.60 | 0.20 | 0.10 | 0.10 | 0 | 10 | 110 | 111 |
| 16 | 0.31 | 0.23 | 0.23 | 0.23 | 00 | 01 | 10 | 11 |
| 17 | 0.29 | 0.27 | 0.23 | 0.21 | 00 | 01 | 10 | 11 |
| 18 | 0.40 | 0.20 | 0.20 | 0.20 | 1 | 01 | 000 | 001 |
| 19 | 0.50 | 0.20 | 0.20 | 0.10 | 0 | 11 | 100 | 101 |
| 20 | 0.31 | 0.30 | 0.28 | 0.11 | 00 | 01 | 10 | 11 |
| 21 | 0.63 | 0.18 | 0.18 | 0.01 | 0 | 11 | 100 | 101 |
| 22 | 0.58 | 0.31 | 0.10 | 0.01 | 0 | 10 | 110 | 111 |
| 23 | 0.44 | 0.24 | 0.20 | 0.12 | 1 | 01 | 000 | 001 |
| 24 | 0.27 | 0.26 | 0.25 | 0.22 | 00 | 01 | 10 | 11 |
| 25 | 0.26 | 0.26 | 0.25 | 0.23 | 00 | 01 | 10 | 11 |
| 26 | 0.81 | 0.09 | 0.08 | 0.02 | 0 | 11 | 100 | 101 |
| 27 | 0.55 | 0.15 | 0.15 | 0.15 | 0 | 11 | 100 | 101 |
| 28 | 0.32 | 0.28 | 0.22 | 0.18 | 00 | 01 | 10 | 11 |
| 29 | 0.37 | 0.29 | 0.20 | 0.14 | 1 | 01 | 000 | 001 |
| 30 | 0.41 | 0.31 | 0.18 | 0.10 | 1 | 00 | 010 | 011 |
| 31 | 0.51 | 0.24 | 0.15 | 0.10 | 0 | 11 | 100 | 101 |
| 32 | 0.64 | 0.31 | 0.03 | 0.02 | 0 | 10 | 110 | 111 |

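TABLE II: DATA SHEET FOR CASE 1

Network inputs: probabilities PA, PB, PC, PD. Target outputs: the bits of codewords CA (1 bit), CB (CB1, CB2), CC (CC1 to CC3) and CD (CD1 to CD3).

| S. No. | PA | PB | PC | PD | CA | CB1 | CB2 | CC1 | CC2 | CC3 | CD1 | CD2 | CD3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.36 | 0.33 | 0.18 | 0.13 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 |
| 2 | 0.47 | 0.23 | 0.19 | 0.11 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |
| 3 | 0.54 | 0.19 | 0.15 | 0.12 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 4 | 0.40 | 0.30 | 0.20 | 0.10 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 |
| 5 | 0.94 | 0.03 | 0.02 | 0.01 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 6 | 0.69 | 0.21 | 0.05 | 0.05 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 7 | 0.77 | 0.14 | 0.06 | 0.03 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 8 | 0.67 | 0.11 | 0.11 | 0.11 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 9 | 0.84 | 0.10 | 0.03 | 0.03 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 10 | 0.60 | 0.20 | 0.10 | 0.10 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 11 | 0.40 | 0.20 | 0.20 | 0.20 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |
| 12 | 0.50 | 0.20 | 0.20 | 0.10 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 13 | 0.63 | 0.18 | 0.18 | 0.01 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 14 | 0.58 | 0.31 | 0.10 | 0.01 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 15 | 0.44 | 0.24 | 0.20 | 0.12 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |
| 16 | 0.81 | 0.09 | 0.08 | 0.02 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 17 | 0.55 | 0.15 | 0.15 | 0.15 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 18 | 0.37 | 0.29 | 0.20 | 0.14 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |
| 19 | 0.41 | 0.31 | 0.18 | 0.10 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 |
| 20 | 0.51 | 0.24 | 0.15 | 0.10 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| 21 | 0.64 | 0.31 | 0.03 | 0.02 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |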

TABLE III: DATA SHEET FOR CASE 2

Network inputs: probabilities PA, PB, PC, PD. Target outputs: the bits of codewords CA (CA1, CA2), CB (CB1, CB2), CC (CC1, CC2) and CD (CD1, CD2).

| S. No. | PA | PB | PC | PD | CA1 | CA2 | CB1 | CB2 | CC1 | CC2 | CD1 | CD2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.25 | 0.25 | 0.25 | 0.25 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 2 | 0.30 | 0.30 | 0.20 | 0.20 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 3 | 0.30 | 0.30 | 0.25 | 0.15 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 4 | 0.33 | 0.33 | 0.21 | 0.13 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 5 | 0.28 | 0.28 | 0.28 | 0.16 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 6 | 0.31 | 0.23 | 0.23 | 0.23 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 7 | 0.29 | 0.27 | 0.23 | 0.21 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 8 | 0.31 | 0.30 | 0.28 | 0.11 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 9 | 0.27 | 0.26 | 0.25 | 0.22 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 10 | 0.26 | 0.26 | 0.25 | 0.23 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
| 11 | 0.32 | 0.28 | 0.22 | 0.18 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
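The target outputs in Tables II and III are the codewords of Table I split into individual bits. A minimal sketch of that conversion follows; the function name `codewords_to_targets` is hypothetical and is not taken from the paper.

```python
def codewords_to_targets(codewords):
    """Flatten codeword strings such as ["1", "00", "010", "011"] into bits."""
    targets = []
    for word in codewords:
        for bit in word:
            if bit not in "01":
                raise ValueError("codewords must contain only 0 and 1")
            targets.append(int(bit))
    return targets
```

For a case 1 row this yields 9 target values (1 + 2 + 3 + 3 bits) and for a case 2 row it yields 8 (four codewords of 2 bits), which is why the two data sheets have different numbers of output columns.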

B. Neural Network Architecture: The neural network architecture used in this work is the multilayer perceptron (MLP). The numbers of input and output layer neurons are set equal to the numbers of inputs and outputs in the data sheet. The number of hidden layers and the number of hidden layer neurons are found by trial and error. The activation function is tan-sigmoid for the hidden layer neurons and linear for the output layer neurons. Multiple layers of neurons with nonlinear transfer functions, e.g. sigmoid, allow the network to learn both nonlinear and linear relationships between network inputs and outputs. The linear output layer lets the network produce values outside the range -1 to +1.

1) Network architecture for case 1: There are 4 input and 9 output neurons, as determined by its data sheet (Table II). The numbers of hidden neurons and hidden layers found by trial and error are 6 and 1 respectively. The architecture is shown in Fig. 2.

2) Network architecture for case 2: There are 4 input and 8 output neurons, as determined by its data sheet (Table III). The numbers of hidden neurons and hidden layers found by trial and error are 4 and 1 respectively. The architecture is shown in Fig. 3.

Fig. 2 Network architecture for case 1

Fig. 3 Network architecture for case 2
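The two architectures can be sketched as a plain forward pass with a tan-sigmoid hidden layer (mathematically the same as `tanh`) and a linear output layer. This is an illustration in Python rather than the MATLAB implementation used in the work; the names `init_layer` and `forward`, the random seed and the weight range are assumptions, and the weights are untrained placeholders. The bias is added here, which corresponds to the threshold form with the sign of the bias flipped.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Return (weights, biases) filled with small random, untrained values."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return weights, biases

def forward(x, hidden, output):
    """Forward pass: tanh hidden layer followed by a linear output layer."""
    w_h, b_h = hidden
    w_o, b_o = output
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(w_h, b_h)]
    return [sum(w * hi for w, hi in zip(row, h)) + b
            for row, b in zip(w_o, b_o)]

rng = random.Random(0)
case1_net = (init_layer(4, 6, rng), init_layer(6, 9, rng))  # 4-6-9 network
case2_net = (init_layer(4, 4, rng), init_layer(4, 8, rng))  # 4-4-8 network
y1 = forward([0.36, 0.33, 0.18, 0.13], *case1_net)  # 9 raw outputs
y2 = forward([0.25, 0.25, 0.25, 0.25], *case2_net)  # 8 raw outputs
```

Because the weights are random, `y1` and `y2` are not meaningful codeword bits; only after training would the outputs be expected to approach the targets in the data sheets.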

C. Training: The networks above are trained using the BP algorithm. During training, the weights and biases of the network are iteratively adjusted to minimize the network performance function. The default performance function for feedforward networks is the mean square error (MSE), which is the average squared error between the network outputs and the target outputs. BP learning is implemented in batch mode, where adjustments are made to the network parameters on an epoch-by-epoch basis and each epoch consists of the entire set of training examples. The Levenberg-Marquardt (LM) algorithm is incorporated into the BP algorithm for training the networks because it is generally the fastest training algorithm for networks of moderate size. In each case, training is stopped if the performance function drops below a predefined tolerance level, set at $10^{-5}$ in this work; otherwise training continues for another epoch. Training is shown in Fig. 4 and Fig. 5.

[Plot: performance on a logarithmic axis from 10^-6 to 10^2 versus epochs; training curve in blue, goal in black. Performance is 7.90593e-006, goal is 1e-005; 29 epochs.]

Fig. 4 Training for case 1

[Plot: performance on a logarithmic axis from 10^-6 to 10^2 versus epochs; training curve in blue, goal in black. Performance is 4.90201e-007, goal is 1e-005; 5 epochs.]

Fig. 5 Training for case 2
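The performance function and the stopping rule used in training can be sketched as follows. This sketch does not implement Levenberg-Marquardt: the per-epoch update is a caller-supplied placeholder, and the names `mse`, `train_batch`, `update_epoch`, `evaluate` and `max_epochs` are assumptions introduced for illustration.

```python
def mse(outputs, targets):
    """Mean squared error over all examples and all output neurons."""
    total, n = 0.0, 0
    for out_row, tgt_row in zip(outputs, targets):
        for o, t in zip(out_row, tgt_row):
            total += (o - t) ** 2
            n += 1
    return total / n

def train_batch(update_epoch, evaluate, goal=1e-5, max_epochs=1000):
    """Run whole-epoch updates until the performance drops below the goal.

    update_epoch() adjusts the parameters once using the entire training set;
    evaluate() returns the current performance, e.g. the MSE.
    Returns (epochs_run, final_performance).
    """
    perf = evaluate()
    for epoch in range(1, max_epochs + 1):
        update_epoch()
        perf = evaluate()
        if perf < goal:
            return epoch, perf
    return max_epochs, perf
```

The cap `max_epochs` is an added safeguard so the loop ends even if the goal is never reached; the paper only states the tolerance of 1e-5.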

D. Development of General Model

A general model has been developed by combining the network architectures shown in Fig. 2 and Fig. 3. During encoding, the appropriate network architecture is selected automatically depending on the given symbol probabilities. The flow chart for this general model is shown in Fig. 6.


Fig. 6 Flow chart for general model
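The selection step of the general model can be sketched as a small dispatcher that applies the case condition from Section III-A and forwards the probabilities to the matching network. The function name `encode` and the callables `case1_net` and `case2_net` are hypothetical stand-ins for the two trained networks; the input checks are an added assumption based on the constraints stated for the probabilities.

```python
def encode(probs, case1_net, case2_net):
    """Route four sorted probabilities to the network for their case.

    Returns (case_number, raw_network_outputs).
    """
    pa, pb, pc, pd = probs
    if not (pa >= pb >= pc >= pd):
        raise ValueError("probabilities must satisfy PA >= PB >= PC >= PD")
    if abs(pa + pb + pc + pd - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    left = abs(pa - (pb + pc + pd))
    right = abs((pa + pb) - (pc + pd))
    if left <= right:
        return 1, case1_net(probs)  # expected to return 9 outputs
    return 2, case2_net(probs)      # expected to return 8 outputs
```

Keeping the rule in one place means the two networks never see inputs from the other case, which matches how the two data sheets were split for training.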

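TABLE IV: COMPARISON OF ANN RESULTS WITH THEORETICAL RESULTS

| Case | S. No. | PA | PB | PC | PD | ANN CA | ANN CB | ANN CC | ANN CD | Theoretical CA | Theoretical CB | Theoretical CC | Theoretical CD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 0.47 | 0.21 | 0.19 | 0.13 | 1 | 01 | 000 | 001 | 1 | 01 | 000 | 001 |
| 1 | 2 | 0.56 | 0.33 | 0.06 | 0.05 | 0 | 10 | 110 | 111 | 0 | 10 | 110 | 111 |
| 1 | 3 | 0.71 | 0.21 | 0.04 | 0.04 | 0 | 10 | 110 | 111 | 0 | 10 | 110 | 111 |
| 1 | 4 | 0.71 | 0.10 | 0.10 | 0.09 | 0 | 11 | 100 | 101 | 0 | 11 | 100 | 101 |
| 1 | 5 | 0.89 | 0.05 | 0.04 | 0.02 | 0 | 11 | 100 | 101 | 0 | 11 | 100 | 101 |
| 1 | 6 | 0.39 | 0.30 | 0.20 | 0.11 | 1 | 01 | 000 | 001 | 1 | 01 | 000 | 001 |
| 1 | 7 | 0.42 | 0.34 | 0.21 | 0.03 | 1 | 00 | 010 | 011 | 1 | 00 | 010 | 011 |
| 1 | 8 | 0.60 | 0.30 | 0.05 | 0.05 | 0 | 10 | 110 | 111 | 0 | 10 | 110 | 111 |
| 1 | 9 | 0.34 | 0.32 | 0.18 | 0.16 | 1 | 01 | 000 | 001 | 1 | 01 | 000 | 001 |
| 2 | 1 | 0.32 | 0.32 | 0.22 | 0.14 | 00 | 01 | 10 | 11 | 00 | 01 | 10 | 11 |
| 2 | 2 | 0.29 | 0.29 | 0.29 | 0.13 | 00 | 01 | 10 | 11 | 00 | 01 | 10 | 11 |
| 2 | 3 | 0.37 | 0.21 | 0.21 | 0.21 | 00 | 01 | 10 | 11 | 00 | 01 | 10 | 11 |
| 2 | 4 | 0.28 | 0.28 | 0.22 | 0.22 | 00 | 01 | 10 | 11 | 00 | 01 | 10 | 11 |
| 2 | 5 | 0.27 | 0.26 | 0.24 | 0.23 | 00 | 01 | 10 | 11 | 00 | 01 | 10 | 11 |
| 2 | 6 | 0.32 | 0.30 | 0.28 | 0.10 | 00 | 01 | 10 | 11 | 00 | 01 | 10 | 11 |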


IV. RESULTS AND DISCUSSION

Each trained network has been tested with a test set, for which the outcomes are known but not provided to the network, to see how well the training has worked. The test set indicates what errors may occur during future use of the network. This set is not used during training and can therefore be regarded as new data entered by the user of the neural network application.

When the test set is presented to the network, the network outputs are real-valued (decimal) numbers. Since each actual output is either 0 or 1, the ANN results are rounded to the nearest integer: a result near 0 (e.g. 0.0016, 0.0031, -0.0005) is rounded to 0, and a result near 1 (e.g. 1.0009, 0.9999, 0.9987) is rounded to 1. The ANN results have been compared with the theoretical results in Table IV, which shows that they match. Thus, once the network is trained, 100% accuracy is achieved on the new data in the test set.
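The rounding step and the regrouping of output bits into codewords can be sketched as below. The function name `decode_outputs` is hypothetical, and the example vector in the usage note merely arranges output values of the kind quoted above; it is not a recorded network output.

```python
def decode_outputs(outputs, lengths):
    """Round raw network outputs to bits and regroup them into codewords.

    lengths gives the codeword lengths in symbol order,
    e.g. (1, 2, 3, 3) for case 1 or (2, 2, 2, 2) for case 2.
    """
    if len(outputs) != sum(lengths):
        raise ValueError("number of outputs must equal the total codeword length")
    # Round to the nearest integer; outputs are assumed to lie near 0 or 1.
    bits = [int(round(v)) for v in outputs]
    words, start = [], 0
    for n in lengths:
        words.append("".join(str(b) for b in bits[start:start + n]))
        start += n
    return words
```

For instance, `decode_outputs([1.0009, 0.0016, -0.0005, 0.0031, 0.9987, 0.0, 0.0, 0.9999, 1.0], (1, 2, 3, 3))` regroups the nine rounded bits into the four codewords of a case 1 row.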

V. CONCLUSIONS

A new application of ANNs in the field of source encoding has been explored. A general ANN model for the Huffman encoding scheme has been developed successfully for 4 input symbols. The agreement of the ANN results with the theoretical results verifies the accuracy of the developed model. This approach gives a fast and easy way to write a Huffman code. The model can be used as a teaching aid as well as in applications that require encoding of data.

REFERENCES

[1] D.A. Lelewer and D.S. Hirschberg, "Data compression", Computing Surveys, vol. 19, pp. 261-296, Sept. 1987.

[2] D.A. Huffman, "A method for the construction of minimum redundancy codes", in Proc. IRE, Sept. 1952, vol. 40, pp. 1098-1101.

[3] X. Yao, "Evolving artificial neural networks", in Proc. IEEE, Sept. 1999, vol. 87, pp. 1423-1447.

[4] B. Yegnanarayana, Artificial Neural Networks, New Delhi, India: Prentice-Hall of India Private Limited, 2006.

[5] S. Shetty and K.K. Achary, "Audio data mining using multi-perceptron artificial neural network", International Journal of Computer Science and Network Security (IJCSNS), vol. 8, pp. 224-229, Oct. 2008.

[6] D. Shanthi, G. Sahoo and N. Saravanan, "Designing an artificial neural network model for the prediction of Thrombo-embolic stroke", International Journals of Biometric and Bioinformatics (IJBB), vol. 3, pp. 10-18, Jan. 2009.

[7] M.W. Gardner and S.R. Dorling, "Artificial neural networks (The multilayer perceptron)—A review of applications in the atmospheric sciences", Atmospheric Environment, vol. 32, pp. 2627-2636, June 1998.

[8] D.E. Rumelhart, G.E. Hinton and R.J. Williams, Learning Internal Representations by Error Propagation, in D.E. Rumelhart and J.L. McClelland, editors, Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Cambridge, MA: MIT Press, 1986, vol. 1, pp. 318-362.

[9] M. Sharma, "Compression using Huffman coding", International Journal of Computer Science and Network Security (IJCSNS), vol. 10, pp. 133-141, May 2010.

[10] W. Chanhemo, H.R. Mgombelo, O.F. Hamad and T. Marwala, "Design of encoding calculator software for Huffman and Shannon-Fano algorithms", International Science Index, vol. 5, pp. 500-506, June 2011.

[11] D. Scocco (2011) Implementing Huffman coding in C. [Online]. Available: http://www.programminglogic.com/implementing-huffman-coding-in-c/


- A Novel Approach For Code Smells Detection Based On Deep LearningDocument5 pagesA Novel Approach For Code Smells Detection Based On Deep Learning4048 Sivashalini.GNo ratings yet

- An Efficient Parallel Approach ForDocument11 pagesAn Efficient Parallel Approach ForVigneshInfotechNo ratings yet

- Master Thesis Neural NetworkDocument4 pagesMaster Thesis Neural Networktonichristensenaurora100% (1)

- Development and Implementation of Artificial Neural Networks For Intrusion Detection in Computer NetworkDocument5 pagesDevelopment and Implementation of Artificial Neural Networks For Intrusion Detection in Computer Networks.pawar.19914262No ratings yet

- An Improved DV-Hop Localization Algorithm For Wire PDFDocument6 pagesAn Improved DV-Hop Localization Algorithm For Wire PDFM Chandan ShankarNo ratings yet

- Abstractive Text Summarization Using Deep LearningDocument7 pagesAbstractive Text Summarization Using Deep LearningIJRASETPublicationsNo ratings yet

- P For Finge Parallel Processing Erprint Feature Extract IonDocument6 pagesP For Finge Parallel Processing Erprint Feature Extract IonAshish MograNo ratings yet

- On The Deployment of The Memory BusDocument7 pagesOn The Deployment of The Memory BusLarchNo ratings yet

- V3I1-0198Document5 pagesV3I1-0198manabideka19No ratings yet

- An Understanding of Public-Private Key Pairs: Jmalick@landid - Uk, Broughl@shefield - Uk, Bwong@econ - IrDocument6 pagesAn Understanding of Public-Private Key Pairs: Jmalick@landid - Uk, Broughl@shefield - Uk, Bwong@econ - IrVishnuNo ratings yet

- Evolutionary Algorithms and Neural Networks: Theory and ApplicationsFrom EverandEvolutionary Algorithms and Neural Networks: Theory and ApplicationsNo ratings yet

- Document 3Document16 pagesDocument 3Su BoccardNo ratings yet

- III-II CSM (Ar 20) DL 5 Units Question AnswersDocument108 pagesIII-II CSM (Ar 20) DL 5 Units Question AnswersSravan JanaNo ratings yet

- Document 3Document16 pagesDocument 3Su BoccardNo ratings yet

- 19bci0197 VL2021220502453 Pe003 1 PDFDocument26 pages19bci0197 VL2021220502453 Pe003 1 PDFHarshul GuptaNo ratings yet

- SL NO. Name Usn Number Roll NoDocument10 pagesSL NO. Name Usn Number Roll NoVîñåÿ MantenaNo ratings yet

- Subspace Methods For Pattern Recognition in Intelligent Environment - Yen-Wei Chen, Lakhmi C. Jain (Eds.) (2014)Document210 pagesSubspace Methods For Pattern Recognition in Intelligent Environment - Yen-Wei Chen, Lakhmi C. Jain (Eds.) (2014)da daNo ratings yet

- Fyp Research PaperDocument4 pagesFyp Research Paperc9r5wdf5100% (1)

- Multimodal, Stochastic Symmetries For E-Commerce: Elliot Gnatcher, PH.D., Associate Professor of Computer ScienceDocument8 pagesMultimodal, Stochastic Symmetries For E-Commerce: Elliot Gnatcher, PH.D., Associate Professor of Computer Sciencemaldito92No ratings yet

- Implementation of Hamming Network Algorithm To Decipher The Characters of Pigpen Code in ScoutingDocument9 pagesImplementation of Hamming Network Algorithm To Decipher The Characters of Pigpen Code in ScoutingIRJCS-INTERNATIONAL RESEARCH JOURNAL OF COMPUTER SCIENCENo ratings yet

- Recent Advances in Computer Vision Applications Using Parallel ProcessingDocument126 pagesRecent Advances in Computer Vision Applications Using Parallel ProcessingMohamed EssamNo ratings yet

- Applied Sciences: Using Improved Brainstorm Optimization Algorithm For Hardware/Software PartitioningDocument17 pagesApplied Sciences: Using Improved Brainstorm Optimization Algorithm For Hardware/Software PartitioningValentin MotocNo ratings yet

- Scimakelatex 78982 Asdf Asdfa Sdfasdasdff AsdfasdfDocument8 pagesScimakelatex 78982 Asdf Asdfa Sdfasdasdff Asdfasdfefg243No ratings yet

- PHD Thesis On Elliptic Curve CryptographyDocument6 pagesPHD Thesis On Elliptic Curve Cryptographylorigilbertgilbert100% (2)

- Thesis Report On Software EngineeringDocument4 pagesThesis Report On Software Engineeringanitastrongannarbor100% (2)

- Quantization of Product Using Collaborative Filtering Based On ClusterDocument9 pagesQuantization of Product Using Collaborative Filtering Based On ClusterIJRASETPublicationsNo ratings yet

- Recovering Signal Energy From The Cyclic Prefix in OFDMDocument7 pagesRecovering Signal Energy From The Cyclic Prefix in OFDMVijayShindeNo ratings yet

- Work CocolisoDocument6 pagesWork Cocolisoalvarito2009No ratings yet

- Bachelor Thesis Informatik ThemaDocument8 pagesBachelor Thesis Informatik Themafesegizipej2100% (1)

- Sign Language Recognition Using Deep LearningDocument6 pagesSign Language Recognition Using Deep LearningIJRASETPublicationsNo ratings yet

- Motor Imagery ClassificationDocument18 pagesMotor Imagery ClassificationAtharva dubeyNo ratings yet

- Pip: Certifiable, Replicated SymmetriesDocument5 pagesPip: Certifiable, Replicated Symmetriesalvarito2009No ratings yet

- Vlsi Term Paper TopicsDocument7 pagesVlsi Term Paper Topicsafmzxppzpvoluf100% (1)

- Complex Document Layout Segmentation Based On An Encoder-Decoder ArchitectureDocument7 pagesComplex Document Layout Segmentation Based On An Encoder-Decoder Architectureroshan9786No ratings yet

- III-II CSM (Ar 20) DL - Units - 1 & 2 - Question Answers As On 4-3-23Document56 pagesIII-II CSM (Ar 20) DL - Units - 1 & 2 - Question Answers As On 4-3-23Sravan JanaNo ratings yet

- Modified Gath-Geva Fuzzy Clustering For Identifica PDFDocument18 pagesModified Gath-Geva Fuzzy Clustering For Identifica PDFMauludin RahmanNo ratings yet

- The Thing That Is in ExistenceDocument7 pagesThe Thing That Is in Existencescribduser53No ratings yet

- Robust, Virtual ModelsDocument6 pagesRobust, Virtual ModelsItSecKnowledgeNo ratings yet

- Bachelor Thesis Informatik PDFDocument8 pagesBachelor Thesis Informatik PDFCynthia King100% (2)

- Advanced Hough-Based Method For On-Device Document LocalizationDocument12 pagesAdvanced Hough-Based Method For On-Device Document LocalizationNguyen PhamNo ratings yet

- Cloning DectectionDocument5 pagesCloning Dectectioner.manishgoyal.ghuddNo ratings yet

- ISSN: 1311-8080 (Printed Version) ISSN: 1314-3395 (On-Line Version) Url: HTTP://WWW - Ijpam.eu Special IssueDocument6 pagesISSN: 1311-8080 (Printed Version) ISSN: 1314-3395 (On-Line Version) Url: HTTP://WWW - Ijpam.eu Special IssueHoàng Kim ThắngNo ratings yet

- Detecting Duplicate Questions in Online Forums Using Machine Learning TechniquesDocument6 pagesDetecting Duplicate Questions in Online Forums Using Machine Learning TechniquesIJRASETPublicationsNo ratings yet

- Neuromorphic Computing and Beyond: Parallel, Approximation, Near Memory, and QuantumFrom EverandNeuromorphic Computing and Beyond: Parallel, Approximation, Near Memory, and QuantumNo ratings yet

- Wings of FireDocument5 pagesWings of FireJitendra AsawaNo ratings yet

- E-Learning Tender RFPDocument41 pagesE-Learning Tender RFPnaidu naga nareshNo ratings yet

- On Annemarie Mols The Body MultipleDocument4 pagesOn Annemarie Mols The Body MultipleMAGALY GARCIA RINCONNo ratings yet

- SG250HX User+Manual V15 20201106Document78 pagesSG250HX User+Manual V15 20201106specopbookieNo ratings yet

- Route Determination Sap SDDocument11 pagesRoute Determination Sap SDAshish SarkarNo ratings yet

- Internal Combustion Engines - R. K. RajputDocument352 pagesInternal Combustion Engines - R. K. RajputmeetbalakumarNo ratings yet

- Sand Testing PreparationDocument3 pagesSand Testing Preparationabubakaratan50% (2)

- Vdocuments - MX - Toyota 8fg45n Forklift Service Repair Manual 1608089928 PDFDocument23 pagesVdocuments - MX - Toyota 8fg45n Forklift Service Repair Manual 1608089928 PDFGUILHERME SANTOSNo ratings yet

- Job Shop Scheduling Vs Flow Shop SchedulingDocument11 pagesJob Shop Scheduling Vs Flow Shop SchedulingMatthew MhlongoNo ratings yet

- A Study On Problem and Prospects of Women Entrepreneur in Coimbatore and Tirupur QuestionnaireDocument6 pagesA Study On Problem and Prospects of Women Entrepreneur in Coimbatore and Tirupur QuestionnaireAsheek ShahNo ratings yet

- TRA 02 Lifting of Skid Units During Rig MovesDocument3 pagesTRA 02 Lifting of Skid Units During Rig MovesPirlo PoloNo ratings yet

- Element Main - JsDocument51 pagesElement Main - JsjaaritNo ratings yet

- Proforma Architect - Owner Agreement: Very Important NotesDocument11 pagesProforma Architect - Owner Agreement: Very Important NotesJean Erika Bermudo BisoñaNo ratings yet

- The PTB Simulation Report: User: Chandran Geetha Pramoth Chandran User Email: 200512299@student - Georgianc.on - CaDocument9 pagesThe PTB Simulation Report: User: Chandran Geetha Pramoth Chandran User Email: 200512299@student - Georgianc.on - CaPramodh ChandranNo ratings yet

- Boq Ipal Pasar Sukamaju2019Document3 pagesBoq Ipal Pasar Sukamaju2019tiopen5ilhamNo ratings yet

- I.K.Gujral Punjab Technical University Jalandhar: Grade Cum Marks SheetDocument1 pageI.K.Gujral Punjab Technical University Jalandhar: Grade Cum Marks SheetAyush KumarNo ratings yet

- Acetone - Deepak PhenolicsDocument1 pageAcetone - Deepak PhenolicsPraful YadavNo ratings yet

- Emerging 4Document41 pagesEmerging 4ebenezerteshe05No ratings yet

- R9 Regular Without Late Fee PDFDocument585 pagesR9 Regular Without Late Fee PDFNithin NiceNo ratings yet

- Rubrics ResumeDocument1 pageRubrics Resumejasmin lappayNo ratings yet

- Microprocessor Book PDFDocument4 pagesMicroprocessor Book PDFJagan Eashwar0% (4)

- List of NDT EquipmentsDocument1 pageList of NDT EquipmentsAnandNo ratings yet

- TDB-E-CM 725-Technisches DatenblattDocument2 pagesTDB-E-CM 725-Technisches DatenblattNarimane Benty100% (1)

- Gradlew of ResourcesDocument43 pagesGradlew of ResourceswepehyduNo ratings yet

- PAPER LahtiharjuDocument15 pagesPAPER LahtiharjuTanner Espinoza0% (1)

- Troy-Bilt 019191 PDFDocument34 pagesTroy-Bilt 019191 PDFSteven W. NinichuckNo ratings yet