Professional Documents

Culture Documents

EXP-3-To Implement CART Decision Tree Algorithm.docx

EXP-3-To Implement CART Decision Tree Algorithm.docx

Uploaded by

badgujarvarad800Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

EXP-3-To Implement CART Decision Tree Algorithm.docx

EXP-3-To Implement CART Decision Tree Algorithm.docx

Uploaded by

badgujarvarad800Copyright:

Available Formats

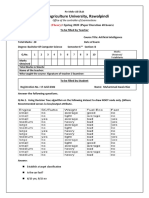

Machine Learning Laboratory (PECO6051L)

Laboratory Report

Experiment No - 3

Batch -

Date of Experiment: __________ Date of Submission: __________

Title: To implement CART decision tree algorithm

___________________________________________________________

Evaluation

1) Attendance [2] ----------------

2) Lab Performance [2] ----------------

3) Oral [1] ----------------

Overall Marks [5] ----------------

Subject Incharge

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Experiment No. 3

TITLE: To implement CART decision tree algorithm

THEORY:

Decision Tree

Decision tree is a non-parametric supervised learning technique, it is a tree of multiple decision

rules, all these rules will be derived from the data features. It is one of most easy to understand &

explainable machine learning algorithm. This ML algorithm is the most fundamental components

of Random Forest, which are most popular & powerful ML algorithm.

Structure of Decision Tree

The figure shows how a decision tree would look like. Each internal node represents a

segment or region. With respect to tree analogy, segments or regions are nodes or leaves

of the tree.

Root Node: This is the first node which is our training data set.

Internal Node: This is the point where subgroup is split to a new sub-group or leaf node.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

We can call this as a decision node as well because this is where node splits further based

on the best attribute of your sub-group.

Leaf Node: Final node from any internal node, this holds the decision.

About nodes in Decision Tree

As mentioned before Decision tree is a tree like structure which will have nested nodes,

the splitting of one node to another happens based on a threshold value of an attribute.

Decision tree algorithm splits the training set (root node) to sub-groups - internal nodes

& any internal node with final sub-group will be the leaf node which we can term it as

a Recursive partitioning.

To split a node Decision Tree algorithm needs best attribute & threshold value. Selection of best

attribute & threshold value pair (f, t) happens based on below algorithms which will give you the

purest nodes. The below algorithms helps to find the measurements of the best attributes:

CART algorithm : Gini Index

ID3 algorithm : Information Gain

C4.5 algorithm : Gain Ratio

CART Algorithm

This algorithm can be used for both classification & regression. CART algorithm uses Gini Index

criterion to split a node to a sub-node. It start with the training set as a root node, after successfully

splitting the root node in two, it splits the subsets using the same logic & again split the sub-

subsets, recursively until it finds further splitting will not give any pure sub-nodes or maximum

number of leaves in a growing tree or termed it as a Tree pruning.

How to calculate Gini Index?

In Gini Index, P is the probability of class i & there is total c classes.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Data set

There are 14 instances of golf playing decisions based on outlook, temperature, humidity and wind

factors.

Day Outlook Temp. Humidity Wind Decision

1 Sunny Hot High Weak No

2 Sunny Hot High Strong No

3 Overcast Hot High Weak Yes

4 Rain Mild High Weak Yes

5 Rain Cool Normal Weak Yes

6 Rain Cool Normal Strong No

7 Overcast Cool Normal Strong Yes

8 Sunny Mild High Weak No

9 Sunny Cool Normal Weak Yes

10 Rain Mild Normal Weak Yes

11 Sunny Mild Normal Strong Yes

12 Overcast Mild High Strong Yes

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

13 Overcast Hot Normal Weak Yes

14 Rain Mild High Strong No

Gini index

Gini index is a metric for classification tasks in CART. It stores sum of squared probabilities of

each class. We can formulate it as illustrated below.

Gini = 1 – Σ (Pi) 2 for i=1 to number of classes

Outlook

Outlook is a nominal feature. It can be sunny, overcast or rain. Final decisions for outlook feature

are as below.

Outlook Yes No Number of instances

Sunny 2 3 5

Overcast 4 0 4

Rain 3 2 5

Gini (Outlook=Sunny) = 1 – (2/5)2 – (3/5)2 = 1 – 0.16 – 0.36 = 0.48

Gini (Outlook=Overcast) = 1 – (4/4)2 – (0/4)2 = 0

Gini (Outlook=Rain) = 1 – (3/5)2 – (2/5)2 = 1 – 0.36 – 0.16 = 0.48

Then, we will calculate weighted sum of Gini indexes for outlook feature.

Gini (Outlook) = (5/14) x 0.48 + (4/14) x 0 + (5/14) x 0.48 = 0.171 + 0 + 0.171 = 0.342

Temperature

Similarly, temperature is a nominal feature and it could have 3 different values: Cool, Hot and

Mild. Let’s summarize decisions for temperature feature.

Temperature Yes No Number of instances

Hot 2 2 4

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Cool 3 1 4

Mild 4 2 6

Gini (Temp=Hot) = 1 – (2/4)2 – (2/4)2 = 0.5

Gini (Temp=Cool) = 1 – (3/4)2 – (1/4)2 = 1 – 0.5625 – 0.0625 = 0.375

Gini (Temp=Mild) = 1 – (4/6)2 – (2/6)2 = 1 – 0.444 – 0.111 = 0.445

We’ll calculate weighted sum of Gini index for temperature feature

Gini (Temp) = (4/14) x 0.5 + (4/14) x 0.375 + (6/14) x 0.445 = 0.142 + 0.107 + 0.190 = 0.439

Humidity

Humidity is a binary class feature. It can be high or normal.

Humidity Yes No Number of instances

High 3 4 7

Normal 6 1 7

Gini (Humidity=High) = 1 – (3/7)2 – (4/7)2 = 1 – 0.183 – 0.326 = 0.489

Gini (Humidity=Normal) = 1 – (6/7)2 – (1/7)2 = 1 – 0.734 – 0.02 = 0.244

Weighted sum for humidity feature will be calculated next

Gini (Humidity) = (7/14) x 0.489 + (7/14) x 0.244 = 0.367

Wind

Wind is a binary class similar to humidity. It can be weak and strong.

Wind Yes No Number of instances

Weak 6 2 8

Strong 3 3 6

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Gini (Wind=Weak) = 1 – (6/8)2 – (2/8)2 = 1 – 0.5625 – 0.062 = 0.375

Gini (Wind=Strong) = 1 – (3/6)2 – (3/6)2 = 1 – 0.25 – 0.25 = 0.5

Gini (Wind) = (8/14) x 0.375 + (6/14) x 0.5 = 0.428

We’ve calculated Gini index values for each feature. The winner will be outlook feature because

its cost is the lowest.

Feature Gini index

Outlook 0.342

Temperature 0.439

Humidity 0.367

Wind 0.428

We’ll put outlook decision at the top of the tree.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

You might realize that sub dataset in the overcast leaf has only yes decisions. This means that

overcast leaf is over.

Focus on the sub dataset for sunny outlook. We need to find the Gini index scores for temperature,

humidity and wind features respectively.

Day Outlook Temp. Humidity Wind Decision

1 Sunny Hot High Weak No

2 Sunny Hot High Strong No

8 Sunny Mild High Weak No

9 Sunny Cool Normal Weak Yes

11 Sunny Mild Normal Strong Yes

Gini of temperature for sunny outlook

Temperature Yes No Number of instances

Hot 0 2 2

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Cool 1 0 1

Mild 1 1 2

Gini (Outlook=Sunny and Temp. =Hot) = 1 – (0/2)2 – (2/2)2 = 0

Gini (Outlook=Sunny and Temp. =Cool) = 1 – (1/1)2 – (0/1)2 = 0

Gini (Outlook=Sunny and Temp. =Mild) = 1 – (1/2)2 – (1/2)2 = 1 – 0.25 – 0.25 = 0.5

Gini (Outlook=Sunny and Temp.) = (2/5) x0 + (1/5) x0 + (2/5) x0.5 = 0.2

Gini of humidity for sunny outlook

Humidity Yes No Number of instances

High 0 3 3

Normal 2 0 2

Gini (Outlook=Sunny and Humidity=High) = 1 – (0/3)2 – (3/3)2 = 0

Gini (Outlook=Sunny and Humidity=Normal) = 1 – (2/2)2 – (0/2)2 = 0

Gini (Outlook=Sunny and Humidity) = (3/5) x0 + (2/5) x0 = 0

Gini of wind for sunny outlook

Wind Yes No Number of instances

Weak 1 2 3

Strong 1 1 2

Gini (Outlook=Sunny and Wind=Weak) = 1 – (1/3)2 – (2/3)2 = 0.266

Gini (Outlook=Sunny and Wind=Strong) = 1- (1/2)2 – (1/2)2 = 0.2

Gini (Outlook=Sunny and Wind) = (3/5) x0.266 + (2/5) x0.2 = 0.466

Decision for sunny outlook

We’ve calculated Gini index scores for feature when outlook is sunny. The winner is humidity

because it has the lowest value.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Feature Gini index

Temperature 0.2

Humidity 0

Wind 0.466

We’ll put humidity check at the extension of sunny outlook.

As seen, decision is always no for high humidity and sunny outlook. On the other hand, decision

will always be yes for normal humidity and sunny outlook. This branch is over.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Now, we need to focus on rain outlook.

Rain outlook

Day Outlook Temp. Humidity Wind Decision

4 Rain Mild High Weak Yes

5 Rain Cool Normal Weak Yes

6 Rain Cool Normal Strong No

10 Rain Mild Normal Weak Yes

14 Rain Mild High Strong No

We’ll calculate Gini index scores for temperature, humidity and wind features when outlook is

rain.

Gini of temperature for rain outlook

Temperature Yes No Number of instances

Cool 1 1 2

Mild 2 1 3

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Gini (Outlook=Rain and Temp. =Cool) = 1 – (1/2)2 – (1/2)2 = 0.5

Gini (Outlook=Rain and Temp. =Mild) = 1 – (2/3)2 – (1/3)2 = 0.444

Gini (Outlook=Rain and Temp.) = (2/5) x0.5 + (3/5) x0.444 = 0.466

Gini of humidity for rain outlook

Humidity Yes No Number of instances

High 1 1 2

Normal 2 1 3

Gini (Outlook=Rain and Humidity=High) = 1 – (1/2)2 – (1/2)2 = 0.5

Gini (Outlook=Rain and Humidity=Normal) = 1 – (2/3)2 – (1/3)2 = 0.444

Gini (Outlook=Rain and Humidity) = (2/5) x0.5 + (3/5) x0.444 = 0.466

Gini of wind for rain outlook

Wind Yes No Number of instances

Weak 3 0 3

Strong 0 2 2

Gini (Outlook=Rain and Wind=Weak) = 1 – (3/3)2 – (0/3)2 = 0

Gini (Outlook=Rain and Wind=Strong) = 1 – (0/2)2 – (2/2)2 = 0

Gini (Outlook=Rain and Wind) = (3/5) x0 + (2/5) x0 = 0

Decision for rain outlook

The winner is wind feature for rain outlook because it has the minimum Gini index score in

features.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

Feature Gini index

Temperature 0.466

Humidity 0.466

Wind 0

Put the wind feature for rain outlook branch and monitor the new sub data sets.

As seen, decision is always yes when wind is weak. On the other hand, decision is always no if

wind is strong. This means that this branch is over.

R. C. Patel Institute of Technology, Shirpur

Machine Learning Laboratory (PECO6051L)

CONCLUSION / RESULT:

In this Experiment, we have implemented CART decision tree algorithm on given dataset.

R. C. Patel Institute of Technology, Shirpur

You might also like

- ID3 Algorithm For Decision TreesDocument16 pagesID3 Algorithm For Decision TreesYiping HuangNo ratings yet

- CISA EXAM-Testing Concept-Recovery Time Objective (RTO) & Recovery Point Objective (RPO)From EverandCISA EXAM-Testing Concept-Recovery Time Objective (RTO) & Recovery Point Objective (RPO)Rating: 1 out of 5 stars1/5 (2)

- (Lec 6) Decision Tree MLDocument26 pages(Lec 6) Decision Tree MLMuhtasim Jawad NafiNo ratings yet

- Machine LearningDocument52 pagesMachine LearningaimahsiddNo ratings yet

- MODULE 4-Dr - GMDocument23 pagesMODULE 4-Dr - GMgeetha megharajNo ratings yet

- Unit IV NotesDocument20 pagesUnit IV Notesaa9128707607No ratings yet

- Classification and PredictionDocument81 pagesClassification and PredictionKrishnan SwamiNo ratings yet

- BAR AssignmentDocument8 pagesBAR AssignmentAkash KumarNo ratings yet

- Session 6 - Decision TreeDocument37 pagesSession 6 - Decision Treevoradhairya09No ratings yet

- Predictive Modeling Week3Document68 pagesPredictive Modeling Week3Kunwar RawatNo ratings yet

- Decision Tree Entropy GiniDocument5 pagesDecision Tree Entropy GiniSudheer RedusNo ratings yet

- 4.decision TreeDocument68 pages4.decision TreesharadNo ratings yet

- Decision TreeDocument10 pagesDecision TreeSameer Khan100% (1)

- Seminar 3Document43 pagesSeminar 3manojmani380No ratings yet

- 3.1 C 4.5 Algorithm-19Document10 pages3.1 C 4.5 Algorithm-19nayan jainNo ratings yet

- CART - Machine LearningDocument29 pagesCART - Machine Learningadnan arshadNo ratings yet

- AiML 4 3Document59 pagesAiML 4 3Nox MidNo ratings yet

- Decision Tree Classification Algorithm: Why Use Decision Trees?Document15 pagesDecision Tree Classification Algorithm: Why Use Decision Trees?shreya sarkarNo ratings yet

- Module 3-1 PDFDocument43 pagesModule 3-1 PDFBirendra Kumar Baniya 1EW19CS024No ratings yet

- Data DescriptionDocument1 pageData DescriptionAyele NugusieNo ratings yet

- Chapter 4classification and PredictionDocument19 pagesChapter 4classification and PredictionsrjaswarNo ratings yet

- Classification With Decision Trees: Instructor: Qiang YangDocument62 pagesClassification With Decision Trees: Instructor: Qiang Yangmalik_genius100% (1)

- Government Engineering College, Modasa: B.E. - Computer Engineering (Semester - VII) 3170724 - Machine LearningDocument3 pagesGovernment Engineering College, Modasa: B.E. - Computer Engineering (Semester - VII) 3170724 - Machine LearningronakNo ratings yet

- Topic 1 - Time Complexity AnalysisDocument14 pagesTopic 1 - Time Complexity Analysisrazaaziz9191No ratings yet

- Extension and Evaluation of ID3 - Decision Tree AlgorithmDocument8 pagesExtension and Evaluation of ID3 - Decision Tree AlgorithmVăn Hoàng TrầnNo ratings yet

- Government Engineering College Modasa Semester:7 (C.E) Machine Learning (3170724)Document27 pagesGovernment Engineering College Modasa Semester:7 (C.E) Machine Learning (3170724)Bhargav BharadiyaNo ratings yet

- Ch4 SupervisedDocument78 pagesCh4 Supervisedhareem iftikharNo ratings yet

- Machine LearningDocument27 pagesMachine Learningsahibpctebca21aNo ratings yet

- Classification With Decision Trees I: Instructor: Qiang YangDocument29 pagesClassification With Decision Trees I: Instructor: Qiang YangPoornima VenkateshNo ratings yet

- R. Vishnu PriyaDocument16 pagesR. Vishnu PriyaAlok KumarNo ratings yet

- COMP 6651 Lecture1 Winter 2022Document46 pagesCOMP 6651 Lecture1 Winter 2022Akshita PatelNo ratings yet

- Unit 2Document20 pagesUnit 2Srujana ShettyNo ratings yet

- Decision Tree LearningDocument42 pagesDecision Tree LearningSuraj HSNo ratings yet

- Chapter 6Document172 pagesChapter 6Koert OosterhuisNo ratings yet

- Lab 08: ID3 - Decision Tree and Linear Regression ObjectivesDocument4 pagesLab 08: ID3 - Decision Tree and Linear Regression Objectiveszombiee hookNo ratings yet

- 2SLS MrozDocument2 pages2SLS Mrozсимона златковаNo ratings yet

- Data Minig Haris Arshad BSSEDocument9 pagesData Minig Haris Arshad BSSEArsh SyedNo ratings yet

- KNNDocument5 pagesKNNUday KumarNo ratings yet

- Classification: Decision TreesDocument30 pagesClassification: Decision TreesAshish TiwariNo ratings yet

- Decision Tree c45Document30 pagesDecision Tree c45AhmadRizalAfaniNo ratings yet

- AVIA Development of An Aerial Fire Identification System Based On Visual Artificial IntelligenceDocument6 pagesAVIA Development of An Aerial Fire Identification System Based On Visual Artificial Intelligencemahruri arifNo ratings yet

- Decision Trees CLSDocument43 pagesDecision Trees CLSAshish TiwariNo ratings yet

- 17-Arid-6382 - Muhammad Awais RiazDocument9 pages17-Arid-6382 - Muhammad Awais RiazLucky Asr AwaisNo ratings yet

- ID3 Lecture4Document25 pagesID3 Lecture4jameelkhalidalanesyNo ratings yet

- EXP 3 Coefficient of Friction FinalDocument5 pagesEXP 3 Coefficient of Friction FinalCef LlameloNo ratings yet

- Decision Tree 2Document20 pagesDecision Tree 2nguyenxuanthai140585No ratings yet

- Naive BayesDocument32 pagesNaive Bayesharshbafna.ei20No ratings yet

- Decision Tree Classifier-Introduction, ID3Document34 pagesDecision Tree Classifier-Introduction, ID3mehra.harshal25No ratings yet

- Decision Tree Random Forest TheoryDocument13 pagesDecision Tree Random Forest Theoryany.pc2500No ratings yet

- Module 2 Notes v1 PDFDocument20 pagesModule 2 Notes v1 PDFsuryaNo ratings yet

- Romi DM 04 Algoritma June2012Document23 pagesRomi DM 04 Algoritma June2012Ratih AflitaNo ratings yet

- ID3 Algorithm: Michael CrawfordDocument28 pagesID3 Algorithm: Michael CrawfordMihir ShahNo ratings yet

- The ID3 AlgorithmDocument9 pagesThe ID3 AlgorithmSiva SankarNo ratings yet

- Big Assignment 2Document10 pagesBig Assignment 2melesse bisemaNo ratings yet

- ID3 Algorithm: Michael CrawfordDocument28 pagesID3 Algorithm: Michael CrawfordMuhammad MudassirNo ratings yet

- VI Sem Machine Learning CS 601Document28 pagesVI Sem Machine Learning CS 601pankaj guptaNo ratings yet

- Visit:: Join Telegram To Get Instant Updates: Contact: MAIL: Instagram: Instagram: Whatsapp ShareDocument21 pagesVisit:: Join Telegram To Get Instant Updates: Contact: MAIL: Instagram: Instagram: Whatsapp ShareMoldyNo ratings yet

- Multi-Objective Optimization using Artificial Intelligence TechniquesFrom EverandMulti-Objective Optimization using Artificial Intelligence TechniquesNo ratings yet

- GRE Physics Review: Comprehensive Review for GRE Physics Subject TestFrom EverandGRE Physics Review: Comprehensive Review for GRE Physics Subject TestNo ratings yet

- Deterministic Pushdown AutomataDocument10 pagesDeterministic Pushdown AutomataSruthisree R MNo ratings yet

- Answer Reference Hart Problem 31 - 12062012Document3 pagesAnswer Reference Hart Problem 31 - 12062012Rhyza Mariele GrapilonNo ratings yet

- Introduction To Artificial Intelligence: PlanningDocument65 pagesIntroduction To Artificial Intelligence: PlanningmikkcsNo ratings yet

- Introduction To Algorithms: Chapter 6: Heap SortDocument59 pagesIntroduction To Algorithms: Chapter 6: Heap SortAyoade Akintayo MichaelNo ratings yet

- Chapter Four - Solutions of A System of Linear EquationsDocument62 pagesChapter Four - Solutions of A System of Linear EquationsAdem Terna SamuelNo ratings yet

- Facebook - LeetCode PDFDocument19 pagesFacebook - LeetCode PDFTuhin KunduNo ratings yet

- Horas de EstudioDocument16 pagesHoras de EstudioAldo Guillermo RojasNo ratings yet

- Cryptographic Hash FunctionsDocument37 pagesCryptographic Hash Functionsyohan suryaNo ratings yet

- CS 2750 Machine LearningDocument14 pagesCS 2750 Machine LearningravigobiNo ratings yet

- Lecture 34 35Document8 pagesLecture 34 35Huzaifa RehanNo ratings yet

- Integrated Task Assignment and Path Planning For Capacitated Multi-Agent Pickup and DeliveryDocument8 pagesIntegrated Task Assignment and Path Planning For Capacitated Multi-Agent Pickup and Deliveryypes61205No ratings yet

- Assignment & Increment OperatorsDocument2 pagesAssignment & Increment OperatorsRajani UpadhyayNo ratings yet

- Cmpe112 Lab1Document4 pagesCmpe112 Lab1xatabi6022No ratings yet

- MC4112 Set2Document3 pagesMC4112 Set2tpgittfcNo ratings yet

- CB - X - Math - CH 2 - Polynomials - Case Study Questions - QPDocument3 pagesCB - X - Math - CH 2 - Polynomials - Case Study Questions - QPdeepak kalalNo ratings yet

- Midterm Lab Quiz 2 - Attempt ReviewDocument6 pagesMidterm Lab Quiz 2 - Attempt ReviewKing Richard D. AmoloNo ratings yet

- BCS304-Module-4 NotesDocument30 pagesBCS304-Module-4 Notesjexehif373No ratings yet

- Minimization of DFADocument21 pagesMinimization of DFAGarvit JoshiNo ratings yet

- 13 Clustering TechniquesDocument47 pages13 Clustering TechniquesAyush RajNo ratings yet

- Linear Regression: Jia-Bin Huang Virginia TechDocument59 pagesLinear Regression: Jia-Bin Huang Virginia TechMr. Raghunath ReddyNo ratings yet

- Cybs6101 Prelim Quiz 1Document2 pagesCybs6101 Prelim Quiz 1Yacob MortNo ratings yet

- Fundamentals of Python: Data Structures: Kenneth A. LambertDocument16 pagesFundamentals of Python: Data Structures: Kenneth A. LambertTanjim AhmedNo ratings yet

- Red-Black Tree - WikipediaDocument20 pagesRed-Black Tree - WikipediaJeya MuruganNo ratings yet

- MCQDocument22 pagesMCQAmol AdhangaleNo ratings yet

- HabibDocument1 pageHabibMuhammad Enam ul HaqNo ratings yet

- Unit 1Document94 pagesUnit 1Bala VishnuNo ratings yet

- Simple Data Types in C: Alan L. Cox Alc@rice - EduDocument44 pagesSimple Data Types in C: Alan L. Cox Alc@rice - EduDavinder SinghNo ratings yet

- Why Study The Theory of Computation?: Implementations Come and GoDocument68 pagesWhy Study The Theory of Computation?: Implementations Come and GoRaj SharmaNo ratings yet

- Ian Stewart On MinesweeperDocument5 pagesIan Stewart On MinesweeperMarcopollo824No ratings yet