Cammd Aims Lecture2

Cammd Aims Lecture2

Uploaded by

RV Whatsapp statusCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Cammd Aims Lecture2

Cammd Aims Lecture2

Uploaded by

RV Whatsapp statusCopyright:

Available Formats

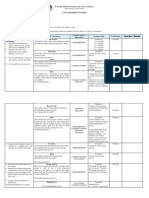

Materials Informatics

Practical Concepts in ML

Rohit Batra

rbatra@iitm.ac.in

LECTURE 2 (Afternoon, June 7, 2024)

Machine learning and Artificial Intelligence in Materials Science

Use the following concepts to improve your ML model performance

• Overfitting

• Regularization

• Normalization

• Feature selection (dimensionality reduction, curse of dimensionality)

• Ensemble models (e.g., random forest)

• Generally, simple methods generalize better on smaller datasets (fewer data points or fewer features)

Other important concepts in ML

• Error metrics (mean square error, R² score)

• Train, Validation, Test error

• Cross-validation

• Overfitting

• Regularization

• Normalization

• Feature selection (dimensionality reduction, curse of dimensionality)

Rohit Batra, Materials Informatics Lab, MME, IIT Madras 2

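Polynomial Regression

We assume that a linear model can explain the data

1-Dimensional example

Quadratic model: $\hat{y} = f(\mathbf{x}) = w_0 + w_1 x_1 + w_2 x_1^2 = \sum_{j=0}^{2} w_j x_1^j$

Linear model: $\hat{y} = \mathbf{w}^T \mathbf{x}$, with $\mathbf{x} = (x_0, x_1, x_1^2)$, where $x_0 = 1$, and $\mathbf{w} = (w_0, w_1, w_2)$

[Figure: data points in the (x, y) plane and the fitted "line"]

How to find the parameters ($\mathbf{w}$) that best fit the data?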
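A minimal sketch of how $\mathbf{w}$ could be found for the quadratic model, assuming ordinary least squares (minimizing the mean square error) is the fitting criterion and that NumPy is available. The function names `design_matrix` and `fit_least_squares` are hypothetical. The key point is that the model is non-linear in $x_1$ but linear in $\mathbf{w}$, so it reduces to a linear least-squares problem.

```python
import numpy as np


def design_matrix(x1, degree=2):
    """Build one row x = (x_0, x_1, x_1^2, ...) per sample, with x_0 = 1."""
    x1 = np.asarray(x1, dtype=float)
    # Column j holds x_1**j, so predictions stay linear in w: y_hat = X @ w
    return np.column_stack([x1**j for j in range(degree + 1)])


def fit_least_squares(x1, y, degree=2):
    """Return w = (w_0, ..., w_degree) minimizing the sum of squared errors."""
    X = design_matrix(x1, degree)
    w, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return w


# Noise-free data generated from y = 1 + 2 x_1 - 0.5 x_1^2 (illustrative only)
x1 = np.linspace(-3.0, 3.0, 20)
y = 1.0 + 2.0 * x1 - 0.5 * x1**2
w = fit_least_squares(x1, y)
print(np.round(w, 6))
```

Only the columns of the design matrix change with the polynomial degree; the least-squares step stays the same.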


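Error Metrics

1-Dimensional example

Error: $e_i = y_i - \hat{y}_i$

[Figure: errors between the data points and the fitted model in the (x, y) plane]

Mean square error: $\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N} (e_i)^2$

Coefficient of determination ($R^2$): $R^2 = 1 - \frac{\sum_{i=1}^{N} (e_i)^2}{\sum_{i=1}^{N} (y_i - \bar{y})^2}$, where $\bar{y}$ is the mean of $\mathbf{y}$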
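Both metrics follow directly from the errors $e_i$. A minimal standard-library sketch; the function names are hypothetical and the numbers are made up for illustration.

```python
def mean_square_error(y, y_hat):
    """MSE = (1/N) * sum_i (y_i - y_hat_i)^2."""
    return sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat)) / len(y)


def coefficient_of_determination(y, y_hat):
    """R^2 = 1 - sum_i e_i^2 / sum_i (y_i - y_bar)^2."""
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))  # sum of squared errors
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)  # spread of y around its mean
    return 1.0 - ss_res / ss_tot


y = [3.0, 5.0, 7.0, 9.0]
y_hat = [2.5, 5.0, 7.5, 9.0]
print(mean_square_error(y, y_hat))  # 0.125
print(coefficient_of_determination(y, y_hat))
```

Here the sum of squared errors is 0.5 and the total sum of squares is 20, so $R^2 = 1 - 0.5/20 = 0.975$. A model that always predicts $\bar{y}$ scores $R^2 = 0$, and a perfect model scores 1.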


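Example: Cross-Validation (CV)

Four-fold CV: all training data is split into four folds. In each CV iteration, one fold is used for validation and the other three for CV training.

CV iteration 1: Validation | CV training | CV training | CV training

CV iteration 2: CV training | Validation | CV training | CV training

CV iteration 3: CV training | CV training | Validation | CV training

CV iteration 4: CV training | CV training | CV training | Validation

Use validation errors for hyper-parameter estimation

Final model training using hyper-parameters from CV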
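The four-fold procedure can be sketched as follows, assuming NumPy, synthetic quadratic data, and the polynomial degree as the hyper-parameter being estimated (the slide does not fix a particular model). The helpers `k_fold_indices` and `cv_error` are hypothetical names.

```python
import numpy as np


def k_fold_indices(n, k, seed=0):
    """Shuffle the sample indices once and split them into k (nearly) equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)


def cv_error(x, y, degree, k=4):
    """Average validation MSE of a polynomial fit of the given degree over k folds."""
    folds = k_fold_indices(len(x), k)
    errors = []
    for i, val_idx in enumerate(folds):
        # Every fold except the i-th forms the CV training set
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        coeffs = np.polyfit(x[train_idx], y[train_idx], degree)
        residuals = y[val_idx] - np.polyval(coeffs, x[val_idx])
        errors.append(np.mean(residuals**2))
    return float(np.mean(errors))


# Synthetic noisy quadratic data (illustrative only)
rng = np.random.default_rng(42)
x = np.linspace(-2.0, 2.0, 40)
y = 1.0 + 0.5 * x - 2.0 * x**2 + rng.normal(scale=0.3, size=x.size)

# Use the validation errors to choose the hyper-parameter
scores = {d: cv_error(x, y, d) for d in range(0, 6)}
best_degree = min(scores, key=scores.get)

# Final model: retrain on all training data with the chosen hyper-parameter
final_coeffs = np.polyfit(x, y, best_degree)
print(best_degree, scores[best_degree])
```

The validation folds are only used to compare settings; the final model is refit once on the full training set, matching the last step on the slide.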


Overfitting

How to resolve overfitting?

Use regularization and cross-validation
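Overfitting is easy to reproduce with the polynomial model from earlier. This sketch assumes NumPy and a synthetic noisy sine curve. It fits polynomials of increasing degree to 10 training points and compares training error with error on separate test points. With degree 9 the curve passes through all 10 training points, so the training error drops to essentially zero, while the test error typically grows.

```python
import numpy as np

rng = np.random.default_rng(0)

# Small noisy training set and a larger test set from the same sine curve
x_train = np.linspace(0.0, 1.0, 10)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(scale=0.2, size=x_train.size)
x_test = rng.uniform(0.0, 1.0, 200)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(scale=0.2, size=x_test.size)


def mse(y, y_hat):
    return float(np.mean((y - y_hat) ** 2))


train_errors, test_errors = {}, {}
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, degree)
    train_errors[degree] = mse(y_train, np.polyval(coeffs, x_train))
    test_errors[degree] = mse(y_test, np.polyval(coeffs, x_test))
    print(f"degree={degree}: train MSE={train_errors[degree]:.4f}, "
          f"test MSE={test_errors[degree]:.4f}")
```

Training error alone can only decrease as the degree grows, which is why the choice has to be made on held-out or cross-validated error.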


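Regularization

Change the cost (or loss) function from $\frac{1}{N}\sum_{i=1}^{N} (e_i)^2$ to $\frac{1}{N}\sum_{i=1}^{N} (e_i)^2 + \lambda \lVert \mathbf{w} \rVert^2$

Solution that minimizes the new cost function: $(X^T X + N\lambda I)\,\hat{\mathbf{w}} = X^T \mathbf{y}$, where the rows of $X$ are the $\mathbf{x}_i^T$. Absorbing the factor $N$ into $\lambda$ gives the common form $(X^T X + \lambda I)\,\hat{\mathbf{w}} = X^T \mathbf{y}$

Caution: other variations are possible, depending on what is regularized!

We impose constraints on $\mathbf{w}$ to avoid over-fitting

How to decide $\lambda$?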
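A minimal sketch of this regularized (ridge) least-squares fit, assuming NumPy, synthetic data, and the cost $\frac{1}{N}\lVert \mathbf{y} - X\mathbf{w} \rVert^2 + \lambda \lVert \mathbf{w} \rVert^2$ with every weight penalized. Setting the gradient to zero gives $(X^T X + N\lambda I)\mathbf{w} = X^T\mathbf{y}$. The function names are hypothetical.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Minimize (1/N) * ||y - X w||^2 + lam * ||w||^2.

    Setting the gradient to zero gives (X^T X + N * lam * I) w = X^T y.
    Every weight is penalized here, including the one multiplying x_0 = 1.
    """
    n, d = X.shape
    A = X.T @ X + n * lam * np.eye(d)
    return np.linalg.solve(A, X.T @ y)


def ridge_cost(X, y, w, lam):
    """Value of the regularized cost function for weights w."""
    residuals = y - X @ w
    return float(np.mean(residuals**2) + lam * np.sum(w**2))


rng = np.random.default_rng(1)
x1 = rng.uniform(-1.0, 1.0, 15)
X = np.column_stack([x1**j for j in range(6)])  # degree-5 polynomial features
y = np.sin(3.0 * x1) + rng.normal(scale=0.1, size=x1.size)

for lam in (0.0, 1e-3, 1e-1):
    w = ridge_fit(X, y, lam)
    print(f"lambda={lam:g}: ||w||^2={np.sum(w**2):.3f}, "
          f"cost={ridge_cost(X, y, w, lam):.4f}")
```

Larger $\lambda$ shrinks $\lVert \mathbf{w} \rVert$, and $\lambda = 0$ recovers ordinary least squares. As the slide cautions, other variants exist. Leaving $w_0$ unpenalized, for example, replaces $I$ with a diagonal matrix whose first entry is 0. One common way to decide $\lambda$ is to pick the value with the lowest cross-validation error.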


Curse of dimensionality

https://medium.com/analytics-vidhya/the-curse-of-dimensionality-and-its-cure-f9891ab72e5c

More features → Higher data sparsity

More features → More training samples needed
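A small numerical illustration of "more features → higher data sparsity", assuming points drawn uniformly from the unit hypercube. With the number of samples fixed at 200, the average distance from each point to its nearest neighbour grows quickly as features are added. The helper name is hypothetical, and only the Python standard library is used (`math.dist` needs Python 3.8+).

```python
import math
import random


def mean_nearest_neighbor_distance(n_points, dim, seed=0):
    """Average distance from each point to its nearest neighbour, for points
    drawn uniformly from the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    pts = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    total = 0.0
    for i, p in enumerate(pts):
        total += min(math.dist(p, q) for j, q in enumerate(pts) if j != i)
    return total / n_points


# Same number of samples, increasing number of features
for dim in (1, 2, 5, 10, 20):
    print(dim, round(mean_nearest_neighbor_distance(200, dim), 3))
```

Put another way, keeping a density of 10 samples along each feature axis needs $10^d$ samples in $d$ dimensions. This is why more features call for more training data.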


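Feature Selection

Iteration 1: train a model with each candidate feature from the feature set, giving errors E1, E2, E3, E4. The feature with the lowest error is moved to the selected feature set.

Iteration 2: train a model with each remaining feature added to the selected feature set, giving errors E1, E2, E3. Again, the best feature is moved to the selected feature set.

Continue iterating as long as the error decreases…

Will this result in the optimal set of features?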
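The iterations above amount to a greedy forward search. The sketch below assumes a linear least-squares model with an intercept, NumPy, and a single held-out validation set supplying the errors E1, E2, …. Training error would not work as the stopping criterion, because with nested least-squares models it never increases when a feature is added. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def validation_mse(X_tr, y_tr, X_val, y_val, features):
    """Fit least squares (with intercept) on the chosen feature columns and
    return the mean square error on the validation set."""
    def with_intercept(X):
        return np.column_stack([np.ones(len(X))] + [X[:, f] for f in features])

    w, *_ = np.linalg.lstsq(with_intercept(X_tr), y_tr, rcond=None)
    return float(np.mean((y_val - with_intercept(X_val) @ w) ** 2))


def forward_selection(X_tr, y_tr, X_val, y_val):
    remaining = list(range(X_tr.shape[1]))
    selected = []
    best_error = validation_mse(X_tr, y_tr, X_val, y_val, selected)  # intercept only
    while remaining:
        # One model per candidate feature: the errors E1, E2, ... of this iteration
        errors = {f: validation_mse(X_tr, y_tr, X_val, y_val, selected + [f])
                  for f in remaining}
        f_best = min(errors, key=errors.get)
        if errors[f_best] >= best_error:
            break  # stop once the error no longer decreases
        selected.append(f_best)
        remaining.remove(f_best)
        best_error = errors[f_best]
    return selected, best_error


# Synthetic data: only features 0 and 2 influence y
rng = np.random.default_rng(3)
X = rng.normal(size=(120, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.1, size=120)
selected, err = forward_selection(X[:80], y[:80], X[80:], y[80:])
print(selected, round(err, 4))
```

Each step keeps only the locally best feature and never revisits earlier choices. The result is therefore not guaranteed to be the best subset overall.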


Support Vector Regression

• A linear model can explain the data

• Cost function includes a regularization term and slack variables, subject to constraints

• Kernel trick is used to learn non-linear models

Good resources:

https://in.mathworks.com/help/stats/understanding-support-vector-machine-regression.html
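For reference, the standard ε-insensitive SVR primal problem, as described on the MathWorks page above, can be written as follows. The symbols $C$ (penalty weight), $\varepsilon$ (half-width of the insensitive tube), $b$ (bias) and $\xi_i, \xi_i^*$ (slack variables) are not defined on the slide and are introduced here. The $\frac{1}{2}\lVert \mathbf{w} \rVert^2$ term is the regularization term, and the slack variables allow points to fall outside the $\varepsilon$-tube at a cost.

```latex
\begin{aligned}
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi},\, \boldsymbol{\xi}^*} \quad
  & \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{N} \left(\xi_i + \xi_i^*\right) \\
\text{subject to} \quad
  & y_i - \left(\mathbf{w}^T \mathbf{x}_i + b\right) \le \varepsilon + \xi_i, \\
  & \left(\mathbf{w}^T \mathbf{x}_i + b\right) - y_i \le \varepsilon + \xi_i^*, \\
  & \xi_i \ge 0, \quad \xi_i^* \ge 0, \qquad i = 1, \dots, N
\end{aligned}
```

In the dual form of this problem the data enter only through inner products $\mathbf{x}_i^T \mathbf{x}_j$. The kernel trick replaces these with a kernel $K(\mathbf{x}_i, \mathbf{x}_j)$, which is how the same linear formulation learns non-linear models.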


Ensemble Models

• Multiple weak learners (models) together result in a more accurate prediction

• Methods to build ensemble models

• Bootstrap aggregating (bagging)

• Boosting

• Combining models of different nature

Why do ensemble methods work?

Source: https://towardsdatascience.com/what-are-ensemble-methods-in-machine-learning-cac1d17ed349
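One standard argument for why ensembles work: if $K$ models make independent errors with equal variance $\sigma^2$, the averaged prediction has variance $\sigma^2 / K$. The simulation below illustrates this under that idealized independence assumption, with each hypothetical "weak learner" predicting the true value plus Gaussian noise. Only the standard library is used, and the names are hypothetical.

```python
import random
import statistics


def simulate_ensemble_variance(n_models, n_trials=20000, noise_std=1.0, seed=0):
    """Each weak learner predicts the true value plus independent Gaussian noise;
    the ensemble averages the n_models predictions. Returns the empirical
    variance of the ensemble prediction across trials."""
    rng = random.Random(seed)
    true_value = 5.0
    preds = []
    for _ in range(n_trials):
        votes = [true_value + rng.gauss(0.0, noise_std) for _ in range(n_models)]
        preds.append(sum(votes) / n_models)
    return statistics.pvariance(preds)


for k in (1, 5, 25):
    print(k, round(simulate_ensemble_variance(k), 4))
```

Models trained on the same data usually have correlated errors, which reduces this benefit. Training each learner on a different bootstrap sample is one way to decorrelate them.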

Rohit Batra, Materials Informatics Lab, MME, IIT Madras 11

Random Forest

• Ensemble of decision trees

• Bootstrapping for better accuracy

• Split strategy based on reduction in MSE
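A minimal sketch of two ingredients from this slide for a single numeric feature: choosing a split by the reduction in MSE, and drawing a bootstrap sample. The function names are hypothetical and only the standard library is used. A full random forest would grow many such trees recursively, each on its own bootstrap sample, and average their predictions.

```python
import random


def node_mse(values):
    """Mean square deviation of a node's targets from their mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def best_split(x, y):
    """Scan thresholds on one feature and return (threshold, reduction in MSE),
    where reduction = MSE(parent) - size-weighted MSE of the two children."""
    pairs = sorted(zip(x, y))
    n = len(pairs)
    parent = node_mse(y)
    best = (None, 0.0)
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # equal feature values cannot be separated by a threshold
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        child = (len(left) * node_mse(left) + len(right) * node_mse(right)) / n
        if parent - child > best[1]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (threshold, parent - child)
    return best


def bootstrap_sample(x, y, rng):
    """Draw len(x) samples with replacement from the training data."""
    idx = [rng.randrange(len(x)) for _ in range(len(x))]
    return [x[i] for i in idx], [y[i] for i in idx]


x = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
y = [1.0, 1.2, 0.8, 5.0, 5.1, 4.9]
print(best_split(x, y))
xb, yb = bootstrap_sample(x, y, random.Random(0))
print(best_split(xb, yb))
```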
