KNN_Rainfall

Uploaded by

sayyeda.stu2018

3/20/24, 3:32 PM KNN

K-Nearest Neighbors classification algorithm to predict rainfall level

Importing Necessary Libraries & Functions

In [1]: import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score,classification_report,confusion_matrix

Loading and preparing data

In [2]: D = pd.read_csv('C:/Users/Admin/Desktop/STAT 405/Mymensingh.csv')

D

Out[2]: ID Station Year Month TEM DPT WIS HUM SLP T_RAN A_RAIN RAN

0 1 Mymensingh 1960 1 16.9 11.3 2.0 73.39 1016.0 15 0.48 NRT

1 2 Mymensingh 1960 2 21.4 12.6 1.7 66.34 1013.0 0 0.00 NRT

2 3 Mymensingh 1960 3 24.1 14.9 2.3 64.13 1011.4 69 2.23 LTR

3 4 Mymensingh 1960 4 29.9 17.6 2.2 59.03 1007.1 27 0.90 NRT

4 5 Mymensingh 1960 5 29.6 23.2 2.4 73.45 1003.4 187 6.03 LTR

... ... ... ... ... ... ... ... ... ... ... ... ...

667 668 Mymensingh 2015 8 28.7 26.2 2.5 87.10 1003.3 349 11.26 MHR

668 669 Mymensingh 2015 9 28.8 25.2 2.0 85.63 1006.0 263 8.77 LTR

669 670 Mymensingh 2015 10 27.0 23.5 2.0 82.48 1011.3 180 5.81 LTR

670 671 Mymensingh 2015 11 23.1 18.7 1.7 81.73 1013.7 13 0.43 NRT

671 672 Mymensingh 2015 12 18.3 14.9 1.8 82.68 1015.9 5 0.16 NRT

672 rows × 12 columns

In [3]: D.dropna(how='any',axis=0,inplace=True)

D

localhost:8889/nbconvert/html/Desktop/STAT 405/KNN.ipynb?download=false 1/9

Out[3]: ID Station Year Month TEM DPT WIS HUM SLP T_RAN A_RAIN RAN

0 1 Mymensingh 1960 1 16.9 11.3 2.0 73.39 1016.0 15 0.48 NRT

1 2 Mymensingh 1960 2 21.4 12.6 1.7 66.34 1013.0 0 0.00 NRT

2 3 Mymensingh 1960 3 24.1 14.9 2.3 64.13 1011.4 69 2.23 LTR

3 4 Mymensingh 1960 4 29.9 17.6 2.2 59.03 1007.1 27 0.90 NRT

4 5 Mymensingh 1960 5 29.6 23.2 2.4 73.45 1003.4 187 6.03 LTR

... ... ... ... ... ... ... ... ... ... ... ... ...

667 668 Mymensingh 2015 8 28.7 26.2 2.5 87.10 1003.3 349 11.26 MHR

668 669 Mymensingh 2015 9 28.8 25.2 2.0 85.63 1006.0 263 8.77 LTR

669 670 Mymensingh 2015 10 27.0 23.5 2.0 82.48 1011.3 180 5.81 LTR

670 671 Mymensingh 2015 11 23.1 18.7 1.7 81.73 1013.7 13 0.43 NRT

671 672 Mymensingh 2015 12 18.3 14.9 1.8 82.68 1015.9 5 0.16 NRT

654 rows × 12 columns

In [4]: DD = D.drop(['ID','Station','Year','Month','T_RAN','A_RAIN'],axis=1)

DD

Out[4]: TEM DPT WIS HUM SLP RAN

0 16.9 11.3 2.0 73.39 1016.0 NRT

1 21.4 12.6 1.7 66.34 1013.0 NRT

2 24.1 14.9 2.3 64.13 1011.4 LTR

3 29.9 17.6 2.2 59.03 1007.1 NRT

4 29.6 23.2 2.4 73.45 1003.4 LTR

... ... ... ... ... ... ...

667 28.7 26.2 2.5 87.10 1003.3 MHR

668 28.8 25.2 2.0 85.63 1006.0 LTR

669 27.0 23.5 2.0 82.48 1011.3 LTR

670 23.1 18.7 1.7 81.73 1013.7 NRT

671 18.3 14.9 1.8 82.68 1015.9 NRT

654 rows × 6 columns

In [5]: X = DD.drop(['RAN'],axis=1)

X

Out[5]: TEM DPT WIS HUM SLP

0 16.9 11.3 2.0 73.39 1016.0

1 21.4 12.6 1.7 66.34 1013.0

2 24.1 14.9 2.3 64.13 1011.4

3 29.9 17.6 2.2 59.03 1007.1

4 29.6 23.2 2.4 73.45 1003.4

... ... ... ... ... ...

667 28.7 26.2 2.5 87.10 1003.3

668 28.8 25.2 2.0 85.63 1006.0

669 27.0 23.5 2.0 82.48 1011.3

670 23.1 18.7 1.7 81.73 1013.7

671 18.3 14.9 1.8 82.68 1015.9

654 rows × 5 columns
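The five predictors above sit on quite different scales (SLP is near 1000 while WIS is near 2), and the notebook later compares rows by Euclidean distance, where large-valued columns tend to dominate. The notebook itself does not rescale the features; the following is only a minimal sketch of z-score standardization, using the first five rows shown above and a hypothetical helper named `standardize`. In practice the means and standard deviations would normally be estimated on the training rows only.

```python
import statistics

# First five rows of the feature table above (TEM, DPT, WIS, HUM, SLP).
rows = [
    [16.9, 11.3, 2.0, 73.39, 1016.0],
    [21.4, 12.6, 1.7, 66.34, 1013.0],
    [24.1, 14.9, 2.3, 64.13, 1011.4],
    [29.9, 17.6, 2.2, 59.03, 1007.1],
    [29.6, 23.2, 2.4, 73.45, 1003.4],
]


def standardize(table):
    """Return a copy of `table` with every column rescaled to mean 0, std 1."""
    columns = list(zip(*table))
    means = [statistics.mean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]  # population std
    return [
        [(value - m) / s for value, m, s in zip(row, means, stds)]
        for row in table
    ]


scaled = standardize(rows)
for row in scaled:
    print([round(v, 2) for v in row])
```

After this step every column contributes to the distance on a comparable footing; whether that improves accuracy on this dataset is not something the notebook tests.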

In [6]: Y = DD['RAN']

Y

Out[6]: 0 NRT

1 NRT

2 LTR

3 NRT

4 LTR

...

667 MHR

668 LTR

669 LTR

670 NRT

671 NRT

Name: RAN, Length: 654, dtype: object

Splitting data into training and test sets (75% and 25%)

In [7]: X_train,X_test,Y_train,Y_test = train_test_split(X,Y,test_size=0.25,random_state=124)

X_train

Out[7]: TEM DPT WIS HUM SLP

434 25.8 19.4 3.3 72.55 1008.0

260 29.2 24.3 3.7 80.63 1006.5

299 20.1 14.0 2.7 72.74 1013.4

159 29.5 21.0 2.0 70.10 997.5

544 26.5 23.2 4.1 82.29 1005.2

... ... ... ... ... ...

550 22.7 18.9 1.8 83.83 1012.7

118 23.9 17.2 1.0 73.03 1014.1

144 19.8 12.7 1.2 67.87 1015.6

17 27.6 24.2 2.0 87.27 1001.7

480 17.4 13.3 2.3 80.35 1014.1

490 rows × 5 columns

In [8]: Y_train

Out[8]: 434 NRT

260 LTR

299 NRT

159 LTR

544 MHR

...

550 NRT

118 NRT

144 NRT

17 MHR

480 NRT

Name: RAN, Length: 490, dtype: object

In [9]: X_test

Out[9]: TEM DPT WIS HUM SLP

446 24.9 17.9 3.8 70.45 1010.8

401 27.5 23.9 5.2 85.67 1001.7

244 27.8 23.1 6.5 76.77 1005.3

502 23.7 20.0 1.7 83.90 1013.5

524 28.3 25.3 2.5 87.77 1005.5

... ... ... ... ... ...

438 28.5 25.9 3.7 86.68 1000.8

129 26.9 23.4 0.8 83.19 1009.0

193 20.5 15.4 0.1 80.82 995.2

68 28.3 24.6 1.4 85.40 1006.4

219 26.3 19.5 4.1 72.77 1008.2

164 rows × 5 columns

In [10]: Y_test

Out[10]: 446 LTR

401 MHR

244 MHR

502 NRT

524 LTR

...

438 LTR

129 LTR

193 NRT

68 LTR

219 LTR

Name: RAN, Length: 164, dtype: object
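The 490 / 164 split reported above is not an exact 75% / 25% division of 654 rows, because 25% of 654 is 163.5. A short arithmetic sketch, assuming the test count is rounded up and the remainder goes to training (this matches the sizes shown, but the rounding rule is stated here as an assumption rather than read from the notebook):

```python
import math

n_samples = 654   # rows left after dropna (Out[3])
test_size = 0.25  # fraction passed to train_test_split

# Assumption: the test count is rounded up, the rest is used for training.
n_test = math.ceil(test_size * n_samples)
n_train = n_samples - n_test

print(n_train, n_test)  # 490 164
```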

Fitting the KNN algorithm to the training data

In [11]: KNN = KNeighborsClassifier(n_neighbors=8,metric='euclidean')

KNN.fit(X_train,Y_train)

P = KNN.predict(X_test)

P

Out[11]: array(['LTR', 'MHR', 'LTR', 'NRT', 'MHR', 'MHR', 'LTR', 'MHR', 'NRT',

'NRT', 'LTR', 'MHR', 'NRT', 'MHR', 'NRT', 'NRT', 'MHR', 'NRT',

'MHR', 'LTR', 'NRT', 'MHR', 'NRT', 'MHR', 'NRT', 'MHR', 'LTR',

'NRT', 'LTR', 'NRT', 'LTR', 'MHR', 'LTR', 'NRT', 'LTR', 'LTR',

'MHR', 'NRT', 'LTR', 'NRT', 'LTR', 'NRT', 'LTR', 'MHR', 'NRT',

'LTR', 'LTR', 'LTR', 'NRT', 'LTR', 'MHR', 'MHR', 'MHR', 'NRT',

'LTR', 'NRT', 'LTR', 'NRT', 'NRT', 'MHR', 'LTR', 'LTR', 'NRT',

'MHR', 'NRT', 'LTR', 'NRT', 'MHR', 'LTR', 'LTR', 'NRT', 'MHR',

'LTR', 'LTR', 'NRT', 'MHR', 'MHR', 'LTR', 'LTR', 'LTR', 'NRT',

'LTR', 'MHR', 'LTR', 'MHR', 'NRT', 'LTR', 'NRT', 'LTR', 'LTR',

'LTR', 'LTR', 'LTR', 'NRT', 'LTR', 'LTR', 'NRT', 'NRT', 'LTR',

'NRT', 'LTR', 'NRT', 'LTR', 'MHR', 'LTR', 'NRT', 'LTR', 'NRT',

'NRT', 'LTR', 'LTR', 'LTR', 'NRT', 'LTR', 'NRT', 'LTR', 'LTR',

'LTR', 'MHR', 'NRT', 'MHR', 'MHR', 'LTR', 'MHR', 'NRT', 'LTR',

'MHR', 'NRT', 'LTR', 'NRT', 'NRT', 'LTR', 'NRT', 'NRT', 'NRT',

'LTR', 'NRT', 'MHR', 'NRT', 'MHR', 'LTR', 'NRT', 'LTR', 'LTR',

'NRT', 'NRT', 'MHR', 'LTR', 'LTR', 'NRT', 'LTR', 'LTR', 'NRT',

'MHR', 'NRT', 'LTR', 'LTR', 'LTR', 'LTR', 'MHR', 'LTR', 'MHR',

'LTR', 'LTR'], dtype=object)
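To make the prediction step less of a black box, here is a minimal from-scratch sketch of what a K-nearest-neighbors classifier with Euclidean distance does: measure the distance from a query point to every training point, keep the k closest, and take a majority vote. The function name `knn_predict` and the 2-D toy points are hypothetical and are not taken from the Mymensingh data; tie handling is simplified and may differ from scikit-learn's.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k):
    """Predict the label of `query` by majority vote among its k nearest points."""
    # Euclidean distance from the query to every training point.
    distances = [math.dist(point, query) for point in train_points]
    # Indices of the k smallest distances.
    nearest = sorted(range(len(train_points)), key=distances.__getitem__)[:k]
    votes = Counter(train_labels[i] for i in nearest)
    # On a tie, the label met first among the neighbours wins (a simplification).
    return votes.most_common(1)[0][0]


# Hypothetical toy data: three well-separated clusters, one per rainfall class.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1),
          (5.0, 5.0), (5.2, 4.9), (4.8, 5.3),
          (9.0, 9.0), (9.1, 8.8), (8.9, 9.2)]
labels = ["NRT", "NRT", "NRT", "LTR", "LTR", "LTR", "MHR", "MHR", "MHR"]

prediction = knn_predict(points, labels, (5.1, 5.0), k=3)
print(prediction)  # LTR
```

With an even k such as 8 and three classes, tied votes are possible, so the tie-breaking rule can affect individual predictions.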

Performance Metrics

In [14]: accuracy_score(Y_test,P)

Out[14]: 0.7073170731707317

In [15]: print(confusion_matrix(Y_test,P))

[[47 11 11]

[15 24 0]

[ 9 2 45]]

In [16]: print(classification_report(Y_test,P))

precision recall f1-score support

LTR 0.66 0.68 0.67 69

MHR 0.65 0.62 0.63 39

NRT 0.80 0.80 0.80 56

accuracy 0.71 164

macro avg 0.70 0.70 0.70 164

weighted avg 0.71 0.71 0.71 164
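The figures in the classification report can be recomputed by hand from the confusion matrix, which is a useful check on how to read it. The sketch below assumes that rows are true classes, columns are predicted classes, and the class order is LTR, MHR, NRT; that reading is consistent with the row sums (69, 39, 56) matching the support column of the report.

```python
# Confusion matrix printed above; rows = true classes, columns = predictions.
# Assumption: classes are ordered alphabetically as LTR, MHR, NRT.
labels = ["LTR", "MHR", "NRT"]
cm = [[47, 11, 11],
      [15, 24, 0],
      [9, 2, 45]]

total = sum(sum(row) for row in cm)
accuracy = sum(cm[i][i] for i in range(3)) / total  # correct / all

metrics = {}
for i, name in enumerate(labels):
    true_pos = cm[i][i]
    predicted = sum(cm[r][i] for r in range(3))  # column sum
    actual = sum(cm[i])                          # row sum (support)
    precision = true_pos / predicted
    recall = true_pos / actual
    f1 = 2 * precision * recall / (precision + recall)
    metrics[name] = (round(precision, 2), round(recall, 2), round(f1, 2), actual)

print(round(accuracy, 4))  # 0.7073
for name, values in metrics.items():
    print(name, values)
```

Read this way, the matrix shows that MHR is never confused with NRT in one direction (the zero in the second row), while LTR is mistaken for both neighbouring classes about equally often.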

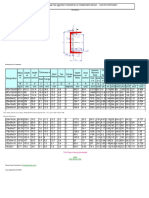

Finding the optimal value of K

In [17]: As = []

kk = []

for i in range(2,40):

    knn = KNeighborsClassifier(n_neighbors=i,metric='euclidean')

    knn.fit(X_train,Y_train)

    pred = knn.predict(X_test)

    As.append(accuracy_score(Y_test,pred))

    kk.append(i)
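The loop above scores every K on the test set, so the accuracy of the chosen K is likely to be somewhat optimistic. One common alternative, shown here only as a hedged sketch and not as the notebook's method, is to pick K by cross-validation on the training part and touch the test set once at the end. The data below is synthetic (generated with `make_classification`), and the names `X_demo`, `y_demo`, `cv_scores`, and `final_model` are hypothetical stand-ins.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the rainfall data (hypothetical, three classes).
X_demo, y_demo = make_classification(
    n_samples=300, n_features=5, n_informative=3, n_redundant=0,
    n_classes=3, random_state=0,
)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=0
)

# Score each candidate K with 5-fold cross-validation on the training part only.
cv_scores = {}
for k in range(2, 40):
    model = KNeighborsClassifier(n_neighbors=k, metric="euclidean")
    cv_scores[k] = cross_val_score(model, X_tr, y_tr, cv=5).mean()

best_k = max(cv_scores, key=cv_scores.get)

# The test set is used once, after K has been fixed.
final_model = KNeighborsClassifier(n_neighbors=best_k, metric="euclidean")
final_model.fit(X_tr, y_tr)
test_accuracy = final_model.score(X_te, y_te)
print(best_k, round(test_accuracy, 3))
```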

In [18]: As

Out[18]: [0.676829268292683,

0.725609756097561,

0.7134146341463414,

0.7195121951219512,

0.7012195121951219,

0.7195121951219512,

0.7073170731707317,

0.7012195121951219,

0.7012195121951219,

0.7073170731707317,

0.6951219512195121,

0.7073170731707317,

0.7012195121951219,

0.7073170731707317,

0.7012195121951219,

0.7012195121951219,

0.6890243902439024,

0.6951219512195121,

0.6890243902439024,

0.6951219512195121,

0.6951219512195121,

0.7073170731707317,

0.6890243902439024,

0.6890243902439024,

0.676829268292683,

0.6951219512195121,

0.6890243902439024,

0.6951219512195121,

0.7073170731707317,

0.7073170731707317,

0.6890243902439024,

0.6951219512195121,

0.6890243902439024,

0.6829268292682927,

0.6829268292682927,

0.6890243902439024,

0.6951219512195121,

0.7073170731707317]

In [19]: kk

Out[19]: [2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19,

20,

21,

22,

23,

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35,

36,

37,

38,

39]
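Rather than reading the best K off the two printed lists by eye, it can be picked programmatically. The sketch below copies the accuracies from Out[18], rounded to four decimals, and pairs them with K = 2 to 39; the variable names are hypothetical. Note that `list.index` returns the first match, so ties would resolve to the smallest K.

```python
# Accuracies from Out[18], rounded to four decimals, for K = 2 .. 39.
accuracies = [
    0.6768, 0.7256, 0.7134, 0.7195, 0.7012, 0.7195, 0.7073, 0.7012,
    0.7012, 0.7073, 0.6951, 0.7073, 0.7012, 0.7073, 0.7012, 0.7012,
    0.6890, 0.6951, 0.6890, 0.6951, 0.6951, 0.7073, 0.6890, 0.6890,
    0.6768, 0.6951, 0.6890, 0.6951, 0.7073, 0.7073, 0.6890, 0.6951,
    0.6890, 0.6829, 0.6829, 0.6890, 0.6951, 0.7073,
]
k_values = list(range(2, 40))

best_accuracy = max(accuracies)
best_k = k_values[accuracies.index(best_accuracy)]  # first K reaching the maximum

print(best_k, best_accuracy)  # 3 0.7256
```

The result, K = 3, corresponds to the vertical line drawn at x=3 in the plot that follows.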

In [22]: plt.figure(figsize=(7,4))

plt.plot(kk,As,color='red',marker='o')

plt.axvline(x=3)

Out[22]: <matplotlib.lines.Line2D at 0x14c171e0610>
