Professional Documents

Culture Documents

SSRN-id4089053

SSRN-id4089053

Uploaded by

yashyashwanthpvreddy444Copyright:

Available Formats

You might also like

- KST ServoGun Basic 31 enDocument214 pagesKST ServoGun Basic 31 enRáfaga CoimbraNo ratings yet

- Dot Net 4.0 Programming Black BookDocument1 pageDot Net 4.0 Programming Black BookDreamtech Press100% (2)

- A Swin Transformer-Based Model For Mosquito Species IdentificationDocument13 pagesA Swin Transformer-Based Model For Mosquito Species Identificationshruti adhauNo ratings yet

- Recognition Method of Soybean Leaf DiseasesDocument17 pagesRecognition Method of Soybean Leaf DiseasesabduNo ratings yet

- Plant Disease in SugarncaneDocument20 pagesPlant Disease in SugarncaneMalik HashmatNo ratings yet

- Agriculture 12 00742 v3Document17 pagesAgriculture 12 00742 v3Sofi KetemaNo ratings yet

- Detection of Malaria Disease Using Image Processing and Machine LearningDocument10 pagesDetection of Malaria Disease Using Image Processing and Machine LearningMiftahul RakaNo ratings yet

- Agronomy 12 00365 v2Document14 pagesAgronomy 12 00365 v2Sofia GomezNo ratings yet

- Anitha - Saranya - 2022 - Cassava Leaf Disease Identification and Detection Using Deep Learning ApproachDocument7 pagesAnitha - Saranya - 2022 - Cassava Leaf Disease Identification and Detection Using Deep Learning Approachbigliang98No ratings yet

- Malaria Detection in Blood Smeared Images Using Convolutional Neural NetworksDocument8 pagesMalaria Detection in Blood Smeared Images Using Convolutional Neural NetworksIJRASETPublicationsNo ratings yet

- Enhanced Sugarcane Disease Diagnosis Through Transfer Learning & Deep Convolutional NetworksDocument10 pagesEnhanced Sugarcane Disease Diagnosis Through Transfer Learning & Deep Convolutional NetworksInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- A Cucumber Leaf Disease Severity Grading Method in Natural Environment Based on the Fusion of TRNet and U-NetDocument19 pagesA Cucumber Leaf Disease Severity Grading Method in Natural Environment Based on the Fusion of TRNet and U-Nettovabi4927No ratings yet

- Detection and Prediction of Monkey Pox Disease by Enhanced Convolutional Neural Network ApproachDocument9 pagesDetection and Prediction of Monkey Pox Disease by Enhanced Convolutional Neural Network ApproachIJPHSNo ratings yet

- Junde Chen Et Al - 2021 - Identification of Rice Plant Diseases Using Lightweight Attention NetworksDocument13 pagesJunde Chen Et Al - 2021 - Identification of Rice Plant Diseases Using Lightweight Attention Networksbigliang98No ratings yet

- Rapid and Low Cost Insect Detection For Analysing Species Trapped On Yellow Sticky TrapsDocument13 pagesRapid and Low Cost Insect Detection For Analysing Species Trapped On Yellow Sticky TrapsAbdsamad ElaasriNo ratings yet

- Coronavirus COVID 19 Detection From Chest Radiology Images UsingDocument6 pagesCoronavirus COVID 19 Detection From Chest Radiology Images UsingHabiba AhmedNo ratings yet

- Detection of Malarial Parasites Using Deep LearningDocument6 pagesDetection of Malarial Parasites Using Deep LearningInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Deep LearningDocument10 pagesDeep LearningAMNo ratings yet

- CNN-process-1 (Trivedi2020)Document10 pagesCNN-process-1 (Trivedi2020)Eng Tatt YaapNo ratings yet

- Sensors 22 08497Document10 pagesSensors 22 08497skgs1970No ratings yet

- Seasonal Crops Disease Prediction and Classification Using Deep Convolutional Encoder NetworkDocument19 pagesSeasonal Crops Disease Prediction and Classification Using Deep Convolutional Encoder NetworkmulhamNo ratings yet

- Patient-Level Performance Evaluation of A Smartphone-Based Malaria Diagnostic ApplicationDocument10 pagesPatient-Level Performance Evaluation of A Smartphone-Based Malaria Diagnostic Applicationdouglas.consolaro2645No ratings yet

- Image Classification Using Deep Neural Networks For Malaria Disease Detection LAXMIDocument5 pagesImage Classification Using Deep Neural Networks For Malaria Disease Detection LAXMIramesh koppadNo ratings yet

- Mobile Convolution Neural Network For The Recognition of Potato Leaf Disease ImagesDocument20 pagesMobile Convolution Neural Network For The Recognition of Potato Leaf Disease ImagesMarriam NawazNo ratings yet

- Plant Disease Detection System For Smart Agriculture, Thilagavathy Et. AlDocument6 pagesPlant Disease Detection System For Smart Agriculture, Thilagavathy Et. AlSasi KalaNo ratings yet

- reference paperDocument6 pagesreference paperbhanuNo ratings yet

- Corn Leaf Disease Classification and Detection Using Deep Convolutional Neural NetworkDocument26 pagesCorn Leaf Disease Classification and Detection Using Deep Convolutional Neural NetworkSaba TahseenNo ratings yet

- 1 s2.0 S0168169923000960 MainDocument12 pages1 s2.0 S0168169923000960 Main1No ratings yet

- Comparative Analysis Diff Malaria ParasiteDocument7 pagesComparative Analysis Diff Malaria ParasiteMichaelNo ratings yet

- 10 1109@10 923789 PDFDocument13 pages10 1109@10 923789 PDFAyu Oka WiastiniNo ratings yet

- Malaria Parasite Detection Using Deep LearningDocument8 pagesMalaria Parasite Detection Using Deep LearningIJRASETPublicationsNo ratings yet

- Frai 05 733345Document11 pagesFrai 05 733345equalizertechmasterNo ratings yet

- Vision-Based Perception and Classification of Mosquitoes Using Support Vector MachineDocument12 pagesVision-Based Perception and Classification of Mosquitoes Using Support Vector Machineshruti adhauNo ratings yet

- Classification and Morphological Analysis of Vector Mosquitoes Using Deep Convolutional Neural NetworksDocument13 pagesClassification and Morphological Analysis of Vector Mosquitoes Using Deep Convolutional Neural NetworkshetiNo ratings yet

- Malaria Detection Using Deep LearningDocument5 pagesMalaria Detection Using Deep LearningDeepak SubramaniamNo ratings yet

- IJRPR14146Document6 pagesIJRPR14146Gumar YuNo ratings yet

- AnoMalNet: Outlier Detection Based Malaria Cell Image Classification Method Leveraging Deep AutoencoderDocument8 pagesAnoMalNet: Outlier Detection Based Malaria Cell Image Classification Method Leveraging Deep AutoencoderIJRES teamNo ratings yet

- 1 Gopakumar2017 PDFDocument17 pages1 Gopakumar2017 PDFMd. Mohidul Hasan SifatNo ratings yet

- Research PaperDocument11 pagesResearch Paperمحمد حمزہNo ratings yet

- Field Pest Monitoring & Pest SurveillanceDocument17 pagesField Pest Monitoring & Pest SurveillanceradheNo ratings yet

- Evaluation of The Parasight Platform For Malaria DiagnosisDocument8 pagesEvaluation of The Parasight Platform For Malaria Diagnosisdawam986142No ratings yet

- Plant Disease Detection Using Deep LearningDocument7 pagesPlant Disease Detection Using Deep LearningIJRASETPublicationsNo ratings yet

- Journal of Clinical Microbiology-2020-Dobaño-JCM.01731-20.fullDocument43 pagesJournal of Clinical Microbiology-2020-Dobaño-JCM.01731-20.fullJorge NúñezNo ratings yet

- 1 s2.0 S0026265X22008487 MainDocument9 pages1 s2.0 S0026265X22008487 MainKamel TawficNo ratings yet

- Early Detection of Tomato Leaf Diseases Based On Deep Learning TechniquesDocument7 pagesEarly Detection of Tomato Leaf Diseases Based On Deep Learning TechniquesIAES IJAINo ratings yet

- 35429-Article Text-126139-2-10-20180206Document5 pages35429-Article Text-126139-2-10-20180206Robertus RonnyNo ratings yet

- Automated SystemsDocument11 pagesAutomated SystemsAquaNo ratings yet

- Escorcia-Gutierrez Et Al. - 2023Document16 pagesEscorcia-Gutierrez Et Al. - 2023apatzNo ratings yet

- Mango Leaf Diseases Identification Using Convolutional Neural NetworkDocument14 pagesMango Leaf Diseases Identification Using Convolutional Neural NetworkShradha Verma0% (1)

- Journal Pone 0284330Document20 pagesJournal Pone 0284330Wanchalerm PoraNo ratings yet

- Ijst 2024 536Document12 pagesIjst 2024 536samuel mulatuNo ratings yet

- A Hybrid Deep Learning Architecture For Apple FoliDocument14 pagesA Hybrid Deep Learning Architecture For Apple FolifirekartonNo ratings yet

- Agronomy 12 02395 v2Document19 pagesAgronomy 12 02395 v2Coffee pasteNo ratings yet

- Article 4 Do Not UseDocument6 pagesArticle 4 Do Not Usesafae afNo ratings yet

- (IJCST-V11I3P2) :K.Vivek, P.Kashi Naga Jyothi, G.Venkatakiran, SK - ShaheedDocument4 pages(IJCST-V11I3P2) :K.Vivek, P.Kashi Naga Jyothi, G.Venkatakiran, SK - ShaheedEighthSenseGroupNo ratings yet

- A Clinico Investigative and Mycopathological Profile of Fungi Causing Subcutaneous Infections in A Tertiary Care HospitalDocument8 pagesA Clinico Investigative and Mycopathological Profile of Fungi Causing Subcutaneous Infections in A Tertiary Care HospitalIJAR JOURNALNo ratings yet

- 012121Document24 pages012121Claidelyn AdazaNo ratings yet

- Zhenbo Li Et Al - 2020 - A Solanaceae Disease Recognition Model Based On SE-InceptionDocument11 pagesZhenbo Li Et Al - 2020 - A Solanaceae Disease Recognition Model Based On SE-Inceptionbigliang98No ratings yet

- Identification of Tomato Plant Diseases From Images Using The Deep-Learning ApproachDocument6 pagesIdentification of Tomato Plant Diseases From Images Using The Deep-Learning ApproachNee andNo ratings yet

- 123 pp1Document17 pages123 pp1yashyashwanthpvreddy444No ratings yet

- The Plant Phenome Journal - 2023 - Kar-1Document20 pagesThe Plant Phenome Journal - 2023 - Kar-1SASASANo ratings yet

- Hsnops SeaTelAntennas 031019 2325 418Document4 pagesHsnops SeaTelAntennas 031019 2325 418Alex BombayNo ratings yet

- Astm C796Document6 pagesAstm C796Abel ClarosNo ratings yet

- App 34Document4 pagesApp 34kagisokhoza000No ratings yet

- Project Report On Tpms DeviceDocument12 pagesProject Report On Tpms DeviceVikas KumarNo ratings yet

- DehumidificationDocument31 pagesDehumidificationmohammed hussienNo ratings yet

- 304 Chromic Acid AnodizingDocument6 pages304 Chromic Acid AnodizingPuguh Cahpordjo BaeNo ratings yet

- Chem7a BSN-1-J Module2 Group5 DapulaseDocument4 pagesChem7a BSN-1-J Module2 Group5 DapulaseKiana JezalynNo ratings yet

- Jazz Piano Skills (Music 15) : TH TH THDocument2 pagesJazz Piano Skills (Music 15) : TH TH THaliscribd4650% (2)

- AMC 10 Book Vol 1 SCDocument169 pagesAMC 10 Book Vol 1 SCAnand AjithNo ratings yet

- SDL 700 DataDocument1 pageSDL 700 DataPetinggi TElektroNo ratings yet

- Electronic Schematics Audio Devices1 PDFDocument250 pagesElectronic Schematics Audio Devices1 PDFElneto CarriNo ratings yet

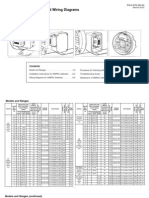

- Installation Instructions and Wiring Diagrams For All Models and RangesDocument8 pagesInstallation Instructions and Wiring Diagrams For All Models and RangesMaria MusyNo ratings yet

- Motp Junior Algebra and Logic Puzzle Problem Set 8Document3 pagesMotp Junior Algebra and Logic Puzzle Problem Set 8K. M. Junayed AhmedNo ratings yet

- Properties of WavesDocument56 pagesProperties of WavesDessy Norma JuitaNo ratings yet

- MTCR Handbook Item9Document20 pagesMTCR Handbook Item9makenodimaNo ratings yet

- 1.08 Example: 1 Exploring DataDocument2 pages1.08 Example: 1 Exploring DataAisha ChohanNo ratings yet

- Asmiv T I Dwgs For 3keDocument14 pagesAsmiv T I Dwgs For 3kePSI Guillermo Francisco RuizNo ratings yet

- Matrix Algebra From A Statistician's Perspective: Printed BookDocument1 pageMatrix Algebra From A Statistician's Perspective: Printed BookbrendaNo ratings yet

- NEW IT Acknowledgement Form - Workstation - User Acceptance Form V2.0 - LOKDocument1 pageNEW IT Acknowledgement Form - Workstation - User Acceptance Form V2.0 - LOKArisha Rania EnterpriseNo ratings yet

- Motor Data For 32038Document1 pageMotor Data For 32038zo-kaNo ratings yet

- UMTS IRAT OptimizationGuidelineDocument52 pagesUMTS IRAT OptimizationGuidelineMohamed Abdel MonemNo ratings yet

- Technical Habilis 6Document6 pagesTechnical Habilis 6EugeneNo ratings yet

- Brocade Hitachi Data Systems QRGDocument4 pagesBrocade Hitachi Data Systems QRGUday KrishnaNo ratings yet

- Circle CPPDocument7 pagesCircle CPPAnugrah AgrawalNo ratings yet

- Infra-Red CAR-KEY Transmitter: OM1058 in Case SO-8Document4 pagesInfra-Red CAR-KEY Transmitter: OM1058 in Case SO-8José SilvaNo ratings yet

- LTRT-83309 Mediant 600 and Mediant 1000 SIP User's Manual v6.4Document824 pagesLTRT-83309 Mediant 600 and Mediant 1000 SIP User's Manual v6.4kaz7878No ratings yet

- Retaining Wall U Wall Type Estimate With Box PushingDocument8 pagesRetaining Wall U Wall Type Estimate With Box PushingApurva ParikhNo ratings yet

- Sample Preparation For EBSDDocument6 pagesSample Preparation For EBSDTNNo ratings yet

SSRN-id4089053

SSRN-id4089053

Uploaded by

yashyashwanthpvreddy444Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

SSRN-id4089053

SSRN-id4089053

Uploaded by

yashyashwanthpvreddy444Copyright:

Available Formats

Research on silkworm disease detection in real conditions based on CA-YOLO v3

Hongkang Shi (a), Dingyi Tian (a), Shiping Zhu (a, *), Linbo Li (b), Jianmei Wu (b)

(a) College of Engineering and Technology, Southwest University, Chongqing 400700, China

(b) Sericultural Research Institute, Sichuan Academy of Agricultural Sciences, Sichuan 637000, China

* Corresponding author. zspswu@126.com

Abstract: The silkworm is an important economic insect, but it often suffers from diseases during rearing, which causes large cocoon losses in China every year. Accurate recognition of diseased silkworms helps prevent the transmission of pathogens and reduce cocoon losses. However, current deep-learning methods for recognizing silkworm diseases are mainly based on image classification, which differs considerably from real rearing environments. Detecting silkworm diseases under high-density rearing conditions therefore remains an unsolved problem. In this paper, nuclear polyhedrosis virus (NPV) disease, one of the most frequent and most infectious silkworm diseases, was selected as the detection target, and the problem was approached with an object detection method. Healthy and diseased silkworms were obtained under actual conditions by rearing silkworms and infecting them with the pathogen. Images containing healthy and diseased silkworms at the same time were collected with a mobile phone and labeled with the LabelImg toolkit. The CA-YOLO v3 network was proposed, based on the structure of YOLO v3 and combining the ConvNeXt module with an image attention mechanism to extract key features. CA-YOLO v3 was trained and evaluated on the silkworm disease dataset. For healthy and diseased silkworms respectively, the recall was 82.35% and 93.92%, the precision was 95.32% and 87.90%, the F1-score was 0.88 and 0.91, and the AP was 94.81% and 95.19%; the mean Average Precision (mAP) was 95.0%. This performance was better than that of the original YOLO v3 network. A GUI for silkworm disease detection was developed with PyQt 5, and the trained CA-YOLO v3 model was embedded into it, enabling detection on real-time video, local images, and local videos. The results indicate that CA-YOLO v3 can detect silkworm diseases efficiently and accurately. This study can provide a theoretical reference for the development of disease early warning, and technical support for the development of precise control equipment.

Electronic copy available at: https://ssrn.com/abstract=4089053

Keywords: silkworm diseases; object detection; CA-YOLO v3; deep learning
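As a quick arithmetic check on the metrics reported in the abstract, the sketch below recomputes each class's F1-score as the harmonic mean of its precision and recall, and the mAP as the unweighted mean of the two per-class APs. Treating mAP as a plain two-class average is our assumption about how the figure was obtained; the only data used are the numbers stated in the abstract.

```python
# Consistency check on the metrics reported in the abstract.
# F1 = 2 * P * R / (P + R); mAP is taken here as the unweighted mean of the
# per-class APs (an assumption about how the paper averages).

def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Per-class values copied from the abstract, converted from percentages.
reported = {
    "healthy": {"precision": 0.9532, "recall": 0.8235, "ap": 0.9481},
    "diseased": {"precision": 0.8790, "recall": 0.9392, "ap": 0.9519},
}

f1 = {name: f1_score(m["precision"], m["recall"]) for name, m in reported.items()}
mean_ap = sum(m["ap"] for m in reported.values()) / len(reported)

for name, value in f1.items():
    print(f"{name}: F1 = {value:.2f}")
print(f"mAP = {mean_ap:.1%}")
```

Run as-is, it prints F1-scores of 0.88 and 0.91 and an mAP of 95.0%, matching the reported values.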

1. Introduction

The silkworm is an important economic insect, reared mainly for the production of natural silk. In China, silkworm rearing has a history of more than 5,000 years and has always been an important part of agriculture and animal husbandry. However, mulberry leaves are picked in the open air and silkworms are reared in open indoor environments, which makes them very susceptible to attack by pathogens. In addition, silkworms have a short life cycle and are reared at high density, and the pathogens are usually highly contagious. Infected silkworms are therefore difficult to cure with medicines, and they usually die outright or fail to form cocoons because of serious physiological damage. Statistics show that diseases cause an average loss of more than 20% of total cocoon output each year in China; some areas with severe outbreaks have lost more than 50%, and some have had no harvest at all, causing huge economic losses (Jiang et al. 2014; Xu et al. 2019).

Because silkworm diseases are harmful and incurable, timely recognition of diseased silkworms helps cut off pathogen transmission, enables precise control, and reduces cocoon losses. However, silkworms are reared at high density, individual differences are subtle, and diseased silkworms look very similar to healthy ones, which makes them difficult to tell apart. The traditional method relies mainly on manual identification, which is inefficient, unreliable, and dependent on long-term professional experience, and therefore cannot meet the needs of the developing sericulture industry.

With the development of computer science in recent years, deep learning has been widely used in agriculture and animal husbandry (Kamilaris et al. 2018), and there are many application cases. Karlekar and Seal (2020) proposed a classification method for soybean leaf diseases based on a convolutional neural network (CNN), achieving a recognition accuracy of 98.14% over 16 disease categories. Wang et al. (2020) designed CPAFNet, a network with a structure similar to Inception (Szegedy et al. 2014), for pest image recognition; its accuracy reached 92.26% on a dataset of 73,635 images, better than several state-of-the-art networks. Altuntaş et al. (2019) adopted CNNs and transfer learning to distinguish haploid from diploid maize seeds, and the experimental results showed that CNN models could be a useful tool for recognizing haploid maize seeds. Shi et al. (2020) used MobileNet v1 (Howard et al. 2017) for image recognition of 10 silkworm varieties, reporting accuracies of 98.9% and 96%. Inspired by this trend, and by the fact that silkworms show different features after being infected, researchers have applied deep learning and machine vision to the recognition of silkworm diseases. Xia et al. (2019) proposed a classification algorithm for silkworm diseases based on an image attention mechanism (Borji and Itti, 2013) and the DenseNet module (Huang et al. 2016), recognizing images of healthy silkworms and five categories of diseased silkworms; the recognition accuracy reached 87.8% on a dataset of 7978 images. Ding and Chen (2019) proposed a method based on the Mergencelayer-Slicelayer-Pairingloss and the AlexNet (Krizhevsky et al. 2017) architecture to classify images of silkworm diseases, achieving an accuracy of 84.5% on the same dataset as Xia et al. (2019). In our previous study (Shi et al. 2021), we used rearing and artificial infection to obtain healthy silkworms and silkworms in five disease categories at the same growth stage: nuclear polyhedrosis virus (NPV), Nosema bombycis, Beauveria bassiana, bacterial disease, and pesticide poisoning. Images were collected with a mobile phone to construct a disease dataset under actual conditions, and an improved ResNet (He et al. 2016) algorithm was proposed to recognize images of healthy and diseased silkworms, with an average accuracy of 94.37%. These studies indicate that deep learning can accurately recognize silkworm diseases through image recognition. However, all of them used image classification, in which each image in the dataset contains only one silkworm. This is inconsistent with the high-density rearing of silkworms in practice. Moreover, when pathogens breed and spread, healthy and diseased silkworms are present in the rearing trays at the same time, so a classification model is poorly suited to recognizing diseases in actual rearing environments.

Object detection can overcome this shortcoming of classification networks, because it recognizes the category and locates the position of each object simultaneously. Representative object detection algorithms include SSD (Wei et al. 2016), Faster R-CNN (Ren et al. 2017), and YOLO v3 (Redmon and Farhadi 2018). In agriculture, object detection has become a research hotspot and a mainstream approach (Chen et al. 2021). Riekert et al. (2021) proposed a method based on Faster R-CNN and NASNet (Zoph and Li 2017) for 24/7 detection of pig position and posture, which achieved a mean average precision (mAP) of 84.5% on daytime recordings and 58% on night-time recordings. Liu and Wang (2022) proposed a two-stage method for pig face recognition based on EfficientDet-d0 (Tan et al. 2019) and DenseNet 121, which improved the mAP by 28% over an EfficientDet-d0 network trained with the one-stage method. Liu et al. (2022) presented an MSRCR-YOLO v4-tiny model, based on the MSRCR algorithm and channel pruning, to detect corn weeds in the field; test results showed an mAP of 96.6%, higher than that of Faster R-CNN and YOLO v3. Wang et al. (2022) proposed the Pest-D2Det network for pest monitoring by adding an attention module and modifying the backbone of the original D2Det model, and it achieved an mAP of 78.6%.
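The mAP values quoted above all depend on matching predicted boxes to ground-truth boxes, and that matching is usually decided by Intersection over Union (IoU). The sketch below computes IoU for two axis-aligned boxes in (x_min, y_min, x_max, y_max) pixel form. The box format, the example coordinates, and the 0.5 threshold (the PASCAL VOC-style convention) are illustrative assumptions; this section does not state which threshold the cited studies used.

```python
from typing import Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Illustrative boxes, not taken from the paper. Under the PASCAL VOC-style
# convention (assumed here), a same-class prediction with IoU >= 0.5 would
# count as a true positive when computing AP.
ground_truth = (100, 100, 200, 150)
prediction = (110, 105, 210, 155)
score = iou(ground_truth, prediction)
print(f"IoU = {score:.3f}, true positive at 0.5: {score >= 0.5}")
```

Here the overlap is 90 × 45 = 4050 px² out of a union of 5950 px², giving an IoU of about 0.681.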

Among the emerging object detection architectures, YOLO v3 (Redmon and Farhadi 2018) achieves both efficiency and precision, and it has attracted extensive attention from researchers. Liu and Wang (2020) used YOLO v3 with an image feature pyramid to detect tomato leaf diseases and insect pests, reaching an mAP of 92.39%. Wang et al. (2020) employed YOLO v3 to detect six behaviors of laying hens: mating, standing, feeding, spreading, fighting, and drinking. Tian et al. (2019) designed an improved YOLO v3 to detect apples at different growth stages, with results better than those of Faster R-CNN. Bai et al. (2022) used YOLO v3 and U-Net (Olaf et al. 2015) for the segmentation and detection of green cucumbers, achieving good performance on both tasks. Zhang et al. (2022) proposed an improved YOLO v3 algorithm to extract the skeleton of beef cattle by detecting 16 key points in actual breeding environments, and the experiments showed an AP of 97.18%. These studies show that YOLO v3 is capable of a variety of detection tasks. However, silkworms are reared at high density, and healthy silkworms look very similar to diseased ones. To detect silkworm diseases well with YOLO v3, the network's ability to extract key features must be enhanced.
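To make the YOLO v3 discussion concrete, the sketch below shows how YOLO v3 turns raw network outputs into boxes, following the parameterization in Redmon and Farhadi (2018): b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w·exp(t_w), b_h = p_h·exp(t_h), with centres scaled by the stride of each of the three detection scales. The 416 × 416 input matches the resized images used later in this paper. The anchor (116, 90) is one of the default COCO anchors from the YOLO v3 paper and serves only as an example, because this section does not give the anchors used for CA-YOLO v3.

```python
import math
from typing import List, Tuple

INPUT_SIZE = 416          # matches the 416 x 416 images used in this paper
STRIDES = (32, 16, 8)     # YOLO v3 predicts at three scales


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def grid_sizes(input_size: int = INPUT_SIZE) -> List[int]:
    """Side length of the prediction grid at each scale."""
    return [input_size // s for s in STRIDES]


def decode_box(
    t: Tuple[float, float, float, float],
    cell: Tuple[int, int],
    anchor: Tuple[float, float],
    stride: int,
) -> Tuple[float, float, float, float]:
    """Map raw outputs t = (tx, ty, tw, th) from grid cell (cx, cy) with an
    anchor (pw, ph) in input pixels to (x_center, y_center, width, height)."""
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = anchor
    bx = (sigmoid(tx) + cx) * stride   # sigmoid keeps the centre inside its cell
    by = (sigmoid(ty) + cy) * stride
    bw = pw * math.exp(tw)             # width and height rescale the anchor
    bh = ph * math.exp(th)
    return bx, by, bw, bh


print(grid_sizes())
# (116, 90) is a default COCO anchor from the YOLO v3 paper, used as an example.
print(decode_box((0.0, 0.0, 0.0, 0.0), (6, 6), (116, 90), 32))
```

With all offsets at zero, a prediction in cell (6, 6) of the 13 × 13 grid decodes to a 116 × 90 box centred at (208, 208) pixels, the middle of that cell.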

The attention mechanism (Borji and Itti, 2013) is an important method for improving the performance of deep learning networks: it makes the network focus on the features that matter most for detection or recognition. Common visual attention models include SENet (Hu et al. 2019), CBAM (Woo et al. 2018), and ECA (Wang et al. 2020). Some researchers have combined attention mechanisms with YOLO algorithms for detection in agriculture and achieved promising results. Qi et al. (2022) added an SENet module to the YOLO v5 network to detect leaf diseases of tomato plants, and the network with SENet performed better than the original one. Lu et al. (2022) added a CBAM module to YOLO v4 to detect immature and mature apples, and the improved architecture was better in F1-score, precision, and recall. Le et al. (2021) designed an improved YOLO v4-tiny network using an adaptive spatial feature pyramid method and a CBAM module; in experiments on detecting individual green peppers, it outperformed SSD, Faster R-CNN, YOLO v3, and other algorithms. Zhang et al. (2021) replaced the backbone of YOLO v4 with MobileNet v3 (Howard et al. 2019), a lightweight model that incorporates attention, to detect individual potatoes in complex environments, significantly improving detection efficiency without reducing accuracy.
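The paragraph above lists SENet among common attention modules. As an illustration only (this section does not say which attention mechanism CA-YOLO v3 adopts), here is a minimal pure-Python sketch of squeeze-and-excitation channel attention: global average pooling per channel, a two-layer bottleneck with ReLU and sigmoid, then rescaling of each channel. Biases are omitted and the weights are hand-picked toy values; in a real network they are learned.

```python
import math
from typing import List

Matrix = List[List[float]]


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Multiply a weight matrix (rows x len(v)) by a vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def se_block(feature_map: List[Matrix], w1: Matrix, w2: Matrix) -> List[Matrix]:
    """Squeeze-and-excitation on a C x H x W feature map.

    w1 has shape (C/r) x C and w2 has shape C x (C/r), where r is the
    reduction ratio; biases are omitted for brevity.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a weight in (0, 1) per channel.
    hidden = [max(0.0, h) for h in matvec(w1, z)]
    s = [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]
    # Scale: multiply every value in a channel by that channel's weight.
    return [[[v * s_c for v in row] for row in ch] for ch, s_c in zip(feature_map, s)]


# Toy example: two 2 x 2 channels, reduction ratio r = 2, hand-picked weights.
fmap = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
out = se_block(fmap, w1=[[1.0, 1.0]], w2=[[1.0], [-1.0]])
print(round(out[0][0][0], 4), round(out[1][0][0], 4))
```

With these weights the first channel is kept almost unchanged (weight ≈ 0.95) while the second is strongly suppressed (weight ≈ 0.05), which is the kind of channel reweighting an attention module learns to apply.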

In the past, computer vision and sequence modeling were two largely independent branches of deep learning. However, after the Transformer (Vaswani et al. 2017), a sequence model based purely on attention, achieved great success in sequence tasks, more and more researchers applied Transformer ideas to computer vision. A milestone is the ViT model proposed by Dosovitskiy et al. (2021). ViT first splits an image into patches (16 × 16 pixels each in its base configuration) and flattens each patch into a one-dimensional vector. A Transformer encoder then extracts global image features with the attention mechanism, and the prediction is obtained through a Softmax operation, as in CNN-based image classification. ViT showed that a sequence network could perform image classification, and after pre-training on a large dataset its accuracy on ImageNet exceeded that of representative CNNs. Subsequently, Liu et al. (2021) proposed the Swin-Transformer network, building on ViT, which constructs hierarchical feature maps of the image and showed that the Transformer architecture could be used for visual tasks such as object detection and semantic segmentation. Its recognition accuracy on ImageNet was better than that of ViT and state-of-the-art CNNs. Hence, many researchers regard the Transformer architecture as the mainstream of deep learning for the next few years (Han et al. 2021). More recently, however, Liu et al. (2022) proposed a CNN model named ConvNeXt, which adopted a series of optimization methods, absorbed design ideas from Swin-Transformer, and achieved better performance on ImageNet than Swin-Transformer. ConvNeXt is therefore regarded as a "Renaissance" of CNNs.
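The ViT input stage described above can be sketched as follows: the image is cut into non-overlapping 16 × 16 pixel patches and each patch is flattened into a one-dimensional vector. This pure-Python version stops there; the learned linear projection, class token, and position embeddings that ViT adds before the Transformer encoder are omitted. The function name and the nested-list image layout (height × width × channels) are our own choices for illustration.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x C, channels last


def image_to_patch_sequence(image: Image, patch: int = 16) -> List[List[float]]:
    """Cut an image into non-overlapping patch x patch tiles (row by row) and
    flatten each tile into a 1-D vector of length patch * patch * C."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    sequence = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            vector: List[float] = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    vector.extend(image[r][c])  # append all channels of pixel (r, c)
            sequence.append(vector)
    return sequence


# A 224 x 224 RGB image yields 14 x 14 = 196 patch vectors of length 768.
rgb = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
tokens = image_to_patch_sequence(rgb)
print(len(tokens), len(tokens[0]))
```

For a 224 × 224 RGB input this gives 196 vectors of length 16 × 16 × 3 = 768, the token sequence that ViT then projects and feeds to its encoder.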

In summary, to detect silkworm diseases efficiently and accurately, this work studies the NPV, which is highly infectious and accounts for more than 60% of all silkworm diseases (Jiang et al. 2014). We improved the existing YOLO v3 algorithm by designing the CA-YOLO v3 network, in which a backbone based on ConvNeXt, Swin-Transformer, and a visual attention mechanism replaces the backbone of YOLO v3. We also constructed a silkworm disease dataset in which most images contain both healthy and diseased silkworms, resembling real rearing conditions. This study can support research on disease early warning and the development of precision control equipment.

The remainder of this paper is organized as follows. Section 2 introduces data collection and dataset construction, as well as the structure and principles of CA-YOLO v3. Section 3 presents the experimental environment and results, and the last section gives the conclusions and future work.

2. Materials and methods

2.1 Sample collection and image acquisition

2.1.1 Sample collection

There are thousands of silkworm varieties worldwide, each with a different appearance and disease resistance. In this experiment, the variety Chuan Shan × Shu Shui, a main rearing variety in southwest China that is susceptible to the NPV, was used as the experimental sample. Fig. 1 shows images of healthy and NPV-infected Chuan Shan × Shu Shui silkworms at instar 4. To collect adequate samples for dataset construction, silkworms were reared and manually infected with the pathogen before image collection. Because co-rearing of young silkworms (Shi et al. 2018) is generally adopted in China and reduces the risk of infection during instars 1 to 3, this paper focuses on late-instar silkworms (instars 4 and 5).

Fig. 1 Healthy and diseased images of the silkworm variety Chuan Shan × Shu Shui: (a) healthy; (b), (c), (d) diseased.


In this experiment, more than 3,000 silkworms were reared for sample collection, about half of which were used as diseased samples and the rest as healthy ones. Diseased silkworms were infected by smearing the NPV pathogen on mulberry leaves on the first day of instar 4. The pathogen dose was 5 ml, which was enough to make most of the silkworms sick. Symptoms of the NPV appear about 3 days after infection. After infection, all silkworms were reared normally, and the pathogen was administered only once.

Fig. 2 Pathogen infection

2.1.2 Image acquisition

From the third day after infection, an expert checked the infected silkworms one by one, and those diagnosed as diseased were used as diseased samples. For image acquisition, several healthy and diseased silkworms were placed manually on the background to imitate the scene in real rearing conditions where healthy and diseased silkworms are mixed when a disease emerges. Image acquisition lasted four days because individual silkworms fell ill at different times. The number of samples consumed and images collected each day is shown in Table 1. Silkworms that had been photographed were not used as samples again, to prevent cross-infection.

Table 1 Number of samples consumed and number of images collected each day

| Data group | 4th day of instar 4 | Sleeping day | 1st day of instar 5 | 2nd day of instar 5 | Total |
| --- | --- | --- | --- | --- | --- |
| Healthy | 407 | 20 | 751 | 315 | 1493 |
| Diseased | 422 | 189 | 680 | 188 | 1479 |
| Image number | 211 | 90 | 486 | 154 | 941 |

Note: because of infection, some diseased silkworms did not enter the sleeping (molting) stage on the sleeping day, whereas only a few healthy silkworms failed to sleep on time.
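The per-day counts in Table 1 can be checked against their totals, and against the overall dataset size given later in this section (941 images containing 2972 silkworms), with a few lines of arithmetic. The script below asserts that each row sums to its reported total and prints the total number of silkworms and the average number per image; the dictionary layout is just a convenient encoding of the table.

```python
# Per-day counts from Table 1, in column order: 4th day of instar 4,
# sleeping day, 1st day of instar 5, 2nd day of instar 5.
table1 = {
    "healthy": [407, 20, 751, 315],
    "diseased": [422, 189, 680, 188],
    "images": [211, 90, 486, 154],
}
reported_totals = {"healthy": 1493, "diseased": 1479, "images": 941}

# Every row should add up to the total printed in the last column.
for row, counts in table1.items():
    assert sum(counts) == reported_totals[row], f"row {row!r} does not sum to its total"

silkworms = reported_totals["healthy"] + reported_totals["diseased"]
per_image = silkworms / reported_totals["images"]
print(silkworms, round(per_image, 2))
```

All three rows match their totals, and the dataset averages about 3.16 silkworms per image.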

Images of the silkworms were captured with an iPhone 6S smartphone with a 12-megapixel camera. Images were taken indoors under natural light, between 9:00-11:00 and 15:00-17:00 each day. A tripod held the device with the lens pointing downward, and the silkworms kept a natural posture (Fig. 3). To ensure that the body shape of the silkworms would not change after image resizing, the aspect ratio of the capture device screen was set to 1:1, and the focal length was fixed so that the frame could contain 8 to 10 silkworms. Mulberry leaves were used as the image background and were replaced frequently and randomly so that the background would not become regular. This image collection method was also used in our previous research (Shi et al. 2020, Shi et al. 2022).

Fig. 3 Image acquisition device

In this experiment, diseased and healthy silkworms were manually placed on the same background to simulate the early stage of disease spread, which requires timely warning and prevention. Hence, most of the collected images contain both healthy and diseased silkworms. In addition, some images contain only a single diseased silkworm, imitating the manic crawling behavior of silkworms infected with NPV. A total of 941 original images were collected, containing 2972 silkworms: 1479 diseased and 1493 healthy. Some of the collected original images are shown in Fig. 4.

Fig. 4 Examples of original images; most collected images contain healthy and diseased silkworms simultaneously.

2.2 Dataset construction

2.2.1 Image resizing and labeling

The original captured images were 3224 × 3224 pixels, which exceeds the input size of common object detection algorithms. Bilinear interpolation was used to resize the original images to 416 × 416 pixels, and no other preprocessing was performed. All images were randomly divided into a training set and a test set at a ratio of 8:2. The annotation tool LabelImg was used to label the images according to the expert's diagnosis. A rectangular box (ground-truth box) represents the position of each silkworm in the image, and the labels NP (nuclear polyhedrosis virus) and H (healthy) denote diseased and healthy silkworms, respectively, as shown in Fig. 5. A total of 1493 H objects and 1479 NP objects were labeled.
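The resizing and splitting steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: `bilinear_resize` uses the common half-pixel-centre convention on a single-channel image stored as nested lists (library resizers such as PIL or OpenCV may differ in edge handling and antialiasing), and `split_dataset` is a hypothetical helper that shuffles with a fixed seed before cutting at the 8:2 ratio.

```python
import random
from typing import List, Sequence, Tuple

Image = List[List[float]]  # single-channel image as rows of pixel values


def bilinear_resize(img: Image, out_h: int, out_w: int) -> Image:
    """Resize with bilinear interpolation (half-pixel-centre convention, edges clamped)."""
    in_h, in_w = len(img), len(img[0])
    out: Image = []
    for i in range(out_h):
        # map the centre of output row i back into input coordinates
        y = min(max((i + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = min(max((j + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # interpolate horizontally on the two neighbouring rows, then vertically
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def split_dataset(items: Sequence, train_ratio: float = 0.8, seed: int = 0) -> Tuple[list, list]:
    """Randomly split items into (train, test) at the given ratio (hypothetical helper)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


train, test = split_dataset(range(941))
print(len(train), len(test))  # 752 189
```

With 941 images and a ratio of 0.8, the cut falls at 752 training and 189 test images, consistent with the 189-image test set reported in Section 2.4.2. In practice the same shuffled order must be applied to the images and their annotation files.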

Fig. 5 Label creation

2.2.2 Image enhancement

Data augmentation can improve the stability of neural networks. In this study, silkworms were placed on the background manually for image collection, so the dataset inevitably contains some regularities caused by personal operating habits, such as the spacing and posture of the silkworms relative to the mulberry leaves at different times, which may affect detection. Hence, several image enhancement operations were applied to the training set, including random rotation, random width and height shifts, and random horizontal flipping. The enhancement parameters are shown in Table 2, where N is the side length (width and height) of the image.

Table 2 Parameters of image enhancement

| Parameter | Rotation range (α) | Width shift range ($t_x$) | Height shift range ($t_y$) | Random horizontal flip | Shuffle |
|---|---|---|---|---|---|
| Value | (−90°, 90°) | (−0.2 × N, 0.2 × N) | (−0.2 × N, 0.2 × N) | True | True |

Examples of image enhancement are shown in Fig. 6. Because the enhancement parameters are random numbers within the ranges above, the same training image produces different results each time; only one enhanced image replaces each original image for model training. Moreover, because the enhancement operations transform the coordinates of each pixel, the ground-truth boxes of each image were transformed synchronously.
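Because each enhancement moves pixels, the ground-truth boxes must move with them. A minimal sketch of that bookkeeping follows, assuming the transforms in Table 2 are applied in the order rotation, shift, horizontal flip on a square N × N image, and that a rotated box is replaced by the axis-aligned box enclosing its four corners. The helper names and the rotation sign convention are assumptions; the paper does not specify them.

```python
import math
import random
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def sample_params(n: int, rng: random.Random) -> dict:
    """Draw one random parameter set from the ranges in Table 2 (image side n = N)."""
    return {
        "angle": rng.uniform(-90.0, 90.0),     # rotation range (alpha), degrees
        "tx": rng.uniform(-0.2 * n, 0.2 * n),  # width shift range
        "ty": rng.uniform(-0.2 * n, 0.2 * n),  # height shift range
        "flip": rng.random() < 0.5,            # random horizontal flip
    }


def transform_box(box: Box, n: int, angle: float, tx: float, ty: float, flip: bool) -> Box:
    """Rotate corners about the image centre, shift, optionally mirror, then re-box and clip."""
    c = n / 2.0
    theta = math.radians(angle)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    x_min, y_min, x_max, y_max = box
    xs, ys = [], []
    for x, y in ((x_min, y_min), (x_max, y_min), (x_min, y_max), (x_max, y_max)):
        xr = c + (x - c) * cos_t - (y - c) * sin_t + tx
        yr = c + (x - c) * sin_t + (y - c) * cos_t + ty
        if flip:
            xr = n - xr
        xs.append(xr)
        ys.append(yr)

    def clip(v: float) -> float:
        return min(max(v, 0.0), float(n))

    return (clip(min(xs)), clip(min(ys)), clip(max(xs)), clip(max(ys)))


rng = random.Random(0)
p = sample_params(416, rng)
print(transform_box((100, 150, 180, 260), 416, **p))
```

Enclosing a rotated box in an axis-aligned box enlarges it somewhat, which is the usual trade-off for rotation augmentation in box-based detectors. Boxes clipped to zero area would normally be dropped; that filtering step is not described in the paper and is omitted here.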

Fig. 6 Example of image enhancement: (a) original image; (b) images after data enhancement. During network training, only one of the images in (b) replaces the original image.

2.3 CA-YOLO v3

2.3.1 Network structure

The primary architecture of CA-YOLO v3 is based on the generic YOLO v3, as shown in Fig. 7. The backbone of CA-YOLO v3 consists of an input layer, a convolutional layer and four stages of CA-blocks. The input size of CA-YOLO v3 is 416 × 416 × 3. The convolutional layer applies 96 filters of size 4 × 4 with a stride of 4, so the feature map after this layer is 104 × 104 × 96. Four stages of CA-blocks then extract the key features of the silkworm image; the structure of the CA-block is detailed in Section 2.3.2. The base numbers of convolution kernels in the four CA-block stages are 96, 192, 384 and 768, respectively, and the stage compute ratio is set to 3:3:9:3, where each number is the number of times the residual operation is repeated inside a CA-block, not the number of CA-blocks. This distribution of kernel numbers and stage ratios originated in Swin-Transformer and was adopted in the ConvNeXt architecture. Furthermore, compared with Darknet-53, the backbone of generic YOLO v3, which consists of five stages of residual blocks, this design reduces the computational complexity and the number of trainable parameters, improving the efficiency of the network. Another reason for adopting this stage compute ratio is that we consider the 26 × 26 feature maps to play a key role in connecting the preceding and following stages, so their ratio is set to 9, three times that of the other CA-blocks. The first-stage CA-block does not change the width and height of the feature maps, whereas the CA-blocks of the other stages halve them, yielding the 52 × 52, 26 × 26 and 13 × 13 feature maps used for feature fusion in the FPN. All CA-blocks double the number of channels of the feature maps.
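The spatial bookkeeping in this paragraph can be checked with a few lines of Python. This is a sketch, not the authors' code: the stem follows the stated 4 × 4, stride-4 convolution, while the downsampling rule for stages 2 to 4 (stride 2 with a total padding of k − 2 pixels, i.e. the 5-pixel padding of Section 2.3.2 for a 7 × 7 kernel) is an assumption chosen because it reproduces the 52 × 52, 26 × 26 and 13 × 13 maps. Stage 1 is assumed to keep its size, and channels are reported as the base filter numbers from the text.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int, total_pad: int = 0) -> int:
    """Side length after a convolution: floor((n + p - k) / s) + 1, p = total padding."""
    return (size + total_pad - kernel) // stride + 1


def trace_backbone(input_size: int = 416,
                   widths: Tuple[int, ...] = (96, 192, 384, 768),
                   ratios: Tuple[int, ...] = (3, 3, 9, 3),
                   kernel: int = 7) -> List[Tuple[str, int, int, int]]:
    """Return (name, side, base filters, residual repeats) for the stem and each stage."""
    side = conv_out(input_size, kernel=4, stride=4)  # stem: 96 filters, 4x4, stride 4
    shapes = [("stem", side, 96, 1)]
    for stage, (width, repeats) in enumerate(zip(widths, ratios), start=1):
        if stage > 1:
            # assumed downsampling: stride 2 with kernel - 2 pixels of total padding
            side = conv_out(side, kernel, stride=2, total_pad=kernel - 2)
        shapes.append((f"stage{stage}", side, width, repeats))
    return shapes


for name, side, width, repeats in trace_backbone():
    print(f"{name}: {side}x{side}, base filters {width}, repeats {repeats}")
```

The printed sides are 104, 104, 52, 26 and 13. Under this assumed rule the same sizes result for any odd kernel, e.g. the 11 × 11 kernel with 9 pixels of padding tested in Section 3.2.1.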

Fig. 7 CA-YOLO v3 structure. The size of the input image is 416 × 416 × 3. "Conv2D, 4×4, 96, stride=4" denotes a convolution with 96 filters of size 4 × 4 and a stride of 4. "batch_size, 104×104×96" gives the number of images per batch and the output dimension of the feature map after the CA-blocks, respectively. "CA-blocks, 96, 3" means that the base number of filters in the CA-block is 96 and that its residual operation is repeated 3 times. "Concat layer" denotes a feature concatenation layer, and "Upsampling" an upsampling layer. "Conv2D, 3×3, 1×1" denotes two convolutional layers with kernel sizes of 3 × 3 and 1 × 1, respectively.

We adopted the CA-block to replace the original five convolutional layers in the FPN, keeping the network structure coherent so as to ensure detection efficiency. However, the CA-block in the FPN differs from that in the backbone extraction network, as described in Section 2.3.2. Each YOLO head contains two convolutional layers, with 3 × 3 and 1 × 1 filters, respectively. The number of filters in the 3 × 3 convolutional layer is four times the base number of filters of the CA-block in the FPN, and the number of filters in the last convolutional layer depends on the number of detection categories, as follows:

$$K = (N + 5) \times N_C \qquad (1)$$

where K is the number of filters in the last convolutional layer, N is the number of object categories, and $N_C$ is the number of anchor boxes per YOLO head; the constant 5 accounts for the four bounding-box coordinates and the objectness confidence predicted for each anchor. This research involves two categories (healthy and diseased silkworms) and three anchor boxes per YOLO head, so Eq. (1) gives K = (2 + 5) × 3 = 21.
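Eq. (1) and the resulting head shapes can be checked directly; the function name is illustrative. As a sanity check, the same formula gives the familiar 255 output channels of YOLO v3 on the 80-class COCO dataset.

```python
def head_filters(num_classes: int, anchors_per_head: int) -> int:
    """Eq. (1): per anchor, 4 box coordinates + 1 objectness score + one score per class."""
    return (num_classes + 5) * anchors_per_head


k = head_filters(num_classes=2, anchors_per_head=3)
for side in (13, 26, 52):
    # output tensor of each YOLO head: one K-channel vector per grid cell
    print(f"{side}x{side}x{k}")  # 13x13x21, 26x26x21, 52x52x21
```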

2.3.2 CA-block

We propose two different CA-blocks, one for the backbone and one for the FPN. The structure of the backbone CA-block is shown in Fig. 8(a). A padding operation of 5 pixels is used to prevent the loss of edge features and to control the dimensions of the output feature maps. A depth-wise separable convolution with a 7 × 7 filter and a standard 2D convolutional layer with a 1 × 1 filter are used for feature extraction and channel adjustment, followed by a visual attention block. Then a residual structure containing a 7 × 7 depth-wise separable convolution and three standard 1 × 1 convolutional layers is employed to increase the depth and extraction capability of the block; this residual structure is repeated according to the stage compute ratio of the backbone.

Fig. 8 Block designs of CA-YOLO v3: (a) CA-block in the backbone; (b) CA-block in the FPN.

Fig. 8(b) shows the structure of the CA-block in the FPN. A 1 × 1 convolutional layer is applied first for channel adjustment, because the input feature map is produced by a concatenation operation. A depth-wise separable convolutional layer with a 3 × 3 filter and three 1 × 1 convolutional layers are then used for feature extraction and channel adjustment, followed by an attention block.

We use a large 7 × 7 convolutional kernel in the backbone of CA-YOLO v3 to enlarge the receptive field of the network as much as possible; the effectiveness of large kernels has been shown in recent research (Szegedy et al., 2017; Ding et al., 2022). In the FPN, however, a 3 × 3 depth-wise kernel is used to extract more precise features. Depth-wise separable convolution is adopted to reduce the number of trainable parameters and maintain computational efficiency: CA-YOLO v3 has only 46.3 million trainable parameters, about 15 million fewer than YOLO v3. In addition, an image attention block, detailed in Section 2.3.3, is included in the CA-block to enhance the extraction of key features. The residual structure of the backbone CA-block contains no attention block, to avoid a surge in the number of parameters.
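To see why depth-wise separable convolution keeps the parameter count down, compare the weights of one 7 × 7 layer at each backbone width. This is a back-of-the-envelope sketch that ignores biases and batch-normalization parameters; it does not reproduce the 46.3 million total, which depends on the full layer list.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of an ordinary k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Depth-wise k x k filter per input channel followed by a 1 x 1 point-wise projection."""
    return k * k * c_in + c_in * c_out


for c in (96, 192, 384, 768):
    std = standard_conv_params(7, c, c)
    sep = separable_conv_params(7, c, c)
    print(f"C={c}: standard {std:,} vs separable {sep:,} ({std / sep:.1f}x fewer)")
```

For C = 96 the separable layer needs 13,920 weights instead of 451,584, roughly 32 times fewer, and the saving grows with the channel count.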

The idea of the inverted bottleneck, which changes how the feature channels are adjusted, is also used in the CA-block. This design is found in Swin-Transformer and was also adopted in the ConvNeXt network, as shown in Fig. 9. The inverted bottleneck expands the number of channels of the feature maps to four times the original and then restores it. This differs from the conventional design in ResNet, which condenses the channels to 1/4 of those of the input feature maps and then restores them. ConvNeXt has verified that the inverted bottleneck is beneficial for network performance.
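The difference between the bottleneck types compared in Table 7 is the width of the hidden layer. The sketch below uses two 1 × 1 layers (the ConvNeXt arrangement) for clarity; the CA-block itself uses three 1 × 1 layers whose exact widths are not listed here, so the numbers are illustrative only. The name "flat" stands for the flatten bottleneck of Table 7.

```python
from typing import List


def bottleneck_widths(c: int, kind: str) -> List[int]:
    """Channel widths through the point-wise (1 x 1) layers of one residual block."""
    hidden = {"resnet": c // 4, "inverted": 4 * c, "flat": c}[kind]
    return [c, hidden, c]


def pointwise_params(widths: List[int]) -> int:
    """Weights of a chain of 1 x 1 convolutions between consecutive widths (biases ignored)."""
    return sum(a * b for a, b in zip(widths, widths[1:]))


for kind in ("resnet", "flat", "inverted"):
    w = bottleneck_widths(96, kind)
    print(kind, w, pointwise_params(w))
```

The inverted design spends more point-wise parameters than the ResNet one; in ConvNeXt-style blocks this is offset by the cheap depth-wise convolution that carries the spatial filtering.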

Fig. 9 Block designs for a ResNet, a Swin Transformer, and a ConvNeXt.

Batch normalization and LeakyReLU activation are applied in each convolutional layer of the CA-blocks. LeakyReLU is defined as follows:

$$\mathrm{LeakyReLU}(x) = \begin{cases} x, & \text{if } x \ge 0 \\ 0.1x, & \text{otherwise} \end{cases} \qquad (2)$$
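Eq. (2) as a one-line Python function, with the 0.1 slope stated above. In the Keras API listed in Section 2.4.1 the corresponding layer would be `LeakyReLU(alpha=0.1)`; whether the authors used exactly that layer is not stated.

```python
def leaky_relu(x: float, slope: float = 0.1) -> float:
    """Eq. (2): pass non-negative inputs through, scale negative inputs by `slope`."""
    return x if x >= 0 else slope * x


print([leaky_relu(v) for v in (-2.0, 0.0, 3.0)])  # [-0.2, 0.0, 3.0]
```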

2.3.3 Attention block

Image attention mechanisms mainly include spatial attention and channel attention. Spatial attention focuses on local spatial information in the image, whereas channel attention focuses on information across the feature channels (Qi et al., 2022). In this article, we introduce the ECA (efficient channel attention) module proposed by Wang et al. (2019) into the CA-block, expecting it to make the algorithm focus more on the differences between healthy and diseased silkworms and on the location of each silkworm. Specifically, ECA is a channel attention mechanism that replaces the fully connected operation used in SENet (Hu et al., 2019) with a convolution, enhancing local cross-channel interaction without dimensionality reduction. The ECA module first compresses the feature maps in the spatial dimension and then applies a one-dimensional convolution and an activation to obtain the attention weights; finally, the refined feature map is obtained by multiplying the attention weights by the original feature map.

The attention weights of the ECA module are obtained as follows. For given feature maps $X = [x_1, x_2, \ldots, x_C]$, $X \in \mathbb{R}^{W \times H \times C}$, where W, H and C denote the width, height and number of feature channels, respectively, global average pooling is used to obtain a one-dimensional feature vector:

$$Y = \mathrm{GAP}(X) \qquad (3)$$

where GAP denotes global average pooling, which averages each channel over all spatial positions: $Y_c = \frac{1}{WH}\sum_{i=1}^{W}\sum_{j=1}^{H} x_c(i, j)$.

The output of GAP is therefore $Y \in \mathbb{R}^{1 \times 1 \times C}$. A one-dimensional convolution followed by an activation is then used to obtain the attention weights of the feature channels:

$$Y' = \delta\left(\mathrm{C1D}_k(Y)\right) \qquad (4)$$

where C1D denotes one-dimensional convolution, the subscript k is its kernel size (5 by default), and δ is the activation function, defined as follows:

$$\delta(x) = \frac{1}{1 + e^{-x}} \qquad (5)$$

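The trainable parameters of the ECA module can therefore be written as the band matrix $W_k$, in which each channel interacts only with its k neighboring channels:

$$W_k = \begin{bmatrix} w^{1,1} & \cdots & w^{1,k} & 0 & 0 & \cdots & 0 \\ 0 & w^{2,2} & \cdots & w^{2,k+1} & 0 & \cdots & 0 \\ \vdots & \vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & \cdots & 0 & 0 & w^{C,C-k+1} & \cdots & w^{C,C} \end{bmatrix} \qquad (6)$$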

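According to this matrix, each ECA module adds k × C = 5C parameters; when the same weights are shared across all channels, which is what the one-dimensional convolution in Eq. (4) implements (Wang et al. 2019), only k = 5 parameters are added. Broadcasting (as provided by NumPy and TensorFlow) expands $Y' \in \mathbb{R}^{1 \times 1 \times C}$ to $\mathbb{R}^{W \times H \times C}$, and $Y'$ is then multiplied element-wise by the input feature map X, so that each channel of the original feature map is reweighted according to its importance.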
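A dependency-free sketch of the ECA computation in Eqs. (3) to (5) on a toy feature map. Assumptions: channels-first nested lists instead of the W × H × C tensors used in the paper, zero padding so the 1-D convolution keeps C outputs, and one kernel shared by all channels as in Wang et al. (2019), so the module has exactly k trainable weights. The kernel values below are arbitrary.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # channels-first: x[c][row][col]


def global_average_pool(x: FeatureMap) -> List[float]:
    """Eq. (3): average each channel over all spatial positions."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def conv1d_same(y: List[float], kernel: List[float]) -> List[float]:
    """1-D cross-correlation over the channel axis with zero padding (odd kernel length)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(y) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(kernel)) for i in range(len(y))]


def sigmoid(v: float) -> float:
    """Eq. (5)."""
    return 1.0 / (1.0 + math.exp(-v))


def eca(x: FeatureMap, kernel: List[float]) -> FeatureMap:
    """GAP -> shared 1-D conv -> sigmoid (Eq. (4)) -> channel-wise rescaling of x."""
    y = global_average_pool(x)
    weights = [sigmoid(v) for v in conv1d_same(y, kernel)]
    # broadcasting step: every pixel of channel c is multiplied by weights[c]
    return [[[w * v for v in row] for row in ch] for w, ch in zip(weights, x)]


x = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 2.0]]]  # toy 2-channel, 2x2 feature map
kernel = [0.1, -0.2, 0.5, -0.2, 0.1]                      # k = 5 shared weights
print(global_average_pool(x))  # [2.5, 0.5]
print(eca(x, kernel))
```

With an all-zero kernel every attention weight is sigmoid(0) = 0.5, so the module simply halves the input; learning the k weights is what lets it emphasise some channels over others.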

2.3.4 Loss function

The loss function evaluates the CNN model during training. CA-YOLO v3 uses the mean squared error (MSE) loss for model training. The MSE loss is given in Eq. (7); it comprises the coordinate error of the detected objects, the confidence error for regions that do and do not contain objects, and the class probability error. Different weights are assigned to these error terms, and the square roots of the widths and heights of the boxes are used, so that the same absolute size error weighs more for small boxes than for large ones; together these choices bring the box position, the confidence and the classification accuracy to a relatively good balance.

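$$
\begin{aligned}
J ={}& \lambda_{coord}\sum_{i=0}^{S^2}\sum_{j=0}^{B} I_{ij}^{obj}\left[(x_i-\hat{x}_i)^2+(y_i-\hat{y}_i)^2\right] \\
&+ \lambda_{coord}\sum_{i=0}^{S^2}\sum_{j=0}^{B} I_{ij}^{obj}\left[\left(\sqrt{w_i}-\sqrt{\hat{w}_i}\right)^2+\left(\sqrt{h_i}-\sqrt{\hat{h}_i}\right)^2\right] \\
&+ \sum_{i=0}^{S^2}\sum_{j=0}^{B} I_{ij}^{obj}\left(C_i-\hat{C}_i\right)^2 \\
&+ \lambda_{noobj}\sum_{i=0}^{S^2}\sum_{j=0}^{B} I_{ij}^{noobj}\left(C_i-\hat{C}_i\right)^2 \\
&+ \sum_{i=0}^{S^2} I_{i}^{obj}\sum_{c \in classes}\left(p_i(c)-\hat{p}_i(c)\right)^2
\end{aligned}
\qquad (7)
$$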

where $\lambda_{coord}$ is the weight of the coordinate error for grid cells containing an object, with a value of 5. S is the number of grid cells along each side of the input image, with values of 13, 26 and 52 for the three detection scales, and B is the number of anchor boxes generated by each grid cell. $I_{ij}^{obj}$ indicates that a silkworm falls into the j-th bounding box of the i-th grid cell. $x_i$ and $y_i$ are the coordinates of the geometric center of the silkworm in the input image, and $\hat{x}_i$ and $\hat{y}_i$ are the coordinates predicted by the network. $w_i$ and $h_i$ are the width and height of the ground-truth box in the input image, and $\hat{w}_i$ and $\hat{h}_i$ are the width and height of the prediction box. $C_i$ is the ground-truth confidence of the object in $I_{ij}^{obj}$, and $\hat{C}_i$ is the confidence predicted by the network. $I_{ij}^{noobj}$ indicates that no silkworm falls into the j-th bounding box of the i-th grid cell, $I_i^{obj}$ indicates that the geometric center of a detection object falls into the i-th grid cell, and $\lambda_{noobj}$ is the weight for grid cells that contain no object, with a value of 0.5. $p_i(c)$ is the ground-truth probability that the object belongs to class c (healthy or diseased), and $\hat{p}_i(c)$ is the predicted probability. By assigning different weights, the MSE loss reduces the contribution of grid cells that contain no object to the parameter updates.
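The structure of Eq. (7) is easier to follow as code. The sketch below assumes a flattened list of (grid cell, anchor) slots, exactly one responsible anchor per object (so the class term, which Eq. (7) indexes per grid cell, is counted once per object), positive box sizes so that the square roots are defined, and illustrative names (`Slot`, `yolo_mse_loss`) that do not come from the paper.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h), with w and h > 0


@dataclass
class Slot:
    """One (grid cell i, anchor j) prediction slot with its target (hypothetical layout)."""
    has_obj: bool                          # True for I_ij^obj, False for I_ij^noobj
    box: Box = (0.0, 0.0, 1.0, 1.0)        # ground truth x, y, w, h
    pred_box: Box = (0.0, 0.0, 1.0, 1.0)   # predicted x, y, w, h
    conf: float = 0.0                      # C_i
    pred_conf: float = 0.0                 # predicted C_i
    probs: Sequence[float] = ()            # p_i(c)
    pred_probs: Sequence[float] = ()       # predicted p_i(c)


def yolo_mse_loss(slots: Iterable[Slot], lambda_coord: float = 5.0,
                  lambda_noobj: float = 0.5) -> float:
    loss = 0.0
    for s in slots:
        if not s.has_obj:
            # confidence error of slots without an object, down-weighted by lambda_noobj
            loss += lambda_noobj * (s.conf - s.pred_conf) ** 2
            continue
        x, y, w, h = s.box
        px, py, pw, ph = s.pred_box
        loss += lambda_coord * ((x - px) ** 2 + (y - py) ** 2)          # centre error
        loss += lambda_coord * ((math.sqrt(w) - math.sqrt(pw)) ** 2
                                + (math.sqrt(h) - math.sqrt(ph)) ** 2)  # size error
        loss += (s.conf - s.pred_conf) ** 2                               # confidence error
        loss += sum((p - q) ** 2 for p, q in zip(s.probs, s.pred_probs))  # class error
    return loss


perfect = Slot(True, (0.5, 0.5, 0.25, 0.25), (0.5, 0.5, 0.25, 0.25), 1.0, 1.0, (1.0, 0.0), (1.0, 0.0))
empty = Slot(False, pred_conf=1.0)
print(yolo_mse_loss([perfect, empty]))  # 0.5: only the empty slot's false confidence is penalised
```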

2.4 Experimental environment and evaluation indicators

2.4.1 Experimental environment

All experiments were run on a Dell Precision 5820 workstation with an Intel® Core i7-9800X processor, RTX 2080 Ti GPUs with 11 GB of memory, and the CUDA 10.0 computing platform. The operating system was Windows 10 Professional (64-bit), the programming language was Python 3.7, the programming environment was Jupyter Notebook, and the deep learning framework was TensorFlow-GPU 1.14. The toolkits used included NumPy, Keras and PIL.

The training hyperparameters were 300 epochs and a mini-batch size of 4. The initial learning rate was 0.001 and was multiplied by 0.8 whenever the loss did not decrease for five consecutive epochs. The MSE loss was used as the loss function and Adam as the optimizer. The IoU (intersection over union) threshold was 0.5, and the confidence threshold of the prediction results was 0.3.
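The learning-rate rule above is a plateau schedule. A minimal framework-independent sketch follows; it assumes that "did not decrease" means "no new best value" and that the patience counter restarts after each reduction. Keras offers a similar callback, `ReduceLROnPlateau(factor=0.8, patience=5)`, but the paper does not say which mechanism was used, and the callback's extra options (such as `min_delta` and `cooldown`) mean its behaviour is not necessarily identical.

```python
class PlateauLR:
    """Multiply the learning rate by `factor` after `patience` epochs without a new best loss."""

    def __init__(self, lr: float = 1e-3, factor: float = 0.8, patience: int = 5):
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best = float("inf")
        self.wait = 0

    def step(self, loss: float) -> float:
        """Call once per epoch with that epoch's loss; returns the learning rate to use next."""
        if loss < self.best:
            self.best, self.wait = loss, 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr *= self.factor
                self.wait = 0  # restart the count after each reduction
        return self.lr


sched = PlateauLR()
for epoch_loss in [1.0, 0.9, 0.95, 0.95, 0.95, 0.95, 0.95]:
    lr = sched.step(epoch_loss)
print(lr)  # reduced once: 0.001 * 0.8
```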

The anchor box sizes used for data encoding and decoding were 10 × 13, 16 × 30, 33 × 23, 30 × 61, 62 × 45, 59 × 119, 116 × 90, 156 × 198 and 373 × 326. We directly used the anchor boxes proposed for YOLO v3, because the boxes obtained by clustering the ground-truth boxes of our dataset were not distributed proportionally, and experiments showed that using them reduced detection accuracy.
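In YOLO v3 the nine anchors are shared out three per detection scale, with the largest anchors on the coarsest 13 × 13 grid, and each ground-truth box is assigned to the anchor whose shape overlaps it best. A sketch of that assignment, assuming centre-aligned (shape-only) IoU as in the reference YOLO v3 implementation; `best_anchor` and `SCALE_OF_ANCHOR` are illustrative names, and the example box size is made up.

```python
from typing import Tuple

# YOLO v3 anchors (width, height) in pixels of the 416 x 416 input, as listed above
ANCHORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
           (59, 119), (116, 90), (156, 198), (373, 326)]
# smallest three anchors -> 52 x 52 grid, middle three -> 26 x 26, largest three -> 13 x 13
SCALE_OF_ANCHOR = [52, 52, 52, 26, 26, 26, 13, 13, 13]


def shape_iou(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """IoU of two boxes placed on the same centre, so only width and height matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def best_anchor(wh: Tuple[float, float]) -> int:
    """Index of the anchor that best matches a ground-truth box of size wh."""
    return max(range(len(ANCHORS)), key=lambda i: shape_iou(wh, ANCHORS[i]))


i = best_anchor((60, 120))  # hypothetical silkworm box of 60 x 120 pixels
print(i, ANCHORS[i], SCALE_OF_ANCHOR[i])  # 5 (59, 119) 26
```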

2.4.2 Evaluation indicators

Of the training set, 80% (602 images) was used for network training and 20% (150 images) for validation. The training set contains 1221 healthy and 1216 diseased silkworms. After training, the model weights were saved, and the model was evaluated on the test set (189 images), which contains 272 healthy and 263 diseased silkworms. Precision, recall, F1-score, average precision (AP) and mean average precision (mAP) were used for model evaluation, calculated as follows:

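$$\mathrm{Precision} = \frac{TP}{TP + FP} \times 100\% \qquad (8)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \times 100\% \qquad (9)$$

$$\mathrm{F1\text{-}score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (10)$$

$$\mathrm{AP} = \int_{0}^{1} \mathrm{Precision}(\mathrm{Recall})\, d\mathrm{Recall} \times 100\% \qquad (11)$$

$$\mathrm{mAP} = \frac{\sum_{n=1}^{N} \int_{0}^{1} \mathrm{Precision}_n(\mathrm{Recall})\, d\mathrm{Recall}}{N} \times 100\% \qquad (12)$$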

where TP (true positives) are objects detected as positive samples whose detection is correct, FP (false positives) are objects detected as positive samples that are actually negative, and FN (false negatives) are objects detected as negative samples that are actually positive. AP is the area under the precision-recall (P-R) curve, and mAP is the mean of the APs over all categories. In this study, the two categories are healthy silkworms and diseased silkworms, so N = 2.
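A compact sketch of Eqs. (8) to (12) for one class. Assumptions, since the paper does not give its evaluation code: a detection counts as a TP when it matches a not-yet-matched ground-truth box of the same class with IoU ≥ 0.5 (the matching loop itself is omitted and `is_tp` is taken as given), and AP is computed with all-point interpolation of the P-R curve, one common way to approximate the integral in Eq. (11). mAP is then the mean of the per-class APs, e.g. (94.81% + 95.19%) / 2 = 95.00% for CA-YOLO v3 in Table 3.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Eqs. (8) to (10), returned as fractions rather than percentages."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """Eq. (11): area under the P-R curve with all-point interpolation."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])  # most confident first
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing in recall
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


print(round(box_iou((0, 0, 10, 10), (5, 0, 15, 10)), 3))  # 0.333, below the 0.5 threshold
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```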

Whether an object counts as a positive or a negative sample depends on the class being evaluated. For example, when healthy silkworms are the detection target, regions containing a diseased silkworm or no silkworm are negative samples, whereas healthy silkworms are negative samples when diseased silkworms are the target. Therefore, precision, recall, F1-score and AP were calculated separately for healthy and diseased silkworms.

3 Experimental results

3.1 Comparison of the detection effect with YOLO v3

To verify the training and detection performance of CA-YOLO v3, the original YOLO v3 and CA-YOLO v3 were trained and tested under the same environment, and the loss values of both networks on the training and validation sets were recorded.

Fig. 10 shows the training loss curves of CA-YOLO v3 and YOLO v3. In the initial stage of training, CA-YOLO v3 converged more slowly than YOLO v3; a likely reason is that the image attention mechanism and the large kernels require the network to spend more time extracting key features. Both networks reach relative convergence after about 100 epochs, with approximately the same convergence behavior. However, after about 150 epochs the loss of CA-YOLO v3 is noticeably lower than that of YOLO v3, indicating a better training effect.

Fig. 10 Loss curves of CA-YOLO v3 and YOLO v3 during training: (a) training set; (b) validation set.
Note: to make the convergence visible, loss values greater than 100 in the initial stage of training were replaced with 100.

After training, CA-YOLO v3 and YOLO v3 were evaluated on the test set, and the recall, precision, F1-score, AP and mAP for healthy and diseased silkworms were calculated, as shown in Table 3.

Table 3 Detection results of the two networks

| Model | Recall (H) | Recall (NP) | Precision (H) | Precision (NP) | F1-score (H) | F1-score (NP) | AP (H) | AP (NP) | mAP |
|---|---|---|---|---|---|---|---|---|---|
| YOLO v3 | 83.82% | 88.59% | 89.76% | 84.73% | 0.87 | 0.87 | 94.15% | 92.10% | 93.13% |
| CA-YOLO v3 | 82.35% | 93.92% | 95.32% | 87.90% | 0.88 | 0.91 | 94.81% | 95.19% | 95.00% |

Note: "H" denotes healthy silkworms, and "NP" denotes diseased silkworms.

The test results show that the proposed CA-YOLO v3 performed better on most evaluation indicators, with precision of 95.32% and 87.90%, F1-scores of 0.88 and 0.91, and AP of 94.81% and 95.19% for healthy and diseased silkworms, respectively. More importantly, the mAP of CA-YOLO v3 reached 95.00%, 1.87 percentage points higher than the 93.13% of YOLO v3. Only one indicator of CA-YOLO v3, the recall for healthy silkworms, was slightly lower than that of YOLO v3 (82.35% vs. 83.82%). However, CA-YOLO v3 achieved a recall of 93.92% for diseased silkworms, clearly better than the 88.59% of YOLO v3.

3.2 Ablation study of CA-block

3.2.1 Ablation study of kernel size

To demonstrate the effectiveness of the CA-block design, ablation studies on kernel size, attention module and stage ratio were performed in this section. The influence of kernel size was tested first: kernel sizes from 3 × 3 to 11 × 11 were used for the depth-wise separable convolution, and the detection performance was evaluated for each.

Table 4 Influence of kernel size

| Method | Recall (H) | Recall (NP) | Precision (H) | Precision (NP) | F1-score (H) | F1-score (NP) | AP (H) | AP (NP) | mAP |
|---|---|---|---|---|---|---|---|---|---|
| 3×3 dw | 64.34% | 85.55% | 88.83% | 74.01% | 0.75 | 0.79 | 89.36% | 86.66% | 88.01% |
| 5×5 dw | 72.06% | 86.69% | 92.45% | 80.85% | 0.81 | 0.84 | 92.56% | 89.75% | 91.16% |
| 7×7 dw | 76.10% | 86.69% | 90.79% | 84.44% | 0.83 | 0.86 | 90.76% | 89.27% | 90.01% |
| 9×9 dw | 77.21% | 90.49% | 94.17% | 84.40% | 0.85 | 0.87 | 93.24% | 92.76% | 93.00% |
| 11×11 dw | 54.51% | 63.12% | 89.70% | 74.11% | 0.68 | 0.68 | 86.73% | 77.40% | 82.07% |

Note: "dw" denotes depth-wise separable convolution.

As Table 4 shows, the kernel size of the CA-block does affect detection performance, and the 9 × 9 dw kernel achieved the best result, with an mAP of 93.00%. As the kernel size increases from 3 × 3 to 9 × 9, the mAP generally increases, except that 7 × 7 dw was slightly lower than 5 × 5 dw. However, the 11 × 11 kernel obtained an mAP of only 82.07%, far below the other sizes. A possible reason is that the padding before the 11 × 11 dw operation was 9 pixels, which introduces more information irrelevant to feature extraction. Overall, the results suggest that increasing the kernel size within a certain range helps to improve detection performance.

3.2.2 Ablation study of ECA module

To verify that the attention mechanism can effectively enhance the extraction of key features, the ECA module was combined with different kernel sizes (3 × 3, 5 × 5, 7 × 7 and 9 × 9), and the evaluation indicators were calculated for each combination.

Table 5 Influence of ECA module

| Method | Recall (H) | Recall (NP) | Precision (H) | Precision (NP) | F1-score (H) | F1-score (NP) | AP (H) | AP (NP) | mAP |
|---|---|---|---|---|---|---|---|---|---|
| 3×3 dw + ECA | 63.38% | 89.73% | 95.38% | 77.12% | 0.80 | 0.83 | 92.81% | 86.63% | 89.72% |
| 5×5 dw + ECA | 77.21% | 90.87% | 90.13% | 84.85% | 0.83 | 0.86 | 94.18% | 92.22% | 93.20% |
| 7×7 dw + ECA | 82.35% | 93.92% | 95.32% | 87.90% | 0.88 | 0.91 | 94.81% | 95.19% | 95.00% |
| 9×9 dw + ECA | 69.49% | 87.07% | 90.87% | 79.51% | 0.79 | 0.83 | 90.15% | 86.94% | 88.55% |

Table 5 shows the influence of the attention mechanism. On the one hand, adding the ECA module to the CA-block clearly increased the mAP for the 3 × 3, 5 × 5 and 7 × 7 kernels, suggesting that the attention mechanism makes the network pay more attention to the features that matter for detection and extract image features efficiently. Nevertheless, with the 9 × 9 dw kernel the evaluation indicators decreased (mAP from 93.00% to 88.55%), which implies that some overfitting may have occurred. On the other hand, the CA-block with the 7 × 7 dw kernel and the ECA module achieved the best recall, F1-score, AP and mAP among all kernel sizes, as well as the best precision for diseased silkworms (its precision for healthy silkworms, 95.32%, was marginally below the 95.38% of 3 × 3 dw + ECA), so the CA-blocks were designed based on this result.

To further verify that ECA outperforms other attention modules, two state-of-the-art attention modules, SENet and CBAM, were compared on the same dataset. The results are presented in Table 6.

Table 6 Influence of attention module

| Method | Recall (H) | Recall (NP) | Precision (H) | Precision (NP) | F1-score (H) | F1-score (NP) | AP (H) | AP (NP) | mAP |
|---|---|---|---|---|---|---|---|---|---|
| 7×7 dw + SENet | 72.79% | 91.63% | 95.65% | 80.33% | 0.83 | 0.86 | 93.81% | 91.19% | 92.50% |
| 7×7 dw + CBAM | 58.82% | 76.43% | 91.43% | 76.43% | 0.72 | 0.76 | 87.01% | 79.37% | 83.19% |
| 7×7 dw + ECA | 82.35% | 93.92% | 95.32% | 87.90% | 0.88 | 0.91 | 94.81% | 95.19% | 95.00% |

The CA-blocks with SENet and CBAM achieved mAPs of 92.50% and 83.19%, respectively, both inferior to the ECA module. This implies that different attention mechanisms have distinct impacts on the detection results, and that the ECA module is an effective way to obtain good performance on the dataset built in this study.

3.2.3 Ablation study of stage ratio and bottleneck

The stage ratio of the CA-YOLO v3 backbone is 3:3:9:3, inspired by Swin-Transformer, which differs from both the original YOLO v3 network and ResNet. In addition, the CA-block adopts the inverted bottleneck from Swin-Transformer and ConvNeXt. To verify the impact of the stage ratio and the bottleneck on the model, the stage ratios of ResNet-50 and ResNet-101 were used to train and test CA-YOLO v3, and two other types of bottleneck were also tested.

Table 7 Influence of stage ratio and bottleneck

| Method | Recall (H) | Recall (NP) | Precision (H) | Precision (NP) | F1-score (H) | F1-score (NP) | AP (H) | AP (NP) | mAP |
|---|---|---|---|---|---|---|---|---|---|
| 3:4:6:3 (ResNet-50) | 76.47% | 90.11% | 95.85% | 83.16% | 0.85 | 0.86 | 95.91% | 91.76% | 93.84% |
| 3:4:23:3 (ResNet-101) | 65.44% | 81.75% | 92.27% | 77.62% | 0.78 | 0.80 | 91.79% | 81.02% | 86.40% |
| ResNet bottleneck | 76.84% | 87.83% | 96.76% | 84.93% | 0.86 | 0.86 | 95.22% | 91.67% | 93.44% |
| Flatten bottleneck | 80.88% | 92.78% | 99.10% | 85.16% | 0.89 | 0.89 | 95.97% | 92.15% | 94.06% |
| Inverted bottleneck | 82.35% | 93.92% | 95.32% | 87.90% | 0.88 | 0.91 | 94.81% | 95.19% | 95.00% |

As Table 7 shows, with the ResNet-50 stage ratio of 3:4:6:3 the mAP was 93.84%, and with the ResNet-101 stage ratio of 3:4:23:3 the mAP was only 86.40%. These results indicate that the 3:3:9:3 stage ratio used in this study is more effective for object detection on this dataset. Moreover, with a ResNet-style bottleneck or a flatten bottleneck (in which the three convolutional layers have the same number of filters, so the number of channels does not change), the network achieved mAPs of 93.44% and 94.06%, respectively, both lower than that of the inverted bottleneck designed in this work. These results suggest that the CA-block and CA-YOLO v3 benefit from the design ideas they adopt.

3.3 Visualization of detection results

3.3.1 Visualization result analysis

To visualize the detection results, three images were selected from the test set; their labels created with the LabelImg toolkit and the detection results of YOLO v3 and CA-YOLO v3 are shown in Fig. 11.

Fig. 11 Comparison of detection results between YOLO v3 and CA-YOLO v3. (a), (b) and (c) are labeled with the LabelImg toolkit, where silkworms in light blue boxes are diseased and those in dark red boxes are healthy. (d), (e) and (f) are the detection results of YOLO v3, and (g), (h) and (i) are the detection results of CA-YOLO v3. Silkworms in pink boxes are diseased and those in green boxes are healthy; the number at the upper left of each box is the confidence of its category.

As Fig. 11 shows, YOLO v3 failed to detect one silkworm at the top of the image in (d). Likewise, CA-YOLO v3 misjudged the category of one silkworm on the left in (g). In (e) and (f), YOLO v3 misjudged the category of the leftmost silkworm and the uppermost silkworm, respectively, whereas the detection results of CA-YOLO v3 in (h) and (i) are completely correct.

Although some silkworms were not detected correctly, as shown in Fig. 11, the visualization results also indicate that deep learning can not only identify the category of each silkworm accurately but also locate each silkworm accurately in images where healthy and diseased silkworms are mixed.

3.3.2 GUI for the trained CA-YOLO v3 model

To apply the research results in real rearing environments, a GUI (graphical user interface) for silkworm disease detection was developed with PyQt5 after model training and testing, and the trained CA-YOLO v3 model was embedded in the GUI. We hope that the detection GUI can work together with silkworm rearing machines under real conditions, which requires the GUI to support disease detection on real-time video, local images and local videos.

Fig. 12 Detection interface for local images

Qt Designer, provided with PyQt5, was used to design the software interface, as shown in Fig. 12. The interface contains working mode selection, warning mode, image visualization, text visualization and control areas. Widgets such as QPushButton and QRadioButton, together with QPainter, were used to build the GUI, and each control is connected to a corresponding function. The software is easy to operate: only a working mode needs to be selected before pressing the start detection button. When the warning function is enabled and a diseased silkworm is detected, a small window turns red; otherwise it remains green.

Fig. 13 Workflow design of the detection GUI

Fig.13 shows the workflow of the GUI. The GUI offers three working modes: detection on real-time video acquired by a USB camera, and detection on local images and local videos saved on the computer. To run a detection, the user first selects the working mode that suits the application. The pre-trained CA-YOLO v3 model is then called to detect the image or each video frame through a slot function that connects the start button to the detection program. The visualization results are displayed on the software interface at the same time. Finally, the text results for each image or video frame are saved automatically for traceability analysis. Detection can also be paused or terminated during the process.
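The detection loop in this workflow can be sketched without any GUI code. This is a minimal sketch under stated assumptions: the `Detection` record, the CSV layout, and the names `run_detection` and `should_stop` are hypothetical, and the model is represented by any callable that maps a frame to a list of detections. A local image would be passed as a one-element list of frames. Frames from a USB camera or a local video could come from an OpenCV `cv2.VideoCapture` read loop. In PyQt 5 the loop would typically be started from a slot connected to the start button's `clicked` signal. Pausing is not modelled here.

```python
import csv
from dataclasses import dataclass
from typing import Callable, Iterable, List, TextIO, Tuple


@dataclass
class Detection:
    label: str                     # e.g. "healthy" or "diseased" (hypothetical names)
    score: float                   # confidence score
    box: Tuple[int, int, int, int] # (x1, y1, x2, y2) in pixels


# A detector is any callable that maps one frame to its list of detections.
Detector = Callable[[object], List[Detection]]


def run_detection(frames: Iterable[object], detector: Detector, out: TextIO,
                  should_stop: Callable[[], bool] = lambda: False) -> int:
    """Detect every frame, save one CSV row per detection, return frames processed."""
    writer = csv.writer(out)
    writer.writerow(["frame", "label", "score", "x1", "y1", "x2", "y2"])
    processed = 0
    for index, frame in enumerate(frames):
        if should_stop():  # plays the role of the terminate button
            break
        for det in detector(frame):
            # Text results keyed by frame index support later traceability.
            writer.writerow([index, det.label, f"{det.score:.3f}", *det.box])
        processed += 1
    return processed
```

Writing one row per detection, keyed by frame index, means that a saved file alone is enough to trace back when and where a diseased silkworm was reported.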

4. Conclusion and future work

In this research, NPV, the most prevalent silkworm disease, with a high incidence and strong contagiousness, was detected in adult-stage silkworms using deep learning and object detection. Healthy and diseased silkworms were obtained synchronously through rearing and manual infection in actual environments. A dataset in which most images contain several healthy and diseased silkworms was built by placing silkworms on a background and imaging them with a high-resolution smartphone camera. State-of-the-art deep learning architectures, including YOLO v3, ConvNeXt, and Swin-Transformer, were architecturally improved to design the CA-YOLO v3 network for effective and accurate detection of silkworm disease under complex conditions. A detection GUI for silkworm disease was also developed based on PyQt 5. Based on the results, the following specific conclusions can be drawn from this article.

1) This research proposed a detection method for silkworm diseases based on object detection, in contrast to existing studies based on image classification, which do not match the actual situation of high-density silkworm rearing. The proposed method can not only recognize whether a silkworm is diseased or healthy but also locate the coordinates of each silkworm in the image, which can provide technical support for the development of disease-prevention equipment.
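To illustrate how per-silkworm coordinates could be handed to such equipment, the sketch below converts detector boxes into pixel coordinates. It rests on an assumption not stated in the paper: boxes are taken to be in the normalised centre format `(cx, cy, w, h)` common to YOLO label files. The label `"diseased"` and both function names are hypothetical.

```python
from typing import List, Sequence, Tuple

# (label, cx, cy, w, h), coordinates normalised to [0, 1]. This follows the
# common YOLO convention and is an assumption, not the paper's stated format.
NormBox = Tuple[str, float, float, float, float]


def to_pixel_box(cx: float, cy: float, w: float, h: float,
                 img_w: int, img_h: int) -> Tuple[int, int, int, int]:
    """Convert a normalised centre-format box to pixel corners (x1, y1, x2, y2)."""
    x1 = (cx - w / 2) * img_w
    y1 = (cy - h / 2) * img_h
    x2 = (cx + w / 2) * img_w
    y2 = (cy + h / 2) * img_h
    return round(x1), round(y1), round(x2), round(y2)


def diseased_centres(dets: Sequence[NormBox], img_w: int, img_h: int,
                     label: str = "diseased") -> List[Tuple[int, int]]:
    """Return the pixel centre of every detection that carries the given label."""
    return [(round(cx * img_w), round(cy * img_h))
            for name, cx, cy, _w, _h in dets if name == label]
```

For an 800 x 600 image, a diseased detection centred at (0.25, 0.5) maps to pixel (200, 300). Mapping pixels to physical positions on a rearing bed would additionally require camera calibration, which this sketch does not cover.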

2) The designed CA-YOLO v3 contains two major improvements: a) the CA-block was designed based on two state-of-the-art networks, ConvNeXt and Swin-Transformer, and the ECA attention mechanism was introduced into the CA-block to extract key features; b) four stages of stacked CA-blocks replaced DarkNet-53, which significantly reduced the number of training parameters and improved network performance. Experiments on the dataset verified the superiority of CA-YOLO v3 over the original YOLO v3, with a 1.87% higher mAP.
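The ECA mechanism mentioned in item 2 can be illustrated with a forward pass written in plain Python. This sketch follows the published ECA-Net formulation: global average pooling, then a 1-D convolution across channels with an adaptive kernel size and no bias, then a sigmoid gate. It does not show how the mechanism is wired into the CA-block, which the paper does not detail here. In a trained network the kernel `weights` are learned; here they are supplied by hand for illustration.

```python
import math
from typing import List

Channel = List[List[float]]  # one H x W feature map


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size from ECA-Net: |(log2(C) + b) / gamma|, made odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(features: List[Channel], weights: List[float]) -> List[Channel]:
    """Re-weight channels with efficient channel attention (forward pass only).

    1. Global average pooling gives one descriptor per channel.
    2. A 1-D convolution (kernel len(weights), zero padding, no bias) mixes
       each descriptor with its neighbouring channels.
    3. A sigmoid turns the result into per-channel gates that scale the input.
    """
    pad = len(weights) // 2
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in features]
    padded = [0.0] * pad + pooled + [0.0] * pad
    gates = []
    for c in range(len(pooled)):
        z = sum(w * padded[c + j] for j, w in enumerate(weights))
        gates.append(1.0 / (1.0 + math.exp(-z)))
    return [[[v * g for v in row] for row in ch] for ch, g in zip(features, gates)]
```

Because the gate for each channel depends only on a few neighbouring channel descriptors, ECA adds just `k` weights per attention module, e.g. `eca_kernel_size(256) == 5`. This is much cheaper than a fully connected squeeze-and-excitation branch.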

3) We developed a detection GUI for silkworm disease and embedded the trained CA-YOLO v3 model into it, enabling detection on real-time video, local images, and local video. The GUI can save detection results for traceability analysis and display a warning when a diseased silkworm is detected. It can also be used in joint experiments with a silkworm rearing machine under real conditions.

Overall, this study demonstrated the superiority of the proposed CA-YOLO v3 algorithm for detecting silkworm disease in scenes where healthy and diseased silkworms are mixed, at the adult stage of the silkworm. CA-YOLO v3 can be applied in real-time applications, since all images used for model training were collected under real conditions. This research can also provide a theoretical reference for early warning of silkworm diseases and technical support for the development of precision control equipment.

However, our study still has some deficiencies. a) The silkworm density in the dataset built in this experiment is lower than in real rearing environments, and only some growth stages of a single silkworm variety were imaged and detected, so the diversity and richness of the dataset need to be enhanced. b) Object detection is a supervised learning method, which means that all diseased silkworms had to be diagnosed by humans before image annotation was carried out. That a silkworm could be diagnosed by eye indicates obvious morphological characteristics, which in turn means the disease was already at a middle or late stage of infection. Recognition results at that stage are of limited help in cutting pathogen transmission and reducing cocoon losses.

In future work, we plan to collect diseased silkworms at different growth stages and of more varieties to construct a larger dataset for detection research. More importantly, we will focus on the early detection of silkworm diseases, particularly on behavioral differences in the early stage of pathogen infection, so as to realize early warning and accurate prevention of silkworm diseases.

Author contributions

Hongkang Shi: Image acquisition, draft writing. Dingyi Tian: Image acquisition, model training. Shiping Zhu: Image acquisition, paper review, and test guarantee. Linbo Li: Sample collection, software development. Jianmei Wu: Silkworm rearing, writing, and testing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

This study was supported by the National Modern Agricultural Industrial Technology System Special Project (No. CARS-18).
