
1. LinearRegression_


Uploaded by Kaleem


Agenda for Today's Session

- What is Regression?
- Regression Use-case
- Types of Regression: Linear vs Logistic Regression
- What is Linear Regression?
- Finding best fit regression line using Least Square Method
- Checking goodness of fit using R squared Method
- Implementation of Linear Regression using Python
- Linear Regression Algorithm using Python from scratch
- Linear Regression Algorithm using Python (scikit lib)

[Figure: two panels, "Gradient Search" (line slope vs line y-intercept) and "Points and Line"]

Copyright ©, edureka and/or its affiliates. All rights reserved.

What is Regression?

"Regression analysis is a form of predictive modelling technique which investigates the relationship between a dependent and independent variable"

Three major uses for regression analysis are:

- Determining the strength of predictors
- Forecasting an effect
- Trend forecasting

Linear vs Logistic Regression

Basis | Linear Regression | Logistic Regression
Core Concept | The data is modelled using a straight line | The probability of some obtained event is represented as a linear function of a combination of predictor variables
Used with | Continuous Variable | Categorical Variable
Output/Prediction | Value of the variable | Probability of occurrence of event
Accuracy and Goodness of fit | Measured by loss, R squared, Adjusted R squared, etc. | Accuracy, Precision, Recall, F1 score, ROC curve, Confusion Matrix, etc.
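To make the Output/Prediction row concrete, here is a minimal sketch in plain Python. The coefficients `w` and `b` are made-up illustrative values, not taken from the slides, and `sigmoid` is the standard logistic function. A linear model returns the value of the variable itself, while a logistic model passes the same linear combination through the sigmoid to get a probability between 0 and 1.

```python
import math

# Hypothetical coefficients, chosen only for illustration.
w, b = 0.4, 2.4

def linear_predict(x):
    """Linear regression output: a continuous value."""
    return w * x + b

def sigmoid(z):
    """Standard logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def logistic_predict(x):
    """Logistic regression output: probability of the event."""
    return sigmoid(w * x + b)

for x in (-10, 0, 10):
    print(x, linear_predict(x), round(logistic_predict(x), 4))
```

The linear output is unbounded, so it suits a continuous target; the logistic output always stays strictly between 0 and 1, so it can be read as a probability for a categorical target.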

Selection Criteria

- Classification and Regression Capabilities
- Data Quality
- Computational Complexity
- Comprehensible and Transparent

Where is Linear Regression used?

- Evaluating Trends and Sales Estimates
- Analyzing the Impact of Price Changes
- Assessment of risk in financial services and insurance domain

Linear Regression Algorithm

[Figure: Dependent Variable vs Independent Variable, showing the data points, the regression line and the estimated values; the line is chosen to minimize the error]

[Figure: example plotted against Speed, illustrating the relationship between the variables]

Machine Learning Training with Python www.edureka.co/python

Understanding Linear Regression Algorithm

The regression line has the form $y = mx + c$, where $m$ is the slope and $c$ is the y-intercept.

$x$ | $y$ | $x - \bar{x}$ | $y - \bar{y}$ | $(x - \bar{x})^2$ | $(x - \bar{x})(y - \bar{y})$
1 | 3 | -2 | -0.6 | 4 | 1.2
2 | 4 | -1 | 0.4 | 1 | -0.4
3 | 2 | 0 | -1.6 | 0 | 0
4 | 4 | 1 | 0.4 | 1 | 0.4
5 | 5 | 2 | 1.4 | 4 | 2.8
mean: 3 | mean: 3.6 | | | $\sum = 10$ | $\sum = 4$

$$m = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sum (x - \bar{x})^2} = \frac{4}{10} = 0.4$$

The line passes through the mean point $(\bar{x}, \bar{y}) = (3, 3.6)$, so

$$c = \bar{y} - m\bar{x} = 3.6 - 0.4 \times 3 = 2.4$$

[Figure: the data points and the line $y = mx + c$; x-axis: Independent Variable]
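The table arithmetic above can be checked with a short sketch in plain Python. The variable names (`numer`, `denom`, and so on) are illustrative choices, not taken from the slides at this point.

```python
# Data from the table above.
x = [1, 2, 3, 4, 5]
y = [3, 4, 2, 4, 5]

mean_x = sum(x) / len(x)
mean_y = sum(y) / len(y)

# Sum of (x - mean_x)(y - mean_y) and sum of (x - mean_x)^2.
numer = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
denom = sum((xi - mean_x) ** 2 for xi in x)

m = numer / denom          # slope
c = mean_y - m * mean_x    # intercept: the line passes through the means

print(round(m, 4), round(c, 4))  # prints 0.4 2.4
```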

Mean Square Error


m = 0.4
c = 2.4
y = 0.4x + 2.4

For the given m = 0.4 and c = 2.4, let's predict the values of y for x = {1, 2, 3, 4, 5}:

y = 0.4 × 1 + 2.4 = 2.8
y = 0.4 × 2 + 2.4 = 3.2
y = 0.4 × 3 + 2.4 = 3.6
y = 0.4 × 4 + 2.4 = 4.0
y = 0.4 × 5 + 2.4 = 4.4

The error is the distance between the actual and the predicted value.

[Figure: actual vs predicted values of y, with the distance between them marked for each point; x-axis: Independent Variable]
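As a rough illustration of the distance between actual and predicted values, the sketch below recomputes the predictions for the line y = 0.4x + 2.4 and averages the squared distances. The formula used for the mean square error (mean of squared actual minus predicted) is the standard definition and is assumed here, since the slide text does not spell it out.

```python
x = [1, 2, 3, 4, 5]
y = [3, 4, 2, 4, 5]          # actual values
m, c = 0.4, 2.4              # line from the slides

y_pred = [m * xi + c for xi in x]

# Error = actual - predicted, for each point.
errors = [yi - pi for yi, pi in zip(y, y_pred)]

# Mean square error: average of the squared distances.
mse = sum(e ** 2 for e in errors) / len(errors)

print([round(p, 1) for p in y_pred])  # prints [2.8, 3.2, 3.6, 4.0, 4.4]
print(round(mse, 2))                  # prints 0.72
```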

Finding the best fit line

[Figure: two panels, "Gradient Search" (line slope vs line y-intercept (b)) and "Points and Line"; at iteration = 66 the line is y = 1.20x + 0.02]

The algorithm loops over candidate lines to find the one with the minimum error.
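The slides do not spell out the search procedure behind the figure. One common way to loop toward the minimum error is gradient descent on the mean square error, sketched below on the five-point example from the earlier slides. The starting guess, the `learning_rate` and the iteration count are assumptions chosen for this small dataset.

```python
x = [1, 2, 3, 4, 5]
y = [3, 4, 2, 4, 5]
n = len(x)

m, c = 0.0, 0.0          # starting guess (assumption)
learning_rate = 0.05     # step size (assumption, small enough to converge here)

def mse(m, c):
    """Mean square error of the line y = m*x + c on the data."""
    return sum((yi - (m * xi + c)) ** 2 for xi, yi in zip(x, y)) / n

for _ in range(5000):
    # Partial derivatives of the mean square error w.r.t. m and c.
    grad_m = (-2 / n) * sum(xi * (yi - (m * xi + c)) for xi, yi in zip(x, y))
    grad_c = (-2 / n) * sum(yi - (m * xi + c) for xi, yi in zip(x, y))
    # Step against the gradient to reduce the error.
    m -= learning_rate * grad_m
    c -= learning_rate * grad_c

print(round(m, 3), round(c, 3), round(mse(m, c), 3))
```

On this data the loop settles on the same line as the least square formula (m close to 0.4, c close to 2.4), which is what one would expect since both minimize the same squared error.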

Let's check the Goodness of fit

What is R-Square?

- R-squared value is a statistical measure of how close the data are to the fitted regression line
- It is also known as the coefficient of determination, or the coefficient of multiple determination

Calculation of R²

[Figure: Actual vs Predicted Value around the Regression line, comparing the distance actual - mean with the distance predicted - mean; x-axis: Independent Variable]

This is nothing but

$$R^2 = \frac{\sum (y_p - \bar{y})^2}{\sum (y - \bar{y})^2}$$

$x$ | $y$ | $y - \bar{y}$ | $(y - \bar{y})^2$ | $y_p$ | $y_p - \bar{y}$
1 | 3 | -0.6 | 0.36 | 2.8 | -0.8
2 | 4 | 0.4 | 0.16 | 3.2 | -0.4
3 | 2 | -1.6 | 2.56 | 3.6 | 0
4 | 4 | 0.4 | 0.16 | 4.0 | 0.4
5 | 5 | 1.4 | 1.96 | 4.4 | 0.8
| mean: 3.6 | | $\sum = 5.2$ | |

$R^2 \approx 0.3$

[Figure: Actual vs Predicted Value; x-axis: Independent Variable]
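The R² value for the five-point example can be reproduced with a short sketch in plain Python. It uses the ratio of the summed squared distances (predicted - mean) to (actual - mean); the names `explained` and `total` are illustrative labels, not terms from the slides.

```python
x = [1, 2, 3, 4, 5]
y = [3, 4, 2, 4, 5]
m, c = 0.4, 2.4

mean_y = sum(y) / len(y)
y_pred = [m * xi + c for xi in x]

# Distance predicted - mean, squared and summed.
explained = sum((pi - mean_y) ** 2 for pi in y_pred)
# Distance actual - mean, squared and summed.
total = sum((yi - mean_y) ** 2 for yi in y)

r2 = explained / total
print(round(explained, 2), round(total, 2), round(r2, 4))  # prints 1.6 5.2 0.3077
```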

[Figure: Calculation of R² for another fitted line; x-axis: Independent Variable]

$R^2 \approx 0.02$

[Figure: Calculation of R² for a poorly fitting line; x-axis: Independent Variable]

Low R-squared

Let's learn to code

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
plt.rcParams['figure.figsize'] = (20.0, 10.0)
# Reading Data
data = pd.read_csv('headbrain.csv')
print(data.shape)
data.head()

(237, 4)

   Gender  Age Range  Head Size(cm^3)  Brain Weight(grams)
0       1          1             4512                 1530
1       1          1             3738                 1297
2       1          1             4261                 1335
3       1          1             3777                 1282
4       1          1             4177                 1590

# Collecting X and Y
X = data['Head Size(cm^3)'].values
Y = data['Brain Weight(grams)'].values

# Mean of X and Y
mean_x = np.mean(X)
mean_y = np.mean(Y)
# Total number of values
m = len(X)
# Using the formula to calculate b1 and b0
numer = 0
denom = 0
for i in range(m):
    numer += (X[i] - mean_x) * (Y[i] - mean_y)
    denom += (X[i] - mean_x) ** 2
b1 = numer / denom
b0 = mean_y - (b1 * mean_x)
# Print coefficients
print(b1, b0)

0.26342933948939945 325.57342104944223

Here b1 is the slope (m) and b0 is the intercept (c).
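The explicit loop can also be written with NumPy array operations. The sketch below is one possible vectorized form, not the notebook's own code: `fit_line` is a hypothetical helper name, and the five-point data from the earlier slides stands in for headbrain.csv so the block runs on its own. `np.polyfit` with degree 1 is used only as a cross-check.

```python
import numpy as np

def fit_line(X, Y):
    """Return (b1, b0): slope and intercept of the least squares line."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    dx = X - X.mean()
    dy = Y - Y.mean()
    b1 = np.sum(dx * dy) / np.sum(dx ** 2)
    b0 = Y.mean() - b1 * X.mean()
    return b1, b0

# Stand-in data (the five points from the earlier slides), not headbrain.csv.
xs = [1, 2, 3, 4, 5]
ys = [3, 4, 2, 4, 5]

b1, b0 = fit_line(xs, ys)
print(round(b1, 4), round(b0, 4))

# Cross-check against NumPy's degree-1 polynomial fit,
# which returns the coefficients highest power first.
slope, intercept = np.polyfit(xs, ys, 1)
print(round(slope, 4), round(intercept, 4))
```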


# Plotting values and Regression Line
max_x = np.max(X) + 100
min_x = np.min(X) - 100
# Calculating line values x and y
x = np.linspace(min_x, max_x, 1000)
y = b0 + b1 * x
# Plotting Line
plt.plot(x, y, color='#58b970', label='Regression Line')
# Plotting Scatter Points
plt.scatter(X, Y, c='#ef5423', label='Scatter Plot')
plt.xlabel('Head Size in cm3')
plt.ylabel('Brain Weight in grams')
plt.legend()
plt.show()

[Figure: scatter plot of the data with the regression line; x-axis: Head Size in cm3, y-axis: Brain Weight in grams]

# Calculating the R2 score
ss_t = 0
ss_r = 0
for i in range(m):
    y_pred = b0 + b1 * X[i]
    ss_t += (Y[i] - mean_y) ** 2
    ss_r += (Y[i] - y_pred) ** 2
r2 = 1 - (ss_r / ss_t)
print(r2)

0.6393117199570003
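The notebook computes R² as 1 - ss_r/ss_t, while the earlier slide writes it as the ratio of the predicted-minus-mean distances to the actual-minus-mean distances. For a least squares line with an intercept the two forms agree. The sketch below checks this on the five-point example from the slides; `r2_two_ways` and `ss_e` are hypothetical names introduced here.

```python
def r2_two_ways(x, y):
    """Fit the least squares line, then compute R^2 in both forms."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    b1 = (sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
          / sum((xi - mean_x) ** 2 for xi in x))
    b0 = mean_y - b1 * mean_x
    y_pred = [b0 + b1 * xi for xi in x]

    ss_t = sum((yi - mean_y) ** 2 for yi in y)               # actual - mean
    ss_r = sum((yi - pi) ** 2 for yi, pi in zip(y, y_pred))  # actual - predicted
    ss_e = sum((pi - mean_y) ** 2 for pi in y_pred)          # predicted - mean

    return 1 - ss_r / ss_t, ss_e / ss_t

a, b = r2_two_ways([1, 2, 3, 4, 5], [3, 4, 2, 4, 5])
print(round(a, 4), round(b, 4))  # prints 0.3077 0.3077
```

The agreement relies on the line being the least squares fit; for an arbitrary line the two expressions can differ.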


from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
# Cannot use Rank 1 matrix in scikit learn
X = X.reshape((m, 1))
# Creating Model
reg = LinearRegression()
# Fitting training data
reg = reg.fit(X, Y)
# Predicting Y for the training data
Y_pred = reg.predict(X)
# Calculating R2 score
r2_score = reg.score(X, Y)
print(r2_score)
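For a version that runs without headbrain.csv, the same scikit-learn calls can be tried on the five-point example from the earlier slides. This is a sketch under that assumption; it also shows one possible use of the imported `mean_squared_error`, which the notebook code above does not call.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# Stand-in data: the five points from the earlier slides, not headbrain.csv.
X = np.array([1, 2, 3, 4, 5], dtype=float).reshape(-1, 1)  # 2-D: (samples, features)
Y = np.array([3, 4, 2, 4, 5], dtype=float)

reg = LinearRegression().fit(X, Y)
Y_pred = reg.predict(X)

mse = mean_squared_error(Y, Y_pred)
rmse = np.sqrt(mse)

print(reg.coef_[0], reg.intercept_)   # slope and intercept
print(mse, rmse, reg.score(X, Y))     # score() returns R^2
```

On this data the fitted slope and intercept should match the hand calculation (about 0.4 and 2.4), and `score` should match the R² of about 0.3 from the slides.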

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5834)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (903)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (541)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (349)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (823)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (405)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- 9. pca and boostingDocument39 pages9. pca and boostingKaleemNo ratings yet

- 5.6. KNN and NaiveBayesDocument46 pages5.6. KNN and NaiveBayesKaleemNo ratings yet

- 8. clustering and associateruleminingDocument51 pages8. clustering and associateruleminingKaleemNo ratings yet

- 10.reinforcement learningDocument25 pages10.reinforcement learningKaleemNo ratings yet

- 3.4. Decision ? Edureka Random Forest_Document75 pages3.4. Decision ? Edureka Random Forest_KaleemNo ratings yet

- 7. SVM EdurekaDocument22 pages7. SVM EdurekaKaleemNo ratings yet

- 2. Binary Tree 450Document57 pages2. Binary Tree 450KaleemNo ratings yet

- 2. LogisticRegression_Edureka_Document49 pages2. LogisticRegression_Edureka_KaleemNo ratings yet

- Node Js Complete Interview prep PrintDocument46 pagesNode Js Complete Interview prep PrintKaleemNo ratings yet

- Bit Manipulation HndwrittenDocument10 pagesBit Manipulation HndwrittenKaleemNo ratings yet

- Leetcode Patterns CompanyWiseDocument12 pagesLeetcode Patterns CompanyWiseKaleemNo ratings yet

- 3. Microsoft Tree QuestionsDocument29 pages3. Microsoft Tree QuestionsKaleemNo ratings yet

- E-Way Bill: E-Way Bill No: E-Way Bill Date: Generated By: Valid From: Valid UntilDocument1 pageE-Way Bill: E-Way Bill No: E-Way Bill Date: Generated By: Valid From: Valid UntilKaleemNo ratings yet

- 500 Verbs QuraanDocument16 pages500 Verbs QuraanKaleemNo ratings yet

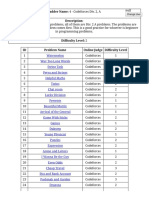

- A2oj Ladders AbcdeDocument24 pagesA2oj Ladders AbcdeKaleemNo ratings yet

- DSA Sheet by Arsh Goyal Upto Linked List SolutionDocument80 pagesDSA Sheet by Arsh Goyal Upto Linked List SolutionKaleem100% (1)