Cs8083 Unit III Notes

Uploaded by

Indhu Rithik

CS8083 UNIT III MCP

UNIT III SHARED MEMORY PROGRAMMING WITH OpenMP

OpenMP Execution Model – Memory Model – OpenMP Directives – Work-sharing Constructs – Library Functions – Handling Data and Functional Parallelism – Handling Loops – Performance Considerations.

INTRODUCTION:

Shared memory is a common memory address space that can be accessed simultaneously by more than one program. A multicore processor is a kind of shared-memory multiprocessor in which all cores share the same address space. A process can be divided into many small parts, each assigned to one core of the multicore system, so that the process executes in parallel on multiple cores.

The smallest sequence of instructions that can be scheduled for execution on a core is called a thread. Executing several threads in parallel is called multithreading.

OpenMP

OpenMP is an API for shared-memory parallel programming. The “MP” in OpenMP stands for “multiprocessing”. OpenMP is designed for systems in which each thread or process can potentially have access to all available memory.

OpenMP identifies parallel regions as blocks of code that may run in parallel.

It is characterized by two kinds of parallelism

• Thread-based parallelism: the work is carried out by multiple threads within one process

• Explicit parallelism: the programmer explicitly marks the regions to be parallelized

3.1 OPENMP EXECUTION MODEL

OpenMP provides a directive-based shared-memory API. In C and C++, this means that there are special preprocessor instructions known as pragmas.

The #pragma preprocessor directive gives the compiler additional information, which the compiler uses to provide special features. Pragmas in C and C++ start with

#pragma

B. Shanmuga Sundari, AP/CSE 1

Example: Openmp program
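The program listing for this example did not survive in these notes. The sketch below is a reconstruction that is consistent with the output and the explanation that follow; it is not the original listing. The function names hello, parse_thread_count and run_hello are assumptions chosen for illustration, and the _OPENMP checks let the code fall back to a single thread when the compiler has no OpenMP support.

```c
#include <stdio.h>
#include <stdlib.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Executed by every thread in the team: print the rank and the team size. */
void hello(void) {
#ifdef _OPENMP
    int my_rank = omp_get_thread_num();
    int thread_count = omp_get_num_threads();
#else
    int my_rank = 0;        /* no OpenMP: one thread with rank 0 */
    int thread_count = 1;
#endif
    printf("Hello from thread %d of %d\n", my_rank, thread_count);
}

/* Convert a command-line argument to a thread count with strtol.
   Returns 1 when the text is not a positive number. */
int parse_thread_count(const char *text) {
    long value = strtol(text, NULL, 10);
    return value > 0 ? (int)value : 1;
}

/* Fork a team of at most thread_count threads; each one calls hello().
   Returns the number of greetings that were printed. */
int run_hello(int thread_count) {
    int greetings = 0;
    #pragma omp parallel num_threads(thread_count)
    {
        hello();
        #pragma omp atomic
        greetings++;
    }
    return greetings;
}
```

A main function would typically call run_hello(parse_thread_count(argv[1])) after checking that an argument was supplied.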

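Compiling and running OpenMP programs

To compile this with gcc we need to include the -fopenmp option, for example (the file name omp_hello.c is only illustrative):

gcc -g -Wall -fopenmp -o omp_hello omp_hello.c

To run the program, we specify the number of threads on the command line. For example, to run the program with four threads we type

./omp_hello 4

Output (the order of the lines can change from run to run, because the threads compete for access to stdout)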

Hello from thread 0 of 4

Hello from thread 1 of 4

Hello from thread 2 of 4

Hello from thread 3 of 4

Explanation:

▪ omp.h is the OpenMP header file

▪ The strtol() function, declared in stdlib.h, is used to get the number of threads from the command line.

Syntax:

long strtol(const char *str, char **endptr, int base);

▪ Where

▪ The first argument is the string to convert

▪ The last argument is the numeric base in which the string is represented

▪ The second argument is passed a NULL pointer, since it is not used here

▪ The parallel pragma says the program should start a number of threads equal to the value passed in on the command line.

▪ OpenMP pragmas always begin with

# pragma omp

▪ The first directive is a parallel directive; it specifies that the structured block of code that follows should be executed by multiple threads.

▪ #pragma omp parallel

▪ An OpenMP construct is defined to be a compiler directive plus a block of code

▪ A structured block is a single statement or a compound statement with a single entry at the top and a single exit at the bottom.

▪ Branching into or out of a structured block is not allowed

▪ The number of threads that run the following structured block of code is determined by the run-time system. If no other threads have been started, the system will typically run one thread on each available core.

▪ Usually the number of threads is specified on the command line, so we modify the parallel directive with the num_threads clause.

▪ A clause in OpenMP is just some text that modifies a directive. The num_threads clause can be added to a parallel directive. It allows the programmer to specify the number of threads that should execute the following block:

▪ # pragma omp parallel num_threads(thread_count)

Thread is short for thread of execution. The name is meant to suggest a sequence of

statements executed by a program. Threads are typically started or forked by a process,

and they share most of the resources of the process that starts them, but each thread has

its own stack and program counter.

When a thread completes execution it joins the process that started it. This

terminology comes from diagrams that show threads as directed lines.

In OpenMP, the collection of threads executing the parallel block (the original thread and the new threads) is called a team, the original thread is called the master, and the additional threads are called slaves.

When the block of code is completed (in our example, when the threads return from the call to Hello), there’s an implicit barrier. This means that a thread that has completed the block of code will wait for all the other threads in the team to complete the block.

When all the threads have completed the block, the slave threads will terminate and the master thread will continue executing the code that follows the block.

Error Checking

It is a good idea to check for errors while writing code. For example, after the call to strtol,

▪ Check that the value is positive.

▪ Check that the number of threads actually created by the parallel directive is the same as thread_count

Another potential source of problems is the compiler.

▪ If the compiler doesn’t support OpenMP, it will just ignore the parallel directive.

▪ However, the attempt to include omp.h and the calls to omp_get_thread_num and omp_get_num_threads will cause errors.

To handle these problems, we can check whether the preprocessor macro _OPENMP is defined. If it is defined, we can include omp.h and make the calls to the OpenMP functions.

We might make the following modifications to our program. Instead of simply including omp.h in the line

#include <omp.h>

we can check for the definition of _OPENMP before trying to include it:

#ifdef _OPENMP

# include <omp.h>

#endif

Also, instead of just calling the OpenMP functions, we can first check whether _OPENMP is defined:

# ifdef _OPENMP

int my_rank = omp_get_thread_num();

int thread_count = omp_get_num_threads();

# else

int my_rank = 0;

int thread_count = 1;

# endif

Here, if OpenMP isn’t available, the code will execute with one thread having rank 0.

3.2 MEMORY MODEL

OpenMP assumes that there is a place for storing and retrieving data that is available to all threads, called the memory. Each thread may also have a temporary view of memory, which it can use instead of memory to hold data temporarily when the data need not be seen by other threads.

Data can move between memory and a thread's temporary view, but can never

move between temporary views directly, without going through memory. Each

variable used within a parallel region is either shared or private. The variable names

used within a parallel construct relate to the program variables visible at the point of

the parallel directive, referred to as their "original variables". Each shared variable

reference inside the construct refers to the original variable of the same name. For

each private variable, a reference to the variable name inside the construct refers to a

variable of the same type and size as the original variable, but private to the thread.

That is, it is not accessible by other threads.

There are two aspects of memory system behavior relating to shared memory

parallel programs: coherence and consistency.

Coherence refers to the behavior of the memory system when a single memory

location is accessed by multiple threads.

Consistency refers to the ordering of accesses to different memory locations,

observable from various threads in the system.

Device data Environment:

When an OpenMP program begins, each device has an initial device data environment.

The initial device data environment for the host device is the data environment associated

with the initial task region.

Directives that accept data mapping attribute clauses determine how an original variable

is mapped to a corresponding variable in a device data environment. The original variable

is the variable with the same name that exists in the data environment of the task that

encounters the directive.

The Flush operation

A buffer flush is the transfer of computer data from a temporary storage area to the

computer's permanent memory. It defines a sequence point at which a thread is

guaranteed to see a consistent view of memory. The OpenMP flush operation enforces

consistency between the temporary view and memory.

The flush operation is applied to a set of variables called the flush-set. This operation

allows one thread to write a value of a variable and the other thread can read the value of

that variable. In order to achieve this, the programmer should ensure that the flush

operations performed by two threads are carried out in specified order.

A flush also causes any values of the flush set variables that were captured in the

temporary view, to be discarded, so that later reads for those variables will come directly

from memory. A flush without a list of variable names flushes all variables visible at that

point in the program. A flush with a list flushes only the variables in the list. The

OpenMP flush operation is the only way in an OpenMP program, to guarantee that a

value will move between two threads.

In order to move a value from one thread to a second thread, OpenMP requires these four

actions in exactly the following order:

1. the first thread writes the value to the shared variable,

2. the first thread flushes the variable.

3. the second thread flushes the variable and

4. the second thread reads the variable.
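The four steps above can be sketched in C as follows. This is a minimal illustration under stated assumptions, not a definitive pattern: the function name pass_value and the variables data and flag are hypothetical, and the atomic directives are an addition beyond the four listed steps, used so that polling flag is not itself a data race. If the runtime provides fewer than two threads, the value is simply returned.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Thread 0 publishes a value; thread 1 waits for it and reads it.
   Returns the value that was received. */
int pass_value(int value) {
    int data = 0;        /* shared: the value being handed over  */
    int flag = 0;        /* shared: becomes 1 once data is ready */
    int received = -1;
    int team_size = 1;

    #pragma omp parallel num_threads(2) shared(data, flag, received, team_size)
    {
#ifdef _OPENMP
        int rank = omp_get_thread_num();
        #pragma omp single
        team_size = omp_get_num_threads();
        /* implicit barrier after single: all threads now see team_size */
#else
        int rank = 0;
#endif
        if (team_size == 2) {
            if (rank == 0) {
                data = value;              /* 1. first thread writes   */
                #pragma omp flush          /* 2. first thread flushes  */
                #pragma omp atomic write
                flag = 1;
                #pragma omp flush(flag)
            } else {
                int ready = 0;
                while (!ready) {           /* wait until flag is set   */
                    #pragma omp flush(flag)
                    #pragma omp atomic read
                    ready = flag;
                }
                #pragma omp flush          /* 3. second thread flushes */
                received = data;           /* 4. second thread reads   */
            }
        }
    }
    if (team_size != 2)
        received = value;                  /* no second thread to pass to */
    return received;
}
```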

A flush of all visible variables is implied 1) in a barrier region, 2) at entry and

exit from parallel, critical and ordered regions, 3) at entry and exit from combined

parallel work-sharing regions, and 4) during lock API routines.

Another aspect of the memory model is the accessibility of various memory locations.

OpenMP has three types of accessibility: shared, private and threadprivate. Shared

variables are accessible by all threads of a thread team and any of their descendant

threads in nested parallel regions. Access to private variables is restricted. If a private

variable X is created for one thread upon entry to a parallel region, the sibling threads in

the same team, and their descendant threads, must not access it.

However, if the thread for which X was created encounters a new parallel directive

(becoming the master thread for the inner team), it is permissible for the descendant

threads in the inner team to access X, either directly as a shared variable, or through a

pointer.

The difference between access by sibling threads and access by the descendant threads is

that the variable X is guaranteed to be still available to descendant threads, while it might

be popped off the stack before siblings can access it. For a threadprivate variable, only the thread to which it is private may access it, regardless of nested parallelism.

3.3 OPENMP DIRECTIVES

Each OpenMP directive starts with #pragma omp

Syntax:

#pragma omp directive-name [clause[ [,] clause] ...] newline

Where

#pragma omp – Required for all OpenMP C/C++ directives

directive-name – A valid OpenMP directive. Must appear after the pragma and before any clauses

[clause[ [,] clause] ...] – Optional. Clauses can be in any order and repeated as necessary unless otherwise restricted

newline – Required. Precedes the structured block which is enclosed by this directive.

General rules:

▪ Case sensitive

▪ Only one directive name can be specified per directive

▪ Applies to the succeeding structured block or an OpenMP construct

▪ The order in which clauses appear in directives is not significant

Parallel Constructs

• Defines Parallel region

• Code that will be executed by multiple threads in parallel

Syntax:

#pragma omp parallel [clause..] new line

Structured block

Clause

For general attributes:

Clause          Description

if              Specifies a condition; the region is executed in parallel only if the condition is true, and serially otherwise.

num_threads     Sets the number of threads in a thread team.

ordered         Required on a for or parallel for directive if an ordered directive is to be used in the loop.

schedule        Applies to the for directive; describes how loop iterations are divided among the threads.

nowait          Removes the barrier implied at the end of a work-sharing directive.

For data-sharing attributes:

Clause          Description

private         Declares the variables in list to be private to each thread in a team.

firstprivate    Same as private, but the copy of each variable in the list is initialized using the value of the original variable existing before the construct.

lastprivate     Same as private, but the original variables in list are updated using the values assigned to the corresponding private variables in the last iteration of the for loop or in the last section construct.

shared          Specifies that one or more variables should be shared among all threads.

default         Specifies the behavior of unscoped variables in a parallel region.

reduction       Specifies a reduction-identifier and one or more list items. A reduction-identifier is either an id-expression or one of the following operators: +, -, *, &, |, ^, && and ||

copyin          Specifies that the master thread's data values be copied to the threadprivate copies of the variables specified in list at the beginning of the parallel region.

copyprivate     Uses private variables in list to broadcast values, or pointers to shared objects, from one member of a team to the other members at the end of a single construct.
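As a small illustration of the data-sharing clauses above, the sketch below combines private, firstprivate and reduction on one loop. The function name scaled_sum and its parameters are hypothetical and serve only this example.

```c
/* Returns scale * (1 + 2 + ... + n), computed with data-sharing clauses. */
long scaled_sum(int n, long scale) {
    long total = 0;   /* reduction: each thread sums into a private copy that
                         starts at 0; the copies are added together at the end */
    long term;        /* private: per-thread scratch value, uninitialized on entry */

    /* firstprivate(scale): each thread's copy starts with the caller's value */
    #pragma omp parallel for private(term) firstprivate(scale) reduction(+:total)
    for (int i = 1; i <= n; i++) {
        term = scale * i;
        total += term;
    }
    return total;
}
```

Without the reduction clause, total would be a shared variable updated by several threads at once, and the result could be wrong.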

Example:

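#include <stdio.h>
#include <omp.h>

int main(void)
{
    /* Fork a team of four threads; each thread runs the block once */
    #pragma omp parallel num_threads(4)
    {
        int i = omp_get_thread_num();
        printf("Hello from thread %d\n", i);
    }
    return 0;
}

Output (the order of the lines may vary between runs):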

Hello from thread 0

Hello from thread 1

Hello from thread 2

Hello from thread 3

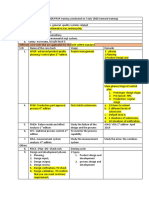

3.4 WORK SHARING CONSTRUCTS

• A work-sharing construct divides the execution of the enclosed code region

among the members of the team that encounter it.

• Work-sharing constructs do not launch new threads

• There is no implied barrier upon entry to a work-sharing construct, however there

is an implied barrier at the end of a work sharing construct.

Types of work sharing construct

Restrictions:

• A work-sharing construct must be enclosed dynamically within a parallel region in order

for the directive to execute in parallel.

• Work-sharing constructs must be encountered by all members of a team or none at all

• Successive work-sharing constructs must be encountered in the same order by all

members of a team

1. DO / for Directive

Splits the for loop so that each thread in the current team handles a different portion of the loop

Represents a type of ‘data parallelism’

#pragma omp for [clause ...] newline

schedule (type [,chunk])

ordered

private (list)

firstprivate (list)

lastprivate (list)

shared (list)

reduction (operator: list)

collapse (n)

nowait

for_loop

Clauses:

SCHEDULE: Describes how iterations of the loop are divided among the threads in the

team. The default schedule is implementation dependent.

STATIC

Loop iterations are divided into pieces of size chunk and then statically assigned to

threads. If chunk is not specified, the iterations are evenly (if possible) divided

contiguously among the threads.

DYNAMIC

Loop iterations are divided into pieces of size chunk, and dynamically scheduled among

the threads; when a thread finishes one chunk, it is dynamically assigned another.

The default chunk size is 1.

GUIDED

Iterations are dynamically assigned to threads in blocks as threads request them until no

blocks remain to be assigned. Similar to DYNAMIC except that the block size decreases

each time a parcel of work is given to a thread.

The size of the initial block is proportional to:

number_of_iterations / number_of_threads

Subsequent blocks are proportional to

number_of_iterations_remaining / number_of_threads

The chunk parameter defines the minimum block size. The default chunk size is 1.

RUNTIME

The scheduling decision is deferred until runtime by the environment variable

OMP_SCHEDULE. It is illegal to specify a chunk size for this clause.

AUTO

The scheduling decision is delegated to the compiler and/or runtime system.

▪ NOWAIT / nowait: If specified, then threads do not synchronize at the end of the parallel loop.

▪ ORDERED: Specifies that the iterations of the loop must be executed as they

would be in a serial program.

▪ COLLAPSE: Specifies how many loops in a nested loop should be collapsed into

one large iteration space and divided according to the schedule clause.

Restrictions:

• The loop cannot be a while loop or a loop without loop control. Also, the loop iteration variable must be an integer and the loop control parameters must be the same for all threads.

• Program correctness must not depend upon which thread executes a particular iteration.

• It is illegal to branch (goto) out of a loop associated with a for directive.

• The chunk size must be specified as a loop invariant integer expression, as there is

no synchronization during its evaluation by different threads.

• ORDERED, COLLAPSE and SCHEDULE clauses may appear once each.

Example

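#include <stdio.h>
#include <omp.h>

int main(void)
{
    #pragma omp parallel
    {
        /* The iterations of the loop are divided among the threads of the team */
        #pragma omp for
        for (int n = 0; n < 10; ++n)
            printf("%d", n);
    }
    printf("\n");
    return 0;
}

Output (one possible result; the order depends on the schedule and on thread timing)

0567182349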

2. Sections directive

▪ Identifies a non-iterative work sharing construct

▪ Identifies code section to be divided among all threads

▪ Each section is executed by a thread

▪ Independent section directives are nested within a SECTIONS directive

#pragma omp sections [clause ... newline

private (list)

firstprivate (list)

lastprivate (list)

reduction (operator: list)

nowait ]

{

#pragma omp section newline

structured_block

#pragma omp section newline

structured_block

}

Clauses:

• There is an implied barrier at the end of a SECTIONS directive, unless

the NOWAIT/nowait clause is used

Example

#include <stdio.h>
#include <omp.h>

int main(void)
{
    #pragma omp parallel sections num_threads(4)
    {
        /* First section: the section directive is optional here */
        printf("Hello from thread %d\n", omp_get_thread_num());

        #pragma omp section
        printf("Hello from thread %d\n", omp_get_thread_num());
    }
    return 0;
}

Output (the thread numbers may differ between runs):

Hello from thread 0

Hello from thread 0

3. Single Directive

Specifies that the associated structured block is executed by only one of the threads in the

team

Syntax:

#pragma omp single [clause ...] newline

private (list)

firstprivate (list)

nowait

structured_block

Clauses:

• Threads in the team that do not execute the SINGLE directive, wait at the end of

the enclosed code block, unless a NOWAIT/nowait clause is specified.

Restrictions:

It is illegal to branch into or out of a SINGLE block.

Example:

#include <stdio.h>
#include <omp.h>

int main(void)
{
    #pragma omp parallel num_threads(2)
    {
        #pragma omp single
        /* Only a single thread reads the input */
        printf("read input\n");

        /* All threads in the team compute the results */
        printf("compute results\n");

        #pragma omp single
        /* Only a single thread writes the output */
        printf("write output\n");
    }
    return 0;
}

Output

read input

compute results

compute results

write output

Combined Parallel Work-Sharing Constructs

Used to specify a parallel region that contains only one work sharing construct

Example: parallel for directive

#pragma omp parallel for [clause ...] newline

for_loop

parallel sections directive

Provides a shortcut form for specifying a parallel region containing one sections directive

#pragma omp parallel sections [clause ...] newline

3.5 HANDLING DATA AND FUNCTIONAL PARALLELISM

1. Data Parallelism

▪ Data parallelism is a form of parallelization across multiple processors in

parallel computing environments.

▪ It focuses on distributing the data across different nodes, which operate on the data

in parallel. It can be applied on regular data structures like arrays and matrices by

working on each element in parallel.

▪ It contrasts to task parallelism as another form of parallelism.

▪ In a multiprocessor system executing a single set of instructions (SIMD), data

parallelism is achieved when each processor performs the same task on different

pieces of distributed data.

▪ In some situations, a single execution thread controls operations on all pieces of

data. In others, different threads control the operation, but they execute the same

code.

Example

Below is the sequential pseudo-code for multiplication and addition of two matrices

where the result is stored in the matrix C. The pseudo-code for multiplication calculates

the dot product of two matrices A, B and stores the result into the output matrix C.

If the following programs were executed sequentially, the time taken to calculate the result would be O(n^3) for multiplication and O(n) for addition.

//Matrix Multiplication

for(i=0; i<row_length_A; i++)

{

for (k=0; k<column_length_B; k++)

{

sum = 0;

for (j=0; j<column_length_A; j++)

{

sum += A[i][j]*B[j][k];

}

C[i][k]=sum;

}

}

//Array addition

for(i=0;i<n;i++)

{

c[i]=a[i]+b[i];

}

An OpenMP directive, "omp parallel for" instructs the compiler to execute the code in the

for loop in parallel. For multiplication, we can divide matrix A and B into blocks along

rows and columns respectively. This allows us to calculate every element in matrix C

individually thereby making the task parallel.

Matrix multiplication in parallel

#pragma omp parallel for schedule(dynamic,1) collapse(2) private(j, sum)
for (i = 0; i < row_length_A; i++) {
    for (k = 0; k < column_length_B; k++) {
        /* j and sum are private, so threads do not overwrite each other's partial sums */
        sum = 0;
        for (j = 0; j < column_length_A; j++) {
            sum += A[i][j] * B[j][k];
        }
        C[i][k] = sum;
    }
}

It can be observed from the example that a lot of processors will be required as the matrix

sizes keep on increasing. Keeping the execution time low is the priority but as the matrix

size increases, we are faced with other constraints like complexity of such a system and

its associated costs. Therefore, constraining the number of processors in the system, we

can still apply the same principle and divide the data into bigger chunks to calculate the

product of two matrices.

For addition of arrays in a data parallel implementation, let's assume a more modest system with two Central Processing Units (CPUs), A and B.

▪ CPU A could add all elements from the top half of the arrays

▪ while CPU B could add all elements from the bottom half of the arrays.

▪ Since the two processors work in parallel, the job of performing array addition

would take one half the time of performing the same operation in serial using one

CPU alone.

The program expressed in pseudocode below—which applies some arbitrary operation,

foo, on every element in the array d—illustrates data parallelism:

if CPU = "a"

lower_limit := 1

upper_limit := round(d.length/2)

else if CPU = "b"

lower_limit := round(d.length/2) + 1

upper_limit := d.length

for i from lower_limit to upper_limit by 1

foo(d[i])
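With OpenMP the manual split into lower and upper limits does not have to be written by hand. The sketch below is one way the same idea might look in C: a parallel for with a static schedule gives each thread a contiguous part of the array. The body of foo is a hypothetical stand-in, and unlike the pseudocode this version stores the result back into the array so that the effect can be observed.

```c
/* Hypothetical element-wise operation standing in for foo. */
double foo(double x) {
    return 2.0 * x + 1.0;
}

/* Apply foo to every element of d. With schedule(static) and no chunk size,
   the n iterations are split into contiguous blocks, one per thread. */
void apply_foo(double *d, int n) {
    #pragma omp parallel for schedule(static)
    for (int i = 0; i < n; i++)
        d[i] = foo(d[i]);
}
```

Each iteration touches only its own element d[i], so no iteration depends on another and the result is the same for any number of threads.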

Steps to parallelization

The process of parallelizing a sequential program can be broken down into four discrete steps: decomposition, assignment, orchestration and mapping.

2. Functional Parallelism

OpenMP allows us to assign different threads to different portions of code (functional

parallelism)

Functional Parallelism Example

v = alpha();

w = beta();

x = gamma(v, w);

y = delta();

printf ("%6.2f\n", epsilon(x,y));

May execute alpha,beta, and delta in parallel.

parallel sections Pragma

Precedes a block of k blocks of code that may be executed concurrently by k threads

Syntax:

#pragma omp parallel sections

section Pragma

Precedes each block of code within the encompassing block preceded by the parallel

sections pragma

May be omitted for first parallel section after the parallel sections pragma

Syntax:

#pragma omp section

Example of parallel sections

#pragma omp parallel sections

{

#pragma omp section /* Optional */

v = alpha();

#pragma omp section

w = beta();

#pragma omp section

y = delta();

}

x = gamma(v, w);

printf ("%6.2f\n", epsilon(x,y));
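To try the fragment above, the five functions need bodies. The sketch below supplies trivial, hypothetical ones that return constants, and wraps the parallel sections block in a function named compute (also an assumption). The function gamma is renamed gamma_fn here to avoid a clash with a compiler built-in of the same name.

```c
/* Hypothetical stand-ins for the functions named in the example. */
double alpha(void) { return 1.0; }
double beta(void)  { return 2.0; }
double delta(void) { return 3.0; }
double gamma_fn(double v, double w) { return v + w; }
double epsilon(double x, double y)  { return x * y; }

/* alpha, beta and delta are independent, so each gets its own section. */
double compute(void) {
    double v = 0.0, w = 0.0, x = 0.0, y = 0.0;

    #pragma omp parallel sections
    {
        #pragma omp section
        v = alpha();

        #pragma omp section
        w = beta();

        #pragma omp section
        y = delta();
    }
    /* Implicit barrier at the end of the sections: v, w and y are ready here */
    x = gamma_fn(v, w);
    return epsilon(x, y);
}
```

Each section writes a different variable, so the three sections need no synchronization among themselves.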

Another Approach

Execute alpha and beta in parallel.

Execute gamma and delta in parallel.

sections Pragma

Appears inside a parallel block of code

Has same meaning as the parallel sections pragma

If multiple sections pragmas inside one parallel block, may reduce fork/join costs

Use of sections Pragma

#pragma omp parallel

{

#pragma omp sections

{

v = alpha();

#pragma omp section

w = beta();

}

#pragma omp sections

{

x = gamma(v, w);

#pragma omp section

y = delta();

}

}

printf ("%6.2f\n", epsilon(x,y));

3.6 HANDLING LOOPS

During parallelization, the looping statements in OpenMP programs have to be handled very carefully to avoid wrong results. The following section describes suitable ways to handle for loops in OpenMP.

Scheduling Loops

Assigning iterations to threads.

For example, if a for loop with 10 iterations is run by 5 OpenMP threads, the loop iterations can be partitioned as follows

Thread1: 1,2

Thread2: 3,4

Thread3: 5,6

Thread4: 7,8

Thread5: 9,10

Iterations one and two are assigned to Thread1, iterations three and four are assigned to Thread2, and so on, until iterations nine and ten are assigned to Thread5.

The schedule clause can be applied to the for directive.

The Schedule Clause:

The schedule clause has the format:

schedule(<type of schedule>,<chunk size>)

Where type of schedule is one of the following:

1. static

2. dynamic

3. guided

4. auto

5. runtime

And <chunk size> is the number of consecutive iterations in each block handed to a thread. It takes a positive integer value. The schedule types are described below:

Static Schedule clause

Here the iterations are assigned to threads before the loop is executed. If the chunk size is not specified, the iterations are divided evenly and contiguously among the threads. To illustrate this, consider a loop of 10 iterations divided among two threads. By using,

schedule(static,1)

The iterations are allocated to threads as,

Thread1: 1,3,5,7,9

Thread2: 2,4,6,8,10

If the schedule clause given below is used,

schedule(static, 5)

Then the iterations are allocated to threads as,

Thread1: 1,2,3,4,5

Thread2: 6,7,8,9,10

To give each thread one contiguous block, the chunk size can be set to total no of iterations/max threads

Therefore the format of the schedule clause can be written as

schedule(<type of schedule>, total no of iterations/max threads)

In the given example, total no of iterations = 10 and max threads = 2, therefore the chunk size is 5.
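The allocations listed above can be checked by recording which thread runs each iteration. The following is a sketch under assumptions: the function name record_static_owners and its parameters are hypothetical, and both iterations and threads are numbered from 0 here, whereas the lists above count from 1.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Store in owner[i] the rank of the thread that ran iteration i under
   schedule(static, chunk). Returns the size of the team that ran the loop. */
int record_static_owners(int *owner, int n, int chunk, int threads) {
    int team_size = 1;

    #pragma omp parallel num_threads(threads)
    {
#ifdef _OPENMP
        #pragma omp single
        team_size = omp_get_num_threads();
#endif
        /* Chunks of 'chunk' iterations are dealt out to the threads
           round-robin, in order of thread number */
        #pragma omp for schedule(static, chunk)
        for (int i = 0; i < n; i++) {
#ifdef _OPENMP
            owner[i] = omp_get_thread_num();
#else
            owner[i] = 0;   /* no OpenMP: everything runs on one thread */
#endif
        }
    }
    return team_size;
}
```

With a team of size T, iteration i is run by thread (i / chunk) % T, which reproduces the alternating pattern for chunk size 1 and the two halves for chunk size 5.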

Dynamic Schedule clause

In the dynamic schedule, loop iterations are allotted to threads during execution. When a thread completes its assigned chunk, it requests the next chunk from the runtime system. The default chunk size is 1. The process continues until no chunks remain to be assigned.

Guided Schedule clause

The guided schedule works like the dynamic schedule, but differs in how the chunk size is chosen. Each time a thread completes its current chunk and requests a new one, the size of the newly allocated chunk is reduced. The size of the initial chunk is proportional to

total no of iterations/max threads

The size of each succeeding chunk is proportional to

total no of remaining iterations / max threads

The chunk size parameter gives the minimum chunk size; its default is 1.

Auto schedule clause

Here the compiler may fix the schedule or the run time system may allocate the schedule

for threads.

Runtime Schedule clause

With this type, the schedule is chosen at run time from the OMP_SCHEDULE environment variable.

Example code: Using Schedule clause

#include <omp.h>
#define N 100
#define CHUNKSIZE 10

int main(int argc, char *argv[])
{
    int i, chunk_size;
    int a[N], b[N], c[N];

    /* Initialise the input arrays */
    for (i = 0; i < N; i++)
        a[i] = b[i] = i;
    chunk_size = CHUNKSIZE;

    #pragma omp parallel shared(a, b, c, chunk_size) private(i)
    {
        /* Chunks of chunk_size iterations are handed out to threads on demand */
        #pragma omp for schedule(dynamic, chunk_size) nowait
        for (i = 0; i < N; i++)
            c[i] = a[i] + b[i];
    }
    return 0;
}

Data Dependencies:

If one iteration depends on the results of previous iterations, then the for loop cannot be parallelized correctly. Such a dependency is called a data dependency.

Consider a loop that computes the Fibonacci numbers, in which each iteration computes fib[i] from fib[i-1] and fib[i-2]. Let us assume that the computation is carried out by two threads: Thread1 and Thread2. Iterations 2, 3 and 4 are allocated to Thread1 and iterations 5, 6 and 7 are allocated to Thread2. The threads may carry out their computations in any order.

Consider the situation in which Thread2 starts computing fib[5] before Thread1 completes fib[4]. Thread2 then reads a value that has not yet been computed and produces a wrong result. Therefore OpenMP cannot parallelize a loop correctly if its iterations depend on the results of previous iterations.

If the result of a previous iteration is used in a subsequent iteration, this type of dependence is called a loop-carried dependence.
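The contrast between a loop with a loop-carried dependence and one without can be sketched as follows. The function names fill_fib and square_all are hypothetical; the first loop is deliberately left serial, while the second is safe to share among threads.

```c
/* Fill fib[0..n-1] with Fibonacci numbers, starting 1, 1, 2, 3, ...
   Iteration i reads the results of iterations i-1 and i-2 (a loop-carried
   dependence), so this loop is kept serial. */
void fill_fib(long *fib, int n) {
    if (n > 0) fib[0] = 1;
    if (n > 1) fib[1] = 1;
    for (int i = 2; i < n; i++)
        fib[i] = fib[i - 1] + fib[i - 2];
}

/* Each iteration reads in[i] and writes out[i] only, so no iteration
   depends on another and the loop can be parallelized. */
void square_all(const long *in, long *out, int n) {
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        out[i] = in[i] * in[i];
}
```

Adding a parallel for directive to the loop in fill_fib would compile without complaint, but the results could be wrong whenever a thread reads an entry that another thread has not yet written.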

Scope of variable

In serial programming, the scope of a variable consists of those parts of a program in which the

variable can be used. For example, a variable declared at the beginning of a C function has

“function-wide” scope, that is, it can only be accessed in the body of the function. On the other

hand, a variable declared at the beginning of a .c file but outside any function has “file-wide”

scope. The default scope of a variable can differ between directives, and OpenMP provides clauses to modify the default scope.
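One way to make the scope of every variable explicit is the default(none) clause, which requires each variable used in the construct to be listed in a data-sharing clause. The sketch below is illustrative only; the function name count_above and its parameters are assumptions.

```c
/* Count the entries of a[0..n-1] that are greater than limit. */
int count_above(const int *a, int n, int limit) {
    int count = 0;

    /* default(none): every variable from the enclosing scope must be scoped
       explicitly. a, n and limit are only read, so they are shared; count is
       combined across threads with a reduction. */
    #pragma omp parallel for default(none) shared(a, n, limit) reduction(+:count)
    for (int i = 0; i < n; i++) {
        int is_above = a[i] > limit;   /* declared inside the loop: private */
        count += is_above;
    }
    return count;
}
```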


- Practical-02 PCDocument12 pagesPractical-02 PCSAMINA ATTARINo ratings yet

- OpenMP 2Document3 pagesOpenMP 2thatsarraNo ratings yet

- 2.3-DD2356-OpenMP DefinitionsDocument12 pages2.3-DD2356-OpenMP DefinitionsDaniel AraújoNo ratings yet

- OpenMP ExamplesDocument12 pagesOpenMP ExamplesFernando Montoya CubasNo ratings yet

- Lecture - 06 (Shared Memory Programming With OpenMP)Document65 pagesLecture - 06 (Shared Memory Programming With OpenMP)Farah JahangirNo ratings yet

- 1 Overview, Models of Computation, Brent's TheoremDocument8 pages1 Overview, Models of Computation, Brent's TheoremDr P ChitraNo ratings yet

- PDC Presentation UpdateDocument29 pagesPDC Presentation Updatemubeen.hasnat76No ratings yet

- Chap4 OpenMPDocument35 pagesChap4 OpenMPMichael ShiNo ratings yet

- CD MulticoreDocument10 pagesCD Multicorebhalchimtushar0No ratings yet

- PRAM ModelsDocument4 pagesPRAM ModelsASHWANI MISHRANo ratings yet

- Shared Memory: Openmp Environment and SynchronizationDocument32 pagesShared Memory: Openmp Environment and Synchronizationkarthik reddyNo ratings yet

- OpenmpDocument21 pagesOpenmpMark VeltzerNo ratings yet

- Openmp: Martin Kruliš Ji Ří DokulilDocument38 pagesOpenmp: Martin Kruliš Ji Ří DokulilBenni AriefNo ratings yet

- Multi ThreadingDocument17 pagesMulti ThreadingshivuhcNo ratings yet

- Lab 2: Brief Tutorial On Openmp Programming Model: Adrián Álvarez, Sergi Gil Par4207 2019/2020Document11 pagesLab 2: Brief Tutorial On Openmp Programming Model: Adrián Álvarez, Sergi Gil Par4207 2019/2020Adrián AlvarezNo ratings yet

- OpenMP BasicsDocument47 pagesOpenMP BasicscaptainnsaneNo ratings yet

- Begin Parallel Programming With OpenMP - CodeProjectDocument8 pagesBegin Parallel Programming With OpenMP - CodeProjectManojSudarshanNo ratings yet

- Multithreading in CDocument4 pagesMultithreading in Cmanju754No ratings yet

- Programming Shared-Memory Platforms With Openmp: John Mellor-CrummeyDocument46 pagesProgramming Shared-Memory Platforms With Openmp: John Mellor-CrummeyaskbilladdmicrosoftNo ratings yet

- Exploiting Loop-Level Parallelism For Simd Arrays Using: OpenmpDocument12 pagesExploiting Loop-Level Parallelism For Simd Arrays Using: OpenmpSpin FotonioNo ratings yet

- Cs6801 Mcap MgmDocument7 pagesCs6801 Mcap MgmIndhu RithikNo ratings yet

- Experiment No. 6 Aim: To Learn Basics of Openmp Api (Open Multi-Processor Api) Theory What Is Openmp?Document6 pagesExperiment No. 6 Aim: To Learn Basics of Openmp Api (Open Multi-Processor Api) Theory What Is Openmp?SAMINA ATTARINo ratings yet

- Introduction To Open MPDocument42 pagesIntroduction To Open MPahmad.nawazNo ratings yet

- Prof. Dr. Aman Ullah KhanDocument27 pagesProf. Dr. Aman Ullah KhanSibghat RehmanNo ratings yet

- OPENMP Language Features - Part 1 - 2Document38 pagesOPENMP Language Features - Part 1 - 2Ram KiranNo ratings yet

- Open MPLectureDocument54 pagesOpen MPLectureShakya GauravNo ratings yet

- PDC - Lecture - No. 2Document31 pagesPDC - Lecture - No. 2nauman tariqNo ratings yet

- Multithreading: Object Oriented Programming 1Document102 pagesMultithreading: Object Oriented Programming 1Kalyan MajjiNo ratings yet

- Multithreading Concept: by The End of This Chapter, You Will Be Able ToDocument78 pagesMultithreading Concept: by The End of This Chapter, You Will Be Able ToBlack Panda100% (1)

- Openmp: Dr. Nitya Hariharan (Intel)Document42 pagesOpenmp: Dr. Nitya Hariharan (Intel)KiaraNo ratings yet

- Compiler CostructionDocument15 pagesCompiler CostructionAnonymous UDCWUrBTSNo ratings yet

- Task Level Parallelization of All Pair Shortest Path Algorithm in Openmp 3.0Document4 pagesTask Level Parallelization of All Pair Shortest Path Algorithm in Openmp 3.0Hoàng VănNo ratings yet

- Java MultiThreadingDocument74 pagesJava MultiThreadingkasim100% (1)

- ST7 SHP 2.1 Multithreading On Multicores 1spp 2Document18 pagesST7 SHP 2.1 Multithreading On Multicores 1spp 2joshNo ratings yet

- A Comparison of Co-Array Fortran and Openmp Fortran For SPMD ProgrammingDocument20 pagesA Comparison of Co-Array Fortran and Openmp Fortran For SPMD Programmingandres pythonNo ratings yet

- W8L2 OpenMP6 FurthertopicsDocument20 pagesW8L2 OpenMP6 Furthertopicsl215376No ratings yet

- Process Schedulers in Operating SystemDocument8 pagesProcess Schedulers in Operating Systemlakshmi.tNo ratings yet

- Data Types in CDocument8 pagesData Types in Clakshmi.tNo ratings yet

- Decision MakingDocument6 pagesDecision Makinglakshmi.tNo ratings yet

- Test (23-05-24)Document1 pageTest (23-05-24)lakshmi.tNo ratings yet

- Test (16-05-24)Document1 pageTest (16-05-24)lakshmi.tNo ratings yet

- HA250 - Database Migration Using DMO - SAP HANA 2.0 SPS05Document107 pagesHA250 - Database Migration Using DMO - SAP HANA 2.0 SPS05srinivas6321No ratings yet

- New Connection LT New Connection Flow Hierarchy (NPDCL) :: With Out Extension Flow DescriptionDocument2 pagesNew Connection LT New Connection Flow Hierarchy (NPDCL) :: With Out Extension Flow DescriptionchinnaNo ratings yet

- Manual ArdupilotDocument16 pagesManual ArdupilotRaden TunaNo ratings yet

- DaftarDocument29 pagesDaftarHamsyah SianturiNo ratings yet

- Kyland DG A8 A16 en v1.0Document3 pagesKyland DG A8 A16 en v1.0Fabián MejíaNo ratings yet

- MD120 Installation InstructionsDocument5 pagesMD120 Installation InstructionsAmNe BizNo ratings yet

- TL-WR844N (EU) 1.0 DatasheetDocument5 pagesTL-WR844N (EU) 1.0 DatasheetCarlos Alberto100% (1)

- Final Project ReportDocument48 pagesFinal Project ReportfazalabbasNo ratings yet

- MSGuideDocument18 pagesMSGuideMahesh ChandrappaNo ratings yet

- Notes Taken During 3 July APQP PPAP ClassDocument2 pagesNotes Taken During 3 July APQP PPAP Classrosemarie tolentinoNo ratings yet

- Lesson 4.2 Literary Genre With ICT Skills EmpowermentDocument20 pagesLesson 4.2 Literary Genre With ICT Skills EmpowermentPaul Zacchaeus MapanaoNo ratings yet

- Tricky C Questions For GATEDocument114 pagesTricky C Questions For GATEKirti KumarNo ratings yet

- Package BCDating'Document12 pagesPackage BCDating'The IsaiasNo ratings yet

- Lec-3 Layer OSI ModelDocument34 pagesLec-3 Layer OSI ModelDivya GuptaNo ratings yet

- If ExercisesDocument5 pagesIf ExercisesDolores Alvarez GowlandNo ratings yet

- Week 2-3 1st Day ActivitiesDocument9 pagesWeek 2-3 1st Day ActivitiesJp TibayNo ratings yet

- 7-8 TLE Handicrafts Q2 Week 2-3Document10 pages7-8 TLE Handicrafts Q2 Week 2-3Grace100% (1)

- Ericsson Zero 3 (12mtr) Site Installation ManualDocument80 pagesEricsson Zero 3 (12mtr) Site Installation ManualAlyonaNo ratings yet

- Final DocumentDocument107 pagesFinal DocumentNikhil SaxenaNo ratings yet

- Winshuttle MDG Ebook ENDocument15 pagesWinshuttle MDG Ebook ENVenkata Ramana GottumukkalaNo ratings yet

- 25 Run Commands in Windows You Should Memorize - ENDocument19 pages25 Run Commands in Windows You Should Memorize - ENStayNo ratings yet

- Lab 12-NewDocument17 pagesLab 12-NewSumaira KhanNo ratings yet

- Test Plan-350431-Backend Client Data Enhancement For KYC ComplianceDocument5 pagesTest Plan-350431-Backend Client Data Enhancement For KYC ComplianceSyedNo ratings yet

- Factorytalk Batch View Quick Start GuideDocument22 pagesFactorytalk Batch View Quick Start GuideNelsonNo ratings yet

- Build Your Own Clone Parametric EQ Kit Instructions: WarrantyDocument27 pagesBuild Your Own Clone Parametric EQ Kit Instructions: Warrantyjohan ardinNo ratings yet

- Product Overview For SIMATIC S7-1200Document11 pagesProduct Overview For SIMATIC S7-1200Jorge CamachoNo ratings yet