Contrastive Learning Methods for Graph Representation Learning

Chandan Kumar G P

M Tech AI

Indian Institute of Science

Bangalore

July 21, 2023

Chandan Kumar G P 1/12

Graph representation learning:

Graph representation learning aims to learn effective representations of graphs.

Its main goal is to map each node in a graph to a vector in a continuous vector space, commonly referred to as an embedding.

Representation learning methods include Node2Vec, Graph Neural Networks (GNNs), and Graph Autoencoders.

Applications include node classification, link prediction, graph clustering, and community detection.

Contrastive Learning:

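Contrastive learning is a self-supervised representation learning method: it pulls the embeddings of positive pairs (views of the same input) together and pushes the embeddings of negative pairs apart.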

Contrastive learning in computer vision

Figure 1

Contrastive learning in graphs

Generate two different views of a single graph.

Figure 2

Encode each view with an encoder and train with a contrastive loss to learn better representations.

Contrastive Learning framework:

Let $G = (V, E)$ denote a graph, where $V = \{v_1, v_2, \ldots, v_N\}$ and $E \subseteq V \times V$ are the node set and the edge set, respectively. $X \in \mathbb{R}^{N \times F}$ and $A \in \{0, 1\}^{N \times N}$ denote the feature matrix and the adjacency matrix, respectively.

Our objective is to learn a GNN encoder $f(X, A) \in \mathbb{R}^{N \times F'}$ that produces low-dimensional node embeddings, i.e., $F' \ll F$.

Figure 3: Illustrative model
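As a rough illustration of what an encoder $f(X, A)$ could look like, the sketch below implements a single graph-convolution layer in NumPy. The slides only say "GNN encoder", so the choice of a GCN-style layer, the function name `gcn_encoder`, and the weight matrix `W` are assumptions made for illustration.

```python
import numpy as np

def gcn_encoder(X, A, W):
    """One GCN-style layer (an assumed encoder, not taken from the slides).

    X: (N, F) feature matrix, A: (N, N) adjacency matrix,
    W: (F, F') weight matrix. Returns (N, F') node embeddings.
    """
    A_hat = A + np.eye(A.shape[0])             # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    # Symmetric normalization D^{-1/2} (A + I) D^{-1/2}
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ X @ W, 0.0)     # ReLU activation
```

In practice the weights would be trained with the contrastive objective; here `W` is simply passed in.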

Loss function: for each positive pair $(u_i, v_i)$,

$$
\ell(u_i, v_i) = \log \frac{e^{\theta(u_i, v_i)/\tau}}{\underbrace{e^{\theta(u_i, v_i)/\tau}}_{\text{positive pair}} + \underbrace{\sum_{k \neq i} e^{\theta(u_i, v_k)/\tau}}_{\text{inter-view negative pairs}} + \underbrace{\sum_{k \neq i} e^{\theta(u_i, u_k)/\tau}}_{\text{intra-view negative pairs}}}
$$

where $\tau$ is a temperature parameter and $\theta(u, v) = s(g(u), g(v))$, with $s(\cdot, \cdot)$ the cosine similarity and $g(\cdot)$ a nonlinear projection (implemented with a two-layer perceptron).

The overall objective to be maximized is the average over all positive pairs:

$$
L = \frac{1}{2N} \sum_{i=1}^{N} \left[ \ell(u_i, v_i) + \ell(v_i, u_i) \right]
$$
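The objective can be sketched in NumPy as follows. This is a minimal sketch, assuming the inputs `hu` and `hv` are the already projected embeddings $g(u_i)$ and $g(v_i)$ of the two views, one row per node; the function names and the default temperature are illustrative choices, not values from the slides.

```python
import numpy as np

def cosine_sim_matrix(a, b):
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def pairwise_objective(hu, hv, tau=0.5):
    """Return l(u_i, v_i) for every node i as a length-N vector."""
    inter = np.exp(cosine_sim_matrix(hu, hv) / tau)  # e^{theta(u_i, v_k)/tau}
    intra = np.exp(cosine_sim_matrix(hu, hu) / tau)  # e^{theta(u_i, u_k)/tau}
    pos = np.diag(inter)                             # positive pairs (k = i)
    # positive pair + inter-view negatives + intra-view negatives (k != i)
    denom = inter.sum(axis=1) + intra.sum(axis=1) - np.diag(intra)
    return np.log(pos / denom)

def overall_objective(hu, hv, tau=0.5):
    """Average of l(u_i, v_i) and l(v_i, u_i) over all N nodes."""
    both = pairwise_objective(hu, hv, tau) + pairwise_objective(hv, hu, tau)
    return 0.5 * np.mean(both)
```

Since this quantity is maximized, a gradient-based trainer would typically minimize its negative.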

Adaptive Graph Augmentation:

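This augmentation scheme tends to keep important structures and attributes unchanged while perturbing possibly unimportant links and features.

Topology-level augmentation: we sample a modified subset $\tilde{E}$ from the original $E$ with probability

$$
P\{(u, v) \in \tilde{E}\} = 1 - p^e_{uv}
$$

where $p^e_{uv}$ is the probability of removing edge $(u, v)$, so $1 - p^e_{uv}$ should reflect the importance of $(u, v)$.

We define edge centrality as the average of the two adjacent nodes' centrality scores, i.e., $w^e_{uv} = (\phi_c(u) + \phi_c(v))/2$.

On a directed graph, we simply use the centrality of the tail node, i.e., $w^e_{uv} = \phi_c(v)$.

The removal probability is computed from the log-centrality $s^e_{uv} = \log w^e_{uv}$:

$$
p^e_{uv} = \min\left( \frac{s^e_{\max} - s^e_{uv}}{s^e_{\max} - \mu^e_s} \cdot p_e,\; p_\tau \right)
$$

where $s^e_{\max}$ and $\mu^e_s$ are the maximum and the mean of $s^e_{uv}$, $p_e$ controls the overall probability of removing edges, and $p_\tau < 1$ is a cut-off probability.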

For the node centrality $\phi_c$, we can use degree centrality, eigenvector centrality, or PageRank centrality.
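The topology-level scheme can be sketched as below, assuming an undirected graph given as an edge list (each edge listed once) and degree centrality as $\phi_c$. The function names, the default values of `p_e` and `p_tau`, and the uniform fallback used when all edges are equally central are assumptions for illustration.

```python
import numpy as np

def edge_drop_probs(edges, num_nodes, p_e=0.3, p_tau=0.7):
    """Removal probability p^e_uv for each edge, using degree centrality."""
    edges = np.asarray(edges)
    # Degree centrality phi_c(v): number of edges touching each node.
    deg = np.bincount(edges.ravel(), minlength=num_nodes).astype(float)
    w = (deg[edges[:, 0]] + deg[edges[:, 1]]) / 2.0   # edge centrality w^e_uv
    s = np.log(w)                                     # s^e_uv
    s_max, mu = s.max(), s.mean()
    if np.isclose(s_max, mu):
        # Assumption: with identical centralities, fall back to a uniform rate.
        return np.full(len(edges), min(p_e, p_tau))
    return np.minimum((s_max - s) / (s_max - mu) * p_e, p_tau)

def sample_edges(edges, probs, rng):
    """Keep each edge with probability 1 - p^e_uv."""
    keep = rng.random(len(probs)) >= probs
    return np.asarray(edges)[keep]
```

The most central edge always gets removal probability 0, and no edge is removed with probability above `p_tau`, which keeps the view from being corrupted too heavily.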

Node-attribute-level augmentation: we add noise to node attributes by randomly masking a fraction of the feature dimensions with zeros; dimension $i$ is masked with probability $p^f_i$.

The probability $p^f_i$ should reflect the importance of the $i$-th dimension of the node features.

For each feature dimension we calculate the weight

$$
w^f_i = \sum_{u \in V} |x_{ui}| \cdot \phi_c(u)
$$

We compute the probability from $s^f_i = \log w^f_i$ as

$$
p^f_i = \min\left( \frac{s^f_{\max} - s^f_i}{s^f_{\max} - \mu^f_s} \cdot p_f,\; p_\tau \right)
$$

where $s^f_{\max}$ and $\mu^f_s$ are the maximum and the mean of $s^f_i$.
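A minimal NumPy sketch of the attribute-level scheme follows. It assumes the node centralities are supplied as a vector, that one mask is shared by all nodes, and that a small constant `eps` is added before the logarithm to handle all-zero feature dimensions; the function names, defaults, `eps`, and the uniform fallback are assumptions, not part of the slides.

```python
import numpy as np

def feature_mask_probs(X, centrality, p_f=0.3, p_tau=0.7, eps=1e-12):
    """Masking probability p^f_i for each feature dimension i."""
    w = np.abs(X).T @ centrality       # w^f_i = sum_u |x_ui| * phi_c(u)
    s = np.log(w + eps)                # s^f_i; eps guards against log(0)
    s_max, mu = s.max(), s.mean()
    if np.isclose(s_max, mu):
        # Assumption: equally important dimensions get a uniform rate.
        return np.full(X.shape[1], min(p_f, p_tau))
    return np.minimum((s_max - s) / (s_max - mu) * p_f, p_tau)

def mask_features(X, probs, rng):
    """Zero out dimension i for all nodes with probability p^f_i."""
    keep = rng.random(X.shape[1]) >= probs
    return X * keep                    # broadcasts the mask over the rows
```

Dimensions that are frequently active on central nodes receive a low masking probability, so they tend to survive in both views.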

Canonical Correlation Analysis based Contrastive learning:

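This approach introduces a non-contrastive and non-discriminative objective for self-supervised learning, inspired by Canonical Correlation Analysis (CCA) methods.

Canonical Correlation Analysis: for two random vectors $x \in \mathbb{R}^m$ and $y \in \mathbb{R}^n$, let $\Sigma_{xy} = \mathrm{Cov}(x, y)$ denote their covariance matrix, and likewise $\Sigma_{xx} = \mathrm{Cov}(x, x)$ and $\Sigma_{yy} = \mathrm{Cov}(y, y)$.

CCA seeks two vectors $a \in \mathbb{R}^m$ and $b \in \mathbb{R}^n$ such that the correlation

$$
\rho = \mathrm{corr}(a^\top x, b^\top y) = \frac{a^\top \Sigma_{xy} b}{\sqrt{a^\top \Sigma_{xx} a}\,\sqrt{b^\top \Sigma_{yy} b}}
$$

is maximized.

The objective is:

$$
\max_{a, b}\; a^\top \Sigma_{xy} b \quad \text{s.t.} \quad a^\top \Sigma_{xx} a = b^\top \Sigma_{yy} b = 1
$$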
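For intuition, the constrained problem above can be solved in closed form by whitening both variables and taking a singular value decomposition. The sketch below estimates the covariances from samples (rows of `X` and `Y`); the function name and the small ridge term `reg`, added for numerical stability, are assumptions made for illustration.

```python
import numpy as np

def first_canonical_pair(X, Y, reg=1e-8):
    """Return (a, b, rho) maximizing corr(a^T x, b^T y) from samples.

    X: (n, m) and Y: (n, k), one sample per row.
    """
    n = X.shape[0]
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = Xc.T @ Yc / (n - 1)
    # Cholesky factors: Sxx = Lx Lx^T, Syy = Ly Ly^T
    Lx = np.linalg.cholesky(Sxx)
    Ly = np.linalg.cholesky(Syy)
    # Whitened cross-covariance T = Lx^{-1} Sxy Ly^{-T}
    T = np.linalg.solve(Lx, Sxy)
    T = np.linalg.solve(Ly, T.T).T
    U, S, Vt = np.linalg.svd(T)
    a = np.linalg.solve(Lx.T, U[:, 0])   # satisfies a^T Sxx a = 1
    b = np.linalg.solve(Ly.T, Vt[0])     # satisfies b^T Syy b = 1
    return a, b, S[0]                    # S[0] is the maximal correlation
```

The largest singular value of the whitened cross-covariance equals the maximal correlation $\rho$, and the unit-variance constraints hold by construction.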

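The linear transformations can be replaced with neural networks. Concretely, let $x_1, x_2$ be two views of the input data. The objective is:

$$
\max_{\theta_1, \theta_2}\; \mathrm{Tr}\left( P_{\theta_1}(x_1)^\top P_{\theta_2}(x_2) \right) \quad \text{s.t.} \quad P_{\theta_1}(x_1)^\top P_{\theta_1}(x_1) = P_{\theta_2}(x_2)^\top P_{\theta_2}(x_2) = I
$$

where $P_{\theta_1}$ and $P_{\theta_2}$ are two feedforward neural networks and $I$ is an identity matrix.

This computation is still expensive, so soft CCA removes the hard decorrelation constraint by adopting the following Lagrangian relaxation:

$$
\min_{\theta_1, \theta_2}\; L_{dist}\left( P_{\theta_1}(x_1), P_{\theta_2}(x_2) \right) + \lambda \left( L_{SDL}(P_{\theta_1}(x_1)) + L_{SDL}(P_{\theta_2}(x_2)) \right)
$$

where $L_{dist}$ measures the correlation between the two views' representations and $L_{SDL}$ is the stochastic decorrelation loss.

The loss on the embeddings $\tilde{Z}_A$ and $\tilde{Z}_B$ of the two views is:

$$
L = \underbrace{\| \tilde{Z}_A - \tilde{Z}_B \|_F^2}_{\text{invariance term}} + \lambda \underbrace{\left( \| \tilde{Z}_A^\top \tilde{Z}_A - I \|_F^2 + \| \tilde{Z}_B^\top \tilde{Z}_B - I \|_F^2 \right)}_{\text{decorrelation term}}
$$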
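The final loss is straightforward to compute once the embeddings are available. The sketch below assumes that $\tilde{Z}$ is obtained by standardizing each embedding dimension to zero mean and unit standard deviation and scaling by $1/\sqrt{N}$, so that $\tilde{Z}^\top \tilde{Z}$ is a correlation matrix; this normalization, the function names, and the default `lam` are assumptions, since the slides do not spell them out.

```python
import numpy as np

def standardize(Z):
    """Column-wise zero mean and unit std, scaled by 1/sqrt(N) (assumed)."""
    Z = (Z - Z.mean(axis=0)) / Z.std(axis=0)
    return Z / np.sqrt(Z.shape[0])

def invariance_decorrelation_loss(ZA, ZB, lam=1e-3):
    """Invariance term plus lam times the decorrelation term."""
    ZA, ZB = standardize(ZA), standardize(ZB)
    I = np.eye(ZA.shape[1])
    invariance = np.sum((ZA - ZB) ** 2)                # ||ZA - ZB||_F^2
    decorrelation = (np.sum((ZA.T @ ZA - I) ** 2)      # ||ZA^T ZA - I||_F^2
                     + np.sum((ZB.T @ ZB - I) ** 2))   # ||ZB^T ZB - I||_F^2
    return invariance + lam * decorrelation
```

The invariance term pulls the two views of each node together, while the decorrelation term discourages different embedding dimensions from encoding the same information. No negative pairs are needed.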

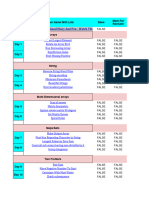

Results:

X for node features, A for adjacency matrix, S for diffusion matrix,

and Y for node labels

Thank You
