
PROBLEM SOLVING, SEARCH AND CONTROL STRATEGIES

PROBLEM SOLVING

Problem solving is fundamental to many AI-based applications. Problem solving is the process of generating solutions from observed data. A problem is characterized by:

A set of goals

A set of objects, and

A set of operations

These may be ill-defined and may evolve during problem solving. To develop a computer system that is capable of solving problems, it is necessary to perform four activities:

Define the problem precisely, including detailed specifications and what constitutes an acceptable solution.

Analyse the problem thoroughly, since some features may have a dominant effect on the chosen method of solution.

Isolate and represent the background knowledge needed to solve the problem.

Choose the best problem-solving technique(s) and apply them to the problem.

DEFINING PROBLEM AS A STATE SPACE SEARCH

Problems dealt with in artificial intelligence are generally described using a common term, 'state'. A state represents the status of the solution at a given step of the problem-solving procedure. The solution of a problem is thus a sequence of problem states.

The problem-solving procedure applies an operator to a state to get the next state. It then applies another operator to the resulting state to derive a new state. This process of applying an operator to a state and moving to the next state continues until the goal (desired) state is reached. Such a method of solving a problem is generally referred to as the state space approach.

Problem Definitions

A problem is defined by its elements and their relations. To provide a formal description of a problem, the following are required:

Define a state space: the set of all possible states of the problem that are reachable from the initial state. A state space representation allows a formal definition of the problem, which makes it straightforward to describe the movement from the initial state to the goal state.

Specify one or more states that describe possible situations from which the problem-solving process may start. These states are called initial states.

Specify one or more states that would be acceptable solutions to the problem. These states are called goal states.

Specify a set of rules that describe the actions (operators) available. The problem can then be solved by using the rules, in combination with an appropriate control strategy, to move through the problem space until a path from an initial state to a goal state is found. This process is known as ‘search’.

Notes prepared by peninah J. limo Page 1

Search is fundamental to the problem-solving process. Search is a general mechanism that can be used when no more direct method is known. Search provides the framework into which more direct methods for solving subparts of a problem can be embedded. A very large number of AI problems are formulated as search problems.
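To make the ingredients of a formal problem definition concrete, here is a minimal Python sketch. The `Problem` class, the `apply_sequence` helper and the toy "add one / double" problem are hypothetical illustrations, not part of these notes. The sketch only checks that a given sequence of operators leads from the initial state to a goal state; choosing that sequence is the job of the control strategy (search) described later.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

State = Any  # any hashable value can serve as a state


@dataclass
class Problem:
    """A state-space problem: an initial state, a goal test and named operators."""
    initial: State
    is_goal: Callable[[State], bool]
    # Each operator maps a state to the next state, or to None if it does not apply.
    operators: Dict[str, Callable[[State], Optional[State]]]


def apply_sequence(problem: Problem, names: List[str]) -> List[State]:
    """Apply the named operators in order and return every state visited."""
    state = problem.initial
    trace = [state]
    for name in names:
        next_state = problem.operators[name](state)
        if next_state is None:
            raise ValueError(f"operator {name!r} does not apply to state {state!r}")
        state = next_state
        trace.append(state)
    return trace


# Hypothetical toy problem: start at 1 and reach 10 using "add one" and "double".
toy = Problem(
    initial=1,
    is_goal=lambda s: s == 10,
    operators={"add1": lambda s: s + 1, "double": lambda s: s * 2},
)

trace = apply_sequence(toy, ["double", "double", "add1", "double"])
print(trace, toy.is_goal(trace[-1]))  # [1, 2, 4, 5, 10] True
```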

Advantages of state space representation

This representation is very useful in AI because it provides the set of all possible states, operations and goals. If the entire state space representation for a problem is given, it is possible to trace the path from the initial state to the goal state and identify the sequence of operators required to do this.

Disadvantages

It is not always possible to visualize all states of a given problem. Also, the limited resources of a computer system make it difficult to handle a huge state space representation.

PROBLEM CHARACTERISTICS

A problem may have different aspects of representation and explanation. In order to choose the most appropriate method for a particular problem, it is necessary to analyze the problem along several key dimensions. Some of the key features of a problem are given below.

1. Is the problem decomposable into a set of subproblems?

2. Can solution steps be ignored or undone?

3. Is the problem universe predictable?

4. Is a good solution to the problem obvious without comparison to all the other possible solutions?

5. Is the desired solution a state of the world or a path to a state?

6. Is a large amount of knowledge absolutely required to solve the problem?

7. Will the solution of the problem require interaction between the computer and a person?

These are called the seven problem characteristics, and they describe the conditions under which the solution must take place.

EXAMPLES OF SEARCH STATE SPACE PROBLEMS

Example 1: 8-Puzzle

Problem formulation: The eight-tile puzzle consists of a 3 by 3 (3×3) square frame board that holds eight movable tiles numbered 1 to 8. One square is empty, allowing an adjacent tile to be shifted into it. The objective of the puzzle is to find a sequence of tile movements that leads from a starting configuration to a goal configuration, as shown below:

Start state (initial)              Goal state (final)

1 4 3                              1 4 3
7 _ 6     to be transformed into   7 6 2
5 8 2                              5 8 _

(_ marks the empty square)


NB: An optimal solution is one that maps the initial arrangement of tiles to the goal with the smallest (minimum) number of moves. Let's do a standard formulation of this problem:

State: specifies the location of each of the 8 tiles and the blank in one of the nine squares.

Initial state: Any state can be designated as the initial state.

Goal: Many goal configurations are possible, as shown in the figure below.

Legal moves (operators): These generate the legal states that result from trying the four actions:

Blank moves left

Blank moves right

Blank moves up

Blank moves down

Path cost: Each step costs 1, so the path cost is the number of steps in the path.
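The formulation above can be sketched in Python as a successor function. This is an illustrative sketch under stated assumptions: a board is a 9-tuple read row by row with 0 standing for the blank, the function name `successors` is hypothetical, and the example assumes the start board 1 4 3 / 7 _ 6 / 5 8 2 (blank in the centre) and the goal 1 4 3 / 7 6 2 / 5 8 _. Under those assumptions two moves (blank right, then blank down) reach the goal, for a path cost of 2.

```python
from typing import Dict, Tuple

Board = Tuple[int, ...]  # 9 cells listed row by row; 0 marks the blank

# Change in the blank's index for each of the four actions on a 3x3 board.
DELTAS = {"up": -3, "down": 3, "left": -1, "right": 1}


def successors(board: Board) -> Dict[str, Board]:
    """Return the board produced by each legal move of the blank."""
    blank = board.index(0)
    row, col = divmod(blank, 3)
    legal = {"up": row > 0, "down": row < 2, "left": col > 0, "right": col < 2}
    result: Dict[str, Board] = {}
    for action, allowed in legal.items():
        if not allowed:
            continue
        target = blank + DELTAS[action]
        cells = list(board)
        # Slide the neighbouring tile into the blank square.
        cells[blank], cells[target] = cells[target], cells[blank]
        result[action] = tuple(cells)
    return result


start = (1, 4, 3,
         7, 0, 6,
         5, 8, 2)
step1 = successors(start)["right"]
print(successors(step1)["down"])  # (1, 4, 3, 7, 6, 2, 5, 8, 0)
```

A blank in the centre has four legal moves, while a blank in a corner has only two.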

The tree diagram below shows the search space.


Example 2: Water Jug Problem

The problem domain is as follows: "Given two jugs, a 4-gallon jug and a 3-gallon jug, with no measuring markers on them, and a pump that can be used to fill the jugs with water, how can you get exactly 2 gallons of water into the 4-gallon jug?"

State space representation

The state space for this problem can be described as the set of ordered pairs of integers (X, Y) such that X = 0, 1, 2, 3 or 4 and Y = 0, 1, 2 or 3. Here X is the number of gallons of water in the 4-gallon jug and Y is the quantity of water in the 3-gallon jug.

The start state is (0, 0), and the goal state is (2, N) for any value of N, since the problem does not specify how many gallons need to be in the 3-gallon jug (0, 1, 2 or 3). Note also that the problem has one initial state and many goal states. Some problems may have many initial states and one or many goals.

The operators used to solve the problem are described below. They are represented as rules whose left sides are matched against the current state and whose right sides describe the new state that results from applying the rule.

Table 1: Production rules (operators) for the water jug problem

1. (X,Y) → (4,Y), if X < 4: Fill the 4-gallon jug

2. (X,Y) → (X,3), if Y < 3: Fill the 3-gallon jug

3. (X,Y) → (X-D,Y), if X > 0: Pour some water (D gallons) out of the 4-gallon jug

4. (X,Y) → (X,Y-D), if Y > 0: Pour some water (D gallons) out of the 3-gallon jug

5. (X,Y) → (0,Y), if X > 0: Empty the 4-gallon jug on the ground

6. (X,Y) → (X,0), if Y > 0: Empty the 3-gallon jug on the ground

7. (X,Y) → (4,Y-(4-X)), if X+Y >= 4 and Y > 0: Pour water from the 3-gallon jug into the 4-gallon jug until the 4-gallon jug is full

8. (X,Y) → (X-(3-Y),3), if X+Y >= 3 and X > 0: Pour water from the 4-gallon jug into the 3-gallon jug until the 3-gallon jug is full

9. (X,Y) → (X+Y,0), if X+Y <= 4 and Y > 0: Pour all the water from the 3-gallon jug into the 4-gallon jug

10. (X,Y) → (0,X+Y), if X+Y <= 3 and X > 0: Pour all the water from the 4-gallon jug into the 3-gallon jug

11. (0,2) → (2,0): Pour the 2 gallons from the 3-gallon jug into the 4-gallon jug

12. (2,Y) → (0,Y): Empty the 2 gallons in the 4-gallon jug on the ground

Please note that the speed with which the problem is solved depends on the control structure used to select the next operation. Two possible solutions are shown below.

Trace of steps involved in solving the water jug problem: first solution

Step  Rule applied                                      4-g jug  3-g jug
1     Initial state                                     0        0
2     R2 {Fill 3-g jug}                                 0        3
3     R9 {Pour all water from 3-g jug into 4-g jug}     3        0
4     R2 {Fill 3-g jug}                                 3        3
5     R7 {Pour from 3-g into 4-g jug until it is full}  4        2
6     R5 {Empty 4-g jug}                                0        2
7     R9 {Pour all water from 3-g jug into 4-g jug}     2        0
      Goal state

The second solution can be:

Step  Rule applied                                      4-g jug  3-g jug
1     Initial state                                     0        0
2     R1 {Fill 4-g jug}                                 4        0
3     R8 {Pour from 4-g into 3-g jug until it is full}  1        3
4     R6 {Empty 3-g jug}                                1        0
5     R10 {Pour all water from 4-g jug into 3-g jug}    0        1
6     R1 {Fill 4-g jug}                                 4        1
7     R8 {Pour from 4-g into 3-g jug until it is full}  2        3
8     R6 {Empty 3-g jug}                                2        0
      Goal state
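A breadth-first search over the production rules finds a shortest rule sequence automatically. The Python sketch below is illustrative: it encodes rules 1, 2 and 5 to 10 of Table 1, and leaves out rules 3 and 4 (the amount D is unspecified) and rules 11 and 12 (special cases of rules 9 and 5); the names `successors` and `solve` are hypothetical. With the rules tried in numerical order, it finds the same six moves as the first seven steps of the second solution above.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[int, int]  # (X, Y): gallons in the 4-gallon and 3-gallon jugs


def successors(state: State) -> List[Tuple[str, State]]:
    """Apply the deterministic production rules (1, 2, 5-10) of Table 1."""
    x, y = state
    moves = []
    if x < 4:
        moves.append(("R1 fill 4-g jug", (4, y)))
    if y < 3:
        moves.append(("R2 fill 3-g jug", (x, 3)))
    if x > 0:
        moves.append(("R5 empty 4-g jug", (0, y)))
    if y > 0:
        moves.append(("R6 empty 3-g jug", (x, 0)))
    if x + y >= 4 and y > 0:
        moves.append(("R7 pour 3-g into 4-g until full", (4, y - (4 - x))))
    if x + y >= 3 and x > 0:
        moves.append(("R8 pour 4-g into 3-g until full", (x - (3 - y), 3)))
    if x + y <= 4 and y > 0:
        moves.append(("R9 pour all of 3-g into 4-g", (x + y, 0)))
    if x + y <= 3 and x > 0:
        moves.append(("R10 pour all of 4-g into 3-g", (0, x + y)))
    return moves


def solve(start: State = (0, 0), target: int = 2) -> Optional[List[State]]:
    """Breadth-first search for the shortest path to any goal state (target, N)."""
    parent: Dict[State, Optional[State]] = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state[0] == target:
            path = []
            node: Optional[State] = state
            while node is not None:  # walk back to the start state
                path.append(node)
                node = parent[node]
            return path[::-1]
        for _, nxt in successors(state):
            if nxt not in parent:  # skip states already generated
                parent[nxt] = state
                frontier.append(nxt)
    return None


print(solve())  # [(0, 0), (4, 0), (1, 3), (1, 0), (0, 1), (4, 1), (2, 3)]
```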


State space graph of the water jug problem.


Example 3 : Missionaries and Cannibals

The Missionaries and Cannibals problem illustrates the use of state space search for planning under constraints:

Three missionaries and three cannibals wish to cross a river using a two-person boat. If at any time the cannibals outnumber the missionaries on either side of the river, they will eat the missionaries. How can a sequence of boat trips be performed that will get everyone to the other side of the river without any missionaries being eaten?

State representation: (missionaries on the left bank, cannibals on the left bank, boat position, missionaries on the right bank, cannibals on the right bank).

An initial state is (3, 3, LEFT, 0, 0): 3 missionaries and 3 cannibals on the left bank of the river.

A goal state is (0, 0, RIGHT, 3, 3): 3 missionaries and 3 cannibals on the right bank of the river.

Possible moves are:

from (3, 3, LEFT, 0, 0) to (2, 2, RIGHT, 1, 1)

from (2, 2, RIGHT, 1, 1) to (3, 2, LEFT, 0, 1)

Operators for M&C:

Move-1m1c-lr, Move-1m1c-rl, Move-2c-lr, Move-2c-rl, Move-2m-lr, Move-2m-rl, Move-1c-lr, Move-1c-rl, Move-1m-lr, Move-1m-rl (m = missionary, c = cannibal, lr = left to right, rl = right to left)
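A minimal Python sketch of this formulation follows, assuming the five-part state used above and treating each operator as a boat load whose direction is implied by the boat's position. A new state is kept only if neither bank has its missionaries outnumbered. Breadth-first search is used here as one possible control strategy; `safe`, `successors` and `solve` are illustrative names, not part of the notes.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# (missionaries left, cannibals left, boat side, missionaries right, cannibals right)
State = Tuple[int, int, str, int, int]
START: State = (3, 3, "LEFT", 0, 0)
GOAL: State = (0, 0, "RIGHT", 3, 3)

# Boat loads (missionaries, cannibals) for Move-1m1c, Move-2c, Move-2m, Move-1c and
# Move-1m; the -lr / -rl direction is implied by the side the boat is on.
LOADS = [(1, 1), (0, 2), (2, 0), (0, 1), (1, 0)]


def safe(m: int, c: int) -> bool:
    """A bank is safe if it has no missionaries or they are not outnumbered."""
    return m == 0 or m >= c


def successors(state: State) -> List[State]:
    ml, cl, side, mr, cr = state
    result = []
    for dm, dc in LOADS:
        if side == "LEFT":  # boat carries people left -> right
            new = (ml - dm, cl - dc, "RIGHT", mr + dm, cr + dc)
        else:  # boat carries people right -> left
            new = (ml + dm, cl + dc, "LEFT", mr - dm, cr - dc)
        nml, ncl, _, nmr, ncr = new
        if min(nml, ncl, nmr, ncr) >= 0 and safe(nml, ncl) and safe(nmr, ncr):
            result.append(new)
    return result


def solve() -> Optional[List[State]]:
    """Breadth-first search, so the plan found uses the fewest crossings."""
    parent: Dict[State, Optional[State]] = {START: None}
    frontier = deque([START])
    while frontier:
        state = frontier.popleft()
        if state == GOAL:
            path: List[State] = []
            node: Optional[State] = state
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:  # skip states already generated
                parent[nxt] = state
                frontier.append(nxt)
    return None


plan = solve()
print(len(plan) - 1, "crossings")  # 11 crossings
```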

The state space graph for the missionaries and cannibals problem is shown below:


Example 4: Towers of Hanoi

The “Towers of Hanoi” puzzle is an interesting example of a state space for solving a puzzle problem. The object of this puzzle is to move a number of disks from one peg to another (one at a time), with a number of constraints that must be met. Each disk is of a unique size, and it is not legal for a larger disk to sit on top of a smaller disk. In the initial state of the puzzle, all disks begin on one peg, stacked in order of size with the largest at the bottom, as shown below.

Initial position Goal Position

Our goal (the solution) is to move all disks to the last peg, as shown. As in many state spaces, there are potential transitions that are not legal. For example, we can only move a disk that has no other disk above it. Further, we can't move a large disk onto a smaller disk (though we can move any disk to an empty peg). The space of possible operators is therefore constrained to legal moves only. The state space can also be constrained to moves that have not yet been performed for a given subtree. For example, if we move a small disk from Peg A to Peg C, moving the same disk back to Peg A could be defined as an invalid transition. Not doing so would result in loops and an infinitely deep tree.

Consider the initial position shown above. The only disk that may move is the small disk at the top of Peg A. For this disk, only two legal moves are possible: from Peg A to Peg B, or from Peg A to Peg C. Suppose the small disk is moved to Peg C. From this state, there are three potential moves:

1. Move the small disk from Peg C to Peg B.

2. Move the small disk from Peg C to Peg A.

3. Move the medium disk from Peg A to Peg B.

The first move (small disk from Peg C to Peg B), while valid, is not a potential move, as we just moved this disk to Peg C (an empty peg). Moving it a second time serves no purpose (as this move could have been done during the prior transition), so there's no value in doing it now (a heuristic). The second move is also not useful (another heuristic), because it's the reverse of the previous move.

The state space tree generated by this problem is illustrated below.


Note that, because some transitions return the disks to an earlier state, the tree, if fully expanded, would be of infinite size. So while generating the entire tree, searching it for the goal state, and reading off the transitions that lead to that state would be a guaranteed method of solving the problem, it is not actually possible to do (in a finite universe).
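Checking for repeated states is one way to keep the search finite. The Python sketch below (hypothetical names; each peg is a tuple of disk sizes listed bottom to top) generates only legal moves and never revisits a state, so a breadth-first search terminates. The move counts it reports for the first two positions match the two and three legal moves discussed above, and for three disks it finds the 7-move plan.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Pegs = Tuple[Tuple[int, ...], ...]  # pegs A, B, C; each lists disk sizes bottom to top
NAMES = "ABC"


def legal_moves(pegs: Pegs) -> List[Tuple[str, str, Pegs]]:
    """Return (from, to, new_state) for every legal move of a single top disk."""
    moves = []
    for src, disks in enumerate(pegs):
        if not disks:
            continue
        disk = disks[-1]  # only a disk with nothing above it may move
        for dst, target in enumerate(pegs):
            if dst == src or (target and target[-1] < disk):
                continue  # never place a larger disk on a smaller one
            new = list(pegs)
            new[src] = disks[:-1]
            new[dst] = target + (disk,)
            moves.append((NAMES[src], NAMES[dst], tuple(new)))
    return moves


def solve(n: int) -> Optional[List[Tuple[str, str]]]:
    """Breadth-first search from all n disks on peg A to all disks on peg C."""
    start: Pegs = (tuple(range(n, 0, -1)), (), ())
    goal: Pegs = ((), (), tuple(range(n, 0, -1)))
    # parent[state] = (previous state, move that produced it). Repeated states are
    # never added again, which keeps the search from looping forever.
    parent: Dict[Pegs, Optional[Tuple[Pegs, Tuple[str, str]]]] = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            plan = []
            while parent[state] is not None:
                prev, move = parent[state]
                plan.append(move)
                state = prev
            return plan[::-1]
        for src, dst, nxt in legal_moves(state):
            if nxt not in parent:
                parent[nxt] = (state, (src, dst))
                frontier.append(nxt)
    return None


print(len(legal_moves(((3, 2, 1), (), ()))))  # 2
print(len(legal_moves(((3, 2), (), (1,)))))   # 3
print(len(solve(3)))                          # 7
```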


SEARCH IN ARTIFICIAL INTELLIGENCE

Search is a problem-solving technique that systematically considers all possible actions to find a path from the initial state to a target state.

In solving a problem, it's convenient to think about the solution space in terms of a number of actions that we can take, and the new state of the environment as we perform those actions. As we take one of multiple possible actions (each with its own cost), our environment changes and opens up alternatives for new actions. As is the case with many kinds of problem solving, some paths lead to dead ends while others lead to solutions. There may also be multiple solutions, some better than others.

The problem of search is to find a sequence of operators that transitions from the start state to the goal state. That sequence of operators is the solution. How we avoid dead ends and then select the best available solution is a product of our particular search strategy.

Search strategies

A strategy is defined by picking the order of node expansion. Strategies are evaluated based on:

completeness—does it always find a solution if one exists?

time complexity—number of nodes generated/expanded

space complexity—maximum number of nodes in memory

optimality—does it always find a least-cost solution?

There are two major types of search strategy: uninformed and informed.

1. Uninformed search: sometimes called blind, exhaustive or brute-force search. These methods do not use any specific knowledge about the problem to guide the search and therefore may not be very efficient. They search through all possible candidates for the solution in the search space, checking whether each candidate satisfies the problem's statement. The search techniques in this strategy include:

Breadth-first search (BFS)

Depth-first search (DFS)

Depth-limited search (DLS)

Depth-first iterative deepening search (DFSID)

Uniform-cost search (UCS)

Bidirectional search

2. Informed search: sometimes called heuristic or intelligent search. It uses information about the problem to guide the search, usually an estimate of the distance to a goal state, and is therefore more efficient, but a suitable heuristic may not always be available. Informed methods are specific to the problem. The methods in this strategy include:

Best-first search

   Greedy best-first search

   A* search


1. UNINFORMED/EXHAUSTIVE/BRUTE FORCE SEARCH

(i) Breadth-first search

Breadth-first search is a simple strategy in which the root node is expanded first, then all successors of the root node are expanded next, then their successors, and so on. In general, all the nodes at a given depth in the search tree are expanded before any nodes at the next level are expanded.

Figure 1: Breadth-first search on a simple binary tree.

Advantages

Breadth-first search is an exhaustive search algorithm. It is simple to implement, and it can be applied to any search problem.

Compared with depth-first search, BFS does not suffer from the potential infinite-loop problem that arises when depth-first search keeps going deeper down one branch, which may cause the computer to crash.

Breadth-first search performs well if the search space is small.

It performs best if the goal state lies in the upper left-hand side of the tree.

If there is more than one solution, breadth-first search finds the minimal one, i.e. the one that requires the fewest steps.

Disadvantages

Breadth-first search performs relatively poorly compared with depth-first search if the goal state lies at the bottom of the tree.

Memory utilization is poor in breadth-first search, so BFS needs more memory than DFS.
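The level-by-level expansion can be sketched in Python with a first-in, first-out queue. The tree below is a hypothetical stand-in for Figure 1 (the figure itself is not reproduced here), and the function name is illustrative. The sketch also returns the order in which nodes were expanded, so the level-by-level behaviour is visible.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[str]]


def breadth_first_search(graph: Graph, start: str, goal: str) -> Tuple[Optional[List[str]], List[str]]:
    """Expand nodes level by level using a FIFO queue.

    Returns (path to goal or None, nodes in the order they were expanded).
    """
    frontier = deque([[start]])  # queue of paths; the oldest (shallowest) is expanded first
    visited = {start}
    expanded: List[str] = []
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        expanded.append(node)
        if node == goal:
            return path, expanded
        for child in graph.get(node, []):
            if child not in visited:
                visited.add(child)
                frontier.append(path + [child])
    return None, expanded


# Hypothetical binary tree: A is the root, D, E, F and G are leaves.
TREE = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F", "G"]}
print(breadth_first_search(TREE, "A", "F"))
# (['A', 'C', 'F'], ['A', 'B', 'C', 'D', 'E', 'F'])
```

Every node at depth 1 (B, C) is expanded before any node at depth 2, and the whole frontier of the current level is held in memory, which is the memory cost mentioned above.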

(ii) Depth-First Search

Depth-first search follows a path to its end before starting to explore another path.


Figure 2: Depth-first-search on a binary tree.

Advantages:

Low storage requirement: linear with tree depth.

Easily programmed: the function call stack does most of the work of maintaining the state of the search.

Disadvantages:

May find a sub-optimal solution (one that is deeper or more costly than the best solution).

Incomplete: without a depth bound, it may not find a solution even if one exists.

The drawback of depth-first search is that it can make a wrong choice and get stuck going down a very long (or even infinite) path when a different choice would lead to a solution near the root of the search tree.
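A recursive depth-first search is sketched below in Python on a hypothetical binary tree (the tree and the function name are illustrative assumptions). The recursion, i.e. the function call stack, keeps track of the current path, which is the property the advantages above refer to. The expansion order shows the search finishing the whole left branch (B, D, E) before it looks at C.

```python
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[str]]


def depth_first_search(graph: Graph, start: str, goal: str) -> Tuple[Optional[List[str]], List[str]]:
    """Follow each branch to its end before backtracking.

    The recursion (function call stack) keeps track of the current path.
    Returns (path to goal or None, nodes in the order they were expanded).
    """
    expanded: List[str] = []

    def visit(path: List[str]) -> Optional[List[str]]:
        node = path[-1]
        expanded.append(node)
        if node == goal:
            return path
        for child in graph.get(node, []):
            if child not in path:  # avoid looping back along the current path
                found = visit(path + [child])
                if found is not None:
                    return found
        return None  # dead end: backtrack to the caller

    return visit([start]), expanded


# Hypothetical binary tree: A is the root, D, E, F and G are leaves.
TREE = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F", "G"]}
print(depth_first_search(TREE, "A", "F"))
# (['A', 'C', 'F'], ['A', 'B', 'D', 'E', 'C', 'F'])
```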


(iii) Depth-Limited Search

Depth-limited search essentially does a depth-first search with a cutoff at a specified depth limit. When the search hits a node at that depth, it stops going down that branch and moves over to the next one. This avoids the potential problem with depth-first search of going down one branch indefinitely.

Advantages

Depth-limited search avoids the pitfalls of depth-first search by imposing a cutoff on the maximum depth of a path.

Disadvantages

Depth-limited search is complete if the depth limit is at least the depth of the shallowest solution, but it is not optimal.

If we choose a depth limit that is too small, then depth-limited search is not even complete.

The time and space complexity of depth-limited search are similar to those of depth-first search.
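A recursive Python sketch of depth-limited search follows. It distinguishes two kinds of failure, which is a design choice of this sketch: `CUTOFF` means the limit pruned some branch (so a deeper solution may exist), while `None` means the space below the node was searched completely without finding the goal. The tree and names are hypothetical.

```python
from typing import Dict, List, Union

Graph = Dict[str, List[str]]
CUTOFF = "cutoff"  # sentinel: the depth limit pruned at least one branch
Result = Union[List[str], str, None]


def depth_limited_search(graph: Graph, node: str, goal: str, limit: int) -> Result:
    """Depth-first search that does not expand nodes below depth `limit`.

    Returns the path to goal, CUTOFF if the limit was hit somewhere (a deeper
    solution may exist), or None if the space below `node` holds no goal.
    """
    if node == goal:
        return [node]
    if limit == 0:
        return CUTOFF  # stop going down this branch
    cutoff_occurred = False
    for child in graph.get(node, []):
        result = depth_limited_search(graph, child, goal, limit - 1)
        if result == CUTOFF:
            cutoff_occurred = True
        elif result is not None:
            return [node] + result
    return CUTOFF if cutoff_occurred else None


# Hypothetical binary tree: A is the root, D, E, F and G are leaves at depth 2.
TREE = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F", "G"]}
print(depth_limited_search(TREE, "A", "F", 1))  # cutoff
print(depth_limited_search(TREE, "A", "F", 2))  # ['A', 'C', 'F']
print(depth_limited_search(TREE, "A", "Z", 5))  # None
```

With a limit of 1 the goal at depth 2 is never reached, which is the "limit too small" case described above.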

(iv) Depth First Search Iterative Deepening

Depth-first iterative deepening search performs depth-first search to a bounded depth d, starting with d = 1 and increasing d by 1 on each iteration. It was created as an attempt to combine the ability of BFS to always find an optimal solution with the lower memory overhead of DFS, so we can say it combines the best features of breadth-first and depth-first search. It performs a DFS to depth one, then starts over, executing a complete DFS to depth two, and continues to run depth-first searches to successively greater depths until a solution is found.

Figure 3: Stages of iterative deepening search

Advantages:

Finds an optimal solution (the fewest number of steps).

Has the low (linear in depth) storage requirement of depth-first search.

Unlike normal depth-first search and depth-limited search, it is complete. It also does this without greatly increasing the expected runtime.

Disadvantages:

The disadvantage is wasted search: the nodes at shallow depths are generated again on every iteration.
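Iterative deepening can be sketched by calling a depth-limited search with limits 1, 2, 3, ... until it stops reporting a cutoff. This Python sketch repeats the depth-limited helper so that it is self-contained; the graph and function names are hypothetical. On the example graph a plain depth-first search that tries the left child first would return the deeper path S-A-B-G, whereas iterative deepening returns S-G at limit 1.

```python
from typing import Dict, List, Tuple, Union

Graph = Dict[str, List[str]]
CUTOFF = "cutoff"  # sentinel: the depth limit pruned at least one branch
Result = Union[List[str], str, None]


def depth_limited_search(graph: Graph, node: str, goal: str, limit: int) -> Result:
    """Depth-first search that stops going down a branch at depth `limit`."""
    if node == goal:
        return [node]
    if limit == 0:
        return CUTOFF
    cutoff_occurred = False
    for child in graph.get(node, []):
        result = depth_limited_search(graph, child, goal, limit - 1)
        if result == CUTOFF:
            cutoff_occurred = True
        elif result is not None:
            return [node] + result
    return CUTOFF if cutoff_occurred else None


def iterative_deepening_search(graph: Graph, start: str, goal: str, max_depth: int = 50) -> Tuple[Result, int]:
    """Run depth-limited DFS with limits 1, 2, 3, ... until the result is not a cutoff."""
    for limit in range(1, max_depth + 1):
        result = depth_limited_search(graph, start, goal, limit)
        if result != CUTOFF:
            return result, limit  # a path, or None if the whole space was searched
    return None, max_depth


# Hypothetical graph with a shallow and a deep route from S to G.
GRAPH = {"S": ["A", "G"], "A": ["B"], "B": ["G"]}
print(iterative_deepening_search(GRAPH, "S", "G"))  # (['S', 'G'], 1)
```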

(v) Uniform-cost search (UCS)

Uniform-cost search is an uninformed search strategy. Uniform-cost search is guided by path cost rather than by path length as in breadth-first search. The algorithm starts by expanding the root, then expands the node with the lowest path cost from the root, and the search continues in this manner for all nodes.

UCS finds the least-cost path through a graph by maintaining a list of nodes ordered from least to greatest cost (a priority queue). This allows the least-cost path to be evaluated first. The algorithm uses the accumulated path cost and the priority queue to determine which path to evaluate next. The priority queue (least cost first) contains the nodes to be evaluated. As a node's children are evaluated, the cost of each child's edge is added to the accumulated cost of the current path, and the child is added to the queue with that sum; when all children have been evaluated, the queue is sorted in ascending order of cost. When the first element in the priority queue is the goal node, the best solution has been found.

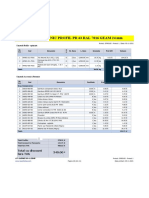

Step-by-step execution of UCS (each entry is [path, path cost]):

Step 0: { [S, 0] }
Step 1: { [S->A, 1], [S->G, 12] }
Step 2: { [S->A->C, 2], [S->A->B, 4], [S->G, 12] }
Step 3: { [S->A->C->D, 3], [S->A->B, 4], [S->A->C->G, 4], [S->G, 12] }
Step 4: { [S->A->B, 4], [S->A->C->G, 4], [S->A->C->D->G, 6], [S->G, 12] }
Step 5: { [S->A->C->G, 4], [S->A->C->D->G, 6], [S->A->B->D, 7], [S->G, 12] }
Step 6: the goal path is now first in the queue, so the output is S->A->C->G.
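The trace above can be reproduced with a priority queue. In this Python sketch the edge costs are reconstructed from the trace (S-A 1, S-G 12, A-B 3, A-C 1, B-D 3, C-D 1, C-G 2, D-G 3), which is an assumption since the original figure is not available, and the function name is illustrative. Ties on cost are broken by comparing the paths themselves, which happens to expand S->A->B before S->A->C->G, matching the order in the trace. The `expanded` set prunes repeated expansions of the same node.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, int]]]

# Edge costs reconstructed from the step-by-step trace (an assumption).
GRAPH: Graph = {
    "S": [("A", 1), ("G", 12)],
    "A": [("B", 3), ("C", 1)],
    "B": [("D", 3)],
    "C": [("D", 1), ("G", 2)],
    "D": [("G", 3)],
    "G": [],
}


def uniform_cost_search(graph: Graph, start: str, goal: str) -> Optional[Tuple[int, List[str]]]:
    """Always expand the cheapest path in the queue; stop when that path ends at the goal."""
    frontier: List[Tuple[int, List[str]]] = [(0, [start])]
    expanded = set()
    while frontier:
        cost, path = heapq.heappop(frontier)  # lowest accumulated cost first
        node = path[-1]
        if node == goal:
            return cost, path
        if node in expanded:
            continue  # prune: a cheaper path to this node was already expanded
        expanded.add(node)
        for child, step_cost in graph[node]:
            heapq.heappush(frontier, (cost + step_cost, path + [child]))
    return None


print(uniform_cost_search(GRAPH, "S", "G"))  # (4, ['S', 'A', 'C', 'G'])
```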


Advantages

1. Can be set to prune (remove) duplicate entries in the queue.

2. Can be set to search exhaustively for the optimal solution, if possible, among different least-cost candidate paths to the goal.

Disadvantages

1. It performs wasted computation, exploring cheap paths in every direction, before reaching the goal.

(vi) Bidirectional Search

Bidirectional search: The idea behind bidirectional search is to run two searches at the same time, one forward from the initial state and the other backward from the goal, stopping when the two searches meet in the middle. Bidirectional search is implemented by having one or both of the searches check each node before it is expanded to see if it is in the fringe of the other search tree; if it is, a solution has been found.

For example, if a problem has solution depth d = 6, and each direction runs breadth-first search one node at a time, then in the worst case the two searches meet when each has expanded all but one of the nodes at depth 3. For a branching factor b = 10, this means a total of 22,200 node generations, compared with 11,111,100 for a standard breadth-first search. This algorithm is complete and optimal (for uniform step costs) if both searches are breadth-first; other combinations may sacrifice completeness, optimality or both.
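The two node counts above can be reproduced with a short calculation, assuming the counting convention that breadth-first search applies the goal test only when a node is selected for expansion, so that in the worst case it also generates the b^(d+1) - b children of the nodes at depth d. Each half of the bidirectional search is then a breadth-first search to depth d/2 = 3. The function name is illustrative.

```python
def bfs_nodes_generated(b: int, d: int) -> int:
    """Worst-case nodes generated by a BFS that goal-tests on expansion.

    b + b^2 + ... + b^d, plus the b^(d+1) - b children generated at depth d + 1.
    """
    return sum(b ** i for i in range(1, d + 1)) + (b ** (d + 1) - b)


b, d = 10, 6
standard = bfs_nodes_generated(b, d)
bidirectional = 2 * bfs_nodes_generated(b, d // 2)  # two searches, each to depth 3
print(f"{standard:,} vs {bidirectional:,}")  # 11,111,100 vs 22,200
```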

Advantages

Time and memory requirements are comparatively good.

Disadvantages

Not always feasible, or possible, to search backward through possible states.


2. INFORMED SEARCH/HEURISTICS SEARCH

A heuristic is a method that might not always find the best solution but usually finds a good solution in reasonable time. By sacrificing completeness, it increases efficiency. It is particularly useful for tough problems that could not be solved any other way, where finding a complete solution would require practically infinite time, i.e. far longer than a lifetime. The aim is to use heuristics to find a solution in acceptable time rather than a complete solution in infinite time.

A heuristic function, or simply a heuristic, is a function that ranks alternatives in search algorithms at each branching step, based on the available information, in order to decide which branch to follow during a search.

Heuristic or informed search exploits additional knowledge about the problem that helps direct the search to more promising paths. A heuristic function, h(n), provides an estimate of the cost of the path from a given node to the closest goal state. It must be zero if the node represents a goal state.

Example:

Straight-line distance from current location to the goal location in a road navigation problem.

(i) Best First Search

This is simply breadth-first search, but with the nodes re-ordered by their heuristic value. Best-first search retains a record of every state that has been visited, as well as the heuristic value of that state. The best state ever visited is retrieved and the search continues from there. This makes best-first search appear to jump around the search tree like a random search, but of course best-first search is not random. The memory requirements for best-first search are worse than those of hill climbing but not as bad as those of breadth-first search. This is because breadth-first search does not use a heuristic to avoid obviously worse states. There are two types of best-first search:

Greedy Best First Search

A* Search

a) Greedy Best First Search

Greedy search is a best-first strategy that tries to minimize the estimated cost to reach the goal. Being greedy, it always expands the node that is estimated to be closest to the goal state. Unfortunately, the exact cost of reaching the goal state usually can't be computed, but we can estimate it by using a cost estimate or heuristic function h():

f(n) = h(n)

When we are examining node n, h() gives us the estimated cost of the cheapest path from n's state to the goal state. Of course, the better the estimate h() gives, the better and faster we will find a solution to our problem. Greedy search behaves similarly to depth-first search. Its advantages are delivered via the use of a quality heuristic function to direct the search.


Advantages

The main advantage of this search is that it is simple and finds solutions quickly.

Disadvantages

Like depth-first search, greedy search is not complete.

Greedy search is not guaranteed to find the solution with the shortest path, i.e. it is not optimal.

It is possible for greedy search to proceed down an infinitely long branch without finding a solution, even when one exists; without checks for repeated states, it is also likely to get stuck in loops.
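The following Python sketch shows greedy best-first search on a small hypothetical road map, with straight-line distance as h(n). The coordinates, road lengths and function names are illustrative assumptions. Road costs are ignored when choosing which node to expand, so the search follows S-A-G (cost 8) even though S-B-G costs only 6, which illustrates why greedy search is not optimal.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical road map: node coordinates (for the heuristic) and road lengths.
COORDS = {"S": (0, 0), "A": (3, 1), "B": (1, -1), "G": (4, 0)}
ROADS: Dict[str, List[Tuple[str, int]]] = {
    "S": [("A", 4), ("B", 2)],
    "A": [("G", 4)],
    "B": [("G", 4)],
    "G": [],
}


def h(node: str, goal: str) -> float:
    """Heuristic: straight-line distance from node to goal."""
    return math.dist(COORDS[node], COORDS[goal])


def path_cost(path: List[str]) -> int:
    """Sum of the road lengths along a path."""
    return sum(dict(ROADS[a])[b] for a, b in zip(path, path[1:]))


def greedy_best_first(start: str, goal: str) -> Optional[List[str]]:
    """Always expand the frontier node with the smallest h(n), i.e. f(n) = h(n)."""
    frontier = [(h(start, goal), [start])]
    visited = {start}
    while frontier:
        _, path = heapq.heappop(frontier)
        node = path[-1]
        if node == goal:
            return path
        for child, _cost in ROADS[node]:  # the road cost is not used to order the queue
            if child not in visited:
                visited.add(child)
                heapq.heappush(frontier, (h(child, goal), path + [child]))
    return None


route = greedy_best_first("S", "G")
print(route, path_cost(route))  # ['S', 'A', 'G'] 8
```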

b) A* Search

A* search is one of the best-known forms of best-first search. The A* search algorithm combines greedy search, for efficiency, with uniform-cost search, for optimality and completeness. Unlike greedy search, the A* evaluation function also takes into account the cost already incurred from the starting point to the current node. This technique avoids expanding paths that are already expensive, and expands the most promising paths first.

In A*, the evaluation function is computed from two measures, g(n) and h(n):

f(n) = g(n) + h(n), where

g(n) is the cost of the path found so far from the start node to node n

h(n) is the heuristic estimate of the cost of the cheapest path from n to the goal

f(n) is therefore the estimated cost of the cheapest solution from the start node to the goal node that passes through node n

This combination of strategies gives A* both completeness (does it always find a solution if one exists?) and optimality (does it always find a least-cost solution?), the latter provided the heuristic is admissible.

Advantages

A* search is complete: it will always find a solution if one exists (given a finite branching factor and step costs bounded above zero).

A* search is optimal: to be optimal, it must be used with an admissible heuristic. An admissible heuristic, also known as an optimistic heuristic, never overestimates the cost of reaching the goal.

Disadvantages

The main drawback of A* search is that, as it needs to maintain a list of unsearched nodes, it can require large amounts of memory.
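The following Python sketch runs A* on a small hypothetical road map (nodes S, A, B, G with straight-line distance as h(n)); the coordinates, road lengths and names are illustrative assumptions. On this map a search ordered by h(n) alone would follow S-A-G (cost 8), but ordering the frontier by f(n) = g(n) + h(n) returns the cheaper route S-B-G (cost 6). The straight-line heuristic never overestimates the road distance here, so it is admissible. Keeping the best known g value per node and skipping stale queue entries are implementation choices of this sketch, not something the notes prescribe.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical road map: node coordinates (for the heuristic) and road lengths.
COORDS = {"S": (0, 0), "A": (3, 1), "B": (1, -1), "G": (4, 0)}
ROADS: Dict[str, List[Tuple[str, int]]] = {
    "S": [("A", 4), ("B", 2)],
    "A": [("G", 4)],
    "B": [("G", 4)],
    "G": [],
}


def h(node: str, goal: str) -> float:
    """Admissible heuristic: straight-line distance never exceeds road distance."""
    return math.dist(COORDS[node], COORDS[goal])


def a_star(start: str, goal: str) -> Optional[Tuple[int, List[str]]]:
    """Expand the frontier node with the smallest f(n) = g(n) + h(n)."""
    frontier = [(h(start, goal), 0, [start])]  # entries: (f, g, path)
    best_g = {start: 0}
    while frontier:
        _f, g, path = heapq.heappop(frontier)
        node = path[-1]
        if node == goal:
            return g, path
        if g > best_g[node]:
            continue  # stale entry: a cheaper path to this node was found later
        for child, cost in ROADS[node]:
            new_g = g + cost
            if new_g < best_g.get(child, math.inf):
                best_g[child] = new_g
                heapq.heappush(frontier, (new_g + h(child, goal), new_g, path + [child]))
    return None


print(a_star("S", "G"))  # (6, ['S', 'B', 'G'])
```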


(ii) Hill-climbing search

The basic idea of hill-climbing search is that it evaluates the objective function for all states that are neighbors of the current state, and takes the neighbor state with the best objective function value as the new current state. If more than one neighbor shares the best value, one is picked randomly. If no neighbor is better than the current state, the search stops.

Hill-climbing search is sometimes called greedy local search, because a step is taken after considering only the immediate neighbors. No time is spent considering possible future states.

Hill climbing is easy to formulate and implement and often finds pretty good states quickly. However, it has the following problems:

it gets stuck on local optima (hills for maximizing searches, valleys for minimizing searches);

it may get stuck on a ridge, if no single action can advance the search along the ridge;

it may get stuck wandering on a plateau where all neighboring states have equal value.

Common variations include:

allowing sideways moves (when on a plateau);

stochastic hill climbing: choose the next state with a probability related to the increase in the value of the objective function;

first-choice hill climbing: generate neighbors by random choice of available actions and keep the first state that has a better value;

random-restart hill climbing: conduct multiple hill-climbing searches from multiple, randomly generated initial states.

Only the last of these, random-restart hill climbing, is complete: in the limit, all states will be tried as starting states, so the goal, or best state, will eventually be found.

Advantages

1. Acceptable for simple problems.

Disadvantages

1. Local maxima: peaks that aren't the highest point in the space.

2. Plateaus: the space has a broad flat region that gives the search algorithm no direction (random walk).

3. Ridges: the orientation of the high region, compared to the set of available moves, makes it impossible to climb up. However, two moves executed serially may increase the height.
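A small Python sketch of steepest-ascent hill climbing and its random-restart variant follows, on a hypothetical one-dimensional landscape in which each state's neighbors are the states immediately to its left and right. The landscape and function names are illustrative assumptions. Starting from state 0 the climb stops on the local maximum at state 2; restarting from random states is what lets the search reach the global maximum at state 6.

```python
import random
from typing import List, Optional

# Hypothetical 1-D landscape: index = state, value = objective to maximise.
# Index 2 (value 5) is a local maximum; index 6 (value 9) is the global maximum.
VALUES = [1, 3, 5, 4, 2, 6, 9, 7, 3]


def hill_climb(values: List[int], start: int, rng: random.Random) -> int:
    """Steepest-ascent hill climbing; the neighbours of state i are i - 1 and i + 1.

    Assumes at least two states, so every state has a neighbour.
    """
    current = start
    while True:
        neighbours = [i for i in (current - 1, current + 1) if 0 <= i < len(values)]
        best_value = max(values[i] for i in neighbours)
        if best_value <= values[current]:
            return current  # no better neighbour: a local (maybe global) maximum
        best = [i for i in neighbours if values[i] == best_value]
        current = rng.choice(best)  # ties between equally good neighbours broken randomly


def random_restart(values: List[int], restarts: int, seed: int = 0) -> int:
    """Run hill climbing from several random start states and keep the best peak."""
    rng = random.Random(seed)
    best: Optional[int] = None
    for _ in range(restarts):
        peak = hill_climb(values, rng.randrange(len(values)), rng)
        if best is None or values[peak] > values[best]:
            best = peak
    return best


print(hill_climb(VALUES, 0, random.Random(0)))  # 2: stuck on the local maximum
print(random_restart(VALUES, restarts=50))      # 6: the global maximum
```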


- AI L-02-Problem SolvingDocument20 pagesAI L-02-Problem Solvingsikandar070308No ratings yet

- Problems, Problem Spaces and SearchDocument31 pagesProblems, Problem Spaces and Searchvirgulatiit21a1068No ratings yet

- Chapter 2 Problem SolvingDocument190 pagesChapter 2 Problem SolvingMegha GuptaNo ratings yet

- AI 0ML Assignment-2Document7 pagesAI 0ML Assignment-2Dileep KnNo ratings yet

- Chapter 2 Problem SolvingDocument205 pagesChapter 2 Problem SolvingheadaidsNo ratings yet

- AI Problems & State Space: J. Felicia LilianDocument32 pagesAI Problems & State Space: J. Felicia LilianAbhinav PanchumarthiNo ratings yet

- Defining The Problem As A State Space SearchDocument9 pagesDefining The Problem As A State Space SearchashNo ratings yet

- Problems, Problem Spaces, and SearchDocument111 pagesProblems, Problem Spaces, and SearchdollsicecreamNo ratings yet

- Session 10 Uninfomed Search StrategiesDocument24 pagesSession 10 Uninfomed Search StrategiesMarkapuri Santhoash kumarNo ratings yet

- Water Jug Problem in Artificial IntelligenceDocument2 pagesWater Jug Problem in Artificial IntelligenceAvinash AllaNo ratings yet

- Experiment No - 02Document4 pagesExperiment No - 02ayesha sheikhNo ratings yet

- State Space Search and Heuristic Search TechniquesDocument16 pagesState Space Search and Heuristic Search Techniques28Akshay LokhandeNo ratings yet

- Ai Mod2Document33 pagesAi Mod2SapnaNo ratings yet

- Artificial IntelligenceDocument480 pagesArtificial Intelligencekaustavkakoty4No ratings yet

- Aids - Co1 - Session 3&4Document25 pagesAids - Co1 - Session 3&4sunny bandelaNo ratings yet

- Lecture 4Document17 pagesLecture 4SOUMYODEEP NAYAK 22BCE10547No ratings yet

- Artificial IntelligenceDocument143 pagesArtificial IntelligenceBhuvaneshAngelsAngelsNo ratings yet

- Lecture AI Problems SpaceDocument33 pagesLecture AI Problems Spaceadragon64No ratings yet

- Unit 2Document243 pagesUnit 2Gangadhar ReddyNo ratings yet

- Problem FormulationDocument11 pagesProblem FormulationAdri JovinNo ratings yet

- Monster Power - HTS3500 Manual PDFDocument42 pagesMonster Power - HTS3500 Manual PDFJose MondragonNo ratings yet

- 4936 Im Ap 02 10005Document7 pages4936 Im Ap 02 10005mauroalexandreandreNo ratings yet

- Solidworks 2017 Intermediate SkillsDocument40 pagesSolidworks 2017 Intermediate SkillsMajd HusseinNo ratings yet

- Advanced Linux: Exercises: 0 Download and Unpack The Exercise Files (Do That First Time Only)Document6 pagesAdvanced Linux: Exercises: 0 Download and Unpack The Exercise Files (Do That First Time Only)Niran SpiritNo ratings yet

- 380 KV Underground Transmission LineDocument15 pages380 KV Underground Transmission LineMohmmad NawabNo ratings yet

- Hibon VTBDocument8 pagesHibon VTBr rNo ratings yet

- STK415-100-E: 2-Channel Power Switching Audio Power IC, 60W+60WDocument12 pagesSTK415-100-E: 2-Channel Power Switching Audio Power IC, 60W+60WedisonpcNo ratings yet

- RTU QuestionnaireDocument7 pagesRTU Questionnaireimadz853No ratings yet

- Strategic Management Course Outline SUKCM 0220Document4 pagesStrategic Management Course Outline SUKCM 0220Niswarth TolaNo ratings yet

- Introduction of Insulation CoordinationDocument5 pagesIntroduction of Insulation CoordinationTaher El NoamanNo ratings yet

- FB Test CaseDocument8 pagesFB Test CaseLionKingNo ratings yet

- Artist 22 Pro User Manual (Spanish)Document12 pagesArtist 22 Pro User Manual (Spanish)Jaime RománNo ratings yet

- MJD122 889583Document8 pagesMJD122 889583Mahmoud Elpop ElsalhNo ratings yet

- Mobil DTE 700 Series: High-Performance Turbine Oil For Gas and Steam TurbinesDocument2 pagesMobil DTE 700 Series: High-Performance Turbine Oil For Gas and Steam TurbinesSimon CloveNo ratings yet

- OFERTA TEHNIC PROFIL PR 63 RAL 7016 GEAM 24 MMDocument1 pageOFERTA TEHNIC PROFIL PR 63 RAL 7016 GEAM 24 MMLucianNo ratings yet

- Guide To Syncsort PDFDocument41 pagesGuide To Syncsort PDFblueniluxNo ratings yet

- Assistant Drill Rig Operator Interview Questions and Answers GuideDocument13 pagesAssistant Drill Rig Operator Interview Questions and Answers GuideBassam HSENo ratings yet

- Textbook Programming Language Concepts Peter Sestoft Ebook All Chapter PDFDocument51 pagesTextbook Programming Language Concepts Peter Sestoft Ebook All Chapter PDFcandace.conklin507100% (7)

- XPO Q2 2021 TranscriptDocument16 pagesXPO Q2 2021 Transcriptshivshankar HondeNo ratings yet

- MCQ Artificial Intelligence Class 10 Computer VisionDocument41 pagesMCQ Artificial Intelligence Class 10 Computer VisionpratheeshNo ratings yet

- Flyers DesignDocument4 pagesFlyers DesignRamdas M NambisanNo ratings yet

- Fujifilm X100vi Digital Camera in Silver Camera ResearchDocument2 pagesFujifilm X100vi Digital Camera in Silver Camera Researchapi-728188368No ratings yet

- Conditional Exercise 3 - ENGLISH PAGE DAYANA SARAGUAYO 3Document1 pageConditional Exercise 3 - ENGLISH PAGE DAYANA SARAGUAYO 3Dayana SaraguayoNo ratings yet

- Inheritance Quiz (CS)Document23 pagesInheritance Quiz (CS)AylaNo ratings yet

- Theory On Wiring Large SystemsDocument17 pagesTheory On Wiring Large SystemsoespanaNo ratings yet

- Acer Aspire 3610 - Wistron Morar - Rev SBDocument40 pagesAcer Aspire 3610 - Wistron Morar - Rev SBPer JensenNo ratings yet

- Project Report For Granite Tiling Plant: Market Position:-Present Granite Tile Manufacturers in IndiaDocument6 pagesProject Report For Granite Tiling Plant: Market Position:-Present Granite Tile Manufacturers in Indiaram_babu_59No ratings yet