Pattern Recognition 43 (2010) 619–629

Contents lists available at ScienceDirect

Pattern Recognition

journal homepage: www.elsevier.com/locate/pr

A naive relevance feedback model for content-based image retrieval using multiple similarity measures

Miguel Arevalillo-Herráez a,*, Francesc J. Ferri a, Juan Domingo b

a Department of Computer Science, University of Valencia, Avda. Vicente Andrés Estellés, 1, 46100-Burjasot, Spain
b Institute of Robotics, University of Valencia, Spain

ARTICLE INFO

Article history:
Received 19 January 2009
Received in revised form 27 June 2009
Accepted 13 August 2009

Keywords:
Content-based image retrieval
Relevance feedback
Similarity combination

ABSTRACT

This paper presents a novel probabilistic framework to process multiple sample queries in content-based image retrieval (CBIR). This framework is independent of the underlying distance or (dis)similarity measures which support the retrieval system, and only assumes mutual independence among their outcomes. The proposed framework gives rise to a relevance feedback mechanism in which positive and negative data are combined in order to optimally retrieve images according to the available information. A particular setting in which users interactively supply feedback and iteratively retrieve images is used both to model the system and to obtain objective performance measures. Several repositories using different image descriptors and corresponding similarity measures have been considered for benchmarking purposes. The results have been compared to those obtained with other representative strategies, suggesting that a significant improvement in performance can be obtained. © 2009 Elsevier Ltd. All rights reserved.

1. Introduction

Content-based image retrieval (CBIR) embraces a set of techniques which aim to recover pictures from large image repositories according to the interests of the user. Usually, a CBIR system represents each image in the repository as a set of descriptors, and uses a corresponding set of distance or (dis)similarity functions defined over this composite feature space to estimate the relevance of a picture to a query. Although other methods also exist (e.g. [1]), the most common approach to formulating the query is based on submitting an example image (or selecting the most similar from a set of images). This method is usually referred to as query by example (QBE), and can be extended to let the user submit multiple pictures [2]. If this is the case, negative examples can also be considered to let the user specify image aspects which are not desired. The assumption that semantic similarity is related to the similarity computed from low level image descriptors is implicit in these procedures. Since this usually does not hold true, the objective of most CBIR techniques proposed so far is to reduce the existing gap between the semantics induced from the low level features and the meaningful semantics of the image from a high level viewpoint.

* Corresponding author. Tel.: +34 96 354 39 62.
E-mail addresses: Miguel.Arevalillo@uv.es (M. Arevalillo-Herráez), francesc.ferri@uv.es (F.J. Ferri), Juan.Domingo@uv.es (J. Domingo).
0031-3203/$ - see front matter © 2009 Elsevier Ltd. All rights reserved.
doi:10.1016/j.patcog.2009.08.010

Relevance feedback has been adopted by most recent approaches to reduce this gap in a convenient way. When relevance feedback is used, image retrieval is considered an iterative process in which the original query is refined or modified interactively, to progressively obtain a more accurate result. At each iteration, the system retrieves a series of images ordered according to a given similarity measure, and requires user interaction to mark the relevant and non-relevant images among the retrieved ones. This information is used to adapt the settings of the system and to produce a new set of retrieved images. This process is repeated until the retrieved set contains enough relevant images or a picture that is sufficiently good (in terms of the user's interest) is found.

This paper presents a relevance feedback framework to process multiple image queries consisting of both positive and negative samples. Although the framework can be used with other, simpler combination strategies, it is also shown that the methodology benefits from the use of a recently introduced probabilistic model [3] to appropriately combine several similarity measures.

The remainder of the paper is organized as follows. The next section outlines the state of the art and the context of the present work. Section 3 presents the model used, outlines the assumptions made, and gives a detailed motivation and description of the proposed technique. Section 4 describes an implementation of the proposed approach, provides the corresponding details, and reports the results of a number of comparative experiments. These results support the main conclusions of the present work, which are outlined in the last section.
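The iterative process described in this introduction can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the implementation evaluated in the paper: the names `feedback_loop`, `rank`, `judge`, `page_size` and `max_iterations` are hypothetical, `rank` stands for any scoring strategy, and `judge` simulates the user's relevant/non-relevant marks.

```python
from typing import Callable, Hashable, Iterable, List, Set, Tuple

Image = Hashable  # any hashable image identifier (hypothetical representation)


def feedback_loop(
    database: Iterable[Image],
    rank: Callable[[List[Image], Set[Image], Set[Image]], List[Image]],
    judge: Callable[[Image], bool],
    page_size: int = 3,
    max_iterations: int = 5,
) -> Tuple[Set[Image], Set[Image]]:
    """Generic relevance feedback loop (illustrative sketch only)."""
    images = list(database)
    positives: Set[Image] = set()
    negatives: Set[Image] = set()
    for _ in range(max_iterations):
        seen = positives | negatives
        # Re-rank the whole repository with the feedback collected so far,
        # then show the best-ranked images the user has not judged yet.
        ranking = rank(images, positives, negatives)
        page = [x for x in ranking if x not in seen][:page_size]
        if not page:
            break  # nothing left to show
        for x in page:
            # The user marks each shown image as relevant or non-relevant.
            (positives if judge(x) else negatives).add(x)
    return positives, negatives
```

Any ranking strategy with the signature of `rank` can be plugged in; the loop itself only accumulates the positive and negative selections that such a strategy consumes.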


2. Relevance feedback in image retrieval

In the last decade, a large number of different strategies to process the information captured from the user interaction have been proposed in the context of image and information retrieval. In this section, the methods most relevant to our particular interest are reviewed. For a more comprehensive and broader review, the reader is referred to e.g. [4,5].

The first approaches to relevance feedback were inspired by other techniques typically used in the context of general information retrieval [6]. These were based on adapting the similarity measure or moving the query point so that it gets closer to the relevant results and farther from those which are non-relevant. The MindReader system proposed by Ishikawa uses a method that combines ideas from both approaches [7]. The goal of this method is to compute the parameters (weights) of a given distance function at the same time as the best query point, given the available feedback from the user at each iteration. Under certain circumstances, this results in a simple and effective algorithm that only does simple computations on the available images.

Rui and coworkers proposed an interactive retrieval approach which takes into account the user's subjective perception by dynamically updating certain weights [8]. Specifically, the images are seen as vectors of weights in the space of low level features; these weights represent the relative importance of each feature within a vector as well as their importance across the entire data set. The system then updates the queries so as to place more weight on relevant elements and less on irrelevant ones.

Another procedure that belongs to this group was proposed by Ciocca and Schettini [9]. They presented a simple algorithm for computing, at each iteration, a new query point that better represents the images of interest to the user. The procedure takes the set of relevant images the user has selected and computes a new point based on the standard deviation of the features used, computed separately one by one.

This type of mechanism includes some of the fastest approaches to relevance feedback. However, such approaches present several disadvantages. On the one hand, feature weighting techniques treat the feature spaces globally rather than locally [10]. On the other hand, dependencies between image features are usually ignored [11]. In general, these approaches work well if the query concept is convex in the feature space. Unfortunately, this is rarely the case.

A current trend in the design of relevance feedback mechanisms is to treat the task as a pattern recognition problem and then use appropriate methodologies and tools to solve it. It is worth mentioning the use of support vector machines (SVM) to robustly achieve a maximum separation between relevant and non-relevant examples and thus retrieve more accurate and relevant images [12–14]. One-class SVMs and other extensions have been adapted to the particular context of image retrieval [15–18]. The main problem with all these formulations is that parameter setting may become critical for different databases and different descriptors [19]. Besides, most of these methods suffer from the small sample size problem (lack of data), although some recent attempts have been made to address this issue [20].

Other interesting and successful approaches to CBIR include the one presented in [10], in which a separate self-organizing map (SOM) is built for each of a number of different content descriptors. Each SOM classifies every image in the database into one cell of a two-dimensional grid, so that pictures with similar descriptor values are classified under the same or a close cell, and others with more dissimilar values appear distant in the map.
At each iteration all SOMs are processed in parallel, awarding a score to each cell which depends on how many of the positive and negative user selections it contains. The resulting maps are then low-pass filtered to spread the information into neighboring cells. Finally, the score assigned to each picture is the sum of the scores awarded to the cell it belongs to in each of the SOMs, and these scores are used to yield a new image ranking.

In [21], a cluster-based unsupervised learning strategy is used to improve the search performance by fully exploiting the similarity information. Instead of retrieving a set of ordered images, it retrieves groups of pictures by applying a graph-theoretic clustering algorithm to a collection of images in the vicinity of the query. In [19], an approach based on the nearest neighbor paradigm is proposed. Each image is ranked according to a relevance score depending on nearest-neighbor distances. This approach is proposed both in low-level feature spaces, and in dissimilarity spaces, where images are represented in terms of their dissimilarities from the set of relevant images [22].

In [23], the authors propose a technique that defines a fuzzy set so that the degree of membership of each image in the repository to this fuzzy set is related to the user's interest in that image. Then, positive and negative selections are used to determine the degree of membership of each picture to this set. The system attempts to capture the meaning of a selection by modifying a series of parameters at each iteration to imitate the user's behavior, becoming more selective as the search progresses.

The application of probability theory to the CBIR problem is not new, and constitutes a major research line. In this direction, a relatively large number of probabilistic relevance feedback techniques have also been proposed, most of them based on Bayesian frameworks. A representative example is the method used in the PicHunter system, proposed by Cox et al. [24]. In this system, an adaptive Bayesian scheme which incorporates the user preferences by means of a user model is used. This model, together with the prior, gives rise inductively to a probability distribution on the event space (databases and set of all possible history sequences). Both memory requirements and execution time scale linearly with the number of images in the database.
Another similar approach was presented in [25], based on the modeling of user preferences as a probability distribution on the image space. This distribution is the prior distribution and its parameters are modified based on the information provided by the user. This yields the a posteriori distribution, from which the predictive distribution is calculated and used to show the user a new set of images until the user is satisfied or the target image has been found. A regression model has been proposed recently in [11]. This algorithm considers the probability of an image belonging to the set of those sought by the user, and models the logit of this probability as the output of a linear model whose inputs are the low level image features. Other algorithms that belong to this approach include [26–28], in which a sound formulation of the problem is given.

One of the problems with these approaches comes from the fact that the corresponding estimators need to be simplified and combined with other approaches (e.g. dimensionality reduction) in order to apply them reliably in the image retrieval context, where a severely unbalanced, small sample size classification problem needs to be faced. Another general problem of most methods is that they require an initial set of good positive samples, and make little use of negative samples. In fact, many methods give positive and negative samples the same or a similar treatment (i.e. their roles can be exchanged). Besides, many of the existing methods are parametric and require an adjustment process to reach their optimum performance levels. Usually, the parameter values cannot be generalized and depend on the particular database and descriptors used for retrieval (sometimes requiring the use of heuristics).

In the approach we present in this paper, negative and positive samples are given a different treatment.
In particular, negative instances are used to determine the context of the positive selection and disambiguate the concept being searched, by considering which other pictures were shown to the user. This use of negative samples makes the algorithm immune to the unbalanced positive and negative feedback problem that occurs with other methods (in fact, the more negative samples, the more information is considered). Moreover, the approach is non-parametric and independent of the low level features used, the distance measures applied and the indexing techniques utilized. This makes it possible to use it in conjunction with dimensionality reduction methods. These techniques are commonly used in this context because approaches based on fitting models need to trade off the number of parameters and the effective dimensionality. Indeed, the method proposed here may in principle work well with either low or high dimensionalities of the feature vector.

3. A novel relevance feedback approach

The objective of any probability-based CBIR system is to order all the images in the database according to the probability that they are relevant from the user's point of view. This in turn leads to the necessity of estimating such a probability. For this purpose, most existing methods make use of some form of feedback mechanism, using relevance information directly provided by the user. The proposed method estimates intermediate probabilities considering that additional and independent sources of information are available. Then, these are used to compute a final ranking according to which pictures are sorted by relevance probability. In our case, these sources of information are taken from the multiple representations, i.e. the different types of descriptors such as local color, global color, texture, etc. that can be extracted from an image.

3.1. Formalization

Let us assume we have a database of images, $X$, composed of $m$ individuals $x_i$, $X = \{x_i\}_{i=1}^{m}$, and that for each of them different descriptors or representations can be obtained. Let us also assume that a particular user is interested in retrieving images from $X$ related to a particular concept he/she has in mind, and that this concept can be materialized as an image $q$ that can be represented as any other image in $X$.
This does not mean that a particular image representing the searched concept is necessarily present in the collection; it just means that such an image must exist and, if we could have access to it, the same kind of representation or descriptors could be computed for this image.

We also assume that a form of measuring semantic or subjective similarity between any two images is given. Without loss of generality, we also assume that this similarity can be identified with the probability of a pair of images being considered similar by the user, $p_M(\text{similar} \mid x_i, x_j)$, where $M$ stands for the model of image similarity considered. In principle this can be as simple as measuring the (normalized) Euclidean distance between $x_i$ and $x_j$ or as elaborate as measuring relevance from several distances taking the user's preferences into account [3].

Our CBIR system consists of an iterative and interactive system in which the user gives feedback by marking as relevant some of the images that are shown at each iteration. The goal is to produce the best possible ranking given all the information available at a particular moment. At a particular iteration $t$, we can consider there is a set of already seen images $S_t \subseteq X$, from which a subset $P_t \subseteq S_t$ has been marked as relevant. From now on, we will drop all subindices $t$ and assume we are referring to a particular iteration of the relevance feedback loop. So, at iteration $t$ we have $P = \{y_1, \ldots, y_p\}$ and $N = S \setminus P = \{z_1, \ldots, z_n\}$ as positive and negative information about the concept or kind of image being searched for.

The problem consists of obtaining an appropriate relevance score or ranking for any image $x_i$ in $X$ given $P$ and $N$, which will be referred to as $R(x_i, P, N)$. If the sought concept were available in the form of an image, i.e. a point $q$ in the feature space, we could define this score based on any available measure of the probability of being relevant (or subjectively similar) with regard to $q$, $p_M(\text{similar} \mid x_i, q)$, which we can read as the probability that image $x_i$ is similar to $q$.
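As an illustration of the simplest similarity model mentioned above, the following sketch ranks a small repository against a known query point using a normalized Euclidean distance. The function name `rank_by_query` and the mapping of distance to a score in [0, 1] (one minus the distance divided by the largest observed distance) are assumptions made here for illustration; the text does not prescribe a particular normalization.

```python
import math
from typing import List, Sequence, Tuple


def rank_by_query(
    X: Sequence[Sequence[float]], q: Sequence[float]
) -> Tuple[List[int], List[float]]:
    """Rank the images in X by a hypothetical stand-in for p_M(similar | x_i, q)."""
    # Euclidean distance from every feature vector to the query point.
    dists = [math.dist(x, q) for x in X]
    # Normalize by the largest distance; fall back to 1.0 if all distances are 0.
    d_max = max(dists) or 1.0
    # Assumed mapping: closer images get a score nearer to 1.
    scores = [1.0 - d / d_max for d in dists]
    # Indices sorted from most to least similar (ties keep their original order).
    order = sorted(range(len(X)), key=lambda i: -scores[i])
    return order, scores
```

For instance, with `X = [[0, 0], [3, 4], [1, 0]]` and `q = [0, 0]`, the distances are 0, 5 and 1, so the image at index 0 is ranked first and the one at index 1 last.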

However, since the sought concept is not actually available as an image $q$, we need to define this score using instead the information contained in $P$ and $N$ at a particular iteration of the relevance feedback loop. It is worth noting that the problem has been significantly simplified by considering that all seen images have been given at the same time. In fact, as considered in this work, the information about the sought concept contained in $P$ and $N$ consists only of a bipartition $b$ from the universe of all possible bipartitions of $S$.

The definition of which images are included in the set $S$ is left to the implementation. A possible choice is to include all images seen in all previous iterations; another one is to keep only the latest iterations or even only the last one. Whatever the case, this can be considered as a memory policy that does not alter the rest of the algorithm. In our case we have chosen to keep in $S$ all images seen in former iterations.

Once the problem has been stated like this, the probability that an image $x_i \in X$ is relevant to the user (semantically close enough to the sought concept or subjectively equal to the image $q$ representing it) can be estimated as the conditional probability of $x_i$ being relevant given that the user has selected this particular bipartition of $S$. This probability is $p(x_i \mid b)$, and by applying Bayes' theorem we have

$$p(x_i \mid b) = \frac{p(b \mid x_i)\, P(x_i)}{p(b)}$$

In this equation, $b$ represents a bipartition of $S$ into $P$ and $N$; $P(x_i)$ is the prior probability of the image with feature vector $x_i$ being relevant; and $p(b)$ (which will not be needed further) is the unconditional probability associated with the bipartition $b$. Notice that this formula applies equally to images in $S$ and in $X \setminus S$, i.e. the image $x_i$ has not necessarily been seen previously by the user. Moreover, by assuming that the prior probability of being considered relevant is the same for all images in $X$, it is possible to use the probability $p(b \mid x_i)$ as the normalized relevance score $R(x_i, P, N)$. This $p(b \mid x_i)$ is the probability that the particular user selects the bipartition $b$ given that the sought concept is conveniently (or exactly) represented by image $x_i$ (as it was by $q$ in the original problem).

In order to obtain a convenient approximation of the above probability, we concentrate on the particular selection of the user giving rise to $b$. In particular, we decompose the bipartition selected by the user into a series of unitary selections, as if the user had been forced to select only one image as relevant. In more detail, given a bipartition $b = (\{y_1, \ldots, y_p\}, N)$ of the elements in $S$, we consider instead the set of $p$ unitary selections $b_j = (\{y_j\}, N)$, which are particular cases of bipartitions of the elements in the sets $\{y_j\} \cup N$ for $j = 1, \ldots, p$. Our purpose is to identify the probability of choosing $b$ from among all possible bipartitions of $S$ with the joint probability of choosing all $b_j$, where each positive image $y_j$ is selected when the user is seeing only the subset $\{y_j\} \cup N$. Note that this can be a very crude approximation, but our assumption is that it will suffice for ranking purposes.
Assuming that a pairwise measure of the probability of relevance of any image with regard to $x_i$ exists, then the conditional probability of the user selecting precisely $b_j$ (that is, choosing $y_j$ among the $n + 1$ images in $\{y_j\} \cup N$), given that the sought image is (represented by) $x_i$, can be assumed proportional to the probability of relevance of $y_j$ and inversely proportional to the probability of relevance of the images being shown together with $y_j$. Consequently, we can write

$$p(b_j \mid x_i) = \frac{p(y_j \mid x_i)}{\sum_{x \in \{y_j\} \cup N} p(x \mid x_i)}$$

In other words, the conditional probability of selecting bj given xi , p(bj |xi ), can be characterized as the probability of relevance of yj with regard to xi , p(yj |xi ) and a rescaling term that will be different for each bj .
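As an illustration only, the unitary selection probability above can be sketched in a few lines of Python. The function names and the toy similarity values are hypothetical and not taken from the paper; the sketch assumes strictly positive pairwise similarities. Multiplying the unitary probabilities over all positive images, under the independence assumption that the section introduces next, gives a score that can be used for ranking.

```python
from math import prod


def unitary_selection_probability(sim_pos, sims_neg):
    """p(b_j | x_i): similarity of y_j to x_i divided by the total
    similarity to x_i of the images in {y_j} united with N."""
    return sim_pos / (sim_pos + sum(sims_neg))


def relevance_score(sims_pos, sims_neg):
    """Product of the unitary selection probabilities over all positives."""
    return prod(unitary_selection_probability(s, sims_neg) for s in sims_pos)


# Hypothetical similarities of the marked images to two candidates x_i.
candidates = {
    "a": {"pos": [0.8, 0.4], "neg": [0.1, 0.1]},
    "b": {"pos": [0.5, 0.5], "neg": [0.6, 0.4]},
}
ranking = sorted(
    candidates,
    key=lambda name: relevance_score(candidates[name]["pos"], candidates[name]["neg"]),
    reverse=True,
)
```

Candidate "a" is close to the positives and far from the negatives, so it is ranked ahead of "b". For long lists of positives, summing logarithms instead of multiplying probabilities would be a reasonable way to avoid numerical underflow.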


M. Arevalillo-Herráez et al. / Pattern Recognition 43 (2010) 619–629

To conclude, if we assume mutual independence among the above unitary selections $b_j$ for $j = 1, \ldots, p$, a reasonable approximation of their joint probability is given by

$$p(b_1, \ldots, b_p \mid x_i) \approx \prod_{j=1}^{p} p(b_j \mid x_i) = \prod_{j=1}^{p} \frac{p(y_j \mid x_i)}{\sum_{x \in \{y_j\} \cup N} p(x \mid x_i)}$$

Now, notice that for any image $x$, $p(x \mid x_i)$ is the probability of $x$ being (semantically) relevant when the concept that is being sought is $x_i$. This probability can be reliably approximated by any available probability of subjective similarity between the two images, $p_M(\text{similar} \mid x, x_i)$, based on any image similarity model $M$. Consequently, the final ranking for images in database $X$ at each iteration is computed only in terms of subjective pairwise similarities with regard to images in the already seen set $S = P \cup N$, and is defined as

$$R(x_i, P, N) \propto \prod_{y \in P} \frac{p_M(\text{similar} \mid y, x_i)}{\sum_{x \in \{y\} \cup N} p_M(\text{similar} \mid x, x_i)} \qquad (1)$$

3.2. Probability of relevance from user data

The above outlined approach computes the conditional probability of relevance (given a particular selection) from pairwise subjective similarities given by $p_M(\text{similar} \mid x_i, x_j)$, where images $x$ may have multiple representations, $x^1, \ldots, x^L$, and each of these representations has an associated distance measure $d_k(x_i^k, x_j^k)$, $k = 1, \ldots, L$.

In principle, either a trivial or a more elaborate combination of the measures defined in each of the multiple representations could be used, after proper normalization, as an estimate of $p_M(\text{similar} \mid x_i, x_j)$. Pre-processing operations such as Gaussian or linear feature normalizations [29,30], the assignment of different weights to each set of descriptors [29,31,32], and the use of the product and sum rules are common in this context. Nevertheless, a much more appropriate option is to incorporate subjectivity into the model by using previously gathered user preferences. A recent approach that combines plain similarity measures adaptively, taking user preferences into account [3], has been adopted in this work in order to obtain an appropriate estimate of $p_M(\text{similar} \mid x_i, x_j)$.

The method in [3] starts by composing a distance vector containing all available distances, $d(\cdot, \cdot) \triangleq (d_1, \ldots, d_L)$, and relating the probability of subjective similarity between two images, $x_i$ and $x_j$, to the fact that their composite distance is $d(x_i, x_j)$. Then,

$$p_M(\text{similar} \mid x_i, x_j) \approx p(\text{similar} \mid d(x_i, x_j)) = p(\text{similar} \mid d)$$

Using Bayes' theorem, this new probability, which depends on distance values only and not on particular images, can now be written as

$$p(\text{similar} \mid d) = \frac{p(d \mid \text{similar})\, P(\text{similar})}{p(d)}$$

where $P(\text{similar})$ is the prior probability of any two images being considered similar by the user, $p(d \mid \text{similar})$ is the conditional probability of obtaining particular distance values on pairs of similar images, and $p(d)$ is the unconditional probability of obtaining $d$ as the value of the distance vector for two randomly chosen images. In this work, as in most closely related prior work, the similarity measures defined on the different subsets of features are considered both conditionally and unconditionally independent. This is merely an approximation for particular pairs of closely related similarities. By using the independence assumption, the above expression for the composite probability may be rewritten as

$$p(\text{similar} \mid d) = P(\text{similar}) \prod_{k=1}^{L} \frac{p(d_k \mid \text{similar})}{p(d_k)} \qquad (2)$$

All denominators in the previous equation can be dropped by applying a convenient mapping (equalization) to each $d_k$, defined as

$$\delta_k = E_k(d_k) = \int_0^{d_k} p(z)\, dz$$

in such a way that, by changing the corresponding variables in Eq. (2), we can write

$$p(\text{similar} \mid d) \propto \prod_{k=1}^{L} p(\delta_k \mid \text{similar}) \qquad (3)$$

where the proportionality constant has been dropped because it does not depend on $d$. The right-hand side of Eq. (3) can now be seen as a mapping from distance values, $d$, to similarity scores proportional to the probability of subjective similarity. This mapping is

$$S(d) \triangleq \prod_{k=1}^{L} p(\delta_k \mid \text{similar}) = \prod_{k=1}^{L} p(E_k(d_k) \mid \text{similar}) \qquad (4)$$

and can be conveniently represented and managed by estimating, in a parametric or semiparametric way [3], each of the probability distribution functions $p(\delta_k \mid \text{similar})$ and the corresponding equalization mappings, $E_k(\cdot)$. In this particular work, as in the original one, look-up tables for each mapping $E_k$ have been constructed from a given training set of pairs of images. Moreover, the probability density functions have been estimated on equalized distances, $\delta_k$, using pairs of similar images through a kernel method with Gaussian windows [33]. By substituting the probabilities of similarity in Eq. (1) by their corresponding similarity scores obtained through the score function, $S$, the final ranking for each image $x_i$ is given as

$$R(x_i, P, N) \propto \prod_{y \in P} \frac{S(d(y, x_i))}{\sum_{x \in \{y\} \cup N} S(d(x, x_i))}$$

Note that the above substitution can be safely done because, in Eq. (1), the multiplicative constants neglected in the definition of the involved scores cancel out.

4. Experiments and results

4.1. Databases used

A number of comparative experiments have been carried out in order to assess the relative merits of the proposed approach in its different aspects and in comparison to other competing or alternative approaches. For this purpose, we have used objective measures of performance on several different retrieval experiments using three different databases which are representative of a range of different situations. These databases are:

A small repository which was intentionally assembled for testing, using some images obtained from the Web and others taken by the authors. The 1508 pictures it contains have been manually classified as belonging to 28 different themes such as flowers, horses, paintings, skies, textures, ceramic tiles, buildings, clouds, trees, etc. This repository is a subset of the one used in the evaluation sections of [25,11]. In this case, the descriptors include a 10 × 3 HS color histogram and texture information in the form of two granulometric cumulative distribution functions [34].

A subset composed of 5476 images extracted from a commercial collection called Art Explosion, distributed by the company Nova Development (http://www.novadevelopment.com). These have been carefully classified by experts into 62 categories so that images under the same category represent a similar semantic

Table 1
The three databases used in the present work along with their main characteristics.

| Name | Size | Categories | Descriptors/similarities | Dimensionalities |
|---|---|---|---|---|
| Web | 1508 | 28 | 3 | (30, 10, 10) |
| Art | 5476 | 62 | 7 | (30, 12, 7, 3, 6, 10, 10) |
| Corel | 30 000 | 71 | 4 | (9, 16, 32, 32) |

concept. The 10 × 3 HS color histogram and six texture descriptors have been computed for each picture in this database, namely Gabor convolution energies [35], Gray level co-occurrence matrix [36], Gaussian random Markov fields [37], the coefficients of fitting the granulometry distribution with a B-spline basis [38] and two versions of the spatial size distribution [39], using horizontal and vertical segments, respectively, as structuring elements.

The subset of the Corel database used in [30]. This is composed of 30 000 images which were manually classified into 71 categories. The descriptors used are those provided in the KDD-UCI repository [40], namely: a nine-dimensional vector with the mean, standard deviation and skewness for each of hue, saturation and value in the HSV color space; a 16-dimensional vector with the co-occurrence in horizontal, vertical and the two diagonal directions; a 32-dimensional vector with the 4 × 2 color HS histograms for each of the resulting subimages after one horizontal and one vertical split; and a 32-dimensional vector with the HS histogram for the entire image. Although the chosen feature sets are not well suited to the retrieval problem, as mentioned in [19], the size of this repository and the large diversity of its contents make it a good candidate for evaluation and benchmarking purposes.

The (dis)similarity between pairs of images in each considered representation space (descriptor) is computed as the Euclidean distance, except for HS histograms, for which histogram intersection [41] has been used. A summary of the databases used in this work and their main characteristics is given in Table 1.

4.2. Experimental setting

In order to assess the methods as objectively as possible, an experimental setting similar to that used in [19,42] has been adopted. The available categories have been used as concepts, and user judgments about similarity/relevance have been simulated by considering that all pictures under the same category are similar, and that all images under different categories represent different concepts. In other words, the available information about classes is used as a ground truth for considering images as relevant or not, and consequently to measure the performance of each method. A fixed number of images has been selected at random from each class and submitted as targets (initial relevant image) to the system, together with an initial set $S_0$ of images retrieved from the database $X$ using a random ordering (the same images and the same initial order have been used for all algorithms). At each iteration, the interactive role of the user is simulated by taking into account the true class labels of the images shown. That is, shown images from the same class are marked as relevant and the remaining ones are marked as non-relevant. This selection accumulates the elements in the sets $P$ and $N$, which are submitted to the system for processing. As we are not dealing with real users, the number of images shown at each iteration has been set to 50 and the number of relevance feedback iterations to 10 for all reported experiments. Lowering the first of these values did not show significantly different behavior in the experiments considered.
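The simulated user described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: `simulate_search`, its parameters and the `rank_fn(positives, negatives)` interface (which must return all image indices, best first) are hypothetical names, and the only memory policy modeled is that images marked as non-relevant are not shown again.

```python
import random


def simulate_search(labels, target, rank_fn, shown_per_iter=50, iterations=10, seed=0):
    """Simulate one search driven by ground-truth class labels.

    Returns, for each iteration, the fraction of shown images that
    belong to the same class as the target image.
    """
    rng = random.Random(seed)
    concept = labels[target]
    order = list(range(len(labels)))
    rng.shuffle(order)  # the initial set comes from a random ordering
    positives, negatives = {target}, set()
    fractions = []
    for _ in range(iterations):
        # Show the best-ranked images, skipping those already rejected.
        shown = [i for i in order if i not in negatives][:shown_per_iter]
        relevant = [i for i in shown if labels[i] == concept]
        positives.update(relevant)  # same class: marked as relevant
        negatives.update(i for i in shown if labels[i] != concept)
        fractions.append(len(relevant) / len(shown))
        order = rank_fn(sorted(positives), sorted(negatives))
    return fractions


# Toy usage: 5 images of class 0, 15 of class 1, and an idealized ranker
# (hypothetical) that always places the class-0 images first.
toy_labels = [0] * 5 + [1] * 15
toy_ranker = lambda positives, negatives: list(range(20))
toy_fractions = simulate_search(toy_labels, 0, toy_ranker, shown_per_iter=4, iterations=3)
```

In a real experiment, `rank_fn` would be replaced by the relevance feedback method under evaluation, and the returned fractions would be averaged over many targets.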

To obtain more reliable results, a relatively large number of different searches have been performed and the corresponding results have been averaged. Twenty targets per class (60 for the smallest database) have been selected, except for the classes with a cardinality below this number (in this case, the number of targets chosen from the class has been limited to the number of pictures in that class). This has led to a total of 1022, 1203 and 1420 individual retrieval processes, respectively, for each database.

The performance of the system has been measured as averaged precision and recall values. Once a cutoff value (number of retrieved images) is set, precision is defined as the fraction of the retrieved images that are relevant, while recall is the ratio of relevant images retrieved to the total number of relevant images in the database. As in many related previous works [30,42,18], the averaged precision on the retrieved images (50) at each iteration has been used to compare the different algorithms. Apart from this, a smaller cutoff value of 20 is also considered to evaluate the behavior of the algorithms at the first positions in the rankings. Moreover, in order to evaluate the performance of the technique at producing the entire ranking, full precision vs. recall curves considering all possible cutoff values for each particular iteration have been computed.

4.3. Comparative analysis

In this subsection, a comparative analysis of the results obtained with the proposed and other existing representative CBIR strategies is carried out. In particular, we consider (a) a classical feature weighting and query movement approach, implemented as in [9,44]; (b) a classification-based engine that uses principles similar to those used in the PicSOM system [10]; (c) a fuzzy strategy implemented as described in [23]; (d) a biased SVM approach using the models in [12,17]; and (e) the nearest neighbor approach presented in [30]. We will refer to these algorithms as QPM (query point movement), SOM, fuzzy, SVM and NN, respectively. The SOM-based approach uses 64 × 64 SOMs for the first repository, 32 × 32 SOMs for the second and 16 × 16 SOMs for the third. Apart from minor details, all the methods, including any parameter settings, have been implemented as in the corresponding references. SVMs used Gaussian kernels and required specific tuning for each database.

All the algorithms implemented have been adapted to work with the same feature sets to allow for a fair comparison. Also, all relevant images from previous iterations have been plugged into the retrieved results for the next iteration, and negative selections have been banned to prevent them from appearing again in later iterations of the same search. As a consequence, all methods obtain monotonically increasing results with regard to the number of iterations.

The use of the probabilistic distance normalization (PN) presented in Section 3.2 [3] optimizes the proposed system in combining feature spaces, but it requires a training stage and thus the use of some extra information which is not used in the other methods. This could make a direct comparison between the methods unfair. For this reason, another distance combination method has also been used. In particular, the proposed approach has also been tested by substituting the training phase in the PN method (proposed + PN) by the use of a simple Gaussian normalization (GN) of distances as in the QPM approach [44] (proposed + GN). Besides, a further experiment has been done integrating the PN method into the NN approach


Table 2
Precision obtained at a cutoff value of 20 for all approaches considered.

| Database | Method | Iter. 1 | Iter. 2 | Iter. 3 | Iter. 4 | Iter. 5 | Iter. 6 | Iter. 7 | Iter. 8 | Iter. 9 | Iter. 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Web | Proposed + PN | 0.530 | 0.812 | 0.891 | 0.928 | 0.948 | 0.962 | 0.969 | 0.973 | 0.975 | 0.975 |
| | Proposed + GN | 0.490 | 0.790 | 0.886 | 0.923 | 0.944 | 0.957 | 0.963 | 0.968 | 0.970 | 0.971 |
| | NN + PN | 0.509 | 0.776 | 0.854 | 0.886 | 0.909 | 0.927 | 0.939 | 0.949 | 0.956 | 0.962 |
| | NN | 0.527 | 0.808 | 0.872 | 0.909 | 0.927 | 0.942 | 0.953 | 0.961 | 0.964 | 0.968 |
| | SVM | 0.476 | 0.734 | 0.842 | 0.885 | 0.905 | 0.916 | 0.924 | 0.930 | 0.934 | 0.935 |
| | QPM | 0.480 | 0.737 | 0.827 | 0.870 | 0.896 | 0.914 | 0.927 | 0.941 | 0.950 | 0.957 |
| | Fuzzy | 0.391 | 0.643 | 0.801 | 0.891 | 0.925 | 0.948 | 0.960 | 0.966 | 0.969 | 0.972 |
| | SOM | 0.452 | 0.743 | 0.836 | 0.882 | 0.911 | 0.932 | 0.948 | 0.958 | 0.965 | 0.969 |
| Art | Proposed + PN | 0.363 | 0.617 | 0.741 | 0.808 | 0.851 | 0.875 | 0.893 | 0.906 | 0.916 | 0.925 |
| | Proposed + GN | 0.311 | 0.587 | 0.738 | 0.813 | 0.858 | 0.886 | 0.906 | 0.919 | 0.928 | 0.935 |
| | NN + PN | 0.347 | 0.598 | 0.711 | 0.763 | 0.790 | 0.808 | 0.822 | 0.832 | 0.841 | 0.849 |
| | NN | 0.253 | 0.451 | 0.551 | 0.626 | 0.671 | 0.711 | 0.743 | 0.771 | 0.794 | 0.814 |
| | SVM | 0.162 | 0.307 | 0.576 | 0.759 | 0.843 | 0.887 | 0.909 | 0.919 | 0.925 | 0.929 |
| | QPM | 0.358 | 0.591 | 0.691 | 0.752 | 0.795 | 0.827 | 0.850 | 0.868 | 0.882 | 0.894 |
| | Fuzzy | 0.192 | 0.429 | 0.612 | 0.722 | 0.779 | 0.819 | 0.847 | 0.867 | 0.883 | 0.894 |
| | SOM | 0.281 | 0.524 | 0.652 | 0.719 | 0.763 | 0.796 | 0.820 | 0.841 | 0.859 | 0.873 |
| Corel | Proposed + PN | 0.226 | 0.445 | 0.596 | 0.697 | 0.760 | 0.803 | 0.835 | 0.857 | 0.874 | 0.887 |
| | Proposed + GN | 0.206 | 0.400 | 0.557 | 0.666 | 0.739 | 0.787 | 0.823 | 0.850 | 0.870 | 0.887 |
| | NN + PN | 0.234 | 0.436 | 0.572 | 0.661 | 0.722 | 0.765 | 0.797 | 0.824 | 0.845 | 0.864 |
| | NN | 0.250 | 0.435 | 0.530 | 0.609 | 0.663 | 0.708 | 0.744 | 0.772 | 0.795 | 0.817 |
| | SVM | 0.119 | 0.290 | 0.464 | 0.594 | 0.683 | 0.741 | 0.783 | 0.813 | 0.836 | 0.856 |
| | QPM | 0.221 | 0.391 | 0.505 | 0.585 | 0.643 | 0.688 | 0.724 | 0.754 | 0.779 | 0.801 |
| | Fuzzy | 0.112 | 0.192 | 0.311 | 0.454 | 0.566 | 0.658 | 0.726 | 0.773 | 0.811 | 0.840 |
| | SOM | 0.196 | 0.369 | 0.480 | 0.557 | 0.615 | 0.663 | 0.703 | 0.734 | 0.762 | 0.785 |

Table 3
Precision obtained at a cutoff value of 50 for all approaches considered.

| Database | Method | Iter. 1 | Iter. 2 | Iter. 3 | Iter. 4 | Iter. 5 | Iter. 6 | Iter. 7 | Iter. 8 | Iter. 9 | Iter. 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Web | Proposed + PN | 0.387 | 0.547 | 0.621 | 0.664 | 0.694 | 0.714 | 0.728 | 0.738 | 0.745 | 0.752 |
| | Proposed + GN | 0.366 | 0.526 | 0.612 | 0.661 | 0.688 | 0.707 | 0.722 | 0.732 | 0.740 | 0.747 |
| | NN + PN | 0.356 | 0.503 | 0.571 | 0.610 | 0.634 | 0.652 | 0.668 | 0.680 | 0.691 | 0.699 |
| | NN | 0.377 | 0.502 | 0.574 | 0.616 | 0.645 | 0.666 | 0.684 | 0.697 | 0.708 | 0.717 |
| | SVM | 0.337 | 0.492 | 0.574 | 0.622 | 0.650 | 0.669 | 0.684 | 0.695 | 0.702 | 0.708 |
| | QPM | 0.338 | 0.443 | 0.500 | 0.534 | 0.566 | 0.589 | 0.613 | 0.630 | 0.648 | 0.662 |
| | Fuzzy | 0.301 | 0.432 | 0.549 | 0.604 | 0.639 | 0.664 | 0.685 | 0.703 | 0.717 | 0.728 |
| | SOM | 0.327 | 0.455 | 0.521 | 0.564 | 0.597 | 0.622 | 0.645 | 0.663 | 0.678 | 0.692 |
| Art | Proposed + PN | 0.259 | 0.420 | 0.517 | 0.576 | 0.616 | 0.646 | 0.668 | 0.688 | 0.703 | 0.716 |
| | Proposed + GN | 0.227 | 0.391 | 0.502 | 0.574 | 0.623 | 0.657 | 0.681 | 0.701 | 0.719 | 0.733 |
| | NN + PN | 0.246 | 0.390 | 0.475 | 0.528 | 0.562 | 0.588 | 0.609 | 0.624 | 0.635 | 0.644 |
| | NN | 0.177 | 0.267 | 0.339 | 0.389 | 0.430 | 0.463 | 0.491 | 0.515 | 0.537 | 0.557 |
| | SVM | 0.108 | 0.223 | 0.420 | 0.547 | 0.620 | 0.670 | 0.701 | 0.722 | 0.738 | 0.750 |
| | QPM | 0.249 | 0.357 | 0.419 | 0.457 | 0.494 | 0.521 | 0.548 | 0.570 | 0.590 | 0.608 |
| | Fuzzy | 0.135 | 0.285 | 0.409 | 0.478 | 0.525 | 0.562 | 0.590 | 0.613 | 0.632 | 0.649 |
| | SOM | 0.201 | 0.335 | 0.407 | 0.459 | 0.495 | 0.526 | 0.551 | 0.574 | 0.594 | 0.611 |
| Corel | Proposed + PN | 0.163 | 0.291 | 0.388 | 0.464 | 0.525 | 0.574 | 0.613 | 0.646 | 0.672 | 0.694 |
| | Proposed + GN | 0.153 | 0.267 | 0.361 | 0.435 | 0.497 | 0.547 | 0.592 | 0.627 | 0.658 | 0.685 |
| | NN + PN | 0.169 | 0.275 | 0.359 | 0.425 | 0.478 | 0.522 | 0.561 | 0.593 | 0.621 | 0.646 |
| | NN | 0.177 | 0.268 | 0.329 | 0.379 | 0.419 | 0.456 | 0.487 | 0.516 | 0.539 | 0.563 |
| | SVM | 0.072 | 0.195 | 0.304 | 0.394 | 0.463 | 0.517 | 0.563 | 0.599 | 0.631 | 0.658 |
| | QPM | 0.152 | 0.230 | 0.288 | 0.331 | 0.372 | 0.403 | 0.434 | 0.461 | 0.486 | 0.508 |
| | Fuzzy | 0.068 | 0.108 | 0.204 | 0.283 | 0.357 | 0.421 | 0.469 | 0.509 | 0.543 | 0.571 |
| | SOM | 0.135 | 0.230 | 0.287 | 0.334 | 0.373 | 0.409 | 0.439 | 0.466 | 0.491 | 0.514 |

(NN + PN). These tests aim to evaluate the individual contributions to the improvements achieved by the approach presented in this work and by the PN method described in [3]. In the case of using the PN method, the training of the equalizing functions $E_k(\cdot)$ in Eq. (4) has been done offline by gathering judgments from real users on a neutral set of pairs of images (not contained in any of the databases). In particular, each of a group of 15 users has performed a total of 300 binary judgments, resulting in a total of 4500 evaluations. These have been used to estimate the probability distributions which are required by the approach. Note that the same training data have been used to build all functions $E_k(\cdot)$ used in the experiments.
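The offline training stage can be illustrated with a rough sketch. Several choices below are assumptions rather than details given in the text: each equalizing function is approximated by the empirical cumulative distribution of one distance measure over arbitrary training pairs (a stand-in for the look-up tables), the densities on equalized distances are estimated from pairs judged similar with Gaussian windows of a fixed, hand-picked bandwidth, and all function names and toy values are hypothetical.

```python
import bisect
import math


def make_equalizer(training_distances):
    """Empirical stand-in for E_k: fraction of training distances <= d."""
    ordered = sorted(training_distances)
    count = len(ordered)
    return lambda d: bisect.bisect_right(ordered, d) / count


def make_gaussian_kde(samples, bandwidth):
    """Kernel density estimate with Gaussian windows (bandwidth is assumed)."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

    return density


def make_score(equalizers, densities):
    """Score in the spirit of Eq. (4): product over the L descriptors of the
    density of the equalized distance given that the pair is similar."""

    def score(distance_vector):
        return math.prod(
            density(equalize(d_k))
            for equalize, density, d_k in zip(equalizers, densities, distance_vector)
        )

    return score


# Toy training data for L = 2 descriptors (values are made up).
all_pair_distances = [[0.1, 0.2, 0.4, 0.5, 0.9], [1.0, 2.0, 3.0, 4.0, 5.0]]
similar_pair_distances = [[0.1, 0.2], [1.0, 2.0]]

equalizers = [make_equalizer(d) for d in all_pair_distances]
densities = [
    make_gaussian_kde([equalize(d) for d in similar], bandwidth=0.1)
    for equalize, similar in zip(equalizers, similar_pair_distances)
]
score = make_score(equalizers, densities)
```

With these toy values, a pair with small distances in both descriptors, such as `(0.1, 1.0)`, receives a higher score than a pair with large distances, such as `(0.9, 5.0)`. In practice the bandwidth would need to be chosen from the training data rather than fixed by hand.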

4.4. Results and discussion

The averaged precision values at cutoff values of 20 and 50 for all the algorithms considered are presented in Tables 2 and 3, respectively. The


[Fig. 1 plots: one row per database (Web, Art, Corel); x-axis: iteration (1 to 10); y-axis: precision at a cutoff value of 20 (left) and 50 (right); curves: Proposed+PN, NN, SVM, Fuzzy, QPM, SOM.]

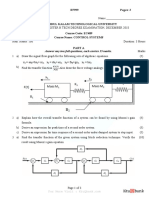

Fig. 1. On the left, averaged retrieval precision at a cutoff value 20 is compared to that of other algorithms in the three databases considered. On the right, the same information for a cutoff value of 50.

same results are also graphically shown in Fig. 1. As can be seen from the results for a cutoff value of 20 in Fig. 1 (left), all methods give similar results for the smallest database, but differences between the proposed method and the others become more significant as the size of the database increases. This effect becomes somewhat clearer for a cutoff value of 50, on the right side of the figure. In this case, differences increase and the superiority of the proposed method becomes more evident. The NN method is the second or third best for the Web and Corel databases but ranks as the worst for Art, perhaps because of the type of images in this database (given the descriptors adopted in this work). This behavior can be observed for both cutoff values considered. The SVM approach, on the other hand, shows remarkable behavior in Corel and especially in Art, where the method is the best at the last iterations. Another trend worth mentioning is the rate of increase of the Fuzzy method, which starts as the worst and consistently ranks amongst the best ones at the end of the iterative process.

While no other algorithm performs consistently better than the rest in all the repositories tested, the proposed probabilistic algorithm clearly shows better retrieval results in all databases, with the only exception of SVM for the Art database in the last iterations. Besides, differences in performance become greater as the repository size becomes larger, evidencing the potential of the method in realistic CBIR systems composed of tens of thousands or even millions of images. In particular, in the largest repository and for k = 50, differences in precision close to 25% with respect to the next best method can be observed around the third iteration. Smaller differences are obtained if precision is measured at k = 20, still with significant improvements over the NN approach ranging from 8% to 15% at the same iterations.

The precision vs. recall graphs shown in Fig. 2 aim to evaluate the entire ranking produced, and show that the behavior exhibited by the methods can be generalized regardless of the cutoff value considered. In particular, at the third iteration, the proposed method clearly dominates all other methods over the whole range of the curve. The same happens at iteration 7, with the only exception of SVM in the Art database. This good behavior can be explained in part because a


[Fig. 2 plots: one row per database (Web, Art, Corel); columns: iteration 3 (left) and iteration 7 (right); x-axis: recall; y-axis: precision; curves: Proposed+PN, NN, SVM, Fuzzy, QPM, SOM.]

Fig. 2. Compared precision vs. recall graphs for iterations 3 (left) and 7 (right), in the three databases considered.

very good and accurate labelling is available for this database. This fact, together with the large amount of feedback available at the last iterations, turns the learning problem into a standard and well-behaved one. So it is not surprising that, with no noise in the labels and with enough training data, an appropriate SVM model obtains the best results.

To evaluate the extent of the influence of incorporating the PN method presented in [3], Figs. 3 and 4 compare the results obtained with the proposed approach and when replacing this combination technique by a standard GN approach (subtracting the mean and dividing by 3 times the standard deviation). Besides, the results obtained with the plain NN approach and when this is used in combination with the PN method have also been plotted (note that other methods have their own built-in feature combination strategies and cannot benefit from this distance combination procedure). Although the results show that the proposed method produces slightly better results when it is combined with the PN method, a higher retrieval precision than with the other methods tested is still obtained when this is replaced by a simpler GN. This suggests that the improvements achieved are mostly caused by the relevance feedback model presented in this paper, and not by the use of a more complex combination strategy.

As a general trend, the simple rule arrived at in this paper, together with the offline information about user preferences implicitly contained in the similarity combination rule used as a subjective similarity model, has allowed us to obtain a very competitive relevance feedback model that is able to consistently improve on the results of recently proposed approaches with very similar foundations and assumptions. It is important to point out that although the performance evaluation carried out in this work is totally objective and fair, it may not be representative enough of a more realistic situation in which real


[Fig. 3 plots: one row per database (Web, Art, Corel); x-axis: iteration (1 to 10); y-axis: precision at a cutoff value of 20 (left) and 50 (right); curves: Proposed+PN, Proposed+GN, NN+PN, NN.]

Fig. 3. Evaluation of the effect of using the PN approach in [3].

users are driving the relevance feedback mechanism of the methods. In particular, and as commented above, some settings should be adapted for a more realistic implementation, and other issues such as user fatigue and concept drift should be addressed. This is a limitation present in most of the recent proposals, and it is due to the great difficulty of establishing an appropriate benchmark for relevance feedback schemes [42,45].

5. Concluding remarks

In this paper, a novel relevance feedback mechanism for content-based image retrieval that integrates seamlessly with similarity fusion methods adapted to user preferences is proposed. The method has been derived from basic assumptions using a probabilistic approach, but it yields a straightforward rule that can be easily implemented. According to the particular empirical setting and the corresponding objective measures of performance obtained, the proposed method consistently outperforms other recently presented approaches that are based on very similar assumptions. This behavior is more apparent at the first relevance feedback iterations, when a limited amount of training data is available.

Further work is being carried out in several different directions. On the one hand, more exhaustive experiments using more and bigger databases will lead to a more careful evaluation of the relative merits of each of the components of the proposed approach, e.g. the contribution of the relevance feedback for different subjective similarity models and not only the one considered in this work. On the other hand, carefully designed experiments with real users are being developed. These experiments would represent the real context in which the system is meant to work. Another improvement under study is to adapt the probability distributions used to compute Eq. (4) to each particular user by considering specific training sets. In this case, a major concern would be obtaining sufficient data to construct the probability distributions. A challenging but more feasible approach would be to start with


[Fig. 4 plots: one row per database (Web, Art, Corel); columns: iteration 3 (left) and iteration 7 (right); x-axis: recall; y-axis: precision; curves: Proposed+PN, Proposed+GN, NN+PN, NN.]

Fig. 4. Evaluation of the effect of using the PN approach in [3]. Compared precision vs. recall graphs for iterations 3 (left) and 7 (right), in the three databases considered.

generic functions (without any prior knowledge) and update them with data captured from the user interaction.

Acknowledgments

We would like to thank Dr. G. Giacinto for his help on the evaluation of this paper, facilitating the manually performed classification of the 30 000 images repository. We would also like to thank Dr. M. Ortega for providing the thumbnails of these images. Without this repository, such an extensive evaluation would have been much more difficult. This work has been partially funded by FEDER, the Valencian Regional Government, and Spanish MEC through projects GV2008-032, TIN2006-10134, TIN2006-12890, TIN2009-14205-C04-03, DPI2006-15542-C04-04 and Consolider Ingenio 2010 CSD2007-00018.

References

[1] M. Flickner, H. Sawhney, W. Niblack, J. Ashley, Q. Huang, B. Dom, M. Gorkani, J. Hafner, D. Lee, D. Petkovic, D. Steele, P. Yanker, Query by image and video content: the QBIC system, Computer 28 (9) (1995) 23–32.
[2] S.M.M. Tahaghoghi, J.A. Thom, H.E. Williams, Multiple example queries in content-based image retrieval, in: SPIRE 2002: Proceedings of the 9th International Symposium on String Processing and Information Retrieval, Springer, London, UK, 2002, pp. 227–240.
[3] M. Arevalillo-Herráez, J. Domingo, F.J. Ferri, Combining similarity measures in content-based image retrieval, Pattern Recognition Letters 29 (16) (2008) 2174–2181.
[4] X. Zhou, T. Huang, Relevance feedback for image retrieval: a comprehensive review, Multimedia Systems 8 (6) (2003) 536–544.
[5] R. Datta, D. Joshi, J. Li, J.Z. Wang, Image retrieval: ideas, influences and trends of the new age, CSE Technical Report 06-009, Penn State University, 2006.
[6] G. Salton, M.J. McGill, Introduction to Modern Information Retrieval, McGraw-Hill, New York, 1983.



[7] Y. Ishikawa, R. Subramanya, C. Faloutsos, Mindreader: querying databases through multiple examples, in: Proceedings of the 24th International Conference on Very Large Data Bases, VLDB, New York, USA, 1998, pp. 433-438.

[8] Y. Rui, S. Huang, M. Ortega, S. Mehrotra, Relevance feedback: a power tool for interactive content-based image retrieval, IEEE Transactions on Circuits and Video Technology 8 (5) (1998) 644-655.

[9] G. Ciocca, R. Schettini, A relevance feedback mechanism for content-based image retrieval, Information Processing and Management 35 (1) (1999) 605-632.

[10] J. Laaksonen, M. Koskela, E. Oja, PicSOM: self-organizing image retrieval with MPEG-7 content descriptors, IEEE Transactions on Neural Networks 13 (4) (2002) 841-853.

[11] T. León, P. Zuccarello, G. Ayala, E. de Ves, J. Domingo, Applying logistic regression to relevance feedback in image retrieval systems, Pattern Recognition 40 (10) (2007) 2621-2632.

[12] S. Tong, E. Chang, Support vector machine active learning for image retrieval, in: ACM Multimedia Conference, ACM Press, Ottawa, Canada, 2001, pp. 107-118.

[13] X.S. Zhou, T.S. Huang, Small sample learning during multimedia retrieval using biasmap, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2001, pp. 11-17.

[14] S. Tong, E. Chang, Support vector machine active learning for image retrieval, in: Proceedings of the 9th ACM International Conference on Multimedia, ACM Press, New York, NY, USA, 2001, pp. 107-118.

[15] Y. Chen, X.S. Zhou, T.S. Huang, One-class SVM for learning in image retrieval, in: Proceedings of the IEEE International Conference on Image Processing, 2001, pp. 34-37.

[16] I. Gondra, R. Heisterkamp, J. Peng, Improving image retrieval performance by inter-query learning with one-class support vector machines, Neural Computing and Applications 13 (2) (2004) 130-139.

[17] C.-H. Hoi, C.-H. Chan, K. Huang, M.R. Lyu, I. King, Biased support vector machine for relevance feedback in image retrieval, in: Proceedings of International Joint Conference on Neural Networks (IJCNN2004), Budapest, Hungary, 2004, pp. 3189-3194.

[18] D. Tao, X. Tang, X. Li, X. Wu, Asymmetric bagging and random subspace for support vector machines-based relevance feedback in image retrieval, IEEE Transactions on Pattern Analysis and Machine Intelligence 28 (7) (2006) 1088-1099.

[19] G. Giacinto, A nearest-neighbor approach to relevance feedback in content based image retrieval, in: Proceedings of the 6th ACM International Conference on Image and Video Retrieval (CIVR'07), ACM Press, Amsterdam, The Netherlands, 2007, pp. 456-463.

[20] D. Tao, X. Tang, Nonparametric discriminant analysis in relevance feedback for content-based image retrieval, in: Proceedings of the 17th International Conference on Pattern Recognition (ICPR), vol. 2, 2004, pp. 1013-1016.

[21] Y. Chen, J. Wang, R. Krovetz, Clue: cluster-based retrieval of images by unsupervised learning, IEEE Transactions on Image Processing 14 (8) (2005) 1187-1201.

[22] E. Pekalska, R.P.W. Duin, Dissimilarity representations allow for building good classifiers, Pattern Recognition Letters 23 (8) (2002) 943-956.

[23] M. Arevalillo-Herráez, M. Zacarés, X. Benavent, E. de Ves, A relevance feedback CBIR algorithm based on fuzzy sets, Signal Processing: Image Communication 23 (7) (2008) 490-504.

[24] I. Cox, M. Miller, T. Minka, T.V. Papathomas, P. Yianilos, The Bayesian image retrieval system PicHunter: theory implementation and psychophysical experiments, IEEE Transactions on Image Processing 9 (1) (2000) 20-37.

[25] E. de Ves, J. Domingo, G. Ayala, P. Zuccarello, A novel Bayesian framework for relevance feedback in image content-based retrieval systems, Pattern Recognition 39 (2006) 1622-1632.

[26] N.D. Freitas, E. Brochu, K. Barnard, P. Duygulu, D. Forsyth, Bayesian models for massive multimedia databases: a new frontier, in: 7th Valencia International ISBA Meeting on Bayesian Statistics, 2002, pp. 1-12.

[27] N. Vasconcelos, A. Lippman, Learning from user feedback in image retrieval systems, Advances in Neural Information Processing Systems 12 (2000) 1009-1015.

[28] Z. Su, H. Zhang, S. Li, S. Ma, Relevance feedback in content-based image retrieval: Bayesian framework, feature subspaces and progressive learning, IEEE Transactions on Image Processing 12 (2003) 924-937.

[29] Q. Iqbal, J. Aggarwal, Combining structure, color and texture for image retrieval: a performance evaluation, in: 16th International Conference on Pattern Recognition (ICPR), Quebec City, QC, Canada, 2002, pp. 438-443.

[30] G. Giacinto, F. Roli, Nearest-prototype relevance feedback for content based image retrieval, in: ICPR '04: Proceedings of the Pattern Recognition, 17th International Conference on (ICPR'04), vol. 2, IEEE Computer Society, Washington, DC, USA, 2004, pp. 989-992.

[31] Q. Zhang, E. Izquierdo, Optimizing metrics combining low-level visual descriptors for image annotation and retrieval, in: Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 06), Toulouse, France, 2006, pp. 405-408.

[32] R. da S. Torres, A.X. Falcao, M.A. Goncalves, B. Zhang, W. Fan, E.A. Fox, P. Calado, A new framework to combine descriptors for content-based image retrieval, in: 14th Conference on Information and Knowledge Management, Bremen, Germany, 2005, pp. 335-336.

[33] R.O. Duda, P.E. Hart, D.G. Stork, Pattern Classification, second ed., Wiley-Interscience, New York, 2000.

[34] P. Soille, Morphological Image Analysis: Principles and Applications, Springer, Berlin, 2003.

[35] G. Smith, I. Burns, Measuring texture classification algorithms, Pattern Recognition Letters 18 (14) (1997) 1495-1501.

[36] R.W. Conners, M.M. Trivedi, C.A. Harlow, Segmentation of a high-resolution urban scene using texture operators, Computer Vision, Graphics, and Image Processing 25 (3) (1984) 273-310.

[37] R. Chellappa, S. Chatterjee, Classification of textures using Gaussian Markov random fields, IEEE Transactions on Acoustics Speech and Signal Processing 33 (1985) 959-963.

[38] Y. Chen, E. Dougherty, Gray-scale morphological granulometric texture classification, Optical Engineering 33 (8) (1994) 2713-2722.

[39] G. Ayala, J. Domingo, Spatial size distributions. Applications to shape and texture analysis, IEEE Transactions on Pattern Analysis and Machine Intelligence 23 (12) (2001) 1430-1442.

[40] S. Hettich, S.D. Bay, The UCI KDD archive, 1999, http://kdd.ics.uci.edu.

[41] M.J. Swain, D.H. Ballard, Color indexing, International Journal of Computer Vision 7 (1) (1991) 11-32.

[42] H. Muller, W. Muller, D.M. Squire, S. Marchand-Maillet, T. Pun, Performance evaluation in content-based image retrieval: overview and proposals, Pattern Recognition Letters 22 (5) (2001) 593-601.

[44] G. Ciocca, R. Schettini, Content-based similarity retrieval of trademarks using relevance feedback, Pattern Recognition 34 (8) (2001) 1639-1655.

[45] N. Gunther, G. Beretta, A benchmark for image retrieval using distributed systems over the internet: Birds-I, HPL Technical Report HPL-2000-162, HP Labs, Palo Alto, San Jose, USA, 2001.

About the Author: MIGUEL AREVALILLO-HERRÁEZ received the first degree in computing from the Technical University of Valencia, Spain, in 1993; the BSc in Computing from Liverpool John Moores University, UK, in 1994; and the PgCert in Teaching and Learning in Higher Education and the PhD degree in 1997, both also from Liverpool John Moores University, UK. He was a senior lecturer at this institution until 1999. He then left to work in private industry for one year and returned to academia in 2000. He was the program leader for the computing and business degrees at the Mediterranean University of Science and Technology until 2006. Since then, he has worked as a lecturer at the University of Valencia (Spain). His research now concentrates on content-based image retrieval systems.

About the Author: FRANCESC J. FERRI received the Licenciado degree in Physics (Electricity, Electronics and Computer Science) in 1987 and the PhD degree in Pattern Recognition in 1993, both from the Universitat de València. He has been with the Computer Science Department of the Universitat de València since 1986, first as a research fellow and, since 1988, as a teacher of Computer Science and Pattern Recognition. Since 2008 he has held a professorship in Computer Science and Artificial Intelligence. He has been involved in a number of scientific and technical projects on Computer Vision and Pattern Recognition. He spent a sabbatical in 1993 with J. Kittler at the Vision, Speech and Signal Processing Research group, University of Surrey, and a semester in 2005 with A.K. Jain at the Pattern Recognition and Image Processing Lab, Michigan State University, where he worked on Feature Selection, Dimensionality Reduction and Statistical Pattern Recognition Methodologies. Dr. Ferri is a member of the ACM, IEEE and the IAPR. He has authored or coauthored more than 100 technical papers in international conferences and well-established journals in his fields of interest. He has also served as a referee for several of these journals and major conferences, including Pattern Recognition Letters, PAMI and the Pattern Recognition journal, where he has also served as a guest editor. His current research interests include feature selection, nonparametric classification methods, machine learning, computational geometry, computer vision, image retrieval and motion understanding.

About the Author: JUAN DOMINGO was born in Valencia (Spain) in 1965. He was a scholarship holder at CMU S. Juan de Ribera (Burjasot, Spain) from 1983 to 1988. He graduated in Physics in 1988 at the University of Valencia, obtained his MSc in Information Technology in 1991 at the University of Edinburgh and his PhD in Computing in 1993 at the University of Valencia, where he is currently a senior lecturer at the Department of Informatics. His research interests include medical image analysis and content-based image retrieval.
