Professional Documents

Culture Documents

On Choosing Parameters in Retrospective-Approximation Algorithms For Stochastic Root Finding and Simulation Optimization

On Choosing Parameters in Retrospective-Approximation Algorithms For Stochastic Root Finding and Simulation Optimization

Uploaded by

Vijay KumarCopyright:

Available Formats

You might also like

- Misinterpretation of TasawwufDocument260 pagesMisinterpretation of TasawwufSpirituality Should Be LivedNo ratings yet

- Experiment 8: Fixed and Fluidized BedDocument6 pagesExperiment 8: Fixed and Fluidized BedTuğbaNo ratings yet

- Pull Up EbookDocument12 pagesPull Up EbookDanko Kovačević100% (2)

- An Enhanced Sample Average Approximation Method For Stochastic Optimization - 2016 - International Journal of Production Economics PDFDocument23 pagesAn Enhanced Sample Average Approximation Method For Stochastic Optimization - 2016 - International Journal of Production Economics PDFAntonio Carlos RodriguesNo ratings yet

- SPE 164824 An Adaptive Evolutionary Algorithm For History-MatchingDocument13 pagesSPE 164824 An Adaptive Evolutionary Algorithm For History-MatchingAditya FauzanNo ratings yet

- System Reliability Analysis With Small Failure ProDocument17 pagesSystem Reliability Analysis With Small Failure Probikramjit debNo ratings yet

- 6.mass BalancingDocument36 pages6.mass BalancingRaul Dionicio100% (1)

- 1 s2.0 S0377221714005992 MainDocument12 pages1 s2.0 S0377221714005992 MaincengizNo ratings yet

- Multiple Sequence Alignment Using Modified Dynamic Programming and Particle Swarm Optimization (Journal of The Chinese Institute of Engineers)Document15 pagesMultiple Sequence Alignment Using Modified Dynamic Programming and Particle Swarm Optimization (Journal of The Chinese Institute of Engineers)wsjuangNo ratings yet

- Development of A Variance Prioritized Multiresponse Robust Design Framework For Quality ImprovementDocument17 pagesDevelopment of A Variance Prioritized Multiresponse Robust Design Framework For Quality Improvementaqeel shoukatNo ratings yet

- SSRN Id22337955421Document12 pagesSSRN Id22337955421Naveed KhalidNo ratings yet

- 2016 - A Novel Evolutionary Algorithm Applied To Algebraic Modifications of The RANS Stress-Strain RelationshipDocument33 pages2016 - A Novel Evolutionary Algorithm Applied To Algebraic Modifications of The RANS Stress-Strain RelationshipEthemNo ratings yet

- Modal Assurance CriterionDocument8 pagesModal Assurance CriterionAndres CaroNo ratings yet

- 02 Efficient Characterization of The Random Eigenvalue Problem inDocument19 pages02 Efficient Characterization of The Random Eigenvalue Problem inJRNo ratings yet

- Mathematical Programming For Piecewise Linear Regression AnalysisDocument43 pagesMathematical Programming For Piecewise Linear Regression AnalysishongxinNo ratings yet

- Issues and Procedures in Adopting Structural Equation Modelling TDocument9 pagesIssues and Procedures in Adopting Structural Equation Modelling TShwetha GyNo ratings yet

- 3 Theoretical Framework For Comparing Several Stochastic Optimization ApproachesDocument2 pages3 Theoretical Framework For Comparing Several Stochastic Optimization ApproachessjoerdNo ratings yet

- Delac 01Document6 pagesDelac 01Sayantan ChakrabortyNo ratings yet

- Analysis, and Result InterpretationDocument6 pagesAnalysis, and Result InterpretationDainikMitraNo ratings yet

- Optimal Sample Size Selection For Torusity Estimation Using A PSO Based Neural NetworkDocument14 pagesOptimal Sample Size Selection For Torusity Estimation Using A PSO Based Neural NetworkSiwaporn KunnapapdeelertNo ratings yet

- Literature Review On Response Surface MethodologyDocument4 pagesLiterature Review On Response Surface Methodologyea6mkqw2No ratings yet

- Least SquareDocument25 pagesLeast SquareNetra Bahadur KatuwalNo ratings yet

- Mixture of Partial Least Squares Regression ModelsDocument13 pagesMixture of Partial Least Squares Regression ModelsalexeletricaNo ratings yet

- Multi-Reservoir Operation Rules Multi-SwDocument21 pagesMulti-Reservoir Operation Rules Multi-SwdjajadjajaNo ratings yet

- On Random Sampling Over JoinsDocument12 pagesOn Random Sampling Over JoinsStavrosNo ratings yet

- 2014 Article 6684Document11 pages2014 Article 6684Mohammed BenbrahimNo ratings yet

- Time Series RDocument14 pagesTime Series RVarunKatyalNo ratings yet

- Meta-Heuristics AlgorithmsDocument13 pagesMeta-Heuristics AlgorithmsANH NGUYỄN TRẦN THẾNo ratings yet

- J.D. Opdyke - Permutation Tests (And Sampling Without Replacement) Orders of Magnitude Faster Using SAS - ScribeDocument40 pagesJ.D. Opdyke - Permutation Tests (And Sampling Without Replacement) Orders of Magnitude Faster Using SAS - ScribejdopdykeNo ratings yet

- 1 s2.0 S0266352X16300143 MainDocument10 pages1 s2.0 S0266352X16300143 Maina4abhirawatNo ratings yet

- Research Paper Using Linear RegressionDocument5 pagesResearch Paper Using Linear Regressionh02rj3ek100% (1)

- Bootstrapping and Learning PDFA in Data Streams: JMLR: Workshop and Conference Proceedings 21: - , 2012 The 11th ICGIDocument15 pagesBootstrapping and Learning PDFA in Data Streams: JMLR: Workshop and Conference Proceedings 21: - , 2012 The 11th ICGIKevin MondragonNo ratings yet

- First Order SchedulingDocument45 pagesFirst Order SchedulingChris VerveridisNo ratings yet

- Robust Characterization of Naturally Fractured Carbonate Reservoirs Through Sensitivity Analysis and Noise Propa...Document18 pagesRobust Characterization of Naturally Fractured Carbonate Reservoirs Through Sensitivity Analysis and Noise Propa...Louis DoroteoNo ratings yet

- Topology and Geometry in Machine Learning For Logistic Regression ProblemsDocument30 pagesTopology and Geometry in Machine Learning For Logistic Regression ProblemssggtioNo ratings yet

- S, Anno LXIII, N. 2, 2003: TatisticaDocument22 pagesS, Anno LXIII, N. 2, 2003: TatisticaAbhraneilMeitBhattacharyaNo ratings yet

- A Review of Surrogate Assisted Multiobjective EADocument15 pagesA Review of Surrogate Assisted Multiobjective EASreya BanerjeeNo ratings yet

- From Explanations To Feature Selection: Assessing SHAP Values As Feature Selection MechanismDocument8 pagesFrom Explanations To Feature Selection: Assessing SHAP Values As Feature Selection MechanismasdsafdsNo ratings yet

- AIAA-2000-1437 Validation of Structural Dynamics Models at Los Alamos National LaboratoryDocument15 pagesAIAA-2000-1437 Validation of Structural Dynamics Models at Los Alamos National LaboratoryRomoex R RockNo ratings yet

- General-To-Specific Modeling in Stata: 14, Number 4, Pp. 895-908Document14 pagesGeneral-To-Specific Modeling in Stata: 14, Number 4, Pp. 895-908Abdou SoumahNo ratings yet

- Ijaiem 2014 05 20 056Document11 pagesIjaiem 2014 05 20 056International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Size and Shape Optimization of Truss STRDocument15 pagesSize and Shape Optimization of Truss STRalexander sebastian diazNo ratings yet

- Model Updating Using Genetic Algorithms With Sequential Niche TechniqueDocument17 pagesModel Updating Using Genetic Algorithms With Sequential Niche TechniqueShruti SharmaNo ratings yet

- An Overview of Software For Conducting Dimensionality Assessmentent in Multidiomensional ModelsDocument11 pagesAn Overview of Software For Conducting Dimensionality Assessmentent in Multidiomensional ModelsPaola PorrasNo ratings yet

- (Methods in Molecular Biology, 2231) Kazutaka Katoh - Multiple Sequence Alignment - Methods and Protocols-Humana (2020)Document322 pages(Methods in Molecular Biology, 2231) Kazutaka Katoh - Multiple Sequence Alignment - Methods and Protocols-Humana (2020)Diego AlmeidaNo ratings yet

- Adaptive TimeDomainDocument29 pagesAdaptive TimeDomainJimmy PhoenixNo ratings yet

- A Hybrid Approach To Parameter Tuning in Genetic Algorithms: Bo Yuan Marcus GallagherDocument8 pagesA Hybrid Approach To Parameter Tuning in Genetic Algorithms: Bo Yuan Marcus GallagherPraveen Kumar ArjalaNo ratings yet

- Kernels, Model Selection and Feature SelectionDocument5 pagesKernels, Model Selection and Feature SelectionGautam VashishtNo ratings yet

- Applications of Simulations in Social ResearchDocument3 pagesApplications of Simulations in Social ResearchPio FabbriNo ratings yet

- Engineering Applications of Arti Ficial Intelligence: Zeineb Lassoued, Kamel AbderrahimDocument9 pagesEngineering Applications of Arti Ficial Intelligence: Zeineb Lassoued, Kamel AbderrahimEduardo PutzNo ratings yet

- RrcovDocument49 pagesRrcovtristaloidNo ratings yet

- BuildingPredictiveModelsR CaretDocument26 pagesBuildingPredictiveModelsR CaretCliqueLearn E-LearningNo ratings yet

- Li 2019Document28 pagesLi 2019Jocilene Dantas Torres NascimentoNo ratings yet

- Optimal Model Selection by A Genetic Algorithm Using SAS®: Jerry S. Tsai, Clintuition, Los Angeles, CADocument13 pagesOptimal Model Selection by A Genetic Algorithm Using SAS®: Jerry S. Tsai, Clintuition, Los Angeles, CAFritzNo ratings yet

- When To Use and How To Report The Results of PLS-SEM: Joseph F. HairDocument23 pagesWhen To Use and How To Report The Results of PLS-SEM: Joseph F. HairSaifuddin HaswareNo ratings yet

- PLS-SEM Results Reporting FormatDocument46 pagesPLS-SEM Results Reporting FormatMohiuddin QureshiNo ratings yet

- Fitting Nonlinear Calibration Curves No Models PerDocument17 pagesFitting Nonlinear Calibration Curves No Models PerLuis LahuertaNo ratings yet

- Optimization Under Stochastic Uncertainty: Methods, Control and Random Search MethodsFrom EverandOptimization Under Stochastic Uncertainty: Methods, Control and Random Search MethodsNo ratings yet

- Process Performance Models: Statistical, Probabilistic & SimulationFrom EverandProcess Performance Models: Statistical, Probabilistic & SimulationNo ratings yet

- Assessing and Improving Prediction and Classification: Theory and Algorithms in C++From EverandAssessing and Improving Prediction and Classification: Theory and Algorithms in C++No ratings yet

- Casting MetallurgyDocument16 pagesCasting MetallurgyVijay KumarNo ratings yet

- On The Knowledge and Experience of ProgrammerDocument21 pagesOn The Knowledge and Experience of ProgrammerVijay KumarNo ratings yet

- Product Design - BasicsDocument18 pagesProduct Design - BasicsVijay KumarNo ratings yet

- Automated InspectionDocument31 pagesAutomated InspectionVijay Kumar100% (8)

- Flexible Manufacturing SystemsDocument18 pagesFlexible Manufacturing SystemsVijay Kumar100% (1)

- Pressure Vessels PDFDocument19 pagesPressure Vessels PDFArnaldo BenitezNo ratings yet

- Government of Andhra Pradesh A.P. Teacher Eligibility Test - July 2011Document3 pagesGovernment of Andhra Pradesh A.P. Teacher Eligibility Test - July 2011Vijay KumarNo ratings yet

- FMEDA E3 ModulevelDocument21 pagesFMEDA E3 ModulevelRonny AjaNo ratings yet

- Lightnng Protection As Per IEC 62305Document40 pagesLightnng Protection As Per IEC 62305Mahesh Chandra Manav100% (1)

- Tc1057-Eng-10004 0 002 PDFDocument507 pagesTc1057-Eng-10004 0 002 PDFKenaia AdeleyeNo ratings yet

- MPD and MPK Series Midi Io Details 02Document3 pagesMPD and MPK Series Midi Io Details 02Jorge David Monroy PerezNo ratings yet

- Narrative Text - Infografis - KurmerDocument2 pagesNarrative Text - Infografis - KurmerCitra Arba RojunaNo ratings yet

- Valve SelectionDocument5 pagesValve SelectionmansurNo ratings yet

- 6 Biomechanical Basis of Extraction Space Closure - Pocket DentistryDocument5 pages6 Biomechanical Basis of Extraction Space Closure - Pocket DentistryParameswaran ManiNo ratings yet

- Gateway To Art - 1.08Document40 pagesGateway To Art - 1.08i am bubbleNo ratings yet

- Atlas FiltriDocument18 pagesAtlas FiltriCristi CicireanNo ratings yet

- Confucianism: By: Alisha, Mandy, Michael, and PreshiaDocument15 pagesConfucianism: By: Alisha, Mandy, Michael, and PreshiaAvi Auerbach AvilaNo ratings yet

- Buttermilk Pancakes and Honey Roasted Figs Recipe HelloFreshDocument1 pageButtermilk Pancakes and Honey Roasted Figs Recipe HelloFreshxarogi1776No ratings yet

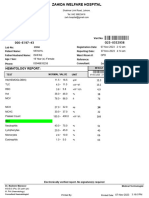

- Hematology Report:: MRN: Visit NoDocument1 pageHematology Report:: MRN: Visit Nojuniadsheikh6No ratings yet

- Fish JordanDocument29 pagesFish JordanSarah SobhiNo ratings yet

- Product Range: Trelleborg Se Aling SolutionsDocument39 pagesProduct Range: Trelleborg Se Aling SolutionssandeepNo ratings yet

- DLL Science 9 JulyDocument10 pagesDLL Science 9 JulyMark Kiven MartinezNo ratings yet

- To Study Well Design Aspects in HPHT EnvironmentDocument40 pagesTo Study Well Design Aspects in HPHT Environmentnikhil_barshettiwat100% (1)

- Hotpoint Washing Machine Wmf740Document16 pagesHotpoint Washing Machine Wmf740furheavensakeNo ratings yet

- MSD Numbers 0410612020 Am: ClosingDocument11 pagesMSD Numbers 0410612020 Am: ClosingSanjeev JayaratnaNo ratings yet

- 20 Types of Pasta - TLE9Document2 pages20 Types of Pasta - TLE9Chloe RaniaNo ratings yet

- Manual Romi GL 240Document170 pagesManual Romi GL 240FlavioJS10No ratings yet

- Verb Patterns: Verb + Infinitive or Verb + - Ing?: Verbs Followed by A To-InfinitiveDocument5 pagesVerb Patterns: Verb + Infinitive or Verb + - Ing?: Verbs Followed by A To-InfinitiveTeodora PluskoskaNo ratings yet

- Manual Spare Parts DB540!72!07Document124 pagesManual Spare Parts DB540!72!07Gustavo CarvalhoNo ratings yet

- Dawson A J.design of Inland Wat.1950.TRANSDocument26 pagesDawson A J.design of Inland Wat.1950.TRANSKelvin XuNo ratings yet

- Lesson 02 PDFDocument13 pagesLesson 02 PDFJeremy TohNo ratings yet

- 31-SDMS-03 Low Voltage Cabinet For. Pole Mounted Transformer PDFDocument20 pages31-SDMS-03 Low Voltage Cabinet For. Pole Mounted Transformer PDFMehdi SalahNo ratings yet

- Tech Mahindra Antonyms and QuestionsDocument27 pagesTech Mahindra Antonyms and QuestionsNehaNo ratings yet

- Physics ProjectDocument8 pagesPhysics Projectmitra28shyamalNo ratings yet

On Choosing Parameters in Retrospective-Approximation Algorithms For Stochastic Root Finding and Simulation Optimization

On Choosing Parameters in Retrospective-Approximation Algorithms For Stochastic Root Finding and Simulation Optimization

Uploaded by

Vijay KumarOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

On Choosing Parameters in Retrospective-Approximation Algorithms For Stochastic Root Finding and Simulation Optimization

On Choosing Parameters in Retrospective-Approximation Algorithms For Stochastic Root Finding and Simulation Optimization

Uploaded by

Vijay KumarCopyright:

Available Formats

OPERATIONS RESEARCH

Vol. 58, No. 4, Part 1 of 2, July–August 2010, pp. 889–901

issn 0030-364X | eissn 1526-5463 | 10 | 5804 | 0889

informs

doi 10.1287/opre.1090.0773

© 2010 INFORMS

On Choosing Parameters in Retrospective-Approximation Algorithms for Stochastic Root Finding and Simulation Optimization

Raghu Pasupathy

Grado Department of Industrial and Systems Engineering, Virginia Tech, Blacksburg, Virginia 24061, pasupath@vt.edu

The stochastic root-finding problem is that of finding a zero of a vector-valued function known only through a stochastic simulation. The simulation-optimization problem is that of locating a real-valued function's minimum, again with only a stochastic simulation that generates function estimates. Retrospective approximation (RA) is a sample-path technique for solving such problems, where the solution to the underlying problem is approached via solutions to a sequence of approximate deterministic problems, each of which is generated using a specified sample size, and solved to a specified error tolerance. Our primary focus, in this paper, is providing guidance on choosing the sequence of sample sizes and error tolerances in RA algorithms. We first present an overview of the conditions that guarantee the correct convergence of RA's iterates. Then we characterize a class of error-tolerance and sample-size sequences that are superior to others in a certain precisely defined sense. We also identify and recommend members of this class and provide a numerical example illustrating the key results.

Subject classifications: simulation: efficiency, design of experiments; programming: stochastic.

Area of review: Simulation.

History: Received March 2007; revisions received November 2007, August 2008, September 2008, October 2008; accepted November 2008. Published online in Articles in Advance March 24, 2010.

1. Introduction and Motivation

The stochastic root-finding problem (SRFP) and the simulation optimization problem (SOP) are simulation-based stochastic analogues of the well-researched root-finding and optimization problems, respectively. These problems have recently generated a tremendous amount of attention amongst researchers and practitioners, primarily owing to their generality. Because the functions involved in these formulations are specified implicitly through a stochastic simulation, virtually any level of complexity is afforded. Various flavors of SRFP and SOP have thus found application in an enormous range of large-scale, real-world contexts such as vehicular transportation networks, quality control, telecommunication systems, and health care. See Andradóttir (2006), Spall (2003), Fu (2002), Barton and Meckesheimer (2006), Chen and Schmeiser (2001), and Ólafsson (2006) for entry points into this literature and overviews on the subject.

For solving SOPs and SRFPs, sample-average approximation (SAA), amongst a few other popular classes of algorithms (see §3), has found particular expediency amongst researchers and practitioners. In its most basic form, SAA involves generating a sample-path problem using an appropriately chosen sample size, and then solving it to desired precision using a chosen numerical procedure. This step is usually followed by an analysis of the solution estimator, using the now well-established large-sample (Robinson 1996, Shapiro 2004, Atlason et al. 2004) and small-sample properties (Mak et al. 1999), and theories on assessing solution quality (Bayraksan and Morton 2007). Due to its simplicity, and the ability to incorporate powerful and mature tools from the deterministic context, SAA has been widely applied. Some examples of SAA application by experts include optimal release times in production flow lines (Homem-de-Mello et al. 1999), call-center staffing (Atlason et al. 2004, 2008), tandem production lines and stochastic PERT (Plambeck et al. 1996), transshipment problems (Herer et al. 2006), design for health care (Prakash et al. 2008), and vehicle-routing problems (Verweij et al. 2003).

INFORMS holds copyright to this article and distributed this copy as a courtesy to the author(s). Additional information, including rights and permission policies, is available at http://journals.informs.org/.

Our specific focus in this paper is a recent refinement of the SAA class of methods called, variously, retrospective approximation (RA) (Chen and Schmeiser 2001, Pasupathy and Schmeiser 2009) and variable sample size (Homem-de-Mello 2003) methods. Whereas a generic SAA method generates a single sample-path problem with a large enough sample size, and then solves it to a prescribed error tolerance, the said SAA refinements generate a sequence of sample-path problems with progressively increasing sample sizes, and then solve these to progressively decreasing error tolerances. This elaborate structure in the SAA refinements is explicitly constructed to gain overall efficiency. The early iterations are efficient, in principle, because the small sample sizes ensure that not much computing effort is expended in generating sample-path problems. The later iterations are efficient, again in principle, because the starting solution for the sample-path problem is probably close to the true solution, and not much effort is expended in solving sample-path problems. The solving of the individual sample-path problems, as in generic SAA, is accomplished by choosing any numerical procedure from amongst the powerful host of deterministic root-finding/optimization techniques that are currently available.
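The RA structure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's algorithm: the toy simulation `sample_path_function` (noisy observations of the hypothetical function g(x) = x - 2), the bisection solver, the doubling sample sizes, and the tolerance rule `m ** -0.5` are all chosen here only to make the loop concrete.

```python
import random


def sample_path_function(x, m, seed):
    """Hypothetical simulation: average of m noisy observations of g(x) = x - 2.

    Re-seeding with the same seed fixes the random numbers, so for a given
    (m, seed) this is a deterministic, increasing function of x.
    """
    rng = random.Random(seed)
    noise = sum(rng.gauss(0.0, 1.0) for _ in range(m)) / m
    return (x - 2.0) + noise


def solve_to_tolerance(f, start, tol):
    """Bracket a root of the increasing function f near `start`, then bisect
    until the bracket is no wider than tol."""
    lo, hi = start - 1.0, start + 1.0
    while f(lo) > 0.0:          # expand the bracket to the left
        lo -= hi - lo
    while f(hi) < 0.0:          # expand the bracket to the right
        hi += hi - lo
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def retrospective_approximation(x0, iterations=8, m1=4, growth=2):
    """Solve a sequence of sample-path problems with growing sample sizes and
    shrinking tolerances, warm-starting each solve at the previous solution."""
    x, m = x0, m1
    history = []
    for k in range(1, iterations + 1):
        tol = m ** -0.5         # illustrative tolerance rule (an assumption)
        f = lambda z, m=m, k=k: sample_path_function(z, m, seed=k)
        x = solve_to_tolerance(f, start=x, tol=tol)  # warm start at previous x
        history.append((m, tol, x))
        m *= growth             # illustrative geometric sample-size growth
    return history


if __name__ == "__main__":
    for m, tol, x in retrospective_approximation(x0=10.0):
        print(f"m={m:4d}  tol={tol:.4f}  x={x:.4f}")
```

Each iteration draws fresh random numbers (a new seed) but starts the solver at the previous retrospective solution, which is where the later iterations are meant to save effort.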

The general RA structure is indeed attractive from both the efficiency and the implementability perspectives, and the resulting RA estimators inherit much of the well-studied large-sample properties of generic SAA estimators. Little is currently known, however, about how exactly to trade off parameters, i.e., sample sizes and error tolerances, within such frameworks. Although these frameworks seem to serve as viable implementation refinements to SAA, the stipulations dictated by convergence allow an enormous number of possible choices for sample-size and error-tolerance sequences, some of which are conceivably much inferior to others from an algorithm efficiency standpoint. It seems intuitively clear that the sample-size and error-tolerance sequences should be chosen in balance: increasing sample sizes too fast compared to the decrease rate of error tolerances will lead to residual bias from the undersolving of sample-path problems; increasing sample sizes too slowly compared to the decreasing rate of error tolerances will lead to wasted computational effort resulting from the oversolving of the sample-path problems. This trade-off resulting from the choice of sample sizes and error tolerances motivates our central questions:

1. Does there exist a balanced choice of sample sizes and error tolerances in SAA refinements such as RA, where the terms "sample size" and "error tolerance" refer to some generic measures of problem-generation effort and solution quality, respectively? Furthermore, can this balance be characterized rigorously?

2. Can the characterization of a balanced choice be used to provide guidance in choosing particular sample-size and error-tolerance sequences automatically across iterations within SAA refinements such as RA?

As we shall see, the answer to both of the above questions is in the affirmative. Why is parameter choice such an important question? In broad terms, parameter choice is intimately linked to algorithm efficiency. Frequently, SOPs and SRFPs arise in contexts where simulations are time consuming, taking anywhere from a few seconds to a few hours for each run. In such situations, algorithm parameter choices can have a dramatic effect on solution quality as a function of time or computing effort. Moreover, the researcher/practitioner often does not have the flexibility to tweak algorithm parameters so as to identify good parameters. This may be because of the lack of expertise, or simply because solutions need to be obtained in a rapid and automatic fashion. Proper guidance on the choice of parameters is thus imperative to ensure that resources are utilized in an efficient fashion within RA-type algorithms. Considering applicability to a wide audience, such guidance is especially useful if identified within a generic framework such as we consider in this paper. Optimal parameter choice is not an RA-specific issue. It has been recognized as an important question in other algorithm classes as well, e.g., stochastic approximation algorithms (Spall 2003, 2006).

1.1. Contributions

That SAA is currently amongst the attractive methods of solving SRFPs and SOPs is undeniable. The enormous amount of recent theoretical development (Bayraksan and Morton 2007, 2010; Chen and Schmeiser 2001; Higle and Sen 1991; Homem-de-Mello 2003; Kleywegt et al. 2001; Mak et al. 1999; Plambeck et al. 1996; Polak and Royset 2008; Shapiro 1991, 2000, 2004), and its expediency in a wide range of actual applications (Homem-de-Mello et al. 1999; Atlason et al. 2004, 2008; Plambeck et al. 1996; Herer et al. 2006; Prakash et al. 2008; Verweij et al. 2003), stand in evidence. Considering this broad appeal of SAA, we address key questions relating to how parameters, specifically sample sizes and error tolerances, should be chosen within implementable refinements of SAA. Parameter choice is crucial in that it plays an important role in deciding the efficiency of the solutions resulting from SAA algorithm execution.

The following are specific contributions of this paper.

1. We rigorously characterize an optimal class of sample sizes and error tolerances for use within sample-path methods. The characterization of this optimal class is in the form of three limiting conditions involving the parameter sequences, and the convergence rate of the numerical procedure used to solve the sample-path problem. We show that the characterization is tight in the sense that optimality, as defined, is achieved if and only if the chosen parameters fall within the characterized class.

2. With a view toward actual implementation, we identify commonly considered classes of sample-size and error-tolerance sequences that lie inside and outside the characterized optimal class. For sublinear, linear, and polynomially converging numerical procedures used within sample-path problems, we provide guidance on the rate at which sample sizes should be increasing if one is looking to choose parameters that belong to the optimal class.

3. For completeness and ease of exposition, we clarify the conditions under which RA algorithms converge to the correct solution with probability one (wp1). In addition, we prove a certain central limit theorem for RA estimators on SRFPs. Similar results already exist for SOPs.

1.2. Organization

The remainder of the paper is organized as follows. In §2 we provide the problem statements for SRFPs and SOPs, followed by some notation and terminology used in this paper. In §3 we present a brief literature review on the existing methods to solve SRFPs and SOPs. Convergence results on RA algorithms appear in §4, followed by the main results in §5. Some of these results, especially those appearing in §4, have been stated in abbreviated form or without proofs in Pasupathy (2006). In §6, we discuss our choice of efficiency measure and termination criteria. Section 7 includes an illustration of the results obtained in the paper through a numerical example. We provide concluding remarks in §8.

2. Problem Statements and Notation

The following is a list of key notation and definitions adopted in the paper: (i) $x^*$ denotes a true solution to the SRFP or SOP; (ii) $X_k^*$ denotes a true solution to the $k$th sample-path problem; (iii) $X_k$ denotes the $k$th retrospective solution, i.e., the estimated solution to the $k$th sample-path problem; (iv) $X_n \xrightarrow{p} X$ means that the sequence of random variables $\{X_n\}$ converges to the random variable $X$ in probability; (v) $X_n \to X$ wp1 means that the sequence of random variables $\{X_n\}$ converges to the random variable $X$ with probability one; (vi) $X_n \xrightarrow{d} X$ means that the sequence of random variables $\{X_n\}$ converges to the random variable $X$ in distribution; (vii) $\{m_k\} \to \infty$ means that the sequence $\{m_k\}$ is an increasing sequence going to infinity; (viii) the sequence $\{m_k\} \to \infty$ exhibits sublinear growth if $\limsup_{k \to \infty} m_k / m_{k-1} \le 1$, linear growth if $1 < \limsup_{k \to \infty} m_k / m_{k-1} < \infty$, polynomial growth if $0 < \limsup_{k \to \infty} m_k / (m_{k-1})^{\lambda} < \infty$ for some $\lambda > 1$, and exponential growth if $\limsup_{k \to \infty} m_k / (m_{k-1})^{\lambda} = \infty$ for all $\lambda > 1$; (ix) $\lceil x \rceil$ denotes the smallest integer greater than or equal to $x \in \mathbb{R}$; (x) $\mathrm{dist}(x, D) = \inf\{\|x - y\| : y \in D\}$ denotes distance between a point $x \in \mathbb{R}^q$ and a set $D \subset \mathbb{R}^q$; (xi) $B(x, r)$ denotes a ball of radius $r$ centered on $x$.
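The growth categories in item (viii) can be checked numerically for concrete sequences. The sketch below is only a heuristic: it evaluates the ratio of the last two terms of a finite sequence rather than a true limit superior, and the three example sequences and the helper name `tail_ratio` are assumptions made here for illustration.

```python
def tail_ratio(seq, power=1.0):
    """Return m_k / (m_{k-1}) ** power for the last two terms of seq."""
    return seq[-1] / seq[-2] ** power


# m_k = k^2: successive ratios approach 1 (sublinear growth).
quadratic = [float(k * k) for k in range(1, 1001)]

# m_k = 2 * m_{k-1}: successive ratios equal 2 (linear growth).
geometric = [2.0 ** k for k in range(1, 41)]

# m_k = (m_{k-1}) ** 1.5: the plain ratio blows up, but the ratio against
# the 1.5th power of the previous term stays bounded (polynomial growth).
powered = [2.0]
for _ in range(10):
    powered.append(powered[-1] ** 1.5)

if __name__ == "__main__":
    print("quadratic, ratio:", tail_ratio(quadratic))
    print("geometric, ratio:", tail_ratio(geometric))
    print("powered, plain ratio:", tail_ratio(powered))
    print("powered, ratio against 1.5th power:", tail_ratio(powered, power=1.5))
```

In this terminology, the familiar "doubling" schedule counts as linear growth, not exponential growth.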

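Formally, the SRFP is stated as follows.

Given: A simulation capable of generating, for any $x \in \mathcal{D} \subset \mathbb{R}^q$, an estimator $\bar{Y}_m(x)$ of the function $g: \mathcal{D} \to \mathbb{R}^q$ such that $\bar{Y}_m(x) \xrightarrow{d} g(x)$ as $m \to \infty$, for all $x \in \mathcal{D}$.

Find: A zero $x^* \in \mathcal{D}$ of $g$, i.e., find $x^*$ such that $g(x^*) = 0$, assuming that one such exists.

Similarly, the version of SOP we will use in this paper is as follows.

Given: A simulation capable of generating, for any $x \in \mathcal{D} \subset \mathbb{R}^q$, an estimator $\bar{Y}_m(x)$ of the function $g: \mathcal{D} \to \mathbb{R}$ such that $\bar{Y}_m(x) \xrightarrow{d} g(x)$ as $m \to \infty$, for all $x \in \mathcal{D}$.

Find: A local minimizer $x^* \in \mathcal{D}$ of $g$, i.e., find $x^*$ having a neighborhood $V(x^*)$ such that every $x \in V(x^*)$ satisfies $g(x) \ge g(x^*)$, assuming that one such $x^*$ exists.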

As stated, the SRFP and SOP make no assumptions about the nature of $\bar{Y}_m(x)$ except that $\bar{Y}_m(x) \xrightarrow{d} g(x)$ as $m \to \infty$. Also, the feasible set $\mathcal{D}$ is assumed to be known in the sense that the functions involved in the specification of $\mathcal{D}$ are observed without error. Various slightly differing flavors of the SOP have appeared in the literature. See, for example, Nemirovski and Shapiro (2004).

3. Abbreviated Literature Review

In this section, we present a brief overview of the important works related to solving SRFPs and SOPs. We limit ourselves to a broad categorization, providing references only to summary articles. Sample-average approximation, being the topic of this paper, is discussed in greater detail.

Current methods to solve SOPs fall into six broad categories: (i) metamodels, (ii) metaheuristics, (iii) comparison methods, (iv) random-search methods, (v) stochastic approximation (SA), and (vi) sample-average approximation (SAA). This categorization is not mutually exclusive, but methods in each category share some set of distinguishing features. For instance, metamodels (category (i)) estimate a functional relationship between the objective function and the variables in the search space, and then employ deterministic optimization techniques on the estimated objective function. See Barton and Meckesheimer (2006) for detailed ideas. The methods in category (ii) are based on metaheuristics such as tabu search, evolutionary algorithms, simulated annealing, and nested partitions (Ólafsson 2006). Comparison methods (category (iii)), a mature class of methods predominantly based on statistical procedures, have been specially developed to solve SOPs where the set of feasible solutions is discrete and small. See Kim and Nelson (2006) for a broad overview. Random-search methods (category (iv)) include algorithms that use some intelligent sampling procedure to traverse the search space, followed by an update mechanism to report the current best solution. See Andradóttir (2006) for an overview. SA methods (category (v)) are based on a recursion introduced in Robbins and Monro (1951), and have seen enormous development. Several books (Kushner and Clark 1978, Kushner and Yin 2003, Spall 2003) are available on the subject. Multiscale SA (Bhatnagar and Borkar 1998, Bhatnagar et al. 2001, Tadić and Meyn 2003), an interesting subclass of SA, improves on generic SA using paired recursions for parameter updating and system evolution.

SAA (category (vi)) is the category of most interest in this paper. SAA techniques seem to have first appeared in Shapiro (1991) and Healy and Schruben (1991) and were later used by several other authors (Rubinstein and Shapiro 1993, Plambeck et al. 1996, Atlason et al. 2004) in various contexts. The idea of SAA is easily stated. Obtain an estimator for the true solution $x^*$ of the SOP (or SRFP) by solving an approximate problem $S$. The approximate problem $S$ substitutes the unknown function $g$ in the original problem by its sample-path approximation $\bar{g}_m(x; \omega)$, generated with sample size $m$ and the vector of random numbers $\omega$. SAA has a well-developed small-sample (Mak et al. 1999) and large-sample (Robinson 1996, Shapiro 2004, Kleywegt et al. 2001, Atlason et al. 2004) theory. Recent work includes methods to assess solution quality (Bayraksan and Morton 2007).
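The SAA idea described above can be sketched on a toy stochastic root-finding problem. The sketch below is only an illustration under stated assumptions: the function $g(x) = E[x^3 + x - Y]$ with $Y \sim N(2, 1)$ (so that $x^* = 1$), the helper names `make_sample_path`, `bisect_root`, and `saa_root`, and the use of bisection are all hypothetical choices, not taken from the works cited. The seed plays the role of the random-number vector $\omega$: once it is fixed, the sample-path function is deterministic and can be handed to any deterministic root finder.

```python
import random

def make_sample_path(m, seed):
    """Return g_bar_m(.; omega): a deterministic function built from m fixed draws."""
    rng = random.Random(seed)          # the seed plays the role of omega
    ybar = sum(rng.gauss(2.0, 1.0) for _ in range(m)) / m
    return lambda x: x**3 + x - ybar   # sample average of x^3 + x - Y_i

def bisect_root(f, lo, hi, tol):
    """Bisection for an increasing function f with f(lo) < 0 < f(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def saa_root(m, seed, tol=1e-8):
    """Solve the single sample-path problem S generated with sample size m."""
    g_bar = make_sample_path(m, seed)
    return bisect_root(g_bar, -10.0, 10.0, tol)

if __name__ == "__main__":
    for m in (10, 1000, 100000):
        print(m, saa_root(m, seed=1))
```

Because the same seed always regenerates the same sample-path function, repeated calls with identical arguments return identical estimates, which is what allows a deterministic solver to be used on the approximate problem.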

INFORMS holds copyright to this article and distributed this copy as a courtesy to the author(s). Additional information, including rights and permission policies, is available at http://journals.informs.org/.

Pasupathy: On Choosing Parameters in RA Algorithms for Stochastic Root Finding

Operations Research 58(4, Part 1 of 2), pp. 889–901, © 2010 INFORMS

Retrospective approximation (RA) (Chen and Schmeiser 2001, Pasupathy and Schmeiser 2009) is a variant of SAA where, instead of generating and solving a single sample-path problem $S$, a sequence of sample-path problems $\{S_k\}$ is generated with sample sizes $\{m_k\} \to \infty$ and solved to error tolerances $\{\epsilon_k\} \to 0$. The estimated solution $X_{k-1}$ of the sample-path problem $S_{k-1}$, also called the $(k-1)$th retrospective solution, is used as the initial solution to the subsequent sample-path problem $S_k$. The philosophy behind the RA structure is as follows: During the early iterations, use small sample sizes $m_k$ and large error tolerances $\epsilon_k$ in solving the sample-path problem $S_k$; in later iterations, as the retrospective solution $X_k$ tends closer to the true solution $x^*$, use larger sample sizes and smaller error tolerances. In implementing RA algorithms, three parameters need to be chosen: (i) a numerical procedure for solving the sample-path problem $S_k$; (ii) a sequence of sample sizes $\{m_k\}$ to be used for generating the sample-path functions $\{\bar{g}_{m_k}(x; \omega_k)\}$; and (iii) a sequence of error tolerances $\{\epsilon_k\}$ to guide termination of iterations. The subject of this paper is the rigorous choice of parameters (i), (ii), and (iii), with an emphasis on (ii) and (iii).
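The RA structure just described can be sketched as a short loop. Everything specific in the sketch is an assumption made for illustration: the toy problem $g(x) = E[x^3 + x - Y]$ with $Y \sim N(2,1)$ and root $x^* = 1$, the geometric sample sizes $m_k = 4 \cdot 2^{k-1}$, the tolerances $\epsilon_k = 1/\sqrt{m_k}$, Newton's method as the numerical procedure, and the function names. In particular, stopping when the Newton step is at most $\epsilon_k$ is only a practical proxy for the requirement that the retrospective solution lie within $\epsilon_k$ of the sample-path solution, which is unknown in practice.

```python
import random

def sample_path(m, seed):
    """Sample-path function and derivative for the toy problem x^3 + x - E[Y]."""
    rng = random.Random(seed)
    ybar = sum(rng.gauss(2.0, 1.0) for _ in range(m)) / m
    return (lambda x: x**3 + x - ybar), (lambda x: 3.0 * x**2 + 1.0)

def retrospective_approximation(x0, m1=4, growth=2.0, iterations=12, seed=0):
    """Return a list of (k, m_k, eps_k, X_k, W_k) tuples, one per RA iteration."""
    x = x0
    work = 0                       # total simulation calls so far, W_k
    history = []
    m = float(m1)
    for k in range(1, iterations + 1):
        m_k = int(round(m))
        eps_k = 1.0 / m_k ** 0.5   # tolerance shrinks like 1/sqrt(m_k)
        f, df = sample_path(m_k, seed + k)   # fresh random numbers omega_k
        # Newton's method, warm-started at the previous retrospective solution.
        while True:
            work += m_k            # each visited point costs m_k simulation calls
            step = f(x) / df(x)
            x -= step
            if abs(step) <= eps_k: # proxy for |X_k - X_k^*| <= eps_k
                break
        history.append((k, m_k, eps_k, x, work))
        m *= growth
    return history

if __name__ == "__main__":
    for k, m_k, eps_k, x_k, w_k in retrospective_approximation(0.0):
        print(k, m_k, round(eps_k, 4), round(x_k, 5), w_k)
```

The warm start is what makes the early, cheap iterations useful: by the time the sample size is large, the starting point is already close to the sample-path solution, so only a few expensive function evaluations are needed.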

Three recent papers are directly relevant to what we discuss in this paper. In Homem-de-Mello (2003), through a setup that is very similar to that considered in this paper, stipulations on sample-size growth rates are identified so as to ensure the consistency of the objective function estimator. The results derived in this paper thus complement those in Homem-de-Mello (2003). In Polak and Royset (2008), through a framework that is slightly less generic than that considered in this paper, an efficient schedule of sample sizes and stages is identified through the solution of an auxiliary optimization problem, under the assumption that the procedure used to solve the sample-path problems is linearly convergent. Most recently, in Bayraksan and Morton (2010), a sequential sampling procedure is suggested for use within SAA-type algorithms. The primary contribution of Bayraksan and Morton (2010) is the identification of a schedule of sample sizes, along with a set of conditions, sufficient to provide probabilistic guarantees on the optimality gap of a candidate solution obtained through SAA. Differences between the current work and Bayraksan and Morton (2010) are worth noting: whereas the broad objective in Bayraksan and Morton (2010) is obtaining an SAA solution that is of a guaranteed prespecified quality (in a probabilistic sense), ours is ensuring that the sequence of obtained SAA solutions converges optimally. For the same reason, our results are intimately linked to the convergence rate of the numerical procedure used to solve the sample-path problems within SAA, unlike in Bayraksan and Morton (2010), where the optimality gap is estimated. The controllables in both the current paper and Bayraksan and Morton (2010) are the sample sizes used in generating the sample-path problems.

4. Conditions for Guaranteed

Convergence

In this section, we state sufficient conditions to ensure that the sequence $\{X_k\}$ of retrospective solutions in RA algorithms converges to the true solution $x^*$ wp1. Separate treatments are provided for the SRFP and the SOP contexts. The results presented in this section are a simple consequence of some well-known results in the literature.

We start with Theorem 1, which appears as Theorem 5.1 in Shapiro (2000). Theorem 1 asserts that under mild conditions, a sequence $\{X_k^*\}$ of global minimizers of the sample-path functions $\{\bar{g}_{m_k}(x; \omega_k)\}$ converges in distance to the set $\pi^*$ of global minimizers of the limiting function $g$ wp1.

Theorem 1. Assume that (i) the set $\mathcal{D} \subset \mathbb{R}^q$ is compact; (ii) the function $g: \mathcal{D} \to \mathbb{R}$ is continuous; and (iii) the functional sequence $\{\bar{g}_{m_k}(x; \omega_k)\}$ converges to $g$ uniformly wp1. Then, if $X_k^*$ is a global minimizer of $\bar{g}_{m_k}(x; \omega_k)$, and $\pi^*$ is the nonempty set of global minimizers of $g$, the distance $\mathrm{dist}(X_k^*, \pi^*) \to 0$ wp1.

Theorem 1 is more useful in proving convergence of RA

iterates for the SRFP context than for the SOP context.

This is because although we seek a local minimizer of the

limit function g in SOPs, Theorem 1 is global in nature.

In other words, Theorem 1 talks about the convergence of

global minimizers of the sample-path functions to the set

of global minimizers of the limit function g.

4.1. SRFP Context

To establish convergence of the retrospective solutions $\{X_k\}$ to the true solution $x^*$ in the SRFP context, we first convert the original SRFP into an equivalent SOP where every local minimizer is also a global minimizer, and then apply Theorem 1. This is demonstrated in Theorem 2, the main convergence result for RA iterates in the context of SRFPs.

Theorem 2 (SRFP). Assume that (i) the set $\mathcal{D} \subset \mathbb{R}^q$ is compact; (ii) the function $g: \mathcal{D} \to \mathbb{R}^q$ is continuous; and (iii) the functional sequence $\{\bar{g}_{m_k}(x; \omega_k)\}$ converges to $g$ uniformly wp1. Let the positive-valued sequence $\{\epsilon_k\} \to 0$, and $X_k$ satisfy $\|X_k - X_k^*\| \le \epsilon_k$, where $X_k^*$ is a zero of $\bar{g}_{m_k}(x; \omega_k)$. Then, $\mathrm{dist}(X_k, \pi^*) \to 0$ wp1, where $\pi^*$ is the set of zeros of the function $g$.

Proof. See appendix.

4.2. SOP Context

Theorem 1 is a statement about the behavior of a sequence

of global minimizers of sample-path functions. In study-

ing SOPs, Theorem 1 is thus useful only if the numerical

procedure used to solve the sample-path problems returns

a global minimizer. Our interest, however, is in analyzing

RA algorithms where sample-path problems are solved for

a local minimizer, and that too with finite stopping. For

such situations, Theorem 1 is not as useful because it says

nothing about the behavior of any particular sequence of

local minimizers. For instance, consider $g(x) = |x|$, and $\bar{g}_{m_k}(x; \omega_k) = g(x) + h_k(x)\, I_{[1 - 1/k,\, 1 + 1/k]}(x)$, where $h_k$ is any continuous function with a unique minimum at $x = 1$, $h_k(x) \le 0$ for all $x \in [1 - 1/k, 1 + 1/k]$, $h_k(1 - 1/k) = h_k(1 + 1/k) = 0$, and $\lim_{k \to \infty} h_k(1) = 0$ (e.g., $h_k(x) = (1 - x)^2 - (1/k)^2$). Then, $\bar{g}_{m_k}(x; \omega_k)$ has the same two local minima for each $k$ and differs from $g(x)$ only in the interval $(1 - 1/k, 1 + 1/k)$. Also, the sequence $\{\bar{g}_{m_k}(x; \omega_k)\}$ converges to $g(x)$ uniformly, and the set of global minimizers of the function $\bar{g}_{m_k}(x; \omega_k)$ converges to the global minimizer of $g(x)$ as $k \to \infty$. Notice, however, that the sequence $\{x_k = 1\}$ of local minimizers of $\{\bar{g}_{m_k}(x; \omega_k)\}$ does not converge to a local minimizer of the limit function $g$.

This example implies, as Homem-de-Mello (2003) notes, that using locally convergent algorithms to solve sample-path problems may trap SAA solutions at a local minimizer. In other words, even in the hypothetical scenario where a local minimizer is solved to infinite precision during each iteration, there is no guarantee that the obtained sequence of local minimizers will converge to a true solution $x^*$.

The following is a technical assumption devised by

Bastin et al. (2006) to exclude possibilities of the type

illustrated in the previous example. We call it the rigidity

assumption to reflect the fact that it excludes such examples by stipulating that local minimizers of sample-path

functions that persist across iterations, do so over some

arbitrarily small ball whose size remains rigid, i.e., whose

radius remains larger than some threshold.

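Assumption 1. Let $\mathcal{M}_k(\omega_k)$ be the set of local minimizers of $\bar{g}_{m_k}(x; \omega_k)$, and let $\mathcal{M}^*$ be the set of cluster points of all sequences $\{X_k^*\}$, $X_k^* \in \mathcal{M}_k(\omega_k)$. If $\hat{x} \in \mathcal{M}^*$, then there exists a subsequence $\{X_{k_j}^*\} \subset \{X_k^*\}$ converging to $\hat{x}$, and constants $\delta > 0$, $t > 0$, such that $X_{k_j}^*$ is a minimizer of $\bar{g}_{m_{k_j}}(x; \omega_{k_j})$ in $B(X_{k_j}^*, \delta)$ for all $j > t$.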

This assumption has the same implications as in Bastin et al. (2006): it prevents the occurrence of kinks in the sample-path functions that vanish with increasing sample size. This is a reasonable stipulation because such examples, where local minima appear artificially in the sample-path problems and then disappear as the sample size is increased, seem infrequent in practice. Theorem 4.1 in Bastin et al. (2006) then directly applies to any sequence of local minimizers $\{X_k^*\}$ of the sample-path functions $\{\bar{g}_{m_k}(x; \omega_k)\}$. Specifically, it guarantees that when Assumption 1 holds, $\{X_k^*\}$ converges to some local minimizer $x^*$ of the limit function $g$ wp1. Now recall that the retrospective solution $X_k$ identified during the $k$th iteration in RA is at most $\epsilon_k$ away from $X_k^*$. Because $\{\epsilon_k\} \to 0$, the convergence of $\{X_k\}$ to some local minimizer $x^*$ of $g$ becomes a trivial extension of Theorem 4.1 in Bastin et al. (2006). We formally state this next without a proof.

Theorem 3 (SOP). Let the conditions in Theorem 1 and Assumption 1 hold. Let the positive-valued sequence $\{\epsilon_k\} \to 0$, and $X_k$ satisfy $\|X_k - X_k^*\| \le \epsilon_k$, where $X_k^*$ is a local minimizer of $\bar{g}_{m_k}(x; \omega_k)$. Then, $\mathrm{dist}(X_k, \pi^*) \to 0$ wp1, where $\pi^*$ is the set of local minimizers of the function $g$.

5. Choosing Sample-Size and

Error-Tolerance Sequences

Having discussed convergence of RA iterates in the previous section, our objective in this section is more interesting: to provide rigorous guidance in choosing RA algorithm parameters, specifically the error-tolerance sequence $\{\epsilon_k\}$ and the sample-size sequence $\{m_k\}$. We wish to automatically choose the sequences $\{\epsilon_k\}$ and $\{m_k\}$ in a fashion that ensures that the retrospective solutions $\{X_k\}$ converge to the true solution $x^*$ in some reasonably and precisely defined optimal sense.

Before we present the main ideas on choosing parameter sequences, we present a type of central limit theorem (CLT) on the sample-path solution $X_k^*$ in the context of SRFPs, essential for later exposition. A corresponding result for the context of SOPs appears in Shapiro (2000, Theorems 5.2, 5.3, 5.4). We follow the notation established in the previous section: $\bar{g}_{m_k}(x; \omega_k)$ is the $k$th sample-path function generated with sample size $m_k$, the sample-path solution $X_k^*$ is a zero of $\bar{g}_{m_k}(x; \omega_k)$ in the context of SRFPs and some local minimizer of $\bar{g}_{m_k}(x; \omega_k)$ in the context of SOPs, and the retrospective solution $X_k$ is obtained by solving the $k$th sample-path problem to within the chosen tolerance $\epsilon_k$. A proof is provided in the appendix.

Theorem 4 (SRFP). Let the conditions of Theorem 2 hold. Furthermore, assume (i) $X_k^*$ and $x^*$ are the unique zeros of the functions $\bar{g}_{m_k}(x; \omega_k)$ and $g(x)$, respectively; (ii) the functions $\bar{g}_{m_k}(x; \omega_k)$ and $g(x)$ have nonsingular derivatives $\nabla \bar{g}_{m_k}(x; \omega_k)$, $\nabla g(x)$ in some neighborhood around $x^*$; (iii) the sequence $\{\nabla \bar{g}_{m_k}(x; \omega_k)\}$ converges uniformly (elementwise) to $\nabla g(x)$ in some neighborhood around $x^*$ wp1; and (iv) a central limit theorem holds for $\bar{g}_{m_k}(x; \omega_k)$, i.e., $\sqrt{m_k}\,(\bar{g}_{m_k}(x; \omega_k) - g(x)) \xrightarrow{d} N(0, \Sigma)$, where $N(0, \Sigma)$ is the Gaussian random variable with mean zero and covariance $\Sigma$. Then,

$$\sqrt{m_k}\,(X_k^* - x^*) \xrightarrow{d} N\left(0,\; \nabla g(x^*)^{-1}\, \Sigma\, (\nabla g(x^*)^{-1})^T\right).$$
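The limiting covariance in Theorem 4 can be checked empirically on a one-dimensional toy problem. The sketch below assumes the hypothetical sample-path function $\bar{g}_m(x; \omega) = x^3 + x - \bar{Y}_m$ with $Y_i \sim N(2, 1)$, so that $x^* = 1$, $\nabla g(x^*) = 4$, and $\Sigma = 1$; under these assumptions the theorem suggests a limiting variance of $1/16$ for $\sqrt{m}\,(X^* - x^*)$. The names and the Newton solver are illustrative choices only.

```python
import random
import statistics

def sample_path_root(m, rng, tol=1e-12):
    """Zero of x^3 + x - ybar, where ybar averages m draws of Y ~ N(2, 1)."""
    ybar = sum(rng.gauss(2.0, 1.0) for _ in range(m)) / m
    x = 1.0
    for _ in range(100):            # Newton's method on the sample-path function
        step = (x**3 + x - ybar) / (3.0 * x**2 + 1.0)
        x -= step
        if abs(step) < tol:
            break
    return x

def scaled_errors(m, replications, seed=0):
    """Independent replications of sqrt(m) * (X^* - x^*), with x^* = 1."""
    rng = random.Random(seed)
    return [m ** 0.5 * (sample_path_root(m, rng) - 1.0) for _ in range(replications)]

errors = scaled_errors(m=200, replications=1000)
empirical_var = statistics.pvariance(errors)
asymptotic_var = (1.0 / 4.0) * 1.0 * (1.0 / 4.0)   # grad^{-1} * Sigma * grad^{-T}

if __name__ == "__main__":
    print(empirical_var, asymptotic_var)
```

With a finite sample size and a finite number of replications the two variances agree only approximately; the comparison is meant as a sanity check of the scaling, not as a proof.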

The assumption (iii) on the sequence of derivatives $\{\nabla \bar{g}_{m_k}(x; \omega_k)\}$ is important and ensures that the sample-path functions smoothly approximate the true function. Under what conditions can this assumption be expected to hold? The most general answer to this question is provided by standard results in most real-analysis textbooks. For example, Theorem 7.17 in Rudin (1976, p. 152) guarantees that the uniform convergence of $\{\nabla \bar{g}_{m_k}(x; \omega_k)\}$ is sufficient to ensure that $\{\nabla \bar{g}_{m_k}(x; \omega_k)\}$ converges to $\nabla g(x)$. Similarly, when $g(x)$ is an expectation, the uniform integrability of the finite differences formed from $\{\bar{g}_{m_k}(x; \omega_k)\}$, along with the finiteness of $g(x)$, provide

necessary and sufficient conditions for $\{\nabla \bar{g}_{m_k}(x; \omega_k)\} \to \nabla g(x)$ to hold (Glasserman 1991, p. 14). The most popular method to guarantee $\{\nabla \bar{g}_{m_k}(x; \omega_k)\} \to \nabla g(x)$ when $g(x)$ is an expectation is through Lebesgue's dominated convergence theorem (Rudin 1976, p. 321) in combination with the generalized mean value theorem (Glasserman 1991, p. 15).

These results, although providing conditions in all generality, still do not leave us with complete intuition on whether $\{\nabla \bar{g}_{m_k}(x; \omega_k)\} \to \nabla g(x)$ will be satisfied for a given system. This question of when $\{\nabla \bar{g}_{m_k}(x; \omega_k)\} \to \nabla g(x)$ is addressed in great detail in Glasserman (1991). The key factor turns out to be the continuity of the performance measure $\bar{g}_{m_k}(x; \omega_k)$. Although neither necessary nor sufficient to ensure $\{\nabla \bar{g}_{m_k}(x; \omega_k)\} \to \nabla g(x)$, the continuity of $\bar{g}_{m_k}(x; \omega_k)$ ensures that only a few other mild assumptions are needed to deem the assumption valid. For example, let $g(x) = E[\bar{g}_{m_k}(x; \omega_k)]$. Then, if $\bar{g}_{m_k}(x; \omega_k)$ is continuously differentiable on a compact set $C$ and $E[\sup_{x \in C} \|\nabla \bar{g}_{m_k}(x; \omega_k)\|] < \infty$, the interchange holds, i.e., $E[\nabla \bar{g}_{m_k}(x; \omega_k)] = \nabla g(x)$ on $C$. Furthermore, in generalized semi-Markov processes (GSMPs), a general model for discrete-event systems seen in practice, the stipulation of continuity in $\bar{g}_{m_k}(x; \omega_k)$ can be checked through a commuting condition on the GSMP representation of the system in consideration (Glasserman 1991, Chapter 3).

Various other specific contexts have routinely used $\{\nabla \bar{g}_{m_k}(x; \omega_k)\} \to \nabla g(x)$, particularly when $g(x)$ is an expectation. For example, the said exchange has been validated in great detail in fluid-flow models (Wardi et al. 2002). In stochastic linear programs (Higle and Sen 1991), the structure of the problem automatically produces continuous sample paths, thereby naturally allowing exchanges of this type.

The other important assumption (i) in Theorem 4, that the functions $\bar{g}_{m_k}(x; \omega_k)$ and $g(x)$ have unique zeros, is admittedly stringent. Two remarks are relevant regarding this assumption. First, it has been our experience that in SOP and SRFP contexts, sample paths almost invariably mimic the structural properties of the limiting function. (A notable exception is an empirical cumulative distribution function.) This is indeed interesting and lends some credibility to assuming similar properties on $\bar{g}_{m_k}(x; \omega_k)$ and $g(x)$. Second, virtually all Newton-based iterations to find zeros of a function seem to need an assumption on the nearness of the starting point (to a zero) in order to prove convergence. Our assumption of a unique zero is comparable, and can be relaxed through similar assumptions on the closeness of the starting solution when solving a sample-path problem.

5.1. Relation Between Sample-Size and

Error-Tolerance Sequences

Our message is that for optimal convergence, choose $\{\epsilon_k\}$ and $\{m_k\}$ in balance so that they converge to their respective limits, zero and infinity, at similar rates. We make this claim precise through Theorem 5, where we prove that those sample-size and error-tolerance sequences that satisfy conditions C.1, C.2, and C.3, to be stated, are superior to others in a certain precise sense.

Before we state conditions C.1, C.2, and C.3, we remind the reader of the notions of sublinear, linear, and superlinear convergence. Let the deterministic sequence $\{z_k\} \to z^*$ with $z_k \ne z^*$ for all $k$. Then the quotient convergence factors, or Q-factors, of the sequence $\{z_k\}$ are

$$Q_\beta = \limsup_{k \to \infty} \frac{\|z_{k+1} - z^*\|}{\|z_k - z^*\|^{\beta}},$$

defined for $\beta \in [1, \infty)$ (Ortega and Rheinboldt 1970). The sequence $\{z_k\}$ exhibits linear convergence if $0 < Q_1 < 1$, sublinear convergence if $Q_1 \ge 1$, and superlinear convergence if $Q_1 = 0$. In this paper, for a more specific characterization of superlinear convergence, we say that the sequence $\{z_k\}$ exhibits polynomial convergence if $Q_1 = 0$ and $Q_\beta > 0$ for some $\beta > 1$.
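The Q-factor definitions can be illustrated by computing the successive quotients $\|z_{k+1} - z^*\| / \|z_k - z^*\|^{\beta}$ for two familiar sequences. The sketch below is an illustration only: the function names are hypothetical, the geometric sequence $0.5^k$ stands in for a linearly convergent procedure, and Newton's iteration for $\sqrt{2}$ stands in for a polynomially convergent one. A finite list of quotients can only suggest the value of the limit superior; it does not compute it.

```python
import math

def q_ratios(errors, beta):
    """Successive quotients e_{k+1} / e_k**beta for a list of positive errors."""
    return [errors[k + 1] / errors[k] ** beta for k in range(len(errors) - 1)]

def newton_sqrt2_errors(x0=2.0, steps=4):
    """Errors |x_k - sqrt(2)| of Newton's iteration x <- (x + 2/x) / 2."""
    target = math.sqrt(2.0)
    x = x0
    errs = [abs(x - target)]
    for _ in range(steps):
        x = 0.5 * (x + 2.0 / x)
        errs.append(abs(x - target))
    return errs

linear_errors = [0.5 ** k for k in range(1, 11)]
newton_errors = newton_sqrt2_errors()

if __name__ == "__main__":
    print("linear,  beta=1:", q_ratios(linear_errors, 1.0)[-1])
    print("Newton,  beta=1:", q_ratios(newton_errors, 1.0)[-1])
    print("Newton,  beta=2:", q_ratios(newton_errors, 2.0)[-1])
```

For the geometric sequence the quotients with $\beta = 1$ stay at a constant strictly between 0 and 1, which is the signature of linear convergence. For the Newton errors the quotients with $\beta = 1$ shrink toward zero while those with $\beta = 2$ settle near a positive constant, matching the definition of polynomial convergence given above.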

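We now state the conditions C.1, C.2, and C.3, to be satisfied by the sequences $\{\epsilon_k\}$ and $\{m_k\}$.

C.1. When the numerical procedure used to solve sample-path problems exhibits

(a) linear convergence: $\liminf_{k \to \infty} \epsilon_k \sqrt{m_{k-1}} > 0$;

(b) polynomial convergence: $\liminf_{k \to \infty} \left( \log(1/\sqrt{m_{k-1}}) / \log(\epsilon_k) \right) > 0$.

C.2. $\limsup_{k \to \infty} \left( \sum_{j=1}^{k} m_j \right) \epsilon_k^2 < \infty$.

C.3. $\limsup_{k \to \infty} \left( \sum_{j=1}^{k} m_j \right) m_k^{-1} < \infty$.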

The conditions C.1, C.2, and C.3 have a clear physical interpretation. The condition C.1 says, for instance, that the sequence $\{\epsilon_k\}$ of error tolerances should not be reduced to zero too fast compared to the sequence of sample sizes $\{m_k\}$. Understandably, the notion of "too fast" depends on the convergence rate of the numerical procedure in use, thus warranting the expression of condition C.1 in two parts. The condition C.1(a) is a more stringent special case of C.1(b), i.e., sequences $\{\epsilon_k\}$, $\{m_k\}$ that satisfy condition C.1(a) automatically satisfy C.1(b). This is expected because procedures that exhibit polynomial convergence, as defined, take a far smaller number of asymptotic steps to solve to a specific error tolerance than do procedures that exhibit linear convergence. The intuitive sense behind condition C.1 is solving the sample-path problems for only as long as we do not "chase randomness," i.e., only to the extent that it is beneficial from the standpoint of getting closer to the true solution $x^*$. In order to understand conditions C.2 and C.3, we notice that the number of points visited $N_k \ge 1$ for all $k$. Therefore, during the $k$th iteration, at least $m_k$ amount of work is done. This work would be wasted if there is no movement in going from the $(k-1)$th retrospective solution $X_{k-1}$ to the $k$th retrospective solution $X_k$. This can happen either because $\epsilon_k$ is chosen so large that the stipulated tolerance is too easily satisfied, or because the difference between $m_{k-1}$ and $m_k$ is so small that there is little change between the $(k-1)$th sample-path solution $X_{k-1}^*$ and the $k$th sample-path solution $X_k^*$. To avoid these scenarios, the conditions C.2 and C.3 impose a lower bound on the rate at which the error-tolerance sequence $\{\epsilon_k\}$ and

the sample-size sequence $\{m_k\}$ go to their respective limits. We discuss specific examples of sequences that satisfy conditions C.1, C.2, and C.3 in §5.3.
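The quantities whose limits appear in conditions C.1, C.2, and C.3 are easy to tabulate for candidate sequences. The sketch below is an illustration under assumptions: the function name `diagnostics`, the geometric schedule $m_k = 4 \cdot 2^k$, the linear schedule $m_k = 10k$, and the pairing $\epsilon_k = 1/\sqrt{m_k}$ are hypothetical choices made here, and finite-$k$ values can only hint at the behavior of a limit inferior or limit superior.

```python
import math

def diagnostics(m, eps):
    """Finite-k values of the quantities whose limits appear in C.1-C.3.

    m and eps are lists holding m_1..m_K and eps_1..eps_K (eps values in (0, 1)).
    """
    rows, cumulative = [], 0
    for k in range(len(m)):
        cumulative += m[k]                      # sum of m_j for j <= k
        row = {
            "C2": cumulative * eps[k] ** 2,     # should stay bounded
            "C3": cumulative / m[k],            # should stay bounded
        }
        if k > 0:
            # should stay bounded away from zero
            row["C1a"] = eps[k] * math.sqrt(m[k - 1])
            row["C1b"] = math.log(1.0 / math.sqrt(m[k - 1])) / math.log(eps[k])
        rows.append(row)
    return rows

K = 30
geo_m = [4 * 2 ** k for k in range(1, K + 1)]
geo = diagnostics(geo_m, [1.0 / math.sqrt(v) for v in geo_m])
lin_m = [10 * k for k in range(1, K + 1)]
lin = diagnostics(lin_m, [1.0 / math.sqrt(v) for v in lin_m])

if __name__ == "__main__":
    print("geometric:", geo[-1])
    print("linear:   ", lin[-1])
```

Under these assumed schedules, the geometric sample sizes keep all four quantities within fixed bounds, whereas for the linear schedule the C.2 and C.3 quantities equal $(k+1)/2$ and therefore grow without bound, which suggests that sample sizes growing only linearly accumulate too much work in early iterations relative to the final sample size.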

As a measure of effectiveness, we use the product of work and squared error. Therefore, at the end of the $k$th iteration, if the retrospective solution is $X_k$ and the total number of simulation calls expended from iterations 1 through $k$ is $W_k$, then the random variable of interest is $W_k \|X_k - x^*\|^2$, with smaller values of the random variable being better. See §6 for more on this measure.

We also remind the reader of the stochastic version $O_p(\cdot)$ of the $O(\cdot)$ relation, useful in discussing stochastic convergence. By $X_n = O_p(1)$ we mean that the sequence of random variables $\{X_n\}$ is such that for every $\epsilon > 0$ there exist $M_\epsilon$, $N_\epsilon$ such that $F_n(M_\epsilon) - F_n(-M_\epsilon) > 1 - \epsilon$ for all $n > N_\epsilon$, where $F_n$ is the distribution function of $X_n$. Furthermore, we say $X_n$ is $O_p(Y_n)$ if $X_n / Y_n$ is $O_p(1)$. Therefore, if $\{X_n\}$ and $\{Y_n\}$ are sequences of random variables that converge to zero wp1, and if $X_n$ is $O_p(Y_n)$, we say in loose terms that $\{X_n\}$ converges at least as fast as $\{Y_n\}$.

We are now ready to state two results that establish the sense in which it is beneficial to choose sequences $\{\epsilon_k\}$ and $\{m_k\}$ that satisfy conditions C.1, C.2, and C.3.

Theorem 5. Assume that the $k$th sample-path problem is solved successfully wp1, i.e., a retrospective solution $X_k$ is found during the $k$th iteration so that $\|X_k - X_k^*\| \le \epsilon_k$ wp1, where $X_k^*$ is the unique sample-path solution to the $k$th sample-path problem. Assume that $\{\epsilon_k\} \to 0$ and $\{m_k\} \to \infty$. Let $W_k = \sum_{j=1}^{k} N_j m_j$ be the total number of simulation calls expended from iterations 1 through $k$ in solving the sample-path problems, where $N_j$ is the number of points observed during the $j$th iteration and $m_j$ is the sample size used to generate the sample-path function during the $j$th iteration. If the sequences $\{\epsilon_k\}$, $\{m_k\}$ satisfy conditions C.1, C.2, and C.3, then $W_k \|X_k - x^*\|^2 = O_p(1)$.

Proof. In solving the sample-path problem during the $k$th iteration, the initial solution is $X_{k-1}$, and the objective is to reach a point within a ball of radius $\epsilon_k$ centered on $X_k^*$. Let $N_k$ be the number of points visited in achieving this. Then, because the sample size used during the $k$th iteration is $m_k$, the total work done up to the $k$th iteration is $W_k = \sum_{j=1}^{k} N_j m_j$. We will now prove the result in two cases characterized by whether the numerical procedure used to solve the sample-path problems exhibits (i) polynomial convergence or (ii) linear convergence.

Case (i) (polynomial convergence): Recall that for the $k$th sample-path problem, $\|X_{k-1} - X_k^*\|$ is the initial distance to the solution, and $\epsilon_k$ is the stipulated error tolerance. Because the numerical procedure used for solving the sample-path problem converges superlinearly with Q-order $\beta > 1$, the number of points visited

$$N_k = O\left(1 + \frac{1}{\log \beta} \log\left(\frac{\log \epsilon_k}{\log \|X_{k-1} - X_k^*\|}\right)\right). \quad (1)$$

The above expression for $N_k$ is obtained upon noting that the errors for a numerical procedure exhibiting polynomial convergence look like $\|X_{k-1} - X_k^*\|$, $\|X_{k-1} - X_k^*\|^{\beta}$, $\|X_{k-1} - X_k^*\|^{\beta^2}, \ldots$, and then solving for the smallest $n$ such that $\|X_{k-1} - X_k^*\|^{\beta^n} \le \epsilon_k$.

We know from the CLT on $X_k^*$ (Theorem 4 and Shapiro 2000), and because $X_{k-1}$ is located within a ball of radius $\epsilon_{k-1}$ around $X_{k-1}^*$, that $\|X_{k-1} - X_k^*\| = O_p(1/\sqrt{m_{k-1}} + 1/\sqrt{m_k} + \epsilon_{k-1})$, and so

$$N_k = O_p\left(1 + \frac{1}{\log \beta} \log\left(\frac{\log \epsilon_k}{\log(1/\sqrt{m_{k-1}} + 1/\sqrt{m_k} + \epsilon_{k-1})}\right)\right).$$

From the above expression for $N_k$, and because the condition C.1(b) holds,

$$N_k = O_p(1) \quad \text{and} \quad W_k = \sum_{j=1}^{k} N_j m_j = O_p\left(\sum_{j=1}^{k} m_j\right). \quad (2)$$

Again, from the CLT on $X_k^*$ and the relation between $X_k^*$ and $X_k$, we know that $\|X_k - x^*\|^2 = O_p((1/\sqrt{m_k} + \epsilon_k)^2)$. Therefore, if $\{\epsilon_k\}$ and $\{m_k\}$ are chosen to satisfy conditions C.2 and C.3,

$$W_k \|X_k - x^*\|^2 = O_p\left(\left(\sum_{j=1}^{k} m_j\right)\left(\frac{1}{\sqrt{m_k}} + \epsilon_k\right)^2\right) = O_p(1).$$

Case (ii) (linear convergence): The proof is very similar to the previous case, with the condition C.1(a) active instead of C.1(b), and the following alternate expression for $N_k$ instead of (1):

$$N_k = O\left(1 + \frac{1}{\log r} \log\left(\frac{\epsilon_k}{\|X_{k-1} - X_k^*\|}\right)\right), \quad (3)$$

where $r$ is a constant satisfying $0 < r < 1$. The expression for $N_k$ is obtained upon noting that the errors for a numerical procedure exhibiting linear convergence look like $\|X_{k-1} - X_k^*\|$, $r\|X_{k-1} - X_k^*\|$, $r^2\|X_{k-1} - X_k^*\|, \ldots$, and then solving for the smallest $n$ such that $r^n \|X_{k-1} - X_k^*\| \le \epsilon_k$.
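The step counts behind expressions (1) and (3) can be checked with a few lines of arithmetic. The sketch below is an illustration under idealized assumptions: the errors are taken to follow exactly the patterns $r^n e_0$ and $e_0^{\beta^n}$ described in the proof, with $0 < \epsilon < 1$ and $0 < e_0 < 1$, and all function names are hypothetical. It counts steps by direct iteration and compares the count with the closed-form expression obtained by solving for the smallest $n$.

```python
import math

def steps_linear(e0, eps, r):
    """Smallest n with r**n * e0 <= eps, found by direct iteration."""
    n, err = 0, e0
    while err > eps:
        err *= r
        n += 1
    return n

def steps_polynomial(e0, eps, beta):
    """Smallest n with e0**(beta**n) <= eps, assuming 0 < e0 < 1 and beta > 1."""
    n, err = 0, e0
    while err > eps:
        err = err ** beta
        n += 1
    return n

def bound_linear(e0, eps, r):
    """Closed form matching expression (3): log(eps / e0) / log(r), rounded up."""
    return max(0, math.ceil(math.log(eps / e0) / math.log(r)))

def bound_polynomial(e0, eps, beta):
    """Closed form matching expression (1); requires 0 < eps < 1 and 0 < e0 < 1."""
    ratio = math.log(eps) / math.log(e0)
    return max(0, math.ceil(math.log(ratio) / math.log(beta)))

if __name__ == "__main__":
    e0, eps = 0.5, 1e-8
    print(steps_linear(e0, eps, r=0.5), bound_linear(e0, eps, r=0.5))
    print(steps_polynomial(e0, eps, beta=2.0), bound_polynomial(e0, eps, beta=2.0))
```

With an initial error of 0.5 and a tolerance of $10^{-8}$, the polynomially convergent pattern needs far fewer steps than the linearly convergent one, which is the reason condition C.1 is stated separately for the two cases.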

Having shown that conditions C.1, C.2, and C.3 ensure that the random variable of interest $W_k \|X_k - x^*\|^2$ is $O_p(1)$, we now turn to what happens when one or more of the conditions C.1, C.2, and C.3 are violated. We show in Theorem 6 that under Assumption 2, when at least one of the conditions C.1, C.2, or C.3 is violated, the random variable $W_k \|X_k - x^*\|^2 \xrightarrow{p} \infty$.

Assumption 2. The sequence $\{(1/\sqrt{m_k} + \epsilon_k)^{-2} \|X_k - x^*\|^2\} \xrightarrow{d} \Psi$, where $\Pr\{\Psi = 0\} = 0$.

In loose terms, Assumption 2 states that the RA algo-

rithm in use does not magically know the location of the

true solution $x^*$ relative to the sample-path solution $X_k^*$. Because $X_k = X_k^* + \Delta_k$, where $\Delta_k$ is a random variable supported on a sphere of radius $\epsilon_k$, the factor $(1/\sqrt{m_k} + \epsilon_k)^{-2}$ is the correct scaling for stabilizing the random variable $\|X_k - x^*\|^2$.

Theorem 6. Let the retrospective solutions $\{X_k\}$ satisfy Assumption 2. Then, if at least one of the conditions C.1, C.2, or C.3 is violated, $W_k \|X_k - x^*\|^2 \xrightarrow{p} \infty$.

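Proof. If condition C.1 is violated, and if the limit in the definition of Q-factors exists, the number of points $N_k \xrightarrow{p} \infty$. Because Assumption 2 holds, for given $\eta > 0$ there exists $\delta(\eta) > 0$ so that

$$\Pr\{(1/\sqrt{m_k} + \epsilon_k)^{-2} \|X_k - x^*\|^2 \ge \delta(\eta)\} \ge 1 - \eta \quad (4)$$

for large enough $k$. Also,

$$W_k \|X_k - x^*\|^2 \ge N_k m_k \frac{(1/\sqrt{m_k} + \epsilon_k)^{-2} \|X_k - x^*\|^2}{(1/\sqrt{m_k} + \epsilon_k)^{-2}}. \quad (5)$$

Using (4), and because $N_k \xrightarrow{p} \infty$, we see that for any given $M, \eta > 0$,

$$\Pr\left\{ N_k m_k \frac{(1/\sqrt{m_k} + \epsilon_k)^{-2} \|X_k - x^*\|^2}{(1/\sqrt{m_k} + \epsilon_k)^{-2}} \ge M \right\} \ge \Pr\left\{ \left\{ \frac{N_k m_k}{(1/\sqrt{m_k} + \epsilon_k)^{-2}} \ge \frac{M}{\delta(\eta)} \right\} \cap \left\{ (1/\sqrt{m_k} + \epsilon_k)^{-2} \|X_k - x^*\|^2 \ge \delta(\eta) \right\} \right\} \ge 1 - 2\eta, \quad (6)$$

for large enough $k$, and some $\delta(\eta) > 0$. Conclude from (5) and (6) that $W_k \|X_k - x^*\|^2 \xrightarrow{p} \infty$.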

If condition C.2 or C.3 is violated, the proof is similar, after noticing that

W

k

[X

k

x

[

2

_

k

_

]=1

m

]

_

(1,

m

k

e

k

)

2

[X

k

x

[

2

(1,