DT131 Easier Approaches Harder Problems

www.kimballgroup.com

Number 131, February 3, 2011

Design Tip #131 Easier Approaches For Harder Problems

By Ralph Kimball

Data warehouses are under intense architectural pressure. The business world has become obsessed with data, and new opportunities for adding data sources to our data warehouse environments are arriving every day. In many cases analysts within our organizations are discovering "data features" that can be demonstrably monetized by the business to improve the customer experience, or increase the conversion rate to purchase a product, or discover a demographic characteristic of particularly valuable customers. When the analyst makes a compelling business case to bring a new data source into our environment, there is often pressure to do it quickly.

And then the hard work begins. How do we integrate the new data source with our current environment? Do the keys match? Are geographies and names defined the same way? What do we do about the quality issues in the new data source that are revealed after we do some sleuthing with our data profiling tool? We often do not have the luxury of conforming all the attributes in the new data source to our current data warehouse content, and in many cases we cannot immediately control the data collection practices at the source in order to eliminate bad data. As data warehouse professionals we are expected to solve these problems without complaining!

Borrowing a page from the agile playbook, we need to address these big problems with an iterative approach that delivers meaningful data payloads to the business users quickly, ideally within days or weeks of first seeing the requirement. Let's tackle the two hardest problems: integration and data quality.

Incremental Integration

In a dimensionally modeled data warehouse, integration takes the form of commonly defined fields in the dimension tables appearing in each of the sources. We call these conformed dimensions. Take for example a dimension called Customer that is attached to virtually every customer-facing process for which we have data in the data warehouse. It is possible, maybe even typical, for the original customer dimensions supplied with each source to be woefully incompatible. There may be dozens or even hundreds of fields that have different field names and whose contents are drawn from incompatible domains.

The secret, powerful idea for addressing this problem is not to take on all the issues at once. Rather, a very small subset of the fields in the Customer dimension is chosen to participate in the conforming process. In some cases, special new "enterprise fields" are defined that are populated in a consistent way across all of the otherwise incompatible sources. For example, a new field could be called "enterprise customer category." Although this sounds like a rather humble start on the larger integration challenge, even this one field allows drilling across all of the data sources that have added this field to their customer dimension in a consistent way.

Once one or more conformed fields exist in some or hopefully all of the data sources, you need a BI tool that is capable of issuing a federated query. We have described this final BI step many times in our classes and books, but the essence is that you fetch back separate answer sets from the disparate data sources, each constrained and grouped only on the conformed fields, and then you sort-merge these answer sets into the final payload delivered by the BI tool to the business user.

There are many powerful advantages to this approach, including the ability to proceed incrementally by adding new conformed fields within the important dimensions, by adding new data sources that sign up to using the conformed fields, and by performing highly distributed federated queries across incompatible technologies and physical locations. What's not to like about this?
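The drill-across step described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the way any particular BI tool implements federated queries: the two in-memory row lists stand in for separate data sources, and the names ORDERS, SUPPORT_CALLS, answer_set, and drill_across are hypothetical. The only thing the two sources share is the conformed field, here spelled enterprise_customer_category.

```python
from collections import defaultdict

CONFORMED_FIELD = "enterprise_customer_category"  # hypothetical spelling of the conformed field

# Two stand-in "sources" that agree on nothing except the conformed field.
ORDERS = [
    {CONFORMED_FIELD: "Retail", "order_amount": 120.0},
    {CONFORMED_FIELD: "Wholesale", "order_amount": 900.0},
    {CONFORMED_FIELD: "Retail", "order_amount": 80.0},
]
SUPPORT_CALLS = [
    {CONFORMED_FIELD: "Retail", "call_count": 3},
    {CONFORMED_FIELD: "Government", "call_count": 1},
]


def answer_set(rows, measure):
    """Group one source's rows on the conformed field and sum a single measure."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[CONFORMED_FIELD]] += row[measure]
    return dict(totals)


def drill_across(answer_sets):
    """Sort-merge the separate answer sets on the conformed field.

    This behaves like a full outer merge: a category missing from one
    source still appears, with None for that source's measure.
    """
    keys = sorted(set().union(*(a.keys() for a in answer_sets.values())))
    return [
        {CONFORMED_FIELD: key, **{name: a.get(key) for name, a in answer_sets.items()}}
        for key in keys
    ]


# Each source is queried separately, then the answer sets are merged.
result = drill_across({
    "order_amount": answer_set(ORDERS, "order_amount"),
    "call_count": answer_set(SUPPORT_CALLS, "call_count"),
})

for row in result:
    print(row)
```

Because each answer set is computed independently, the sources could in principle live in different technologies or locations; only the merge step needs to see all of them, and it touches only the small, already-aggregated answer sets.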

Incremental Data Quality

Important issues of data quality can be addressed with the same mindset that we used with the integration problem. In this case, we have to accept the fact that very little corrupt or dirty data can be reliably fixed by the data warehouse team downstream from the source. The long-term solution to data quality involves two major steps: first, we have to diagnose and tag the bad data so that we can avoid being misled while making decisions; and second, we need to apply pressure where we can on the original sources to improve their business practices so that better data enters the overall data flow.

Again, like integration, we chip off small pieces of the data quality problem in a nondisruptive and incremental way, so that over a period of time we develop a comprehensive understanding of where the data problems reside and what progress we're making in fixing them.

The data quality architecture that we recommend is to introduce a series of quality tests, or "screens," that watch the data flow and post error event records into a special dimensional model in the back room whose sole function is to record data quality error events. Again, the development of the screens can proceed incrementally from a modest start to gradually create a comprehensive data quality architecture suffusing all of the data pipelines from the original sources through to the BI tool layer.

We have written extensively about the two overarching challenges of integration and data quality. Besides our modeling and ETL books, I've written two white papers on these topics if you want to take a deeper dive into the detailed techniques. While these papers were commissioned by Informatica, the content is vendor agnostic. Links to the white papers (An Architecture for Data Quality, written in October 2007, and Essential Steps to an Integrated DW, written in February 2008) are available from our website; however, be forewarned that you'll need to register with Informatica to download them.
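The screen and error event idea described above can be sketched in a few lines of Python. This is a rough illustration under assumptions, not the architecture from the white papers: the ErrorEvent and Screen classes, the run_screens function, the two sample screens, and the sample rows are all hypothetical, and a plain list stands in for the back room error event table. Note that the sketch only diagnoses and tags rows; it does not try to repair them.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class ErrorEvent:
    """One row in the (hypothetical) error event table."""
    event_time: datetime
    screen_name: str
    source: str
    record_id: str
    detail: str


@dataclass
class Screen:
    """A named quality test; check returns a problem description or None."""
    name: str
    check: Callable[[dict], Optional[str]]


def run_screens(rows, screens, source, error_events):
    """Apply every screen to every row, posting an error event per failure.

    Returns copies of the rows tagged with the names of the screens they
    failed; the incoming rows themselves are left unchanged.
    """
    tagged = []
    for row in rows:
        failed = []
        for screen in screens:
            problem = screen.check(row)
            if problem is not None:
                failed.append(screen.name)
                error_events.append(ErrorEvent(
                    datetime.now(timezone.utc), screen.name, source,
                    str(row["id"]), problem))
        tagged.append({**row, "failed_screens": failed})
    return tagged


# A modest starting set of screens; more can be added incrementally.
screens = [
    Screen("postal_code_present",
           lambda r: None if r.get("postal_code") else "postal_code is missing"),
    Screen("amount_non_negative",
           lambda r: "amount is negative" if r["amount"] < 0 else None),
]

rows = [
    {"id": 1, "postal_code": "94025", "amount": 10.0},
    {"id": 2, "postal_code": "", "amount": -5.0},
    {"id": 3, "postal_code": None, "amount": 7.5},
]

error_events = []  # stand-in for the back room error event table
tagged = run_screens(rows, screens, "orders_feed", error_events)

for event in error_events:
    print(event.screen_name, event.record_id, event.detail)
```

Keeping each screen as a small named test is what allows the incremental start: a new screen is one more entry in the list, and the accumulated error events can later be counted by screen, source, or date to show where the problems reside and whether they are shrinking.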

You might also like

- 5.case ToolsDocument16 pages5.case ToolsﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞﱞNo ratings yet

- Company Profile - Trans Shipping Agency 2Document4 pagesCompany Profile - Trans Shipping Agency 2Muhammad Abdu Rahman BatuBaraNo ratings yet

- Big Data TestingDocument9 pagesBig Data TestingbipulpwcNo ratings yet

- Report Requirements DatawarehouseDocument41 pagesReport Requirements DatawarehouseYuki ChanNo ratings yet

- Manish SharmaDocument1 pageManish SharmaPiyush ChauhanNo ratings yet

- Aws Data Analytics FundamentalsDocument15 pagesAws Data Analytics Fundamentals4139 NIVEDHA S100% (1)

- Meeting DWH QA Challenges Part 1Document9 pagesMeeting DWH QA Challenges Part 1Wayne YaddowNo ratings yet

- Why You Need A Data WarehouseDocument8 pagesWhy You Need A Data WarehouseNarendra ReddyNo ratings yet

- Module 6 AssignmentDocument3 pagesModule 6 AssignmentPATRICIA MAE BELENNo ratings yet

- 2014 Solutions Review Data Integration Buyers Guide MK63Document18 pages2014 Solutions Review Data Integration Buyers Guide MK63wah_hasNo ratings yet

- Database Design and Management WhitepaperDocument10 pagesDatabase Design and Management WhitepaperPrince Sorison OriatareNo ratings yet

- PlanningDocument12 pagesPlanningFlorrNo ratings yet

- Data Warehouse and Business Intelligence Testing: Challenges, Best Practices & The SolutionDocument15 pagesData Warehouse and Business Intelligence Testing: Challenges, Best Practices & The SolutionAnonymous S5fcPaNo ratings yet

- Data Cleaning Research PaperDocument5 pagesData Cleaning Research Paperzeiqxsbnd100% (1)

- DataStage Product Family Architectural Overview WhitepaperDocument34 pagesDataStage Product Family Architectural Overview WhitepaperfortizandresNo ratings yet

- Data AnalyticsDocument8 pagesData AnalyticssoniiimoniiikaluNo ratings yet

- Free Research Paper On Data WarehousingDocument4 pagesFree Research Paper On Data Warehousinggpxmlevkg100% (1)

- Data Mining Unit - 1 NotesDocument16 pagesData Mining Unit - 1 NotesAshwathy MNNo ratings yet

- DMDWDocument4 pagesDMDWRKB100% (1)

- Fables FactsDocument7 pagesFables FactsAnirban SarkerNo ratings yet

- Test 8Document6 pagesTest 8Robert KegaraNo ratings yet

- Thesis Data WarehouseDocument8 pagesThesis Data Warehousesyn0tiwemym3100% (2)

- Data Warehouse Thesis PDFDocument5 pagesData Warehouse Thesis PDFgjbyse71100% (2)

- Executive Overview: What Exists AlreadyDocument5 pagesExecutive Overview: What Exists AlreadyrizzappsNo ratings yet

- An Introduction To SQL Server 2005 Integration ServicesDocument18 pagesAn Introduction To SQL Server 2005 Integration ServicesAndy SweepstakerNo ratings yet

- Dissertation Data WarehouseDocument8 pagesDissertation Data WarehouseWriteMyPapersCanada100% (1)

- Building A DWDocument3 pagesBuilding A DWmmjerryNo ratings yet

- 1.4 The Database Approach: Four Entities of Enterprise Data ModelDocument12 pages1.4 The Database Approach: Four Entities of Enterprise Data ModelJoychii SyNo ratings yet

- Data Warehouse HomeworkDocument8 pagesData Warehouse Homeworkqmgqhjulg100% (1)

- DataStage MatterDocument81 pagesDataStage MatterEric SmithNo ratings yet

- Business IntelligenceDocument27 pagesBusiness IntelligencemavisNo ratings yet

- See How Talend Helped Domino's: Integrate Data From 85,000 SourcesDocument6 pagesSee How Talend Helped Domino's: Integrate Data From 85,000 SourcesDastagiri SahebNo ratings yet

- Business IntelligenceDocument144 pagesBusiness IntelligencelastmadanhireNo ratings yet

- All The Ingredients For Success: Data Governance, Data Quality and Master Data ManagementDocument22 pagesAll The Ingredients For Success: Data Governance, Data Quality and Master Data ManagementStrauNo ratings yet

- Data Warehousing Research Paper TopicsDocument8 pagesData Warehousing Research Paper Topicsgvyztm2f100% (1)

- Oracle: Big Data For The Enterprise: An Oracle White Paper October 2011Document15 pagesOracle: Big Data For The Enterprise: An Oracle White Paper October 2011Manish PalaparthyNo ratings yet

- Data Warehouse Dissertation TopicsDocument7 pagesData Warehouse Dissertation TopicsBestOnlinePaperWritersBillings100% (1)

- Fall 2013 Assignment Program: Bachelor of Computer Application Semester 6Th Sem Subject Code & Name Bc0058 - Data WarehousingDocument9 pagesFall 2013 Assignment Program: Bachelor of Computer Application Semester 6Th Sem Subject Code & Name Bc0058 - Data WarehousingMrinal KalitaNo ratings yet

- DataStage MatterDocument81 pagesDataStage MatterShiva Kumar0% (1)

- Data Warehousing and Business IntelligenceDocument15 pagesData Warehousing and Business IntelligenceBenz ChoiNo ratings yet

- Pbbi Data Warehousing Keys To Success WP UsaDocument16 pagesPbbi Data Warehousing Keys To Success WP UsasubramanyamsNo ratings yet

- Data Warehousing Keys To Success WP UsDocument16 pagesData Warehousing Keys To Success WP UssatheeshbabunNo ratings yet

- Unit 3 NotesDocument20 pagesUnit 3 NotesRajkumar DharmarajNo ratings yet

- Customer Relationship Management: Service Quality Gaps, Data Warehousing, Data MiningDocument6 pagesCustomer Relationship Management: Service Quality Gaps, Data Warehousing, Data MiningAsees Babber KhuranaNo ratings yet

- Dissertation Topics in Data WarehousingDocument5 pagesDissertation Topics in Data WarehousingWriteMyPsychologyPaperUK100% (1)

- DWH Interview Questions - Data WarehousingDocument57 pagesDWH Interview Questions - Data WarehousingMichael ColdNo ratings yet

- Data Warehouse Augmentation, Part 1 - Big Data and Data Warehouse AugmentationDocument7 pagesData Warehouse Augmentation, Part 1 - Big Data and Data Warehouse Augmentationmukhopadhyay00No ratings yet

- Conceptual Modelling in Data WarehousesDocument7 pagesConceptual Modelling in Data WarehousesMuhammad JavedNo ratings yet

- BMD0003 Intelligent Business Information SystemsDocument11 pagesBMD0003 Intelligent Business Information SystemsRabel ShaikhNo ratings yet

- Business Intelligence Introduced by HowardDocument59 pagesBusiness Intelligence Introduced by HowardkuldiipsNo ratings yet

- Data Warehousing BC0058 BCA Sem6 Fall2013 AssignmentDocument13 pagesData Warehousing BC0058 BCA Sem6 Fall2013 AssignmentShubhra Mohan MukherjeeNo ratings yet

- Virtual Versus Physical Data IntegrationDocument6 pagesVirtual Versus Physical Data IntegrationgeoNo ratings yet

- CS614 Final Term Solved Subjectives With Referencesby MoaazDocument27 pagesCS614 Final Term Solved Subjectives With Referencesby MoaazchiNo ratings yet

- BDA Unit - 4Document8 pagesBDA Unit - 4ytpre1528No ratings yet

- Decision Support System: Unit 1Document34 pagesDecision Support System: Unit 1ammi890No ratings yet

- Populating A DW With SS2KDocument5 pagesPopulating A DW With SS2Kwinit1478No ratings yet

- Real-Time Database Sharing: What Can It Do For Your Business?Document10 pagesReal-Time Database Sharing: What Can It Do For Your Business?George AmousNo ratings yet

- Knight's Microsoft Business Intelligence 24-Hour Trainer: Leveraging Microsoft SQL Server Integration, Analysis, and Reporting Services with Excel and SharePointFrom EverandKnight's Microsoft Business Intelligence 24-Hour Trainer: Leveraging Microsoft SQL Server Integration, Analysis, and Reporting Services with Excel and SharePointRating: 3 out of 5 stars3/5 (1)

- Spreadsheets To Cubes (Advanced Data Analytics for Small Medium Business): Data ScienceFrom EverandSpreadsheets To Cubes (Advanced Data Analytics for Small Medium Business): Data ScienceNo ratings yet

- Navigating Big Data Analytics: Strategies for the Quality Systems AnalystFrom EverandNavigating Big Data Analytics: Strategies for the Quality Systems AnalystNo ratings yet

- Building and Operating Data Hubs: Using a practical Framework as ToolsetFrom EverandBuilding and Operating Data Hubs: Using a practical Framework as ToolsetNo ratings yet

- The Origins of Michael Burry, OnlineDocument2 pagesThe Origins of Michael Burry, OnlineekidenNo ratings yet

- Invoice 41071Document2 pagesInvoice 41071Zinhle MpofuNo ratings yet

- Export Import Newsletter 18NOV2022Document12 pagesExport Import Newsletter 18NOV2022sbsreddyNo ratings yet

- Dematic - JDDocument1 pageDematic - JDVarun HknzNo ratings yet

- Spookyworld, Inc. v. Town of Berlin, 346 F.3d 1, 1st Cir. (2003)Document12 pagesSpookyworld, Inc. v. Town of Berlin, 346 F.3d 1, 1st Cir. (2003)Scribd Government DocsNo ratings yet

- Statement of Cash FlowsDocument30 pagesStatement of Cash FlowsNocturnal Bee100% (1)

- Afd - AENT220218Document10 pagesAfd - AENT220218Asbini SitharamNo ratings yet

- Change ManagementDocument14 pagesChange ManagementLegese TusseNo ratings yet

- 3 PLDocument26 pages3 PLNigam MohapatraNo ratings yet

- EAM PresentationDocument27 pagesEAM PresentationasadnawazNo ratings yet

- Designation ListDocument16 pagesDesignation ListnamratabarotNo ratings yet

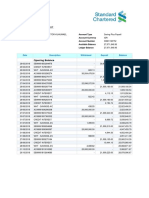

- Statement of Account: Opening Balance 0.00Document2 pagesStatement of Account: Opening Balance 0.00William VictorNo ratings yet

- Script 01Document21 pagesScript 01Raj RautNo ratings yet

- HCL Named A Leader in New Life Sciences IT Outsourcing Research Report (Company Update)Document3 pagesHCL Named A Leader in New Life Sciences IT Outsourcing Research Report (Company Update)Shyam SunderNo ratings yet

- The Duty of Good Faith in Franchise AgreementsDocument16 pagesThe Duty of Good Faith in Franchise Agreementsanne_uniNo ratings yet

- Direct Costing and Absorption Costing QuestionsDocument2 pagesDirect Costing and Absorption Costing QuestionsAbigailGraceCascoNo ratings yet

- 1661937077369693Document3 pages1661937077369693Ral's Resources, Inc.No ratings yet

- Role and Functions of Reserve Bank of IndiaDocument5 pagesRole and Functions of Reserve Bank of Indiaanusaya1988No ratings yet

- Store Accounting and DisposalDocument45 pagesStore Accounting and DisposalAnand Dubey100% (2)

- A Product Is What The Company Has To OfferDocument4 pagesA Product Is What The Company Has To OfferapplesbyNo ratings yet

- Case Analysis - 2Document2 pagesCase Analysis - 2Suyash KulkarniNo ratings yet

- What Is StrategyDocument74 pagesWhat Is StrategyBrawinNo ratings yet

- Bill Date 04/12/2021 Member Number 20222 Acount Number 20178245Document1 pageBill Date 04/12/2021 Member Number 20222 Acount Number 20178245edgarNo ratings yet

- Victoria Vs Inciong Case DigestDocument1 pageVictoria Vs Inciong Case DigestMelody Lim DayagNo ratings yet

- Basic Economics Understanding TestDocument5 pagesBasic Economics Understanding TestDr Rushen SinghNo ratings yet

- Newsletter SampleDocument35 pagesNewsletter Samplemkss11_kli053No ratings yet

- Private Equity - Investment Banks - United Arab Emirates - Draft 1Document8 pagesPrivate Equity - Investment Banks - United Arab Emirates - Draft 1hdanandNo ratings yet

- Use Financial Terms To Get Management To Take Notice of Your Quality MessageDocument12 pagesUse Financial Terms To Get Management To Take Notice of Your Quality MessageAnahii KampanithaNo ratings yet