Credit Risk Assessment Model of Commercial Banks Based on Support Vector Machines

Xin-ying Zhang

Department of Management Science and Engineering School of Economy and Management in Harbin Institute of Technology Harbin, P.R.China alpha3068@sina.com

Chong Wu

Department of Management Science and Engineering School of Economy and Management in Harbin Institute of Technology Harbin, P.R.China wuchong@hit.edu.cn

Avv. Federico Ferretti

Brunel Law School of Brunel University West London, Uxbridge, United Kingdom federico.ferretti@brunel.ac.uk

Abstract

Scope: Commercial banks, as the key to the nation's economy and the center of financial credit, play multiple irreplaceable roles in the financial system. Credit risks threaten the economic system as a whole. Predicting banks' credit risks is therefore crucial to preventing and lessening their negative effects on the economic system.

Objective: This study applies a credit risk assessment model based on support vector machines (SVMs) to a Chinese case, after analyzing the credit risk rules and building a credit risk index system. It then presents a computational comparison of the classification performance of the techniques tested, namely the Back-Propagation Neural Network (BPN) and SVMs.

Method: In this empirical study, we use SPSS (Statistical Product and Service Solutions) for factor analysis of the financial data from 157 companies, and Matlab with the Libsvm toolbox for the experimental analysis.

Conclusion: We compare the assessment results of SVMs and BPN, and the comparison indicates that SVMs are well suited to the credit risk assessment of commercial banks. Empirical results show that SVMs are effective and more advantageous than BPN. SVMs, with their simple classification hyperplane, good generalization ability, accurate goodness of fit, and strong robustness, have better prospects for development, although some problems remain, such as the space mapping of the kernels and the optimizing scale. They are worthy of further exploration and research.

Keywords: credit risk assessment; commercial bank; support vector machine

I. INTRODUCTION

As one of the most important parts of the national financial system, commercial banks play an irreplaceable role in economic development and currency stabilization through functions such as fund collection, guidance of fund flows, and the regulation of social supply and demand [1]. However, commercial banks face many risks. Of these, credit risk has commonly been identified as the key risk in terms of its influence on bank performance [2] and bank failure, so credit risk management and control matter greatly to commercial banks.

The exploration of credit risk assessment methods can be traced back to the 1930s, and it became a popular research topic in the 1960s. Roughly, it has gone through three stages of development: proportion analysis, statistical analysis, and artificial intelligence. Statistical analysis overcame the shortcomings of traditional proportion analysis, such as poor comprehensive analysis and the lack of quantitative analysis, but it has problems of its own. Since the 1980s, artificial intelligence techniques have been introduced into credit risk assessment. They overcome the shortcomings of statistical methods, which require strong assumptions and reflect risk only statically. Neural networks (NN) in particular, a non-parametric method, are self-organizing, self-adaptive, and self-learning. They offer nonlinear mapping and generalization ability as well as strong robustness and high prediction accuracy [3, 4]. But BPN has unavoidable shortcomings of its own: (1) the network structure is difficult to determine; (2) training easily becomes trapped in local extrema, and training efficiency is low.

Given the domestic status quo in credit assessment (limited accumulated data, ineffective statistical methods, and neural networks with poor learning capacity), this study introduces a generic learning algorithm, support vector machines (SVMs), which is based on the learning theory of small samples and has achieved better results when used in commercial bank credit risk assessment [5, 6]. The main difference between BPN and SVMs lies in the principle of risk minimization. While BPN implements empirical risk minimization to minimize the error on the training data, SVMs implement the principle of structural risk minimization by constructing an optimal separating hyperplane in the hidden feature space, using quadratic programming (QP) to find a unique solution. SVMs, developed by Vapnik [7], have recently received increasing attention with remarkable results. In financial applications, the main areas for SVMs are time series prediction, such as stock price index forecasting, and classification, such as credit rating and bankruptcy prediction [8].

Sponsored by Heilongjiang Youth Science Fund QC04C25.

978-1-4244-4639-1/09/$25.00 ©2009 IEEE

II. SUPPORT VECTOR MACHINES

The basic procedure for applying SVMs to a classification model can be stated briefly as follows. First, map the input vectors into a feature space of higher dimension. The mapping is either linear or nonlinear, depending on the kernel function selected. Then, within the feature space, seek an optimal separation, i.e. construct a hyperplane that separates two or more classes. Using the structural risk minimization rule, the training of SVMs always seeks a globally optimal solution and avoids over-fitting. It therefore has the ability to deal with a large number of features. The decision function (or hyperplane) determined by SVMs is composed of a set of support vectors, which are selected from the training samples.

SVMs use a linear model to implement nonlinear class boundaries by nonlinearly mapping the input vectors into a high-dimensional feature space. The linear model constructed in the new space can represent a nonlinear decision boundary in the original space. In the new space, an optimal separating hyperplane (OSH) is constructed. Thus, SVMs are known as an algorithm that finds a special kind of linear model, the maximum margin hyperplane, which gives the maximum separation between the decision classes. The training examples that are closest to the maximum margin hyperplane are called support vectors. All other training examples are irrelevant for defining the binary class boundaries [9].

As can be seen in Fig. 1, the star points and the circular points stand for two groups of samples. The line in the middle is the OSH, and H1 and H2 are the hyperplanes that pass through the closest samples in each group. These two hyperplanes are parallel to the OSH and equidistant from it. The distance between them is called the classification interval (margin). The OSH is the hyperplane that correctly separates the two categories of samples with the greatest margin.
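As a small numerical illustration of the margin and the support vectors described above, the following sketch measures the distance of each sample to a given separating hyperplane. The toy points, the hand-picked hyperplane (the line x1 = 1), and the helper names `signed_distance` and `margin_and_support_vectors` are all assumptions made for illustration; no SVM is trained here, and the hyperplane is assumed to lie midway between the two groups.

```python
import math

# Toy 2-D samples (hypothetical): label +1 for one group, -1 for the other.
samples = [
    ((2.0, 0.0), +1), ((3.0, 1.0), +1), ((2.0, 2.0), +1),
    ((0.0, 0.0), -1), ((-1.0, 1.0), -1), ((0.0, 2.0), -1),
]

# Hand-picked separating hyperplane w.x + b = 0 (here the vertical line x1 = 1).
w = (1.0, 0.0)
b = -1.0


def signed_distance(x, w, b):
    """Signed Euclidean distance from point x to the hyperplane w.x + b = 0."""
    norm_w = math.hypot(*w)
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm_w


def margin_and_support_vectors(samples, w, b, tol=1e-9):
    """Return (margin, support_vectors) for a hyperplane that separates the samples."""
    dists = [(x, y * signed_distance(x, w, b)) for x, y in samples]
    if any(d <= 0 for _, d in dists):
        raise ValueError("hyperplane does not separate the two classes")
    closest = min(d for _, d in dists)
    # Samples at the minimum distance lie on H1 or H2.
    support = [x for x, d in dists if abs(d - closest) <= tol]
    # H1 and H2 are assumed equidistant from the hyperplane.
    return 2 * closest, support


margin, support_vectors = margin_and_support_vectors(samples, w, b)
print(margin)
print(support_vectors)
```

Only the four samples closest to the line are returned as support vectors; moving any of the other samples further away leaves the margin unchanged.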
A simple description of the SVMs algorithm is provided as follows. The decision function of hyperplane used to classify is wTx+b=0, where x is the input vector, w is the adjustable weight vector, b is the bias vector and y is the corresponding expectation (target output).Assume that the two class labels which are represented by yi=+1 and yi=-1 are linearly separable. The inequalities (1) and (2) are as follows:

wTxi + b < 0

y i = 1

(2)

The linearly separable sample set (xi, yi) meets: (3) That is, the distance between the closest points of two groups equals to minimize

y i [( w T x) + b] - 1 0

2 , so to maximize the margin is equivalent to w

w .The hyperplane that meets the inequalities (3)

1 w 2

2

and minimizes

is the optimal hyperplane,

and the

input vector when the equality in (3) holds is called the support vector . Lagrange optimization can be used to turn the issue of optimal classification plane into its dual issue [10], with the same optimal value as that of the original issue. Hence, we can get the optimal solution through the Lagrange multipliers. The minimization procedure uses Lagrange multipliers and QP optimization methods to get the only optimal solution. The optimal classification function is n f ( x ) = sgn {(w T x ) + b} = sgn y (x x ) + b (4)

j=1

j j j

Where is the Lagrange multiplier corresponding to j every sample, and it can be attested that the corresponding sample is the support vector as long as one part of is not j zero, and b is the classification threshold and can be obtained from any support vector, or through getting the mid-value in any pair of support vectors from the two types. For the linearly no separable cases, a slack variable i, i=1,, n can be introduced with i=0[11] so that (3) can be written as:

y i ( w x i + b) + i 1

(5)

and the solution to find a generalized OSH, also called a soft margin hyperplane, can be obtained using the conditions:

1 w 2

wTxi + b 0

margin

y i = +1

(1)

w , b , 1 ,...

min

[

n

+ C

(6)

i]

i =1

w Txi + b 0

yi = +1

s.t.

yi (w +b) 1i ,

i =1,..., n

where

i 0

(7)

1 2 w in (9) is same as in the linearly separable case to control 2

w Txi + b < 0 y i = 1

the learning capacity, while C i controls the number of

H1 H2

Fig.1 OSH in Linearly Separable Case

i =1

misclassified errors, and C is chosen by the user as a non-negative parameter, deciding a trade-off between the

OSH

training error and the margin[12]. Larger value of C means assigning a higher penalty to errors. The solving procedures are similar to linear cases as long as the constraints should be

A.

Index System and Sample datum processing

a y

i i =1

= 0, , where 0 ai C , i = 1,2,..., n

(8)

In nonlinear cases, we can, through the nonlinear transformation, turn them into a linear one in high-dimensional linear space, and get the optimal separating plane in the transformed space. As long as the proper kernel function K ( xi , x j ) [13] is used to take the place of the inner product in the original space, a certain linear separation can be gained after the nonlinear transformation [14]. Thus we avoid a specific nonlinear transformation, and this separating function can be written as: n (9) f ( x ) = sgn i yi K ( x i , x j ) + b i =1 The classification function can be used to classify the credit data so as to determine whether or not to grant loans to enterprises [15]. The whole process of classification based on SVMs can be seen in Fig 2.

y

Sgn

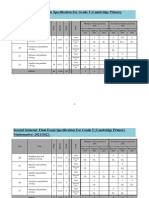

In this research, we ultimately select 16 indexes in credit risk assessment of commercial banks and get 4 factors with SPSS as can be seen in Tab.1. Credit risk is separated into two classes in this study. They are normality class and loss class. Normality class indicates the debtors can pay off the loan and interest on time without any risk while loss class indicates the debtors can not pay off the loan and interest on time. We use +1 to represent the normality risk class and -1 to represent the loss class when we use SVMs to assess credit risk. First, we work on the stability of the samples and eliminate the abnormal data, using twice or three times standard deviation testand ultimately obtain 157 sample data. Among these data from 157 enterprises, 80 enterprises' financial conditions are good and the risks for banks loans are small. We refer to these companies as normality class and we call the remaining 77 companies loss class because their financial conditions are bad and the possibility of default is larger. The numbers of the two types of samples are nearly the same, which is suitable for SVM study. Then we use SPSS for the factor analysis on the financial data from the 157 companies. Based on eigenvalue, we can extract 4 interpretation factors from the 16 indexes. They are the operating capacity factors, the refunding capacity factors, profiting capacity factors and loan type factors, as shown in Tab.1.

TABLE 1. THE FINANCIAL FACTORS AND INDEXES

1 y1

K ( x1 , x)

2 y2

K ( x2 , x)

n yn

K ( xn , x)

Factor No. Name Operating capacity factor No. X1 X2 X3 I X4 X5 X6

Index Description Sales income/total assets Turnover of total assets Turnover of fixed assets Turnover of mobile assets Inventory turnover Turnover of account receivable Working capital/total assets Current ratio Liquidity ratio Super liquid ratio Assets liability ratio Net profit ratio Return on equity Rate of the cost-profit Yield rate on assets Types of loans

x1

x2

xn

Figure2. Classification Process Based on SVMs

III. AN EMPIRICAL STUDY The research data used in this study are obtained from Harbin Branch of Industrial and Commercial Bank in China. While collecting data, we pay much attention to sample features in different industries. As enterprises of different industries have various business environments and ranges, the enterprises' financial and non-financial indexes are not comparable. So, we choose the short-term loan sample data in same the industry for the model. The whole process is described as the following: (1) establish the index system and select the samples including the normality class companies and the loss class companies. (2) construct the SVM model, and choose the final training set. (3) classify the testing data and make the comparison.

II

X7 Refunding capacity factor X8 X9 X10 X11 Profiting capacity factor X12 X13 X14 X15 IV Loan type factor X16

III
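The two- or three-standard-deviation screening used during sample processing could look roughly like the sketch below. The paper does not say how the test was applied, so the per-index rule (a company is dropped if any index lies more than k sample standard deviations from that index's mean), the function name `screen_outliers`, and the toy data are assumptions.

```python
from statistics import mean, stdev


def screen_outliers(rows, k=3.0):
    """Keep rows whose every index lies within k sample standard deviations of its column mean."""
    columns = list(zip(*rows))
    centers = [mean(col) for col in columns]
    spreads = [stdev(col) for col in columns]
    kept = []
    for row in rows:
        within = all(
            s == 0 or abs(v - m) <= k * s   # a constant column never rejects a row
            for v, m, s in zip(row, centers, spreads)
        )
        if within:
            kept.append(row)
    return kept


# Hypothetical data: 11 ordinary companies and one with an extreme first index.
rows = [(1.0 + 0.01 * i, 0.5) for i in range(11)] + [(9.0, 0.5)]
clean = screen_outliers(rows, k=2.0)
print(len(rows), len(clean))
```

With very small samples a three-standard-deviation rule can never fire, because a single value cannot lie more than (n - 1)/sqrt(n) sample standard deviations from the mean of n values; this is one reason a looser two-standard-deviation threshold is sometimes used alongside it.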

On this basis, we divide the sample set into a training sample set and a testing sample set. To better reflect the learning ability of SVMs on small-sample data and the generalization ability of the model, we randomly choose 35% (56 companies) as the training sample set to construct the SVM model, and the remaining 65% (101 companies) as the testing sample set to test the generalization ability of the model. The numbers of the two types of samples in each set are approximately equal.

IV. SUMMARY AND CONCLUSION

SVMs are machine learning algorithms based on statistical learning theory. In this study, an experiment is performed to compare the performance of this algorithm with that of another popular classifier, BPN, in the credit risk assessment of a commercial bank. The results reveal the features of SVMs, such as a simple classification plane, good generalization ability, accurate goodness of fit, and strong robustness, and SVMs therefore have good prospects for development. However, because this field has been studied only for a short time, some problems remain, such as the space mapping of the kernels and the optimizing scale. They are worthy of continued exploration and research.

REFERENCES

[1] P. Han and Y. M. Xi, "Analysis on credit risks of commercial banks in China in the period of economic transformation," Contemporary Economic Sciences, Shaanxi, China, vol. 4, pp. 22-26, 1999.
[2] J. F. Sinkey, Commercial Bank Financial Management in the Financial-services Industry, J. L. Huang, Trans. Beijing: China Renmin Univ. Press, 2005, p. 279.
[3] I. Kaastra and M. Boyd, "Designing a neural network for forecasting financial and economic time series," Neurocomputing, Spectrum, IEEE, vol. 10, pp. 215-236, 1996.
[4] J. E. Boritz and D. B. Kennedy, "Effectiveness of neural network types for prediction of business failure," Expert Systems with Applications, Elsevier Science, vol. 9, pp. 503-512, 1995.
[5] K. S. Shin, T. S. Lee, and H. J. Kim, "An application of support vector machines in bankruptcy prediction model," Expert Systems with Applications, Elsevier Science, vol. 28, pp. 127-135, 2005.
[6] J. H. Min and Y. C. Lee, "Bankruptcy prediction using support vector machine with optimal choice of kernel function," Expert Systems with Applications, Elsevier Science, vol. 28, pp. 603-614, 2005.
[7] V. Vapnik, The Nature of Statistical Learning Theory. New York: Springer-Verlag, 1995.
[8] S. H. Min, J. M. Lee, and I. Han, "Hybrid genetic algorithms and support vector machines for bankruptcy prediction," Expert Systems with Applications, Elsevier Science, vol. 31, pp. 652-660, 2006.
[9] Y. C. Lee, "Application of support vector machines to corporate credit rating prediction," Expert Systems with Applications, Elsevier Science, vol. 33, pp. 67-74, 2007.
[10] C. J. C. Burges, "A tutorial on support vector machines for pattern recognition," Data Mining and Knowledge Discovery, Springer Netherlands, vol. 2, pp. 955-974, 1998.
[11] C. Cortes and V. N. Vapnik, "Support vector networks," Machine Learning, Springer Netherlands, vol. 20, pp. 273-297, 1995.
[12] M. Minoux, Mathematical Programming: Theory and Algorithms. New York: Wiley, pp. 224-226, 1986.
[13] B. Boser, I. Guyon, and V. N. Vapnik, "A training algorithm for optimal margin classifiers," in Proceedings of the Fifth Annual Workshop on Computer Learning Theory, ACM, Pittsburgh, PA, USA, pp. 144-152, 1992.
[14] J. H. Xiao and J. P. Wu, "SVM model with unequal sample number between classes," Computer Science, Chongqing, China, vol. 2, pp. 165-167, 2003.
[15] C. G. Yang, "Study of Credit Risk Assessment in Commercial Banks," Technology Economy and Management, Beijing, North China Electric Power Univ., P.R. China, 2006.

B. SVM Modeling

Based on the analysis above, we can construct the sample set (x, y), where the dimension of x is 4 and y is the categorical attribute of the samples. For the normality class, y = 1, and for the loss class, y = -1. For the choice of kernel function, empirical research shows that SVMs obtain results with similar properties from three different kernels (the polynomial kernel, the RBF kernel, and the sigmoid function) [3-5], and the distributions of the support vectors differ little. The kernel used to construct the SVM model in this study is the most common one, RBF. Seeking the fewest misclassified samples and the maximum classification margin, we construct a soft margin hyperplane in the high-dimensional space. Cross-validation gives $\sigma^2 = 225$ and $C = 105$. We then use Matlab and the Libsvm toolbox for the experimental analysis.

C. Empirical Analysis

In Tab. 2, the assessment results of the SVM and the neural network (NN) are compared. The BPN is trained with the BP algorithm, and its target error and number of hidden layers are determined in cross-validation (the target error is 0.1 and the number of hidden layers is 12). We count an error whenever a sample from the normality class is classified as belonging to the loss class, or vice versa. The error rate is the total number of errors divided by the total number of samples.
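To make the error-rate definition concrete, the sketch below evaluates a kernel classification function of the form (9) with an RBF kernel on a few toy points and counts the misclassifications. Everything numerical here is hypothetical: the two support vectors, their multipliers, the threshold, the kernel width, and the test points are invented for illustration and are not the model fitted in the paper. The kernel is written as exp(-||u - v||^2 / (2 sigma^2)), which is one common RBF parameterization and an assumption about the form used, and sgn(0) is taken as +1.

```python
import math


def rbf_kernel(u, v, sigma2):
    """Gaussian RBF kernel exp(-||u - v||^2 / (2 * sigma2)); this parameterization is an assumption."""
    sq_dist = sum((p - q) ** 2 for p, q in zip(u, v))
    return math.exp(-sq_dist / (2.0 * sigma2))


def classify(x, support, b, sigma2):
    """Evaluate sgn(sum_i alpha_i * y_i * K(x_i, x) + b) over the support vectors."""
    score = sum(alpha * y * rbf_kernel(xi, x, sigma2) for xi, y, alpha in support) + b
    return 1 if score >= 0 else -1


def error_rate(actual, predicted):
    """Misclassified samples (in either direction) divided by the total number of samples."""
    errors = sum(1 for a, p in zip(actual, predicted) if a != p)
    return errors / len(actual)


# Hypothetical support vectors (x_i, y_i, alpha_i); note sum(alpha_i * y_i) == 0.
support = [((0.0, 0.0), -1, 1.0), ((2.0, 2.0), +1, 1.0)]
b, sigma2 = 0.0, 1.0

test_x = [(2.0, 1.5), (0.5, 0.0), (1.8, 2.2), (0.2, 0.3), (0.9, 0.8)]
test_y = [+1, -1, +1, -1, +1]   # +1 = normality class, -1 = loss class

predicted = [classify(x, support, b, sigma2) for x in test_x]
print(predicted)
print(error_rate(test_y, predicted))
```

In this toy run the last point, labeled as normality class, falls closer to the loss-class support vector and is counted as one error out of five samples.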

TABLE 2. DISCRIMINATION RESULTS

| Model type | Training sample set (56): accuracy | Training sample set (56): rate of errors | Testing sample set (101): accuracy | Testing sample set (101): rate of errors |
|---|---|---|---|---|
| BP neural network model | 82.14% (46)ᵃ | 17.86% (10) | 79.2% (80) | 20.8% (21) |
| Support vector machine model | 85.71% (48) | 14.29% (8) | 83.17% (84) | 16.83% (17) |

a. The number in brackets in the table is the number of samples.

From Tab. 2, we can see that the accuracy of SVMs on the testing sample set is 83.17%, which is better than the 79.2% of the BP neural network model. We also compare the robustness of the two models. On the training sample set, the accuracy of the neural network is 82.14% and that of the SVM is 85.71%; on the testing sample set, both accuracies drop to varying degrees. In relative terms, the neural network drops by 3.6% [(82.14% - 79.2%) / 82.14%] and the SVM by about 3.0% [(85.71% - 83.17%) / 85.71%]. The robustness of SVMs is therefore better and can meet the requirements of the application.
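The percentages in Table 2 and the relative drops quoted in the robustness comparison can be recomputed from the sample counts in the table. The sketch below does only that arithmetic; the dictionary layout and the function names `accuracy` and `relative_drop` are illustrative choices, not part of the original experiment.

```python
# Counts of correctly classified samples and set sizes, taken from Table 2.
counts = {
    "BPN": {"train": (46, 56), "test": (80, 101)},
    "SVM": {"train": (48, 56), "test": (84, 101)},
}


def accuracy(correct, total):
    """Fraction of samples classified correctly."""
    return correct / total


def relative_drop(train_acc, test_acc):
    """Fall in accuracy from training to testing, as a fraction of the training accuracy."""
    return (train_acc - test_acc) / train_acc


for model, c in counts.items():
    train_acc = accuracy(*c["train"])
    test_acc = accuracy(*c["test"])
    print(f"{model}: train {train_acc:.2%}, test {test_acc:.2%}, "
          f"relative drop {relative_drop(train_acc, test_acc):.1%}")
```

Working from the unrounded counts, the relative drop comes out at about 3.6% for BPN and about 3.0% for SVM, so the ordering of the two models in the robustness comparison does not depend on rounding.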
