Professional Documents

Culture Documents

Evalutating Face Models Animated by Mpeg-4 Faps: J.Ahlberg, I.S.Pandzic, L.You Image Coding Group Linköping University

Evalutating Face Models Animated by Mpeg-4 Faps: J.Ahlberg, I.S.Pandzic, L.You Image Coding Group Linköping University

Uploaded by

StarLink1Copyright:

Available Formats

You might also like

- IELTS Writing Task 1: Interactive Model Answers & Practice TestsFrom EverandIELTS Writing Task 1: Interactive Model Answers & Practice TestsRating: 4.5 out of 5 stars4.5/5 (9)

- MSDS For PN 3000671, Beacon Replacement Kit (Part 1) PDFDocument15 pagesMSDS For PN 3000671, Beacon Replacement Kit (Part 1) PDFAndrianoNo ratings yet

- Talal Asad - Anthropology and The Colonial EncounterDocument4 pagesTalal Asad - Anthropology and The Colonial EncounterTania Saha100% (1)

- Department of Computing Technologies Software Testing and ReliabilityDocument6 pagesDepartment of Computing Technologies Software Testing and ReliabilitySyed EmadNo ratings yet

- Blood Sorcery Rites of Damnation Spell Casting SummaryDocument3 pagesBlood Sorcery Rites of Damnation Spell Casting SummaryZiggurathNo ratings yet

- A Matlab Script To Explore Linear Predictive Coding With VocalDocument6 pagesA Matlab Script To Explore Linear Predictive Coding With VocalBolo BTNo ratings yet

- Geriatric Consideration in NursingDocument31 pagesGeriatric Consideration in NursingBabita Dhruw100% (5)

- Wiring Diagram Elevator: 123/INDSBY-ELC/1014Document22 pagesWiring Diagram Elevator: 123/INDSBY-ELC/1014Gogik Anto85% (13)

- Generalized Problematic Internet Use Scale 2 (Gpius 2) Scale Items & InstructionsDocument2 pagesGeneralized Problematic Internet Use Scale 2 (Gpius 2) Scale Items & InstructionsShariqa100% (1)

- Afar 2 - Summative Test (Consolidated) Theories: Realized in The Second Year From Upstream Sales Made in Both YearsDocument23 pagesAfar 2 - Summative Test (Consolidated) Theories: Realized in The Second Year From Upstream Sales Made in Both YearsVon Andrei Medina100% (1)

- Neurofeedback: Emotive EEG Epoch Is A Research Grade DeviceDocument19 pagesNeurofeedback: Emotive EEG Epoch Is A Research Grade Deviceavalon_moonNo ratings yet

- Algebra 1 Crns 12-13 3rd Nine WeeksDocument20 pagesAlgebra 1 Crns 12-13 3rd Nine Weeksapi-201428071No ratings yet

- Article 2Document5 pagesArticle 2João Paulo CabralNo ratings yet

- From Physics To Medical Imaging, Through Electronics and DetectorsDocument110 pagesFrom Physics To Medical Imaging, Through Electronics and Detectorsmahdi123456789No ratings yet

- Laboratory Practice, Testing, and Reporting: Time-Honored Fundamentals for the SciencesFrom EverandLaboratory Practice, Testing, and Reporting: Time-Honored Fundamentals for the SciencesNo ratings yet

- Predicting Performance For Natural Language Processing TasksDocument22 pagesPredicting Performance For Natural Language Processing Tasksfuzzy_slugNo ratings yet

- Table 3: Animations Combining Nonmanual Signals With Affect and Manual ClassifiersDocument1 pageTable 3: Animations Combining Nonmanual Signals With Affect and Manual ClassifiersjwehrwNo ratings yet

- Multimodal Sentiment AnalysisDocument6 pagesMultimodal Sentiment AnalysisVasanth KumarNo ratings yet

- Week 9 and 10Document129 pagesWeek 9 and 10SamwelNo ratings yet

- Machine Learning Tutorial Machine Learning TutorialDocument33 pagesMachine Learning Tutorial Machine Learning TutorialSudhakar MurugasenNo ratings yet

- Emotiv EPOC: Psychology Lab University of HuelvaDocument13 pagesEmotiv EPOC: Psychology Lab University of HuelvaalberhernandezNo ratings yet

- Exploring Dataset Similarities Using PCA-based Feature SelectionDocument7 pagesExploring Dataset Similarities Using PCA-based Feature SelectionVictor Andres Melchor EspinozaNo ratings yet

- ITPsession2 A PDFDocument100 pagesITPsession2 A PDFLauro EncisoNo ratings yet

- Kunal AI LabDocument18 pagesKunal AI Labcomputerguys666No ratings yet

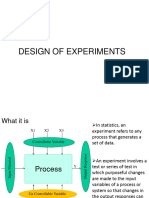

- Design of Experiments (DOE) : © 2013 ICOPE - All Rights ReservedDocument49 pagesDesign of Experiments (DOE) : © 2013 ICOPE - All Rights ReservedMarcionilo ChagasNo ratings yet

- Unit 1 - FullDocument115 pagesUnit 1 - FullMALE CHARANJEETH KUMAR (RA2111026010082)No ratings yet

- Compa NEODocument14 pagesCompa NEORoberth Alexander Gutierrez CaballeroNo ratings yet

- Towards Automatic Generation of Product Reviews From Aspect-Sentiment ScoresDocument10 pagesTowards Automatic Generation of Product Reviews From Aspect-Sentiment ScoreshaipeiNo ratings yet

- Artificial Intelligence Lab FilesDocument13 pagesArtificial Intelligence Lab FilesJhonNo ratings yet

- LAB 6 - Testing The ALU: GoalsDocument9 pagesLAB 6 - Testing The ALU: GoalsJoey WangNo ratings yet

- AI 11 AliDocument10 pagesAI 11 AliSoo NuuNo ratings yet

- Convention Paper: Audio Engineering SocietyDocument6 pagesConvention Paper: Audio Engineering SocietyNeel ShahNo ratings yet

- Assessing Quality in Live Interlingual Subtitling: A New ChallengeDocument29 pagesAssessing Quality in Live Interlingual Subtitling: A New ChallengeGeraldine Polo CastilloNo ratings yet

- Comparison de Los NeoDocument14 pagesComparison de Los NeoLady Huaman AguilarNo ratings yet

- Exp I ProbabilityDocument1 pageExp I ProbabilitykequankedianNo ratings yet

- 5 DOE Steps GuidelineDocument13 pages5 DOE Steps Guidelinebuvana1980No ratings yet

- Vowel RecognitionDocument3 pagesVowel Recognitionlad0shechkaNo ratings yet

- Joint Dictionary Learning-Based Non-Negative Matrix Factorization For Voice Conversion To Improve Speech Intelligibility After Oral SurgeryDocument10 pagesJoint Dictionary Learning-Based Non-Negative Matrix Factorization For Voice Conversion To Improve Speech Intelligibility After Oral SurgeryJBNo ratings yet

- A Model For Spectra-Based Software DiagnosisDocument37 pagesA Model For Spectra-Based Software DiagnosisInformatika Universitas MalikussalehNo ratings yet

- Taguchi Design With Pooled ANOVA: Perancangan KualitasDocument27 pagesTaguchi Design With Pooled ANOVA: Perancangan Kualitas06 andrew putra hartantoNo ratings yet

- ARDL Model - Hossain Academy Note PDFDocument5 pagesARDL Model - Hossain Academy Note PDFabdulraufhcc100% (1)

- A VHDL REview basedON) Tutorial June 30 To StudentsDocument2 pagesA VHDL REview basedON) Tutorial June 30 To Studentsdd sasdsdNo ratings yet

- EEG-based Classification of Bilingual Unspoken Speech Using ANNDocument4 pagesEEG-based Classification of Bilingual Unspoken Speech Using ANNdfgsdNo ratings yet

- Experiment 14Document5 pagesExperiment 14Rizwan Ahmad Muhammad AslamNo ratings yet

- Parte BDocument29 pagesParte Brenato otaniNo ratings yet

- AI Lab - GarimaDocument24 pagesAI Lab - GarimaGarima PaudelNo ratings yet

- ELPAC - Test Taker Preparation - Air Traffic Controllers (v6.0)Document13 pagesELPAC - Test Taker Preparation - Air Traffic Controllers (v6.0)Mark KeyNo ratings yet

- 1introduction To DoEDocument5 pages1introduction To DoERay PratamaNo ratings yet

- Kim EsbensenDocument25 pagesKim EsbensenJaime Mercado BenavidesNo ratings yet

- EPiT A Software Testing Tool ForDocument5 pagesEPiT A Software Testing Tool Fordidier.diazmenaNo ratings yet

- How to Find Inter-Groups Differences Using Spss/Excel/Web Tools in Common Experimental Designs: Book 1From EverandHow to Find Inter-Groups Differences Using Spss/Excel/Web Tools in Common Experimental Designs: Book 1No ratings yet

- Digital Electronics Lab (Open Elective-II) Paper Code: ETVEC-554 L T/P C Paper: Digital Electronics Lab 0 2 2Document5 pagesDigital Electronics Lab (Open Elective-II) Paper Code: ETVEC-554 L T/P C Paper: Digital Electronics Lab 0 2 2Rahul YadavNo ratings yet

- Using Simulations To Teach Statistical Inference: Beth Chance, Allan Rossman (Cal Poly)Document66 pagesUsing Simulations To Teach Statistical Inference: Beth Chance, Allan Rossman (Cal Poly)thamizh555No ratings yet

- Experimental Eye ResearchDocument3 pagesExperimental Eye ResearchNikhil ThakreNo ratings yet

- Quantization and Sampling Using MatlabDocument3 pagesQuantization and Sampling Using MatlabAbdullah SalemNo ratings yet

- Electroencephalograph (EEG) Signal Feature Extraction For Unspoken-Speech Identification Using EEGLABDocument5 pagesElectroencephalograph (EEG) Signal Feature Extraction For Unspoken-Speech Identification Using EEGLABAbu FatihNo ratings yet

- ISE 5110 Design of Experiments: Test I Study GuideDocument1 pageISE 5110 Design of Experiments: Test I Study GuideChaitanya KarwaNo ratings yet

- Chapter 1:introduction To Design of ExperimentsDocument20 pagesChapter 1:introduction To Design of ExperimentsSachin K KambleNo ratings yet

- Programación EvolutivaDocument21 pagesProgramación EvolutivaFederico Gómez GonzálezNo ratings yet

- Construction MTRLS and Testing ManualDocument9 pagesConstruction MTRLS and Testing ManualChristian Jim PollerosNo ratings yet

- Brief Papers: An Orthogonal Genetic Algorithm With Quantization For Global Numerical OptimizationDocument13 pagesBrief Papers: An Orthogonal Genetic Algorithm With Quantization For Global Numerical OptimizationMuhammad Wajahat Ali Khan FastNUNo ratings yet

- Presentation4gregoire PDFDocument20 pagesPresentation4gregoire PDFJesika Andilia Setya WardaniNo ratings yet

- Design of ExperimentsDocument44 pagesDesign of ExperimentsJYNo ratings yet

- The Future ofDocument25 pagesThe Future ofHoàng Tố UyênNo ratings yet

- 12 Procedure of DT Data AnalyseDocument1 page12 Procedure of DT Data AnalyseMerlene PerryNo ratings yet

- 12 Procedure of DT Data AnalyseDocument1 page12 Procedure of DT Data Analyseamarjith1341No ratings yet

- P300 and Emotiv PDFDocument16 pagesP300 and Emotiv PDFPaasol100% (1)

- 2007-08 Highlights Finalsep 12Document2 pages2007-08 Highlights Finalsep 12StarLink1No ratings yet

- Annual Report HighlightsDocument2 pagesAnnual Report HighlightsStarLink1No ratings yet

- Ar2011 12tableofcontentsDocument6 pagesAr2011 12tableofcontentsStarLink1No ratings yet

- Ab Teachers RetirementDocument14 pagesAb Teachers RetirementStarLink1No ratings yet

- Appendix 2: Endnotes/Methodology For Results AnalysisDocument0 pagesAppendix 2: Endnotes/Methodology For Results AnalysisStarLink1No ratings yet

- Appendix 1: Summary of Accomplishments - Alberta Learning Business PlanDocument0 pagesAppendix 1: Summary of Accomplishments - Alberta Learning Business PlanStarLink1No ratings yet

- Appendix 4: Alphabetical List of Entities' Financial Information in Ministry 2000-01 Annual ReportsDocument0 pagesAppendix 4: Alphabetical List of Entities' Financial Information in Ministry 2000-01 Annual ReportsStarLink1No ratings yet

- Ministry of Education: Ministry Funding Provided To School JurisdictionsDocument5 pagesMinistry of Education: Ministry Funding Provided To School JurisdictionsStarLink1No ratings yet

- Appendix 3: Government Organization ChangesDocument0 pagesAppendix 3: Government Organization ChangesStarLink1No ratings yet

- Results Analysis: Education Annual ReportDocument76 pagesResults Analysis: Education Annual ReportStarLink1No ratings yet

- Alberta Education Public, Separate, Francophone, and Charter AuthoritiesDocument9 pagesAlberta Education Public, Separate, Francophone, and Charter AuthoritiesStarLink1No ratings yet

- Financial InfoDocument72 pagesFinancial InfoStarLink1No ratings yet

- Eis 1003 PDocument282 pagesEis 1003 PStarLink1No ratings yet

- Grade 9 Applied Mathematics Exam Review: Strand 1: Number Sense and AlgebraDocument9 pagesGrade 9 Applied Mathematics Exam Review: Strand 1: Number Sense and AlgebraStarLink1No ratings yet

- Ministry of Education: Ministry Funding Provided To School JurisdictionsDocument5 pagesMinistry of Education: Ministry Funding Provided To School JurisdictionsStarLink1No ratings yet

- Education: Annual Report 2008-2009Document20 pagesEducation: Annual Report 2008-2009StarLink1No ratings yet

- General Order Form: Quantity Code Item Price TotalDocument1 pageGeneral Order Form: Quantity Code Item Price TotalStarLink1No ratings yet

- Financial InformationDocument80 pagesFinancial InformationStarLink1No ratings yet

- Results Analysis: (Original Signed By)Document32 pagesResults Analysis: (Original Signed By)StarLink1No ratings yet

- Summary SCH JurDocument46 pagesSummary SCH JurStarLink1No ratings yet

- Ministry Funding SCH JurisdictionDocument5 pagesMinistry Funding SCH JurisdictionStarLink1No ratings yet

- Highlights: Key Accomplishments, 1999/2000: Lifelong LearningDocument0 pagesHighlights: Key Accomplishments, 1999/2000: Lifelong LearningStarLink1No ratings yet

- 2013-14 IAABO Refresher ExamDocument4 pages2013-14 IAABO Refresher ExamStarLink1100% (2)

- University of Ontario Institute of Technology Pre-Participation ExamDocument1 pageUniversity of Ontario Institute of Technology Pre-Participation ExamStarLink1No ratings yet

- 2012-13 Refresher ExamDocument3 pages2012-13 Refresher ExamStarLink1No ratings yet

- Table of ContentDocument6 pagesTable of ContentStarLink1No ratings yet

- 2013 Exam Schedule-JuneDocument2 pages2013 Exam Schedule-JuneStarLink1No ratings yet

- 92 Return Policy 2011Document2 pages92 Return Policy 2011StarLink1No ratings yet

- SPC January 2014 Examination Schedule: Provincial English Lanaguage Arts 40SDocument2 pagesSPC January 2014 Examination Schedule: Provincial English Lanaguage Arts 40SStarLink1No ratings yet

- 2013 Exam General InformationDocument1 page2013 Exam General InformationStarLink1No ratings yet

- ME-458 Turbomachinery: Muhammad Shaban Lecturer Department of Mechanical EngineeringDocument113 pagesME-458 Turbomachinery: Muhammad Shaban Lecturer Department of Mechanical EngineeringAneeq Raheem50% (2)

- Report 1Document9 pagesReport 135074Md Arafat Khan100% (1)

- CGF FPC Palm Oil RoadmapDocument31 pagesCGF FPC Palm Oil RoadmapnamasayanazlyaNo ratings yet

- Tuyển Sinh 10 - đề 1 -KeyDocument5 pagesTuyển Sinh 10 - đề 1 -Keynguyenhoang17042004No ratings yet

- Toward The Efficient Impact Frontier: FeaturesDocument6 pagesToward The Efficient Impact Frontier: Featuresguramios chukhrukidzeNo ratings yet

- Whittington 22e Solutions Manual Ch14Document14 pagesWhittington 22e Solutions Manual Ch14潘妍伶No ratings yet

- Jatiya Kabi Kazi Nazrul Islam UniversityDocument12 pagesJatiya Kabi Kazi Nazrul Islam UniversityAl-Muzahid EmuNo ratings yet

- Muhammad Fauzi-855677765-Fizz Hotel Lombok-HOTEL - STANDALONEDocument1 pageMuhammad Fauzi-855677765-Fizz Hotel Lombok-HOTEL - STANDALONEMuhammad Fauzi AndriansyahNo ratings yet

- XXX (Topic) 好处影响1 相反好处 2. 你⾃自⼰己的观点,后⾯面会展开的Document3 pagesXXX (Topic) 好处影响1 相反好处 2. 你⾃自⼰己的观点,后⾯面会展开的Miyou KwanNo ratings yet

- BlueStack Platform Marketing PlanDocument10 pagesBlueStack Platform Marketing PlanFıratcan KütükNo ratings yet

- Miller PreviewDocument252 pagesMiller PreviewcqpresscustomNo ratings yet

- Form 137Document2 pagesForm 137Raymund BondeNo ratings yet

- Ccc221coalpowerR MDocument58 pagesCcc221coalpowerR Mmfhaleem@pgesco.comNo ratings yet

- Getting The Most From Lube Oil AnalysisDocument16 pagesGetting The Most From Lube Oil AnalysisGuru Raja Ragavendran Nagarajan100% (2)

- Dungeon 190Document77 pagesDungeon 190Helmous100% (4)

- Applications Training For Integrex-100 400MkIII Series Mazatrol FusionDocument122 pagesApplications Training For Integrex-100 400MkIII Series Mazatrol Fusiontsaladyga100% (6)

- Chap 09Document132 pagesChap 09noscribdyoucantNo ratings yet

- In-Band Full-Duplex Interference For Underwater Acoustic Communication SystemsDocument6 pagesIn-Band Full-Duplex Interference For Underwater Acoustic Communication SystemsHarris TsimenidisNo ratings yet

- Sip 2019-2022Document4 pagesSip 2019-2022jein_am97% (29)

- March 16 - IM Processors DigiTimesDocument5 pagesMarch 16 - IM Processors DigiTimesRyanNo ratings yet

- Decision Trees and Boosting: Helge Voss (MPI-K, Heidelberg) TMVA WorkshopDocument30 pagesDecision Trees and Boosting: Helge Voss (MPI-K, Heidelberg) TMVA WorkshopAshish TiwariNo ratings yet

- Elrc 4507 Unit PlanDocument4 pagesElrc 4507 Unit Planapi-284973023No ratings yet

- Dbms Lab Dbms Lab: 23 March 202Document12 pagesDbms Lab Dbms Lab: 23 March 202LOVISH bansalNo ratings yet

Evalutating Face Models Animated by Mpeg-4 Faps: J.Ahlberg, I.S.Pandzic, L.You Image Coding Group Linköping University

Evalutating Face Models Animated by Mpeg-4 Faps: J.Ahlberg, I.S.Pandzic, L.You Image Coding Group Linköping University

Uploaded by

StarLink1Original Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Evalutating Face Models Animated by Mpeg-4 Faps: J.Ahlberg, I.S.Pandzic, L.You Image Coding Group Linköping University

Evalutating Face Models Animated by Mpeg-4 Faps: J.Ahlberg, I.S.Pandzic, L.You Image Coding Group Linköping University

Uploaded by

StarLink1Copyright:

Available Formats

Evaluating Face Models Animated by MPEG-4 FAPs

OZHCI 2001 Talking Head workshop

J. Ahlberg, I. S. Pandzic, L. You

Image Coding Group, Linköping University

Outline

- Purpose
- Creating Test Data
- Performing the Test
- Measuring the Results
- Reproducing the Test
- Conclusion

Purpose

- To investigate how well animated face models can express emotions when they are controlled by low-level MPEG-4 FAPs that reproduce motion captured from real faces acting out those emotions.
- To propose a standard benchmark test for MPEG-4 animated face models.

Creating Test Data

- Feature points were tracked using a system with four IR-sensitive cameras and IR markers.
- Different emotions were acted out, both without any other action and while reading a sentence.
- Video was recorded simultaneously.

The Tracking System

The Test Sequences

- 21 sequences were recorded, with 4 different people acting out different emotions.
- Each sequence was recorded as real video and also synthesized from FAPs using two different face models.
- In total, 21 × 3 = 63 sequences were created.

The Test Sequences

Real video

Jorgen model (Candide-3)

Oscar model

Performing the Test

- 150 persons each watched 2/3 of the sequences, so each sequence was watched by 100 persons.
- For each sequence, the subjects marked which emotion they thought it showed.
- Each sequence was shown a few times in succession.
- The following slides show the layout of the test.
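The viewing counts above can be checked with a short sketch. The slides do not say how sequences were allotted to viewers, so the rotation used here (each viewer skips one third of the recordings) is purely a hypothetical scheme that happens to reproduce the stated numbers; the names `sequences` and `watched_by` are illustrative only.

```python
from collections import Counter

RECORDINGS = 21                      # captured performances (from the slides)
RENDERINGS = ["real video", "Jorgen model", "Oscar model"]
VIEWERS = 150                        # test subjects (from the slides)

# Every recording exists in three renderings: 21 * 3 = 63 sequences.
sequences = [(rec, rend) for rec in range(RECORDINGS) for rend in RENDERINGS]


def watched_by(viewer):
    """Hypothetical allotment: viewer v skips the recordings with rec % 3 == v % 3."""
    skipped = viewer % 3
    return [seq for seq in sequences if seq[0] % 3 != skipped]


# Count how many viewers see each sequence.
views = Counter(seq for v in range(VIEWERS) for seq in watched_by(v))

print(len(sequences))            # total number of sequences
print(len(watched_by(0)))        # sequences seen by one viewer (2/3 of the total)
print(set(views.values()))       # distinct per-sequence view counts
```

Under this assumed rotation every viewer sees 42 of the 63 sequences and every sequence collects 100 judgements, which matches the figures on the slide.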

Measuring the Results

Compare the synthetic, real, ideal and random cases.

Absolute Expressive Performance (AEP)

- Compare the dispersion matrices and calculate the L1-norm of the differences.
- Normalize so that the random case scores 0 and the ideal case scores 100.

Relative Expressive Performance (REP)

- The AEP of a face model expressed as a percentage of the AEP for the real case.
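The two measures can be sketched as follows. The slides do not give the exact formula, so several details here are assumptions: the dispersion matrix is taken to be a row-normalized table of acted emotion versus judged emotion, the ideal case is the identity matrix, the random case is the uniform matrix, the L1-norm is taken entrywise, and the normalization is linear. All function names are hypothetical.

```python
def l1_distance(a, b):
    """Entrywise L1-norm of the difference between two equally sized matrices."""
    return sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def ideal_matrix(n):
    """Assumed ideal case: every acted emotion is always judged correctly."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def random_matrix(n):
    """Assumed random case: judgements are spread uniformly over all emotions."""
    return [[1.0 / n] * n for _ in range(n)]


def aep(dispersion):
    """Absolute Expressive Performance: 0 for the random case, 100 for the ideal case."""
    n = len(dispersion)
    ideal = ideal_matrix(n)
    d_random = l1_distance(random_matrix(n), ideal)
    d_model = l1_distance(dispersion, ideal)
    return 100.0 * (d_random - d_model) / d_random


def rep(aep_model, aep_real):
    """Relative Expressive Performance: model AEP as a percentage of the real-video AEP."""
    return 100.0 * aep_model / aep_real


if __name__ == "__main__":
    print(aep(ideal_matrix(6)))    # ideal case
    print(aep(random_matrix(6)))   # random case
    print(rep(9.4, 58.1))          # Jorgen model AEP relative to the real case
```

By construction, `aep` returns 100 for the identity matrix and 0 for the uniform matrix. Applying `rep` to the AEP values reported in the test results (9.4 against 58.1) gives roughly 16.2, in line with the reported REP for the Jorgen model.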

Test Results

Absolute Expressive Performance

- The real case: 58.1
- The Oscar model / FAE: 9.1
- The Jorgen model (Candide-3) / MpegWeb: 9.4

Relative Expressive Performance (% of the real-case AEP)

- The Oscar model / FAE: 15.6
- The Jorgen model (Candide-3) / MpegWeb: 16.2

The difference between the two synthetic cases is statistically insignificant.

Reproducing the Test

- The test is intended to be reproducible by anyone who wants to test or compare their face models.
- Video, FAP and SMIL files will be available in a package that can be downloaded free of charge from the Image Coding Group website.

Conclusion

- The expressive performance of the face models is much worse than that of the real video.
- There is no significant difference between the two face models.
- Main result: a reproducible test, proposed as a standard benchmark for the expressive performance of face models.
