Differentiated I/O services in virtualized environments

Tyler Harter, Salini SK & Anand Krishnamurthy

Overview

Provide differentiated I/O services for applications running in guest operating systems inside virtual machines

Applications in virtual machines tag their I/O requests

The hypervisor's I/O scheduler uses these tags to provide differentiated quality of I/O service

Motivation

Diverse applications with different I/O requirements are hosted in clouds

I/O scheduling is not optimal if it is agnostic of the semantics of each request

[Figure: a hypervisor hosting VM 1, VM 2, and VM 3]

We want high- and low-priority processes to correctly receive differentiated service both within a VM and between VMs

Can my webserver/DHT log pusher's I/O be served differently from my webserver/DHT's I/O?

Existing work & Problems

VMware's ESX server offers Storage I/O Control (SIOC)

SIOC provides I/O prioritization of virtual machines that access a shared storage pool

But it supports prioritization only at host granularity!

Existing work & Problems

The Xen credit scheduler also works at domain level

Linux's CFQ I/O scheduler supports I/O prioritization

It is possible to use priorities at both the guest's and the hypervisor's I/O scheduler
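
To make the CFQ priorities concrete: Linux packs an I/O priority into a single integer, with the scheduling class in the upper bits and a level from 0 (highest) to 7 (lowest) in the lower bits. The sketch below mirrors that encoding. The function names are illustrative and not a kernel or library API; the constants follow the kernel's ioprio definitions.

```python
# Illustrative helpers: the function names are hypothetical, the bit layout
# follows Linux's ioprio encoding.
IOPRIO_CLASS_SHIFT = 13          # the class sits above the low 13 bits

IOPRIO_CLASS_RT = 1              # real-time class
IOPRIO_CLASS_BE = 2              # best-effort class (the default)
IOPRIO_CLASS_IDLE = 3            # idle class


def ioprio_value(io_class, level):
    """Pack a scheduling class and a level (0 = highest, 7 = lowest)."""
    if not 0 <= level <= 7:
        raise ValueError("level must be between 0 and 7")
    return (io_class << IOPRIO_CLASS_SHIFT) | level


def ioprio_split(value):
    """Unpack a packed priority into a (class, level) pair."""
    return value >> IOPRIO_CLASS_SHIFT, value & ((1 << IOPRIO_CLASS_SHIFT) - 1)


high = ioprio_value(IOPRIO_CLASS_BE, 0)   # most urgent best-effort request
low = ioprio_value(IOPRIO_CLASS_BE, 7)    # least urgent best-effort request
print(ioprio_split(high), ioprio_split(low))
```

On the command line, `ionice -c 2 -n 0` assigns the same class and level to a process.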

Original Architecture

[Figure: high- and low-priority applications in the guest VMs issue syscalls to the guest I/O scheduler (e.g., CFQ); requests pass through the QEMU virtual SCSI disk to the host, where they go through syscalls and the host I/O scheduler (e.g., CFQ)]

Problem 1: low- and high-priority I/O may get the same service

Problem 2: the design does not utilize host caches

Existing work & Problems

The current state of the art doesn't provide differentiated services at guest-application-level granularity

Solution

Tag I/O and prioritize in the hypervisor

Outline

KVM/Qemu, a brief intro

KVM/Qemu I/O stack

Multi-level I/O tagging

I/O scheduling algorithms

Evaluation

Summary

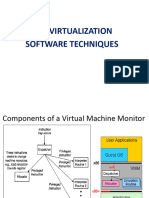

KVM/Qemu, a brief intro

[Figure: Hardware; Linux Standard Kernel with KVM (hypervisor)]

The KVM module has been part of the Linux kernel since version 2.6.20

Linux has all the mechanisms a VMM needs to operate several VMs

KVM has three modes: kernel, user, and guest

kernel-mode: switches into guest-mode and handles exits due to I/O operations

user-mode: performs I/O when the guest needs to access devices

guest-mode: executes guest code, which is the guest OS except for I/O

KVM relies on a virtualization-capable CPU with either Intel VT or AMD SVM extensions

Each virtual machine is a user-space process

[Figure: virtual machines, libvirt, and other user-space processes running on the Linux Standard Kernel with KVM (hypervisor), on top of the hardware]

KVM/Qemu I/O stack

[Figure: guest I/O stack, top to bottom: application in guest OS; system calls layer (read, write, stat, ...); VFS; file system; buffer cache; block layer; SCSI / ATA]

An application in the guest OS issues an I/O-related system call (e.g., read(), write(), stat()) within a user-space context of the virtual machine

This system call leads to an I/O request being submitted from within the kernel space of the VM

The I/O request reaches a device driver, either an ATA-compliant (IDE) driver or a SCSI driver

The device driver issues privileged instructions to read from or write to the memory regions exported over PCI by the corresponding device

[Figure: Qemu emulator on the Linux Standard Kernel with KVM (hypervisor), on top of the hardware]

These instructions trigger VM exits, which are handled by the core KVM module within the host's kernel-space context

The hypervisor passes the privileged I/O-related instructions to the QEMU machine emulator

A VM exit takes place for each privileged instruction resulting from the original I/O request in the VM

These instructions are then emulated by device-controller emulation modules within QEMU (either as ATA or as SCSI commands)

QEMU generates block-access I/O requests in a special block-device emulation module

Thus the original I/O request generates I/O requests to the kernel space of the host

Upon completion of the system calls, QEMU "injects" an interrupt into the VM that originally issued the I/O request

Multi-level I/O tagging modifications

Modification 1: pass priorities via syscalls

Modification 2: NOOP+ at the guest I/O scheduler

Modification 3: extend the SCSI protocol with priorities

Modification 4: share-based priority scheduling in the host

Modification 5: use the new calls in benchmarks
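
Why the tag has to travel through every layer can be shown with a toy model. This is a sketch under stated assumptions, not the actual kernel or QEMU changes: `IORequest`, `guest_syscall`, `cross_virtual_disk`, and `host_view` are hypothetical names, and the priority is modeled as a plain string.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class IORequest:
    vm: str                      # which guest issued the request
    sector: int                  # target block on the virtual disk
    prio: Optional[str] = None   # hypothetical tag set by the application


def guest_syscall(vm, sector, prio):
    """Modification 1 (sketch): the application passes a priority with its read."""
    return IORequest(vm=vm, sector=sector, prio=prio)


def cross_virtual_disk(req, carries_prio):
    """Modification 3 (sketch): the tag survives only if the virtual SCSI
    command has room for it; otherwise it is lost at the VM boundary."""
    return req if carries_prio else replace(req, prio=None)


def host_view(req):
    """Everything the host scheduler can use to tell requests apart."""
    return (req.vm, req.prio)


high = guest_syscall("vm1", sector=100, prio="high")
low = guest_syscall("vm1", sector=200, prio="low")

# Without the protocol extension both requests look identical to the host.
same = host_view(cross_virtual_disk(high, False)) == host_view(cross_virtual_disk(low, False))
# With it, the host can distinguish them and schedule by share.
differ = host_view(cross_virtual_disk(high, True)) != host_view(cross_virtual_disk(low, True))
print(same, differ)
```

In this model the untagged path reproduces Problem 1 of the original architecture: the host sees only the VM, so high and low get the same service.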

Scheduler algorithm: Stride

ID_i: ID of application i

Shares_i = shares assigned to ID_i

VIO_i = virtual I/O counter for ID_i

Stride_i = Global_shares / Shares_i

Dispatch_request()
{
    Select the ID_k which has the lowest virtual I/O counter
    Increase VIO_k by Stride_k
    if (VIO_k reaches threshold)
        Reinitialize all VIO_i to 0
    Dispatch the request in the queue of ID_k
}
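
The dispatch loop above can be sketched in a few lines of Python. This is a minimal illustration, not the host scheduler itself. It makes three assumptions: Global_shares is the sum of all shares, only applications with queued requests are considered, and the threshold default is arbitrary. The class and method names are hypothetical.

```python
from collections import deque
from fractions import Fraction


class StrideScheduler:
    """Sketch of a stride dispatcher; Global_shares is assumed to be the sum of shares."""

    def __init__(self, shares, threshold=10**6):
        self.global_shares = sum(shares.values())
        # Stride = Global_shares / Shares, kept exact to avoid float drift.
        self.stride = {app: Fraction(self.global_shares, s) for app, s in shares.items()}
        self.vio = {app: Fraction(0) for app in shares}     # virtual I/O counters
        self.queues = {app: deque() for app in shares}      # one FIFO per application
        self.threshold = threshold

    def submit(self, app, request):
        self.queues[app].append(request)

    def dispatch(self):
        """Return (app, request) for the next request, or None if nothing is queued."""
        # Assumption: only applications with pending requests compete.
        backlogged = [app for app, q in self.queues.items() if q]
        if not backlogged:
            return None
        # Select the application with the lowest virtual I/O counter.
        k = min(backlogged, key=lambda app: self.vio[app])
        self.vio[k] += self.stride[k]
        if self.vio[k] >= self.threshold:
            # Reinitialize every counter so the values stay bounded.
            for app in self.vio:
                self.vio[app] = Fraction(0)
        return k, self.queues[k].popleft()


sched = StrideScheduler({"high": 3, "low": 1})
for n in range(100):
    sched.submit("high", f"h{n}")
    sched.submit("low", f"l{n}")

served = [sched.dispatch()[0] for _ in range(40)]
print(served.count("high"), served.count("low"))
```

With shares of 3 and 1, the high-share application advances its counter by a third of the low-share application's stride, so it is selected roughly three times as often while both queues stay backlogged.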

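Scheduler algorithm, continued

Problem: a sleeping process can monopolize the resource once it wakes up after a long time, because its virtual I/O counter has fallen far behind the others

Solution:

If a sleeping process k wakes up, then set its virtual I/O counter

VIO_k = max( min(all VIO_i which are non-zero), VIO_k )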
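
A minimal sketch of the wake-up rule follows. One interpretation is assumed here: the minimum is taken over the other applications' non-zero counters, since including the sleeper's own stale counter would leave it unchanged. The function name `on_wakeup` is hypothetical.

```python
def on_wakeup(vio, k):
    """Raise the waking application's counter to the smallest active counter.

    vio maps application ID -> virtual I/O counter; k is the application that
    just woke up. Assumption: the minimum ranges over the *other* applications.
    """
    others_nonzero = [v for app, v in vio.items() if app != k and v != 0]
    if others_nonzero:
        # Never lower the counter; only pull a stale one forward.
        vio[k] = max(min(others_nonzero), vio[k])
    return vio[k]


counters = {"a": 100, "b": 120, "k": 5}   # k slept while a and b kept issuing I/O
on_wakeup(counters, "k")
print(counters["k"])
```

After the adjustment, k competes on equal terms with the least-served active application instead of winning every selection until its counter catches up.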

Evaluation

Tested on HDD and SSD

Configuration:

| Parameter | Value |
| --- | --- |
| Guest RAM size | 1 GB |
| Host RAM size | 8 GB |
| Hard disk | 7200 RPM |
| SSD | 35,000 IOPS read, 85,000 IOPS write |
| Guest OS | Ubuntu Server 12.10, Linux kernel 3.2 |
| Host OS | Kubuntu 12.04, Linux kernel 3.2 |
| Filesystem (host/guest) | ext4 |
| Virtual disk image format | qcow2 |

Results

Metrics: throughput, latency

Benchmarks: Filebench, Sysbench, Voldemort (distributed key-value store)

[Figure: Shares vs. throughput for different workloads (HDD)]

[Figure: Shares vs. latency for different workloads (HDD)]

Priorities are better respected if most of the read requests hit the disk rather than a cache

[Figure: Effective throughput for various dispatch numbers (HDD)]

Priorities are respected only when the dispatch number of the disk is lower than the number of read requests generated by the system at a time

Downside: the effective throughput is directly proportional to the dispatch number of the disk
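
A toy model shows why the dispatch number matters. It assumes that "dispatch number" means how many requests the scheduler hands to the disk at a time; the function `dispatch_round` and its `depth` parameter are hypothetical stand-ins, not a kernel interface.

```python
def dispatch_round(pending, depth):
    """Pick the requests handed to the disk in one round.

    pending: list of (priority, name) pairs, lower number = more urgent.
    depth:   hypothetical stand-in for the disk's dispatch number.
    """
    ordered = sorted(pending, key=lambda req: req[0])   # stable: ties keep arrival order
    return [name for _, name in ordered[:depth]]


pending = [(1, "low-1"), (0, "high-1"), (1, "low-2"), (0, "high-2")]

# Fewer slots than requests: the scheduler has to choose, so priority matters.
print(dispatch_round(pending, depth=2))
# More slots than requests: everything goes out together, so priority has no effect.
print(dispatch_round(pending, depth=8))
```

When the depth exceeds the number of outstanding requests, every request is dispatched in the same round and the scheduler has nothing left to reorder.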

[Figure: Shares vs. throughput for different workloads (SSD)]

[Figure: Shares vs. latency for different workloads (SSD)]

On SSDs, priorities are respected only under heavy load, since SSDs are faster

[Figure: Comparison between different schedulers]

Only Noop+LKMS respects priority! (Has to be, since we did it)

Results

| Hard drive/SSD | Webserver | Mailserver | Random reads | Sequential reads | Voldemort DHT reads |
| --- | --- | --- | --- | --- | --- |
| Hard disk | | | | | |
| Flash | | | | | |

Summary

It works!!!

Preferential service is possible only when the dispatch number of the disk is lower than the number of read requests generated by the system at a time

But a lower dispatch number reduces the effective throughput of the storage

On SSDs, preferential service is possible only under heavy load

Scheduling at the lowermost layer yields better differentiated services

Future work

Get it working for writes

Evaluate VMware ESX SIOC and compare with our results
