Learning Scenarios Supervised Learning Unsupervised Learning Unit 1 Part B

Uploaded by Ankur Choudhary

Copyright: © All Rights Reserved

Available Formats

Download as PPTX, PDF, TXT or read online from Scribd

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5822)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (898)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (349)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (403)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Sample QP - Paper 1 Edexcel Maths As-LevelDocument28 pagesSample QP - Paper 1 Edexcel Maths As-Levelmaya 1DNo ratings yet

- 3i Research Paper Noriels GroupDocument76 pages3i Research Paper Noriels GroupMICHELLE LENDESNo ratings yet

- Chapter 1 5 AssessmentDocument80 pagesChapter 1 5 AssessmentJulianna Mae OpianaNo ratings yet

- Cse Btech IV Yr Vii Sem Scheme Syllabus July 2022Document25 pagesCse Btech IV Yr Vii Sem Scheme Syllabus July 2022Ved Kumar GuptaNo ratings yet

- Sociological LensesDocument14 pagesSociological LensesMariano, Carl Jasper S.No ratings yet

- Draft Research ProposalDocument15 pagesDraft Research Proposalrufrias78No ratings yet

- Personality Classification System Using Data Mining: Abstract - Personality Is One Feature That Determines HowDocument4 pagesPersonality Classification System Using Data Mining: Abstract - Personality Is One Feature That Determines HowMeena MNo ratings yet

- Python UNIT-5Document67 pagesPython UNIT-5Chandan Kumar100% (1)

- PR2 Topic 4 - Variables and Scales of MeasurementDocument23 pagesPR2 Topic 4 - Variables and Scales of Measurementibrahim coladaNo ratings yet

- A Research Study On The Impact of Training and Development On Employee Performance During Covid-19 PandemicDocument10 pagesA Research Study On The Impact of Training and Development On Employee Performance During Covid-19 PandemicKookie ShresthaNo ratings yet

- Roles of Technology For TeachingDocument7 pagesRoles of Technology For Teachingsydney andersonNo ratings yet

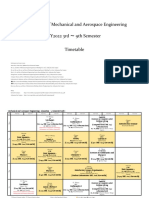

- FT2022 Timetable (3-9 Semesters)Document21 pagesFT2022 Timetable (3-9 Semesters)Avin SharmaNo ratings yet

- Handouts (Workshop 1)Document50 pagesHandouts (Workshop 1)touseefahmadNo ratings yet

- I ObjectivesDocument3 pagesI ObjectivesSharie MagsinoNo ratings yet

- Jurnal Skripsi - Wilkinson Mardika R MDocument13 pagesJurnal Skripsi - Wilkinson Mardika R MWilkinson MardikaNo ratings yet

- Factors Affecting Customers PrefernceDocument58 pagesFactors Affecting Customers PreferncecityNo ratings yet

- Survival of Women: An Ecofeminist View in Anita Desai'S Fire On The MountainDocument4 pagesSurvival of Women: An Ecofeminist View in Anita Desai'S Fire On The MountainMashela mimi Suarti, S.PdNo ratings yet

- Employment 5 0 The Work of The Future and The Future - 2022 - Technology in SocDocument15 pagesEmployment 5 0 The Work of The Future and The Future - 2022 - Technology in SocTran Huong TraNo ratings yet

- Reshaping The Workplace: The Future of Work Will Be Driven by New Hybrid SpacesDocument14 pagesReshaping The Workplace: The Future of Work Will Be Driven by New Hybrid SpacesFraNo ratings yet

- MPH - Materi 10 (B) - (Penulisan Ilmiah)Document51 pagesMPH - Materi 10 (B) - (Penulisan Ilmiah)andiNo ratings yet

- UTS Module 2 - The Self Society and CultureDocument10 pagesUTS Module 2 - The Self Society and Culturegenesisrhapsodos7820No ratings yet

- Deep Convolutional Autoencoder For Assessment ofDocument7 pagesDeep Convolutional Autoencoder For Assessment ofRadim SlovakNo ratings yet

- K. O'Halloran: Multimodal Discourse Analysis: Systemic FUNCTIONAL PERSPECTIVES. Continuum, 2004Document3 pagesK. O'Halloran: Multimodal Discourse Analysis: Systemic FUNCTIONAL PERSPECTIVES. Continuum, 2004enviNo ratings yet

- Bart Graduate Book2018 Final IssuDocument40 pagesBart Graduate Book2018 Final IssuG JNo ratings yet

- Relación Entre Ley y Moral, Razonamiento de Niños Sobre Leyes InjustasDocument13 pagesRelación Entre Ley y Moral, Razonamiento de Niños Sobre Leyes InjustasKaren ForeroNo ratings yet

- Prediction of Wildfire-Induced Trips of Overhead Transmission Line Based On Data MiningDocument4 pagesPrediction of Wildfire-Induced Trips of Overhead Transmission Line Based On Data MiningLucas BotelhoNo ratings yet

- Sexty 5e CH 04 FinalDocument28 pagesSexty 5e CH 04 FinalDinusha FernandoNo ratings yet

- 2010 RICCUCCI Public Administration - Traditions of Inquiry and Philosophies of KnowledgeDocument179 pages2010 RICCUCCI Public Administration - Traditions of Inquiry and Philosophies of KnowledgeAtik SaraswatiNo ratings yet

- Chapter 03 MMDocument38 pagesChapter 03 MMBappy PaulNo ratings yet

- The Significant Relationship Between Sleep Duration and Exam Scores Amongst ABM Students in SPSPSDocument7 pagesThe Significant Relationship Between Sleep Duration and Exam Scores Amongst ABM Students in SPSPSDe Asis AndreiNo ratings yet

Learning Scenarios Supervised Learning Unsupervised Learning Unit 1 Part B

Learning Scenarios Supervised Learning Unsupervised Learning Unit 1 Part B

Uploaded by

Ankur Choudhary0 ratings0% found this document useful (0 votes)

13 views25 pagesOriginal Title

00060982152 Learning Scenarios Supervised Learning Unsupervised Learning Unit 1 Part B (1) (1)

Copyright

© © All Rights Reserved

Available Formats

PPTX, PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

© All Rights Reserved

Available Formats

Download as PPTX, PDF, TXT or read online from Scribd

Download as pptx, pdf, or txt

0 ratings0% found this document useful (0 votes)

13 views25 pagesLearning Scenarios Supervised Learning Unsupervised Learning Unit 1 Part B

Learning Scenarios Supervised Learning Unsupervised Learning Unit 1 Part B

Uploaded by

Ankur ChoudharyCopyright:

© All Rights Reserved

Available Formats

Download as PPTX, PDF, TXT or read online from Scribd

Download as pptx, pdf, or txt

You are on page 1of 25

Learning Scenarios (Supervised learning, Unsupervised learning, Semi-Supervised learning, Transductive inference, On-line learning, Reinforcement learning, Active learning), Generalization

(Supervised Learning, Unsupervised Learning, Reinforcement learning)

Learning is a search through the space of possible hypotheses for

one that will perform well, even on new examples beyond the

training set. To measure the accuracy of a hypothesis we give it a

test set of examples that are distinct from the training set.
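
The role of a separate test set can be illustrated with a small sketch. Everything in it is an assumption made for illustration and does not come from the slides: the toy data, the even/odd split, and the two hypotheses `memorizer` and `threshold_rule`. A hypothesis that only memorizes the training set looks perfect on it but does poorly on examples it has not seen.

```python
# Toy data (assumed for illustration): the label is True when x >= 10.
examples = [(x, x >= 10) for x in range(20)]
train = [ex for ex in examples if ex[0] % 2 == 0]  # even x values
test = [ex for ex in examples if ex[0] % 2 == 1]   # odd x values, disjoint from train

def accuracy(hypothesis, data):
    """Fraction of examples that the hypothesis labels correctly."""
    return sum(hypothesis(x) == y for x, y in data) / len(data)

# Hypothesis 1: memorize the training set, answer False for anything unseen.
table = dict(train)

def memorizer(x):
    return table.get(x, False)

# Hypothesis 2: a general rule that also covers unseen examples.
def threshold_rule(x):
    return x >= 10

for name, h in [("memorizer", memorizer), ("threshold_rule", threshold_rule)]:
    print(name, "train:", accuracy(h, train), "test:", accuracy(h, test))
```

Only the accuracy on `test` distinguishes the two hypotheses, which is why the test examples must be distinct from the training examples.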

Learning Scenarios

Learning Problems
1. Supervised Learning
2. Unsupervised Learning
3. Reinforcement Learning

Hybrid Learning Problems
4. Semi-Supervised Learning
5. Self-Supervised Learning
6. Multi-Instance Learning

Statistical Inference
7. Inductive Learning
8. Deductive Inference
9. Transductive Learning

Learning Techniques
10. Multi-Task Learning
11. Active Learning
12. Online Learning
13. Transfer Learning
14. Ensemble Learning

1. Supervised Learning

• “Applications in which the training data comprises examples of the input

vectors along with their corresponding target vectors are known as

supervised learning problems.”

• Supervised learning is the process of training an algorithm to map an input to a particular output, using labelled datasets that you have collected. If the learned mapping is correct, the algorithm has successfully learned; otherwise, you adjust the algorithm so that it can learn correctly. Supervised learning algorithms can then make predictions for new, unseen data obtained later.

• Types of Supervised Learning

• Supervised Learning has been broadly classified into 2 types.

• Regression

• Classification

• Regression is the kind of supervised learning that learns from labelled datasets and is then able to predict a continuous-valued output for new data given to the algorithm. It is used whenever the required output is a number, such as an amount of money or a height.

• Classification, on the other hand, is the kind of learning where the algorithm maps new data to one of the classes in the dataset. In the binary case there are two classes, mapped to 1 or 0, which in real life translates to ‘Yes’ or ‘No’, ‘Rains’ or ‘Does Not Rain’, and so forth. The output is one of the classes, not a continuous number as in regression.
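
The difference between the two kinds of output can be sketched as follows. The helper names `fit_line` and `nearest_neighbour_label` and the toy data are illustrative assumptions. Least squares and a nearest-neighbour lookup stand in for "a regression method" and "a classification method"; the slides do not prescribe either.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept (regression)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def nearest_neighbour_label(train, x):
    """Return the class of the closest labelled example (classification)."""
    return min(train, key=lambda example: abs(example[0] - x))[1]

# Regression: the prediction is a continuous number.
slope, intercept = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
predicted_value = slope * 4.0 + intercept

# Classification: the prediction is one of the known classes.
labelled = [(1.0, "No"), (2.0, "No"), (8.0, "Yes"), (9.0, "Yes")]
predicted_class = nearest_neighbour_label(labelled, 7.5)

print(predicted_value, predicted_class)
```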

2. Unsupervised Learning

• In unsupervised learning, there is no instructor or teacher, and the

algorithm must learn to make sense of the data without this guide.

• Compared to supervised learning, unsupervised learning operates

upon only the input data without outputs or target variables. As

such, unsupervised learning does not have a teacher correcting the

model, as in the case of supervised learning.

• There are many types of unsupervised learning, but two main problems are often encountered by a practitioner: clustering and density estimation.

• Clustering: Unsupervised learning problem that involves finding

groups in data.

• Density Estimation: Unsupervised learning problem that involves

summarizing the distribution of data.

The most common unsupervised learning task is clustering: detecting potentially

useful clusters of input examples. For example, a taxi agent might gradually develop

a concept of “good traffic days” and “bad traffic days” without ever being given

labeled examples of each by a teacher.
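
As a rough sketch of clustering, the following one-dimensional k-means groups trip durations into two clusters without any labels. The durations, the function name `kmeans_1d`, and the choice of k-means itself are assumptions made for illustration; the taxi example above does not name a specific algorithm.

```python
def kmeans_1d(values, k=2, iterations=10):
    """Very small k-means for one-dimensional data (requires k >= 2)."""
    lo, hi = min(values), max(values)
    # Assumed initialisation: spread the centers evenly between min and max.
    centers = [lo + i * (hi - lo) / (k - 1) for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: each value joins its nearest center.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: each center moves to the mean of its cluster.
        new_centers = [sum(c) / len(c) if c else centers[i]
                       for i, c in enumerate(clusters)]
        if new_centers == centers:
            break
        centers = new_centers
    return centers, clusters

# Hypothetical trip durations in minutes; no "good day"/"bad day" labels are given.
trip_minutes = [20, 22, 21, 23, 45, 50, 48, 47]
centers, clusters = kmeans_1d(trip_minutes)
print(centers, clusters)
```

The two groups that emerge could then be interpreted as "good traffic days" and "bad traffic days", but that interpretation is added afterwards by a person, not learned from labels.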

3. Reinforcement Learning

• Reinforcement learning is learning what to do, that is, how to map situations to actions, so as to maximize a numerical reward signal. The learner is not told which actions to take, but instead must discover which actions yield the most reward by trying them.

• Some machine learning algorithms do not just

experience a fixed dataset. For example,

reinforcement learning algorithms interact with an

environment, so there is a feedback loop between the

learning system and its experiences.
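
The feedback loop can be sketched with a very small example: an epsilon-greedy learner choosing among three actions. The fixed reward values, the function name `run_bandit`, and the epsilon-greedy strategy are assumptions chosen for illustration. Real environments usually return noisy rewards and have state, which this sketch leaves out.

```python
import random

def run_bandit(rewards, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learner on a toy environment with one fixed reward per action."""
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)  # learned value of each action
    counts = [0] * len(rewards)       # how often each action was tried
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(len(rewards))
        else:
            # Exploit: take the action currently believed to be best.
            action = max(range(len(rewards)), key=lambda a: estimates[a])
        reward = rewards[action]      # feedback from the environment
        counts[action] += 1
        # Incremental average of the rewards observed for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, counts, total_reward

estimates, counts, total_reward = run_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_action, estimates, counts)
```

The learner is never told that the third action is best; it discovers this only by trying actions and observing the rewards.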

4. Semi-Supervised Learning

• Semi-supervised learning is supervised learning where the training data

contains very few labeled examples and a large number of unlabeled

examples.

• The goal of a semi-supervised learning model is to make effective use of all

of the available data, not just the labelled data like in supervised learning.

• Making effective use of unlabelled data may require the use of or inspiration

from unsupervised methods such as clustering and density estimation. Once

groups or patterns are discovered, supervised methods or ideas from

supervised learning may be used to label the unlabeled examples or apply

labels to unlabeled representations later used for prediction.

• “Semisupervised” learning attempts to improve the accuracy of supervised

learning by exploiting information in unlabeled data. This sounds like magic,

but it can work!

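• For example, classifying photographs requires a dataset of photographs that have already been labeled by human operators, which is costly to produce. Semi-supervised learning lets a small labeled set be supplemented with a large number of unlabeled photographs.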
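
One simple way to use unlabeled data is pseudo-labeling (self-training), sketched below for one-dimensional points. The data, the helper names `centroids` and `nearest_class`, and the nearest-centroid classifier are assumptions for illustration, not a method taken from the slides.

```python
def centroids(labeled):
    """Mean position of each class among (x, label) pairs."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def nearest_class(cents, x):
    """Class whose centroid is closest to x."""
    return min(cents, key=lambda y: abs(x - cents[y]))

labeled = [(1.0, 0), (9.0, 1)]         # very few labeled examples
unlabeled = [2.0, 3.0, 4.0, 7.0, 8.0]  # many more unlabeled examples

# Step 1: fit on the labeled data only.
initial = centroids(labeled)
# Step 2: assign pseudo-labels to the unlabeled data.
pseudo = [(x, nearest_class(initial, x)) for x in unlabeled]
# Step 3: refit using labeled and pseudo-labeled data together.
refined = centroids(labeled + pseudo)

print(initial, refined)
```

The refined centroids reflect where the unlabeled points actually lie, which the two labeled points alone could not reveal. Whether this improves accuracy depends on the pseudo-labels being mostly correct.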

5. Self-Supervised Learning

• The self-supervised learning framework requires only unlabeled data in order

to formulate a pretext learning task such as predicting context or image

rotation, for which a target objective can be computed without supervision.

• A common example of self-supervised learning comes from computer vision, where a corpus of unlabeled images is available and can be used to train a supervised model, for example by making images grayscale and having a model predict a color representation (colorization), or by removing blocks of the image and having a model predict the missing parts (inpainting).

• Another example of self-supervised learning is generative adversarial

networks, or GANs. These are generative models that are most

commonly used for creating synthetic photographs using only a

collection of unlabeled examples from the target domain.
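
The way a pretext task turns unlabeled data into (input, target) pairs can be sketched for an inpainting-style task on a one-dimensional signal. The function name `make_inpainting_example`, the mask value, and the toy signal are illustrative assumptions; the model that would be trained on these pairs is left out.

```python
def make_inpainting_example(signal, start, length, mask_value=0):
    """Hide a block of an unlabeled signal; the hidden block becomes the target."""
    target = signal[start:start + length]
    masked = signal[:start] + [mask_value] * length + signal[start + length:]
    return masked, target

unlabeled_signal = [3, 1, 4, 1, 5, 9]

# Every block position yields a training pair without any human labeling.
pairs = [make_inpainting_example(unlabeled_signal, start, 2)
         for start in range(len(unlabeled_signal) - 1)]

masked, target = pairs[2]
print(masked, target)
```

The targets are computed from the data itself, which is what allows a supervised model to be trained without supervision from an annotator.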

6. Multi-Instance Learning

• Multi-instance learning is a supervised learning

problem where individual examples are unlabeled;

instead, bags or groups of samples are labeled.

• In multi-instance learning, an entire collection of

examples is labeled as containing or not containing an

example of a class, but the individual members of the

collection are not labeled.

• Modeling involves using the knowledge that one or some of the instances in a bag are associated with a target label, and predicting the label for new bags in the future given their composition of multiple unlabeled examples.
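
A minimal sketch of this setting, under the common assumption that a bag is positive when at least one of its instances is positive: the learner sees only bag labels and derives an instance-level threshold from the negative bags. The one-dimensional instances, the bag data, and the names `fit_threshold` and `predict_bag` are hypothetical.

```python
def fit_threshold(bags):
    """Largest instance value seen in any negative bag.

    Assumption: every instance in a negative bag is itself negative,
    so anything above this value is treated as evidence for the class.
    """
    negatives = [x for instances, label in bags if label == 0 for x in instances]
    return max(negatives)

def predict_bag(instances, threshold):
    """A bag is positive if at least one instance exceeds the threshold."""
    return int(any(x > threshold for x in instances))

# Only the bags carry labels; the individual instances do not.
bags = [
    ([0.1, 0.3, 0.2], 0),
    ([0.2, 0.9], 1),
    ([0.4, 0.1], 0),
    ([0.8, 0.3, 0.7], 1),
]

threshold = fit_threshold(bags)
print(threshold, predict_bag([0.2, 0.95], threshold), predict_bag([0.1, 0.35], threshold))
```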

7. Inductive Learning

• Inductive learning involves using evidence to

determine the outcome.

• Inductive reasoning refers to using specific cases to determine general outcomes, i.e. going from the specific to the general.

• However, one disadvantage of the inductive

approach is the risk that the learner will formulate a

rule incorrectly. For this reason, it is important to

check that the learner has inferred the correct rule.

Also, if a rule is complex it may be better to use the

deductive approach and give the rule first, or give

some guidance.

8. Deductive Inference

• Deduction is the reverse of induction. If

induction is going from the specific to the

general, deduction is going from the general to

the specific.

• Deduction is a top-down type of reasoning that requires all premises to be met before reaching the conclusion, whereas induction is a bottom-up type of reasoning that uses available data as evidence for an outcome.

9. Transductive Learning

• Transduction is reasoning from observed, specific

(training) cases to specific (test) cases. In

contrast, induction is reasoning from observed

training cases to general rules, which are then

applied to the test cases.
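
The contrast can be sketched in a few lines. A nearest-neighbour lookup is used here as a simple stand-in for reasoning directly from training cases to specific test cases, and a threshold between class means stands in for a general rule. Both choices, the toy data, and the names `transduce` and `induce_rule` are assumptions made for illustration.

```python
train = [(1.0, "A"), (2.0, "A"), (8.0, "B"), (9.0, "B")]
test_points = [1.5, 7.0]

def transduce(train, test_points):
    """Label each specific test case directly from the nearest training case."""
    return {x: min(train, key=lambda ex: abs(ex[0] - x))[1] for x in test_points}

def induce_rule(train):
    """Derive a general rule: a threshold halfway between the two class means."""
    a_values = [x for x, y in train if y == "A"]
    b_values = [x for x, y in train if y == "B"]
    threshold = (sum(a_values) / len(a_values) + sum(b_values) / len(b_values)) / 2
    return lambda x: "A" if x < threshold else "B"

# Transduction: answers exist only for the given test points.
transductive_labels = transduce(train, test_points)

# Induction: a rule is produced first, then applied to any point.
rule = induce_rule(train)
inductive_labels = {x: rule(x) for x in test_points}

print(transductive_labels, inductive_labels)
```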

10. Multi-Task Learning

• Multi-task learning is a type of supervised learning that involves fitting a

model on one dataset that addresses multiple related problems.

• It involves devising a model that can be trained on multiple related tasks

in such a way that the performance of the model is improved by training

across the tasks as compared to being trained on any single task.

• Multi-task learning is a way to improve generalization by pooling the

examples (which can be seen as soft constraints imposed on the

parameters) arising out of several tasks.

• For example, it is common for a multi-task learning problem to involve the

same input patterns that may be used for multiple different outputs or

supervised learning problems. In this setup, each output may be predicted

by a different part of the model, allowing the core of the model to

generalize across each task for the same inputs.
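
The setup in the last bullet, one shared core with a separate output part per task, can be sketched with three scalar weights trained by gradient descent. The toy tasks (targets 2x and -3x), the starting weights, the learning rate, and the function name `train_multitask` are all assumptions for illustration.

```python
def train_multitask(xs, targets_a, targets_b, lr=0.01, steps=500):
    """Shared weight feeding two task-specific heads, trained on both tasks at once."""
    shared, head_a, head_b = 0.5, 0.5, -0.5  # arbitrary starting weights

    def loss():
        # Mean squared error summed over the two tasks.
        return sum((head_a * shared * x - ya) ** 2 + (head_b * shared * x - yb) ** 2
                   for x, ya, yb in zip(xs, targets_a, targets_b)) / len(xs)

    initial_loss = loss()
    n = len(xs)
    for _ in range(steps):
        g_shared = g_a = g_b = 0.0
        for x, ya, yb in zip(xs, targets_a, targets_b):
            hidden = shared * x             # output of the shared core
            err_a = head_a * hidden - ya    # error of the task A head
            err_b = head_b * hidden - yb    # error of the task B head
            g_a += 2 * err_a * hidden
            g_b += 2 * err_b * hidden
            # The shared weight receives gradient from both tasks.
            g_shared += 2 * (err_a * head_a + err_b * head_b) * x
        shared -= lr * g_shared / n
        head_a -= lr * g_a / n
        head_b -= lr * g_b / n
    return (shared, head_a, head_b), initial_loss, loss()

xs = [-1.0, -0.5, 0.5, 1.0]
targets_a = [2.0 * x for x in xs]    # task A
targets_b = [-3.0 * x for x in xs]   # task B, same inputs
weights, initial_loss, final_loss = train_multitask(xs, targets_a, targets_b)
print(weights, initial_loss, final_loss)
```

The key design point is that `shared` is updated by the errors of both tasks, while each head is updated only by its own task.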

11. Active Learning

• Active learning is a type of supervised learning that seeks to achieve the same or better performance than so-called “passive” supervised learning by being more efficient about what data is collected or used by the model.

• The key idea behind active learning is that a machine learning algorithm can achieve greater

accuracy with fewer training labels if it is allowed to choose the data from which it learns. An

active learner may pose queries, usually in the form of unlabeled data instances to be labeled

by an oracle (e.g., a human annotator).

• Active learning is a useful approach when there is not much

data available and new data is expensive to collect or label.

• The active learning process allows the sampling of the domain

to be directed in a way that minimizes the number of samples

and maximizes the effectiveness of the model.
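
A minimal sketch of the query loop, assuming a one-dimensional problem where the class changes from 0 to 1 at an unknown threshold. The pool, the hidden threshold of 62, the `oracle` function, and the strategy of querying the point closest to the current boundary estimate are illustrative assumptions.

```python
def active_learn_threshold(pool, oracle):
    """Locate a 0/1 class boundary by querying only the most informative points.

    Assumes the smallest pool value is class 0 and the largest is class 1.
    """
    lo, hi = min(pool), max(pool)
    queries = 0
    while True:
        # Unlabeled points whose class is still uncertain.
        candidates = [x for x in pool if lo < x < hi]
        if not candidates:
            break
        midpoint = (lo + hi) / 2
        # Query the candidate closest to the current boundary estimate.
        query = min(candidates, key=lambda x: abs(x - midpoint))
        queries += 1
        if oracle(query) == 1:
            hi = query
        else:
            lo = query
    return lo, hi, queries

pool = list(range(0, 101, 5))  # 21 unlabeled points

def oracle(x):
    """Stands in for a human annotator; the threshold 62 is hidden from the learner."""
    return int(x >= 62)

lo, hi, queries = active_learn_threshold(pool, oracle)
print(lo, hi, queries)
```

In this toy setting the boundary is pinned between two neighbouring pool points after 4 queries, whereas labeling every interior point passively would take 19 labels.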

12. Online Learning

• Online learning involves using the data available and updating the

model directly before a prediction is required or after the last

observation was made.

• Online learning is appropriate for those problems where

observations are provided over time and where the probability

distribution of observations is expected to also change over time.

Therefore, the model is expected to change just as frequently in

order to capture and harness those changes.

• Traditionally machine learning is performed offline, which means we have a batch of data,

and we optimize an equation […] However, if we have streaming data, we need to perform

online learning, so we can update our estimates as each new data point arrives rather

than waiting until “the end” (which may never occur).

• One example of online learning is so-called stochastic or online

gradient descent used to fit an artificial neural network.
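
Online gradient descent can be sketched for a one-parameter model y = w * x that is updated after every observation. The stream below, in which the true slope changes from 2 to 3 halfway through, the learning rate, and the function name `online_sgd` are assumptions chosen to illustrate a distribution that changes over time.

```python
def online_sgd(stream, lr=0.1):
    """Update the weight of y = w * x after each (x, y) observation."""
    w = 0.0
    history = []
    for x, y in stream:
        prediction = w * x
        # Gradient step on the squared error of this single observation.
        w += lr * (y - prediction) * x
        history.append(w)
    return w, history

xs = [0.5, 1.0, 1.5]
# Hypothetical stream: the underlying slope drifts from 2.0 to 3.0.
stream = ([(xs[i % 3], 2.0 * xs[i % 3]) for i in range(50)]
          + [(xs[i % 3], 3.0 * xs[i % 3]) for i in range(50)])

w_final, history = online_sgd(stream)
print(history[49], w_final)
```

The estimate first settles near 2 and then moves toward 3 as new observations arrive, without the model ever being refit on a stored batch.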

13. Transfer Learning

• Transfer learning is a type of learning where a model is first trained on

one task, then some or all of the model is used as the starting point for

a related task.

• In transfer learning, the learner must perform two or more different tasks, but we assume that many of the factors that explain the variations in P1 are relevant to the variations that need to be captured for learning P2, where P1 and P2 denote the data distributions of the first and second task.

• An example is image classification, where a predictive model, such as

an artificial neural network, can be trained on a large corpus of general

images, and the weights of the model can be used as a starting point

when training on a smaller more specific dataset, such as dogs and cats.

The features already learned by the model on the broader task, such as

extracting lines and patterns, will be helpful on the new related task.
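
The idea of reusing learned weights as a starting point can be sketched with a one-parameter model. The two related tasks (slopes 2.0 and 2.2), the learning rate, the tolerance, and the function name `fit_slope` are assumptions for illustration; a real image model would transfer many weights rather than one.

```python
def fit_slope(xs, ys, w_init, lr=0.05, tol=1e-4, max_steps=1000):
    """Gradient descent on y = w * x; returns the weight and the steps used."""
    w = w_init
    for step in range(max_steps):
        errors = [w * x - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / len(xs)
        if loss < tol:
            return w, step
        grad = sum(2 * e * x for e, x in zip(errors, xs)) / len(xs)
        w -= lr * grad
    return w, max_steps

xs = [1.0, 2.0, 3.0]
source_ys = [2.0 * x for x in xs]  # first (source) task
target_ys = [2.2 * x for x in xs]  # related (target) task

# Train on the source task from scratch.
w_source, _ = fit_slope(xs, source_ys, w_init=0.0)

# Target task: start from scratch versus start from the source weight.
_, cold_steps = fit_slope(xs, target_ys, w_init=0.0)
_, warm_steps = fit_slope(xs, target_ys, w_init=w_source)

print(cold_steps, warm_steps)
```

Starting from the source weight reaches the same tolerance in fewer steps because the two tasks are closely related; for unrelated tasks no such benefit should be expected.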

14. Ensemble Learning

• Ensemble learning is an approach where two or more models are fit on the same data and the predictions from each model are combined.

• The field of ensemble learning provides many ways of combining the ensemble members’

predictions, including uniform weighting and weights chosen on a validation set.

• Bagging, boosting, and stacking have been

developed over the last couple of decades, and

their performance is often astonishingly good.

Machine learning researchers have struggled to

understand why.
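
Two simple ways of combining ensemble members' predictions are sketched below: a majority vote for class labels and a weighted average for numeric predictions, with uniform weights as the default. The function names and example predictions are illustrative assumptions. In practice the non-uniform weights would be chosen on a validation set, which is not shown here.

```python
from collections import Counter

def majority_vote(predictions):
    """Most common class label among the ensemble members' predictions."""
    return Counter(predictions).most_common(1)[0][0]

def weighted_average(predictions, weights=None):
    """Weighted mean of numeric predictions; uniform weighting by default."""
    if weights is None:
        weights = [1.0] * len(predictions)
    return sum(w * p for w, p in zip(weights, predictions)) / sum(weights)

# Class predictions from three hypothetical models for one input.
vote = majority_vote(["cat", "dog", "cat"])

# Numeric predictions combined with uniform and with assumed non-uniform weights.
uniform = weighted_average([1.0, 2.0, 3.0])
weighted = weighted_average([1.0, 3.0], weights=[3.0, 1.0])

print(vote, uniform, weighted)
```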
