Evaluation Method Holdout

Uploaded by jemal yahyaa

CoSc 6211 Advanced Concepts of Data Mining

Classification and Prediction (3-1)

Model Evaluation and Selection

• Evaluation metrics: How can we measure accuracy? Other metrics to consider?

• Use a validation (test) set of class-labeled tuples instead of the training set when assessing accuracy

• Methods for estimating a classifier’s accuracy:

– Holdout method, random subsampling

– Cross-validation

– Bootstrap

• Comparing classifiers:

– Confidence intervals

– Cost-benefit analysis and ROC Curves

JJU CoSc 6211 2

Classifier Evaluation Metrics: Confusion Matrix

• The confusion matrix is a useful tool for analyzing how well your classifier can recognize tuples of different classes. A confusion matrix for two classes is shown in Figure 6.27.

| Actual class \ Predicted class | C1 | ¬C1 |
|---|---|---|
| C1 | True Positives (TP) | False Negatives (FN) |
| ¬C1 | False Positives (FP) | True Negatives (TN) |

• Given m classes, an entry $CM_{i,j}$ in a confusion matrix indicates the number of tuples in class i that were labeled by the classifier as class j

• May have extra rows/columns to provide totals
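
The m-class definition above can be sketched in a few lines of Python. This is a minimal illustration, not code from the course: the function names `confusion_matrix` and `with_totals` and the toy labels are assumptions made for the example.

```python
def confusion_matrix(actual, predicted, classes):
    """Return CM as a list of rows: CM[i][j] counts the tuples of
    class i that the classifier labeled as class j."""
    index = {c: k for k, c in enumerate(classes)}
    cm = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        cm[index[a]][index[p]] += 1
    return cm


def with_totals(cm):
    """Append a totals column to every row and a totals row at the bottom."""
    rows = [row + [sum(row)] for row in cm]
    rows.append([sum(col) for col in zip(*rows)])
    return rows


# Toy data (hypothetical): six test tuples, two classes.
actual = ["yes", "yes", "no", "no", "yes", "no"]
predicted = ["yes", "no", "no", "yes", "yes", "no"]

cm = confusion_matrix(actual, predicted, classes=["yes", "no"])
print(cm)               # [[2, 1], [1, 2]]
print(with_totals(cm))  # [[2, 1, 3], [1, 2, 3], [3, 3, 6]]
```

With the positive class listed first, `cm[0][0]` is TP, `cm[0][1]` is FN, `cm[1][0]` is FP and `cm[1][1]` is TN.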

TP and TN tell us when the classifier is getting things right, while FP and FN tell us when the classifier is getting things wrong (i.e., mislabeling).

| Actual class \ Predicted class | C (yes) | ¬C (no) | Total |
|---|---|---|---|
| C (yes) | TP (true positive) | FN (false negative) | P |
| ¬C (no) | FP (false positive) | TN (true negative) | N |
| Total | P’ | N’ | All |

P’ is the number of tuples that were labeled as positive (TP + FP) and N’ is the number of tuples that were labeled as negative (TN + FN). The total number of tuples is TP + TN + FP + FN, or P + N, or P’ + N’. Note that although the confusion matrix shown is for a binary classification problem, confusion matrices can be easily drawn for multiple classes in a similar manner.

Classifier Evaluation Metrics: Accuracy, Error Rate, Sensitivity and Specificity

| Actual class \ Predicted class | C | ¬C | Total |
|---|---|---|---|
| C | TP | FN | P |
| ¬C | FP | TN | N |
| Total | P’ | N’ | All |

• Classifier accuracy, or recognition rate: percentage of test set tuples that are correctly classified

Accuracy = (TP + TN)/All

• Error rate: 1 – accuracy, or

Error rate = (FP + FN)/All

• Class imbalance problem:

– One class may be rare, e.g., fraud or HIV-positive

– Significant majority of the negative class and minority of the positive class

• Sensitivity: true positive recognition rate

Sensitivity = TP/P

• Specificity: true negative recognition rate

Specificity = TN/N
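
The four rates can be computed directly from the cells of the confusion matrix. The sketch below also shows why accuracy alone can mislead under class imbalance. The function name `basic_rates` and the counts are hypothetical, and the code assumes both P and N are non-zero.

```python
def basic_rates(tp, fn, fp, tn):
    """Accuracy, error rate, sensitivity and specificity from the four cells."""
    p = tp + fn          # actual positives
    n = fp + tn          # actual negatives
    total = p + n
    return {
        "accuracy": (tp + tn) / total,
        "error_rate": (fp + fn) / total,
        "sensitivity": tp / p,   # true positive recognition rate
        "specificity": tn / n,   # true negative recognition rate
    }


# Hypothetical imbalanced test set: 10 positives, 990 negatives,
# and a classifier that labels every tuple as negative.
rates = basic_rates(tp=0, fn=10, fp=0, tn=990)
for name, value in rates.items():
    print(f"{name}: {value:.2%}")
```

In this made-up case accuracy is 99% while sensitivity is 0%: the classifier never recognizes the rare class, which is why sensitivity and specificity are reported alongside accuracy.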

Classifier Evaluation Metrics: Precision and Recall, and F-measures

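• Precision: exactness – what % of tuples that the classifier labeled as positive are actually positive?

$\text{precision} = \dfrac{TP}{TP + FP}$

• Recall: completeness – what % of positive tuples did the classifier label as positive?

$\text{recall} = \dfrac{TP}{TP + FN}$

• Perfect score is 1.0

• Inverse relationship between precision & recall

• F measure (F1 or F-score): harmonic mean of precision and recall

$F = \dfrac{2 \times \text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$

• Fβ: weighted measure of precision and recall

$F_\beta = \dfrac{(1 + \beta^2) \times \text{precision} \times \text{recall}}{\beta^2 \times \text{precision} + \text{recall}}$

– assigns β times as much weight to recall as to precision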
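
A minimal sketch of these measures, using the standard definitions (precision = TP/(TP + FP), recall = TP/(TP + FN), and the weighted harmonic mean for Fβ). The function names and the counts are illustrative assumptions, and zero denominators are not handled.

```python
def precision(tp, fp):
    """Fraction of tuples labeled positive that are actually positive."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Fraction of actual positive tuples that were labeled positive."""
    return tp / (tp + fn)


def f_beta(p, r, beta=1.0):
    """Weighted harmonic mean of precision p and recall r.
    beta = 1 gives the F1 score; beta > 1 weights recall more heavily."""
    b2 = beta * beta
    return (1 + b2) * p * r / (b2 * p + r)


# Hypothetical counts: 40 true positives, 10 false positives, 40 false negatives.
p = precision(tp=40, fp=10)   # 0.8
r = recall(tp=40, fn=40)      # 0.5
print(round(f_beta(p, r), 4))            # F1   -> 0.6154
print(round(f_beta(p, r, beta=2), 4))    # F2   -> 0.5405
print(round(f_beta(p, r, beta=0.5), 4))  # F0.5 -> 0.7143
```

Because recall is the weaker of the two here, F2 (which favors recall) comes out lower than F1, and F0.5 (which favors precision) comes out higher.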

JJU CoSc 6211

6

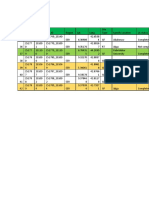

Classifier Evaluation Metrics: Example

| Actual class \ Predicted class | cancer = yes | cancer = no | Total | Recognition (%) |
|---|---|---|---|---|
| cancer = yes | 90 | 210 | 300 | 30.00 (sensitivity) |
| cancer = no | 140 | 9560 | 9700 | 98.56 (specificity) |
| Total | 230 | 9770 | 10000 | 96.50 (accuracy) |

Sensitivity = 90/300 = 30.00%

Specificity = 9560/9700 = 98.56%

Accuracy = (90 + 9560)/10000 = 96.50%

Precision = TP/(TP + FP) = 90/230 = 39.13%

Recall = TP/(TP + FN) = 90/300 = 30.00%
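
As an extra worked step that is not on the slide, the F measures for this example can be computed straight from the cell counts (TP = 90, FN = 210, FP = 140). The count-based forms below are algebraically equivalent to the harmonic-mean definitions of F and Fβ; choosing β = 2 is only an illustration.

```latex
\begin{align*}
F_1 &= \frac{2\,TP}{2\,TP + FP + FN}
     = \frac{2 \times 90}{2 \times 90 + 140 + 210}
     = \frac{180}{530} \approx 33.96\% \\
F_2 &= \frac{5\,TP}{5\,TP + 4\,FN + FP}
     = \frac{450}{450 + 840 + 140}
     = \frac{450}{1430} \approx 31.47\%
\end{align*}
```

Both values sit far below the accuracy figure, which reflects how poorly the rare cancer = yes class is recognized.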

Evaluating Classifier Accuracy: Holdout & Cross-Validation Methods

• Holdout method

– Given data is randomly partitioned into two independent sets

• Training set (e.g., 2/3) for model construction

• Test set (e.g., 1/3) for accuracy estimation

– Random subsampling: a variation of holdout

• Repeat holdout k times, accuracy = avg. of the accuracies obtained

• Cross-validation (k-fold, where k = 10 is most popular)

– Randomly partition the data into k mutually exclusive subsets $D_1, \dots, D_k$, each of approximately equal size

– At the i-th iteration, use $D_i$ as the test set and the others as the training set

– Leave-one-out is a special case of k-fold cross-validation where k is set to the number of initial tuples. That is, only one sample is “left out” at a time for the test set.

– In stratified cross-validation, the folds are stratified so that the class distribution of the tuples in each fold is approximately the same as that in the initial data.

• In general, stratified 10-fold cross-validation is recommended for estimating accuracy (even if computation power allows using more folds) due to its relatively low bias and variance.
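
The holdout method and random subsampling can be sketched with the standard library alone. Everything named here is an assumption for illustration: `holdout_split`, `random_subsampling`, and the `train_and_score` callback, which stands in for whatever routine trains a classifier on the training set and returns its accuracy on the test set. The majority-class baseline is only a placeholder model.

```python
import random


def holdout_split(data, test_fraction=1 / 3, rng=None):
    """Randomly partition data into (training set, test set)."""
    if rng is None:
        rng = random.Random()
    shuffled = list(data)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def random_subsampling(data, train_and_score, k=10, test_fraction=1 / 3, seed=0):
    """Repeat holdout k times and average the accuracies obtained."""
    rng = random.Random(seed)
    scores = []
    for _ in range(k):
        train, test = holdout_split(data, test_fraction, rng)
        scores.append(train_and_score(train, test))
    return sum(scores) / k


def majority_baseline(train, test):
    """Placeholder model: always predict the most common training label."""
    labels = [y for _, y in train]
    majority = max(set(labels), key=labels.count)
    return sum(1 for _, y in test if y == majority) / len(test)


# Hypothetical data set of 30 (feature, label) tuples.
data = [(i, "pos" if i % 4 == 0 else "neg") for i in range(30)]
train, test = holdout_split(data, rng=random.Random(42))
print(len(train), len(test))  # 20 10
print(random_subsampling(data, majority_baseline, k=5))
```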

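Cross Validation: Holdout Method

– Break up data into groups of the same size

– Hold aside one group for testing and use the rest to build the model

– Repeat, holding aside a different group at each iteration

[Figure: the group held aside as the test set changes at each iteration]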
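
The group-by-group procedure above can be sketched as index bookkeeping, again with only the standard library. The function names are hypothetical. `k_fold_splits` deals shuffled indices into k near-equal folds (setting k to the number of tuples gives leave-one-out), and `stratified_k_fold_splits` is one simple way to keep class proportions similar across folds, not necessarily how any particular library does it.

```python
import random


def _splits(folds):
    """Turn k folds into k (train indices, test indices) pairs."""
    out = []
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        out.append((train, test))
    return out


def k_fold_splits(n, k, seed=0):
    """k mutually exclusive folds of near-equal size over indices 0..n-1."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    return _splits(folds)


def stratified_k_fold_splits(labels, k, seed=0):
    """Deal each class's shuffled indices round-robin across the k folds."""
    rng = random.Random(seed)
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    folds = [[] for _ in range(k)]
    slot = 0
    for members in by_class.values():
        rng.shuffle(members)
        for i in members:
            folds[slot % k].append(i)
            slot += 1
    return _splits(folds)


# Hypothetical labels: 4 positives and 16 negatives, split into 4 folds.
labels = ["pos"] * 4 + ["neg"] * 16
for train_idx, test_idx in stratified_k_fold_splits(labels, k=4):
    n_pos = sum(labels[i] == "pos" for i in test_idx)
    print(len(train_idx), len(test_idx), n_pos)  # 15 5 1 on every line
```

Each tuple lands in exactly one test fold, so every tuple is used for testing once and for training k - 1 times.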

Evaluating Classifier Accuracy: Bootstrap

• Bootstrap

– Works well with small data sets

– Samples the given training tuples uniformly with replacement

• i.e., each time a tuple is selected, it is equally likely to be selected again and re-added to the training set

• Several bootstrap methods exist; a common one is the .632 bootstrap

– A data set with d tuples is sampled d times, with replacement, resulting in a training set of d samples. The data tuples that did not make it into the training set end up forming the test set. About 63.2% of the original data end up in the bootstrap sample, and the remaining 36.8% form the test set (since $(1 - 1/d)^d \approx e^{-1} \approx 0.368$)

– Repeat the sampling procedure k times; the overall accuracy of the model is:

$Acc(M) = \dfrac{1}{k} \sum_{i=1}^{k} \left( 0.632 \times Acc(M_i)_{\text{test\_set}} + 0.368 \times Acc(M_i)_{\text{train\_set}} \right)$
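
The sampling step and the 63.2% / 36.8% split can be checked empirically with a short sketch. The names `bootstrap_sample` and `acc_632` are illustrative. The combination rule in `acc_632` is the commonly cited .632 weighting (0.632 on the test-set accuracy, 0.368 on the training-set accuracy, averaged over the k rounds), stated here as an assumption about the intended formula.

```python
import random


def bootstrap_sample(d, rng):
    """Draw d indices uniformly with replacement for training;
    indices that were never drawn form the test set."""
    train = [rng.randrange(d) for _ in range(d)]
    chosen = set(train)
    test = [i for i in range(d) if i not in chosen]
    return train, test


def acc_632(test_accs, train_accs):
    """Average of 0.632 * test accuracy + 0.368 * training accuracy
    over the k bootstrap rounds."""
    k = len(test_accs)
    return sum(0.632 * t + 0.368 * r for t, r in zip(test_accs, train_accs)) / k


rng = random.Random(0)
d = 10_000
train, test = bootstrap_sample(d, rng)
print(len(set(train)) / d)  # roughly 0.632: distinct tuples in the sample
print(len(test) / d)        # roughly 0.368: tuples left for testing
print((1 - 1 / d) ** d)     # close to e**-1, about 0.368

# Hypothetical per-round accuracies from k = 2 bootstrap rounds.
print(acc_632(test_accs=[0.80, 0.70], train_accs=[1.00, 0.90]))
```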

Model Selection: ROC Curves

• ROC (Receiver Operating Characteristics) curves: for visual comparison of classification models

• Originated from signal detection theory

• Shows the trade-off between the true positive rate and the false positive rate

– Vertical axis represents the true positive rate

– Horizontal axis represents the false positive rate

– The plot also shows a diagonal line

• The area under the ROC curve is a measure of the accuracy of the model

– A model with perfect accuracy will have an area of 1.0

• Rank the test tuples in decreasing order: the one that is most likely to belong to the positive class appears at the top of the list

• The closer to the diagonal line (i.e., the closer the area is to 0.5), the less accurate is the model
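
The ranking procedure can be sketched as follows: sort the test tuples by decreasing score, lower the threshold one score at a time, and record the (false positive rate, true positive rate) pair after each step. The function names, the scores, and the 1/0 label encoding are assumptions for illustration; the area is computed with the trapezoidal rule, and both classes are assumed to be present.

```python
def roc_points(scores, labels):
    """(FPR, TPR) points from ranking tuples by decreasing score.
    labels use 1 for positive and 0 for negative; tied scores are
    processed together so they yield a single point."""
    p = sum(labels)
    n = len(labels) - p
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        s = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == s:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n, tp / p))
    return points


def auc(points):
    """Trapezoidal area under the piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical classifier scores for four test tuples.
pts = roc_points(scores=[0.9, 0.8, 0.7, 0.6], labels=[1, 0, 1, 0])
print(pts)       # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc(pts))  # 0.75
```

A ranking that places every positive above every negative gives an area of 1.0, while a ranking no better than chance hugs the diagonal and gives an area near 0.5.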

Issues Affecting Model Selection

• Accuracy

– classifier accuracy: predicting class label

• Speed

– time to construct the model (training time)

– time to use the model (classification/prediction time)

• Robustness: handling noise and missing values

• Scalability: efficiency in disk-resident databases

• Interpretability

– understanding and insight provided by the model

• Other measures, e.g., goodness of rules, such as decision tree size or compactness of classification rules

Reading assignment

• Textbook: Data Mining: Concepts and Techniques, by J. Han

• Linear regression

• Model Evaluation and Selection
