Professional Documents

Culture Documents

0 ratings0% found this document useful (0 votes)

9 viewsEvaluating HRD Programs: Werner & Desimone (2006) 1

Evaluating HRD Programs: Werner & Desimone (2006) 1

Uploaded by

Akhileshwari Asamanichap 7

Copyright:

© All Rights Reserved

Available Formats

Download as PPT, PDF, TXT or read online from Scribd

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5834)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (903)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (541)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (350)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (824)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- SwiftKey Emoji ReportDocument19 pagesSwiftKey Emoji ReportSwiftKey92% (12)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (405)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Cambridge IGCSE Computer Science Executive PreviewDocument79 pagesCambridge IGCSE Computer Science Executive PreviewNour A0% (2)

- MSF-H Assessment ManualDocument131 pagesMSF-H Assessment Manualapi-3731030100% (2)

- Important Question For DBMSDocument34 pagesImportant Question For DBMSpreet patelNo ratings yet

- ODI12c Creating and Connecting To ODI Master and Work RepositoriesDocument6 pagesODI12c Creating and Connecting To ODI Master and Work RepositoriesElie DiabNo ratings yet

- Practice Set 5Document1 pagePractice Set 5Neil CuyosNo ratings yet

- How Evault Backup Solutions Work: Initial Seed: The First Full BackupDocument2 pagesHow Evault Backup Solutions Work: Initial Seed: The First Full BackupPradeep ShuklaNo ratings yet

- Performance Optimization Techniques For Java CodeDocument30 pagesPerformance Optimization Techniques For Java CodeGoldey Fetalvero MalabananNo ratings yet

- Theory & Definitions-1Document2 pagesTheory & Definitions-1stylishman11No ratings yet

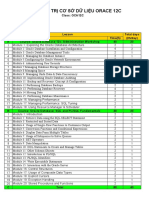

- Nội dung khóa học Oracle 12c OCA & PLSQLDocument5 pagesNội dung khóa học Oracle 12c OCA & PLSQLNgô ChúcNo ratings yet

- Development Induced DisplacementDocument13 pagesDevelopment Induced DisplacementChaudhari MahendraNo ratings yet

- Discussion 2 - Management Data Drive Starbucks Location DecisionsDocument2 pagesDiscussion 2 - Management Data Drive Starbucks Location DecisionsJayson TasarraNo ratings yet

- Descriptive AnalyticsDocument2 pagesDescriptive AnalyticsDebdeep GhoshNo ratings yet

- Expert Data Modeling With Power BI - Second Edition (Soheil Bakhshi) - English - 2023 - 2 Converted (Z-Library)Document854 pagesExpert Data Modeling With Power BI - Second Edition (Soheil Bakhshi) - English - 2023 - 2 Converted (Z-Library)terenwong.klNo ratings yet

- 23 WhonetDocument20 pages23 WhonetyudhieNo ratings yet

- Computer Skills For ResumeDocument8 pagesComputer Skills For Resumefsp06vhe100% (1)

- Crawling Hidden Objects With KNN QueriesDocument6 pagesCrawling Hidden Objects With KNN QueriesGateway ManagerNo ratings yet

- Article AdoptionTechnology TextEditorialProduction 394 1 10 20190726Document11 pagesArticle AdoptionTechnology TextEditorialProduction 394 1 10 20190726Chiheb KlibiNo ratings yet

- InvitiDocument1,277 pagesInvitiFranz AndloveNo ratings yet

- Written Assignment Data ManagementDocument3 pagesWritten Assignment Data ManagementHarrison EmekaNo ratings yet

- Informit 310519447631923Document7 pagesInformit 310519447631923EduuNo ratings yet

- Hacked by MR - SinghDocument133 pagesHacked by MR - SinghAnonymous bKlBR5cNo ratings yet

- Built-In Physical and Logical Replication in PostgresqlDocument49 pagesBuilt-In Physical and Logical Replication in PostgresqlmdrajivNo ratings yet

- Nis MPDocument14 pagesNis MPLalit BorseNo ratings yet

- MethodologyDocument35 pagesMethodologyJason BrozoNo ratings yet

- Active DirectoryDocument38 pagesActive DirectoryDeepak RajalingamNo ratings yet

- APOIDDocument16 pagesAPOIDSiva KumarNo ratings yet

- SyserrDocument5 pagesSyserrFlorin PatruNo ratings yet

- Engineered For Fast and Reliable Deployment: DATA SHEET / Oracle Exadata Database Machine X8M-2Document24 pagesEngineered For Fast and Reliable Deployment: DATA SHEET / Oracle Exadata Database Machine X8M-2Harish NaikNo ratings yet

- MM01Document3 pagesMM01sandeep_48No ratings yet

Evaluating HRD Programs: Werner & Desimone (2006) 1

Evaluating HRD Programs: Werner & Desimone (2006) 1

Uploaded by

Akhileshwari Asamani0 ratings0% found this document useful (0 votes)

9 views41 pageschap 7

Original Title

chapter-7

Copyright

© © All Rights Reserved

Available Formats

PPT, PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this Documentchap 7

Copyright:

© All Rights Reserved

Available Formats

Download as PPT, PDF, TXT or read online from Scribd

Download as ppt, pdf, or txt

0 ratings0% found this document useful (0 votes)

9 views41 pagesEvaluating HRD Programs: Werner & Desimone (2006) 1

Evaluating HRD Programs: Werner & Desimone (2006) 1

Uploaded by

Akhileshwari Asamanichap 7

Copyright:

© All Rights Reserved

Available Formats

Download as PPT, PDF, TXT or read online from Scribd

Download as ppt, pdf, or txt

You are on page 1of 41

Evaluating HRD Programs

Chapter 7

Werner & DeSimone (2006) 1

Learning Objectives

Define evaluation and explain its role/purpose in HRD.

Compare different models of evaluation.

Discuss the various methods of data collection for HRD evaluation.

Explain the role of research design in HRD evaluation.

Describe the ethical issues involved in conducting HRD evaluation.

Identify and explain the choices available for translating evaluation results into dollar terms.

Effectiveness

The degree to which a training (or other HRD) program achieves its intended purpose

Measures are relative to some starting point

Measures how well the desired goal is achieved

Evaluation

HRD Evaluation

It is “the systematic collection of descriptive and judgmental information necessary to make effective training decisions related to the selection, adoption, value, and modification of various instructional activities.”

In Other Words…

Are we training:

the right people

the right “stuff”

the right way

with the right materials

at the right time?

Evaluation Needs

Descriptive and judgmental information needed

Objective and subjective data

Information gathered according to a plan and in a desired format

Gathered to provide decision-making information

Purposes of Evaluation

Determine whether the program is meeting the intended objectives

Identify strengths and weaknesses

Determine cost-benefit ratio

Identify who benefited most or least

Determine future participants

Provide information for improving HRD programs

Purposes of Evaluation – 2

Reinforce major points to be made

Gather marketing information

Determine if the training program is appropriate

Establish management database

Evaluation Bottom Line

Is HRD a revenue contributor or a revenue user?

Is HRD credible to line and upper-level managers?

Are benefits of HRD readily evident to all?

How Often are HRD Evaluations Conducted?

Not often enough!!!

Frequently, only end-of-course participant reactions are collected

Transfer to the workplace is evaluated less frequently

Why HRD Evaluations are Rare

Reluctance to have HRD programs evaluated

Evaluation needs expertise and resources

Factors other than HRD cause performance improvements – e.g.,

Economy

Equipment

Policies, etc.

Need for HRD Evaluation

Shows the value of HRD

Provides metrics for HRD efficiency

Demonstrates value-added approach for HRD

Demonstrates accountability for HRD activities

Make or Buy Evaluation

“I bought it, therefore it is good.”

“Since it’s good, I don’t need to post-test.”

Who says it’s:

Appropriate?

Effective?

Timely?

Transferable to the workplace?

Models and Frameworks of Evaluation

Table 7-1 lists six frameworks for evaluation

The most popular is that of D. Kirkpatrick:

Reaction

Learning

Job Behavior

Results

Kirkpatrick’s Four Levels

Reaction

Focus on trainee’s reactions

Learning

Did they learn what they were supposed to?

Job Behavior

Was it used on the job?

Results

Did it improve the organization’s effectiveness?

Issues Concerning Kirkpatrick’s Framework

Most organizations don’t evaluate at all four levels

Focuses only on post-training

Doesn’t treat inter-stage improvements

WHAT ARE YOUR THOUGHTS?

Data Collection for HRD Evaluation

Possible methods:

Interviews

Questionnaires

Direct observation

Written tests

Simulation/Performance tests

Archival performance information

Interviews

Advantages:

Flexible

Opportunity for clarification

Depth possible

Personal contact

Limitations:

High reactive effects

High cost

Face-to-face threat potential

Labor intensive

Trained observers needed

Questionnaires

Advantages:

Low cost to administer

Honesty increased

Anonymity possible

Respondent sets the pace

Variety of options

Limitations:

Possible inaccurate data

Response conditions not controlled

Respondents set varying paces

Uncontrolled return rate

Direct Observation

Advantages:

Nonthreatening

Excellent way to measure behavior change

Limitations:

Possibly disruptive

Reactive effects are possible

May be unreliable

Need trained observers

Written Tests

Advantages:

Low purchase cost

Readily scored

Quickly processed

Easily administered

Wide sampling possible

Limitations:

May be threatening

Possibly no relation to job performance

Measures only cognitive learning

Relies on norms

Concern for racial/ethnic bias

Simulation/Performance Tests

Advantages:

Reliable

Objective

Close relation to job performance

Includes cognitive, psychomotor and affective domains

Limitations:

Time consuming

Simulations often difficult to create

High costs to develop and use

Archival Performance Data

Advantages:

Reliable

Objective

Job-based

Easy to review

Minimal reactive effects

Limitations:

Criteria for keeping/discarding records

Information system discrepancies

Indirect

Not always usable

Records prepared for other purposes

Choosing Data Collection Methods

Reliability

Consistency of results, and freedom from collection method bias and error

Validity

Does the device measure what we want to measure?

Practicality

Does it make sense in terms of the resources used to get the data?

Type of Data Used/Needed

Individual performance

Systemwide performance

Economic

Individual Performance Data

Individual knowledge

Individual behaviors

Examples:

Test scores

Performance quantity, quality, and timeliness

Attendance records

Attitudes

Systemwide Performance Data

Productivity

Scrap/rework rates

Customer satisfaction levels

On-time performance levels

Quality rates and improvement rates

Economic Data

Profits

Product liability claims

Avoidance of penalties

Market share

Competitive position

Return on investment (ROI)

Financial utility calculations

Use of Self-Report Data

Most common method

Pre-training and post-training data

Problems:

Mono-method bias

Desire to be consistent between tests

Socially desirable responses

Response Shift Bias:

Trainees adjust expectations to training

Research Design

Specifies in advance:

the expected results of the study

the methods of data collection to be used

how the data will be analyzed
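
As one illustration of fixing the analysis in advance, the sketch below works through a pretest-posttest design with a control group (the design this deck names on the "Assessing the Impact of HRD" slide). It is a minimal sketch, not the authors' procedure: the function names `mean_gain` and `training_effect` are hypothetical, and every score is invented purely for illustration.

```python
from statistics import mean


def mean_gain(pre, post):
    """Average post-minus-pre change for one group of paired scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post need one score per person")
    return mean(after - before for before, after in zip(pre, post))


def training_effect(trained_pre, trained_post, control_pre, control_post):
    """Gain of the trained group minus gain of the untrained control group."""
    return mean_gain(trained_pre, trained_post) - mean_gain(control_pre, control_post)


# Hypothetical test scores, invented for this example only.
trained_pre = [60, 55, 70, 65]
trained_post = [75, 70, 80, 75]
control_pre = [62, 58, 68, 64]
control_post = [65, 60, 70, 67]

effect = training_effect(trained_pre, trained_post, control_pre, control_post)
print(f"Estimated training effect: {effect} points")
```

Subtracting the control group's gain is what separates the training from outside factors such as the economy, equipment, or policies, which affect both groups.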

Assessing the Impact of HRD

Money is the language of business.

You MUST talk dollars, not HRD jargon.

No one (except maybe you) cares about “the effectiveness of training interventions as measured by an analysis of formal pretest, posttest control group data.”

HRD Program Assessment

HRD programs and training are investments

Line managers often see HR and HRD as costs – i.e., revenue users, not revenue producers

You must prove your worth to the organization –

Or you’ll have to find another organization…

Two Basic Methods for Assessing Financial Impact

Evaluation of training costs

Utility analysis

Evaluation of Training Costs

Cost-benefit analysis

Compares cost of training to benefits gained such as attitudes, reduction in accidents, reduction in employee sick days, etc.

Cost-effectiveness analysis

Focuses on increases in quality, reduction in scrap/rework, productivity, etc.

Return on Investment

Return on investment = Results/Costs

Calculating Training Return On Investment

| Operational Results Area | How Measured | Results Before Training | Results After Training | Differences (+ or –) | Expressed in $ |
|---|---|---|---|---|---|
| Quality of panels | % rejected | 2% rejected (1,440 panels per day) | 1.5% rejected (1,080 panels per day) | .5% (360 panels) | $720 per day ($172,800 per year) |
| Housekeeping | Visual inspection using 20-item checklist | 10 defects (average) | 2 defects (average) | 8 defects | Not measurable in $ |
| Preventable accidents | Number of accidents | 24 per year | 16 per year | 8 per year | |
| | Direct cost of each accident | $144,000 per year | $96,000 per year | $48,000 | $48,000 per year |

Total savings: $220,800.00

ROI = Return / Investment = Operational Results / Training Costs = $220,800 / $32,564 = 6.8

SOURCE: From D. G. Robinson & J. Robinson (1989). Training for impact. Training and Development Journal, 43(8), 41. Printed by permission.
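
The arithmetic in the table above can be checked with a short script. This is only a sketch: the function name `training_roi` and the dictionary keys are hypothetical labels chosen for this example, while the dollar figures are taken from the table.

```python
def training_roi(savings_by_area, training_cost):
    """Total dollar results divided by training cost (ROI = Results / Costs)."""
    if training_cost <= 0:
        raise ValueError("training_cost must be positive")
    return sum(savings_by_area.values()) / training_cost


# Annual dollar savings as listed in the table. Housekeeping is left out
# because the table marks it as not measurable in dollars.
savings_by_area = {
    "quality_of_panels": 172_800,
    "preventable_accidents": 48_000,
}
training_cost = 32_564

total_savings = sum(savings_by_area.values())
roi = training_roi(savings_by_area, training_cost)

print(f"Total savings: ${total_savings:,}")  # Total savings: $220,800
print(f"ROI: {roi:.1f}")                     # ROI: 6.8
```

An ROI of 6.8 here means each training dollar is associated with about $6.80 in measured annual results; results that cannot be expressed in dollars, such as the housekeeping improvement, do not enter the ratio at all.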

Measuring Benefits

Change in quality per unit measured in dollars

Reduction in scrap/rework measured in dollar cost of labor and materials

Reduction in preventable accidents measured in dollars

ROI = Benefits/Training costs
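
Converting an operational change into dollars can be sketched as follows, using the figures from the training ROI table earlier in the deck. The helper `annual_benefit` is a hypothetical name. The unit values are assumptions inferred from the table's own numbers rather than stated in the source: $2 per rejected panel ($720 per day / 360 panels), 240 working days per year ($172,800 / $720), and $6,000 per accident ($144,000 / 24 accidents).

```python
def annual_benefit(before, after, value_per_unit, periods_per_year=1):
    """Dollar value per year of reducing a measured quantity from before to after."""
    return (before - after) * value_per_unit * periods_per_year


# Rejected panels per day, valued at an inferred $2 each over an inferred 240 days.
panel_savings = annual_benefit(before=1_440, after=1_080,
                               value_per_unit=2, periods_per_year=240)

# Preventable accidents per year, valued at an inferred $6,000 each.
accident_savings = annual_benefit(before=24, after=16, value_per_unit=6_000)

benefits = panel_savings + accident_savings
print(f"Panels: ${panel_savings:,}")        # Panels: $172,800
print(f"Accidents: ${accident_savings:,}")  # Accidents: $48,000
print(f"Benefits: ${benefits:,}")           # Benefits: $220,800
```

Dividing `benefits` by the training cost then gives the ROI defined on this slide.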

Ways to Improve HRD Assessment

Walk the walk, talk the talk: MONEY

Involve HRD in strategic planning

Involve management in HRD planning and estimation efforts

Gain mutual ownership

Use credible and conservative estimates

Share credit for successes and blame for failures

HRD Evaluation Steps

1. Analyze needs.

2. Determine explicit evaluation strategy.

3. Insist on specific and measurable training objectives.

4. Obtain participant reactions.

5. Develop criterion measures/instruments to measure results.

6. Plan and execute evaluation strategy.

Summary

Training results must be measured against costs

Training must contribute to the “bottom line”

HRD must justify itself repeatedly as a revenue enhancer, not a revenue waster
