Chapter 01

Uploaded by

Nosheen Ramzan

Machine Learning (ML) and

Knowledge Discovery in Databases (KDD)

Course Objectives

• Specific knowledge of the fields of Machine

Learning and Knowledge Discovery in Databases

(Data Mining)

– Experience with a variety of algorithms

– Experience with experimental methodology

• In-depth knowledge of two recent research papers

• Programming and implementation practice

• Presentation practice

CS 8751 ML & KDD Chapter 1 Introduction 2

Course Components

• Midterm, Oct 23 (Mon) 15:00-16:40, 300

points

• Final, Dec 16 (Sat), 14:00-15:55, 300 points

• Homework (5), 100 points

• Programming Assignments (3-5), 150 points

• Research Paper

– Presentation, 100 points

– Summary, 500 points

What is Learning?

Learning denotes changes in the system that are adaptive in

the sense that they enable the system to do the same task or

tasks drawn from the same population more effectively the

next time. -- Simon, 1983

Learning is making useful changes in our minds. -- Minsky,

1985

Learning is constructing or modifying representations of

what is being experienced. -- McCarthy, 1968

Learning is improving automatically with experience. --

Mitchell, 1997

Why Machine Learning?

• Data, Data, DATA!!!

– Examples

• World wide web

• Human genome project

• Business data (WalMart sales “baskets”)

– Idea: sift heap of data for nuggets of knowledge

• Some tasks beyond programming

– Example: driving

– Idea: learn by doing/watching/practicing (like humans)

• Customizing software

– Example: web browsing for news information

– Idea: observe user tendencies and incorporate

Typical Data Analysis Task

Patient103                time=1    time=2      time=n
Age                       23        23          23
FirstPregnancy            no        no          no
Anemia                    no        no          no
Diabetes                  no        YES         no
PreviousPrematureBirth    no        no          no
Ultrasound                ?         abnormal    ?
Elective C-Section        ?         no          no
Emergency C-Section       ?         ?           YES
...                       ...       ...         ...

Given

– 9714 patient records, each describing a pregnancy and a birth

– Each patient record contains 215 features (some are unknown)

Learn to predict:

– Characteristics of patients at high risk for Emergency C-Section

Credit Risk Analysis

Customer103                time=10    time=11    time=n
Years of credit            9          9          9
Loan balance               $2,400     $3,250     $4,500
Income                     $52K       ?          ?
Own House                  Yes        Yes        Yes
Other delinquent accts     2          2          3
Max billing cycles late    3          4          6
Profitable customer        ?          ?          No
...                        ...        ...        ...

Rules learned from data:

IF Other-Delinquent-Accounts > 2, AND

Number-Delinquent-Billing-Cycles > 1

THEN Profitable-Customer? = No [Deny Credit Application]

IF Other-Delinquent-Accounts == 0, AND

((Income > $30K) OR (Years-of-Credit > 3))

THEN Profitable-Customer? = Yes [Accept Application]
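The two learned rules can be read as a small decision procedure. The sketch below is one possible encoding, not the course's implementation: the function name and argument names are hypothetical, unknown income ("?") is represented as None, and it assumes that "Max billing cycles late" in the customer record is the quantity the first rule calls Number-Delinquent-Billing-Cycles.

```python
def profitable_customer(other_delinquent_accounts, delinquent_billing_cycles,
                        income, years_of_credit):
    """Apply the two learned rules.

    Returns "No", "Yes", or None when neither rule fires.
    income may be None when it is unknown ("?" in the record).
    """
    # Rule 1: deny credit application
    if other_delinquent_accounts > 2 and delinquent_billing_cycles > 1:
        return "No"
    # Rule 2: accept application
    income_ok = income is not None and income > 30_000
    if other_delinquent_accounts == 0 and (income_ok or years_of_credit > 3):
        return "Yes"
    return None


# Customer103 at time=n: 3 delinquent accounts, 6 cycles late, income unknown
print(profitable_customer(3, 6, None, 9))
# A hypothetical customer with no delinquent accounts and $52K income
print(profitable_customer(0, 0, 52_000, 9))
```

Records that match neither rule return None, which reflects that the slide shows only two of the learned rules.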

Analysis/Prediction Problems

• What kinds of direct-mail customers buy?

• What products will/won’t customers buy?

• What changes will cause a customer to leave a

bank?

• What are the characteristics of a gene?

• Does a picture contain an object (does a picture of

space contain a meteorite -- especially one

heading towards us)?

• … Lots more

Tasks too Hard to Program

ALVINN [Pomerleau] drives

70 MPH on highways

STANLEY: Stanford Racing

• http://www.stanfordracing.org

• Sebastian Thrun’s Stanley

Racing program

• Winner of the DARPA grand

challenge

• Incorporated learning/learned

components with planning and

vision components

Software that Customizes to User

Defining a Learning Problem

Learning = improving with experience at some task

– improve over task T

– with respect to performance measure P

– based on experience E

Ex 1: Learn to play checkers

T: play checkers

P: % of games won

E: opportunity to play self

Ex 2: Sell more CDs

T: sell CDs

P: # of CDs sold

E: different locations/prices of CD

Key Questions

T: play checkers, sell CDs

P: % games won, # CDs sold

To build a machine learner, we need to know:

– What experience?

• Direct or indirect?

• Learner controlled?

• Is the experience representative?

– What exactly should be learned?

– How to represent the learning function?

– What algorithm is used to learn the function?

Types of Training Experience

Direct or indirect?

Direct - observable, measurable

– sometimes difficult to obtain

• Checkers - is a move the best move for a situation?

– sometimes straightforward

• Sell CDs - how many CDs sold on a day? (look at receipts)

Indirect - must be inferred from what is measurable

– Credit assignment problem

Types of Training Experience (cont)

Who controls?

– Learner - what is best move at each point?

(Exploitation/Exploration)

– Teacher - is teacher’s move the best? (Do we want to

just emulate the teacher’s moves?)

BIG Question: is experience representative of

performance goal?

– If Checkers learner only plays itself will it be able to

play humans?

– What if results from CD seller influenced by factors not

measured (holiday shopping, weather, etc.)?

Choosing Target Function

Checkers - what does learner do - make moves

ChooseMove - select move based on board

$\mathit{ChooseMove} : \mathit{Board} \rightarrow \mathit{Move}$

$V : \mathit{Board} \rightarrow \mathbb{R}$

ChooseMove(b): from b pick move with highest value

But how do we define V(b) for boards b?

Possible definition:

V(b) = 100 if b is a final board state of a win

V(b) = -100 if b is a final board state of a loss

V(b) = 0 if b is a final board state of a draw

if b is not a final state, V(b) = V(b′) where b′ is the best final board

reached by starting at b and playing optimally from there

Correct, but not operational
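Given any value function over boards, ChooseMove reduces to an argmax over the boards reachable in one move. The sketch below illustrates that idea under stated assumptions: legal_moves, apply_move, and value are hypothetical callables supplied by the caller, and the "game" used in the demonstration is a toy stand-in (an integer board), not checkers.

```python
def choose_move(board, legal_moves, apply_move, value):
    """Return the legal move whose resulting board has the highest value."""
    return max(legal_moves(board),
               key=lambda move: value(apply_move(board, move)))


# Toy stand-in for a game: the board is an integer and a move adds to it.
def toy_legal_moves(board):
    return [-1, 1, 2]


def toy_apply_move(board, move):
    return board + move


def toy_value(board):
    # Hypothetical value function that prefers boards close to 5.
    return -abs(board - 5)


print(choose_move(4, toy_legal_moves, toy_apply_move, toy_value))
```

The definition of V on the slide cannot be plugged in for value directly, because evaluating it requires searching to the end of the game; that is what "not operational" means here, and it is why an approximation of V is learned instead.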

Representation of Target Function

• Collection of rules?

IF double jump available THEN

make double jump

• Neural network?

• Polynomial function of problem features?

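$$
w_0 + w_1 \cdot \#\mathit{blackPieces}(b) + w_2 \cdot \#\mathit{redPieces}(b)
+ w_3 \cdot \#\mathit{blackKings}(b) + w_4 \cdot \#\mathit{redKings}(b)
+ w_5 \cdot \#\mathit{redThreatened}(b) + w_6 \cdot \#\mathit{blackThreatened}(b)
$$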
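As a rough illustration of the linear representation, the sketch below evaluates a weighted sum of the six board features plus the constant term w0. The function name, the feature ordering, and the weight values are assumptions made for the example; extracting the features from an actual checkers board is not shown.

```python
def linear_value(weights, features):
    """Evaluate w0 + w1*x1 + ... + w6*x6.

    weights:  [w0, w1, ..., w6]
    features: [blackPieces, redPieces, blackKings, redKings,
               redThreatened, blackThreatened] for one board
    """
    return weights[0] + sum(w * x for w, x in zip(weights[1:], features))


# Hypothetical weights, chosen only for illustration.
weights = [0.0, 1.0, -1.0, 2.0, -2.0, 0.5, -0.5]
# A board with 12 pieces per side, no kings, and one red piece threatened.
features = [12, 12, 0, 0, 1, 0]
print(linear_value(weights, features))
```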

Obtaining Training Examples

$V(b)$: the true target function

$\hat{V}(b)$: the learned function

$V_{train}(b)$: the training value

One rule for estimating training values:

$V_{train}(b) \leftarrow \hat{V}(\mathit{Successor}(b))$
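One way to apply this rule is to walk through the boards of a finished game and label each board with the current estimate for the board that follows it. The sketch below assumes the trace lists the boards at the learner's successive turns and that the last board is final, so it receives the game outcome directly (for instance 100, -100, or 0 as in the earlier definition of V). The function and argument names are hypothetical.

```python
def training_values(trace, v_hat, final_value):
    """Build (board, V_train) pairs from one game trace.

    trace:       boards at the learner's successive turns, last one final
    v_hat:       current learned evaluation function
    final_value: outcome value assigned to the final board
    """
    examples = []
    for board, successor in zip(trace, trace[1:]):
        # V_train(b) <- V_hat(Successor(b))
        examples.append((board, v_hat(successor)))
    examples.append((trace[-1], final_value))
    return examples


# Toy demonstration: boards are plain numbers and v_hat is a stand-in.
print(training_values([1, 2, 3], lambda b: 10 * b, 100))
```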

Choose Weight Tuning Rule

LMS Weight update rule:

Do repeatedly :

Select a training example b at random

1. Compute $error(b)$:

$error(b) = V_{train}(b) - \hat{V}(b)$

2. For each board feature $f_i$, update weight $w_i$:

$w_i \leftarrow w_i + c \cdot f_i \cdot error(b)$

c is some small constant, say 0.1, to moderate

rate of learning
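The update rule can be sketched in a few lines. The code below is a minimal illustration, assuming a linear learned function with a constant term w0 that is updated as if it had a feature fixed at 1; that treatment of w0, the function names, and the toy training set are assumptions rather than part of the slides.

```python
import random


def v_hat(weights, features):
    """Linear learned function: w0 + sum of w_i * f_i."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], features))


def lms_update(weights, features, v_train, c=0.1):
    """One LMS step: w_i <- w_i + c * f_i * error(b)."""
    error = v_train - v_hat(weights, features)
    padded = [1.0] + list(features)  # assumed constant feature for w0
    return [w + c * f * error for w, f in zip(weights, padded)]


def train(examples, n_features, steps=2000, c=0.1, seed=0):
    """Repeatedly pick a training example at random and apply the update."""
    rng = random.Random(seed)
    weights = [0.0] * (n_features + 1)
    for _ in range(steps):
        features, v_train = rng.choice(examples)
        weights = lms_update(weights, features, v_train, c)
    return weights


# Toy training set generated by the target 1 + 2*f1 - 3*f2.
examples = [([0, 0], 1.0), ([1, 0], 3.0), ([0, 1], -2.0), ([1, 1], 0.0)]
print([round(w, 3) for w in train(examples, n_features=2)])
```

Because the toy targets are exactly linear in the features, the weights settle close to the generating values; with noisy training values they would only hover near a least-squares fit.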

Design Choices

• Determining Type of Training Experience

– Games against expert

– Games against self

– Table of correct moves

• Determining Target Function

– Board → Move

– Board → Value

• Determining Representation of Learned Function

– Linear function of features

– Neural Network

• Determining Learning Algorithm

– Gradient Descent

– Linear Programming

• Completed Design

CS 8751 ML & KDD Chapter 1 Introduction 20

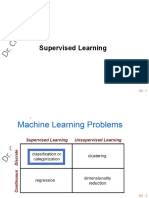

Some Areas of Machine Learning

• Inductive Learning: inferring new knowledge

from observations (not guaranteed correct)

– Concept/Classification Learning - identify

characteristics of class members (e.g., what makes a CS

class fun, what makes a customer buy, etc.)

– Unsupervised Learning - examine data to infer new

characteristics (e.g., break chemicals into similar

groups, infer new mathematical rule, etc.)

– Reinforcement Learning - learn appropriate moves to

achieve delayed goal (e.g., win a game of Checkers,

perform a robot task, etc.)

• Deductive Learning: recombine existing

knowledge to more effectively solve problems

Classification/Concept Learning

• What characteristic(s) predict a smile?

– Variation on Sesame Street game: why are these things a lot like the

others (or not)?

• ML Approach: infer model (characteristics that indicate) of

why a face is/is not smiling

Unsupervised Learning

• Clustering - group points into “classes”

• Other ideas:

– look for mathematical relationships between features

– look for anomalies in databases (data that does not fit)
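The slide does not name a particular clustering method. As one common example, the sketch below runs k-means on one-dimensional points: each point is assigned to its nearest centroid, then each centroid moves to the mean of its points. The function name, the fixed starting centroids, and the data are assumptions chosen to keep the example deterministic.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on 1-D points with caller-supplied starting centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters


points = [1.0, 1.5, 2.0, 9.0, 10.0, 11.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])
print(centroids)
print(clusters)
```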

Reinforcement Learning

[Figure: a grid-world problem and the resulting policy. S - start, G - goal. Possible actions: up, down, left, right.]

• Problem: feedback (reinforcement) is delayed - how to

value intermediate (non-goal) states?

• Idea: online dynamic programming to produce policy

function

• Policy: action taken leads to highest future reinforcement

(if policy followed)
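To make the link between state values and a policy concrete, the sketch below uses value iteration, an offline form of dynamic programming, rather than the online variant the slide mentions. The 3x3 grid, the goal position, the reward of 100 for entering the goal, and the discount factor 0.9 are all assumptions for illustration; moves that would leave the grid leave the state unchanged.

```python
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(state, action, rows, cols):
    """Deterministic move; walking into a wall leaves the state unchanged."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    return (nr, nc) if 0 <= nr < rows and 0 <= nc < cols else state


def backup(values, state, action, rows, cols, goal, gamma):
    """Immediate reward plus discounted value of the next state."""
    nxt = step(state, action, rows, cols)
    reward = 100.0 if nxt == goal else 0.0  # assumed reward scheme
    return reward + gamma * values[nxt]


def value_iteration(rows, cols, goal, gamma=0.9, sweeps=50):
    values = {(r, c): 0.0 for r in range(rows) for c in range(cols)}
    for _ in range(sweeps):
        for state in values:
            if state != goal:  # the goal is treated as absorbing
                values[state] = max(
                    backup(values, state, a, rows, cols, goal, gamma)
                    for a in ACTIONS)
    return values


def greedy_policy(values, rows, cols, goal, gamma=0.9):
    """In each state, pick the action with the highest backed-up value."""
    return {s: max(ACTIONS,
                   key=lambda a: backup(values, s, a, rows, cols, goal, gamma))
            for s in values if s != goal}


values = value_iteration(3, 3, goal=(0, 2))
policy = greedy_policy(values, 3, 3, goal=(0, 2))
print(policy[(0, 1)], policy[(1, 2)])
```

States closer to the goal end up with higher values, so the delayed reward is propagated back to intermediate states and the greedy policy follows the gradient of those values.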

Analytical Learning

[Figure: a search graph from Init to Goal through states S0-S9, with one branch marked "Problem!" and a "Backtrack!" step.]

• During search processes (planning, etc.) remember work

involved in solving tough problems

• Reuse the acquired knowledge when presented with

similar problems in the future (avoid bad decisions)
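A much simplified illustration of remembering and reusing search effort is a depth-first search that records which states turned out to be dead ends, so later searches for the same goal skip them. This is only a sketch of the reuse idea, not an explanation-based learning method; the graph, its edges, and the function name are hypothetical, and the graph is assumed to be acyclic.

```python
def search(graph, state, goal, dead_ends, path=None):
    """Depth-first search that remembers states from which the goal is unreachable.

    dead_ends is a set shared across calls; it is only valid for one fixed goal.
    """
    path = (path or []) + [state]
    if state == goal:
        return path
    for nxt in graph.get(state, []):
        if nxt in dead_ends:
            continue  # reuse earlier work: skip known bad decisions
        result = search(graph, nxt, goal, dead_ends, path)
        if result is not None:
            return result
    dead_ends.add(state)  # every successor failed, so remember this state
    return None


# Hypothetical acyclic graph; the branch through S1 leads nowhere.
graph = {
    "Init": ["S1", "S4"], "S1": ["S2"], "S2": ["S3"], "S3": [],
    "S4": ["S5"], "S5": ["S6"], "S6": ["Goal"], "S7": ["S1", "S5"],
}
dead_ends = set()
first = search(graph, "Init", "Goal", dead_ends)
second = search(graph, "S7", "Goal", dead_ends)  # skips S1 without exploring it
print(first, sorted(dead_ends), second)
```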

The Present in Machine Learning

The tip of the iceberg:

• First-generation algorithms: neural nets, decision

trees, regression, support vector machines, …

• Composite algorithms - ensembles

• Significant work on assessing effectiveness, limits

• Applied to simple databases

• Budding industry (especially in data mining)

The Future of Machine Learning

Lots of areas of impact:

• Learn across multiple databases, as well as web

and news feeds

• Learn across multi-media data

• Cumulative, lifelong learning

• Agents with learning embedded

• Programming languages with learning embedded?

• Learning by active experimentation

What is Knowledge Discovery in

Databases (i.e., Data Mining)?

• Depends on who you ask

• General idea: the analysis of large amounts of data

(and therefore efficiency is an issue)

• Interfaces several areas, notably machine learning

and database systems

• Lots of perspectives:

– ML: learning where efficiency matters

– DBMS: extended techniques for analysis of raw data,

automatic production of knowledge

• What is all the hubbub?

– Companies make lots of money with it (e.g., WalMart)

Related Disciplines

• Artificial Intelligence

• Statistics

• Psychology and neurobiology

• Bioinformatics and Medical Informatics

• Philosophy

• Computational complexity theory

• Control theory

• Information theory

• Database Systems

• ...

Issues in Machine Learning

• What algorithms can approximate functions well

(and when)?

• How does number of training examples influence

accuracy?

• How does complexity of hypothesis representation

impact it?

• How does noisy data influence accuracy?

• What are the theoretical limits of learnability?

• How can prior knowledge of learner help?

• What clues can we get from biological learning

systems?
