Professional Documents

Culture Documents

0 ratings0% found this document useful (0 votes)

7 viewsC3 Prob

C3 Prob

Uploaded by

Sakshi RaiCopyright:

© All Rights Reserved

Available Formats

Download as PPT, PDF, TXT or read online from Scribd

You might also like

- Design of Structures & Foundations For Vibrating MachinesDocument212 pagesDesign of Structures & Foundations For Vibrating MachinesOvidiu Genteanu97% (30)

- GrgtrhytjyjyDocument2 pagesGrgtrhytjyjyKushanNo ratings yet

- Stat MethDocument66 pagesStat MethalscribdsaNo ratings yet

- Unit-5: Probabilistic Reasoning: Dr. Gopi SanghaniDocument28 pagesUnit-5: Probabilistic Reasoning: Dr. Gopi Sanghanidemo dataNo ratings yet

- Lecture Quantifying UncertaintyDocument40 pagesLecture Quantifying UncertaintySACHIN BHAGATNo ratings yet

- Statistical Data Analysis: PH4515: 1 Course StructureDocument5 pagesStatistical Data Analysis: PH4515: 1 Course StructurePhD LIVENo ratings yet

- Bayesian Updating: Odds Class 12, 18.05 Jeremy Orloff and Jonathan BloomDocument8 pagesBayesian Updating: Odds Class 12, 18.05 Jeremy Orloff and Jonathan BloomMd CassimNo ratings yet

- Uncertainty: Chapter 13, Sections 1-6Document31 pagesUncertainty: Chapter 13, Sections 1-6jollyggNo ratings yet

- Prob ZoompdfDocument86 pagesProb Zoompdfhuy.nguyentan1704No ratings yet

- 1probability & Probability DistributionDocument45 pages1probability & Probability DistributionapurvaapurvaNo ratings yet

- Tutorial Part1Document66 pagesTutorial Part1Thuong VuNo ratings yet

- Probability L2 PTDocument14 pagesProbability L2 PTpreticool_2003No ratings yet

- C 1 ReasoningDocument19 pagesC 1 Reasoningshreya saxenaNo ratings yet

- Chapter13 UncertaintyDocument49 pagesChapter13 Uncertaintynaseem hanzilaNo ratings yet

- Intro To Basic StatisticsDocument40 pagesIntro To Basic StatisticsJason del PonteNo ratings yet

- IT8601 unitIVDocument47 pagesIT8601 unitIVSmyla LuciaNo ratings yet

- Probability Review: Dr. Kaushik DebDocument33 pagesProbability Review: Dr. Kaushik DebAfsana RahmanNo ratings yet

- Notes On MLDocument42 pagesNotes On MLtempestinifyNo ratings yet

- UncertaintyDocument60 pagesUncertaintyArnab MukherjeeNo ratings yet

- Probability and Statistics Unit - 1Document8 pagesProbability and Statistics Unit - 1Hans DuyaninNo ratings yet

- cs511 UncertaintyDocument72 pagescs511 UncertaintyGUNTUPALLI SUNIL CHOWDARYNo ratings yet

- Probability and Stochastic ModelsDocument78 pagesProbability and Stochastic ModelsKatie CookNo ratings yet

- 02 ProbIntro 2020 AnnotatedDocument44 pages02 ProbIntro 2020 AnnotatedEureka oneNo ratings yet

- Study Unit 2.1 Basic ProbabilityDocument37 pagesStudy Unit 2.1 Basic ProbabilitybronwyncloeteNo ratings yet

- Distributions ZoomDocument82 pagesDistributions Zoomvinhtran23042004No ratings yet

- 1probability & Prob DistrnDocument46 pages1probability & Prob DistrnPratik JainNo ratings yet

- Probability PresentationDocument22 pagesProbability Presentationvietnam5 haydayNo ratings yet

- ProbabilisticiirDocument44 pagesProbabilisticiirZeenathNo ratings yet

- Probability DistributionsDocument129 pagesProbability DistributionsKishore GNo ratings yet

- PSFDS Unit1 Week2Document14 pagesPSFDS Unit1 Week2Nivesh PritmaniNo ratings yet

- Uncertainity MeasureDocument64 pagesUncertainity MeasureNarender NarruNo ratings yet

- Lec 10Document10 pagesLec 10Atiq ur RehmanNo ratings yet

- IAI: Treatment of UncertaintyDocument20 pagesIAI: Treatment of UncertaintySai Venkat GudlaNo ratings yet

- A Genie-Aided Detector With A Probabilistic Description of The Side InformationDocument1 pageA Genie-Aided Detector With A Probabilistic Description of The Side InformationJohn SinhaNo ratings yet

- Math ProbabilityDocument4 pagesMath ProbabilityV CNo ratings yet

- Decision 4Document15 pagesDecision 4nitprincyjNo ratings yet

- University of Dar Es Salaam Coict: Department of Computer Science & EngDocument42 pagesUniversity of Dar Es Salaam Coict: Department of Computer Science & Engsamwel sittaNo ratings yet

- 5 - CSE3013 - Uncertainity and Knowledge EngineeringDocument24 pages5 - CSE3013 - Uncertainity and Knowledge Engineeringshreyanair.s2020No ratings yet

- Ai Unit3Document38 pagesAi Unit3hegica6739No ratings yet

- Lecture 5: Statistical Independence, Discrete Random VariablesDocument4 pagesLecture 5: Statistical Independence, Discrete Random VariablesDesmond SeahNo ratings yet

- What Is Data Science? Probability Overview Descriptive StatisticsDocument10 pagesWhat Is Data Science? Probability Overview Descriptive StatisticsMauricioRojasNo ratings yet

- 1 Classical Probability: Indian Institute of Technology BombayDocument8 pages1 Classical Probability: Indian Institute of Technology BombayRajNo ratings yet

- Stat Mining 21Document1 pageStat Mining 21Adonis HuaytaNo ratings yet

- CERN Academic Training Lectures - Practical Statistics For LHC Physicists by Prosper PDFDocument283 pagesCERN Academic Training Lectures - Practical Statistics For LHC Physicists by Prosper PDFkevinchu021195No ratings yet

- Class 2Document12 pagesClass 2Smruti RanjanNo ratings yet

- Artificial Intelligence: Adina Magda FloreaDocument36 pagesArtificial Intelligence: Adina Magda FloreaPablo Lorenzo Muños SanchesNo ratings yet

- Math FoundationsDocument48 pagesMath FoundationsEmmanuel MartínezNo ratings yet

- Bayes ReasoningDocument45 pagesBayes Reasoninglouis benNo ratings yet

- Most Compact and Complete Data Science Cheat Sheet 1672981093Document10 pagesMost Compact and Complete Data Science Cheat Sheet 1672981093Mahesh KotnisNo ratings yet

- Bayes 2Document48 pagesBayes 2N MaheshNo ratings yet

- L11a Uncertainty171105Document25 pagesL11a Uncertainty171105Queen Emefa OlivesNo ratings yet

- RD05 StatisticsDocument7 pagesRD05 StatisticsأحمدآلزهوNo ratings yet

- Chapter 3 ProbabilityDocument38 pagesChapter 3 Probabilityth8yvv4gpmNo ratings yet

- Probability and Statistics (IT302) 5 August 2020 (11:15AM-11:45AM) ClassDocument39 pagesProbability and Statistics (IT302) 5 August 2020 (11:15AM-11:45AM) Classrustom khurraNo ratings yet

- Downloadartificial Intelligence - Unit 3Document20 pagesDownloadartificial Intelligence - Unit 3blazeNo ratings yet

- DeepayanSarkar BayesianDocument152 pagesDeepayanSarkar BayesianPhat NguyenNo ratings yet

- ECE523 Engineering Applications of Machine Learning and Data Analytics - Bayes and Risk - 1Document7 pagesECE523 Engineering Applications of Machine Learning and Data Analytics - Bayes and Risk - 1wandalexNo ratings yet

- Bio StatisticsDocument24 pagesBio Statisticsvmc6gyvh9gNo ratings yet

- Elementary Probability Theory For CS648ADocument19 pagesElementary Probability Theory For CS648ATechnology TectrixNo ratings yet

- 2014 Greenbelt BokDocument8 pages2014 Greenbelt BokKhyle Laurenz DuroNo ratings yet

- Dosha Head Work DesignDocument189 pagesDosha Head Work DesignAbiued EjigueNo ratings yet

- Aman's AI Journal - Watch ListDocument32 pagesAman's AI Journal - Watch ListaliNo ratings yet

- I J E S M: A Review Paper On Concrete Mix Design of M20 ConcreteDocument4 pagesI J E S M: A Review Paper On Concrete Mix Design of M20 ConcreteKerby Brylle GawanNo ratings yet

- Feedback and Feed ForwardDocument27 pagesFeedback and Feed ForwardEngr. AbdullahNo ratings yet

- Dynamic Satellite Geodesy-SneuuwDocument436 pagesDynamic Satellite Geodesy-Sneuuwglavisimo100% (1)

- Isothermal Reactor Design: 1. Batch OperationDocument3 pagesIsothermal Reactor Design: 1. Batch Operationنزار الدهاميNo ratings yet

- Vacuum MetallurgyDocument20 pagesVacuum MetallurgyTGrey027No ratings yet

- BRKACI-2101-Basic Verification With ACIDocument153 pagesBRKACI-2101-Basic Verification With ACIflyingccie datacenterNo ratings yet

- Basic AerodynamicsDocument36 pagesBasic AerodynamicsMohamed ArifNo ratings yet

- Fluid Control Components: IndexDocument38 pagesFluid Control Components: IndexjebacNo ratings yet

- Me6016 TeDocument44 pagesMe6016 TeKALIMUTHU KNo ratings yet

- 50+ Serial Keys For Popular SoftwareDocument22 pages50+ Serial Keys For Popular SoftwareskillriveNo ratings yet

- Trout Farming A Guide To Production and Inventory ManagementDocument2 pagesTrout Farming A Guide To Production and Inventory Managementluis ruperto floresNo ratings yet

- EcoStore Manual V3.2Document52 pagesEcoStore Manual V3.2Andreea PintilieNo ratings yet

- T Rec G.8261 201308 I!!pdf eDocument116 pagesT Rec G.8261 201308 I!!pdf egcarreongNo ratings yet

- Boatti PHD PresDocument41 pagesBoatti PHD PresygfrostNo ratings yet

- MATH - Q2 - W3 (Autosaved)Document44 pagesMATH - Q2 - W3 (Autosaved)Rose Amor Mercene-LacayNo ratings yet

- ANSYS Workbench - Simulation Introduction: Training ManualDocument4 pagesANSYS Workbench - Simulation Introduction: Training ManualShamik ChowdhuryNo ratings yet

- Winsem2023-24 Bece201l TH VL2023240500575 Cat-1-Qp - KeyDocument6 pagesWinsem2023-24 Bece201l TH VL2023240500575 Cat-1-Qp - Keydhoni050709No ratings yet

- RTWA Tornillo - 70 A 125 TR PDFDocument56 pagesRTWA Tornillo - 70 A 125 TR PDFModussar IlyasNo ratings yet

- Belzona 1341 (Supermetalglide) - Instructions For UseDocument2 pagesBelzona 1341 (Supermetalglide) - Instructions For Usevangeliskyriakos8998No ratings yet

- Commissioning Motors and GeneratorsDocument83 pagesCommissioning Motors and Generatorsbookbum75% (4)

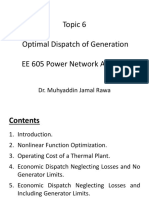

- Topic 6 Optimal Dispatch of GenerationDocument136 pagesTopic 6 Optimal Dispatch of GenerationEng. Ali Al SaedNo ratings yet

- Ncert 11 Physics 2Document181 pagesNcert 11 Physics 2shivaraj pNo ratings yet

- Ict Test PreparationDocument6 pagesIct Test Preparationshahmeerraheel123No ratings yet

- Geiger ApdDocument6 pagesGeiger Apdluisbeto027No ratings yet

- Magdy El-Masry Prof. of Cardiology Tanta UniversityDocument55 pagesMagdy El-Masry Prof. of Cardiology Tanta UniversityPrabJot SinGhNo ratings yet

- Design of Elastomeric BearingsDocument6 pagesDesign of Elastomeric BearingsHarshitha GaneshNo ratings yet

C3 Prob

C3 Prob

Uploaded by

Sakshi Rai0 ratings0% found this document useful (0 votes)

7 views12 pagesOriginal Title

C3Prob

Copyright

© © All Rights Reserved

Available Formats

PPT, PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

© All Rights Reserved

Available Formats

Download as PPT, PDF, TXT or read online from Scribd

Download as ppt, pdf, or txt

0 ratings0% found this document useful (0 votes)

7 views12 pagesC3 Prob

C3 Prob

Uploaded by

Sakshi RaiCopyright:

© All Rights Reserved

Available Formats

Download as PPT, PDF, TXT or read online from Scribd

Download as ppt, pdf, or txt

You are on page 1of 12

Probability and Information

A brief review


Probability

Probability provides a way of summarizing the uncertainty that comes from our laziness and ignorance. How wonderful it is!

Probability as a degree of belief in the truth of a sentence:

P = 1: the sentence is certainly true; P = 0: the sentence is certainly false

0 < P < 1: intermediate degrees of belief in the truth of the sentence

Degree of truth (fuzzy logic) vs. degree of belief (probability)

Data Mining -- Probability

7/03 H Liu (ASU) & G Dong (WSU) 2

All probability statements must indicate the evidence with respect to which the probability is being assessed.

Prior or unconditional probability

Posterior or conditional probability


Basic probability notation

Prior probability

Proposition: P(Sunny)

Random variable: P(Weather=Sunny)

Each random variable has a domain, e.g., for Weather: <Sunny, Cloudy, Rain, Snow>

Probability distribution: P(Weather) = <0.7, 0.2, 0.08, 0.02>, one probability per domain value, summing to 1

A random variable is not a number; a number (or other domain value) is obtained by observing a random variable.

A random variable can be continuous or discrete.
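A minimal Python sketch of the Weather distribution, assuming a plain dict keyed by domain value is an adequate representation. The helper name `observe` is hypothetical; it uses the standard library's `random.choices` to show that observing a random variable yields a value from its domain, not the variable itself.

```python
import math
import random

# Prior distribution P(Weather) from the slide: one probability per domain value.
P_weather = {"Sunny": 0.7, "Cloudy": 0.2, "Rain": 0.08, "Snow": 0.02}

# A distribution over the whole domain must sum to 1.
assert math.isclose(sum(P_weather.values()), 1.0)

# P(Weather=Sunny) is a lookup into the distribution.
p_sunny = P_weather["Sunny"]

def observe(dist, rng):
    """Hypothetical helper: draw one value of the random variable according to dist."""
    values = list(dist)
    weights = [dist[v] for v in values]
    return rng.choices(values, weights=weights, k=1)[0]

rng = random.Random(0)  # seeded so the draw is reproducible
sample = observe(P_weather, rng)  # a domain value such as "Sunny"
```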


Conditional Probability

Definition

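P(A|B) = P(A^B)/P(B), defined when P(B) > 0

Product rule

P(A^B) = P(A|B)P(B)

Probabilistic inference does not work like logical inference: P(A|B) applies only when B is all we know, and further evidence can change the probability of A, whereas a sentence entailed by a knowledge base stays entailed when more is added.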
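The definition and the product rule can be checked by enumeration. This sketch assumes an illustrative sample space not taken from the slides (one roll of a fair die, with A = "roll is even" and B = "roll > 3") and uses exact `Fraction` arithmetic; `prob` and `cond_prob` are hypothetical helper names.

```python
from fractions import Fraction

# Illustrative sample space (an assumption, not from the slides): one roll of a fair die.
OUTCOMES = range(1, 7)
P = {w: Fraction(1, 6) for w in OUTCOMES}

def prob(event):
    """P(event), where the event is a predicate over outcomes."""
    return sum((P[w] for w in OUTCOMES if event(w)), Fraction(0))

def cond_prob(a, b):
    """P(A|B) = P(A^B) / P(B); raises if P(B) = 0, where P(A|B) is undefined."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("P(B) = 0, so P(A|B) is undefined")
    return prob(lambda w: a(w) and b(w)) / p_b

def even(w):
    return w % 2 == 0

def high(w):
    return w > 3

p_even_given_high = cond_prob(even, high)  # P({4, 6}) / P({4, 5, 6}) = 2/3
p_even_and_high = prob(lambda w: even(w) and high(w))

# Product rule: P(A^B) = P(A|B) P(B)
assert p_even_and_high == p_even_given_high * prob(high)
```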


The axioms of probability

All probabilities are between 0 and 1: 0 ≤ P(A) ≤ 1

Necessarily true (valid) propositions have probability 1; necessarily false (unsatisfiable) propositions have probability 0

The probability of a disjunction:

P(AvB) = P(A) + P(B) - P(A^B)
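The disjunction rule subtracts P(A^B) because those outcomes are counted once in P(A) and again in P(B). A quick check by enumeration, assuming an illustrative sample space not from the slides (one card drawn uniformly from a standard 52-card deck, A = "heart", B = "face card"):

```python
from fractions import Fraction
from itertools import product

# Illustrative sample space (an assumption, not from the slides):
# one card drawn uniformly from a standard 52-card deck.
ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = list(product(ranks, suits))

def prob(event):
    """Probability of an event (a predicate over cards) under the uniform distribution."""
    return Fraction(sum(1 for card in deck if event(card)), len(deck))

def is_heart(card):
    return card[1] == "hearts"

def is_face(card):
    return card[0] in {"J", "Q", "K"}

# P(AvB) counted directly vs. via P(A) + P(B) - P(A^B): 13/52 + 12/52 - 3/52 = 22/52
p_or = prob(lambda c: is_heart(c) or is_face(c))
p_and = prob(lambda c: is_heart(c) and is_face(c))
p_or_rule = prob(is_heart) + prob(is_face) - p_and
assert p_or == p_or_rule
```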


The joint probability distribution

The joint distribution completely specifies the probability of every proposition in the domain.

A probabilistic model consists of a set of random variables (X1, …, Xn).

An atomic event is an assignment of particular values to all the variables.

Marginalization rule for random variables Y and Z:

P(Y) = Σ_z P(Y, z), summing over all values z of Z
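Marginalization sums the joint over every value of the variables being removed. A minimal sketch, assuming the joint is stored as a dict keyed by tuples of values (one tuple per atomic event); the numbers are those of the Cavity/Toothache table in the following example, and `marginal` is a hypothetical helper name.

```python
from collections import defaultdict

# Joint distribution P(Cavity, Toothache) from the example table,
# keyed by atomic events (cavity, toothache).
joint = {
    (True, True): 0.04, (True, False): 0.06,
    (False, True): 0.01, (False, False): 0.89,
}

def marginal(joint, axis):
    """Keep the variable at position `axis` and sum out the others: P(Y) = sum_z P(Y, z)."""
    result = defaultdict(float)
    for event, p in joint.items():
        result[event[axis]] += p
    return dict(result)

P_cavity = marginal(joint, 0)     # approximately {True: 0.10, False: 0.90}
P_toothache = marginal(joint, 1)  # approximately {True: 0.05, False: 0.95}
```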

Let’s see an example next.


Joint Probability

An example of two Boolean variables

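|         | Toothache | !Toothache |
|---------|-----------|------------|
| Cavity  | 0.04      | 0.06       |
| !Cavity | 0.01      | 0.89       |

Observation: the four atomic events are mutually exclusive and collectively exhaustive, so the entries sum to 1.

What are the following?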

P(Cavity) =

P(Cavity V Toothache) =

P(Cavity ^ Toothache) =

P(Cavity|Toothache) =
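One way to answer these is to sum the atomic events in which each proposition holds. A small sketch, assuming the table is stored as a dict keyed by (cavity, toothache); `prob` is a hypothetical helper name, and the values in comments are the arithmetic results up to floating-point rounding.

```python
# Joint distribution from the table, keyed by atomic events (cavity, toothache).
joint = {
    (True, True): 0.04, (True, False): 0.06,
    (False, True): 0.01, (False, False): 0.89,
}

def prob(event):
    """Sum the probabilities of the atomic events where event(cavity, toothache) holds."""
    return sum(p for (c, t), p in joint.items() if event(c, t))

p_cavity = prob(lambda c, t: c)                      # 0.04 + 0.06 ≈ 0.10
p_cavity_or_toothache = prob(lambda c, t: c or t)    # 0.04 + 0.06 + 0.01 ≈ 0.11
p_cavity_and_toothache = prob(lambda c, t: c and t)  # ≈ 0.04
# Conditional probability from its definition: P(Cavity^Toothache) / P(Toothache)
p_cavity_given_toothache = p_cavity_and_toothache / prob(lambda c, t: t)  # 0.04 / 0.05 ≈ 0.8
```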


Bayes’ rule

Derived from the product rule: P(A^B) = P(A|B)P(B) = P(B|A)P(A), so

P(B|A) = P(A|B)P(B)/P(A)

P(A) can be viewed as a normalization factor that makes P(B|A) + P(!B|A) = 1:

P(A) = P(A|B)P(B) + P(A|!B)P(!B)

A more general case, for random variables X and Y (one equation for each combination of their values), is

P(X|Y) = P(Y|X)P(X)/P(Y)

Bayes' rule conditionalized on background evidence E:

P(X|Y,E) = P(Y|X,E)P(X|E)/P(Y|E)
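The normalization view can be sketched directly: compute the unnormalized terms P(A|B)P(B) and P(A|!B)P(!B), and divide by their sum, which is P(A). The function name `bayes_posterior` is hypothetical, and the inputs are derived from the Cavity/Toothache joint table (an illustrative choice): P(Cavity) = 0.1, P(Toothache|Cavity) = 0.04/0.1, P(Toothache|!Cavity) = 0.01/0.9.

```python
def bayes_posterior(prior_b, likelihood_a_given_b, likelihood_a_given_not_b):
    """Return P(B|A) by Bayes' rule, normalizing with P(A) = P(A|B)P(B) + P(A|!B)P(!B)."""
    unnorm_b = likelihood_a_given_b * prior_b
    unnorm_not_b = likelihood_a_given_not_b * (1 - prior_b)
    p_a = unnorm_b + unnorm_not_b  # the normalization factor P(A)
    return unnorm_b / p_a

# B = Cavity, A = Toothache, with numbers taken from the joint table.
posterior = bayes_posterior(0.1, 0.04 / 0.1, 0.01 / 0.9)
# Approximately 0.8, the same as P(Cavity^Toothache) / P(Toothache) = 0.04 / 0.05.
```

Because both terms share the same denominator P(A), the posterior for !B is simply 1 minus the result.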


Independence

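Independent events A, B satisfy the following equivalent conditions (when the conditional probabilities are defined):

P(B|A) = P(B),

P(A|B) = P(A),

P(A,B) = P(A)P(B)

Conditional independence

P(X|Y,Z) = P(X|Z): given Z, X and Y are independent (equivalently, P(X,Y|Z) = P(X|Z)P(Y|Z))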
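The product condition P(A,B) = P(A)P(B) gives a direct test on a two-variable joint table. This sketch assumes every combination of values appears in the table; `independent` is a hypothetical helper name. The Cavity/Toothache table fails the test (P(Cavity)P(Toothache) = 0.1 × 0.05 = 0.005, but P(Cavity, Toothache) = 0.04), while a hypothetical table built as a product of marginals passes by construction.

```python
import math

def independent(joint, tol=1e-9):
    """Check P(A,B) = P(A)P(B) for every pair of values in a complete two-variable joint table."""
    p_a, p_b = {}, {}
    for (a, b), p in joint.items():
        p_a[a] = p_a.get(a, 0.0) + p
        p_b[b] = p_b.get(b, 0.0) + p
    return all(math.isclose(p, p_a[a] * p_b[b], abs_tol=tol) for (a, b), p in joint.items())

# Cavity/Toothache joint table from the earlier example, keyed by (cavity, toothache).
toothache_joint = {
    (True, True): 0.04, (True, False): 0.06,
    (False, True): 0.01, (False, False): 0.89,
}

# Hypothetical table built as a product of marginals, so it is independent by construction.
product_joint = {
    (a, b): pa * pb
    for a, pa in {True: 0.3, False: 0.7}.items()
    for b, pb in {True: 0.6, False: 0.4}.items()
}
```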


Entropy

Entropy measures the (im)purity of a set of examples: it is 0 for a pure (homogeneous) set and largest when the classes are evenly mixed.

Or, viewed as information content: the less you still need to know to determine the class of a new case, the more information you already have (and the lower the entropy).

Suppose S contains two classes, P and N, with p and n instances respectively; let t = p + n, and view [p, n] as the class distribution of S.

Entropy(S) = - (p/t) log2 (p/t) - (n/t) log2 (n/t), with 0 log2 0 taken as 0

E.g., p = 9, n = 5: Entropy(S) = Entropy([9,5]) = - (9/14) log2 (9/14) - (5/14) log2 (5/14) = 0.940

E.g., Entropy([14,0]) = 0; Entropy([7,7]) = 1
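The entropy formula translates directly into code. This sketch takes class counts such as [p, n] and, as an extension beyond the two-class case on the slide, accepts any number of classes by summing the same -q log2 q term over each class. Zero counts are skipped, following the convention 0 log2 0 = 0.

```python
import math

def entropy(counts):
    """Entropy (in bits) of a class distribution given as counts, e.g. [p, n].

    Classes with zero count contribute nothing (convention: 0 * log2(0) = 0).
    """
    total = sum(counts)
    h = 0.0
    for c in counts:
        if c > 0:
            q = c / total  # fraction of examples in this class
            h -= q * math.log2(q)
    return h
```

For example, `entropy([9, 5])` evaluates to about 0.940, matching the worked example.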


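Entropy curve

[Figure: 2-class entropy plotted against p/(p+n), both axes ranging from 0 to 1]

For p/(p+n) between 0 and 1, the 2-class entropy is

0 when p/(p+n) is 0

1 when p/(p+n) is 0.5

0 when p/(p+n) is 1

monotonically increasing between 0 and 0.5

monotonically decreasing between 0.5 and 1

When the data is pure, the entropy is 0: no bits are needed to send the class of an example. When the two classes are evenly split, a full bit is needed.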
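The shape of the curve can be checked numerically. This sketch assumes the entropy is written as a function of the single fraction q = p/(p+n) (the name `binary_entropy` is hypothetical) and evaluates it on a grid of 101 points from 0 to 1.

```python
import math

def binary_entropy(q):
    """2-class entropy in bits as a function of q = p/(p+n), with 0 * log2(0) = 0."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * math.log2(q) - (1 - q) * math.log2(1 - q)

# Evaluate the curve on the grid q = 0.00, 0.01, ..., 1.00.
grid = [i / 100 for i in range(101)]
curve = [binary_entropy(q) for q in grid]
```

The values rise strictly from 0 at q = 0 to exactly 1 at q = 0.5, then fall back to 0 at q = 1, and the curve is symmetric about 0.5 because swapping the two classes leaves the entropy unchanged.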

