Deep Learning Tutorial 3

Deep Learning from Scratch

Theory + Practical

FAHAD HUSSAIN

MCS, MSCS, DAE(CIT)

Computer Science Instructor at a well-known international center

Also a Machine Learning and Deep Learning practitioner

For further assistance, code and slide https://fahadhussaincs.blogspot.com/

YouTube Channel : https://www.youtube.com/fahadhussaintutorial

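Artificial Neural Network

[Figure: a single neuron. The inputs are normalized/standardized, combined into the weighted sum $\sum_{i=1}^{m} w_i x_i$, and the activation function is then applied to that sum.]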
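The two steps in the neuron diagram (weighted sum, then activation) can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: the function names `weighted_sum` and `neuron_output` and the example weights and inputs are assumptions made up for this sketch.

```python
import math


def weighted_sum(weights, inputs):
    """Return sum_i w_i * x_i for two equal-length sequences."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    return sum(w * x for w, x in zip(weights, inputs))


def neuron_output(weights, inputs, activation):
    """Apply the activation function to the weighted sum of the inputs."""
    return activation(weighted_sum(weights, inputs))


# Hypothetical example values, chosen only for illustration.
weights = [0.5, -1.0, 2.0]
inputs = [1.0, 2.0, 0.5]

z = weighted_sum(weights, inputs)  # 0.5*1.0 + (-1.0)*2.0 + 2.0*0.5
print(z)                           # -0.5
print(neuron_output(weights, inputs, math.tanh))
```

Any of the activation functions discussed in these slides can be passed as the `activation` argument.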


What is an Activation Function?

Activation functions are an essential part of artificial neural networks. They decide whether a neuron should be activated or not, that is, whether the information the neuron is receiving is relevant or should be ignored.

The activation function is the transformation, usually non-linear, that we apply to the input signal. The transformed output is then sent to the next layer of neurons as input.

• Linear Activation Function

• Non Linear Activation Function


What is an Activation Function?

Linear Function

The function is a straight line, so its output is not confined to any range.

Non Linear Function

Non-linear functions make it easier for the model to generalize or adapt to a variety of data and to differentiate between outputs.

The non-linear activation functions are mainly divided on the basis of their range or curves:

1. Threshold

2. Sigmoid

3. Tanh

4. ReLU

5. Leaky ReLU

6. Softmax


Threshold Function?
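The slide gives no definition in text, so the sketch below assumes the usual binary step form: the output is 1 when the input reaches a threshold and 0 otherwise. The function name `threshold` and the default threshold `theta=0.0` are assumptions for illustration.

```python
def threshold(x, theta=0.0):
    """Binary step: return 1 if x >= theta, else 0 (assumed convention)."""
    return 1 if x >= theta else 0


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(x, threshold(x))
```

Some texts return 0 at exactly `x == theta`; the choice at the boundary is a convention and does not change the shape of the function.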


Sigmoid Function?

The sigmoid function curve has an S-shape.

This function squashes extreme values or outliers in the data without removing them.

It converts independent variables of near-infinite range into values between 0 and 1 that can be read as probabilities. For inputs of large magnitude, the output is very close to 0 or 1.
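A minimal sketch of the squashing behaviour, assuming the standard logistic form 1 / (1 + e^(-x)). The branch on the sign of `x` is an implementation detail added here to avoid overflow for large negative inputs; it is not part of the slides.

```python
import math


def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    ex = math.exp(x)  # safe: x < 0, so exp(x) is at most 1
    return ex / (1.0 + ex)


# Extreme inputs are kept but pushed toward 0 or 1.
for x in (-100.0, -5.0, 0.0, 5.0, 100.0):
    print(x, sigmoid(x))
```

The midpoint `sigmoid(0.0)` is exactly 0.5, and inputs far from zero in either direction saturate toward the two ends of the range.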


Rectifier (ReLU) Function?

ReLU is the most widely used activation function when designing networks today. First, the ReLU function is non-linear, which means we can stack multiple layers of neurons activated by ReLU and still backpropagate the errors easily.
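As a minimal sketch, ReLU can be written as max(0, x), with a gradient of 1 for positive inputs and 0 for negative inputs. The function names are illustrative, and using gradient 0 at exactly x = 0 is a convention assumed here.

```python
def relu(x):
    """Rectified linear unit: pass positive inputs through, clip the rest to 0."""
    return max(0.0, x)


def relu_grad(x):
    """Gradient of ReLU used in backpropagation (0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


for x in (-3.0, -0.1, 0.0, 0.1, 3.0):
    print(x, relu(x), relu_grad(x))
```

The gradient is constant on each side of zero, which is what makes the backward pass cheap to compute.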


Leaky ReLU Function?

The Leaky ReLU function is an improved version of the ReLU function. For the ReLU function, the gradient is 0 for x < 0, which makes neurons die for activations in that region. Leaky ReLU is defined to address this problem. Instead of defining the function as 0 for x less than 0, we define it as a small linear component of x, that is, ax.

In other words, the horizontal line is replaced with a non-zero, non-horizontal line. Here a is a small value such as 0.01.
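A minimal sketch of this definition, using the slope a = 0.01 mentioned above as the default. The function names are illustrative assumptions.

```python
def leaky_relu(x, a=0.01):
    """Return x for x >= 0 and the small linear component a * x for x < 0."""
    return x if x >= 0 else a * x


def leaky_relu_grad(x, a=0.01):
    """Gradient is 1 for x >= 0 and a (not 0) for x < 0."""
    return 1.0 if x >= 0 else a


for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(x, leaky_relu(x), leaky_relu_grad(x))
```

Because the gradient on the negative side is `a` rather than 0, a neuron that receives negative inputs still gets a small update during training.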


Tanh Function?

Pronounced “tanch,” tanh is a hyperbolic trigonometric function.

Just as the tangent represents the ratio between the opposite and adjacent sides of a right triangle, tanh represents the ratio of the hyperbolic sine to the hyperbolic cosine: tanh(x) = sinh(x) / cosh(x).

Unlike the sigmoid function, the output range of tanh is -1 to 1. The advantage of tanh is that it can deal more easily with negative numbers.
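The ratio tanh(x) = sinh(x) / cosh(x) can be checked directly against Python's built-in `math.tanh`. This is only a sketch of the definition; the helper name `tanh_ratio` is made up here, and for very large inputs the ratio form can overflow, so `math.tanh` is the better choice in practice.

```python
import math


def tanh_ratio(x):
    """tanh computed literally as sinh(x) / cosh(x)."""
    return math.sinh(x) / math.cosh(x)


# Negative inputs map to negative outputs, and all outputs stay within (-1, 1).
for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(x, tanh_ratio(x), math.tanh(x))
```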


Softmax Function (for Multiple Classification)?

The softmax function calculates the probability distribution of an event over n different events. In general terms, this function calculates the probability of each target class over all possible target classes. The calculated probabilities then help determine the target class for the given inputs.

The main advantage of using softmax is the range of the output probabilities. Each probability lies between 0 and 1, and the sum of all the probabilities equals one. If the softmax function is used for a multi-class classification model, it returns the probability of each class, and the predicted target class is the one with the highest probability.

The formula computes the exponential (e-power) of the given input value and the sum of the exponentials of all the input values. The ratio of the exponential of the input value to the sum of exponentials is the output of the softmax function.
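The formula described above can be sketched as follows. Subtracting the maximum score before exponentiating is a common numerical-stability step added here as an assumption; it does not change the result, because the same factor cancels in the ratio. The example scores are hypothetical.

```python
import math


def softmax(scores):
    """Return exp(s_i) / sum_j exp(s_j) for each score s_i."""
    m = max(scores)                           # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]  # exponential of each input
    total = sum(exps)                         # sum of all exponentials
    return [e / total for e in exps]          # ratio for each class


# Hypothetical raw scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
print(probs.index(max(probs)))  # index of the predicted class
```

Each output lies between 0 and 1, the outputs sum to one (up to floating-point rounding), and the class with the largest input score receives the largest probability.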


Activation Function Example


Thank You!

For further assistance, code and slide https://fahadhussaincs.blogspot.com/

YouTube Channel : https://www.youtube.com/fahadhussaintutorial

You might also like

- Introduction To Uipath or Rpa Tutorial For Beginners or Rpa Training Using Uipath or EdurekaDocument50 pagesIntroduction To Uipath or Rpa Tutorial For Beginners or Rpa Training Using Uipath or EdurekaAtal Bihari100% (1)

- Deep LearningDocument189 pagesDeep LearningmausamNo ratings yet

- Deep LearningDocument189 pagesDeep LearningRajaNo ratings yet

- Sharma S. - Activation Functions in Neural NetworksDocument11 pagesSharma S. - Activation Functions in Neural NetworksJulie KalpoNo ratings yet

- Deep Learning Unit 1Document32 pagesDeep Learning Unit 1Aditya Pratap SinghNo ratings yet

- A Gentle Introduction To The Rectified Linear Unit (ReLU)Document17 pagesA Gentle Introduction To The Rectified Linear Unit (ReLU)21008059No ratings yet

- Rpa M3Document28 pagesRpa M3VAISHNAVINo ratings yet

- Activation Functions in Neural Networks - GeeksforGeeksDocument12 pagesActivation Functions in Neural Networks - GeeksforGeekswendu felekeNo ratings yet

- Fundamentals Deep Learning Activation Functions When To Use ThemDocument15 pagesFundamentals Deep Learning Activation Functions When To Use ThemfaisalNo ratings yet

- Lecture 2.1.2activation FunctionDocument15 pagesLecture 2.1.2activation FunctionMohd YusufNo ratings yet

- How To Choose An Activation Function For Deep LearningDocument15 pagesHow To Choose An Activation Function For Deep LearningAbdul KhaliqNo ratings yet

- Need and Use of Activation Functions in Anndeep LearningDocument7 pagesNeed and Use of Activation Functions in Anndeep Learninguiop38629No ratings yet

- Notes On Introduction To Deep LearningDocument19 pagesNotes On Introduction To Deep Learningthumpsup1223No ratings yet

- Nicole Lukinov 1.4 - 1.5 Robot ShuffleDocument5 pagesNicole Lukinov 1.4 - 1.5 Robot Shufflenicole.lukinovNo ratings yet

- Experiment No. 1 SL-II (ANN)Document3 pagesExperiment No. 1 SL-II (ANN)RutujaNo ratings yet

- Tensorflow Implementation For Job Market Classification: Taras Mitran Jeff WallerDocument46 pagesTensorflow Implementation For Job Market Classification: Taras Mitran Jeff Wallersubhanshu babbarNo ratings yet

- Activation Functions - Ipynb - ColaboratoryDocument10 pagesActivation Functions - Ipynb - ColaboratoryGOURAV SAHOONo ratings yet

- Deep Learning Unit 1Document35 pagesDeep Learning Unit 1shubham.2024it1068No ratings yet

- Lec 19Document24 pagesLec 19Inam Ullah KhanNo ratings yet

- Chapter 3 Part 2Document27 pagesChapter 3 Part 24PS19EC071 Lakshmi.MNo ratings yet

- RPA Unit 5 DCDocument32 pagesRPA Unit 5 DCyogesh nikamNo ratings yet

- Soft ComputingDocument10 pagesSoft Computingjitbhav97No ratings yet

- Activation FunctionsDocument6 pagesActivation FunctionsthummalakuntasNo ratings yet

- DL Unit2 HDDocument141 pagesDL Unit2 HDanongreeenNo ratings yet

- Unit IvDocument34 pagesUnit Ivdanmanworld443No ratings yet

- 12 Types of Neural Network Activation FunctionsDocument38 pages12 Types of Neural Network Activation FunctionsNusrat UllahNo ratings yet

- Gmail - Pega RPA - Open Span Q&ADocument15 pagesGmail - Pega RPA - Open Span Q&ARaghu GunasekaranNo ratings yet

- Deep Learning - DL-2Document44 pagesDeep Learning - DL-2Hasnain AhmadNo ratings yet

- Using JOONE FrameworkDocument13 pagesUsing JOONE FrameworkVivek SheelNo ratings yet

- DeepLearing TheoryDocument51 pagesDeepLearing TheorytharunNo ratings yet

- Activation FunctionDocument13 pagesActivation FunctionPrincess SwethaNo ratings yet

- Lab5 KarnaughMapsDocument17 pagesLab5 KarnaughMapsSrinivasa Rao DNo ratings yet

- HA215 EN Col17Document181 pagesHA215 EN Col17aishwarya gatlaNo ratings yet

- Beginner GuideDocument39 pagesBeginner GuideKaustav SahaNo ratings yet

- Lecture 1Document69 pagesLecture 1Beerbhan NaruNo ratings yet

- Machine LearningDocument7 pagesMachine LearningR MuhammadNo ratings yet

- Mod3 RPADocument37 pagesMod3 RPAPoojashree KSNo ratings yet

- Learn To Program, Simulate Plc & Hmi In Minutes with Real-World Examples from Scratch. A No Bs, No Fluff Practical Hands-On Project for Beginner to Intermediate: BoxsetFrom EverandLearn To Program, Simulate Plc & Hmi In Minutes with Real-World Examples from Scratch. A No Bs, No Fluff Practical Hands-On Project for Beginner to Intermediate: BoxsetNo ratings yet

- SoftComp 02Document33 pagesSoftComp 02Aditya RautNo ratings yet

- Activation Function - Lect 1Document5 pagesActivation Function - Lect 1NiteshNarukaNo ratings yet

- Activation Functions: Sigmoid, Tanh, Relu, Leaky Relu, Prelu, Elu, Threshold Relu and Softmax Basics For Neural Networks and Deep LearningDocument15 pagesActivation Functions: Sigmoid, Tanh, Relu, Leaky Relu, Prelu, Elu, Threshold Relu and Softmax Basics For Neural Networks and Deep LearningRMolina65No ratings yet

- 4 4 Choosing The Right Activation Function For Neural NetworksDocument25 pages4 4 Choosing The Right Activation Function For Neural Networksabbas1999n8No ratings yet

- Interview QuestionsDocument6 pagesInterview Questionsbrk_318100% (1)

- Chapter 3 RPADocument9 pagesChapter 3 RPA4PS19EC071 Lakshmi.MNo ratings yet

- L39-40-SOLID PrinciplesDocument21 pagesL39-40-SOLID PrinciplesABHILASH TYAGI 21104019No ratings yet

- Integrating Workflow Programming With Abap Objects PDFDocument19 pagesIntegrating Workflow Programming With Abap Objects PDFeliseoNo ratings yet

- Section (7.) AbstractionDocument12 pagesSection (7.) Abstractionabdo.tarek.el11No ratings yet

- Unit 5 Activation FunctionDocument15 pagesUnit 5 Activation FunctiontrendysyncsNo ratings yet

- Class 1+2Document11 pagesClass 1+2Abdullah RockNo ratings yet

- Iit Python Virtual LabDocument61 pagesIit Python Virtual LabsatuNo ratings yet

- OptiSystem Tutorials CPP ComponentDocument88 pagesOptiSystem Tutorials CPP ComponentAditya MittalNo ratings yet

- OopDocument50 pagesOopAakash Floydian JoshiNo ratings yet

- Ob 3f3139 Algorithms-And-FlowchartsDocument12 pagesOb 3f3139 Algorithms-And-FlowchartsMichael T. BelloNo ratings yet

- Ai Voice Assistant PPT ProjectDocument22 pagesAi Voice Assistant PPT ProjectSHIKHAR SHARMA (RA2111003011063)No ratings yet

- Deep Learning Tutorial 5Document10 pagesDeep Learning Tutorial 5Atal BihariNo ratings yet

- VeloCloud Lab Hol 2040 91 Net - PDF - enDocument10 pagesVeloCloud Lab Hol 2040 91 Net - PDF - enpaulo_an7381No ratings yet

- Ad3451 ML Unit 4 Notes EduenggDocument36 pagesAd3451 ML Unit 4 Notes EduenggMd RafeequeNo ratings yet

- Interaction & Scripting: 2009-10 Practical Assignment 2: Storming MindsDocument4 pagesInteraction & Scripting: 2009-10 Practical Assignment 2: Storming Mindsmouseboy1No ratings yet

- Question 1: What Is The Difference Between User Exit and Function Exit? User Exit Customer ExitDocument13 pagesQuestion 1: What Is The Difference Between User Exit and Function Exit? User Exit Customer Exitmayank singhNo ratings yet

- Module 1 Java NotesDocument36 pagesModule 1 Java NotesnavalanrNo ratings yet

- 041QUIZDocument2 pages041QUIZMarc AgradeNo ratings yet

- Deep Learning Tutorial 6Document6 pagesDeep Learning Tutorial 6Atal BihariNo ratings yet

- Pythonlearn 04 FunctionsDocument24 pagesPythonlearn 04 FunctionsAtal BihariNo ratings yet

- UiPath Final Certification Exam PDFDocument5 pagesUiPath Final Certification Exam PDFAtal BihariNo ratings yet

- ML Unit IvDocument17 pagesML Unit Ivsnikhath20No ratings yet

- Applications of Deep Learning For Phishing Detection A Systematic Literature ReviewDocument44 pagesApplications of Deep Learning For Phishing Detection A Systematic Literature ReviewBernardNo ratings yet

- BDA Worksheet 5 ArmanDocument5 pagesBDA Worksheet 5 Armanarman khanNo ratings yet

- LSTMDocument24 pagesLSTMArda SuryaNo ratings yet

- A Survey On Deep Learning For Multimodal Data FusionDocument36 pagesA Survey On Deep Learning For Multimodal Data FusionllNo ratings yet

- Convolutional Neural Networks: CMSC 35246: Deep LearningDocument166 pagesConvolutional Neural Networks: CMSC 35246: Deep LearningDiego AntonioNo ratings yet

- LSTMDocument2 pagesLSTMNexgen TechnologyNo ratings yet

- Associative Memory Neural NetworksDocument35 pagesAssociative Memory Neural NetworksHager Elzayat100% (1)

- Neural NetworksDocument29 pagesNeural NetworksfareenfarzanawahedNo ratings yet

- Artificial Neural Networks Quiz Questions 1Document17 pagesArtificial Neural Networks Quiz Questions 1Monash PNo ratings yet

- FDB Brochure - Deep Learning For Computer Vision From 05.02.2024 To 10.02.2024Document2 pagesFDB Brochure - Deep Learning For Computer Vision From 05.02.2024 To 10.02.2024publicationNo ratings yet

- CNN Basic Beak of BirdDocument20 pagesCNN Basic Beak of BirdPoralla priyanka100% (1)

- Neural Networks: Aroob Amjad FarrukhDocument6 pagesNeural Networks: Aroob Amjad FarrukhAroob amjadNo ratings yet

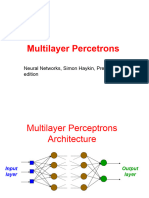

- Multi Layer Perceptron HaykinDocument50 pagesMulti Layer Perceptron HaykinAFFIFA JAHAN ANONNANo ratings yet

- Image Captioning: - A Deep Learning ApproachDocument14 pagesImage Captioning: - A Deep Learning ApproachPallavi BhartiNo ratings yet

- Neural NetworksDocument116 pagesNeural NetworksAbhishek NandaNo ratings yet

- Deep Feed-Forward Neural NetworkDocument4 pagesDeep Feed-Forward Neural NetworkAnkit MahapatraNo ratings yet

- Basics of Deep Learning: Pierre-Marc Jodoin and Christian DesrosiersDocument183 pagesBasics of Deep Learning: Pierre-Marc Jodoin and Christian DesrosiersalyasocialwayNo ratings yet

- NN LAB 13 SEP - Jupyter NotebookDocument6 pagesNN LAB 13 SEP - Jupyter Notebooksrinivasarao dhanikondaNo ratings yet

- SVM NotesDocument8 pagesSVM NotesRahul sharmaNo ratings yet

- A Deep Learning Based Multi Agent System For Intrusion DetectionDocument13 pagesA Deep Learning Based Multi Agent System For Intrusion DetectionAlexis Sebastian Tello CharcahuanaNo ratings yet

- Foundations of Deep LearningDocument48 pagesFoundations of Deep LearningtakundaNo ratings yet

- Assumptions in ANN: Information Processing Occurs at Many SimpleDocument26 pagesAssumptions in ANN: Information Processing Occurs at Many SimpleRMDNo ratings yet

- UNIT-4 Foundations of Deep LearningDocument43 pagesUNIT-4 Foundations of Deep Learningbhavana100% (1)

- 08 An Example of NN Using ReLuDocument10 pages08 An Example of NN Using ReLuHafshah DeviNo ratings yet

- Deep LearningDocument3 pagesDeep LearningAnu M100% (1)

- ASC Assignment 1 and 2 QuestionsDocument2 pagesASC Assignment 1 and 2 Questionsshen shahNo ratings yet

- Ist 407 PresentationDocument12 pagesIst 407 Presentationapi-529383903No ratings yet

- Linear Classifiers in Python: Chapter4Document24 pagesLinear Classifiers in Python: Chapter4NishantNo ratings yet

- Convolutional Neural Networks: Computer VisionDocument41 pagesConvolutional Neural Networks: Computer VisionThànhĐạt NgôNo ratings yet